1. Introduction to optimizers:

PyTorch's optimizers are collected in the torch.optim module.

- Constructing it

optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

optimizer = optim.Adam([var1, var2], lr=0.0001)
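The two constructor calls above can be tried in a self-contained sketch. The toy `nn.Linear` model and the tensors `var1` and `var2` are hypothetical stand-ins chosen for illustration; the only requirement is that whatever is passed to the optimizer is an iterable of tensors with `requires_grad=True`.

```python
import torch
from torch import nn, optim

# Hypothetical toy model, used only so there are parameters to optimize.
model = nn.Linear(4, 2)

# SGD over all of the model's parameters, with momentum.
sgd = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

# Adam over an explicit list of tensors (illustrative values).
var1 = torch.zeros(3, requires_grad=True)
var2 = torch.ones(3, requires_grad=True)
adam = optim.Adam([var1, var2], lr=0.0001)

# Hyperparameters are stored per parameter group and can be inspected.
print(sgd.param_groups[0]["lr"], sgd.param_groups[0]["momentum"])
print(len(adam.param_groups[0]["params"]))
```

Reading `param_groups` is a convenient way to confirm which learning rate an optimizer is actually using.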

- Taking an optimization step

for input, target in dataset:
    optimizer.zero_grad()
    output = model(input)
    loss = loss_fn(output, target)
    loss.backward()
    optimizer.step()
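To see the zero_grad / backward / step cycle working end to end, here is a minimal sketch on a toy regression problem. The data (`y = 2x + 1`), the single-layer model, and the learning rate are all assumptions made for illustration and are not part of the original notes.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)

# Hypothetical toy data: points on the line y = 2x + 1.
x = torch.linspace(-1, 1, 32).unsqueeze(1)
y = 2 * x + 1

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(200):
    optimizer.zero_grad()        # clear gradients from the previous step
    output = model(x)            # forward pass
    loss = loss_fn(output, y)    # compute the loss
    loss.backward()              # compute gradients
    optimizer.step()             # update the parameters
    losses.append(loss.item())

print(losses[0], losses[-1])
```

The last recorded loss should be far smaller than the first, which is a quick check that the optimizer is actually updating the parameters.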

2. Hands-on code:

import torch
import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

# train=False loads the CIFAR10 test split
dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
# batch_size: number of samples loaded in each batch
dataloader = DataLoader(dataset, batch_size=1)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

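loss = nn.CrossEntropyLoss()
tudui = Tudui()
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)
for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        result_loss = loss(outputs, targets)
        # Clear the gradients left over from the previous step
        optim.zero_grad()
        result_loss.backward()
        # Update the parameters using the computed gradients
        optim.step()
        # .item() converts the loss to a Python float so the running total does not hold on to the autograd graph
        running_loss = running_loss + result_loss.item()
    # Total loss accumulated over this epoch
    print(running_loss)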
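The `Linear(1024, 64)` layer in the network only works if the flattened output of the convolution and pooling layers has exactly 1024 values per image. A quick way to check this, sketched here with an all-zero dummy input of CIFAR10's 3x32x32 shape (the dummy tensor is an illustrative assumption), is to run just the feature layers and print the shape:

```python
import torch
from torch import nn

# Same convolution/pooling stack as the network in this section, without the Linear layers.
features = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
    nn.Flatten(),
)

dummy = torch.zeros(1, 3, 32, 32)  # one fake CIFAR10-sized image
flat = features(dummy)
print(flat.shape)  # torch.Size([1, 1024])
```

Each pooling layer halves the spatial size (32 to 16 to 8 to 4), and the last convolution has 64 channels, so the flattened size is 64 x 4 x 4 = 1024.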

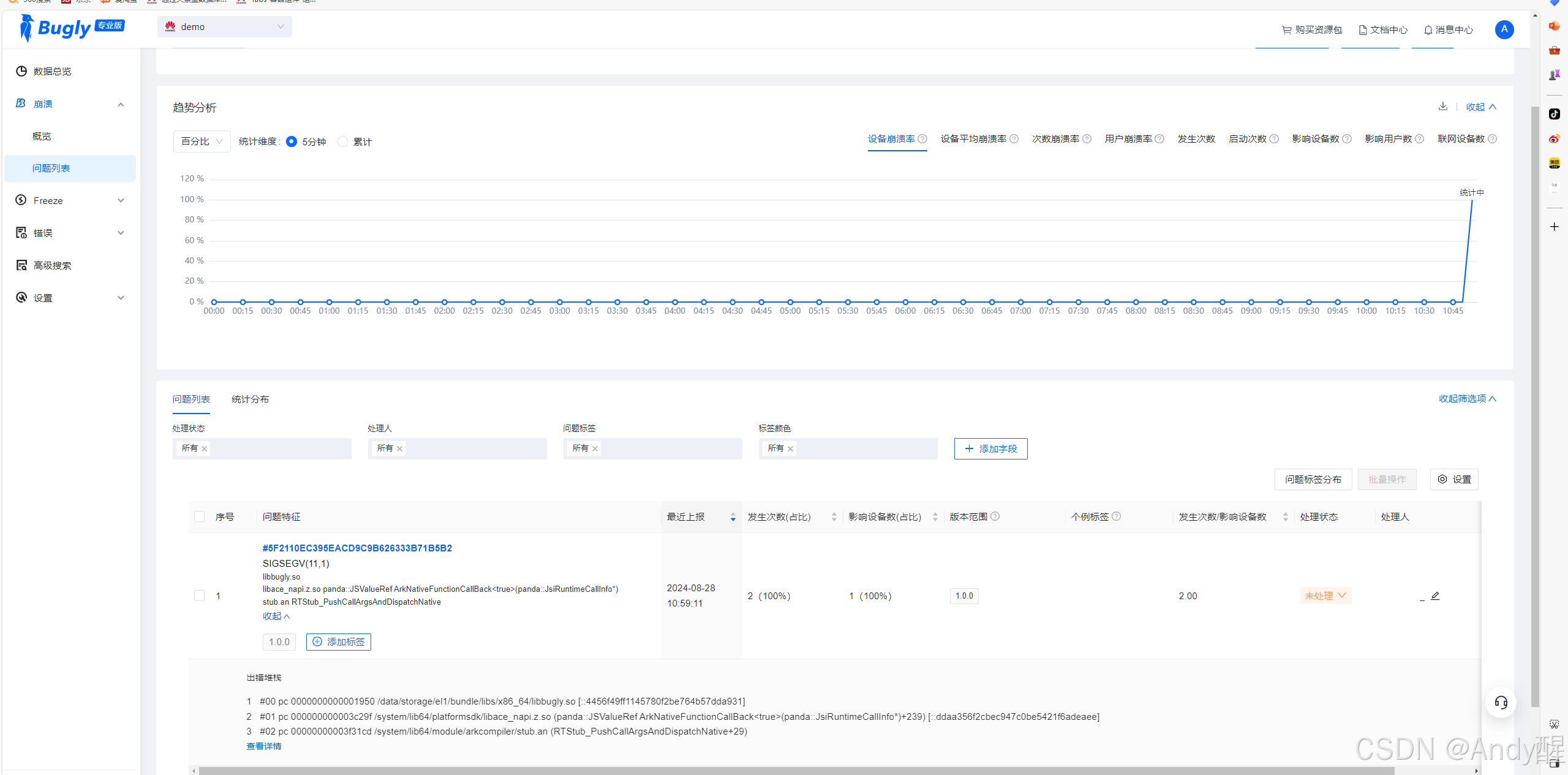

In later epochs the loss rises again, which means the updates are now making the model worse rather than better.
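The notes do not say why the loss rose. One common cause, offered here as an assumption rather than a diagnosis of the run above, is a learning rate that is too large, so each step overshoots the minimum. The sketch below shows the effect on a hypothetical one-parameter problem, minimizing f(w) = w^2 with plain SGD:

```python
import torch

def final_loss(lr, steps=20):
    """Minimize f(w) = w^2 from w = 1.0 with SGD and return the final loss."""
    w = torch.tensor([1.0], requires_grad=True)
    optimizer = torch.optim.SGD([w], lr=lr)
    for _ in range(steps):
        optimizer.zero_grad()
        loss = (w ** 2).sum()
        loss.backward()
        optimizer.step()
    return (w ** 2).sum().item()

small = final_loss(lr=0.1)  # each step multiplies w by 1 - 2*0.1 = 0.8, so w shrinks
large = final_loss(lr=1.1)  # each step multiplies w by 1 - 2*1.1 = -1.2, so |w| grows
print(small, large)
```

With the smaller learning rate the loss falls below its starting value of 1.0; with the larger one it grows at every step. If a real training run shows the same pattern, lowering `lr` is a reasonable first thing to try.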

3. Summary:

This section covered the basic use of optimizers.

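- To learn the detailed usage of each optimizer, you need some understanding of the algorithm behind it.

- Remember to train for multiple epochs.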