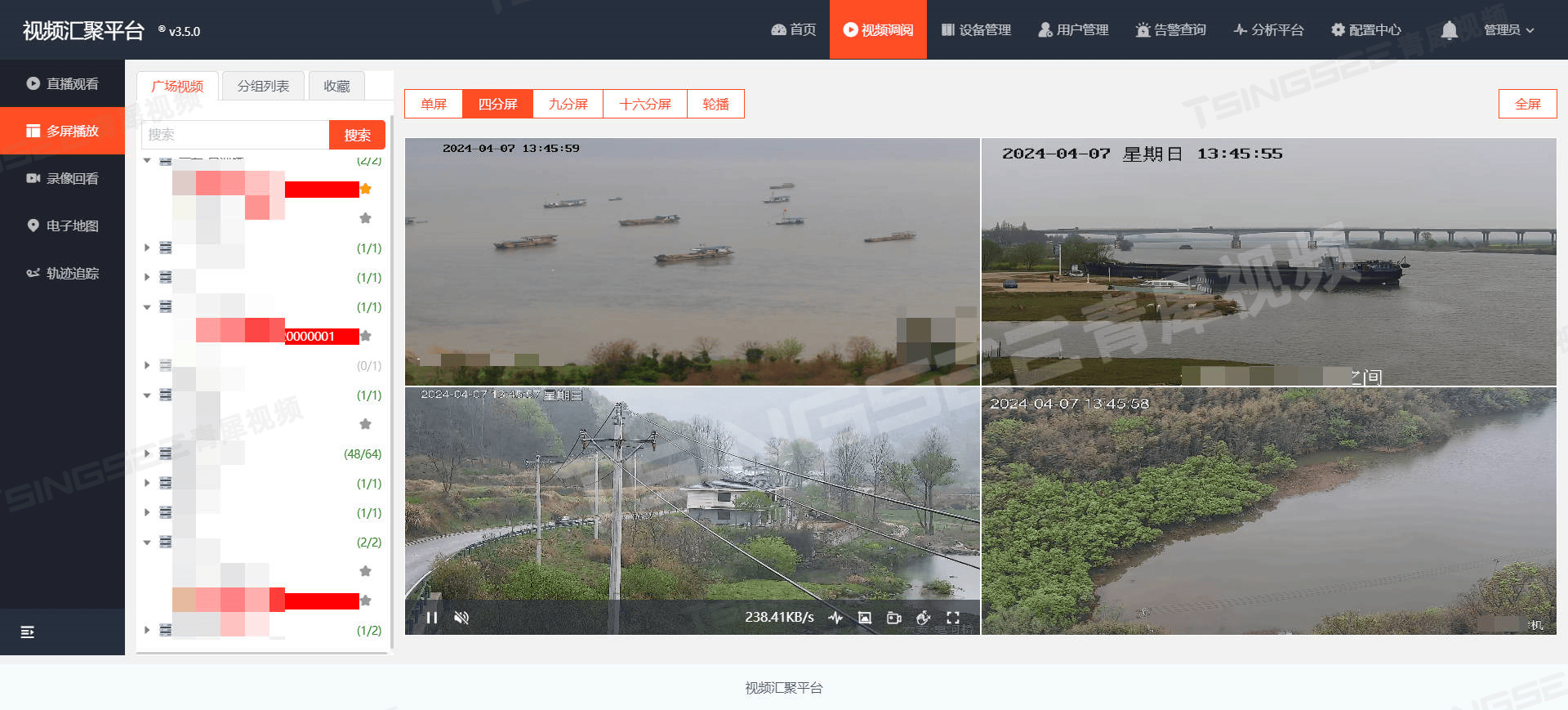

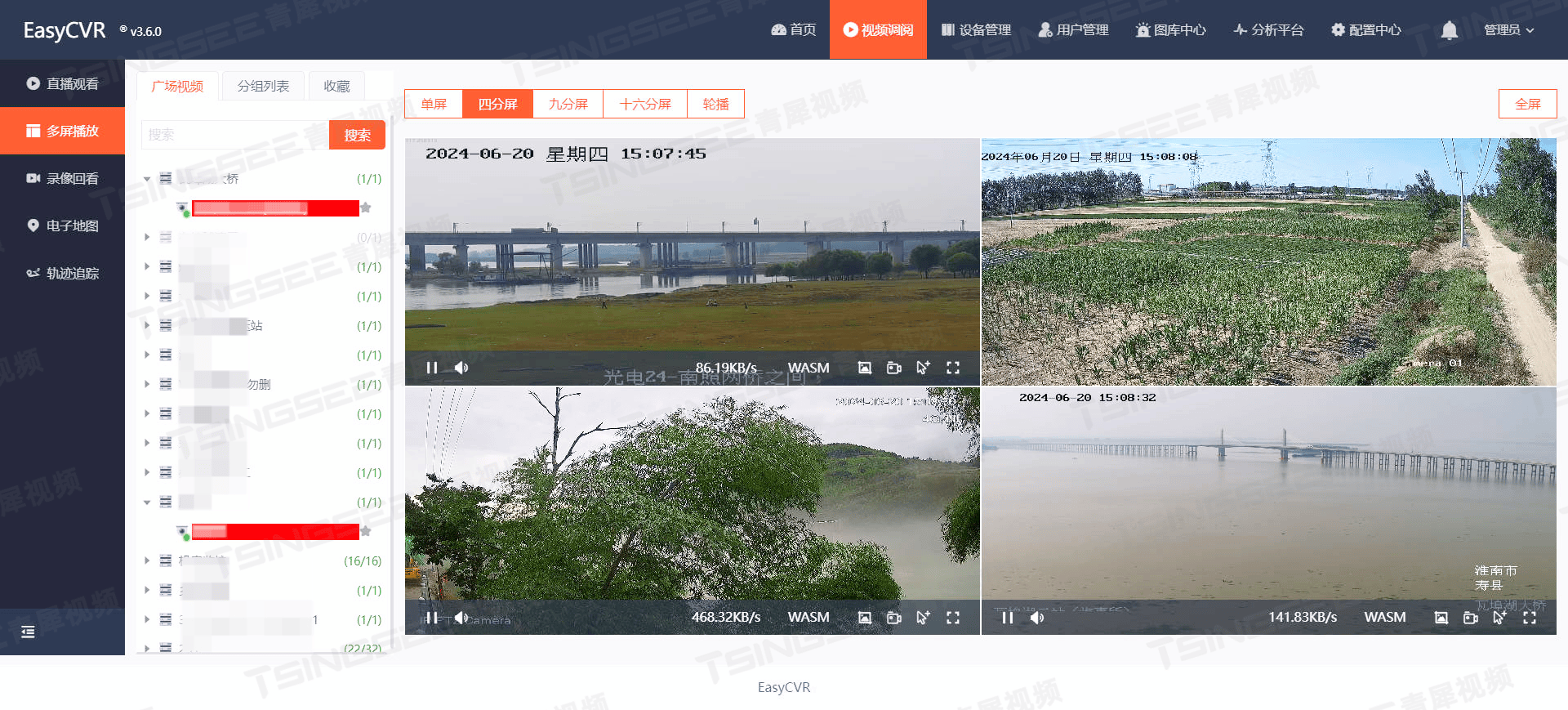

Refactoring the OpenCV Multi-Band Blending Source Code for Image Stitching

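Image stitching is a common problem in computer vision, and OpenCV provides a comprehensive set of algorithms for it. I used OpenCV 4.6.0 for image stitching; it ships the usual building blocks, including exposure compensation, optimal seam detection, and multi-band blending. In my tests the multi-band blending algorithm produced very good results. To understand it better, I refactored the multi-band blending code from the OpenCV source, keeping only the CPU code path, and share it here.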

Refactored source code

#ifndef STITCHINGBLENDER_H

#define STITCHINGBLENDER_H

#include "opencv2/opencv.hpp"

class KBlender

{

public:

virtual ~KBlender() {}

virtual void prepare(const std::vector<cv::Point> &corners, const std::vector<cv::Size> &sizes);

virtual void prepare(cv::Rect dst_roi);

virtual void feed(cv::InputArray img, cv::InputArray mask, cv::Point tl);

virtual void blend(cv::InputOutputArray dst,cv::InputOutputArray dst_mask);

protected:

cv::UMat dst_, dst_mask_;

cv::Rect dst_roi_;

};

class KMultiBandBlender : public KBlender

{

public:

KMultiBandBlender(int num_bands = 5, int weight_type = CV_32F);

int numBands() const { return actual_num_bands_; }

void setNumBands(int val) { actual_num_bands_ = val; }

void prepare(cv::Rect dst_roi) CV_OVERRIDE;

void feed(cv::InputArray img, cv::InputArray mask, cv::Point tl) CV_OVERRIDE;

void blend(cv::InputOutputArray dst, cv::InputOutputArray dst_mask) CV_OVERRIDE;

private:

int actual_num_bands_, num_bands_;

std::vector<cv::UMat> dst_pyr_laplace_;

std::vector<cv::UMat> dst_band_weights_;

cv::Rect dst_roi_final_;

int weight_type_; //CV_32F or CV_16S

};

//

// Auxiliary functions

void normalizeUsingWeightMap(cv::InputArray weight, CV_IN_OUT cv::InputOutputArray src);

void createLaplacePyr(cv::InputArray img, int num_levels, CV_IN_OUT std::vector<cv::UMat>& pyr);

void restoreImageFromLaplacePyr(CV_IN_OUT std::vector<cv::UMat>& pyr);

#endif // STITCHINGBLENDER_H

#include "stitchingblender.h"

#include <QDebug>

static const float WEIGHT_EPS = 1e-5f;

void KBlender::prepare(const std::vector<cv::Point> &corners, const std::vector<cv::Size> &sizes)

{

prepare(cv::detail::resultRoi(corners, sizes));

}

void KBlender::prepare(cv::Rect dst_roi)

{

dst_.create(dst_roi.size(), CV_16SC3);

dst_.setTo(cv::Scalar::all(0));

dst_mask_.create(dst_roi.size(), CV_8U);

dst_mask_.setTo(cv::Scalar::all(0));

dst_roi_ = dst_roi;

}

void KBlender::feed(cv::InputArray _img, cv::InputArray _mask, cv::Point tl)

{

cv::Mat img = _img.getMat();

cv::Mat mask = _mask.getMat();

cv::Mat dst = dst_.getMat(cv::ACCESS_RW);

cv::Mat dst_mask = dst_mask_.getMat(cv::ACCESS_RW);

CV_Assert(img.type() == CV_16SC3);

CV_Assert(mask.type() == CV_8U);

int dx = tl.x - dst_roi_.x;

int dy = tl.y - dst_roi_.y;

for (int y = 0; y < img.rows; ++y)

{

const cv::Point3_<short> *src_row = img.ptr<cv::Point3_<short> >(y);

cv::Point3_<short> *dst_row = dst.ptr<cv::Point3_<short> >(dy + y);

const uchar *mask_row = mask.ptr<uchar>(y);

uchar *dst_mask_row = dst_mask.ptr<uchar>(dy + y);

for (int x = 0; x < img.cols; ++x)

{

if (mask_row[x])

dst_row[dx + x] = src_row[x];

dst_mask_row[dx + x] |= mask_row[x];

}

}

}

void KBlender::blend(cv::InputOutputArray dst, cv::InputOutputArray dst_mask)

{

cv::UMat mask;

compare(dst_mask_, 0, mask, cv::CMP_EQ);

dst_.setTo(cv::Scalar::all(0), mask);

dst.assign(dst_);

dst_mask.assign(dst_mask_);

dst_.release();

dst_mask_.release();

}

KMultiBandBlender::KMultiBandBlender(int num_bands, int weight_type)

{

num_bands_ = 0;

setNumBands(num_bands);

CV_Assert(weight_type == CV_32F || weight_type == CV_16S);

weight_type_ = weight_type;

}

void KMultiBandBlender::prepare(cv::Rect dst_roi)

{

dst_roi_final_ = dst_roi;

// Crop unnecessary bands

double max_len = static_cast<double>(std::max(dst_roi.width, dst_roi.height));

num_bands_ = std::min(actual_num_bands_, static_cast<int>(ceil(std::log(max_len) / std::log(2.0))));

// Add border to the final image, to ensure sizes are divided by (1 << num_bands_)

dst_roi.width += ((1 << num_bands_) - dst_roi.width % (1 << num_bands_)) % (1 << num_bands_);

dst_roi.height += ((1 << num_bands_) - dst_roi.height % (1 << num_bands_)) % (1 << num_bands_);

KBlender::prepare(dst_roi);

dst_pyr_laplace_.resize(num_bands_ + 1);

dst_pyr_laplace_[0] = dst_;

dst_band_weights_.resize(num_bands_ + 1);

dst_band_weights_[0].create(dst_roi.size(), weight_type_);

dst_band_weights_[0].setTo(0);

for (int i = 1; i <= num_bands_; ++i)

{

dst_pyr_laplace_[i].create((dst_pyr_laplace_[i - 1].rows + 1) / 2,

(dst_pyr_laplace_[i - 1].cols + 1) / 2, CV_16SC3);

dst_band_weights_[i].create((dst_band_weights_[i - 1].rows + 1) / 2,

(dst_band_weights_[i - 1].cols + 1) / 2, weight_type_);

dst_pyr_laplace_[i].setTo(cv::Scalar::all(0));

dst_band_weights_[i].setTo(0);

}

}
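The two size calculations in `KMultiBandBlender::prepare` above can be tried in isolation. The following is a minimal sketch, not part of the refactored source; `effectiveNumBands` and `padToMultiple` are hypothetical helper names introduced only for illustration. The first caps the requested band count at the ceiling of log2 of the longest side, the second rounds a length up to the next multiple of `1 << num_bands` so that every pyramid level halves exactly.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper: cap the requested number of bands at
// ceil(log2(longest side)), as prepare() does for num_bands_.
int effectiveNumBands(int requested_bands, int width, int height)
{
    assert(width > 0 && height > 0);
    const double max_len = static_cast<double>(std::max(width, height));
    const int max_bands =
        static_cast<int>(std::ceil(std::log(max_len) / std::log(2.0)));
    return std::min(requested_bands, max_bands);
}

// Hypothetical helper: round len up to the next multiple of (1 << num_bands).
// A length that is already a multiple is returned unchanged.
int padToMultiple(int len, int num_bands)
{
    const int step = 1 << num_bands;
    return len + (step - len % step) % step;
}
```

For a 1000 x 700 canvas with five requested bands, the band count stays at 5 and the canvas is padded to 1024 x 704.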

void KMultiBandBlender::feed(cv::InputArray _img, cv::InputArray mask, cv::Point tl)

{

int64 t = cv::getTickCount();

cv::UMat img;

img = _img.getUMat();

CV_Assert(img.type() == CV_16SC3 || img.type() == CV_8UC3);

CV_Assert(mask.type() == CV_8U);

// Keep source image in memory with small border

int gap = 3 * (1 << num_bands_);

cv::Point tl_new(std::max(dst_roi_.x, tl.x - gap),

std::max(dst_roi_.y, tl.y - gap));

cv::Point br_new(std::min(dst_roi_.br().x, tl.x + img.cols + gap),

std::min(dst_roi_.br().y, tl.y + img.rows + gap));

// Ensure coordinates of top-left, bottom-right corners are divided by (1 << num_bands_).

// After that scale between layers is exactly 2.

//

// We do it to avoid interpolation problems when keeping sub-images only. There is no such problem when

// image is bordered to have size equal to the final image size, but this is too memory hungry approach.

tl_new.x = dst_roi_.x + (((tl_new.x - dst_roi_.x) >> num_bands_) << num_bands_);

tl_new.y = dst_roi_.y + (((tl_new.y - dst_roi_.y) >> num_bands_) << num_bands_);

int width = br_new.x - tl_new.x;

int height = br_new.y - tl_new.y;

width += ((1 << num_bands_) - width % (1 << num_bands_)) % (1 << num_bands_);

height += ((1 << num_bands_) - height % (1 << num_bands_)) % (1 << num_bands_);

br_new.x = tl_new.x + width;

br_new.y = tl_new.y + height;

int dy = std::max(br_new.y - dst_roi_.br().y, 0);

int dx = std::max(br_new.x - dst_roi_.br().x, 0);

tl_new.x -= dx; br_new.x -= dx;

tl_new.y -= dy; br_new.y -= dy;

int top = tl.y - tl_new.y;

int left = tl.x - tl_new.x;

int bottom = br_new.y - tl.y - img.rows;

int right = br_new.x - tl.x - img.cols;

// Create the source image Laplacian pyramid

cv::UMat img_with_border;

copyMakeBorder(_img, img_with_border, top, bottom, left, right,cv::BORDER_REFLECT);

qDebug() << " Add border to the source image, time: " << ((cv::getTickCount() - t) / cv::getTickFrequency())*1000 << " ms";

t = cv::getTickCount();

std::vector<cv::UMat> src_pyr_laplace;

createLaplacePyr(img_with_border, num_bands_, src_pyr_laplace);

qDebug() << " Create the source image Laplacian pyramid, time: " << ((cv::getTickCount() - t) / cv::getTickFrequency())*1000 << " ms";

t = cv::getTickCount();

// Create the weight map Gaussian pyramid

cv::UMat weight_map;

std::vector<cv::UMat> weight_pyr_gauss(num_bands_ + 1);

if (weight_type_ == CV_32F)

{

mask.getUMat().convertTo(weight_map, CV_32F, 1./255.);

}

else // weight_type_ == CV_16S

{

mask.getUMat().convertTo(weight_map, CV_16S);

cv::UMat add_mask;

compare(mask, 0, add_mask, cv::CMP_NE);

add(weight_map, cv::Scalar::all(1), weight_map, add_mask);

}

copyMakeBorder(weight_map, weight_pyr_gauss[0], top, bottom, left, right, cv::BORDER_CONSTANT);

for (int i = 0; i < num_bands_; ++i)

pyrDown(weight_pyr_gauss[i], weight_pyr_gauss[i + 1]);

qDebug() << " Create the weight map Gaussian pyramid, time: " << ((cv::getTickCount() - t) / cv::getTickFrequency())*1000 << " ms";

t = cv::getTickCount();

int y_tl = tl_new.y - dst_roi_.y;

int y_br = br_new.y - dst_roi_.y;

int x_tl = tl_new.x - dst_roi_.x;

int x_br = br_new.x - dst_roi_.x;

// Add weighted layer of the source image to the final Laplacian pyramid layer

for (int i = 0; i <= num_bands_; ++i)

{

cv::Rect rc(x_tl, y_tl, x_br - x_tl, y_br - y_tl);

{

cv::Mat _src_pyr_laplace = src_pyr_laplace[i].getMat(cv::ACCESS_READ);

cv::Mat _dst_pyr_laplace = dst_pyr_laplace_[i](rc).getMat(cv::ACCESS_RW);

cv::Mat _weight_pyr_gauss = weight_pyr_gauss[i].getMat(cv::ACCESS_READ);

cv::Mat _dst_band_weights = dst_band_weights_[i](rc).getMat(cv::ACCESS_RW);

if (weight_type_ == CV_32F)

{

for (int y = 0; y < rc.height; ++y)

{

const cv::Point3_<short>* src_row = _src_pyr_laplace.ptr<cv::Point3_<short> >(y);

cv::Point3_<short>* dst_row = _dst_pyr_laplace.ptr<cv::Point3_<short> >(y);

const float* weight_row = _weight_pyr_gauss.ptr<float>(y);

float* dst_weight_row = _dst_band_weights.ptr<float>(y);

for (int x = 0; x < rc.width; ++x)

{

dst_row[x].x += static_cast<short>(src_row[x].x * weight_row[x]);

dst_row[x].y += static_cast<short>(src_row[x].y * weight_row[x]);

dst_row[x].z += static_cast<short>(src_row[x].z * weight_row[x]);

dst_weight_row[x] += weight_row[x];

}

}

}

else // weight_type_ == CV_16S

{

for (int y = 0; y < y_br - y_tl; ++y)

{

const cv::Point3_<short>* src_row = _src_pyr_laplace.ptr<cv::Point3_<short> >(y);

cv::Point3_<short>* dst_row = _dst_pyr_laplace.ptr<cv::Point3_<short> >(y);

const short* weight_row = _weight_pyr_gauss.ptr<short>(y);

short* dst_weight_row = _dst_band_weights.ptr<short>(y);

for (int x = 0; x < x_br - x_tl; ++x)

{

dst_row[x].x += short((src_row[x].x * weight_row[x]) >> 8);

dst_row[x].y += short((src_row[x].y * weight_row[x]) >> 8);

dst_row[x].z += short((src_row[x].z * weight_row[x]) >> 8);

dst_weight_row[x] += weight_row[x];

}

}

}

}

x_tl /= 2; y_tl /= 2;

x_br /= 2; y_br /= 2;

}

qDebug() << " Add weighted layer of the source image to the final Laplacian pyramid layer, time: " << ((cv::getTickCount() - t) / cv::getTickFrequency())*1000 << " ms";

}
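The region bookkeeping at the start of `KMultiBandBlender::feed` above is easier to follow on a single axis. This sketch mirrors the same steps for one coordinate; the `Span` struct and the `alignSpan` function are hypothetical names, not part of OpenCV or of the refactored class. It assumes, as `prepare` arranges, that the destination length is itself a multiple of `1 << num_bands`.

```cpp
#include <algorithm>
#include <cassert>

// Half-open interval [begin, end) on one axis.
struct Span
{
    int begin;
    int end;
};

// Hypothetical one-axis version of the ROI alignment in feed().
// dst_begin/dst_end: destination extent; tl/len: position and size of the fed image.
Span alignSpan(int dst_begin, int dst_end, int tl, int len, int num_bands)
{
    assert(num_bands >= 0 && len > 0 && dst_end > dst_begin);
    const int step = 1 << num_bands;
    const int gap = 3 * step;  // small border kept around the source image

    // Extend by the gap, clamped to the destination.
    int begin = std::max(dst_begin, tl - gap);
    int end = std::min(dst_end, tl + len + gap);

    // Snap the start down to a multiple of step (relative to dst_begin).
    begin = dst_begin + (((begin - dst_begin) >> num_bands) << num_bands);

    // Round the length up to a multiple of step.
    int size = end - begin;
    size += (step - size % step) % step;
    end = begin + size;

    // Shift back if the end overshoots the destination.
    const int overshoot = std::max(end - dst_end, 0);
    return Span{begin - overshoot, end - overshoot};
}
```

With a destination of [0, 1024), an image at offset 500 of length 300, and five bands, the aligned span is [384, 896). That corresponds to a border of 116 pixels before the image and 96 after it, which are the values `feed` passes to `copyMakeBorder` as `top`/`bottom` or `left`/`right`.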

void KMultiBandBlender::blend(cv::InputOutputArray dst, cv::InputOutputArray dst_mask)

{

cv::Rect dst_rc(0, 0, dst_roi_final_.width, dst_roi_final_.height);

cv::UMat dst_band_weights_0;

for (int i = 0; i <= num_bands_; ++i)

normalizeUsingWeightMap(dst_band_weights_[i], dst_pyr_laplace_[i]);

restoreImageFromLaplacePyr(dst_pyr_laplace_);

dst_ = dst_pyr_laplace_[0](dst_rc);

dst_band_weights_0 = dst_band_weights_[0];

dst_pyr_laplace_.clear();

dst_band_weights_.clear();

compare(dst_band_weights_0(dst_rc), WEIGHT_EPS, dst_mask_, cv::CMP_GT);

KBlender::blend(dst, dst_mask);

}

//

// Auxiliary functions

void normalizeUsingWeightMap(cv::InputArray _weight, cv::InputOutputArray _src)

{

cv::Mat src;

cv::Mat weight;

src = _src.getMat();

weight = _weight.getMat();

CV_Assert(src.type() == CV_16SC3);

if (weight.type() == CV_32FC1)

{

for (int y = 0; y < src.rows; ++y)

{

cv::Point3_<short> *row = src.ptr<cv::Point3_<short> >(y);

const float *weight_row = weight.ptr<float>(y);

for (int x = 0; x < src.cols; ++x)

{

row[x].x = static_cast<short>(row[x].x / (weight_row[x] + WEIGHT_EPS));

row[x].y = static_cast<short>(row[x].y / (weight_row[x] + WEIGHT_EPS));

row[x].z = static_cast<short>(row[x].z / (weight_row[x] + WEIGHT_EPS));

}

}

}

else

{

CV_Assert(weight.type() == CV_16SC1);

for (int y = 0; y < src.rows; ++y)

{

const short *weight_row = weight.ptr<short>(y);

cv::Point3_<short> *row = src.ptr<cv::Point3_<short> >(y);

for (int x = 0; x < src.cols; ++x)

{

int w = weight_row[x] + 1;

row[x].x = static_cast<short>((row[x].x << 8) / w);

row[x].y = static_cast<short>((row[x].y << 8) / w);

row[x].z = static_cast<short>((row[x].z << 8) / w);

}

}

}

}
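To see what the two branches of `normalizeUsingWeightMap` above compute, here is a scalar sketch on a single channel value. The function names are hypothetical; the constants follow the listing (`WEIGHT_EPS` for float weights, 8 fractional bits plus a `+ 1` guard for `CV_16S` weights). Both casts truncate toward zero, so a result can land one below the exact quotient.

```cpp
#include <cassert>

// Float weights: divide the accumulated value by the accumulated weight.
short normalizeFloat(short accumulated, float weight_sum)
{
    const float kWeightEps = 1e-5f;  // same value as WEIGHT_EPS in the listing
    return static_cast<short>(accumulated / (weight_sum + kWeightEps));
}

// 16-bit weights: a weight of 256 stands for 1.0 (8 fractional bits).
short normalizeFixed(short accumulated, short weight_sum)
{
    assert(weight_sum >= 0);
    const int w = weight_sum + 1;  // guard against division by zero
    // Written as * 256 instead of << 8 because left-shifting a negative
    // value is not well defined before C++20.
    return static_cast<short>((accumulated * 256) / w);
}
```

For example, an accumulated value of 175 with a float weight sum of 1.0 normalizes to 174 rather than 175, because of the epsilon and the truncating cast. In the fixed-point branch, 200 with a weight sum of 256 becomes 199.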

void createLaplacePyr(cv::InputArray img, int num_levels, std::vector<cv::UMat> &pyr)

{

pyr.resize(num_levels + 1);

if(img.depth() == CV_8U)

{

if(num_levels == 0)

{

img.getUMat().convertTo(pyr[0], CV_16S);

return;

}

cv::UMat downNext;

cv::UMat current = img.getUMat();

pyrDown(img, downNext);

for(int i = 1; i < num_levels; ++i)

{

cv::UMat lvl_up;

cv::UMat lvl_down;

pyrDown(downNext, lvl_down);

pyrUp(downNext, lvl_up, current.size());

subtract(current, lvl_up, pyr[i-1], cv::noArray(), CV_16S);

current = downNext;

downNext = lvl_down;

}

{

cv::UMat lvl_up;

pyrUp(downNext, lvl_up, current.size());

subtract(current, lvl_up, pyr[num_levels-1], cv::noArray(), CV_16S);

downNext.convertTo(pyr[num_levels], CV_16S);

}

}

else

{

pyr[0] = img.getUMat();

for (int i = 0; i < num_levels; ++i)

pyrDown(pyr[i], pyr[i + 1]);

cv::UMat tmp;

for (int i = 0; i < num_levels; ++i)

{

pyrUp(pyr[i + 1], tmp, pyr[i].size());

subtract(pyr[i], tmp, pyr[i]);

}

}

}

void restoreImageFromLaplacePyr(std::vector<cv::UMat> &pyr)

{

if (pyr.empty())

return;

cv::UMat tmp;

for (size_t i = pyr.size() - 1; i > 0; --i)

{

pyrUp(pyr[i], tmp, pyr[i - 1].size());

add(tmp, pyr[i - 1], pyr[i - 1]);

}

}
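The relationship between `createLaplacePyr` and `restoreImageFromLaplacePyr` above can be checked on a one-dimensional signal. The sketch below is a simplification under stated assumptions: it replaces OpenCV's Gaussian `pyrDown`/`pyrUp` with pair averaging and sample repetition, and all names (`Signal`, `down1d`, `up1d`, `createLaplacePyr1d`, `restoreFromLaplacePyr1d`) are hypothetical. The structure is the same: each level stores the difference between a signal and the upsampled version of its downsampled copy, and restoring adds those differences back from coarse to fine.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Signal = std::vector<int>;

// Simplified stand-in for pyrDown: average neighbouring pairs.
Signal down1d(const Signal& s)
{
    assert(s.size() % 2 == 0);
    Signal out(s.size() / 2);
    for (std::size_t i = 0; i < out.size(); ++i)
        out[i] = (s[2 * i] + s[2 * i + 1]) / 2;
    return out;
}

// Simplified stand-in for pyrUp: repeat every sample twice.
Signal up1d(const Signal& s)
{
    Signal out(s.size() * 2);
    for (std::size_t i = 0; i < s.size(); ++i)
        out[2 * i] = out[2 * i + 1] = s[i];
    return out;
}

// Levels 0..num_levels-1 hold detail (signal minus upsampled coarse copy);
// the last level holds the coarsest signal itself.
std::vector<Signal> createLaplacePyr1d(const Signal& s, int num_levels)
{
    std::vector<Signal> pyr(num_levels + 1);
    Signal current = s;
    for (int i = 0; i < num_levels; ++i)
    {
        const Signal down = down1d(current);
        const Signal up = up1d(down);
        pyr[i].resize(current.size());
        for (std::size_t j = 0; j < current.size(); ++j)
            pyr[i][j] = current[j] - up[j];
        current = down;
    }
    pyr[num_levels] = current;
    return pyr;
}

// Collapse the pyramid from the coarsest level back to level 0.
Signal restoreFromLaplacePyr1d(std::vector<Signal> pyr)
{
    if (pyr.empty())
        return Signal();
    for (std::size_t i = pyr.size() - 1; i > 0; --i)
    {
        const Signal up = up1d(pyr[i]);
        for (std::size_t j = 0; j < up.size(); ++j)
            pyr[i - 1][j] += up[j];
    }
    return pyr[0];
}
```

Because every detail level is defined relative to the same upsampling used during restoration, collapsing an unmodified pyramid returns the input exactly. The signal length must be divisible by `1 << num_levels`, which is the same reason the blender pads its regions.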

Test code

cv::Mat img0 = cv::imread("E:/test/google_satellite_0000.bmp",cv::IMREAD_COLOR);

cv::Mat img1 = cv::imread("E:/test/google_satellite_0001.bmp",cv::IMREAD_COLOR);

cv::Mat mask0 = cv::Mat_<uchar>(img0.size(),255);

cv::Mat mask1 = cv::Mat_<uchar>(img1.size(),255);

std::vector<cv::UMat> imgs_warped;

std::vector<cv::UMat> masks_warped;

std::vector<cv::Point> corners_warped;

std::vector<cv::Size> sizes_warped;

imgs_warped.push_back(img0.getUMat(cv::ACCESS_READ));

imgs_warped.push_back(img1.getUMat(cv::ACCESS_READ));

masks_warped.push_back(mask0.getUMat(cv::ACCESS_READ));

masks_warped.push_back(mask1.getUMat(cv::ACCESS_READ));

corners_warped.push_back(cv::Point(0,0));

corners_warped.push_back(cv::Point(0,img0.rows/2)); // Assume img0 and img1 overlap vertically by one half. Note: in a real stitching pipeline this offset should already be known.

sizes_warped.push_back(img0.size());

sizes_warped.push_back(img1.size());

std::vector<cv::UMat> imgs_warped_f(imgs_warped.size());

for (unsigned int i = 0; i < imgs_warped.size(); ++i)

imgs_warped[i].convertTo(imgs_warped_f[i], CV_32F);

// OpenCV optimal seam detection

cv::Ptr<cv::detail::SeamFinder> seam_finder;

seam_finder = cv::makePtr<cv::detail::DpSeamFinder>(cv::detail::DpSeamFinder::COLOR);

seam_finder->find(imgs_warped_f, corners_warped, masks_warped);

// Multi-band blending

cv::Ptr<KBlender> blender = cv::makePtr<KMultiBandBlender>();

KMultiBandBlender* mblender = dynamic_cast<KMultiBandBlender*>(blender.get());

mblender->setNumBands(5);

blender->prepare(corners_warped, sizes_warped);

for(unsigned int i=0;i<imgs_warped.size();i++)

{

cv::Mat img_warped_s;

imgs_warped[i].convertTo(img_warped_s, CV_16S);

cv::Mat mask_warped = masks_warped[i].getMat(cv::ACCESS_READ);

cv::Mat dilated_mask;

cv::dilate(mask_warped, dilated_mask, cv::Mat());

mask_warped = dilated_mask & mask_warped; // For a 0/255 mask, dilation only adds pixels, so this AND leaves mask_warped unchanged

blender->feed(img_warped_s, mask_warped, corners_warped[i]);

}

cv::Mat result,result_mask;

blender->blend(result, result_mask);

result.convertTo(result,CV_8U);

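Results

Source image, top (img0)

Source image, bottom (img1)

Optimal seam detection result: mask for the top image

Optimal seam detection result: mask for the bottom image

Stitched result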