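Table of Contents

- I. Environment preparation
- II. Run on all nodes
  - 1. Extract the installation package
  - 2. Create the /etc/modules-load.d/containerd.conf configuration file
  - 3. Load the modules so the configuration takes effect
  - 4. Create the /etc/sysctl.d/99-kubernetes-cri.conf configuration file
  - 5. Load the IPVS kernel modules (kernel 4.19 or later)
  - 6. Make the script executable and run it
  - 7. Disable swap, now and permanently
  - 8. Disable the firewall and SELinux
  - 9. Configure /etc/hosts
  - 10. Install containerd and the CNI plugins (master and worker nodes)
  - 11. Install kubectl, kubeadm and kubelet
  - 12. Start kubelet
  - 13. Import the images
- III. Run on the master node
  - 1. kubeadm init
  - 2. Set up the kubeconfig
- IV. Run on the worker nodes
  - 1. Join the other nodes
- V. Run on the master node
  - 1. Deploy the network plugin
- VI. Verify the installation
- VII. Install Kuboard
  - 1. Label the node
  - 2. Edit kuboard-v3.yaml
  - 3. Deploy
  - 4. Log in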

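I. Environment preparation

Three virtual machines (one master, two workers) and the offline installation package (contact me if you need the offline package).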

II. Run on all nodes

1. Extract the installation package

tar -zxvf pkg20240805.tar.gz

2. Create the /etc/modules-load.d/containerd.conf configuration file (systemd loads the listed modules at every boot)

cat << EOF > /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

3. Load the modules now so the configuration takes effect without a reboot

modprobe overlay

modprobe br_netfilter
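To confirm that steps 2 and 3 worked, you can compare the loaded modules against the list you expect. The helper below is a hypothetical sketch (the name `missing_modules` is mine, not part of the package). It reads `lsmod`-style text on stdin and prints any requested module that is absent. Caveat: a module compiled directly into the kernel never shows up in `lsmod`, so an entry reported as missing may still be available.

```shell
# missing_modules MODULE...
# Reads lsmod output (or /proc/modules) on stdin and prints every requested
# module name that does not appear in the first column.
# Returns 1 if at least one module is missing, 0 otherwise.
missing_modules() (
    loaded=$(awk '{print $1}')
    status=0
    for mod in "$@"; do
        if ! printf '%s\n' "$loaded" | grep -qx -- "$mod"; then
            echo "$mod"
            status=1
        fi
    done
    exit "$status"
)
```

Usage: `missing_modules overlay br_netfilter < /proc/modules && echo "all modules loaded"`.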

4. Create the /etc/sysctl.d/99-kubernetes-cri.conf configuration file

# Create the file (the net.bridge.* keys only exist once br_netfilter is loaded, see step 3)
cat << EOF > /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
user.max_user_namespaces=28633
EOF

# Apply the settings
sysctl -p /etc/sysctl.d/99-kubernetes-cri.conf
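A quick way to check that every value in the file is actually active is to compare it with what `sysctl -n` reports. This is a minimal sketch under my own naming (`conf_value` and `check_sysctl_file` are hypothetical helpers). It assumes the file only contains `key = value` or `key=value` lines, plus comments starting with `#` or `;`.

```shell
# conf_value FILE KEY
# Prints the value assigned to KEY in a sysctl-style file. Comment lines
# are skipped and, as with sysctl -p, the last assignment wins.
# Returns 1 if KEY is not set in FILE.
conf_value() {
    awk -v key="$2" '
        /^[ \t]*[#;]/ { next }
        {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { val = v; found = 1 }
        }
        END { if (found) print val; else exit 1 }
    ' "$1"
}

# check_sysctl_file FILE
# Compares each key in FILE with the running kernel value and prints the
# keys that differ (or do not exist). Prints nothing when all match.
check_sysctl_file() {
    grep -Ev '^[[:space:]]*([#;]|$)' "$1" | cut -d= -f1 | tr -d ' \t' |
    while read -r key; do
        want=$(conf_value "$1" "$key")
        have=$(sysctl -n "$key" 2>/dev/null)
        [ "$want" = "$have" ] || echo "$key: expected $want, got ${have:-<missing>}"
    done
}
```

Usage: `check_sysctl_file /etc/sysctl.d/99-kubernetes-cri.conf` should print nothing once the settings are active.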

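5. Load the IPVS kernel modules (kernel 4.19 or later)

# On kernel 4.19 and later the conntrack module is nf_conntrack; older kernels use nf_conntrack_ipv4 instead
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF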

6. Make the script executable, run it, and check that the modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
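The "4.19 or later" in the step 5 heading matters because upstream Linux 4.19 merged `nf_conntrack_ipv4` into `nf_conntrack`, so `modprobe -- nf_conntrack_ipv4` fails on newer kernels. The sketch below picks the name from the kernel release string. The function name `conntrack_module` is hypothetical. It only encodes the upstream rule: some distribution kernels (RHEL 8's 4.18, for example) backport the change, so treat the result as a default rather than a guarantee.

```shell
# conntrack_module [KERNEL_RELEASE]
# Prints nf_conntrack for kernel 4.19 and later, nf_conntrack_ipv4 for older
# kernels (upstream rule only). Defaults to the running kernel (uname -r).
conntrack_module() (
    release=${1:-$(uname -r)}
    # "3.10.0-1160.el7.x86_64" -> major 3, minor 10
    major=$(printf '%s\n' "$release" | cut -d. -f1)
    minor=$(printf '%s\n' "$release" | cut -d. -f2 | sed 's/[^0-9].*//')
    if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
)
```

Usage: `modprobe -- "$(conntrack_module)"`.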

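7. Disable swap, now and permanently

# Turn swap off for the running system
swapoff -a
# Comment out the swap entries in /etc/fstab so swap stays off after a reboot
sed -i "s/^[^#].*swap/#&/" /etc/fstab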
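Both halves of step 7 can be checked. `/proc/swaps` always starts with a header line, so any further line means a swap area is still active. An uncommented `/etc/fstab` entry whose filesystem type (third field) is `swap` means swap would come back after a reboot. Both function names below are hypothetical helpers for this sketch.

```shell
# swap_is_off [SWAPS_FILE]
# Succeeds when no swap area is active: /proc/swaps has only its header line.
swap_is_off() {
    awk 'END { exit !(NR <= 1) }' "${1:-/proc/swaps}"
}

# active_fstab_swap [FSTAB]
# Prints uncommented fstab entries whose filesystem type (3rd field) is swap.
active_fstab_swap() {
    awk '$1 !~ /^#/ && $3 == "swap"' "${1:-/etc/fstab}"
}
```

Usage: `swap_is_off && [ -z "$(active_fstab_swap)" ] && echo "swap is disabled"`.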

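8. Disable the firewall and SELinux

systemctl disable firewalld && systemctl stop firewalld

# setenforce 0 switches SELinux to permissive for the current boot; the change in /etc/selinux/config disables it from the next boot on
sed -i 's/enforcing/disabled/g' /etc/selinux/config && setenforce 0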
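Because `setenforce 0` only affects the running system, it helps to check the configured mode separately. The sketch below reads the `SELINUX=` value that will apply at the next boot. The function name is a hypothetical helper, and it assumes the standard `/etc/selinux/config` layout.

```shell
# selinux_config_mode [CONFIG]
# Prints the SELINUX= value from the SELinux config file (the mode used at
# the next boot). SELINUXTYPE= and commented lines are not matched.
selinux_config_mode() {
    sed -n 's/^[[:space:]]*SELINUX=[[:space:]]*//p' "${1:-/etc/selinux/config}" | tail -n 1
}
```

Usage: `selinux_config_mode` should print `disabled`, `getenforce` should print `Permissive` until the next reboot, and `systemctl is-active firewalld` should print `inactive`.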

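9. Configure /etc/hosts

# Set the hostname (choose your own names; run only the matching command on each machine;
# "&& bash" starts a new shell so the prompt shows the new name)
# On the master node:
hostnamectl set-hostname master-11 && bash
# On the worker nodes, one command per node:
hostnamectl set-hostname node-12 && bash
hostnamectl set-hostname node-13 && bash

# Add all nodes to the hosts file (on every node)
cat >> /etc/hosts << EOF
192.168.56.11 master-11
192.168.56.12 node-12
192.168.56.13 node-13
EOF

# Afterwards, ping the nodes from one another to make sure they can all reach each other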
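The ping check can be scripted so it runs the same way on every node. This is a sketch with hypothetical helper names. `missing_hosts` first confirms that each name is listed in the hosts file, and `ping_all` then sends one ping per node. It assumes the iputils `ping`, where `-W` is the reply timeout in seconds.

```shell
# missing_hosts HOSTS_FILE NAME...
# Prints each NAME that is not listed as a hostname on an uncommented line
# of HOSTS_FILE. Returns 1 if any name is missing.
missing_hosts() (
    file=$1; shift
    rc=0
    for name in "$@"; do
        if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                               END { exit !found }' "$file"; then
            echo "$name"
            rc=1
        fi
    done
    exit "$rc"
)

# ping_all NAME...
# Sends one ping to each node and reports the ones that do not answer.
ping_all() {
    for name in "$@"; do
        ping -c 1 -W 2 "$name" >/dev/null 2>&1 || echo "unreachable: $name"
    done
}
```

Usage: `missing_hosts /etc/hosts master-11 node-12 node-13 && ping_all master-11 node-12 node-13`.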

10. Install containerd and the CNI plugins (run on both the master and the worker nodes)

# Change into the directory the package was extracted to
cd pkg/runc

# Extract directly into the root directory
tar zxvf cri-containerd-1.6.25-linux-amd64.tar.gz -C /

# Generate the default configuration file
mkdir -p /etc/containerd && containerd config default > /etc/containerd/config.toml

# Adjust the defaults: use the systemd cgroup driver, and point the pause (sandbox) image at the Aliyun mirror, version 3.9
sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml
sed -i 's#k8s.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g' /etc/containerd/config.toml
sed -i 's#registry.gcr.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g' /etc/containerd/config.toml
sed -i 's#registry.k8s.io/pause#registry.cn-hangzhou.aliyuncs.com/google_containers/pause#g' /etc/containerd/config.toml
sed -i 's/pause:3.6/pause:3.9/g' /etc/containerd/config.toml

# Start containerd
systemctl daemon-reload
systemctl start containerd
systemctl enable containerd
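Since the `sed` edits silently do nothing if a pattern does not match, it is worth confirming both changes landed in `config.toml`. The sketch below checks the `SystemdCgroup` flag and the `sandbox_image` line that containerd 1.6's default CRI configuration contains. The function name is a hypothetical helper. If you fix the file afterwards, restart containerd (`systemctl restart containerd`) so it picks up the change.

```shell
# check_containerd_config [CONFIG]
# Verifies the edits above: systemd cgroup driver enabled and the sandbox
# (pause) image set to version 3.9. Prints each problem and returns non-zero.
check_containerd_config() (
    cfg=${1:-/etc/containerd/config.toml}
    rc=0
    grep -q 'SystemdCgroup = true' "$cfg" ||
        { echo "SystemdCgroup is not true"; rc=1; }
    grep -q '^[[:space:]]*sandbox_image = ".*pause:3\.9"' "$cfg" ||
        { echo "sandbox_image is not pause:3.9"; rc=1; }
    exit "$rc"
)
```

Usage: `check_containerd_config && systemctl is-active containerd`.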

# Install the CNI plugins
mkdir -p /opt/cni/bin
tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

# Update libseccomp
# Check whether it is installed; the version must be newer than 2.3.1
rpm -qa | grep libseccomp
rpm -e --nodeps libseccomp-2.3.1-4.el7.x86_64
rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm

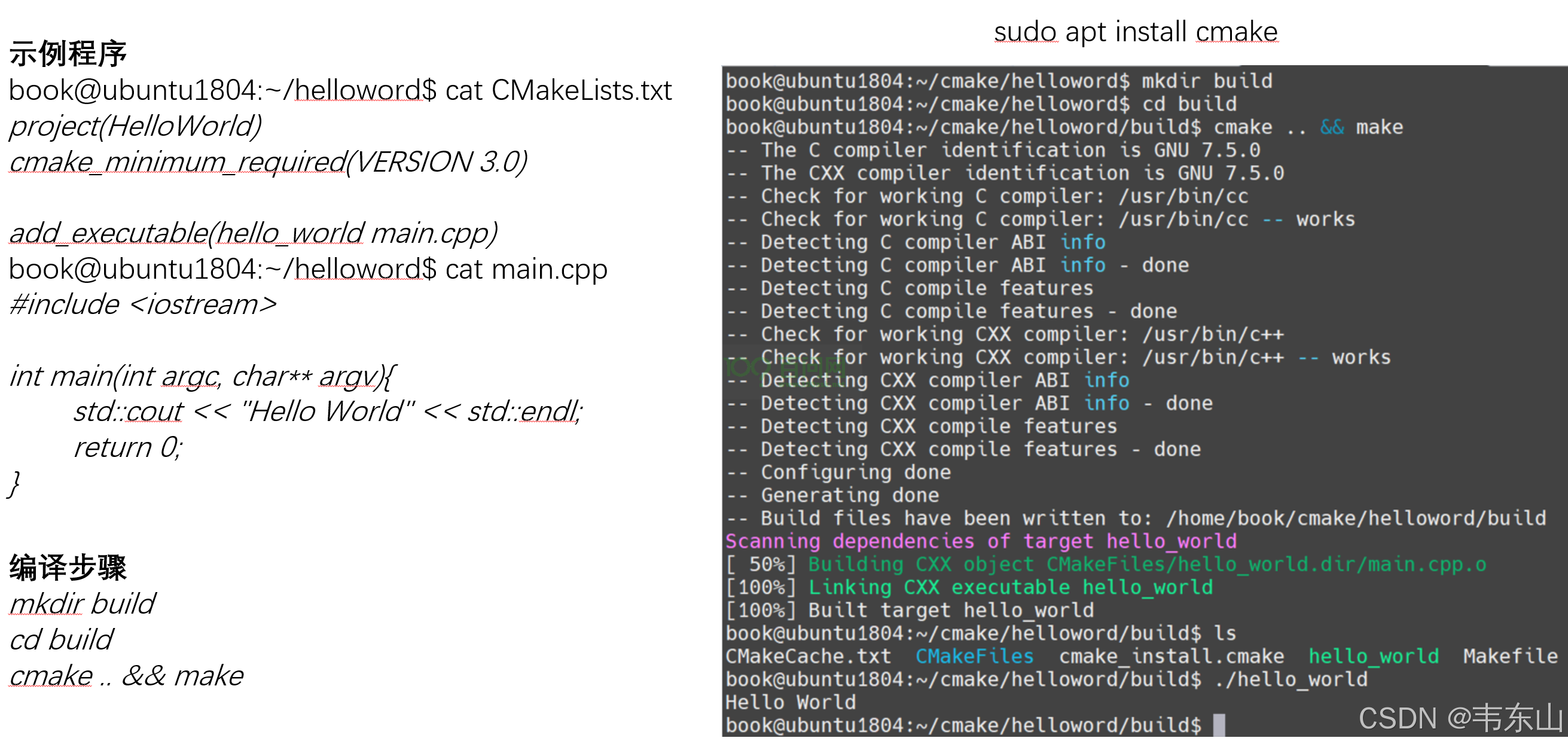
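The "newer than 2.3.1" check can be done without eyeballing the `rpm` output. This sketch compares versions with GNU `sort -V`, so it assumes coreutils. Both function names are hypothetical helpers.

```shell
# version_gt A B
# Succeeds when version A is strictly greater than version B
# (version-aware ordering from GNU sort -V, so 2.10.0 > 2.3.1).
version_gt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# installed_libseccomp_version
# Prints the installed libseccomp version (for example 2.5.1) as reported by rpm.
installed_libseccomp_version() {
    rpm -q --qf '%{VERSION}\n' libseccomp
}
```

Usage: `version_gt "$(installed_libseccomp_version)" 2.3.1 && echo "libseccomp is new enough"`.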

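11. Install kubectl, kubeadm and kubelet

# Mind the working directory: the pkg/... paths are relative to the directory the package was extracted to (step 10 left you in pkg/runc, so go back up first)
cd pkg/kubeadm && yum localinstall *.rpm -y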

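12. Start kubelet

# Until kubeadm init (or kubeadm join) writes its configuration, kubelet keeps restarting every few seconds; this is expected
systemctl enable kubelet && systemctl start kubelet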

13. Import the images (again from the directory the package was extracted to)

cd pkg/images && sh images_import.sh
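The contents of `images_import.sh` are not shown here, so the following is only a hypothetical sketch of what such a script typically does: import every `.tar` archive into containerd's `k8s.io` namespace, which is the namespace the CRI plugin (and therefore the kubelet) uses. The function name and the `CTR` override variable are my own assumptions.

```shell
# import_images [DIR]
# Hypothetical equivalent of images_import.sh: imports every .tar archive in
# DIR into the k8s.io containerd namespace. Set CTR to override the ctr binary.
import_images() (
    dir=${1:-.}
    ctr_bin=${CTR:-ctr}
    found=0
    for archive in "$dir"/*.tar; do
        [ -e "$archive" ] || continue   # glob matched nothing
        found=1
        echo "importing $archive"
        $ctr_bin -n k8s.io images import "$archive" || exit 1
    done
    [ "$found" -eq 1 ] || { echo "no .tar archives in $dir" >&2; exit 1; }
)
```

Afterwards, `ctr -n k8s.io images ls` (or `crictl images`) should list the imported images.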

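III. Run on the master node

1. kubeadm init

# --kubernetes-version: the Kubernetes version to install
# --apiserver-advertise-address: IP address of the master node
# --service-cidr: IP range for Service virtual IPs
# --pod-network-cidr: IP range for Pods. It is optional, but it should match the pool used by the network plugin and must not overlap the node network (192.168.56.0/24 here)
kubeadm init --apiserver-advertise-address=192.168.56.11 --kubernetes-version v1.28.1 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

# Note: to re-initialize the cluster, run kubeadm reset first and then repeat the command above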

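2. Set up the kubeconfig (copy the admin credentials so kubectl can reach the cluster)

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# kubeadm init ends by printing a join command like the one below; save it for joining the worker nodes later
# (the token and hash here come from the author's cluster; use the ones printed by your own kubeadm init)
kubeadm join 192.168.56.11:6443 --token 4ors48.0ph175l15lqw5bez \
    --discovery-token-ca-cert-hash sha256:ac3ecc79034c8d7ed3240f3d6a354441a84a496d51f4705da98c30c58c2a4ecc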
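If you saved the `kubeadm init` output to a file (for example with `kubeadm init ... | tee kubeadm-init.log`), the join command can be pulled out automatically instead of copied by hand. The helper name and log file name are hypothetical. The sketch joins the backslash-continued lines into one command. Bootstrap tokens expire (24 hours by default), so if the saved token no longer works, `kubeadm token create --print-join-command` on the master prints a fresh join command.

```shell
# extract_join_cmd LOGFILE
# Prints the first "kubeadm join ..." command found in a saved copy of the
# kubeadm init output, with backslash-continued lines joined into one line.
extract_join_cmd() {
    awk '
        /^[ \t]*kubeadm join / { grab = 1 }
        grab {
            line = $0
            sub(/^[ \t]+/, "", line)
            cont = sub(/[ \t]*\\$/, "", line)   # 1 if the line ended with a backslash
            cmd = (cmd == "" ? line : cmd " " line)
            if (!cont) { print cmd; exit }
        }
    ' "$1"
}
```

Usage: `extract_join_cmd kubeadm-init.log > join.sh`, then copy `join.sh` to each worker node and run it there with `sh join.sh`.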

IV. Run on the worker nodes

1. Join the other nodes to the cluster

kubeadm join 192.168.56.11:6443 --token 4ors48.0ph175l15lqw5bez \
    --discovery-token-ca-cert-hash sha256:ac3ecc79034c8d7ed3240f3d6a354441a84a496d51f4705da98c30c58c2a4ecc

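V. Run on the master node

1. Deploy the network plugin

# Make sure every Pod is Running before installing the network plugin; the two coredns Pods are the exception, they stay Pending until a network plugin is installed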

[root@master-11 images]# kubectl get pods -n kube-system
NAME                                READY   STATUS    RESTARTS   AGE
coredns-6554b8b87f-p8vp9            0/1     Pending   0          4m11s
coredns-6554b8b87f-wdmgn            0/1     Pending   0          4m11s
etcd-master-11                      1/1     Running   0          4m23s
kube-apiserver-master-11            1/1     Running   0          4m23s
kube-controller-manager-master-11   1/1     Running   0          4m23s
kube-proxy-9s57h                    1/1     Running   0          2m11s
kube-proxy-ksqm6                    1/1     Running   0          4m11s
kube-proxy-q5dxn                    1/1     Running   0          2m19s
kube-scheduler-master-11            1/1     Running   0          4m23s

# Run from the directory the package was extracted to
cd pkg/calico && kubectl apply -f calico.yaml
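The "everything Running except coredns" pre-check before applying calico.yaml can be scripted by filtering the `kubectl get pods` output. This is a hypothetical helper for the sketch. It applies exactly the criterion stated above (STATUS column is `Running`, coredns ignored) and does not look at the READY column.

```shell
# pods_not_ready_except_coredns
# Reads "kubectl get pods" output on stdin and prints pods whose STATUS is
# not Running, ignoring coredns (Pending until a network plugin exists).
# Returns non-zero if any such pod is found.
pods_not_ready_except_coredns() {
    awk 'NR > 1 && $1 !~ /^coredns-/ && $3 != "Running" { print $1; bad = 1 }
         END { exit bad }'
}
```

Usage: `kubectl get pods -n kube-system | pods_not_ready_except_coredns && kubectl apply -f calico.yaml`.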

VI. Verify the installation

[root@master-11 calico]# kubectl get pods -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-7c968b5878-v8djq   1/1     Running   0          2m51s
calico-node-6vxq8                          1/1     Running   0          2m51s
calico-node-rc4z8                          1/1     Running   0          2m51s
calico-node-sx2z7                          1/1     Running   0          2m51s
coredns-6554b8b87f-p8vp9                   1/1     Running   0          7m18s
coredns-6554b8b87f-wdmgn                   1/1     Running   0          7m18s
etcd-master-11                             1/1     Running   0          7m30s
kube-apiserver-master-11                   1/1     Running   0          7m30s
kube-controller-manager-master-11          1/1     Running   0          7m30s
kube-proxy-9s57h                           1/1     Running   0          5m18s
kube-proxy-ksqm6                           1/1     Running   0          7m18s
kube-proxy-q5dxn                           1/1     Running   0          5m26s
kube-scheduler-master-11                   1/1     Running   0          7m30s

[root@master-11 calico]# kubectl get nodes
NAME        STATUS   ROLES           AGE     VERSION
master-11   Ready    control-plane   7m56s   v1.28.1
node-12     Ready    <none>          5m49s   v1.28.1
node-13     Ready    <none>          5m40s   v1.28.1
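To make the node check scriptable, the sketch below flags any node whose STATUS column is not exactly `Ready`. The helper name is hypothetical. Alternatively, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node reports Ready, or fails after the timeout.

```shell
# nodes_not_ready
# Reads "kubectl get nodes" output on stdin and prints every node whose
# STATUS is not exactly "Ready" (e.g. NotReady, Ready,SchedulingDisabled).
# Returns non-zero if any such node is found.
nodes_not_ready() {
    awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get nodes | nodes_not_ready && echo "all nodes Ready"`.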

VII. Install Kuboard

Official documentation: https://www.kuboard.cn/install/v3/install-in-k8s.html#%E5%AE%89%E8%A3%85

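1. Label the node

# Only the master node needs this label; k8s.kuboard.cn/role=etcd is one of the labels the kuboard-etcd DaemonSet's node affinity accepts
kubectl label nodes master-11 k8s.kuboard.cn/role=etcd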

2. Edit kuboard-v3.yaml (create it with the following content)

---
apiVersion: v1
kind: Namespace
metadata:
  name: kuboard

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kuboard-v3-config
  namespace: kuboard
data:
  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-built-in.html
  # [common]
  KUBOARD_SERVER_NODE_PORT: '30080'
  KUBOARD_AGENT_SERVER_UDP_PORT: '30081'
  KUBOARD_AGENT_SERVER_TCP_PORT: '30081'
  KUBOARD_SERVER_LOGRUS_LEVEL: info  # error / debug / trace
  # KUBOARD_AGENT_KEY is the secret the Agent uses to communicate with Kuboard. Change it to any 32-character string of letters and digits; after changing it, the Kuboard Agent must be deleted and imported again.
  KUBOARD_AGENT_KEY: 32b7d6572c6255211b4eec9009e4a816
  KUBOARD_AGENT_IMAG: registry.cn-hangzhou.aliyuncs.com/cxfpublic/kuboard-agent
  KUBOARD_QUESTDB_IMAGE: registry.cn-hangzhou.aliyuncs.com/cxfpublic/questdb:6.0.5
  KUBOARD_DISABLE_AUDIT: 'false' # To disable Kuboard auditing, set this to 'true'; the quotes are required.

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-gitlab.html
  # [gitlab login]
  # KUBOARD_LOGIN_TYPE: "gitlab"
  # KUBOARD_ROOT_USER: "your-user-name-in-gitlab"
  # GITLAB_BASE_URL: "http://gitlab.mycompany.com"
  # GITLAB_APPLICATION_ID: "7c10882aa46810a0402d17c66103894ac5e43d6130b81c17f7f2d8ae182040b5"
  # GITLAB_CLIENT_SECRET: "77c149bd3a4b6870bffa1a1afaf37cba28a1817f4cf518699065f5a8fe958889"

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-github.html
  # [github login]
  # KUBOARD_LOGIN_TYPE: "github"
  # KUBOARD_ROOT_USER: "your-user-name-in-github"
  # GITHUB_CLIENT_ID: "17577d45e4de7dad88e0"
  # GITHUB_CLIENT_SECRET: "ff738553a8c7e9ad39569c8d02c1d85ec19115a7"

  # For an explanation of the parameters below, see https://kuboard.cn/install/v3/install-ldap.html
  # [ldap login]
  # KUBOARD_LOGIN_TYPE: "ldap"
  # KUBOARD_ROOT_USER: "your-user-name-in-ldap"
  # LDAP_HOST: "ldap-ip-address:389"
  # LDAP_BIND_DN: "cn=admin,dc=example,dc=org"
  # LDAP_BIND_PASSWORD: "admin"
  # LDAP_BASE_DN: "dc=example,dc=org"
  # LDAP_FILTER: "(objectClass=posixAccount)"
  # LDAP_ID_ATTRIBUTE: "uid"
  # LDAP_USER_NAME_ATTRIBUTE: "uid"
  # LDAP_EMAIL_ATTRIBUTE: "mail"
  # LDAP_DISPLAY_NAME_ATTRIBUTE: "cn"
  # LDAP_GROUP_SEARCH_BASE_DN: "dc=example,dc=org"
  # LDAP_GROUP_SEARCH_FILTER: "(objectClass=posixGroup)"
  # LDAP_USER_MACHER_USER_ATTRIBUTE: "gidNumber"
  # LDAP_USER_MACHER_GROUP_ATTRIBUTE: "gidNumber"
  # LDAP_GROUP_NAME_ATTRIBUTE: "cn"

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-boostrap
  namespace: kuboard

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-boostrap-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kuboard-boostrap
  namespace: kuboard

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    k8s.kuboard.cn/name: kuboard-etcd
  name: kuboard-etcd
  namespace: kuboard
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: kuboard-etcd
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: kuboard-etcd
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-role.kubernetes.io/master
                operator: Exists
            - matchExpressions:
              - key: node-role.kubernetes.io/control-plane
                operator: Exists
            - matchExpressions:
              - key: k8s.kuboard.cn/role
                operator: In
                values:
                - etcd
      containers:
      - env:
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        - name: HOSTIP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.hostIP
        image: 'registry.cn-hangzhou.aliyuncs.com/cxfpublic/etcd-host:3.4.16-1'
        imagePullPolicy: Always
        name: etcd
        ports:
        - containerPort: 2381
          hostPort: 2381
          name: server
          protocol: TCP
        - containerPort: 2382
          hostPort: 2382
          name: peer
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        volumeMounts:
        - mountPath: /data
          name: data
      dnsPolicy: ClusterFirst
      hostNetwork: true
      restartPolicy: Always
      serviceAccount: kuboard-boostrap
      serviceAccountName: kuboard-boostrap
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
      volumes:
      - hostPath:
          path: /usr/share/kuboard/etcd
        name: data
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate

---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: kuboard-v3
  name: kuboard-v3
  namespace: kuboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/name: kuboard-v3
  template:
    metadata:
      labels:
        k8s.kuboard.cn/name: kuboard-v3
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              - key: node-role.kubernetes.io/master
                operator: Exists
            weight: 100
          - preference:
              matchExpressions:
              - key: node-role.kubernetes.io/control-plane
                operator: Exists
            weight: 100
      containers:
      - env:
        - name: HOSTIP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.hostIP
        - name: HOSTNAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        envFrom:
        - configMapRef:
            name: kuboard-v3-config
        image: 'registry.cn-hangzhou.aliyuncs.com/cxfpublic/kuboard:v3'
        imagePullPolicy: Always
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /kuboard-resources/version.json
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: kuboard
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 10081
          name: peer
          protocol: TCP
        - containerPort: 10081
          name: peer-u
          protocol: UDP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /kuboard-resources/version.json
            port: 80
            scheme: HTTP
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources: {}
        # startupProbe:
        #   failureThreshold: 20
        #   httpGet:
        #     path: /kuboard-resources/version.json
        #     port: 80
        #     scheme: HTTP
        #   initialDelaySeconds: 5
        #   periodSeconds: 10
        #   successThreshold: 1
        #   timeoutSeconds: 1
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      serviceAccount: kuboard-boostrap
      serviceAccountName: kuboard-boostrap
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists

---
apiVersion: v1
kind: Service
metadata:
  annotations: {}
  labels:
    k8s.kuboard.cn/name: kuboard-v3
  name: kuboard-v3
  namespace: kuboard
spec:
  ports:
  - name: web
    nodePort: 30080
    port: 80
    protocol: TCP
    targetPort: 80
  - name: tcp
    nodePort: 30081
    port: 10081
    protocol: TCP
    targetPort: 10081
  - name: udp
    nodePort: 30081
    port: 10081
    protocol: UDP
    targetPort: 10081
  selector:
    k8s.kuboard.cn/name: kuboard-v3
  sessionAffinity: None
  type: NodePort
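The ConfigMap comment asks you to replace `KUBOARD_AGENT_KEY` with your own 32-character string of letters and digits. One way to do that is sketched below: 16 random bytes printed as hex give exactly 32 characters from 0-9 and a-f. Both function names are hypothetical helpers. `set_agent_key` assumes GNU `sed -i` and only touches the uncommented `KUBOARD_AGENT_KEY:` line.

```shell
# new_agent_key
# Prints a random 32-character hex string (16 random bytes).
new_agent_key() {
    od -An -N16 -tx1 /dev/urandom | tr -d ' \n'
}

# set_agent_key FILE KEY
# Replaces the KUBOARD_AGENT_KEY value in the manifest, keeping its indentation.
set_agent_key() {
    sed -i "s/^\([[:space:]]*KUBOARD_AGENT_KEY:\).*/\1 $2/" "$1"
}
```

Usage: `set_agent_key kuboard-v3.yaml "$(new_agent_key)"` before running `kubectl apply`.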

3. Deploy

kubectl apply -f kuboard-v3.yaml

# Run watch kubectl get pods -n kuboard and wait until every Pod in the kuboard namespace is ready
kubectl get pods -n kuboard
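Instead of watching the output by eye, you can check the READY column: a Pod is fully ready when it shows `n/n`. The helper name below is hypothetical. Alternatively, `kubectl wait --for=condition=Ready pods --all -n kuboard --timeout=300s` blocks until all Pods are ready, or fails after the timeout.

```shell
# pods_not_ready
# Reads "kubectl get pods" output on stdin and prints the pods whose READY
# column is not n/n (some containers not ready). Returns non-zero if any.
pods_not_ready() {
    awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) { print $1; bad = 1 } }
         END { exit bad }'
}
```

Usage: `kubectl get pods -n kuboard | pods_not_ready && echo "Kuboard is ready"`.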

4. Log in

Kuboard is served on the master node's IP at NodePort 30080:

http://192.168.56.11:30080/

Username: admin, password: Kuboard123
