在本文中,我们将介绍如何使用Hugging Face的大型语言模型(LLM)构建一些常见的应用,包括摘要(Summarization)、情感分析(Sentiment analysis)、翻译(Translation)、零样本分类(Zero-shot classification)和少样本学习(Few-shot learning)。我们将探索现有的开源和专有模型,展示如何直接应用于各种应用场景。同时,我们还将介绍简单的提示工程(prompt engineering),以及如何使用Hugging Face的API配置LLM管道。

学习目标:

-

使用各种现有模型构建常见应用。

-

理解基本的提示工程。

-

了解LLM推理中的搜索和采样方法。

-

熟悉Hugging Face的主要抽象概念:数据集、管道、分词器和模型。

环境安装

常见的大预言模型应用

本节旨在让您对几种常见的LLM应用有所了解,并展示使用LLM的入门方法的简易性。

在浏览示例时,请注意所使用的数据集、模型、API和选项。这些简单的示例可作为构建自己应用程序的起点。

摘要(Summarization)

摘要可以分为两种形式:

-

抽取式摘要(extractive):从文本中选择代表性的摘录作为摘要。

-

生成式摘要(abstractive):通过生成新的文本来形成摘要。

在本文中,我们将使用生成式摘要模型。

背景阅读:Hugging Face的摘要任务页面列出了支持摘要的模型架构。摘要章节提供了详细的操作指南。

在本节中,我们将使用以下内容:

-

数据集:xsum数据集,该数据集提供了一系列BBC新闻文章和相应的摘要。

-

模型:t5-small模型,该模型具有6000万个参数(对于PyTorch而言是242MB)。T5是由Google创建的编码器-解码器模型,支持多个任务,包括摘要、翻译、问答和文本分类。有关更多详细信息,请参阅Google的博客文章、GitHub上的代码或研究论文。

数据集加载

`xsum_dataset = load_dataset(

"xsum", version="1.2.0", cache_dir="/root/home/LLMs/week1"

) # Note: We specify cache_dir to use predownloaded data.

xsum_dataset # The printed representation of this object shows the `num_rows` of each dataset split.

# 输出

DatasetDict({

train: Dataset({

features: ['document', 'summary', 'id'],

num_rows: 204045

})

validation: Dataset({

features: ['document', 'summary', 'id'],

num_rows: 11332

})

test: Dataset({

features: ['document', 'summary', 'id'],

num_rows: 11334

})

}) `

该数据集提供了三列:

-

document:包含BBC文章的文本内容。

-

summary:一个“ground-truth”摘要。请注意,“ground-truth”摘要是主观的,可能与您所写的摘要不同。这是一个很好的例子,说明许多LLM应用程序没有明显的“正确”答案。

-

id:文章的唯一标识符。

xsum_sample = xsum_dataset["train"].select(range(10))

display(xsum_sample.to_pandas())

接下来,我们将使用Hugging Face的pipeline工具加载一个预训练模型。在LLM(Language Model)pipeline的构造函数中,我们需要指定以下参数:

-

task:第一个参数用于指定主要任务。您可以参考Hugging Face的task文档获取更多信息。

-

model:这是从Hugging Face Hub加载的预训练模型的名称。

-

min_length、max_length:我们可以设置生成的摘要的最小和最大标记长度范围。

-

truncation:一些输入文章可能过长,超出了LLM处理的限制。大多数LLM模型对输入序列的长度有固定的限制。通过设置此选项,我们可以告诉pipeline在需要时对输入进行截断。

# Apply to 1 article

summarizer(xsum_sample["document"][0])

# Apply to a batch of articles

results = summarizer(xsum_sample["document"])

# Display the generated summary side-by-side with the reference summary and original document.

# We use Pandas to join the inputs and outputs together in a nice format.

import pandas as pd

display(

pd.DataFrame.from_dict(results)

.rename({"summary_text": "generated_summary"}, axis=1)

.join(pd.DataFrame.from_dict(xsum_sample))[

["generated_summary", "summary", "document"]

]

)

# 输出

results

[{'summary_text': 'the full cost of damage in Newton Stewart is still being assessed . many roads in peeblesshire remain badly affected by standing water . a flood alert remains in place across the'},

{'summary_text': 'a fire alarm went off at the Holiday Inn in Hope Street on Saturday . guests were asked to leave the hotel . the two buses were parked side-by-side in'},

{'summary_text': 'Sebastian Vettel will start third ahead of team-mate Kimi Raikkonen . stewards only handed Hamilton a reprimand after governing body said "n'},

{'summary_text': 'the 67-year-old is accused of committing the offences between March 1972 and October 1989 . he denies all the charges, including two counts of indecency'},

{'summary_text': 'a man receiving psychiatric treatment at the clinic threatened to shoot himself and others . the incident comes amid tension in Istanbul following several attacks on the reina nightclub .'},

{'summary_text': 'Gregor Townsend gave a debut to powerhouse wing Taqele Naiyaravoro . the dragons gave first starts of the season to wing a'},

{'summary_text': 'Veronica Vanessa Chango-Alverez, 31, was killed and another man injured in the crash . police want to trace Nathan Davis, 27, who has links to the Audi .'},

{'summary_text': 'the 25-year-old was hit by a motorbike during the Gent-Wevelgem race . he was riding for the Wanty-Gobert team and was taken'},

{'summary_text': 'gundogan will not be fit for the start of the premier league season at Brighton on 12 august . the 26-year-old says his recovery time is now being measured in "week'},

{'summary_text': 'the crash happened about 07:20 GMT at the junction of the A127 and Progress Road in leigh-on-Sea, Essex . the man, aged in his 20s'}]

情感分析(Sentiment analysis)

情感分析是一种文本分类任务,旨在对一段文本进行评估,判断其是积极的、消极的还是其他类型的情感。具体的情感标签可以根据不同的应用而有所不同。

背景阅读:可以参考Hugging Face的文本分类任务页面或维基百科上的情感分析页面,以了解更多相关信息。

在本节中,我们将使用以下内容:

-

数据集:poem_sentiment,该数据集提供了带有负面(0)、积极(1)、无影响(2)或混合(3)情感标签的诗句。

-

模型:我们将使用经过微调的BERT模型。BERT(Bidirectional Encoder Representations from Transformers)是Google开发的一种仅包含编码器的模型,可用于处理多达11个不同的任务,包括情感分析和实体识别等。如果需要更详细的信息,可以参考Hugging Face的博客文章或维基百科上的相关页面。

我们选择使用任务text-classification来加载pipeline,因为我们的目标是对文本进行分类。

# Display the predicted sentiment side-by-side with the ground-truth label and original text.

# The score indicates the model's confidence in its prediction.

# Join predictions with ground-truth data

joined_data = (

pd.DataFrame.from_dict(results)

.rename({"label": "predicted_label"}, axis=1)

.join(pd.DataFrame.from_dict(poem_sample).rename({"label": "true_label"}, axis=1))

) #

Change label indices to text labels

sentiment_labels = {0: "negative", 1: "positive", 2: "no_impact", 3: "mixed"}

joined_data = joined_data.replace({"true_label": sentiment_labels})

display(joined_data[["predicted_label", "true_label", "score", "verse_text"]])

翻译(Translation)

翻译模型可以专门设计用于支持特定的语言对,也可以支持多种语言。

在本节中,我们将使用以下内容:

-

数据集:我们将使用一些示例的硬编码句子。然而,Hugging Face提供了各种翻译数据集供使用。

-

模型:Helsinki-NLP/opus-mt-en-es用于英语(“en”)到西班牙语(“es”)的翻译示例。该模型基于Marian NMT,这是由微软和其他研究人员开发的神经机器翻译框架。请参阅GitHub页面以获取代码和相关资源的链接。t5-small模型,它具有6000万个参数(对于PyTorch而言,大小为242MB)。T5是由Google创建的编码器-解码器模型,支持多种任务,包括摘要、翻译、问答和文本分类。

en_to_es_translation_pipeline = pipeline(

task="translation",

model="Helsinki-NLP/opus-mt-en-es",

model_kwargs={"cache_dir": "/root/home/LLMs/week1"},

)

en_to_es_translation_pipeline(

"Existing, open-source (and proprietary) models can be used out-of-the-box for many applications."

)

# 输出

[{'translation_text': 'Los modelos existentes, de código abierto (y propietario) se pueden utilizar fuera de la caja para muchas aplicaciones.'}]

一些模型能够支持多种语言翻译。下面,我们使用t5-small模型展示这一点。请注意,由于它支持多种语言(和任务),我们会明确指示它从一种语言翻译到另一种语言。

t5_small_pipeline = pipeline(

task="text2text-generation",

model="t5-small",

max_length=50,

model_kwargs={"cache_dir": "/root/home/LLMs/week1"},

)

t5_small_pipeline(

"translate English to Romanian: Existing, open-source (and proprietary) models can be used out-of-the-box for many applications."

)

# 输出

[{'generated_text': 'Modelele existente, deschise (şi proprietăţi) pot fi utilizate în afara legii pentru multe aplicaţii.'}]

t5_small_pipeline(

"translate English to Desutchland: I love you."

)

# 输出

[{'generated_text': 'Desutchland: Ich liebe dich.'}]

零样本分类(Zero-shot classification)

零样本分类(或零样本学习)是一种将文本分为给定的几个类别或标签之一的任务,而无需事先明确训练模型来预测这些类别。这个概念在现代语言模型出现之前就在文献中被提出,但最近语言模型的进展使得零样本学习变得更加灵活和强大。

在本节中,我们将使用以下内容:

-

数据集:我们将使用上一节摘要部分中提到的xsum数据集中的一些示例文章。我们的目标是为这些新闻文章打上几个类别的标签。

-

模型:我们将使用nli-deberta-v3-small模型,这是对DeBERTa模型进行微调的版本。DeBERTa基础模型是由微软开发的,它是从BERT模型派生出的几个模型之一。

zero_shot_pipeline = pipeline(

task="zero-shot-classification",

model="cross-encoder/nli-deberta-v3-small",

model_kwargs={"cache_dir": "/root/home/LLMs/week1"},

)

def categorize_article(article: str) -> None:

"""

This helper function defines the categories (labels) which the model must use to label articles.

Note that our model was NOT fine-tuned to use these specific labels,

but it "knows" what the labels mean from its more general training.

This function then prints out the predicted labels alongside their confidence scores.

"""

results = zero_shot_pipeline(

article,

candidate_labels=[

"politics",

"finance",

"sports",

"science and technology",

"pop culture",

"breaking news",

],

)

# Print the results nicely

del results["sequence"]

display(pd.DataFrame(results))

categorize_article(

"""

Simone Favaro got the crucial try with the last move of the game, following earlier touchdowns by Chris Fusaro, Zander Fagerson and Junior Bulumakau.

Rynard Landman and Ashton Hewitt got a try in either half for the Dragons.

Glasgow showed far superior strength in depth as they took control of a messy match in the second period.

Home coach Gregor Townsend gave a debut to powerhouse Fijian-born Wallaby wing Taqele Naiyaravoro, and centre Alex Dunbar returned from long-term injury, while the Dragons gave first starts of the season to wing Aled Brew and hooker Elliot Dee.

Glasgow lost hooker Pat McArthur to an early shoulder injury but took advantage of their first pressure when Rory Clegg slotted over a penalty on 12 minutes.

It took 24 minutes for a disjointed game to produce a try as Sarel Pretorius sniped from close range and Landman forced his way over for Jason Tovey to convert - although it was the lock's last contribution as he departed with a chest injury shortly afterwards.

Glasgow struck back when Fusaro drove over from a rolling maul on 35 minutes for Clegg to convert.

But the Dragons levelled at 10-10 before half-time when Naiyaravoro was yellow-carded for an aerial tackle on Brew and Tovey slotted the easy goal.

The visitors could not make the most of their one-man advantage after the break as their error count cost them dearly.

It was Glasgow's bench experience that showed when Mike Blair's break led to a short-range score from teenage prop Fagerson, converted by Clegg.

Debutant Favaro was the second home player to be sin-binned, on 63 minutes, but again the Warriors made light of it as replacement wing Bulumakau, a recruit from the Army, pounced to deftly hack through a bouncing ball for an opportunist try.

The Dragons got back within striking range with some excellent combined handling putting Hewitt over unopposed after 72 minutes.

However, Favaro became sinner-turned-saint as he got on the end of another effective rolling maul to earn his side the extra point with the last move of the game, Clegg converting.

Dragons director of rugby Lyn Jones said: "We're disappointed to have lost but our performance was a lot better [than against Leinster] and the game could have gone either way.

"Unfortunately too many errors behind the scrum cost us a great deal, though from where we were a fortnight ago in Dublin our workrate and desire was excellent.

"It was simply error count from individuals behind the scrum that cost us field position, it's not rocket science - they were correct in how they played and we had a few errors, that was the difference."

Glasgow Warriors: Rory Hughes, Taqele Naiyaravoro, Alex Dunbar, Fraser Lyle, Lee Jones, Rory Clegg, Grayson Hart; Alex Allan, Pat MacArthur, Zander Fagerson, Rob Harley (capt), Scott Cummings, Hugh Blake, Chris Fusaro, Adam Ashe.

Replacements: Fergus Scott, Jerry Yanuyanutawa, Mike Cusack, Greg Peterson, Simone Favaro, Mike Blair, Gregor Hunter, Junior Bulumakau.

Dragons: Carl Meyer, Ashton Hewitt, Ross Wardle, Adam Warren, Aled Brew, Jason Tovey, Sarel Pretorius; Boris Stankovich, Elliot Dee, Brok Harris, Nick Crosswell, Rynard Landman (capt), Lewis Evans, Nic Cudd, Ed Jackson.

Replacements: Rhys Buckley, Phil Price, Shaun Knight, Matthew Screech, Ollie Griffiths, Luc Jones, Charlie Davies, Nick Scott.

"""

)

# 输出

labels scores

0 sports 0.469011

1 breaking news 0.223165

2 science and technology 0.107025

3 pop culture 0.104471

4 politics 0.057390

5 finance 0.03893

少样本学习(Few-shot learning)

在少样本学习任务中,我们向模型提供一个指令、一些查询-响应示例,并要求模型生成一个新查询的响应。这种技术具有强大的能力,可以使模型在更广泛的应用中得到重复使用。然而,少样本学习也有其挑战之处,需要进行大量的提示工程才能获得良好且可靠的结果。

在本节中,我们将使用以下内容:

-

任务:少样本学习可以应用于多种任务。在本例中,我们将进行情感分析任务,该任务在之前已经进行了介绍。然而,通过少样本学习,我们可以自定义标签,而不仅仅局限于之前模型所调整的特定标签集。此外,我们还将展示其他玩具任务。在Hugging Face的任务指定中,少样本学习被视为文本生成任务。

-

数据:我们使用了一些示例数据,其中包括了一篇博客文章中的推文示例。

-

模型:我们使用了gpt-neo-1.3B模型,这是GPT-Neo模型的一个版本。GPT-Neo是由Eleuther AI开发的Transformer模型,具有13亿个参数。如果您想了解更多详细信息,请参阅GitHub上的代码或相关的研究论文。

Tip: 在下面的少样本提示中,我们将使用特殊标记"###"来分隔示例,并使用相同的标记来提示语言模型在回答查询后结束输出。我们将告诉pipeline将该特殊标记作为序列的结束标记(EOS)。

`# This example sometimes works and sometimes does not, when sampling. Too abstract?

results = few_shot_pipeline(

"""Given a word describing how someone is feeling, suggest a description of that person. The description should not include the original word.

[word]: happy

[description]: smiling, laughing, clapping

###

[word]: nervous

[description]: glancing around quickly, sweating, fidgeting

###

[word]: sleepy [description]: heavy-lidded, slumping, rubbing eyes #

##

[word]: confused

[description]:""",

eos_token_id=eos_token_id,

)

print(results[0]["generated_text"])

# 输出

Setting `pad_token_id` to `eos_token_id`:21017 for open-end generation.

Given a word describing how someone is feeling, suggest a description of that person. The description should not include the original word.

[word]: happy

[description]: smiling, laughing, clapping

###

[word]: nervous

[description]: glancing around quickly, sweating, fidgeting

###

[word]: sleepy

[description]: heavy-lidded, slumping, rubbing eyes

###

[word]: confused

[description]: staring at one's own reflection in the water, `

`# We override max_new_tokens to generate longer answers.

# These book descriptions were taken from their corresponding Wikipedia pages.

results = few_shot_pipeline(

"""Generate a book summary from the title:

[book title]: "Stranger in a Strange Land"

[book description]: "This novel tells the story of Valentine Michael Smith, a human who comes to Earth in early adulthood after being born on the planet Mars and raised by Martians, and explores his interaction with and eventual transformation of Terran culture."

###

[book title]: "The Adventures of Tom Sawyer"

[book description]: "This novel is about a boy growing up along the Mississippi River. It is set in the 1840s in the town of St. Petersburg, which is based on Hannibal, Missouri, where Twain lived as a boy. In the novel, Tom Sawyer has several adventures, often with his friend Huckleberry Finn."

###

[book title]: "Dune"

[book description]: "This novel is set in the distant future amidst a feudal interstellar society in which various noble houses control planetary fiefs. It tells the story of young Paul Atreides, whose family accepts the stewardship of the planet Arrakis. While the planet is an inhospitable and sparsely populated desert wasteland, it is the only source of melange, or spice, a drug that extends life and enhances mental abilities. The story explores the multilayered interactions of politics, religion, ecology, technology, and human emotion, as the factions of the empire confront each other in a struggle for the control of Arrakis and its spice."

###

[book title]: "Blue Mars"

[book description]:""",

eos_token_id=eos_token_id,

max_new_tokens=50,

)

print(results[0]["generated_text"])

# 输出

Setting `pad_token_id` to `eos_token_id`:21017 for open-end generation.

Generate a book summary from the title:

[book title]: "Stranger in a Strange Land"

[book description]: "This novel tells the story of Valentine Michael Smith, a human who comes to Earth in early adulthood after being born on the planet Mars and raised by Martians, and explores his interaction with and eventual transformation of Terran culture."

###

[book title]: "The Adventures of Tom Sawyer"

[book description]: "This novel is about a boy growing up along the Mississippi River. It is set in the 1840s in the town of St. Petersburg, which is based on Hannibal, Missouri, where Twain lived as a boy. In the novel, Tom Sawyer has several adventures, often with his friend Huckleberry Finn."

###

[book title]: "Dune"

[book description]: "This novel is set in the distant future amidst a feudal interstellar society in which various noble houses control planetary fiefs. It tells the story of young Paul Atreides, whose family accepts the stewardship of the planet Arrakis. While the planet is an inhospitable and sparsely populated desert wasteland, it is the only source of melange, or spice, a drug that extends life and enhances mental abilities. The story explores the multilayered interactions of politics, religion, ecology, technology, and human emotion, as the factions of the empire confront each other in a struggle for the control of Arrakis and its spice."

###

[book title]: "Blue Mars"

[book description]: "This is a post-apocalyptic story about humanity, where the last survivors are forced to struggle to build a new world. A small group of survivors are forced to build a new civilization in order to survive and learn the lessons of the past." `

提示工程(Prompt engineering)在与语言模型交互时起着关键作用。随着使用更通用和强大的模型,如GPT-3.5,构建良好的提示变得越来越重要。好的提示可以帮助模型更好地理解用户的意图,并生成准确、有用的响应。

Hugging Face API

在本节中,我们将深入介绍一些Hugging Face API的细节。

-

搜索和采样生成文本

-

Auto*自动加载器

-

特定模型的分词器和模型加载器

回顾一下上文的Summarization部分使用到的xsum数据集:

display(xsum_sample.to_pandas())

# 输出

document summary id

0 The full cost of damage in Newton Stewart, one... Clean-up operations are continuing across the ... 35232142

1 A fire alarm went off at the Holiday Inn in Ho... Two tourist buses have been destroyed by fire ... 40143035

2 Ferrari appeared in a position to challenge un... Lewis Hamilton stormed to pole position at the... 35951548

3 John Edward Bates, formerly of Spalding, Linco... A former Lincolnshire Police officer carried o... 36266422

4 Patients and staff were evacuated from Cerahpa... An armed man who locked himself into a room at... 38826984

5 Simone Favaro got the crucial try with the las... Defending Pro12 champions Glasgow Warriors bag... 34540833

6 Veronica Vanessa Chango-Alverez, 31, was kille... A man with links to a car that was involved in... 20836172

7 Belgian cyclist Demoitie died after a collisio... Welsh cyclist Luke Rowe says changes to the sp... 35932467

8 Gundogan, 26, told BBC Sport he "can see the f... Manchester City midfielder Ilkay Gundogan says... 40758845

9 The crash happened about 07:20 GMT at the junc... A jogger has been hit by an unmarked police ca... 30358490

推理过程中的搜索与采样

在Hugging Face的pipeline中,有一些与推理相关的参数,例如num_beams和do_sample。

语言模型通过预测下一个标记来工作,然后是下一个标记,以此类推。目标是生成一个高概率的标记序列,这实际上是在潜在的序列空间中进行搜索。

为了进行这种搜索,主要有以下两种方法:

-

搜索:根据已生成的标记,从候选标记中选择下一个最有可能的标记。

-

1)贪婪搜索(默认):在贪婪搜索中,选择单个最有可能的下一个标记。

-

2)束搜索(Beam search):通过束搜索,在多个序列路径上进行搜索,通过参数num_beams来控制搜索的路径数。

-

采样:根据已生成的标记,通过从预测的标记分布中进行采样来选择下一个标记。

-

1)Top-K采样:通过将采样限制为最有可能的k个标记来修改采样。

-

2)Top-p采样:通过将采样限制为最有可能的概率总和为p的标记来修改采样。

您可以通过设置参数do_sample来切换搜索和采样方法。选择合适的搜索和采样方法可以影响生成文本的质量和多样性,根据任务需求选择合适的方法非常重要。

# We previously called the summarization pipeline using the default inference configuration.

# This does greedy search.

summarizer(xsum_sample["document"][0])

# 输出

[{'summary_text': 'the full cost of damage in Newton Stewart is still being assessed . many roads in peeblesshire remain badly affected by standing water . a flood alert remains in place across the'}]

# We can instead do a beam search by specifying num_beams.

# This takes longer to run, but it might find a better (more likely) sequence of text.

summarizer(xsum_sample["document"][0], num_beams=10)

# 输出

[{'summary_text': 'the full cost of damage in Newton Stewart is still being assessed . many roads in peeblesshire remain badly affected by standing water . a flood alert remains in place across the'}]

# Alternatively, we could use sampling.

summarizer(xsum_sample["document"][0], do_sample=True)

# 输出

[{'summary_text': 'many businesses and householders were affected by flooding in Newton Stewart . the water breached a retaining wall, flooding many commercial properties . a flood alert remains in place across'}]

# We can modify sampling to be more greedy by limiting sampling to the top_k or top_p most likely next tokens.

summarizer(xsum_sample["document"][0], do_sample=True, top_k=10, top_p=0.8)

# 输出

[{'summary_text': 'the full cost of damage in Newton Stewart is still being assessed . many roads in peeblesshire remain badly affected by standing water . a flood alert remains in place across the'}]

Auto*自动加载器

我们已经了解了Hugging Face的数据集和流水线抽象。虽然流水线是一种快速设置特定任务的语言模型的方法,但稍微低级的抽象模型和分词器(tokenizer)可以提供更多对选项的控制。我们将简要介绍如何使用这些抽象,按照以下步骤进行:

-

给定输入

-

对文章进行分词,将其转换为标记索引

-

在经过分词的数据上应用模型,生成摘要(表示为标记索引)

-

将摘要解码为可读的人类文本

现在我们来看一下用于分词器和模型类型的Auto*类,它们可以简化加载预训练的分词器和模型的过程。

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

# Load the pre-trained tokenizer and model.

tokenizer = AutoTokenizer.from_pretrained("t5-small", cache_dir="/root/home/LLMs/week1")

model = AutoModelForSeq2SeqLM.from_pretrained("t5-small", cache_dir="/root/home/LLMs/week1")

# For summarization, T5-small expects a prefix "summarize: ", so we prepend that to each article as a prompt.

articles = list(map(lambda article: "summarize: " + article, xsum_sample["document"]))

display(pd.DataFrame(articles, columns=["prompts"]))

# Tokenize the input

inputs = tokenizer(

articles, max_length=1024, return_tensors="pt", padding=True, truncation=True

)

print("input_ids:")

print(inputs["input_ids"])

print("attention_mask:")

print(inputs["attention_mask"])

# Generate summaries

summary_ids = model.generate(

inputs.input_ids,

attention_mask=inputs.attention_mask,

num_beams=2,

min_length=0,

max_length=40,

)

print(summary_ids)

# Decode the generated summaries

decoded_summaries = tokenizer.batch_decode(summary_ids, skip_special_tokens=True)

display(pd.DataFrame(decoded_summaries, columns=["decoded_summaries"]))

特定模型的分词器和模型加载器

您还可以手动选择加载特定的分词器和模型类型,而不必依赖于Auto*类来自动选择合适的类型。这样可以更灵活地控制系统的行为。

from transformers import T5Tokenizer, T5ForConditionalGeneration

tokenizer = T5Tokenizer.from_pretrained("t5-small", cache_dir="/root/home/LLMs/week1")

model = T5ForConditionalGeneration.from_pretrained(

"t5-small", cache_dir="/root/home/LLMs/week1"

)

# The tokenizer and model can then be used similarly to how we used the ones loaded by the Auto* classes.

inputs = tokenizer(

articles, max_length=1024, return_tensors="pt", padding=True, truncation=True

)

summary_ids = model.generate(

inputs.input_ids,

attention_mask=inputs.attention_mask,

num_beams=2,

min_length=0,

max_length=40,

)

decoded_summaries = tokenizer.batch_decode(summary_ids, skip_special_tokens=True)

display(pd.DataFrame(decoded_summaries, columns=["decoded_summaries"]))

总结

在本文中,我们已经介绍了一些常见的语言模型应用,并学习了如何利用Hugging Face Hub中的预训练模型快速开始这些应用。此外,我们还了解了如何调整一些配置选项,以便更好地满足特定需求。通过这些知识,您可以更加灵活地应用语言模型,并获得更好的结果。

如何学习大模型

现在社会上大模型越来越普及了,已经有很多人都想往这里面扎,但是却找不到适合的方法去学习。

作为一名资深码农,初入大模型时也吃了很多亏,踩了无数坑。现在我想把我的经验和知识分享给你们,帮助你们学习AI大模型,能够解决你们学习中的困难。

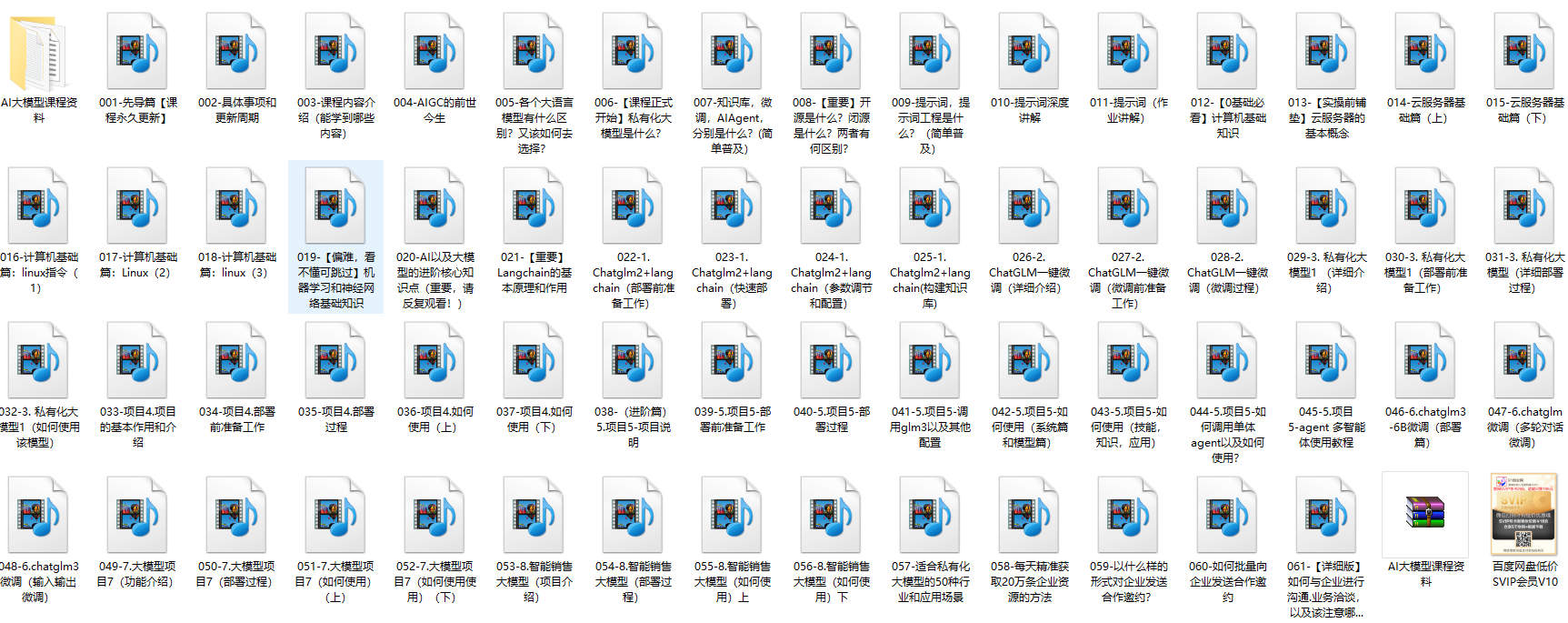

我已将重要的AI大模型资料包括市面上AI大模型各大白皮书、AGI大模型系统学习路线、AI大模型视频教程、实战学习,等录播视频免费分享出来,需要的小伙伴可以扫取。

一、AGI大模型系统学习路线

很多人学习大模型的时候没有方向,东学一点西学一点,像只无头苍蝇乱撞,我下面分享的这个学习路线希望能够帮助到你们学习AI大模型。

二、AI大模型视频教程

三、AI大模型各大学习书籍

四、AI大模型各大场景实战案例

五、结束语

学习AI大模型是当前科技发展的趋势,它不仅能够为我们提供更多的机会和挑战,还能够让我们更好地理解和应用人工智能技术。通过学习AI大模型,我们可以深入了解深度学习、神经网络等核心概念,并将其应用于自然语言处理、计算机视觉、语音识别等领域。同时,掌握AI大模型还能够为我们的职业发展增添竞争力,成为未来技术领域的领导者。

再者,学习AI大模型也能为我们自己创造更多的价值,提供更多的岗位以及副业创收,让自己的生活更上一层楼。

因此,学习AI大模型是一项有前景且值得投入的时间和精力的重要选择。