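Environment

RHEL 7.9 + Oracle 11.2.0.4 RAC, two nodes.

Problem description

A routine inspection found that GI (Grid Infrastructure) on node 2 would not start. The cause turned out to be a missing OLR file.

Reproducing the problem

Delete the OLR on purpose; after a restart, GI fails to start.

Check /etc/oracle/olr.loc

-- /etc/oracle/olr.loc records the location of the OLR file and of gi_home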

[root@orcl01:/root]$ cat /etc/oracle/olr.loc

olrconfig_loc=/u01/app/11.2.0/grid/cdata/orcl01.olr

crs_home=/u01/app/11.2.0/grid

Delete the OLR file

Node 1

cd /u01/app/11.2.0/grid/cdata/

rm -f orcl01.olr

Node 2

cd /u01/app/11.2.0/grid/cdata/

rm -f orcl02.olr

Restart GI

Note: crsctl stop crs only affects the local node, so run it on each node.

Node 1

-- Restart GI

crsctl stop crs

crsctl start crs

-- Check GI status

crsctl check crs

Output:

[root@orcl01:/u01/app/11.2.0/grid/cdata]$ crsctl start crs

CRS-4640: Oracle High Availability Services is already active

CRS-4000: Command Start failed, or completed with errors.

[root@orcl01:/u01/app/11.2.0/grid/cdata]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4535: Cannot communicate with Cluster Ready Services

CRS-4530: Communications failure contacting Cluster Synchronization Services daemon

CRS-4534: Cannot communicate with Event Manager

Node 2

-- Restart GI

crsctl stop crs

crsctl start crs

-- Check GI status

crsctl check crs

Output:

[root@orcl02:/u01/app/11.2.0/grid/cdata]$ crsctl start crs

CRS-4124: Oracle High Availability Services startup failed.

CRS-4000: Command Start failed, or completed with errors.

[root@orcl02:/u01/app/11.2.0/grid/cdata]$ crsctl check crs

CRS-4639: Could not contact Oracle High Availability Services

Analysis

Because GI on node 2 fails to start, the first step is to determine how far the GI startup got.

Determine how far the GI startup got

su - grid

crsctl stat res -t -init

Output:

-- Node 1:

[root@orcl01:/u01/app/11.2.0/grid/cdata]$ crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 OFFLINE OFFLINE Instance Shutdown

ora.cluster_interconnect.haip

1 OFFLINE OFFLINE

ora.crf

1 ONLINE ONLINE orcl01

ora.crsd

1 OFFLINE OFFLINE

ora.cssd

1 OFFLINE OFFLINE

ora.cssdmonitor

1 OFFLINE OFFLINE

ora.ctssd

1 OFFLINE OFFLINE

ora.diskmon

1 OFFLINE OFFLINE

ora.evmd

1 OFFLINE OFFLINE

ora.gipcd

1 ONLINE ONLINE orcl01

ora.gpnpd

1 ONLINE ONLINE orcl01

ora.mdnsd

1 ONLINE ONLINE orcl01

-- Node 2:

[root@orcl02:/u01/app/11.2.0/grid/cdata]$ crsctl stat res -t -init

CRS-4639: Could not contact Oracle High Availability Services

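CRS-4000: Command Status failed, or completed with errors.

On node 2, not even the ohasd layer has started. Either the init.ohasd script that /etc/inittab uses to start the cluster was never invoked, or the ohasd.bin daemon failed to start. Copies of the init.ohasd script are kept under the GI home: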

/u01/app/11.2.0/grid/crs/init/init.ohasd

/u01/app/11.2.0/grid/crs/utl/init.ohasd
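The stage check can be sketched as a small classifier over the text printed by crsctl check crs. This is only an illustration: gi_startup_stage is a hypothetical helper name, and it recognizes only the CRS message codes that appear in this article's output, not a complete list.

```shell
# Classify how far GI startup got, using only the CRS codes seen in this
# article. gi_startup_stage is a hypothetical helper, not an Oracle tool.
gi_startup_stage() {
    local output="$1"
    case "$output" in
        *CRS-4639*)
            # ohasd itself cannot be contacted
            echo "ohasd-down" ;;
        *CRS-4638*)
            case "$output" in
                *CRS-4535*|*CRS-4530*|*CRS-4534*)
                    # ohasd is online, but crsd/cssd/evmd are unreachable
                    echo "ohasd-only" ;;
                *)
                    echo "ohasd-up" ;;
            esac ;;
        *)
            echo "unknown" ;;
    esac
}

# Usage on a cluster node:
#   gi_startup_stage "$(crsctl check crs 2>&1)"
```

With the outputs shown earlier, node 1 would be classified as ohasd-only and node 2 as ohasd-down, which matches the manual reading.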

Check the processes

ps -ef | grep has

Output:

-- Node 1:

[root@orcl01:/u01/app/11.2.0/grid/cdata]$ ps -ef | grep has

root 900 1 0 09:35 ? 00:00:00 /bin/sh /etc/init.d/init.ohasd run >/dev/null 2>&1 </dev/null

root 1358 1 0 09:35 ? 00:01:10 /u01/app/11.2.0/grid/bin/ohasd.bin reboot

-- Node 2:

[root@orcl02:/u01/app/11.2.0/grid/cdata]$ ps -ef | grep has

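root 888 1 0 09:35 ? 00:00:00 /bin/sh /etc/init.d/init.ohasd run >/dev/null 2>&1 </dev/null

The output shows that the init.ohasd script was invoked on both nodes. On node 2, however, there is no ohasd.bin process, so the problem is that ohasd itself did not start successfully. The next step is to analyze the ohasd log file.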
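The same reading of the process list can be sketched as a filter over ps -ef text. ohasd_process_state is a hypothetical helper name; it only looks for the two command strings that appear in the output above.

```shell
# Report whether the init.ohasd wrapper and the ohasd.bin daemon appear in
# `ps -ef` text read from stdin. ohasd_process_state is a hypothetical helper.
ohasd_process_state() {
    local ps_text init=no daemon=no
    ps_text="$(cat)"
    case "$ps_text" in *"init.ohasd run"*) init=yes ;; esac
    case "$ps_text" in *"ohasd.bin"*) daemon=yes ;; esac
    echo "init.ohasd=$init ohasd.bin=$daemon"
}

# Usage on a cluster node:
#   ps -ef | ohasd_process_state
```

A result of init.ohasd=yes ohasd.bin=no corresponds to the node 2 situation: the wrapper script is running, but the daemon is not.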

Analyze the ohasd log file

Location of the ohasd log file: <gi_home>/log/<node>/ohasd/ohasd.log

Node 1

su - grid

cd $ORACLE_HOME/log/orcl01/ohasd/

tail -300f ohasd.log

Output:

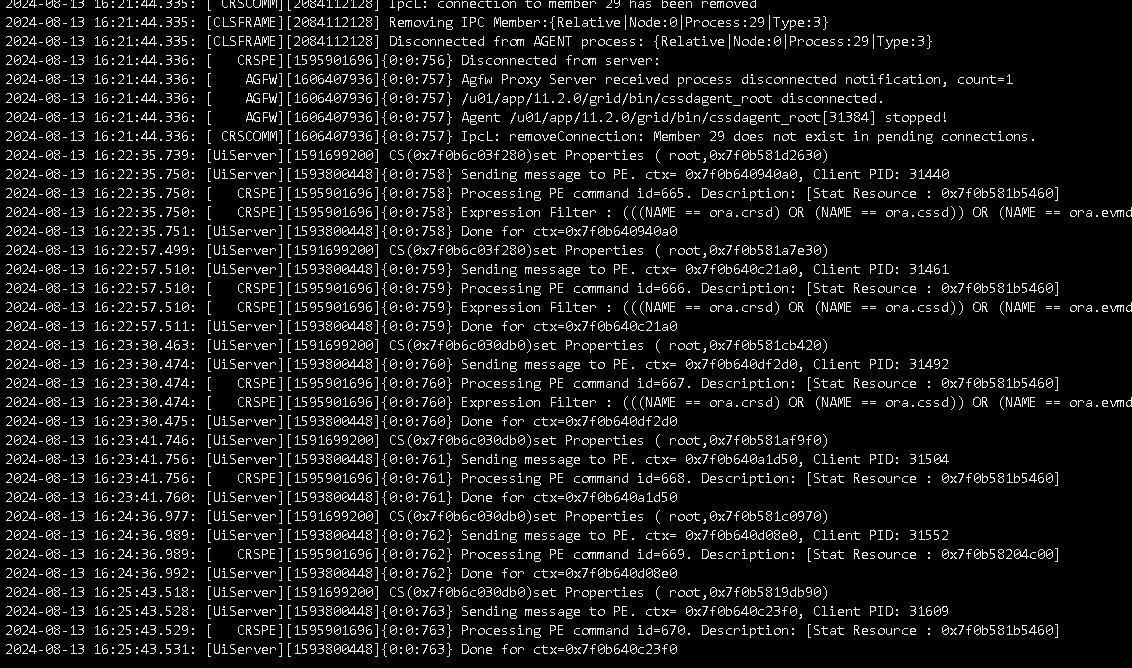

Node 2

su - grid

cd $ORACLE_HOME/log/orcl02/ohasd/

tail -300f ohasd.log

Output:

2024-08-13 16:22:36.018: [ default][3782379328] OHASD Daemon Starting. Command string :restart

2024-08-13 16:22:36.018: [ default][3782379328] Initializing OLR

2024-08-13 16:22:36.019: [ OCROSD][3782379328]utopen:6m': failed in stat OCR file/disk /u01/app/11.2.0/grid/cdata/orcl02.olr, errno=2, os err string=No such file or directory

2024-08-13 16:22:36.019: [ OCROSD][3782379328]utopen:7: failed to open any OCR file/disk, errno=2, os err string=No such file or directory

2024-08-13 16:22:36.019: [ OCRRAW][3782379328]proprinit: Could not open raw device

2024-08-13 16:22:36.019: [ OCRAPI][3782379328]a_init:16!: Backend init unsuccessful : [26]

2024-08-13 16:22:36.019: [ CRSOCR][3782379328] OCR context init failure. Error: PROCL-26: Error while accessing the physical storage Operating System error [No such file or directory] [2]

2024-08-13 16:22:36.019: [ default][3782379328] Created alert : (:OHAS00106:) : OLR initialization failed, error: PROCL-26: Error while accessing the physical storage Operating System error [No such file or directory] [2]

2024-08-13 16:22:36.019: [ default][3782379328][PANIC] OHASD exiting; Could not init OLR

2024-08-13 16:22:36.019: [ default][3782379328] Done.

GI fails to start because the OLR cannot be accessed.
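To pull these lines out of a long ohasd.log, a grep along the following lines may help. This is a minimal sketch: olr_init_errors is a hypothetical helper name, and the patterns are taken from the log excerpt above rather than from any official list of OLR errors.

```shell
# Print the lines of an ohasd.log that point at an OLR initialization
# failure. olr_init_errors is a hypothetical helper; the patterns come from
# this article's log excerpt only.
olr_init_errors() {
    local log_file="$1"
    grep -E 'PROCL-26|OHAS00106|Could not init OLR' "$log_file"
}

# Usage (node name orcl02 is the one used in this article):
#   olr_init_errors "$ORACLE_HOME/log/orcl02/ohasd/ohasd.log" | tail -n 5
```

grep exits with a non-zero status when nothing matches, so the helper can also be used in an if statement to test whether the log contains such a failure.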

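Check whether the OLR file exists

The OLR is indeed missing: the root cause is that the OLR files on both nodes were lost.

Note:

OLR file location: <gi_home>/cdata

OLR backup location: <gi_home>/cdata/<node>

Node 1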

[grid@orcl01:/u01/app/11.2.0/grid]$ cd /u01/app/11.2.0/grid/cdata/

[grid@orcl01:/u01/app/11.2.0/grid/cdata]$ ls -l

total 0

drwxr-xr-x. 2 grid oinstall 6 Aug 18 2023 localhost

drwxr-xr-x. 2 grid oinstall 40 Aug 18 2023 orcl01

drwxrwxr-x. 2 grid oinstall 97 Aug 13 15:59 orcl-cluster

Node 2

[grid@orcl02:/home/grid]$ cd /u01/app/11.2.0/grid/cdata/

[grid@orcl02:/u01/app/11.2.0/grid/cdata]$ ls -l

total 0

drwxr-xr-x. 2 grid oinstall 6 Aug 18 2023 localhost

drwxr-xr-x. 2 grid oinstall 40 Aug 18 2023 orcl02

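drwxrwxr-x. 2 grid oinstall 97 Jun 21 18:32 orcl-cluster

Solution

Because a backup of the OLR is created by default when GI is installed, the OLR can be restored from the default backup location.

OLR file location: <gi_home>/cdata

OLR backup location: <gi_home>/cdata/<node>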
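Given that layout, picking the newest backup in the backup directory can be sketched as follows. latest_olr_backup is a hypothetical helper name, and the sketch assumes backup files follow the backup_YYYYMMDD_HHMMSS.olr naming seen in this article, so that the shell's sorted glob order is also chronological.

```shell
# Print the newest backup_*.olr in a backup directory.
# latest_olr_backup is a hypothetical helper. It assumes the
# backup_YYYYMMDD_HHMMSS.olr naming, so the last glob match is the newest.
latest_olr_backup() {
    local backup_dir="$1" f latest=""
    for f in "$backup_dir"/backup_*.olr; do
        [ -e "$f" ] || continue   # the glob matched nothing
        latest="$f"               # glob results are sorted; keep the last
    done
    [ -n "$latest" ] && echo "$latest"
}

# Usage (run as root, since the backups are readable by root only):
#   latest_olr_backup /u01/app/11.2.0/grid/cdata/orcl02
```

The function returns a non-zero status when the directory holds no backup, which makes a missing backup easy to detect before attempting a restore.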

Check whether an OLR backup exists

Node 1

cd /u01/app/11.2.0/grid/cdata/orcl01

ls -l

Output:

[root@orcl01:/u01/app/11.2.0/grid/cdata]$ cd /u01/app/11.2.0/grid/cdata/orcl01

[root@orcl01:/u01/app/11.2.0/grid/cdata/orcl01]$ ls -l

total 6628

-rw-------. 1 root root 6787072 Aug 18 2023 backup_20230818_082650.olr

Node 2

cd /u01/app/11.2.0/grid/cdata/orcl02

ls -l

Output:

[root@orcl02:/root]$ cd /u01/app/11.2.0/grid/cdata/orcl02

[root@orcl02:/u01/app/11.2.0/grid/cdata/orcl02]$ ls -l

total 6628

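-rw-------. 1 root oinstall 6787072 Aug 18 2023 backup_20230818_083142.olr

Restore the OLR file

This must be done as the root user.

First create an empty placeholder for the OLR file with touch (for example, touch orcl01.olr on node 1). Otherwise the restore fails with the following error: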

[root@orcl01:/root]$ cd /u01/app/11.2.0/grid/bin/

[root@orcl01:/u01/app/11.2.0/grid/bin]$ ./ocrconfig -local -restore /u01/app/11.2.0/grid/cdata/orcl01/backup_20230818_082650.olr

PROTL-35: The configured OLR location is not accessible.

Node 1

cd /u01/app/11.2.0/grid/cdata

touch orcl01.olr

cd /u01/app/11.2.0/grid/bin/

./ocrconfig -local -restore /u01/app/11.2.0/grid/cdata/orcl01/backup_20230818_082650.olr

Node 2

cd /u01/app/11.2.0/grid/cdata

touch orcl02.olr

cd /u01/app/11.2.0/grid/bin/

./ocrconfig -local -restore /u01/app/11.2.0/grid/cdata/orcl02/backup_20230818_083142.olr
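Since the same two steps are repeated on every node, they can be generated rather than typed. The sketch below only prints the commands used in this article and does not execute anything. olr_restore_plan is a hypothetical helper name, and its three arguments (GI home, node name, backup file) are assumptions of this sketch.

```shell
# Print, without executing, the restore commands used in this article:
# create an empty placeholder OLR, then restore from the backup.
# olr_restore_plan is a hypothetical helper.
olr_restore_plan() {
    local gi_home="$1" node="$2" backup="$3"
    echo "touch $gi_home/cdata/$node.olr"
    echo "$gi_home/bin/ocrconfig -local -restore $backup"
}

# Example for node 2 (review the output, then run it as root):
#   olr_restore_plan /u01/app/11.2.0/grid orcl02 \
#       /u01/app/11.2.0/grid/cdata/orcl02/backup_20230818_083142.olr
```

Printing the plan first keeps the touch step from being forgotten, which is what triggers PROTL-35.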

Troubleshooting

PROTL-35: The configured OLR location is not accessible.

-- Symptom

Restoring the OLR file fails:

[root@orcl01:/root]$ cd /u01/app/11.2.0/grid/bin/

[root@orcl01:/u01/app/11.2.0/grid/bin]$ ./ocrconfig -local -restore /u01/app/11.2.0/grid/cdata/orcl01/backup_20230818_082650.olr

PROTL-35: The configured OLR location is not accessible.

-- Fix

Create an empty OLR file at the configured location first, then run the restore again:

cd /u01/app/11.2.0/grid/cdata

touch orcl01.olr

cd /u01/app/11.2.0/grid/bin/

./ocrconfig -local -restore /u01/app/11.2.0/grid/cdata/orcl01/backup_20230818_082650.olr

Start GI

crsctl start crs -all

GI starts successfully.

Additional background

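Starting with 11gR2, ohasd became the single starting point for cluster startup, and all other daemons and cluster-managed components are defined as resources. The OCR is the registry that stores the resources managed by CRSD, but many initialization resources (for example, the ASM instance) must be started before CRSD itself comes up, so the OCR alone is not enough. For this reason, Oracle introduced a second cluster registry in 11gR2: the OLR (Oracle Local Registry).

The OLR is a cluster registry stored locally: each node keeps its own OLR, and most of the information in it is specific to that node. Its main role is to provide the ohasd daemon with the cluster configuration and the definitions of the initialization resources.

At cluster startup, ohasd reads the OLR location from /etc/oracle/olr.loc (the location of this file differs by platform). By default the OLR is stored under <gi_home>/cdata and is named <node name>.olr.

The OLR backup policy differs from that of the OCR: by default, GI creates one backup under <gi_home>/cdata/<node> during the initial installation.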

[root@orcl01:/root]$ cat /etc/oracle/olr.loc

olrconfig_loc=/u01/app/11.2.0/grid/cdata/orcl01.olr

crs_home=/u01/app/11.2.0/grid

OLR file location: <gi_home>/cdata

OLR backup location: <gi_home>/cdata/<node>
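As a quick health check, the lookup that ohasd performs through olr.loc can be imitated in shell. This is a minimal sketch, not how ohasd is implemented: olr_loc_value and olr_file_present are hypothetical helper names, and the default path /etc/oracle/olr.loc is the Linux location shown above.

```shell
# Print the value of a key (olrconfig_loc or crs_home) from an olr.loc-style
# key=value file. olr_loc_value is a hypothetical helper.
olr_loc_value() {
    local key="$1" file="${2:-/etc/oracle/olr.loc}"
    sed -n "s/^${key}=//p" "$file" | head -n 1
}

# Succeed only if the OLR named in olr.loc exists and is non-empty.
# An empty placeholder created with touch is reported as not present.
olr_file_present() {
    local olr
    olr="$(olr_loc_value olrconfig_loc "${1:-/etc/oracle/olr.loc}")"
    [ -n "$olr" ] && [ -s "$olr" ]
}

# Usage on a cluster node:
#   olr_file_present || echo "OLR missing: $(olr_loc_value olrconfig_loc)"
```

Running such a check during routine inspections would flag a missing OLR before the next GI restart, instead of after it.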

Reference: "Node cannot start because the OLR was lost" (由于丢失OLR导致的节点无法启动), CSDN blog