onnxruntime动态输入推理

lenet的onnxruntime动态输入推理

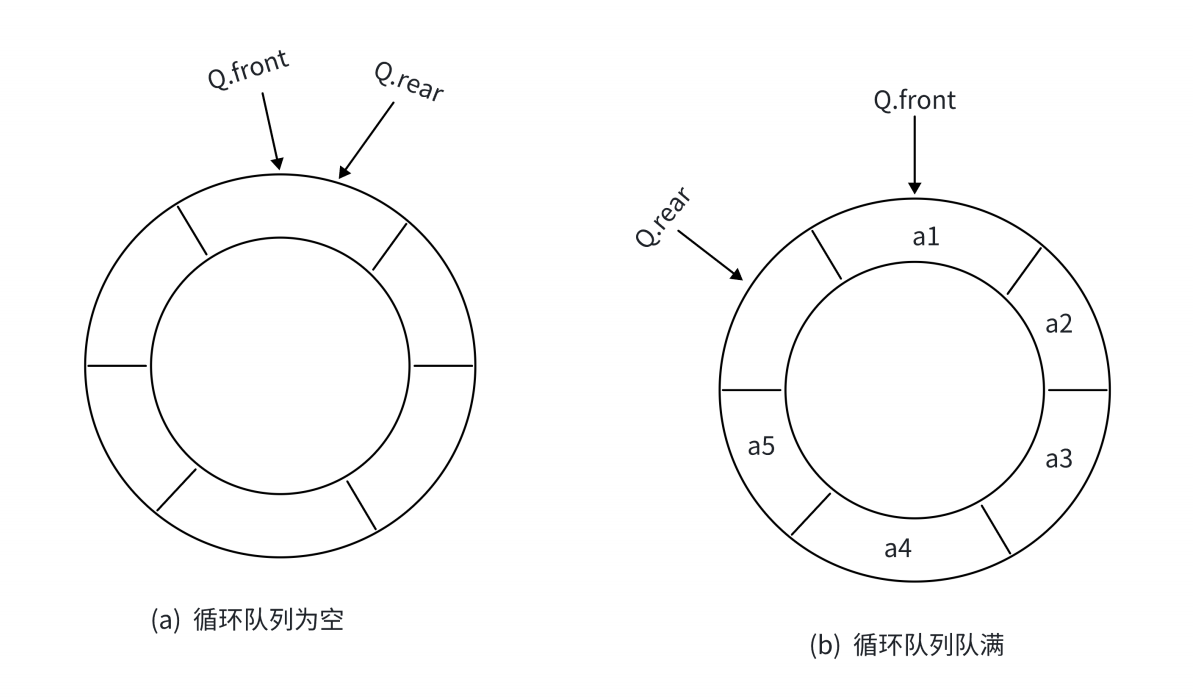

导出下面的onnx模型:

可以看到,该模型的输入batch是动态的。
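You can also check which axes are dynamic without a graph viewer, by reading the input metadata from ONNX Runtime's Python API. In my experience, `session.get_inputs()[i].shape` reports fixed axes as integers and dynamic axes as symbolic names (strings) or `None`. The sketch below relies on that assumption. `dynamic_axes` is a hypothetical helper, not an ONNX Runtime function, and the axis name `'batch'` is only illustrative.

```python
def dynamic_axes(shape):
    """Return the indices of the axes that are not fixed integers.

    `shape` is assumed to look like onnxruntime's NodeArg.shape, e.g.
    ['batch', 1, 28, 28], where dynamic axes are strings or None.
    """
    return [axis for axis, dim in enumerate(shape) if not isinstance(dim, int)]


# With a live session (requires onnxruntime and lenet.onnx):
#   import onnxruntime
#   session = onnxruntime.InferenceSession("lenet.onnx", providers=['CPUExecutionProvider'])
#   print(dynamic_axes(session.get_inputs()[0].shape))
print(dynamic_axes(['batch', 1, 28, 28]))
print(dynamic_axes([None, 3, 'height', 'width']))
```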

ONNX Runtime dynamic-input inference (Python):

```python
import cv2
import numpy as np
import onnxruntime
from pathlib import Path

onnx_session = onnxruntime.InferenceSession("lenet.onnx", providers=['CPUExecutionProvider'])

# Read every image in ./images as grayscale, resize to 28x28, scale to [0, 1] and stack them into one batch
image_files = sorted(Path('./images').glob('*'))
input_tensor = []
for image_file in image_files:
    image = cv2.imread(str(image_file), cv2.IMREAD_GRAYSCALE)
    blob = cv2.dnn.blobFromImage(image, 1/255., size=(28, 28))  # shape (1, 1, 28, 28)
    input_tensor.append(np.squeeze(blob, axis=0))               # shape (1, 28, 28)

input_name = [node.name for node in onnx_session.get_inputs()]
output_name = [node.name for node in onnx_session.get_outputs()]

# The batch dimension is dynamic, so the whole (N, 1, 28, 28) stack is fed in one call
inputs = {input_name[0]: np.array(input_tensor)}
outputs = onnx_session.run(output_name, inputs)[0]  # shape (N, 10)
print(np.argmax(outputs, axis=1))
```

ONNX Runtime dynamic-input inference (C++):

```cpp
#include <algorithm>
#include <iostream>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <onnxruntime_cxx_api.h>

int main(int argc, char* argv[])
{
    Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "lenet");
    Ort::SessionOptions session_options;
    session_options.SetIntraOpNumThreads(1);
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_EXTENDED);

    // ORTCHAR_T is wchar_t on Windows; on Linux/macOS pass a plain "lenet.onnx" instead
    const wchar_t* model_path = L"lenet.onnx";
    Ort::Session session(env, model_path, session_options);

    // GetInputNameAllocated/GetOutputNameAllocated (ONNX Runtime >= 1.13) replace the older GetInputName/GetOutputName.
    // The AllocatedStringPtr objects own the strings, so they must stay alive until Run() returns.
    Ort::AllocatorWithDefaultOptions allocator;
    std::vector<Ort::AllocatedStringPtr> name_holders;
    std::vector<const char*> input_node_names;
    for (size_t i = 0; i < session.GetInputCount(); i++)
    {
        name_holders.push_back(session.GetInputNameAllocated(i, allocator));
        input_node_names.push_back(name_holders.back().get());
    }
    std::vector<const char*> output_node_names;
    for (size_t i = 0; i < session.GetOutputCount(); i++)
    {
        name_holders.push_back(session.GetOutputNameAllocated(i, allocator));
        output_node_names.push_back(name_holders.back().get());
    }

    // Load every image as a single-channel 28x28 image
    std::vector<std::string> filenames;
    std::vector<cv::Mat> images;
    cv::glob("./images", filenames);
    for (const auto& filename : filenames)
    {
        cv::Mat image = cv::imread(filename, cv::IMREAD_GRAYSCALE);
        cv::resize(image, image, cv::Size(28, 28));
        images.push_back(image);
    }

    // Pack the batch into one contiguous NCHW float buffer: index = n*28*28 + i*28 + j
    const size_t input_tensor_size = images.size() * 1 * 28 * 28;
    std::vector<float> input_tensor_values(input_tensor_size);
    for (size_t n = 0; n < images.size(); n++)
    {
        images[n].convertTo(images[n], CV_32F, 1.0 / 255);
        for (int i = 0; i < 28; i++)
            for (int j = 0; j < 28; j++)
                input_tensor_values[n * 28 * 28 + i * 28 + j] = images[n].at<float>(i, j);
    }

    // The batch dimension is set at run time from the number of images
    std::vector<int64_t> input_node_dims = { (int64_t)images.size(), 1, 28, 28 };
    auto memory_info = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);
    Ort::Value input_tensor = Ort::Value::CreateTensor<float>(memory_info, input_tensor_values.data(), input_tensor_size, input_node_dims.data(), input_node_dims.size());
    std::vector<Ort::Value> inputs;
    inputs.push_back(std::move(input_tensor));

    std::vector<Ort::Value> outputs = session.Run(Ort::RunOptions{ nullptr }, input_node_names.data(), inputs.data(), input_node_names.size(), output_node_names.data(), output_node_names.size());

    // The output has shape (N, 10): print the argmax of each row
    const float* rawOutput = outputs[0].GetTensorData<float>();
    size_t count = outputs[0].GetTensorTypeAndShapeInfo().GetElementCount();
    std::vector<float> preds(rawOutput, rawOutput + count);
    for (size_t i = 0; i < images.size(); i++)
    {
        std::cout << std::max_element(preds.begin() + 10 * i, preds.begin() + 10 * (i + 1)) - preds.begin() - 10 * i << std::endl;
    }
    return 0;
}
```
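The C++ programs in this article rely on contiguous row-major NCHW layout. Element (n, c, h, w) of an N×C×H×W tensor sits at offset ((n·C + c)·H + h)·W + w. For LeNet (C = 1, H = W = 28) this reduces to the `n * 28 * 28 + i * 28 + j` used above, and the (N, 10) output row for image i starts at `10 * i`. The pure-Python sketch below checks that formula against a nested-loop flattening. `nchw_offset` and `flatten_nchw` are illustrative names only.

```python
def nchw_offset(n, c, h, w, C, H, W):
    """Offset of element (n, c, h, w) in a contiguous row-major NCHW buffer."""
    return ((n * C + c) * H + h) * W + w


def flatten_nchw(batch):
    """Flatten a nested list batch[n][c][h][w] in the same order the C++ loops fill their buffer."""
    flat = []
    for image in batch:
        for channel in image:
            for row in channel:
                flat.extend(row)
    return flat


# A tiny 2 x 1 x 2 x 3 batch whose values encode their own (n, c, h, w) position
N, C, H, W = 2, 1, 2, 3
batch = [[[[1000 * n + 100 * c + 10 * h + w for w in range(W)] for h in range(H)]
          for c in range(C)] for n in range(N)]
flat = flatten_nchw(batch)
for n in range(N):
    for c in range(C):
        for h in range(H):
            for w in range(W):
                assert flat[nchw_offset(n, c, h, w, C, H, W)] == batch[n][c][h][w]
print(len(flat))
```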

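Dynamic-input inference of YOLOv5 with ONNX Runtime

Download the YOLOv5 v7.0 code from https://github.com/ultralytics/yolov5/releases/tag/v7.0 and export the model with:

```bash
python export.py --weights yolov5n.pt --include onnx --dynamic
```

This exports the following ONNX model (the ONNX Runtime code below loads it as yolov5n_dynamic.onnx):

As can be seen, the model's input batch size, height and width are all dynamic.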
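Height and width are dynamic, so the number of candidate boxes in the output changes with the input size. The C++ code below hard-codes `output_numbox` for a 640×640 input. The sketch below assumes the standard YOLOv5 head: 3 anchors per cell on the stride-8, 16 and 32 feature maps, with 85 values per box for COCO. Under that assumption the count can be computed for any size. `num_candidates` is a hypothetical helper. It also assumes both sides are multiples of 32, which the stride-32 feature map requires.

```python
def num_candidates(height, width, strides=(8, 16, 32), anchors_per_cell=3):
    """Number of rows in YOLOv5's (N, rows, 85) output for one input size.

    Assumes height and width are multiples of the largest stride (32).
    """
    if height % max(strides) or width % max(strides):
        raise ValueError("height and width should be multiples of %d" % max(strides))
    return anchors_per_cell * sum((height // s) * (width // s) for s in strides)


for side in (480, 640, 960):
    print(side, num_candidates(side, side))
```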

ONNX Runtime dynamic-input inference (Python):

```python
import cv2
import numpy as np
import onnxruntime
from pathlib import Path

class_names = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',
               'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
               'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
               'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',
               'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',
               'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',
               'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
               'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',
               'hair drier', 'toothbrush']  # the 80 COCO classes

input_shape = (640, 640)    # (height, width) fed to the network; all images in one batch share it
score_threshold = 0.2       # minimum objectness * class score
nms_threshold = 0.5         # IoU threshold for NMS
confidence_threshold = 0.2  # minimum objectness


def nms(boxes, scores, score_threshold, nms_threshold):
    # Greedy, class-agnostic NMS on (x1, y1, x2, y2) boxes.
    # score_threshold is unused here: filter_box has already applied it.
    x1 = boxes[:, 0]
    y1 = boxes[:, 1]
    x2 = boxes[:, 2]
    y2 = boxes[:, 3]
    areas = (y2 - y1 + 1) * (x2 - x1 + 1)
    keep = []
    index = scores.argsort()[::-1]
    while index.size > 0:
        i = index[0]
        keep.append(i)
        x11 = np.maximum(x1[i], x1[index[1:]])
        y11 = np.maximum(y1[i], y1[index[1:]])
        x22 = np.minimum(x2[i], x2[index[1:]])
        y22 = np.minimum(y2[i], y2[index[1:]])
        w = np.maximum(0, x22 - x11 + 1)
        h = np.maximum(0, y22 - y11 + 1)
        overlaps = w * h
        ious = overlaps / (areas[i] + areas[index[1:]] - overlaps)
        idx = np.where(ious <= nms_threshold)[0]
        index = index[idx + 1]
    return keep


def xywh2xyxy(x):
    # (center x, center y, w, h) -> (x1, y1, x2, y2)
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2
    y[:, 1] = x[:, 1] - x[:, 3] / 2
    y[:, 2] = x[:, 0] + x[:, 2] / 2
    y[:, 3] = x[:, 1] + x[:, 3] / 2
    return y


def filter_box(outputs):  # drop low-confidence boxes, then run NMS
    outputs = np.squeeze(outputs)  # (num_boxes, 85): x, y, w, h, objectness, 80 class scores
    outputs = outputs[outputs[..., 4] > confidence_threshold]
    classes_scores = outputs[..., 5:]
    boxes = []
    scores = []
    class_ids = []
    for i in range(len(classes_scores)):
        class_id = np.argmax(classes_scores[i])
        outputs[i][4] *= classes_scores[i][class_id]  # final score = objectness * class score
        outputs[i][5] = class_id                      # column 5 now holds the class id
        if outputs[i][4] > score_threshold:
            boxes.append(outputs[i][:6])
            scores.append(outputs[i][4])
            class_ids.append(outputs[i][5])
    if not boxes:  # nothing detected in this image
        return np.zeros((0, 6), dtype=np.float32)
    boxes = xywh2xyxy(np.array(boxes))
    scores = np.array(scores)
    indices = nms(boxes, scores, score_threshold, nms_threshold)
    return boxes[indices]


def letterbox(im, new_shape=(416, 416), color=(114, 114, 114)):
    # Resize while keeping the aspect ratio, then pad to new_shape (height, width)
    shape = im.shape[:2]  # current shape [height, width]
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])  # scale ratio (new / old)
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))  # (width, height) after resizing
    dw, dh = (new_shape[1] - new_unpad[0]) / 2, (new_shape[0] - new_unpad[1]) / 2  # wh padding
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im


def scale_boxes(boxes, shape):
    # Map boxes (x1, y1, x2, y2) from the letterboxed input_shape back to the original image shape
    gain = min(input_shape[0] / shape[0], input_shape[1] / shape[1])  # gain = input / original
    pad = (input_shape[1] - shape[1] * gain) / 2, (input_shape[0] - shape[0] * gain) / 2  # wh padding
    boxes[..., [0, 2]] -= pad[0]  # x padding
    boxes[..., [1, 3]] -= pad[1]  # y padding
    boxes[..., :4] /= gain
    boxes[..., [0, 2]] = boxes[..., [0, 2]].clip(0, shape[1])  # x1, x2
    boxes[..., [1, 3]] = boxes[..., [1, 3]].clip(0, shape[0])  # y1, y2
    return boxes


def draw(image, box_data):
    box_data = scale_boxes(box_data, image.shape)
    boxes = box_data[..., :4].astype(np.int32)
    scores = box_data[..., 4]
    classes = box_data[..., 5].astype(np.int32)
    for box, score, cl in zip(boxes, scores, classes):
        x1, y1, x2, y2 = map(int, box)
        cv2.rectangle(image, (x1, y1), (x2, y2), (255, 0, 0), 1)
        cv2.putText(image, '{0} {1:.2f}'.format(class_names[cl], score), (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 1)


if __name__ == "__main__":
    image_files = sorted(Path('./images').glob('*'))
    images = []
    input_tensor = []
    for image_file in image_files:
        image = cv2.imread(str(image_file))
        images.append(image)
        blob = letterbox(image, input_shape)
        blob = blob[:, :, ::-1].transpose(2, 0, 1).astype(dtype=np.float32)  # BGR->RGB and HWC->CHW
        blob = blob / 255.0
        input_tensor.append(blob)

    # Providers are tried in priority order: CUDA first, CPU as the fallback
    onnx_session = onnxruntime.InferenceSession('yolov5n_dynamic.onnx', providers=['CUDAExecutionProvider', 'CPUExecutionProvider'])
    input_name = [node.name for node in onnx_session.get_inputs()]
    output_name = [node.name for node in onnx_session.get_outputs()]
    inputs = {input_name[0]: np.array(input_tensor)}   # (N, 3, 640, 640)
    outputs = onnx_session.run(output_name, inputs)[0]  # (N, 25200, 85) for a 640x640 input
    for i in range(outputs.shape[0]):
        boxes = filter_box(outputs[i])
        draw(images[i], boxes)
        cv2.imwrite('result' + str(i) + '.jpg', images[i])
```
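`letterbox` and `scale_boxes` are inverses of each other. The image is scaled by r = min(640/h, 640/w) and padded symmetrically. Boxes are mapped back by subtracting the padding and dividing by r. The worked sketch below uses plain numbers and assumes a 1280×720 (w×h) source image with the 640×640 input used above. `letterbox_params` and `unletterbox` are illustrative names that mirror the arithmetic of `scale_boxes`. `letterbox` itself rounds the padding to whole pixels, which makes no difference for this example.

```python
def letterbox_params(src_h, src_w, dst_h=640, dst_w=640):
    """Scale ratio and (x, y) padding used when letterboxing src into dst."""
    r = min(dst_h / src_h, dst_w / src_w)
    pad_x = (dst_w - src_w * r) / 2
    pad_y = (dst_h - src_h * r) / 2
    return r, pad_x, pad_y


def unletterbox(box, src_h, src_w, dst_h=640, dst_w=640):
    """Map an (x1, y1, x2, y2) box from the network input back to the source image."""
    r, pad_x, pad_y = letterbox_params(src_h, src_w, dst_h, dst_w)
    x1, y1, x2, y2 = box
    # Undo the padding, undo the scaling, then clip to the source image
    x1, x2 = [min(max((v - pad_x) / r, 0), src_w) for v in (x1, x2)]
    y1, y2 = [min(max((v - pad_y) / r, 0), src_h) for v in (y1, y2)]
    return x1, y1, x2, y2


r, pad_x, pad_y = letterbox_params(720, 1280)
print(r, pad_x, pad_y)
print(unletterbox((100, 240, 300, 340), 720, 1280))
```

Here the 1280×720 image is halved to 640×360 and padded by 140 pixels at the top and bottom. A box detected at y = 240 in the network input therefore lies at (240 − 140) / 0.5 = 200 in the original image.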

ONNX Runtime dynamic-input inference (C++):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <iostream>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <onnxruntime_cxx_api.h>

const std::vector<std::string> class_names = {
    "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
    "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
    "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
    "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
    "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
    "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
    "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
    "hair drier", "toothbrush" };  // class names (80 COCO classes)

const int input_width = 640;
const int input_height = 640;
const float score_threshold = 0.2;
const float nms_threshold = 0.5;
const float confidence_threshold = 0.2;
const int num_classes = class_names.size();
const int output_numprob = 5 + num_classes;  // x, y, w, h, objectness + class scores
// 3 anchors per cell on the stride-8/16/32 feature maps: 25200 boxes for a 640x640 input
const int output_numbox = 3 * (input_width / 8 * input_height / 8 + input_width / 16 * input_height / 16 + input_width / 32 * input_height / 32);

std::vector<std::string> filenames;

// Letterbox: resize keeping the aspect ratio, then pad to newShape
void LetterBox(const cv::Mat& image, cv::Mat& outImage,
    const cv::Size& newShape = cv::Size(640, 640), const cv::Scalar& color = cv::Scalar(114, 114, 114))
{
    cv::Size shape = image.size();
    float r = std::min((float)newShape.height / (float)shape.height, (float)newShape.width / (float)shape.width);
    int new_un_pad[2] = { (int)std::round((float)shape.width * r), (int)std::round((float)shape.height * r) };
    auto dw = (float)(newShape.width - new_un_pad[0]) / 2;
    auto dh = (float)(newShape.height - new_un_pad[1]) / 2;
    // Resize if either side changes (the Python letterbox resizes in the same case)
    if (shape.width != new_un_pad[0] || shape.height != new_un_pad[1])
        cv::resize(image, outImage, cv::Size(new_un_pad[0], new_un_pad[1]));
    else
        outImage = image.clone();
    int top = int(std::round(dh - 0.1f));
    int bottom = int(std::round(dh + 0.1f));
    int left = int(std::round(dw - 0.1f));
    int right = int(std::round(dw + 0.1f));
    cv::copyMakeBorder(outImage, outImage, top, bottom, left, right, cv::BORDER_CONSTANT, color);
}

// Preprocessing: letterbox, BGR->RGB, scale to [0, 1], HWC->CHW, append to one NCHW buffer
void pre_process(std::vector<cv::Mat>& images, std::vector<float>& inputs)
{
    cv::Mat letterbox;
    std::vector<cv::Mat> split_images;
    for (size_t i = 0; i < images.size(); i++)
    {
        LetterBox(images[i], letterbox, cv::Size(input_width, input_height));
        cv::cvtColor(letterbox, letterbox, cv::COLOR_BGR2RGB);
        letterbox.convertTo(letterbox, CV_32FC3, 1.0f / 255.0f);
        cv::split(letterbox, split_images);
        for (int c = 0; c < letterbox.channels(); ++c)
        {
            std::vector<float> split_image_data = split_images[c].reshape(1, 1);
            inputs.insert(inputs.end(), split_image_data.begin(), split_image_data.end());
        }
    }
}

// Inference
void process(const wchar_t* model, std::vector<float>& inputs, std::vector<Ort::Value>& outputs)
{
    Ort::Env env(ORT_LOGGING_LEVEL_WARNING, "yolov5n");
    Ort::SessionOptions session_options;
    session_options.SetIntraOpNumThreads(12);  // number of intra-op threads
    session_options.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);  // enable all graph optimizations

    // CUDA execution provider options
    OrtCUDAProviderOptions cuda_option;
    cuda_option.device_id = 0;
    cuda_option.arena_extend_strategy = 0;
    cuda_option.cudnn_conv_algo_search = OrtCudnnConvAlgoSearchExhaustive;
    cuda_option.gpu_mem_limit = SIZE_MAX;
    cuda_option.do_copy_in_default_stream = 1;
    session_options.AppendExecutionProvider_CUDA(cuda_option);

    Ort::Session session(env, model, session_options);

    // Input/output names of the YOLOv5 v7.0 ONNX export
    std::vector<const char*> input_node_names = { "images" };
    std::vector<const char*> output_node_names = { "output0" };

    // NCHW input; the batch size follows from the number of floats produced by pre_process
    int64_t batch = inputs.size() / (3 * input_height * input_width);
    std::vector<int64_t> input_node_dims = { batch, 3, input_height, input_width };
    auto memory_info = Ort::MemoryInfo::CreateCpu(OrtArenaAllocator, OrtMemTypeDefault);
    Ort::Value input_tensor = Ort::Value::CreateTensor<float>(memory_info, inputs.data(), inputs.size(), input_node_dims.data(), input_node_dims.size());
    std::vector<Ort::Value> ort_inputs;
    ort_inputs.push_back(std::move(input_tensor));  // move instead of copying the tensor

    outputs = session.Run(Ort::RunOptions{ nullptr }, input_node_names.data(), ort_inputs.data(), input_node_names.size(), output_node_names.data(), output_node_names.size());

    // Note: release() only detaches the raw handles; the session and env are not freed here
    session.release();
    env.release();
}

// Greedy, class-agnostic NMS; indices receives the positions (in boxes) of the kept boxes
void nms(std::vector<cv::Rect>& boxes, std::vector<float>& scores, float score_threshold, float nms_threshold, std::vector<int>& indices)
{
    assert(boxes.size() == scores.size());
    struct BoxScore
    {
        cv::Rect box;
        float score;
        int id;
    };
    std::vector<BoxScore> boxes_scores;
    for (size_t i = 0; i < boxes.size(); i++)
    {
        BoxScore box_conf;
        box_conf.box = boxes[i];
        box_conf.score = scores[i];
        box_conf.id = i;
        if (scores[i] > score_threshold) boxes_scores.push_back(box_conf);
    }
    std::sort(boxes_scores.begin(), boxes_scores.end(), [](const BoxScore& a, const BoxScore& b) { return a.score > b.score; });
    std::vector<float> area(boxes_scores.size());
    for (size_t i = 0; i < boxes_scores.size(); ++i)
        area[i] = boxes_scores[i].box.width * boxes_scores[i].box.height;
    std::vector<bool> isSuppressed(boxes_scores.size(), false);
    for (size_t i = 0; i < boxes_scores.size(); ++i)
    {
        if (isSuppressed[i]) continue;
        for (size_t j = i + 1; j < boxes_scores.size(); ++j)
        {
            if (isSuppressed[j]) continue;
            float x1 = (std::max)(boxes_scores[i].box.x, boxes_scores[j].box.x);
            float y1 = (std::max)(boxes_scores[i].box.y, boxes_scores[j].box.y);
            float x2 = (std::min)(boxes_scores[i].box.x + boxes_scores[i].box.width, boxes_scores[j].box.x + boxes_scores[j].box.width);
            float y2 = (std::min)(boxes_scores[i].box.y + boxes_scores[i].box.height, boxes_scores[j].box.y + boxes_scores[j].box.height);
            float w = (std::max)(0.0f, x2 - x1);
            float h = (std::max)(0.0f, y2 - y1);
            float inter = w * h;
            float ovr = inter / (area[i] + area[j] - inter);
            if (ovr >= nms_threshold) isSuppressed[j] = true;
        }
    }
    for (size_t i = 0; i < boxes_scores.size(); ++i)
        if (!isSuppressed[i]) indices.push_back(boxes_scores[i].id);
}

// Map a box from the letterboxed network input back to the original image size
void scale_boxes(cv::Rect& box, cv::Size size)
{
    float gain = std::min(input_width * 1.0 / size.width, input_height * 1.0 / size.height);
    int pad_w = (input_width - size.width * gain) / 2;
    int pad_h = (input_height - size.height * gain) / 2;
    box.x -= pad_w;
    box.y -= pad_h;
    box.x /= gain;
    box.y /= gain;
    box.width /= gain;
    box.height /= gain;
}

// Visualization
void draw_result(cv::Mat& image, const std::string& label, cv::Rect box)
{
    cv::rectangle(image, box, cv::Scalar(255, 0, 0), 1);
    cv::putText(image, label, cv::Point(box.x, box.y), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(0, 0, 255), 1);
}

// Postprocessing; the output tensor is laid out as (N, output_numbox, output_numprob)
void post_process(std::vector<cv::Mat>& images, std::vector<cv::Mat>& results, std::vector<Ort::Value>& outputs)
{
    const float* output_data = outputs[0].GetTensorData<float>();
    for (size_t n = 0; n < images.size(); ++n)
    {
        std::vector<cv::Rect> boxes;
        std::vector<float> scores;
        std::vector<int> class_ids;
        for (int i = 0; i < output_numbox; ++i)
        {
            const float* ptr = output_data + (n * output_numbox + i) * output_numprob;
            float objness = ptr[4];
            if (objness < confidence_threshold)
                continue;
            const float* classes_scores = ptr + 5;
            int class_id = std::max_element(classes_scores, classes_scores + num_classes) - classes_scores;
            float score = classes_scores[class_id] * objness;
            if (score < score_threshold)
                continue;
            float x = ptr[0], y = ptr[1], w = ptr[2], h = ptr[3];  // center x, center y, width, height
            cv::Rect box(int(x - 0.5 * w), int(y - 0.5 * h), int(w), int(h));
            scale_boxes(box, images[n].size());
            boxes.push_back(box);
            scores.push_back(score);
            class_ids.push_back(class_id);
        }
        std::vector<int> indices;
        nms(boxes, scores, score_threshold, nms_threshold, indices);
        for (int idx : indices)
        {
            std::string label = class_names[class_ids[idx]] + ":" + cv::format("%.2f", scores[idx]);
            draw_result(results[n], label, boxes[idx]);
        }
    }
}

int main(int argc, char* argv[])
{
    std::vector<cv::Mat> images;
    cv::glob("./images", filenames);
    for (const auto& filename : filenames)
    {
        cv::Mat image = cv::imread(filename);
        images.push_back(image);
    }
    // cv::Mat copies share pixel data, so results and images refer to the same buffers
    std::vector<cv::Mat> results = images;
    std::vector<float> inputs;
    pre_process(images, inputs);
    const wchar_t* model = L"yolov5n_dynamic.onnx";  // ORTCHAR_T is wchar_t on Windows
    std::vector<Ort::Value> outputs;
    process(model, inputs, outputs);
    post_process(images, results, outputs);
    for (size_t i = 0; i < results.size(); i++)
        cv::imwrite("result" + std::to_string(i) + ".jpg", results[i]);
    return 0;
}
```
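Both `nms` implementations in this article are class-agnostic. A high-scoring box suppresses any overlapping box, even one of a different class. YOLOv5's own `non_max_suppression` works differently, as far as I know, when its `agnostic` flag is left at the default `False`. It makes NMS per-class by shifting every box by class_id × a large constant (7680 px in the versions I have looked at), so boxes of different classes can never overlap. The pure-Python sketch below shows that offset trick. `iou`, `greedy_nms` and `class_aware_nms` are hypothetical helpers, not YOLOv5 functions.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold):
    """Class-agnostic greedy NMS: keep a box unless it overlaps an already kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


def class_aware_nms(boxes, scores, class_ids, iou_threshold, max_wh=7680):
    """Per-class NMS via the offset trick: boxes of different classes are moved far apart."""
    shifted = [(x1 + c * max_wh, y1 + c * max_wh, x2 + c * max_wh, y2 + c * max_wh)
               for (x1, y1, x2, y2), c in zip(boxes, class_ids)]
    return greedy_nms(shifted, scores, iou_threshold)


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (0, 0, 10, 10)]
scores = [0.9, 0.8, 0.7]
class_ids = [0, 0, 1]
print(greedy_nms(boxes, scores, 0.5))
print(class_aware_nms(boxes, scores, class_ids, 0.5))
```

With the class-agnostic version, the class-1 box is suppressed by the class-0 box it coincides with. With the offset, it survives and only the same-class duplicate is removed. Whether that is desirable depends on the application.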

TensorRT dynamic-input inference

Dynamic-input inference of LeNet with TensorRT

Convert the model with the trtexec tool:

```bash
./trtexec.exe --onnx=lenet.onnx --saveEngine=lenet.engine --minShapes=input:1x1x28x28 --optShapes=input:2x1x28x28 --maxShapes=input:8x1x28x28
```
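With `--minShapes`/`--optShapes`/`--maxShapes`, trtexec builds one optimization profile. At run time, every dimension of the input shape must lie between the min and max shapes. The opt shape is only the size TensorRT tunes its kernels for. So this LeNet engine accepts batches of 1 to 8 images. The pure-Python sketch below checks a shape against trtexec-style specs before it is passed to `set_input_shape`. `parse_shape_spec` and `fits_profile` are hypothetical helpers, not part of TensorRT.

```python
def parse_shape_spec(spec):
    """Split a trtexec-style 'name:AxBxC...' string into (name, tuple_of_ints)."""
    name, dims = spec.rsplit(":", 1)
    return name, tuple(int(d) for d in dims.split("x"))


def fits_profile(shape, min_spec, max_spec):
    """True if every dimension of `shape` lies within the profile's [min, max] range."""
    _, lo = parse_shape_spec(min_spec)
    _, hi = parse_shape_spec(max_spec)
    return len(shape) == len(lo) == len(hi) and all(a <= d <= b for d, a, b in zip(shape, lo, hi))


print(fits_profile((8, 1, 28, 28), "input:1x1x28x28", "input:8x1x28x28"))
print(fits_profile((9, 1, 28, 28), "input:1x1x28x28", "input:8x1x28x28"))
```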

TensorRT dynamic-input inference (Python):

```python
import cv2
import numpy as np
import tensorrt as trt
import pycuda.autoinit  # initializes CUDA and creates a context on import
import pycuda.driver as cuda
from pathlib import Path

logger = trt.Logger(trt.Logger.WARNING)
with open("lenet.engine", "rb") as f, trt.Runtime(logger) as runtime:
    engine = runtime.deserialize_cuda_engine(f.read())

# Build the batch; its size must lie within the engine's profile (1 to 8 here)
image_files = sorted(Path('./images').glob('*'))
input_tensor = []
for image_file in image_files:
    print(str(image_file))
    image = cv2.imread(str(image_file), cv2.IMREAD_GRAYSCALE)
    blob = cv2.dnn.blobFromImage(image, 1/255., size=(28, 28))
    input_tensor.append(np.squeeze(blob, axis=0))
batch = len(input_tensor)

# Page-locked host buffers and device buffers sized for this batch
h_input = cuda.pagelocked_empty(trt.volume((batch, 1, 28, 28)), dtype=np.float32)
h_output = cuda.pagelocked_empty(trt.volume((batch, 10)), dtype=np.float32)
d_input = cuda.mem_alloc(h_input.nbytes)
d_output = cuda.mem_alloc(h_output.nbytes)
stream = cuda.Stream()
np.copyto(h_input, np.array(input_tensor).ravel())

with engine.create_execution_context() as context:
    # Fix the dynamic batch dimension before running (set_input_shape requires TensorRT >= 8.5)
    context.set_input_shape("input", (batch, 1, 28, 28))
    cuda.memcpy_htod_async(d_input, h_input, stream)
    # execute_async_v2 is the TensorRT 8.x bindings API; TensorRT 10 removed it in favor of execute_async_v3
    context.execute_async_v2(bindings=[int(d_input), int(d_output)], stream_handle=stream.handle)
    cuda.memcpy_dtoh_async(h_output, d_output, stream)
    stream.synchronize()

pred = np.argmax(h_output.reshape(batch, 10), axis=1)
print(pred)
```
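The script above sends every image in ./images as one batch, so with this engine it only works for folders of 1 to 8 images. A larger folder has to be split. Below is a minimal sketch of that split. The `chunks` helper is hypothetical. Each chunk would then get its own `set_input_shape` call and one execution.

```python
def chunks(items, max_batch):
    """Yield consecutive slices of `items` with at most `max_batch` elements each."""
    if max_batch < 1:
        raise ValueError("max_batch must be at least 1")
    for start in range(0, len(items), max_batch):
        yield items[start:start + max_batch]


# e.g. 19 images and an engine whose max batch is 8
sizes = [len(batch) for batch in chunks(list(range(19)), 8)]
print(sizes)
```

One design option is to allocate the pinned and device buffers once for the largest batch (8) and reuse them for every chunk. Then only the shape passed to `set_input_shape` and the number of bytes copied change between runs.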

TensorRT dynamic-input inference (C++):

```cpp
#include <algorithm>
#include <cstdio>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <cuda_runtime.h>
#include <NvInfer.h>
#include <NvInferRuntime.h>
#include <NvOnnxParser.h>

inline const char* severity_string(nvinfer1::ILogger::Severity t)
{
    switch (t)
    {
    case nvinfer1::ILogger::Severity::kINTERNAL_ERROR: return "internal_error";
    case nvinfer1::ILogger::Severity::kERROR: return "error";
    case nvinfer1::ILogger::Severity::kWARNING: return "warning";
    case nvinfer1::ILogger::Severity::kINFO: return "info";
    case nvinfer1::ILogger::Severity::kVERBOSE: return "verbose";
    default: return "unknown";
    }
}

// Logger required by TensorRT: prints INFO and more severe messages, warnings in yellow, errors in red
class TRTLogger : public nvinfer1::ILogger
{
public:
    virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override
    {
        if (severity <= Severity::kINFO)
        {
            if (severity == Severity::kWARNING)
                printf("\033[33m%s: %s\033[0m\n", severity_string(severity), msg);
            else if (severity <= Severity::kERROR)
                printf("\033[31m%s: %s\033[0m\n", severity_string(severity), msg);
            else
                printf("%s: %s\n", severity_string(severity), msg);
        }
    }
} logger;

// Read a whole binary file (the serialized engine) into memory
std::vector<unsigned char> load_file(const std::string& file)
{
    std::ifstream in(file, std::ios::in | std::ios::binary);
    if (!in.is_open())
        return {};
    in.seekg(0, std::ios::end);
    size_t length = in.tellg();
    std::vector<uint8_t> data;
    if (length > 0)
    {
        in.seekg(0, std::ios::beg);
        data.resize(length);
        in.read((char*)&data[0], length);
    }
    in.close();
    return data;
}

int main()
{
    std::vector<std::string> filenames;
    std::vector<cv::Mat> images;
    cv::glob("./images/*.png", filenames);
    for (const auto& filename : filenames)
    {
        cv::Mat image = cv::imread(filename, cv::IMREAD_GRAYSCALE);
        cv::resize(image, image, cv::Size(28, 28));
        images.push_back(image);
    }

    // Uses the global logger defined above
    auto engine_data = load_file("lenet.engine");
    nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(logger);
    nvinfer1::ICudaEngine* engine = runtime->deserializeCudaEngine(engine_data.data(), engine_data.size());
    if (engine == nullptr)
    {
        printf("Deserialize cuda engine failed.\n");
        runtime->destroy();
        return -1;
    }
    nvinfer1::IExecutionContext* execution_context = engine->createExecutionContext();
    // Binding 0 is the input; set its batch dimension before running.
    // setBindingDimensions, enqueueV2 and destroy() are TensorRT 8.x APIs that TensorRT 10 removed.
    execution_context->setBindingDimensions(0, nvinfer1::Dims4{ (int)images.size(), 1, 28, 28 });
    cudaStream_t stream = nullptr;
    cudaStreamCreate(&stream);

    int input_numel = images.size() * 1 * 28 * 28;
    int output_numel = images.size() * 10;

    // Pinned host buffer holding the whole NCHW batch
    float* input_data_host = nullptr;
    cudaMallocHost(&input_data_host, input_numel * sizeof(float));
    for (size_t n = 0; n < images.size(); n++)
    {
        images[n].convertTo(images[n], CV_32FC1, 1.0f / 255.0f);
        float* pimage = (float*)images[n].data;
        for (int i = 0; i < 28 * 28; i++)
            input_data_host[n * 28 * 28 + i] = pimage[i];
    }

    float* input_data_device = nullptr;
    float* output_data_host = new float[output_numel];
    float* output_data_device = nullptr;
    cudaMalloc(&input_data_device, input_numel * sizeof(float));
    cudaMalloc(&output_data_device, output_numel * sizeof(float));
    cudaMemcpyAsync(input_data_device, input_data_host, input_numel * sizeof(float), cudaMemcpyHostToDevice, stream);
    float* bindings[] = { input_data_device, output_data_device };
    bool success = execution_context->enqueueV2((void**)bindings, stream, nullptr);
    if (!success)
        printf("enqueueV2 failed.\n");
    cudaMemcpyAsync(output_data_host, output_data_device, output_numel * sizeof(float), cudaMemcpyDeviceToHost, stream);
    cudaStreamSynchronize(stream);

    // The output has shape (N, 10): print the argmax of each row
    for (size_t i = 0; i < images.size(); i++)
        std::cout << std::max_element(output_data_host + 10 * i, output_data_host + 10 * (i + 1)) - output_data_host - 10 * i << std::endl;

    // Free host/device buffers and TensorRT objects
    cudaFreeHost(input_data_host);
    cudaFree(input_data_device);
    cudaFree(output_data_device);
    delete[] output_data_host;
    cudaStreamDestroy(stream);
    execution_context->destroy();
    engine->destroy();
    runtime->destroy();
    return 0;
}
```

Dynamic-input inference of YOLOv5 with TensorRT

Convert the model with the trtexec tool:

```bash
trtexec.exe --onnx=yolov5n.onnx --saveEngine=yolov5n.engine --minShapes=images:1x3x480x480 --optShapes=images:1x3x640x640 --maxShapes=images:8x3x960x960
```
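This profile accepts batches of 1 to 8 and sides from 480 to 960, but the Python and C++ code below only run 640×640 inputs. If other sizes are used, the host and device buffers must match the actual shape. One option is to size them once for the max shape. The sketch below shows the arithmetic and assumes float32 tensors and the standard YOLOv5 output of 5 + 80 values per box. The helper name is hypothetical. In TensorRT 8.5 and later, `context.get_tensor_shape("output0")` should also report the resolved output shape once the input shape has been set.

```python
FLOAT32_BYTES = 4


def yolov5_buffer_bytes(batch, height, width, num_classes=80):
    """Byte sizes of the (N, 3, H, W) input and (N, boxes, 5 + classes) output, both float32."""
    boxes = 3 * sum((height // s) * (width // s) for s in (8, 16, 32))
    input_bytes = batch * 3 * height * width * FLOAT32_BYTES
    output_bytes = batch * boxes * (5 + num_classes) * FLOAT32_BYTES
    return input_bytes, output_bytes


print(yolov5_buffer_bytes(1, 640, 640))  # the shape used by the code below
print(yolov5_buffer_bytes(8, 960, 960))  # the profile's max shape
```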

TensorRT dynamic-input inference (Python):

```python
import cv2
import numpy as np
import tensorrt as trt
import pycuda.autoinit  # initializes CUDA and creates a context on import
import pycuda.driver as cuda
from pathlib import Path

class_names = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',
               'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
               'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
               'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',
               'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',
               'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',
               'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
               'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',
               'hair drier', 'toothbrush']  # the 80 COCO classes

input_shape = (640, 640)    # (height, width) fed to the network
score_threshold = 0.2       # minimum objectness * class score
nms_threshold = 0.5         # IoU threshold for NMS
confidence_threshold = 0.2  # minimum objectness


def nms(boxes, scores, score_threshold, nms_threshold):
    # Greedy, class-agnostic NMS on (x1, y1, x2, y2) boxes.
    # score_threshold is unused here: filter_box has already applied it.
    x1 = boxes[:, 0]
    y1 = boxes[:, 1]
    x2 = boxes[:, 2]
    y2 = boxes[:, 3]
    areas = (y2 - y1 + 1) * (x2 - x1 + 1)
    keep = []
    index = scores.argsort()[::-1]
    while index.size > 0:
        i = index[0]
        keep.append(i)
        x11 = np.maximum(x1[i], x1[index[1:]])
        y11 = np.maximum(y1[i], y1[index[1:]])
        x22 = np.minimum(x2[i], x2[index[1:]])
        y22 = np.minimum(y2[i], y2[index[1:]])
        w = np.maximum(0, x22 - x11 + 1)
        h = np.maximum(0, y22 - y11 + 1)
        overlaps = w * h
        ious = overlaps / (areas[i] + areas[index[1:]] - overlaps)
        idx = np.where(ious <= nms_threshold)[0]
        index = index[idx + 1]
    return keep


def xywh2xyxy(x):
    # (center x, center y, w, h) -> (x1, y1, x2, y2)
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2
    y[:, 1] = x[:, 1] - x[:, 3] / 2
    y[:, 2] = x[:, 0] + x[:, 2] / 2
    y[:, 3] = x[:, 1] + x[:, 3] / 2
    return y


def filter_box(outputs):  # drop low-confidence boxes, then run NMS
    outputs = np.squeeze(outputs).astype(dtype=np.float32)  # (num_boxes, 85)
    outputs = outputs[outputs[..., 4] > confidence_threshold]
    classes_scores = outputs[..., 5:]
    boxes = []
    scores = []
    class_ids = []
    for i in range(len(classes_scores)):
        class_id = np.argmax(classes_scores[i])
        outputs[i][4] *= classes_scores[i][class_id]  # final score = objectness * class score
        outputs[i][5] = class_id                      # column 5 now holds the class id
        if outputs[i][4] > score_threshold:
            boxes.append(outputs[i][:6])
            scores.append(outputs[i][4])
            class_ids.append(outputs[i][5])
    if not boxes:  # nothing detected in this image
        return np.zeros((0, 6), dtype=np.float32)
    boxes = xywh2xyxy(np.array(boxes))
    scores = np.array(scores)
    indices = nms(boxes, scores, score_threshold, nms_threshold)
    return boxes[indices]


def letterbox(im, new_shape=(416, 416), color=(114, 114, 114)):
    # Resize while keeping the aspect ratio, then pad to new_shape (height, width)
    shape = im.shape[:2]  # current shape [height, width]
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])  # scale ratio (new / old)
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))  # (width, height) after resizing
    dw, dh = (new_shape[1] - new_unpad[0]) / 2, (new_shape[0] - new_unpad[1]) / 2  # wh padding
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im


def scale_boxes(input_shape, boxes, shape):
    # Map boxes (x1, y1, x2, y2) from the letterboxed input_shape back to the original image shape
    gain = min(input_shape[0] / shape[0], input_shape[1] / shape[1])  # gain = input / original
    pad = (input_shape[1] - shape[1] * gain) / 2, (input_shape[0] - shape[0] * gain) / 2  # wh padding
    boxes[..., [0, 2]] -= pad[0]  # x padding
    boxes[..., [1, 3]] -= pad[1]  # y padding
    boxes[..., :4] /= gain
    boxes[..., [0, 2]] = boxes[..., [0, 2]].clip(0, shape[1])  # x1, x2
    boxes[..., [1, 3]] = boxes[..., [1, 3]].clip(0, shape[0])  # y1, y2
    return boxes


def draw(image, box_data):
    box_data = scale_boxes(input_shape, box_data, image.shape)
    boxes = box_data[..., :4].astype(np.int32)
    scores = box_data[..., 4]
    classes = box_data[..., 5].astype(np.int32)
    for box, score, cl in zip(boxes, scores, classes):
        x1, y1, x2, y2 = map(int, box)
        cv2.rectangle(image, (x1, y1), (x2, y2), (255, 0, 0), 1)
        cv2.putText(image, '{0} {1:.2f}'.format(class_names[cl], score), (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 1)


if __name__ == "__main__":
    logger = trt.Logger(trt.Logger.WARNING)
    with open("yolov5n.engine", "rb") as f, trt.Runtime(logger) as runtime:
        engine = runtime.deserialize_cuda_engine(f.read())

    image_files = sorted(Path('./images').glob('*'))
    images = []
    input_tensor = []
    for image_file in image_files:
        print(str(image_file))
        image = cv2.imread(str(image_file))  # 3-channel BGR
        images.append(image)
        blob = letterbox(image, input_shape)
        blob = blob[:, :, ::-1].transpose(2, 0, 1).astype(dtype=np.float32)  # BGR->RGB and HWC->CHW
        blob = blob / 255.0
        input_tensor.append(blob)
    batch = len(input_tensor)  # must be between 1 and 8 for the profile built above
    num_boxes = 25200          # 3 * (80*80 + 40*40 + 20*20) for a 640x640 input

    inputs_host = cuda.pagelocked_empty(trt.volume((batch, 3, input_shape[0], input_shape[1])), dtype=np.float32)
    outputs_host = cuda.pagelocked_empty(trt.volume((batch, num_boxes, 85)), dtype=np.float32)
    inputs_device = cuda.mem_alloc(inputs_host.nbytes)
    outputs_device = cuda.mem_alloc(outputs_host.nbytes)
    stream = cuda.Stream()
    np.copyto(inputs_host, np.array(input_tensor).ravel())

    with engine.create_execution_context() as context:
        # Fix the dynamic batch/height/width before running (TensorRT >= 8.5)
        context.set_input_shape("images", (batch, 3, input_shape[0], input_shape[1]))
        cuda.memcpy_htod_async(inputs_device, inputs_host, stream)
        context.execute_async_v2(bindings=[int(inputs_device), int(outputs_device)], stream_handle=stream.handle)
        cuda.memcpy_dtoh_async(outputs_host, outputs_device, stream)
        stream.synchronize()

    outputs_host = outputs_host.reshape(batch, num_boxes, 85)
    for i in range(batch):
        boxes = filter_box(outputs_host[i])
        draw(images[i], boxes)
        cv2.imwrite('result' + str(i) + '.jpg', images[i])
```

TensorRT dynamic-input inference (C++):

#include <iostream>

#include <fstream>

#include <vector>

#include <opencv2/opencv.hpp>

#include <cuda_runtime.h>

#include <NvInfer.h>

#include <NvInferRuntime.h>

const std::vector<std::string> class_names = {

"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",

"fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",

"elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",

"tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",

"sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",

"microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",

"hair drier", "toothbrush" }; //类别名称

const int input_width = 640;

const int input_height = 640;

const float score_threshold = 0.2;

const float nms_threshold = 0.5;

const float confidence_threshold = 0.2;

const int num_classes = class_names.size();

const int output_numprob = 5 + num_classes;

const int output_numbox = 3 * (input_width / 8 * input_height / 8 + input_width / 16 * input_height / 16 + input_width / 32 * input_height / 32);

int input_numel;

int output_numel;

std::vector<std::string> filenames;

inline const char* severity_string(nvinfer1::ILogger::Severity t)

{

switch (t)

{

case nvinfer1::ILogger::Severity::kINTERNAL_ERROR: return "internal_error";

case nvinfer1::ILogger::Severity::kERROR: return "error";

case nvinfer1::ILogger::Severity::kWARNING: return "warning";

case nvinfer1::ILogger::Severity::kINFO: return "info";

case nvinfer1::ILogger::Severity::kVERBOSE: return "verbose";

default: return "unknown";

}

}

class TRTLogger : public nvinfer1::ILogger

{

public:

virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override

{

if (severity <= Severity::kINFO)

{

if (severity == Severity::kWARNING)

printf("\033[33m%s: %s\033[0m\n", severity_string(severity), msg);

else if (severity <= Severity::kERROR)

printf("\033[31m%s: %s\033[0m\n", severity_string(severity), msg);

else

printf("%s: %s\n", severity_string(severity), msg);

}

}

} logger;

std::vector<unsigned char> load_file(const std::string& file)

{

std::ifstream in(file, std::ios::in | std::ios::binary);

if (!in.is_open())

return {};

in.seekg(0, std::ios::end);

size_t length = in.tellg();

std::vector<uint8_t> data;

if (length > 0)

{

in.seekg(0, std::ios::beg);

data.resize(length);

in.read((char*)&data[0], length);

}

in.close();

return data;

}

// LetterBox: resize while keeping the aspect ratio, then pad to newShape with a constant color

void LetterBox(const cv::Mat& image, cv::Mat& outImage,

const cv::Size& newShape = cv::Size(640, 640), const cv::Scalar& color = cv::Scalar(114, 114, 114))

{

cv::Size shape = image.size();

float r = std::min((float)newShape.height / (float)shape.height, (float)newShape.width / (float)shape.width);

float ratio[2]{ r, r };

int new_un_pad[2] = { (int)std::round((float)shape.width * r),(int)std::round((float)shape.height * r) };

auto dw = (float)(newShape.width - new_un_pad[0]) / 2;

auto dh = (float)(newShape.height - new_un_pad[1]) / 2;

if (shape.width != new_un_pad[0] || shape.height != new_un_pad[1]) // resize if either side changes

cv::resize(image, outImage, cv::Size(new_un_pad[0], new_un_pad[1]));

else

outImage = image.clone();

int top = int(std::round(dh - 0.1f));

int bottom = int(std::round(dh + 0.1f));

int left = int(std::round(dw - 0.1f));

int right = int(std::round(dw + 0.1f));

cv::Vec4d params;

params[0] = ratio[0];

params[1] = ratio[1];

params[2] = left;

params[3] = top;

cv::copyMakeBorder(outImage, outImage, top, bottom, left, right, cv::BORDER_CONSTANT, color);

}

// Preprocessing: letterbox, scale to [0, 1], and pack the batch into an NCHW float buffer

void pre_process(std::vector<cv::Mat>& images, float* input_data_host)

{

cv::Mat letterbox;

int image_area = input_width * input_height;

for (size_t i = 0; i < images.size(); i++)

{

LetterBox(images[i], letterbox, cv::Size(input_width, input_height));

letterbox.convertTo(letterbox, CV_32FC3, 1.0f / 255.0f);

float* pimage = (float*)letterbox.data;

// pimage is interleaved BGR; plane 0 receives R, plane 1 G, plane 2 B

float* phost_r = input_data_host + image_area * (3 * i + 0);

float* phost_g = input_data_host + image_area * (3 * i + 1);

float* phost_b = input_data_host + image_area * (3 * i + 2);

for (int j = 0; j < image_area; ++j, pimage += 3)

{

*phost_b++ = pimage[0];

*phost_g++ = pimage[1];

*phost_r++ = pimage[2];

}

}

}
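// Layout of input_data_host after pre_process(): element (n, c, y, x) is stored at

// ((n * 3 + c) * input_height + y) * input_width + x, so image n's channel c starts at (3 * n + c) * image_area.

// OpenCV returns interleaved BGR (HWC) pixels, and the copy loop writes them as planes in R, G, B order,

// which is the channel order YOLOv5 uses.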

// Network inference with a dynamic batch dimension

void process(std::string model, float* input_data_host, float* output_data_host)

{

auto engine_data = load_file(model);

auto runtime = nvinfer1::createInferRuntime(logger); // global logger defined above

auto engine = runtime->deserializeCudaEngine(engine_data.data(), engine_data.size());

if (engine == nullptr)

{

printf("failed to deserialize engine: %s\n", model.c_str());

delete runtime;

return;

}

auto execution_context = engine->createExecutionContext();

// Binding 0 is the input: set its actual shape (N, C, H, W) for this call.

// N must lie inside the optimization profile the engine was built with.

if (!execution_context->setBindingDimensions(0, nvinfer1::Dims4{ (int)filenames.size(), 3, input_height, input_width }))

{

printf("batch size %d is not supported by this engine\n", (int)filenames.size());

delete execution_context;

delete engine;

delete runtime;

return;

}

cudaStream_t stream = nullptr;

cudaStreamCreate(&stream);

float* input_data_device = nullptr;

cudaMalloc(&input_data_device, sizeof(float) * input_numel);

cudaMemcpyAsync(input_data_device, input_data_host, sizeof(float) * input_numel, cudaMemcpyHostToDevice, stream);

float* output_data_device = nullptr;

cudaMalloc(&output_data_device, sizeof(float) * output_numel);

void* bindings[] = { input_data_device, output_data_device };

if (!execution_context->enqueueV2(bindings, stream, nullptr))

printf("enqueueV2 failed\n");

cudaMemcpyAsync(output_data_host, output_data_device, sizeof(float) * output_numel, cudaMemcpyDeviceToHost, stream);

cudaStreamSynchronize(stream);

cudaStreamDestroy(stream);

cudaFree(input_data_device);

cudaFree(output_data_device);

delete execution_context;

delete engine;

delete runtime;

}

// NMS (class-agnostic: a kept box suppresses overlapping boxes of any class)

void nms(std::vector<cv::Rect>& boxes, std::vector<float>& scores, float score_threshold, float nms_threshold, std::vector<int>& indices)

{

assert(boxes.size() == scores.size());

struct BoxScore

{

cv::Rect box;

float score;

int id;

};

std::vector<BoxScore> boxes_scores;

for (size_t i = 0; i < boxes.size(); i++)

{

BoxScore box_conf;

box_conf.box = boxes[i];

box_conf.score = scores[i];

box_conf.id = i;

if (scores[i] > score_threshold) boxes_scores.push_back(box_conf);

}

std::sort(boxes_scores.begin(), boxes_scores.end(), [](BoxScore a, BoxScore b) { return a.score > b.score; });

std::vector<float> area(boxes_scores.size());

for (size_t i = 0; i < boxes_scores.size(); ++i)

{

area[i] = boxes_scores[i].box.width * boxes_scores[i].box.height;

}

std::vector<bool> isSuppressed(boxes_scores.size(), false);

for (size_t i = 0; i < boxes_scores.size(); ++i)

{

if (isSuppressed[i]) continue;

for (size_t j = i + 1; j < boxes_scores.size(); ++j)

{

if (isSuppressed[j]) continue;

float x1 = (std::max)(boxes_scores[i].box.x, boxes_scores[j].box.x);

float y1 = (std::max)(boxes_scores[i].box.y, boxes_scores[j].box.y);

float x2 = (std::min)(boxes_scores[i].box.x + boxes_scores[i].box.width, boxes_scores[j].box.x + boxes_scores[j].box.width);

float y2 = (std::min)(boxes_scores[i].box.y + boxes_scores[i].box.height, boxes_scores[j].box.y + boxes_scores[j].box.height);

float w = (std::max)(0.0f, x2 - x1);

float h = (std::max)(0.0f, y2 - y1);

float inter = w * h;

float ovr = inter / (area[i] + area[j] - inter);

if (ovr >= nms_threshold) isSuppressed[j] = true;

}

}

for (size_t i = 0; i < boxes_scores.size(); ++i)

{

if (!isSuppressed[i]) indices.push_back(boxes_scores[i].id);

}

}

// Map a box from the letterboxed network input back to original image coordinates

void scale_boxes(cv::Rect& box, cv::Size size)

{

float gain = std::min(input_width * 1.0 / size.width, input_height * 1.0 / size.height);

int pad_w = (input_width - size.width * gain) / 2;

int pad_h = (input_height - size.height * gain) / 2;

box.x -= pad_w;

box.y -= pad_h;

box.x /= gain;

box.y /= gain;

box.width /= gain;

box.height /= gain;

}

// Visualization

void draw_result(cv::Mat& image, std::string label, cv::Rect box)

{

cv::rectangle(image, box, cv::Scalar(255, 0, 0), 1);

int baseLine;

cv::Size label_size = cv::getTextSize(label, 1, 1, 1, &baseLine);

cv::Point tlc = cv::Point(box.x, box.y);

cv::Point brc = cv::Point(box.x, box.y + label_size.height + baseLine);

cv::putText(image, label, cv::Point(box.x, box.y), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(0, 0, 255), 1);

}

// Post-processing: decode, filter by score, scale back to the original image, NMS, and draw

void post_process(std::vector<cv::Mat>& images, std::vector<cv::Mat>& results, float* output_data_host)

{

for (int n = 0; n < images.size(); ++n)

{

std::vector<cv::Rect> boxes;

std::vector<float> scores;

std::vector<int> class_ids;

for (int i = 0; i < output_numbox; ++i)

{

float* ptr = output_data_host + i * output_numprob + n * output_numbox * output_numprob;

float objness = ptr[4];

if (objness < confidence_threshold)

continue;

float* classes_scores = 5 + ptr;

int class_id = std::max_element(classes_scores, classes_scores + num_classes) - classes_scores;

float max_class_score = classes_scores[class_id];

float score = max_class_score * objness;

if (score < score_threshold)

continue;

float x = ptr[0];

float y = ptr[1];

float w = ptr[2];

float h = ptr[3];

int left = int(x - 0.5 * w);

int top = int(y - 0.5 * h);

int width = int(w);

int height = int(h);

cv::Rect box = cv::Rect(left, top, width, height);

scale_boxes(box, images[n].size());

boxes.push_back(box);

scores.push_back(score);

class_ids.push_back(class_id);

}

//std::cout << boxes.size() << std::endl;

std::vector<int> indices;

nms(boxes, scores, score_threshold, nms_threshold, indices);

for (int i = 0; i < indices.size(); i++)

{

int idx = indices[i];

cv::Rect box = boxes[idx];

std::string label = class_names[class_ids[idx]] + ":" + cv::format("%.2f", scores[idx]); // class_ids[idx] is the predicted class index

draw_result(results[n], label, box);

}

}

}

int main(int argc, char* argv[])

{

std::vector<cv::Mat> images;

cv::glob("./images", filenames);

for (const auto& filename : filenames)

{

cv::Mat image = cv::imread(filename);

images.push_back(image);

}

std::vector<cv::Mat> results = images;

float* inputs = nullptr;

float* outputs = nullptr;

input_numel = images.size() * 3 * input_width * input_height;

output_numel = images.size() * output_numbox * output_numprob;

cudaMallocHost(&inputs, sizeof(float) * input_numel);

cudaMallocHost(&outputs, sizeof(float) * output_numel);

pre_process(images, inputs);

std::string model = "yolov5n.engine";

process(model, inputs, outputs);

post_process(images, results, outputs);

for (size_t i = 0; i < results.size(); i++)

{

cv::imwrite("result" + std::to_string(i) + ".jpg", results[i]);

}

cudaFreeHost(inputs);

cudaFreeHost(outputs);

return 0;

}
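The mapping that LetterBox() applies and scale_boxes() undoes can be checked without OpenCV or a GPU. Below is a minimal standard-C++ sketch, assuming a 640x640 network input; LetterboxParams, BoxF, letterbox_params, to_network and to_original are hypothetical names introduced only for this illustration. It keeps the padding as a float, so when the padding is odd it can differ by half a pixel from the integer top/left offsets that LetterBox() rounds to.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical types for this sketch only; they are not part of the program above.
struct LetterboxParams
{
    float gain;   // uniform scale applied to the original image
    float pad_x;  // horizontal padding added on the left (and on the right)
    float pad_y;  // vertical padding added on the top (and on the bottom)
};

struct BoxF
{
    float x, y, w, h;  // top-left corner, width, height
};

// Scale to fit inside dst_w x dst_h without distortion, then center the result.
LetterboxParams letterbox_params(int src_w, int src_h, int dst_w = 640, int dst_h = 640)
{
    float gain = std::min(static_cast<float>(dst_w) / src_w, static_cast<float>(dst_h) / src_h);
    float pad_x = (dst_w - std::round(src_w * gain)) / 2.0f;
    float pad_y = (dst_h - std::round(src_h * gain)) / 2.0f;
    return { gain, pad_x, pad_y };
}

// Original image coordinates -> letterboxed network input coordinates.
BoxF to_network(const BoxF& b, const LetterboxParams& p)
{
    return { b.x * p.gain + p.pad_x, b.y * p.gain + p.pad_y, b.w * p.gain, b.h * p.gain };
}

// Letterboxed network input coordinates -> original image coordinates (the scale_boxes() step).
BoxF to_original(const BoxF& b, const LetterboxParams& p)
{
    return { (b.x - p.pad_x) / p.gain, (b.y - p.pad_y) / p.gain, b.w / p.gain, b.h / p.gain };
}
```

For a 1280x720 frame the gain is 0.5 and 140 rows of padding are added at the top and bottom, so a box (100, 100, 200, 100) in the original becomes (50, 190, 100, 50) in network coordinates and maps back exactly. A 480x640 portrait image keeps gain 1 and gets 80 columns of padding on each side instead.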

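The nms() function in the program is class-agnostic: a high-scoring box of one class can suppress an overlapping box of a different class. YOLOv5's own Python non_max_suppression defaults to per-class suppression (agnostic=False). A minimal standard-C++ sketch of the per-class variant follows; Det, iou and nms_per_class are hypothetical names, and the plain Det struct stands in for the cv::Rect plus the parallel scores/class_ids vectors that post_process() builds.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical detection record for this sketch: a box plus the score and class
// that post_process() keeps in separate vectors.
struct Det
{
    float x, y, w, h;  // top-left corner, width, height
    float score;
    int class_id;
};

// Intersection over union of two axis-aligned boxes.
float iou(const Det& a, const Det& b)
{
    float x1 = std::max(a.x, b.x);
    float y1 = std::max(a.y, b.y);
    float x2 = std::min(a.x + a.w, b.x + b.w);
    float y2 = std::min(a.y + a.h, b.y + b.h);
    float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS in which a kept box only suppresses lower-scoring boxes of the SAME class.
// Returns indices into dets, highest score first.
std::vector<std::size_t> nms_per_class(const std::vector<Det>& dets, float score_threshold, float iou_threshold)
{
    std::vector<std::size_t> order;
    for (std::size_t i = 0; i < dets.size(); ++i)
        if (dets[i].score > score_threshold) order.push_back(i);
    std::sort(order.begin(), order.end(),
              [&dets](std::size_t a, std::size_t b) { return dets[a].score > dets[b].score; });

    std::vector<bool> suppressed(order.size(), false);
    std::vector<std::size_t> keep;
    for (std::size_t i = 0; i < order.size(); ++i)
    {
        if (suppressed[i]) continue;
        keep.push_back(order[i]);
        const Det& best = dets[order[i]];
        for (std::size_t j = i + 1; j < order.size(); ++j)
        {
            const Det& other = dets[order[j]];
            if (!suppressed[j] && other.class_id == best.class_id && iou(best, other) >= iou_threshold)
                suppressed[j] = true;
        }
    }
    return keep;
}
```

With two overlapping class-0 boxes (IoU about 0.68) and an overlapping class-1 box, the class-0 duplicate is suppressed while the class-1 box survives; the class-agnostic nms() would also drop the class-1 box, since its IoU with the top box is about 0.82. Using this variant in post_process() would mean passing class_ids into the NMS step alongside boxes and scores.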