Contents

- Actor-Critic methods

- The QAC algorithm

- The Advantage Actor-Critic algorithm

- Baseline invariance

- Off-policy Actor-Critic

- Importance sampling

- Deterministic Policy Gradient (DPG)

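Notes in this series:

[Mathematical Foundations of Reinforcement Learning] Course Notes 1 (basic concepts, the Bellman equation)

[Mathematical Foundations of Reinforcement Learning] Course Notes 2 (the Bellman optimality equation, value iteration and policy iteration)

[Mathematical Foundations of Reinforcement Learning] Course Notes 3 (Monte Carlo methods)

[Mathematical Foundations of Reinforcement Learning] Course Notes 4 (stochastic approximation and stochastic gradient descent, temporal difference methods)

[Mathematical Foundations of Reinforcement Learning] Course Notes 5 (value function approximation, policy gradient methods)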

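Actor-Critic methods

Actor-Critic methods belong to the policy gradient (PG) family: they combine value function approximation with the policy gradient method. Concretely, the policy gradient iteration introduced in the previous note is: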

$$
\begin{aligned}
\theta_{t+1} &= \theta_t + \alpha \nabla_{\theta} J(\theta_t)\\
&= \theta_t + \alpha E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t)\, q_{\pi}(S,A)]\\
&\overset{\text{stochastic gradient}}{=} \theta_t + \alpha \nabla_{\theta} \ln \pi(a_t|s_t,\theta_t)\, q_t(s_t,a_t)
\end{aligned}
$$

Here, updating the policy $\pi$ is the policy-based part (the Actor). The update needs the action value $q_t(s_t,a_t)$, and estimating it is the value-based part (the Critic). Earlier notes introduced two ways to estimate $q_t(s_t,a_t)$:

- Monte Carlo: generate a complete episode and estimate $q_t(s_t,a_t)$ by the average of the returns obtained from $(s_t, a_t)$ in that episode. This gives the REINFORCE algorithm from the previous note.

- Temporal difference (TD): every step produces one sample, which is used immediately to update the corresponding action value. After enough steps the estimate of $q_t(s_t,a_t)$ becomes reasonably accurate. This gives the Actor-Critic algorithms of this note.

The QAC algorithm

QAC is one of the simplest Actor-Critic methods. It updates the action value with Sarsa, a temporal difference algorithm. The value update

$$
w_{t+1} = w_t + \alpha_t [r_{t+1} + \gamma q(s_{t+1},a_{t+1},w_t) - q(s_t,a_t,w_t)] \nabla_w q(s_t,a_t,w_t)
$$

is Sarsa in its function-approximation form. For details see [Mathematical Foundations of Reinforcement Learning] Course Notes 5 (value function approximation, policy gradient methods).
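The two QAC updates can be sketched in a few lines. This is a minimal illustration, not the course's reference implementation: it assumes a tabular softmax policy with logits `theta[s, a]` and one-hot features, so that `q(s, a, w) = w[s, a]` and $\nabla_w q$ is a one-hot vector. The function name `qac_step` and the step sizes are hypothetical.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()

def qac_step(theta, w, s, a, r, s_next, a_next,
             alpha_theta=0.1, alpha_w=0.1, gamma=0.9):
    """One QAC update on the sample (s, a, r, s_next, a_next).

    Assumed parameterization (illustrative only):
      pi(.|s) = softmax(theta[s]),  q(s, a, w) = w[s, a].
    """
    # Actor: theta <- theta + alpha * grad ln pi(a|s) * q(s, a, w)
    pi_s = softmax(theta[s])
    grad_log_pi = -pi_s            # new array: -pi(.|s)
    grad_log_pi[a] += 1.0          # d ln pi(a|s) / d theta[s, :] = e_a - pi(.|s)
    new_theta = theta.copy()
    new_theta[s] += alpha_theta * grad_log_pi * w[s, a]

    # Critic (Sarsa): w <- w + alpha * TD error * grad_w q(s, a, w)
    td_error = r + gamma * w[s_next, a_next] - w[s, a]
    new_w = w.copy()
    new_w[s, a] += alpha_w * td_error
    return new_theta, new_w

theta0 = np.zeros((2, 2))
w0 = np.array([[0.0, 1.0], [0.0, 0.0]])
theta1, w1 = qac_step(theta0, w0, s=0, a=1, r=1.0, s_next=1, a_next=0)
```

Both updates read the old `w`, so the order in which the actor and the critic are written does not matter in this sketch.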

The Advantage Actor-Critic algorithm

Baseline invariance

We first introduce baseline invariance. The idea is to subtract a baseline $b(s)$ from the action value in such a way that the expectation of $\nabla_{\theta} \ln \pi(A|S,\theta_t)\, q_{\pi}(S,A)$ is unchanged while its variance can be reduced. To see why this matters, recall the policy gradient update:

$$
\begin{aligned}
\theta_{t+1} &= \theta_t + \alpha \nabla_{\theta} J(\theta_t)\\
&= \theta_t + \alpha E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t)\, q_{\pi}(S,A)]\\
&\overset{\text{stochastic gradient}}{=} \theta_t + \alpha \nabla_{\theta} \ln \pi(a_t|s_t,\theta_t)\, q_t(s_t,a_t)
\end{aligned}
$$

我们用通过多次的单个样本迭代来拟合随机变量的期望,但如果

∇

θ

ln

π

(

A

∣

S

,

θ

t

)

q

π

(

S

,

A

)

\nabla_{\theta} \ln \pi(A|S,\theta_t) q_{\pi}(S,A)

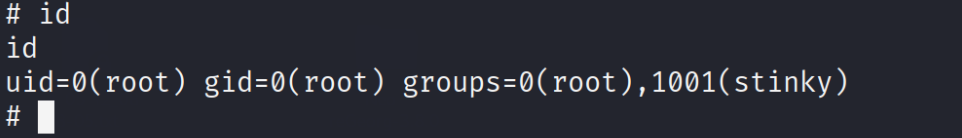

∇θlnπ(A∣S,θt)qπ(S,A) 的方差本身是比较大的(由于真实分布未知,不排除这种可能),那么在样本量不足够多时,对期望的估计有更大的可能是不准的。eg:见下图,当从方差大的分布中采样时,其离我们希望拟合的期望值 0 往往距离较远;而从小方差分布中采样,则基本都离期望值 0 很近了。

1. The expectation is unchanged

We want to show that

$$
E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t)\, q_{\pi}(S,A)] = E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t) (q_{\pi}(S,A) - b(S))]
$$

It suffices to show that

$$
E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t)\, b(S)] = 0
$$

The proof is straightforward:

$$
\begin{aligned}
E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t)\, b(S)] &= \sum_s \eta(s) \sum_a \pi(a|s,\theta_t) \nabla_{\theta} \ln \pi(a|s,\theta_t)\, b(s)\\
&= \sum_s \eta(s) \sum_a \nabla_{\theta}\pi(a|s,\theta_t)\, b(s) \quad \left(\text{since } \nabla_{\theta} \ln \pi(a|s,\theta_t) = \frac{\nabla_{\theta}\pi(a|s,\theta_t)}{\pi(a|s,\theta_t)}\right)\\
&= \sum_s \eta(s)\, b(s) \sum_a \nabla_{\theta}\pi(a|s,\theta_t)\\
&= \sum_s \eta(s)\, b(s) \nabla_{\theta} \sum_a \pi(a|s,\theta_t)\\
&= \sum_s \eta(s)\, b(s) \nabla_{\theta} 1 = 0
\end{aligned}
$$
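The identity $\sum_a \pi(a|s,\theta) \nabla_{\theta} \ln \pi(a|s,\theta)\, b(s) = 0$ can be checked numerically for a single state. The sketch below assumes a softmax policy over three actions; the function name and the chosen logits are illustrative, not from the course.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()

def expected_score_times_baseline(logits, b):
    """Return sum_a pi(a|s) * grad_theta ln pi(a|s) * b for a softmax policy."""
    pi = softmax(logits)
    n = len(pi)
    # Row a of `scores` is grad_theta ln pi(a|s) = e_a - pi
    scores = np.eye(n) - pi
    return (pi[:, None] * scores * b).sum(axis=0)

logits = np.array([0.3, -1.2, 2.0])
result = expected_score_times_baseline(logits, b=5.0)
print(result)  # a vector that is zero up to floating-point error
```

The result does not depend on the value of `b`, because the probability-weighted scores sum to the gradient of the constant 1.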

2. The variance can be reduced

Let $X(S,A) = \nabla_{\theta} \ln \pi(A|S,\theta_t) (q_{\pi}(S,A) - b(S))$. Then

$$
\begin{aligned}
\text{tr}[\text{var}(X)] &= \text{tr}[E[(X-E(X))(X-E(X))^T]]\\
&= \text{tr}[E[XX^T - E(X)X^T - XE(X)^T + E(X)E(X)^T]]\\
&= E[X^TX - X^TE(X) - E(X)^TX + E(X)^TE(X)] \quad (\text{since } \text{tr}(AB) = \text{tr}(BA))\\
&= E[X^TX] - E(X)^TE(X) - E(X)^TE(X) + E(X)^TE(X)\\
&= E[X^TX] - E(X)^TE(X)
\end{aligned}
$$

Since $E(X)$ does not depend on $b(S)$ (as shown in step 1), minimizing $\text{tr}[\text{var}(X)]$ amounts to minimizing $E[X^TX]$. Setting its gradient with respect to $b$ to zero:

$$
\begin{aligned}
\nabla_{b} E[X^TX] &= \nabla_{b} E[(\nabla_{\theta} \ln \pi)^T (\nabla_{\theta} \ln \pi) (q_{\pi}(S,A) - b(S))^2]\\
&= \nabla_{b} E[\|\nabla_{\theta} \ln \pi\|^2 (q_{\pi}(S,A) - b(S))^2]\\
&= \nabla_{b} \sum_s \eta(s) E_{A \sim \pi}[\|\nabla_{\theta} \ln \pi\|^2 (q_{\pi}(s,A) - b(s))^2]\\
&= -2 \sum_s \eta(s) E_{A \sim \pi}[\|\nabla_{\theta} \ln \pi\|^2 (q_{\pi}(s,A) - b(s))]\\
&= 0
\end{aligned}
$$

Because each $b(s)$ can be chosen independently, the condition must hold for every state:

$$
\Rightarrow \qquad E_{A \sim \pi}[\|\nabla_{\theta} \ln \pi\|^2 (q_{\pi}(s,A) - b(s))] = 0, \quad \forall s
$$

Therefore the optimal baseline is

$$
b^*(s) = \frac{E_{A \sim \pi}[\|\nabla_{\theta} \ln \pi\|^2\, q_{\pi}(s,A)]}{E_{A \sim \pi}[\|\nabla_{\theta} \ln \pi\|^2]}, \quad \forall s
$$

This expression is fairly complex, however. In practice the simpler (suboptimal) baseline $b(s) = E_{A \sim \pi}[q_{\pi}(s,A)] = v_{\pi}(s), \quad \forall s$ is commonly used and also works well.
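To make the effect of the baseline concrete, the sketch below computes $\text{tr}[\text{var}(X)]$ exactly (by enumerating actions) for one state under three choices: no baseline, $b = v_{\pi}(s)$, and the optimal $b^*(s)$. The softmax policy, the action values `q` and the function names are assumptions made for illustration.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()

def trace_variance(pi, q, b):
    """tr[var(X)] for X = grad ln pi(A|s) * (q(s, A) - b), with A ~ pi."""
    scores = np.eye(len(pi)) - pi            # row a: grad ln pi(a|s) for softmax
    X = scores * (q - b)[:, None]            # row a: value of X when A = a
    second_moment = (pi * (X ** 2).sum(axis=1)).sum()   # E[X^T X]
    mean = (pi[:, None] * X).sum(axis=0)                # E[X]
    return second_moment - mean @ mean

def optimal_baseline(pi, q):
    """b*(s) = E[||grad ln pi||^2 q] / E[||grad ln pi||^2]."""
    sq_norm = ((np.eye(len(pi)) - pi) ** 2).sum(axis=1)
    return (pi * sq_norm * q).sum() / (pi * sq_norm).sum()

pi = softmax(np.array([0.3, -1.2, 2.0]))
q = np.array([1.0, 4.0, 2.5])               # hypothetical action values
v = pi @ q                                  # state value v(s) = E[q(s, A)]
b_star = optimal_baseline(pi, q)

var_none = trace_variance(pi, q, 0.0)
var_v = trace_variance(pi, q, v)
var_star = trace_variance(pi, q, b_star)
print(var_none, var_v, var_star)
```

Since $E[X^TX]$ is a convex quadratic in $b$ and $E(X)$ does not depend on $b$, `var_star` is never larger than the other two values.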

Advantage Actor-Critic (A2C) is the algorithm obtained by choosing the baseline $b(s) = v_{\pi}(s)$:

$$
\begin{aligned}
\theta_{t+1} &= \theta_t + \alpha E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t) (q_{\pi}(S,A) - v_{\pi}(S))]\\
&\overset{.}{=} \theta_t + \alpha E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t)\, \delta(S,A)]\\
&\overset{\text{stochastic gradient}}{=} \theta_t + \alpha \nabla_{\theta} \ln \pi(a_t|s_t,\theta_t)\, \delta(s_t,a_t)
\end{aligned}
$$

Here $\delta(S,A) = q_{\pi}(S,A) - v_{\pi}(S)$ is called the advantage function. Since $v_{\pi}(s) = E_{A \sim \pi}[q_{\pi}(s,A)]$, a larger $\delta(S,A)$ means that the value of the current action exceeds the average by more, i.e. the action has a larger advantage. Using $\delta(s_t,a_t)$ instead of $q_{\pi}(s_t,a_t)$ to decide the direction in which $\theta_{t+1}$ moves is also more sensible, because the relative size of action values matters more than their absolute size.

Since

$$
q_{\pi}(s_t, a_t) = E[R_{t+1} + \gamma v_{\pi}(S_{t+1}) \mid S_t = s_t, A_t = a_t]
$$

the advantage can be written as

$$
\delta(s_t,a_t) = q_{\pi}(s_t,a_t) - v_{\pi}(s_t) = E[R_{t+1} + \gamma v_{\pi}(S_{t+1}) - v_{\pi}(S_t) \mid S_t = s_t, A_t = a_t]
$$

so estimating it reduces to estimating the state value $v_{\pi}(s_t)$. Following [Mathematical Foundations of Reinforcement Learning] Course Notes 5 (value function approximation, policy gradient methods), the function-approximation update for the state value is:

$$
w_{t+1} = w_t + \alpha_t (r_{t+1} + \gamma v(s_{t+1},w_t) - v(s_t,w_t)) \nabla_w v(s_t,w_t) = w_t + \alpha_t \delta_t \nabla_w v(s_t,w_t)
$$

where the TD error $\delta_t = r_{t+1} + \gamma v(s_{t+1},w_t) - v(s_t,w_t)$ serves as a one-sample estimate of $\delta(s_t,a_t)$.
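A single A2C step can be sketched as follows. As before, this is only an illustration under stated assumptions: a tabular softmax actor with logits `theta[s, a]` and a tabular critic `w[s]` (so $\nabla_w v$ is one-hot). The name `a2c_step` and the step sizes are hypothetical.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()

def a2c_step(theta, w, s, a, r, s_next,
             alpha_theta=0.1, alpha_w=0.1, gamma=0.9):
    """One A2C update on the sample (s, a, r, s_next).

    Assumed parameterization (illustrative only):
      pi(.|s) = softmax(theta[s]),  v(s, w) = w[s].
    """
    # TD error: one-sample estimate of the advantage q(s, a) - v(s)
    delta = r + gamma * w[s_next] - w[s]

    # Actor: theta <- theta + alpha * delta * grad ln pi(a|s)
    pi_s = softmax(theta[s])
    grad_log_pi = -pi_s
    grad_log_pi[a] += 1.0
    new_theta = theta.copy()
    new_theta[s] += alpha_theta * delta * grad_log_pi

    # Critic: w <- w + alpha * delta * grad_w v(s, w)
    new_w = w.copy()
    new_w[s] += alpha_w * delta
    return new_theta, new_w, delta

theta0 = np.zeros((2, 2))
w0 = np.array([0.0, 1.0])
theta1, w1, delta = a2c_step(theta0, w0, s=0, a=0, r=1.0, s_next=1)
```

Compared with QAC, the critic stores one value per state instead of one per state-action pair, and the same TD error drives both updates.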

Off-policy Actor-Critic

The policy gradient algorithms covered so far (REINFORCE, QAC and A2C) are all on-policy, because the expectation in their gradient,

$$
E_{S \sim \eta, A \sim \pi}[\nabla_{\theta} \ln \pi(A|S,\theta_t) (q_{\pi}(S,A) - b(S))]
$$

is taken over actions drawn from the policy $\pi$ itself ($A \sim \pi$), so the samples must be generated by $\pi$.

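To turn these on-policy algorithms into off-policy ones, we need a technique called importance sampling. In principle it can convert any on-policy reinforcement learning algorithm into an off-policy one, and it is also used in other fields whenever the distribution to be estimated differs from the distribution the data was sampled from.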

Importance sampling

Importance sampling addresses the following problem:

The goal is to estimate $E_{X \sim p_0}[X]$.

However, we only have samples $\{x_1, x_2, \ldots\}$ drawn from a different distribution $p_1$, and we want to use them to estimate $E_{X \sim p_0}[X]$.

Note that

$$
E_{X \sim p_0}[X] = \sum_x p_0(x)\, x = \sum_x p_1(x) \frac{p_0(x)}{p_1(x)}\, x = E_{X \sim p_1}[f(X)], \quad \text{where } f(x) = \frac{p_0(x)}{p_1(x)}\, x
$$

By the law of large numbers (see [Mathematical Foundations of Reinforcement Learning] Course Notes 3 (Monte Carlo methods)), the sample mean

$$
\frac{1}{n} \sum_{i=1}^n f(x_i) = \frac{1}{n} \sum_{i=1}^n \frac{p_0(x_i)}{p_1(x_i)}\, x_i
$$

is an unbiased estimate of $E_{X \sim p_1}[f(X)]$, and hence of $E_{X \sim p_0}[X]$, and it converges to that value as $n$ grows. The ratio $\frac{p_0(x_i)}{p_1(x_i)}$ is called the importance weight. Intuitively, when $p_0(x_i) > p_1(x_i)$, the sample $x_i$ is drawn less often under $p_1$ than it would be under $p_0$, so each time $x_i$ is drawn its weight must be increased to mimic sampling from $p_0$. (In practice, $p_0$ and $p_1$ are often two neural networks: $p_1$ is an already trained network and $p_0$ is the network being trained.)
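A small numerical experiment illustrates the estimator. The two distributions below are assumptions chosen for illustration: $X$ takes the values $+1$ and $-1$, the target $p_0$ is uniform (so $E_{X \sim p_0}[X] = 0$), and the samples come from $p_1 = (0.8, 0.2)$ (so $E_{X \sim p_1}[X] = 0.6$).

```python
import numpy as np

rng = np.random.default_rng(0)

values = np.array([1.0, -1.0])
p0 = np.array([0.5, 0.5])   # target distribution, E_{p0}[X] = 0
p1 = np.array([0.8, 0.2])   # sampling distribution, E_{p1}[X] = 0.6

# Draw samples from p1 only
idx = rng.choice(len(values), size=100_000, p=p1)
x = values[idx]

# Plain average estimates E_{p1}[X], not the quantity we want
naive = x.mean()

# Importance-weighted average estimates E_{p0}[X]
weights = p0[idx] / p1[idx]
is_estimate = (weights * x).mean()

print(naive, is_estimate)
```

The plain average stays near 0.6, while the importance-weighted average moves toward 0. Note that computing the weights requires knowing both $p_0(x_i)$ and $p_1(x_i)$ for every sample.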

We can now describe the off-policy Actor-Critic algorithm. [Mathematical Foundations of Reinforcement Learning] Course Notes 5 (value function approximation, policy gradient methods) gave the objective function of the policy gradient method:

$$
E[v_{\pi}(S)] = \sum_{s} d(s)\, v_{\pi}(s)
$$

where $d(s)$ is the stationary distribution. Let $\beta$ denote the behavior policy and $d_{\beta}$ the stationary distribution under $\beta$. The objective function of the off-policy algorithm then becomes:

$$
J(\theta) = E_{S \sim d_{\beta}}[v_{\pi}(S)] = \sum_{s} d_{\beta}(s)\, v_{\pi}(s)
$$

Its gradient is:

$$
\nabla_{\theta} J(\theta) = E_{S \sim \rho, A \sim \beta}\left[\frac{\pi(A|S,\theta)}{\beta(A|S)} \nabla_{\theta} \ln \pi(A|S,\theta)\, q_{\pi}(S,A)\right]
$$

where $\rho(s) = \sum_{s'} d_{\beta}(s') \sum_{k=0}^{\infty} \gamma^k [P_{\pi}^k]_{s's}$, i.e. the discounted total probability of reaching $s$ from $s'$ over all trajectories, weighted by the probability $d_{\beta}(s')$ of starting in $s'$.

Proof: [Mathematical Foundations of Reinforcement Learning] Course Notes 5 (value function approximation, policy gradient methods) already showed that

$$
\nabla_{\theta} v_{\pi}(s) = \sum_{s'} \sum_{k=0}^{\infty} \gamma^k [P_{\pi}^k]_{ss'} \sum_a \nabla_{\theta} \pi(a|s',\theta)\, q_{\pi}(s',a)
$$

Therefore:

$$
\begin{aligned}
\nabla_{\theta} J(\theta) &= \nabla_{\theta} \sum_{s} d_{\beta}(s)\, v_{\pi}(s) = \sum_{s} d_{\beta}(s) \nabla_{\theta} v_{\pi}(s)\\
&= \sum_{s} d_{\beta}(s) \sum_{s'} \sum_{k=0}^{\infty} \gamma^k [P_{\pi}^k]_{ss'} \sum_a \nabla_{\theta} \pi(a|s',\theta)\, q_{\pi}(s',a)\\
&= \sum_{s'} \left(\sum_{s} d_{\beta}(s) \sum_{k=0}^{\infty} \gamma^k [P_{\pi}^k]_{ss'}\right) \sum_a \nabla_{\theta} \pi(a|s',\theta)\, q_{\pi}(s',a)\\
&= \sum_{s'} \rho(s') \sum_a \nabla_{\theta} \pi(a|s',\theta)\, q_{\pi}(s',a)\\
&= E_{S \sim \rho}\left[\sum_a \nabla_{\theta} \pi(a|S,\theta)\, q_{\pi}(S,a)\right]\\
&= E_{S \sim \rho}\left[\sum_a \beta(a|S) \frac{\pi(a|S,\theta)}{\beta(a|S)} \frac{\nabla_{\theta} \pi(a|S,\theta)}{\pi(a|S,\theta)}\, q_{\pi}(S,a)\right]\\
&= E_{S \sim \rho}\left[\sum_a \beta(a|S) \frac{\pi(a|S,\theta)}{\beta(a|S)} \nabla_{\theta} \ln \pi(a|S,\theta)\, q_{\pi}(S,a)\right]\\
&= E_{S \sim \rho, A \sim \beta}\left[\frac{\pi(A|S,\theta)}{\beta(A|S)} \nabla_{\theta} \ln \pi(A|S,\theta)\, q_{\pi}(S,A)\right]
\end{aligned}
$$

In summary, the iteration of the off-policy Actor-Critic algorithm (with the baseline $v_{\pi}$ applied) is:

$$
\begin{aligned}
\theta_{t+1} &= \theta_t + \alpha E_{S \sim \rho, A \sim \beta}\left[\frac{\pi(A|S,\theta_t)}{\beta(A|S)} \nabla_{\theta} \ln \pi(A|S,\theta_t) (q_{\pi}(S,A) - v_{\pi}(S))\right]\\
&\overset{.}{=} \theta_t + \alpha E_{S \sim \rho, A \sim \beta}\left[\frac{\pi(A|S,\theta_t)}{\beta(A|S)} \nabla_{\theta} \ln \pi(A|S,\theta_t)\, \delta(S,A)\right]\\
&\overset{\text{stochastic gradient}}{=} \theta_t + \alpha \frac{\pi(a_t|s_t,\theta_t)}{\beta(a_t|s_t)} \nabla_{\theta} \ln \pi(a_t|s_t,\theta_t)\, \delta(s_t,a_t)
\end{aligned}
$$

The algorithm alternates a critic update of the state value with the actor update above, both computed from samples generated by the behavior policy $\beta$.
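One step of the off-policy update can be sketched as below. The assumptions are the same as in the on-policy case (tabular softmax actor `theta[s, a]`, tabular critic `w[s]`), plus a known behavior policy given as a table `beta[s, a]`. Applying the importance ratio to the critic update as well as the actor update is a common choice and is assumed here; the notes above only state the actor update explicitly. All names are hypothetical.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()

def off_policy_ac_step(theta, w, beta, s, a, r, s_next,
                       alpha_theta=0.1, alpha_w=0.1, gamma=0.9):
    """One off-policy actor-critic update; (s, a) was generated by beta.

    Assumed parameterization (illustrative only):
      pi(.|s) = softmax(theta[s]),  v(s, w) = w[s],  beta[s, a] = beta(a|s).
    """
    pi_s = softmax(theta[s])
    ratio = pi_s[a] / beta[s, a]               # importance weight pi / beta
    delta = r + gamma * w[s_next] - w[s]       # TD error, advantage estimate

    # Actor: theta <- theta + alpha * ratio * delta * grad ln pi(a|s)
    grad_log_pi = -pi_s
    grad_log_pi[a] += 1.0
    new_theta = theta.copy()
    new_theta[s] += alpha_theta * ratio * delta * grad_log_pi

    # Critic: importance-weighted TD update (an assumption of this sketch)
    new_w = w.copy()
    new_w[s] += alpha_w * ratio * delta
    return new_theta, new_w, ratio

theta0 = np.zeros((2, 2))
w0 = np.zeros(2)
beta = np.array([[0.25, 0.75], [0.5, 0.5]])
theta1, w1, ratio = off_policy_ac_step(theta0, w0, beta, s=0, a=0, r=1.0, s_next=1)
```

When $\beta = \pi$ the ratio equals 1 and the step reduces to the on-policy A2C update.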

Deterministic Policy Gradient (DPG)

[Mathematical Foundations of Reinforcement Learning] Course Notes 5 (value function approximation, policy gradient methods) derived a unified form of the objective gradient for stochastic policies:

$$
\nabla_{\theta} J(\theta) = \sum_s \eta(s) \sum_a \nabla_{\theta} \pi(a|s,\theta)\, q_{\pi}(s,a)
$$

An equivalent form is:

$$
\nabla_{\theta} J(\theta) = E_{S \sim \eta, A \sim \pi(S,\theta)}[\nabla_{\theta} \ln \pi(A|S,\theta)\, q_{\pi}(S,A)]
$$

Similarly, a deterministic policy $\mu$ puts probability 1 on a single action $a = \mu(s)$ in each state. The greedy policy is one example:

$$
\mu(a|s) = \begin{cases} 1, \quad a = \arg\max_{a \in A} q(s,a)\\ 0, \quad a \neq \arg\max_{a \in A} q(s,a) \end{cases}
$$

The corresponding unified form of the objective gradient is:

$$
\begin{aligned}
\nabla_{\theta} J(\theta) &= \sum_s \eta(s) \nabla_{\theta} \mu(s) \left(\nabla_{a} q_{\mu}(s,a)\right)|_{a=\mu(s)}\\
&= E_{S \sim \eta}\left[\nabla_{\theta} \mu(S) \left(\nabla_{a} q_{\mu}(S,a)\right)|_{a=\mu(S)}\right]
\end{aligned}
$$

For the proof, see Mathematical Foundations of Reinforcement Learning (Reference 1).

In summary, the iteration of the Deterministic Actor-Critic algorithm is:

$$
\begin{aligned}
\theta_{t+1} &= \theta_t + \alpha E_{S \sim \eta}\left[\nabla_{\theta} \mu(S) \left(\nabla_{a} q_{\mu}(S,a)\right)|_{a=\mu(S)}\right]\\
&\overset{\text{stochastic gradient}}{=} \theta_t + \alpha \nabla_{\theta} \mu(s_t) \left(\nabla_{a} q_{\mu}(s_t,a)\right)|_{a=\mu(s_t)}
\end{aligned}
$$

The gradient contains no expectation over actions drawn from $\mu$, so the samples do not have to be generated by $\mu$. The Deterministic Actor-Critic algorithm is therefore naturally off-policy.

The update relies on a function approximation of the action value $q(s_t,a_t)$, which is updated by:

$$
w_{t+1} = w_t + \alpha_t [r_{t+1} + \gamma q(s_{t+1},\mu(s_{t+1},\theta_t),w_t) - q(s_t,a_t,w_t)] \nabla_w q(s_t,a_t,w_t)
$$

Note that although the update uses the sample $\{s_t, a_t, r_{t+1}, s_{t+1}, \hat a_{t+1}\}$, the action $\hat a_{t+1}$ is computed by the current target policy $\mu(s_{t+1},\theta_t)$ rather than sampled. The next sample $(s_{t+1}, a_{t+1})$ is the one drawn from the behavior policy.
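The actor and critic updates of the Deterministic Actor-Critic can be sketched for a scalar state and a scalar action. Everything about the parameterization is an assumption made for illustration: a linear policy $\mu(s,\theta) = \theta_0 + \theta_1 s$ and a critic $q(s,a,w) = w^T \psi(s,a)$ with hand-picked features $\psi(s,a) = (1, s, a, sa, a^2)$, chosen so that $\nabla_a q$ has a closed form.

```python
import numpy as np

def features(s, a):
    """Hypothetical critic features psi(s, a)."""
    return np.array([1.0, s, a, s * a, a * a])

def mu(s, theta):
    """Hypothetical linear deterministic policy."""
    return theta[0] + theta[1] * s

def dpg_step(theta, w, s, a, r, s_next,
             alpha_theta=0.01, alpha_w=0.01, gamma=0.9):
    """One deterministic actor-critic update; `a` comes from a behavior policy."""
    # Next action is computed by the target policy, not sampled
    a_next = mu(s_next, theta)
    td_error = r + gamma * (w @ features(s_next, a_next)) - w @ features(s, a)

    # Actor: theta <- theta + alpha * grad_theta mu(s) * grad_a q(s, a)|a=mu(s)
    a_mu = mu(s, theta)
    grad_a_q = w[2] + w[3] * s + 2.0 * w[4] * a_mu
    grad_theta_mu = np.array([1.0, s])
    new_theta = theta + alpha_theta * grad_theta_mu * grad_a_q

    # Critic: w <- w + alpha * TD error * grad_w q(s, a, w)
    new_w = w + alpha_w * td_error * features(s, a)
    return new_theta, new_w, td_error

theta0 = np.zeros(2)
w0 = np.array([0.0, 0.0, 1.0, 0.0, -1.0])   # q(s, a) = a - a^2
theta1, w1, td = dpg_step(theta0, w0, s=1.0, a=0.3, r=0.0, s_next=1.0)
```

The behavior action `a = 0.3` enters only the critic update, while the actor gradient is evaluated at `mu(s, theta)`, which is what makes the method off-policy.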

Reference:

1. Mathematical Foundations of Reinforcement Learning (强化学习的数学原理)