Adding an Attention Module to YOLOv8, Then Testing and Training

Reference: a bilibili video

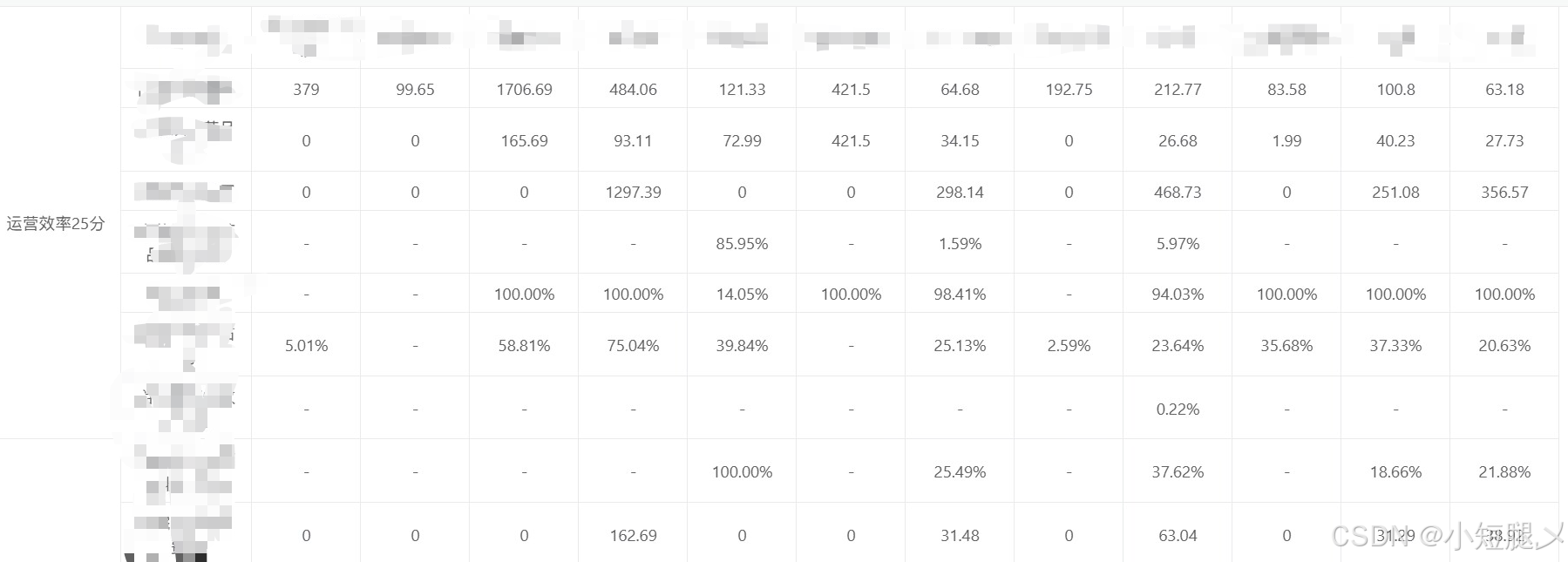

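The YOLOv8 code base already implements attention modules, but none of the YOLOv8 YAML files use them. As the figure shows, these are channel attention, spatial attention, and CBAM, which combines the two. Opening conv.py shows that it holds the definitions of the various convolution blocks, so YOLOv8 treats channel attention, spatial attention, and CBAM as convolution blocks.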
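For orientation, the three modules can be sketched roughly as follows. This is a minimal sketch written from memory of the Ultralytics source, not a copy of it: the exact layer choices (a 1x1 convolution after global average pooling in the channel branch, a single convolution over the channel-wise mean and max maps in the spatial branch) are assumptions to compare against your own conv.py.

```python
import torch
import torch.nn as nn


class ChannelAttention(nn.Module):
    """Reweights each channel using a gate computed from its global average."""

    def __init__(self, channels):
        super().__init__()
        self.pool = nn.AdaptiveAvgPool2d(1)  # (B, C, H, W) -> (B, C, 1, 1)
        self.fc = nn.Conv2d(channels, channels, 1, bias=True)
        self.act = nn.Sigmoid()

    def forward(self, x):
        return x * self.act(self.fc(self.pool(x)))


class SpatialAttention(nn.Module):
    """Reweights each spatial position using channel-wise mean and max maps."""

    def __init__(self, kernel_size=7):
        super().__init__()
        assert kernel_size in (3, 7), "kernel size must be 3 or 7"
        padding = 3 if kernel_size == 7 else 1
        self.cv1 = nn.Conv2d(2, 1, kernel_size, padding=padding, bias=False)
        self.act = nn.Sigmoid()

    def forward(self, x):
        mean_map = torch.mean(x, 1, keepdim=True)
        max_map = torch.max(x, 1, keepdim=True)[0]
        return x * self.act(self.cv1(torch.cat([mean_map, max_map], 1)))


class CBAM(nn.Module):
    """Channel attention followed by spatial attention; the shape is unchanged."""

    def __init__(self, c1, kernel_size=7):
        super().__init__()
        self.channel_attention = ChannelAttention(c1)
        self.spatial_attention = SpatialAttention(kernel_size)

    def forward(self, x):
        return self.spatial_attention(self.channel_attention(x))


if __name__ == "__main__":
    x = torch.randn(1, 128, 8, 8)
    print(CBAM(128, 7)(x).shape)  # same shape as the input
```

Because the output shape equals the input shape, such a block can be dropped between two existing layers without touching their channel settings.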

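2 Registering the custom attention module layer by layer

(1) Add the custom attention module to ultralytics/nn/modules/conv.py:

(2) Add the custom attention module's name to ultralytics/nn/modules/__init__.py:

The module becomes part of the ultralytics.nn.modules package only if its name is added at every level.

(3) Add the custom attention module's name to ultralytics/nn/tasks.py so that it can be called when a task runs.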
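Why the name has to be visible in tasks.py can be illustrated with a small stand-alone sketch. It assumes that parse_model turns the module string from the YAML file into a class by looking the name up in the namespace of tasks.py (via eval or globals(), depending on the version). The classes and the resolve_layer helper below are hypothetical stand-ins, not Ultralytics code.

```python
class Conv:
    """Stand-in for a layer class that tasks.py already imports."""


class CBAM:
    """Stand-in for the attention class added in step (1)."""


def resolve_layer(name, namespace):
    """Return the class that a YAML module string refers to (hypothetical helper)."""
    if name not in namespace:
        raise KeyError(f"{name!r} is not visible in this namespace; import it first")
    return namespace[name]


# Namespace before step (3): CBAM has not been imported yet.
before = {"Conv": Conv}
# Namespace after step (3): the import makes the name resolvable.
after = {"Conv": Conv, "CBAM": CBAM}

if __name__ == "__main__":
    print(resolve_layer("CBAM", after).__name__)
    try:
        resolve_layer("CBAM", before)
    except KeyError as err:
        print("lookup failed:", err)
```

The same reasoning applies one level up: tasks.py can only import the name from ultralytics.nn.modules if __init__.py re-exports it from conv.py.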

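Next, in the parse_model function of ultralytics/nn/tasks.py, check for the custom attention module while the YAML file is being parsed:

CBAM can be viewed as simply reweighting the output of a Conv block, so it does not change the number of input or output channels. It can therefore be handled in the same way as the Conv block; inside the conditional, only the following statements run for it:

c1 and c2 are the input and output channel counts. The statement after the if makes every layer's channel count a multiple of 8, except for the final output whose channel count equals the number of classes. The new args is built from c1, c2, and every element of the original args from index 1 onward. Note that args should contain at least two elements. Strictly speaking, the slice args[1:] does not raise on a one-element list (it returns an empty list), whereas indexing args[1] does raise an out-of-range error. Either way, give the args field in the model YAML at least two elements, for example:

    - [-1, 3, CBAM, [1024, 7]] # 1024 input channels, kernel size = 7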
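The channel handling described above can be sketched in plain Python. The make_divisible and conv_args functions follow the description in the text. The cbam_args branch is a guess at what a CBAM branch could look like, not the author's code: the log later in this post prints CBAM's arguments as [128, 7], that is [c1, kernel_size], so the sketch prepends only c1.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def conv_args(c1, args, width, max_channels, nc):
    """Conv-style handling: scale the output channels, then prepend c1."""
    c2 = args[0]
    if c2 != nc:  # the class-count output is left unscaled
        c2 = make_divisible(min(c2, max_channels) * width, 8)
    return c2, [c1, c2, *args[1:]]


def cbam_args(c1, args):
    """Hypothetical CBAM handling: channels pass through unchanged."""
    return c1, [c1, *args[1:]]


if __name__ == "__main__":
    # l scale from the YAML in this post: width 1.00, max_channels 512
    print(conv_args(3, [64, 3, 2], 1.00, 512, 80))
    print(conv_args(512, [1024, 3, 2], 1.00, 512, 80))
    print(cbam_args(128, [128, 7]))
    print([1024][1:])  # slicing a one-element list gives an empty list
```

Taking c1 from the previous layer rather than from the YAML value matters for the smaller and larger scales, where the width factor changes the real channel count.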

3 Modifying the model YAML file

In ultralytics/cfg/models/v8, copy yolov8-seg.yaml to a new YAML file named yolov8-CBAMseg.yaml (the name that the test and training steps below refer to):

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8-seg instance segmentation model. For Usage examples see https://docs.ultralytics.com/tasks/segment

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n-seg.yaml' will call yolov8-seg.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024]
      s: [0.33, 0.50, 1024]
      m: [0.67, 0.75, 768]
      l: [1.00, 1.00, 512]
      x: [1.00, 1.25, 512]

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
      - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
      - [-1, 3, C2f, [128, True]] #-->2
      - [-1, 1, CBAM, [128, 7]] #CBAM 3
      - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8-->4
      - [-1, 6, C2f, [256, True]]
      - [-1, 1, CBAM, [256, 7]] #CBAM 6
      - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16-->7
      - [-1, 6, C2f, [512, True]]
      - [-1, 1, CBAM, [512, 7]]
      - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32-->10
      - [-1, 3, C2f, [1024, True]]
      - [-1, 1, CBAM, [1024, 7]]
      - [-1, 1, SPPF, [1024, 5]] # 9-->13

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
      - [[-1, 8], 1, Concat, [1]] #[[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 3, C2f, [512]] # 12 -->16

      - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
      - [[-1, 5], 1, Concat, [1]] #[[-1, 4], 1, Concat, [1]] # cat backbone P3
      - [-1, 3, C2f, [256]] # 15 (P3/8-small)--->19

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 16], 1, Concat, [1]] #[[-1, 12], 1, Concat, [1]] # cat head P4
      - [-1, 3, C2f, [512]] # 18 (P4/16-medium)-->22

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 13], 1, Concat, [1]] #[[-1, 9], 1, Concat, [1]] # cat head P5
      - [-1, 3, C2f, [1024]] # 21 (P5/32-large)--->25

      # - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)
      - [[19, 22, 25], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

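Here one CBAM layer (repeats = 1) is added after each C2f block in the backbone, four in total. Because the head concatenates the outputs of specific backbone layers, the original layer indices have to be updated one by one. In the comments, "-->x" gives the new index; replace the old index with it.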
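The renumbering can also be computed instead of done by hand. The sketch below is a hypothetical helper (not part of Ultralytics) that shifts every absolute layer index by the number of layers inserted before it and leaves relative indices such as -1 alone. It assumes the CBAM layers were inserted after original layers 2, 4, 6, and 8, which is what the YAML above does.

```python
def remap_index(old_idx, inserted_after):
    """Map an index in the original YAML to its index after the insertions."""
    if old_idx < 0:  # relative references such as -1 do not change
        return old_idx
    return old_idx + sum(1 for a in inserted_after if a < old_idx)


def remap_from(from_field, inserted_after):
    """Apply remap_index to a 'from' field, which is an int or a list of ints."""
    if isinstance(from_field, int):
        return remap_index(from_field, inserted_after)
    return [remap_index(i, inserted_after) for i in from_field]


# Original layer indices that are each followed by one new CBAM layer.
CBAM_AFTER = [2, 4, 6, 8]

if __name__ == "__main__":
    for old in ([-1, 6], [-1, 4], [-1, 12], [-1, 9], [15, 18, 21]):
        print(old, "->", remap_from(old, CBAM_AFTER))
```

The results agree with the hand-edited values in the head: [-1, 8], [-1, 5], [-1, 16], [-1, 13], and [19, 22, 25].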

4 Testing the modification

Copy the test code from tests/test_python.py into a new file named test_yolov8_CBAM_model.py and keep only the code below:

    # Ultralytics YOLO 🚀, AGPL-3.0 license

    import contextlib
    import urllib
    from copy import copy
    from pathlib import Path

    import cv2
    import numpy as np
    import pytest
    import torch
    import yaml
    from PIL import Image

    from tests import CFG, IS_TMP_WRITEABLE, MODEL, SOURCE, TMP
    from ultralytics import RTDETR, YOLO
    from ultralytics.cfg import MODELS, TASK2DATA, TASKS
    from ultralytics.data.build import load_inference_source
    from ultralytics.utils import (
        ASSETS,
        DEFAULT_CFG,
        DEFAULT_CFG_PATH,
        LOGGER,
        ONLINE,
        ROOT,
        WEIGHTS_DIR,
        WINDOWS,
        checks,
    )
    from ultralytics.utils.downloads import download
    from ultralytics.utils.torch_utils import TORCH_1_9

    # Inserting the letter "l" into the file name selects the l-scale model.
    CFG = 'ultralytics/cfg/models/v8/yolov8l-CBAMseg.yaml'
    SOURCE = ASSETS / "bus.jpg"


    def test_model_forward():
        """Test the forward pass of the YOLO model."""
        model = YOLO(CFG)
        model(source=SOURCE, imgsz=[512, 512], augment=True)  # also test no source and augment

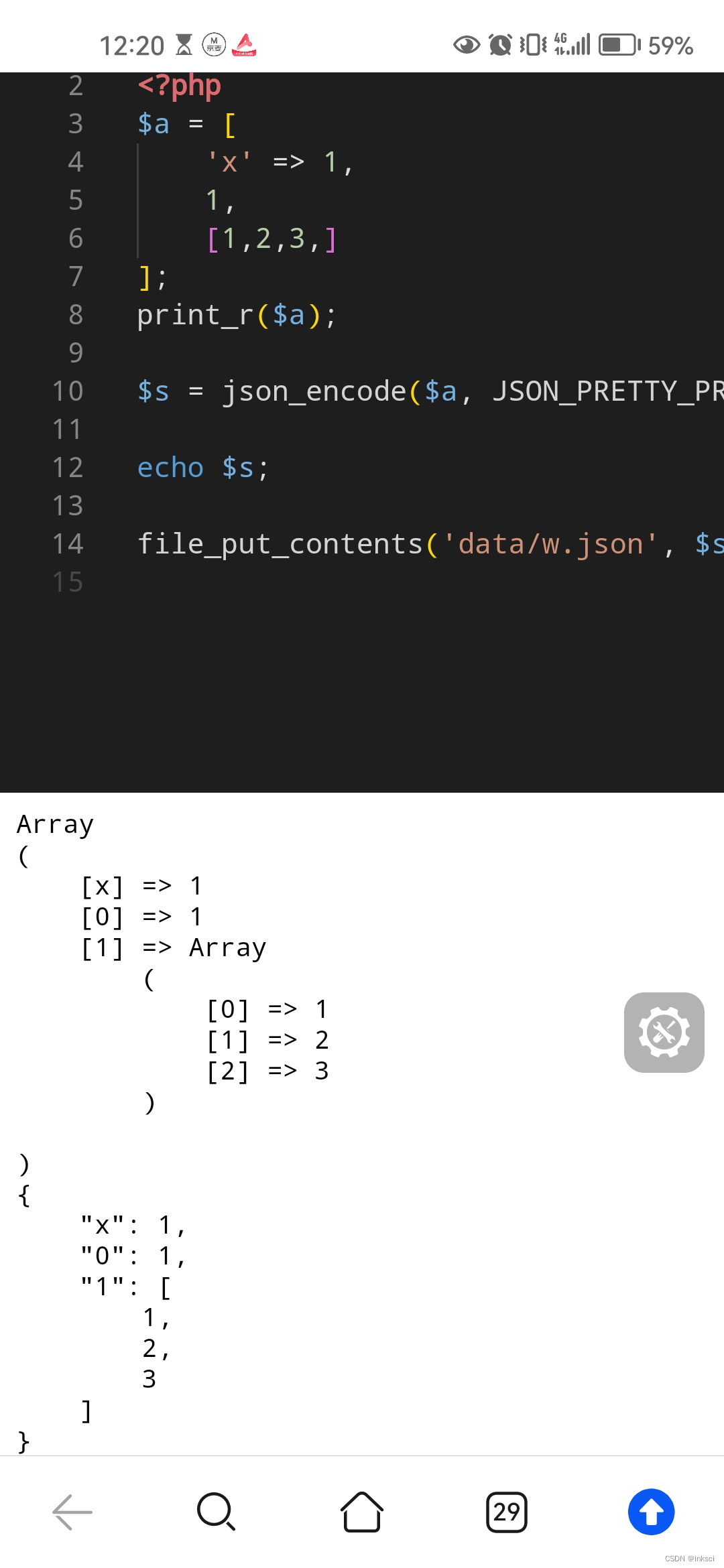

First, add one line to the parse_model function in ultralytics/nn/tasks.py to print the model structure:

    print(f"{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f} {t:<45}{str(args):<30}")

Running test_yolov8_CBAM_model.py gives the following output:

    ============================= test session starts ==============================
    collected 1 item

    test_yolov8_CBAM_model.py::test_model_forward PASSED [100%]
      0                  -1  1      1856 ultralytics.nn.modules.conv.Conv             [3, 64, 3, 2]
      1                  -1  1     73984 ultralytics.nn.modules.conv.Conv             [64, 128, 3, 2]
      2                  -1  3    279808 ultralytics.nn.modules.block.C2f             [128, 128, 3, True]
      3                  -1  1     16610 ultralytics.nn.modules.conv.CBAM             [128, 7]
      4                  -1  1    295424 ultralytics.nn.modules.conv.Conv             [128, 256, 3, 2]
      5                  -1  6   2101248 ultralytics.nn.modules.block.C2f             [256, 256, 6, True]
      6                  -1  1     65890 ultralytics.nn.modules.conv.CBAM             [256, 7]
      7                  -1  1   1180672 ultralytics.nn.modules.conv.Conv             [256, 512, 3, 2]
      8                  -1  6   8396800 ultralytics.nn.modules.block.C2f             [512, 512, 6, True]
      9                  -1  1    262754 ultralytics.nn.modules.conv.CBAM             [512, 7]
     10                  -1  1   2360320 ultralytics.nn.modules.conv.Conv             [512, 512, 3, 2]
     11                  -1  3   4461568 ultralytics.nn.modules.block.C2f             [512, 512, 3, True]
     12                  -1  1    262754 ultralytics.nn.modules.conv.CBAM             [512, 7]
     13                  -1  1    656896 ultralytics.nn.modules.block.SPPF            [512, 512, 5]
     14                  -1  1         0 torch.nn.modules.upsampling.Upsample         [None, 2, 'nearest']
     15             [-1, 8]  1         0 ultralytics.nn.modules.conv.Concat           [1]
     16                  -1  3   4723712 ultralytics.nn.modules.block.C2f             [1024, 512, 3]
     17                  -1  1         0 torch.nn.modules.upsampling.Upsample         [None, 2, 'nearest']
     18             [-1, 5]  1         0 ultralytics.nn.modules.conv.Concat           [1]
     19                  -1  3   1247744 ultralytics.nn.modules.block.C2f             [768, 256, 3]
     20                  -1  1    590336 ultralytics.nn.modules.conv.Conv             [256, 256, 3, 2]
     21            [-1, 16]  1         0 ultralytics.nn.modules.conv.Concat           [1]
     22                  -1  3   4592640 ultralytics.nn.modules.block.C2f             [768, 512, 3]
     23                  -1  1   2360320 ultralytics.nn.modules.conv.Conv             [512, 512, 3, 2]
     24            [-1, 13]  1         0 ultralytics.nn.modules.conv.Concat           [1]
     25                  -1  3   4723712 ultralytics.nn.modules.block.C2f             [1024, 512, 3]
     26        [19, 22, 25]  1   7950688 ultralytics.nn.modules.head.Segment          [80, 32, 256, [256, 512, 512]]

    image 1/1 /XXXXXXXXXXXXXXXXX/ultralyticsv8_2-main/ultralytics/assets/bus.jpg: 640x480 (no detections), 116.5ms
    Speed: 2.7ms preprocess, 116.5ms inference, 0.7ms postprocess per image at shape (1, 3, 640, 480)
    ======================== 1 passed, 4 warnings in 7.04s =========================

    Process finished with exit code 0
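The CBAM parameter counts in the log (16610, 65890, and 262754) can be cross-checked by hand. The sketch below assumes a particular CBAM layout: a channel branch made of one 1x1 convolution with bias (channels x channels weights plus channels biases) and a spatial branch made of one 2-to-1 convolution without bias. The function name is hypothetical.

```python
def cbam_param_count(channels, kernel_size=7):
    """Parameter count of a CBAM block under the layout assumed above."""
    channel_branch = channels * channels + channels  # 1x1 conv weights + bias
    spatial_branch = 2 * kernel_size * kernel_size   # 2 -> 1 conv, no bias
    return channel_branch + spatial_branch


if __name__ == "__main__":
    for c in (128, 256, 512):
        print(c, cbam_param_count(c))
```

For 128, 256, and 512 channels this gives 16610, 65890, and 262754, the same values as layers 3, 6, 9, and 12 in the log, which suggests the assumed layout matches the module that was actually built.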

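At this point, the attention module has been added successfully.

5 Training

As the figure above shows, the x (extra-large) model is used here: simply insert an "x" into yolov8-CBAMseg.yaml to get yolov8x-CBAMseg.yaml. The optimizer is the Lion optimizer that was added to YOLOv8 in the previous blog post. The arguments are adjusted to the x model, and training starts.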
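How a single letter in the file name selects the scale can be sketched as follows. The assumption is that Ultralytics reads the scale letter that follows the version number and then loads the YAML file whose name omits that letter. The regular expression and the split_scale helper are simplified, hypothetical stand-ins rather than the library's own code; the SCALES values are copied from the YAML in this post.

```python
import re
from pathlib import Path

# [depth, width, max_channels] per scale, as listed in the model YAML above.
SCALES = {
    "n": (0.33, 0.25, 1024),
    "s": (0.33, 0.50, 1024),
    "m": (0.67, 0.75, 768),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.25, 512),
}

# Hypothetical pattern: "yolov<digits>", one scale letter, then the rest.
SCALE_RE = re.compile(r"^(yolov\d+)([nslmx])(.*)$")


def split_scale(model_path):
    """Return (YAML file name without the scale letter, scale letter or None)."""
    name = Path(model_path).name
    match = SCALE_RE.match(name)
    if match is None:
        return name, None
    return match.group(1) + match.group(3), match.group(2)


if __name__ == "__main__":
    base, scale = split_scale("yolov8x-CBAMseg.yaml")
    print(base, scale, SCALES[scale])
```

Under this reading, only yolov8-CBAMseg.yaml has to exist on disk; the l and x variants used in this post are the same file loaded with different scaling constants.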
