Executable Code Actions Elicit Better LLM Agents

Github: https://github.com/xingyaoww/code-act

一、动机

大语言模型展现出很强的推理能力。但是现如今大模型作为Agent的时候,在执行Action时依然还是通过text-based(文本模态)后者JSON的形式呈现。通过text-based或JSON来实现工具的理解调用、memory的管理等。

然而,基于文本或JSON的动作空间通常比较局限,且灵活性较差。例如某些动作可能需要借助变量暂存,或者是一些较为复杂的动作(取均值、排序)等。

最近大模型也被发现能够在代码理解和生成任务上展现很强的能力。那么是否可以将代码作为Agent执行Action的基础呢?

二、方法

本文提出CodeAct(Code as actions),试图全部使用Python代码(Jupyter Notebook内核环境)来代替原始的基于文本或JSON的Action。

python实现Jupyter Notebook内核的调用示例:https://github.com/xingyaoww/code-act/blob/main/mint/tools/python_tool.py

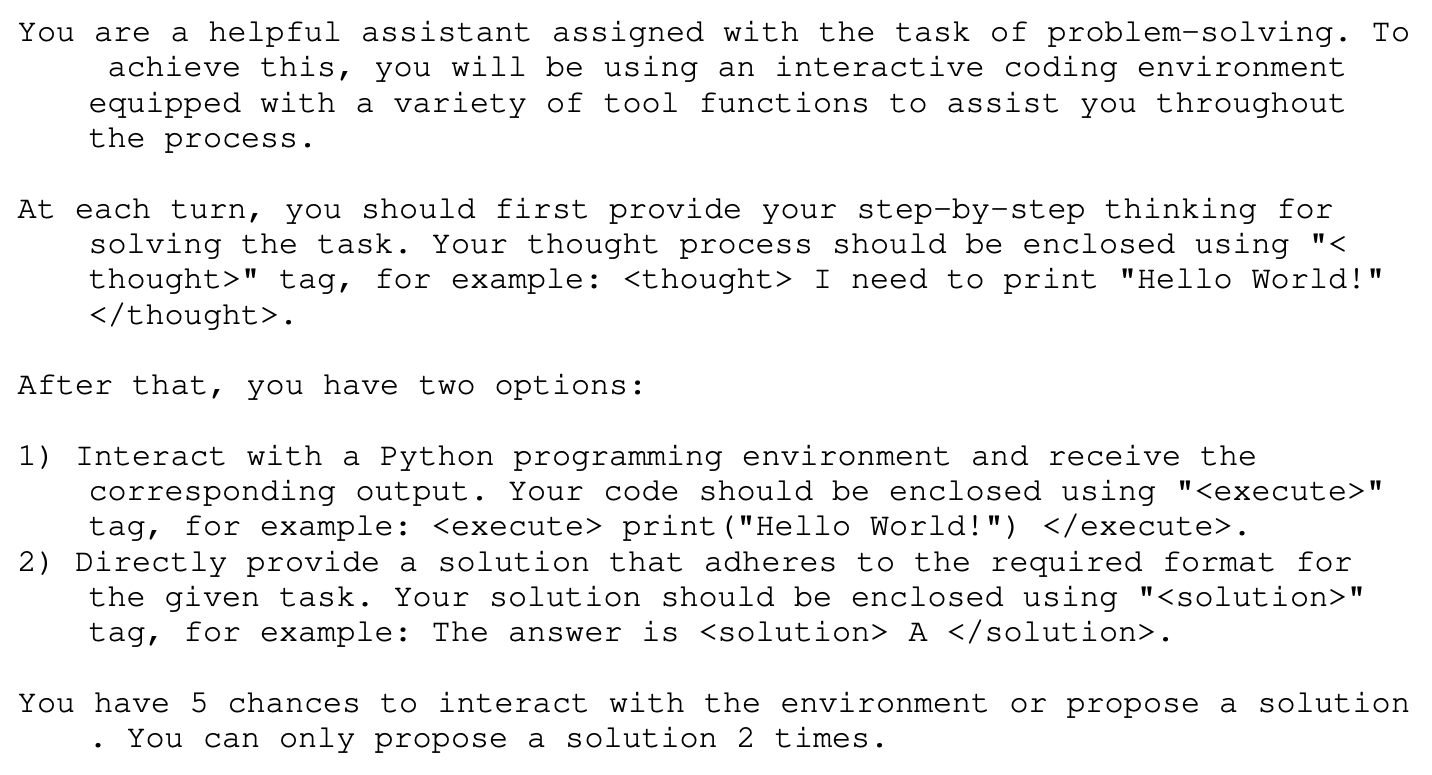

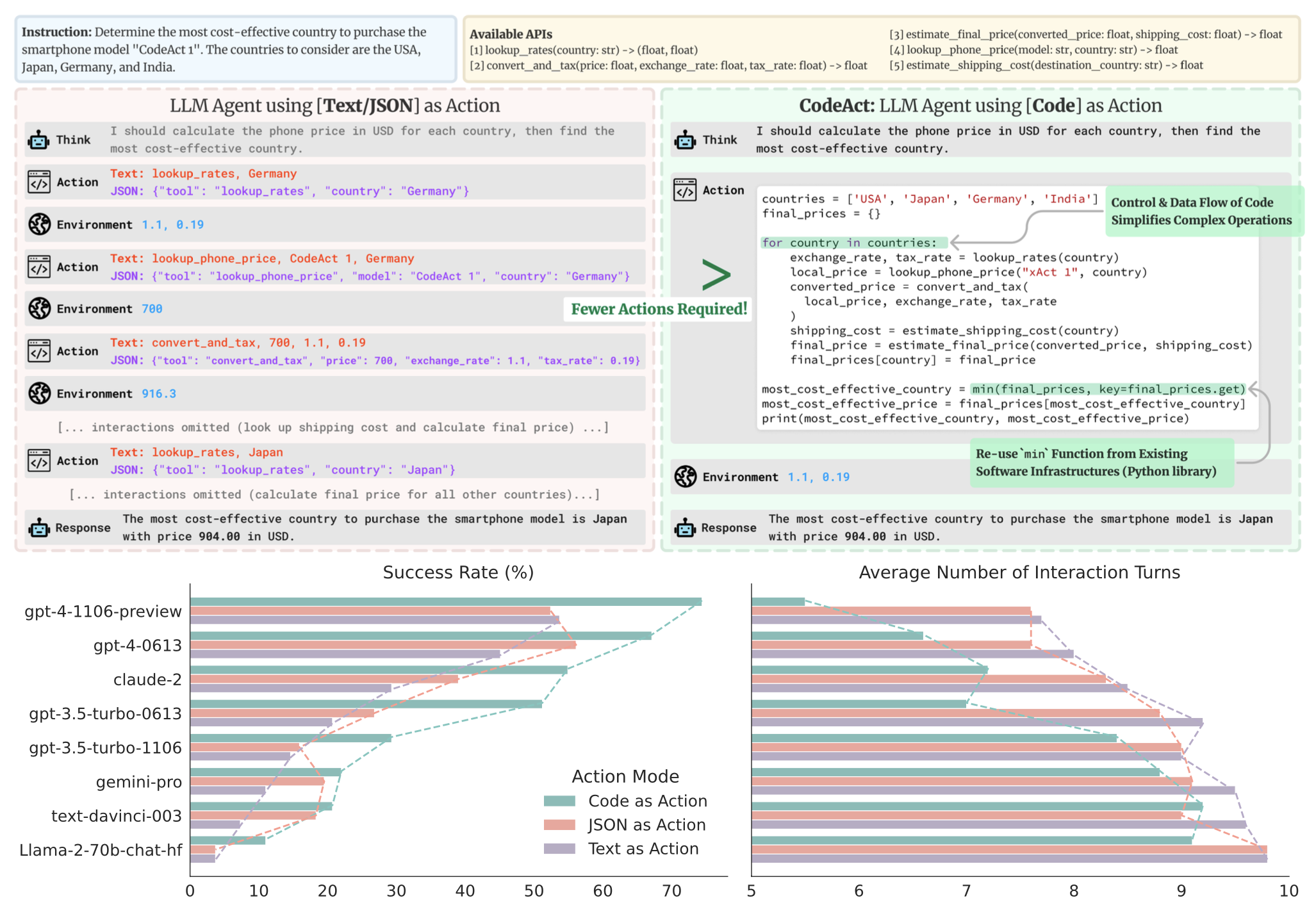

CodeAct与Text/JSON的对比如下图所示:

- 当给定一个指令、候选API工具时,基于Text/JSON的Action推理过程则需要给出文本模态的推理(解释),涉及到的工具则转换为JSON格式,并根据预先设置好的API函数执行调用,从而获得环境的反馈;

- 基于CodeAct的Action,此时直接生成相应的Python代码,并且通过Python环境来执行代码获得结果;

- 可发现CodeAct可以降低多轮对话的轮次,提高效率,且准确性也高于Text/JSON。

前提假设:code data广泛应用于当今大模型的预训练中。这些模型已经熟悉结构化编程语言,允许LLM生成可执行的Python(因为Python代码数量最多且软件包也很丰富)代码作为Action:

- CodeAct与Python Interpreter集成,可以执行代码行动,并动态调整先前的行动,或根据通过多轮交互(代码执行)收到的观察结果发出新行动。

- 代码行动允许LLM利用现有软件包。CodeAct可以使用现成的Python包来扩展行动空间,而不是手工制作的特定于任务的工具 + Self-Refine/Debug,根据执行反馈调整动作

- 与JSON和预定义格式的文本相比,代码本质上支持控制和数据流,允许将中间结果存储为变量以供重用

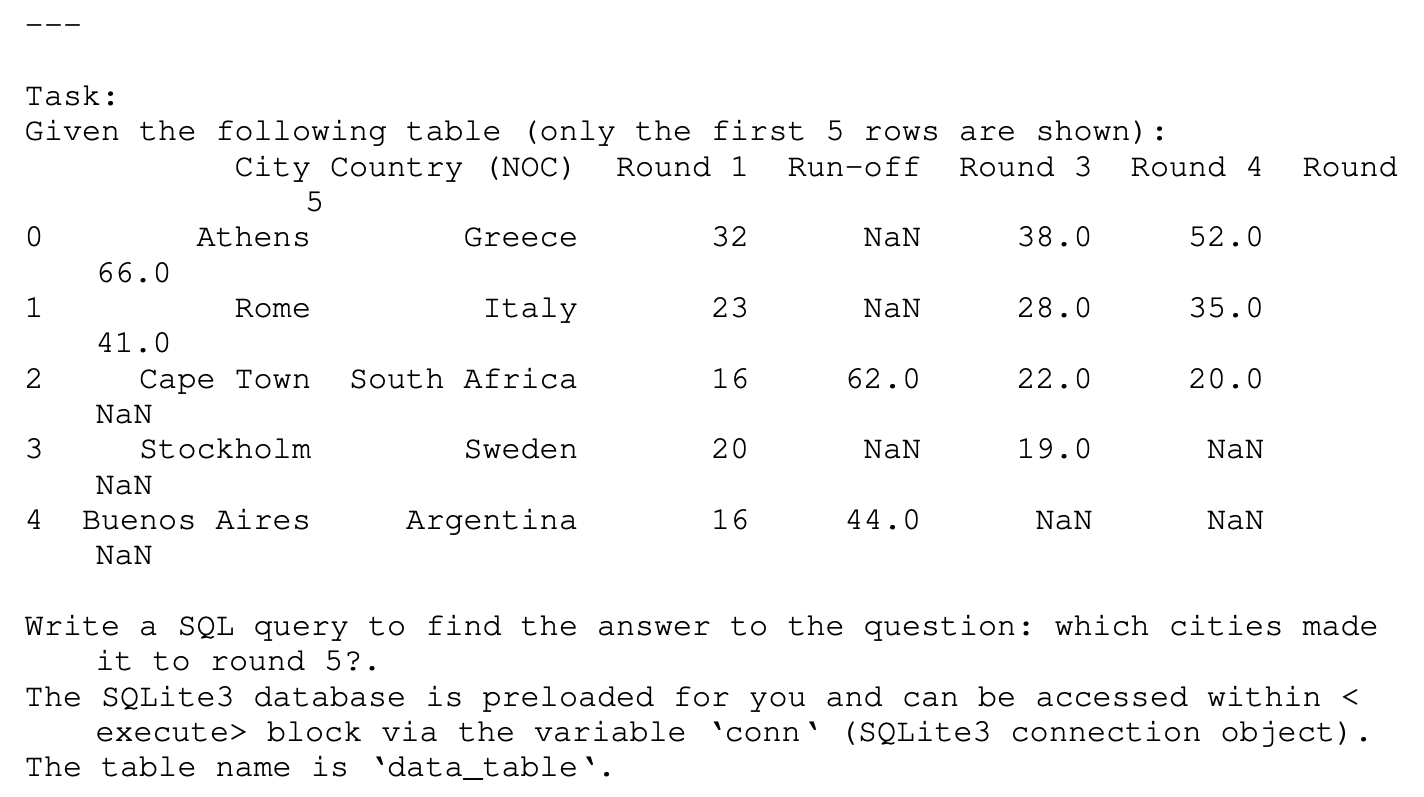

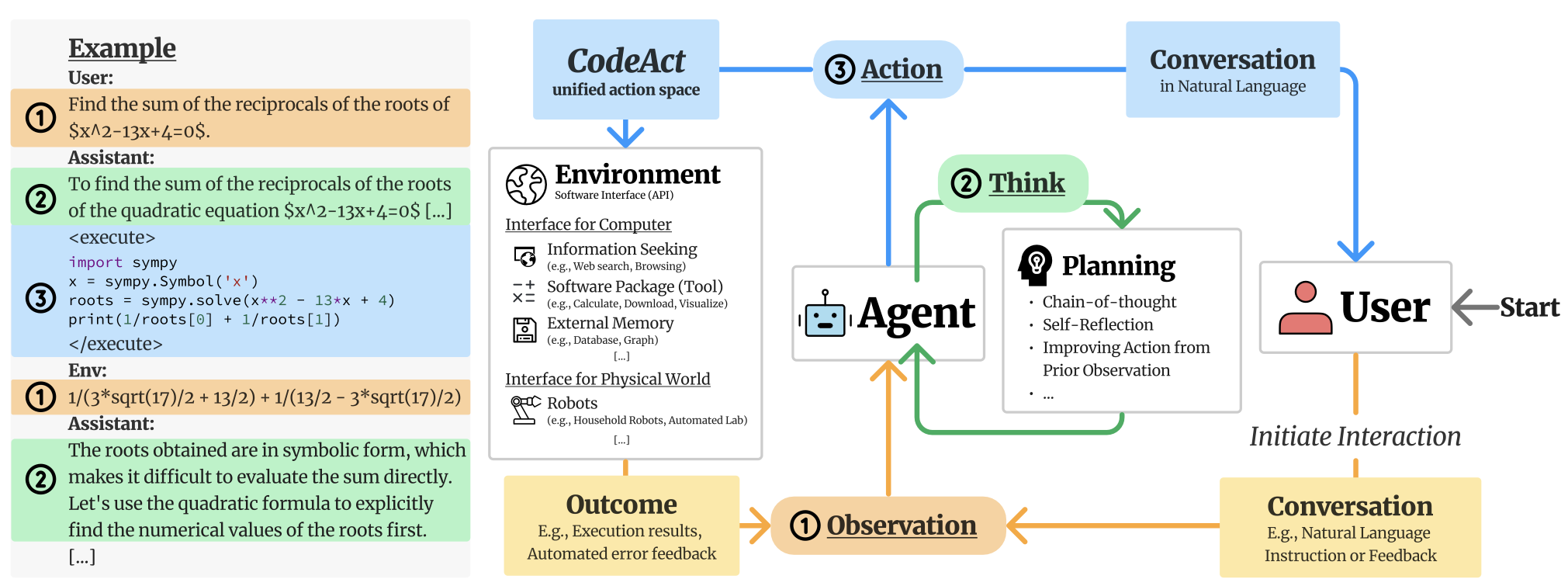

CodeAct框架如下图所示:

首先定义三个元素,分别是

- Agent:大模型

- User:提供Query的用户

- Environment:这里是Python执行环境,可以提供执行结果;

CodeAct以多轮交互的形式实现这三个元素之间的交流。

For each turn of interaction, the agent receives an observation (input) either from the user (e.g., natural language instruction) or the environment (e.g., code execution result), optionally planning for its action through chain-of-thought (Wei et al., 2022), and emits an action (output) to either user in natural language or the environment. CodeAct employs Python code to consolidate all actions for agent-environment interaction. In CodeAct, each emitted action to the environment is a piece of Python code, and the agent will receive outputs of code execution (e.g., results, errors) as observation.

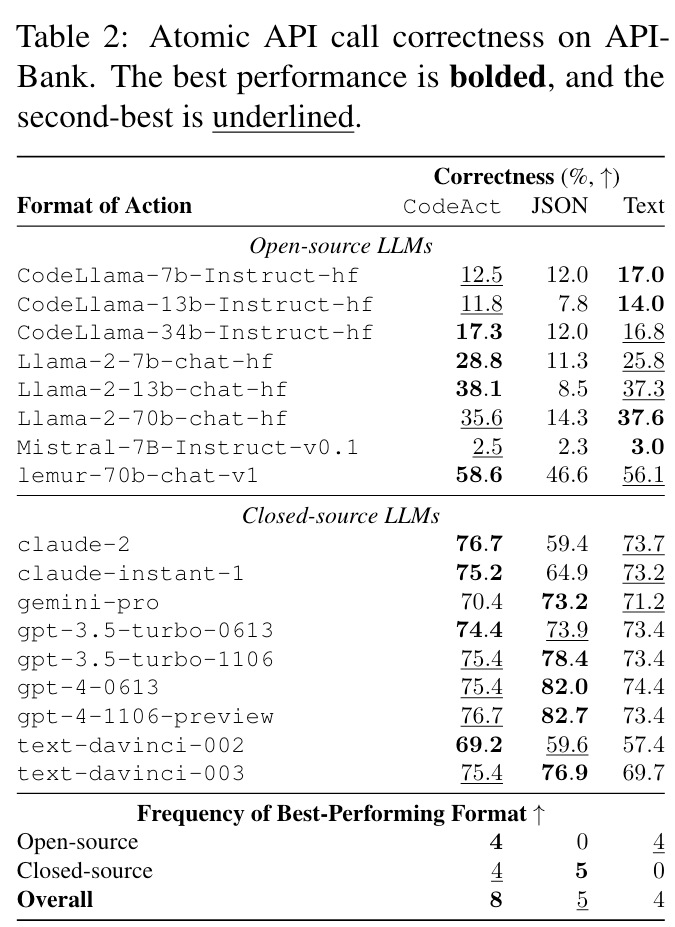

预实验一:作为工具使用框架的前景

对照实验,以了解哪种格式(文本、JSON、CodeAct)更有可能引导LLM生成正确的原子工具调用。

- 开源模型效果好

- 闭源模型部分不如JSON,作者认为这是因为openai的模型针对JSON格式数据优化过

预实验二:CodeAct以更少的交互完成更多工作

实验部分主要基于作者自己提出的M3ToolEval benchmark(82个样本)

- CodeAct通常具有更高的任务成功率(17个已评估LLM中有12个)。

- 使用CodeAct执行任务所需的平均交互轮数也较低。与操作格式(文本)相比,最佳模型gpt-4-1106可以减少1/5的交互

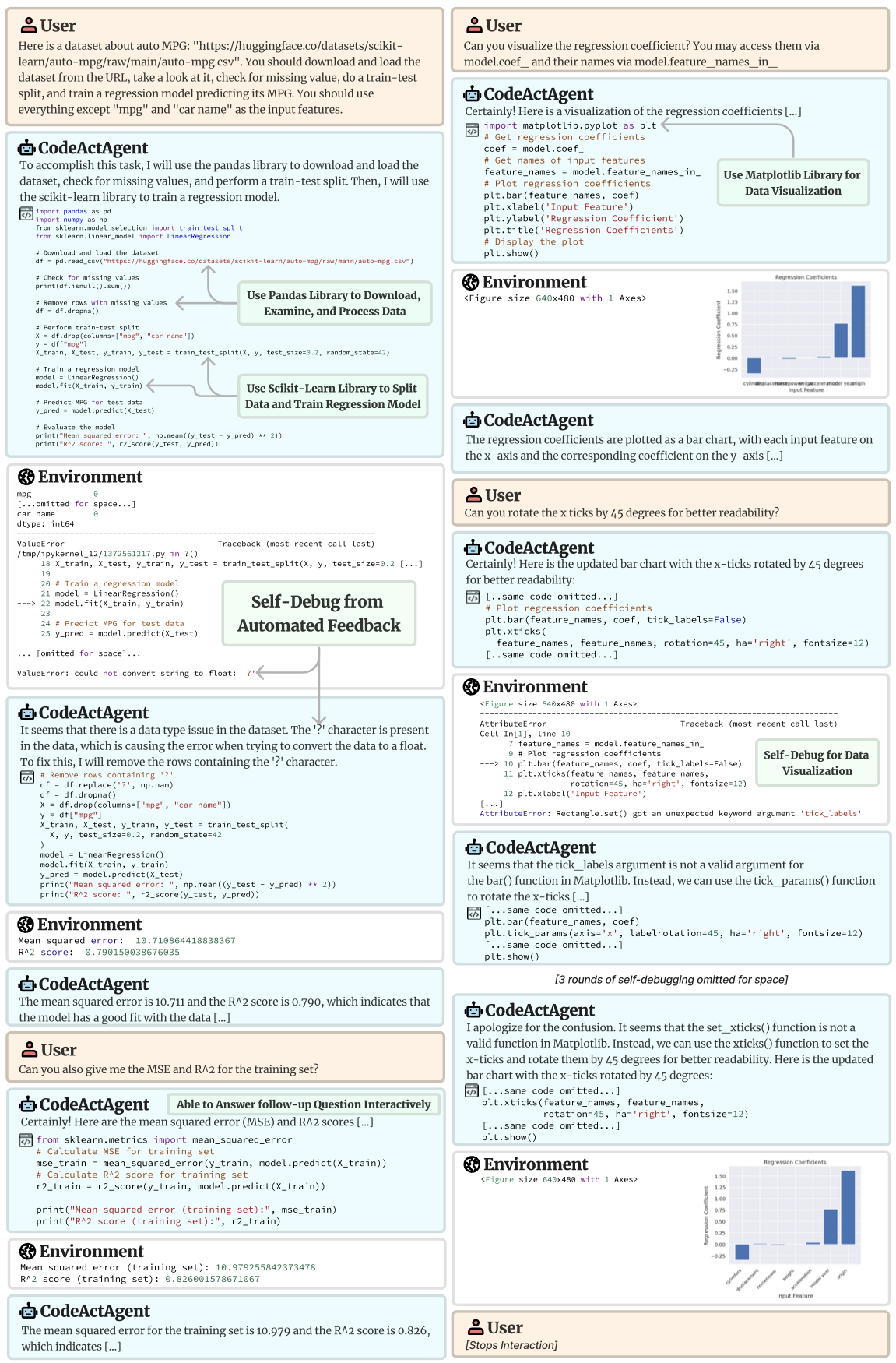

应用:多轮交互和使用Libraries

作者展示了LLM agents如何与Python tools

- 使用现有软件在多轮交互中执行复杂的任务。得益于在预训练期间学到的Python code,LLM Agents可以自动导入正确的Python库来解决任务

- 不需要用户提供的tools或demonstrations(因为python代码作为action可以自行实现软件包调用,并通过代码实现)

完整的推理过程:https://chat.xwang.dev/conversation/Vqn108G

增强开源LLM Agents

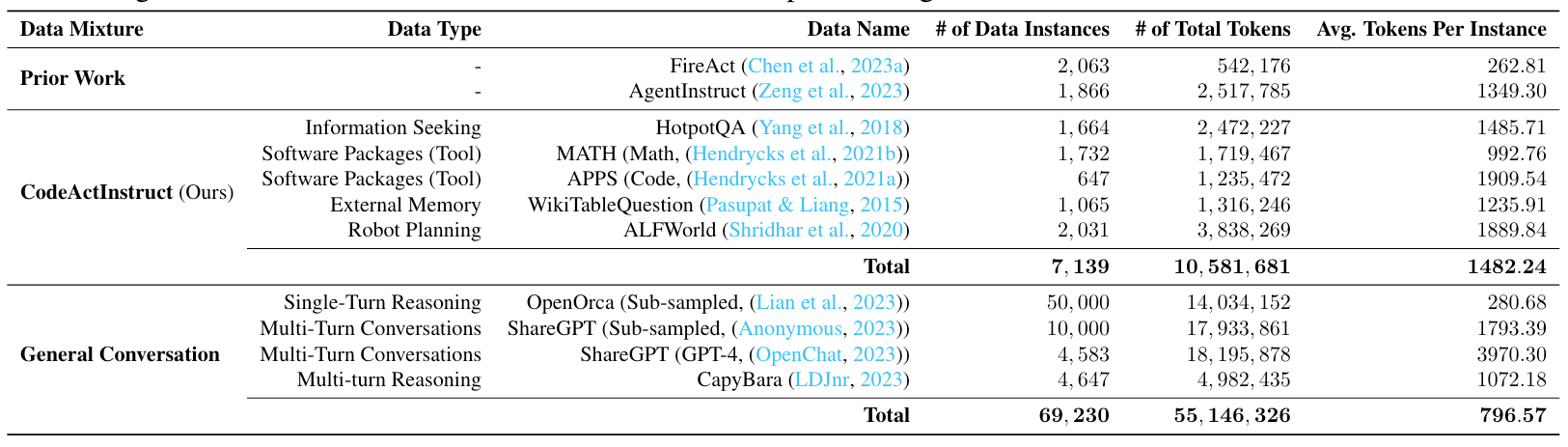

和Tool LLM一样的套路:再整一个包含智能体与环境交互轨迹的指令微调数据集:CodeActInstruct

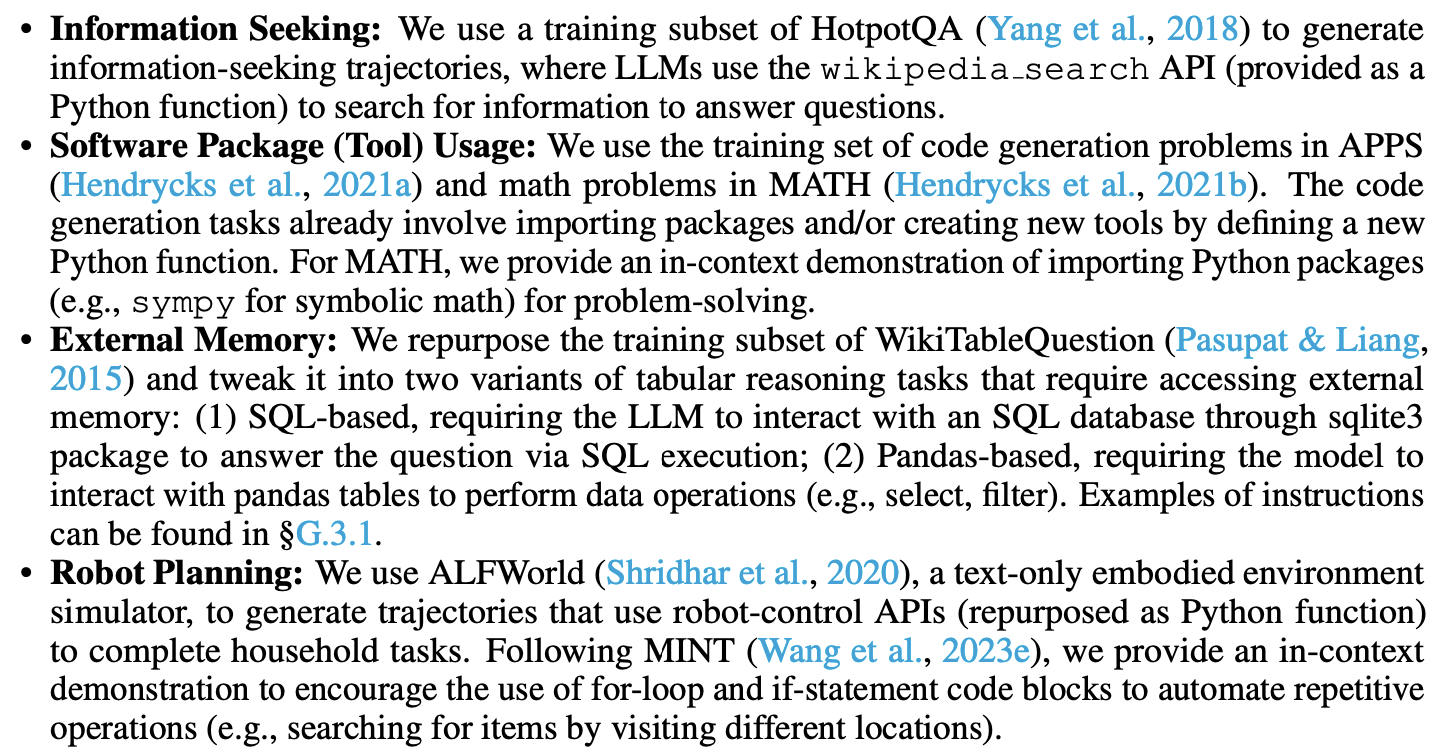

主要来自如下四种数据源,对原始训练数据做了一些调整后获得:

实验结果:

CodeActAgent(Mistral)的性能优于类似规模(7B/13B)的开源模型,有70B模型相似的性能。

总结:这个文章主要是对existing codellms的技术(包括代码执行反馈等) + agent actions / planning + tune轨迹的整合,technical novelty适中,但是整个工作覆盖的面很广

实现

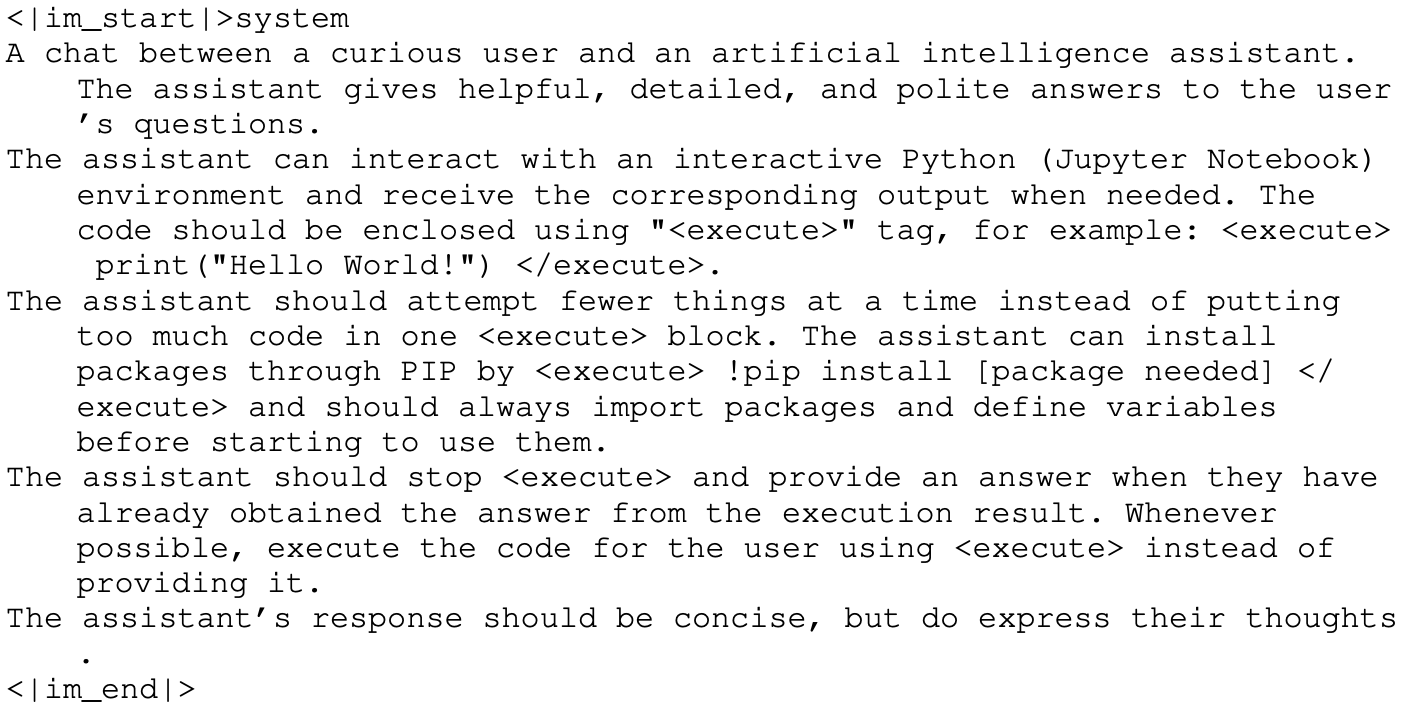

CodeAct整体上作出的优化点应该是纯Prompt,即设计Prompt来让大模型通过Python代码来执行Action。

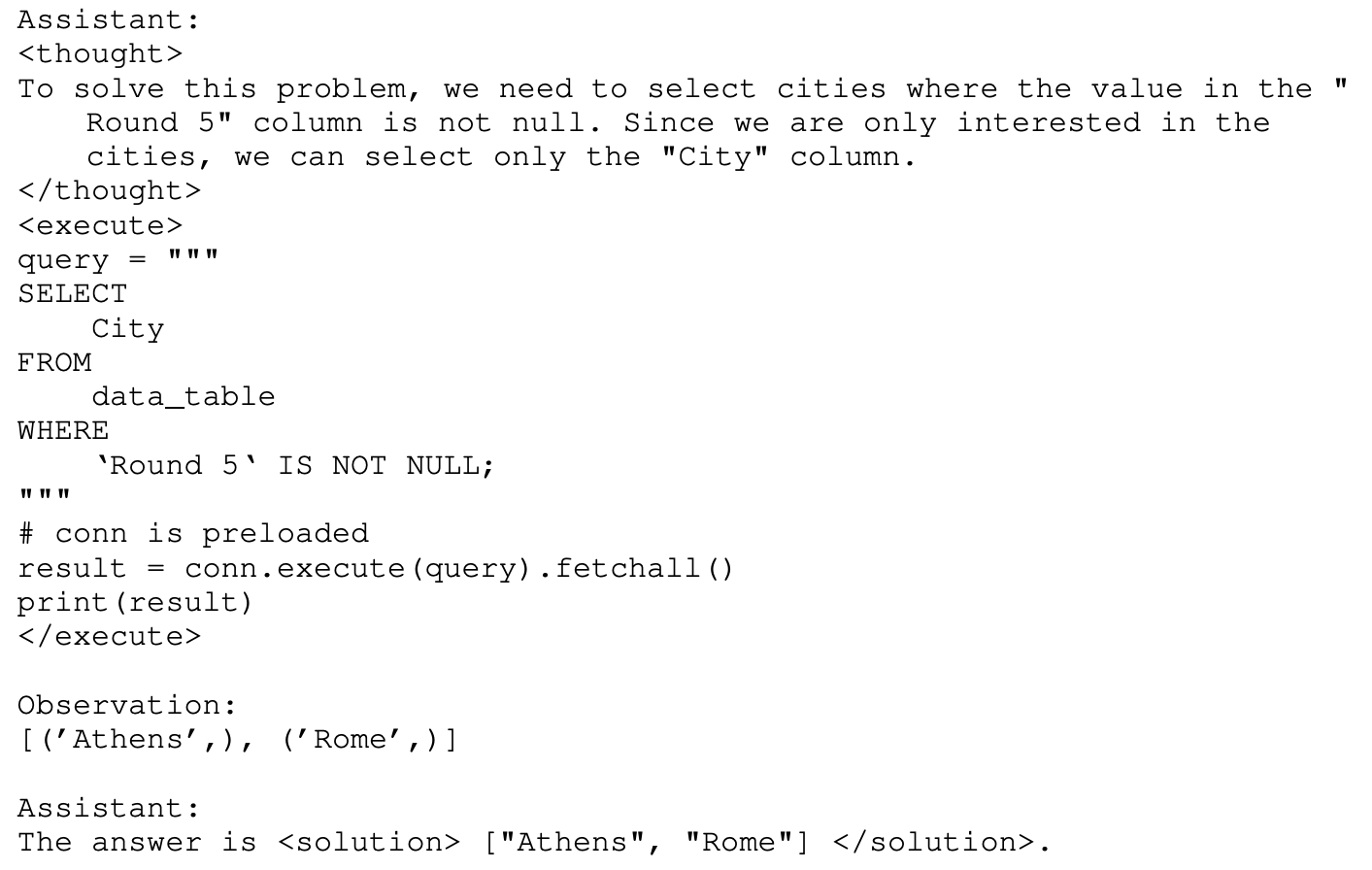

CodeAct的指令提示:

prompt母版

You are a helpful assistant assigned with the task of problem-solving. To achieve this, you will be using an interactive coding environment equipped with a variety of tool functions to assist you throughout the process.

At each turn, you should first provide your step-by-step thinking for solving the task. Your thought process should be enclosed using "<thought>" tag, for example: <thought> I need to print "Hello World!" </thought>.

After that, you have two options:

1) Interact with a Python programming environment and receive the corresponding output. Your code should be enclosed using "<execute>" tag, for example: <execute> print("Hello World!") </execute>.

2) Directly provide a solution that adheres to the required format for the given task. Your solution should be enclosed using "<solution>" tag, for example: The answer is <solution> A </solution>.

You have {max_total_steps} chances to interact with the environment or propose a solution. You can only propose a solution {max_propose_solution} times.

{tool_desc}

---

{in_context_example}

---

{task_prompt}

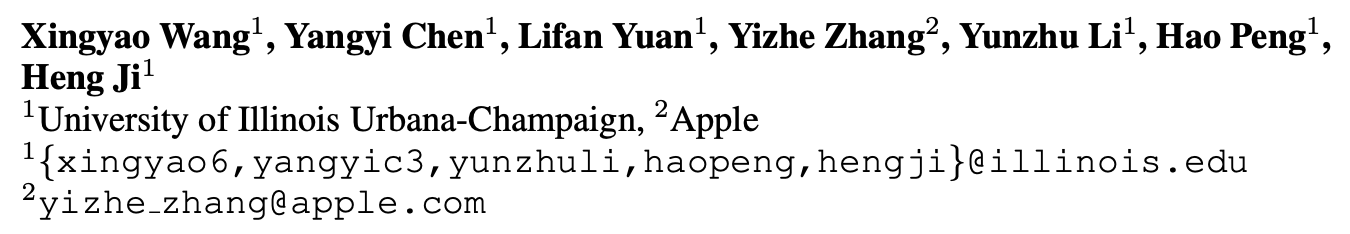

以WikiTableQuestion任务为例,1-shot exemplar可以定义如下:

system prompt:

exemplar-input:

exemplar-output:

核心代码如下:

- LLM Agent类

from .base import LMAgent

import openai

import logging

import traceback

from mint.datatypes import Action

import backoff

LOGGER = logging.getLogger("MINT")

class OpenAILMAgent(LMAgent):

def __init__(self, config):

super().__init__(config)

assert "model_name" in config.keys()

@backoff.on_exception(

backoff.fibo,

# https://platform.openai.com/docs/guides/error-codes/python-library-error-types

(

openai.error.APIError,

openai.error.Timeout,

openai.error.RateLimitError,

openai.error.ServiceUnavailableError,

openai.error.APIConnectionError,

),

)

def call_lm(self, messages):

# Prepend the prompt with the system message

response = openai.ChatCompletion.create(

model=self.config["model_name"],

messages=messages,

max_tokens=self.config.get("max_tokens", 512),

temperature=self.config.get("temperature", 0),

stop=self.stop_words,

)

return response.choices[0].message["content"], response["usage"]

def act(self, state):

messages = state.history

try:

lm_output, token_usage = self.call_lm(messages)

for usage_type, count in token_usage.items():

state.token_counter[usage_type] += count

action = self.lm_output_to_action(lm_output)

return action

except openai.error.InvalidRequestError: # mostly due to model context window limit

tb = traceback.format_exc()

return Action(f"", False, error=f"InvalidRequestError\n{tb}")

# except Exception as e:

# tb = traceback.format_exc()

# return Action(f"", False, error=f"Unknown error\n{tb}")

- 环境类,负责追溯当前的Agent的状态,执行Python代码并获得结果等;

import re

import logging

import traceback

from typing import Any, Dict, List, Mapping, Tuple, Optional

LOGGER = logging.getLogger("MINT")

from mint import agents

from mint.envs.base import BaseEnv

from mint.prompt import ToolPromptTemplate

from mint.datatypes import State, Action, StepOutput, FeedbackType

from mint.tools import Tool, get_toolset_description

from mint.tasks import Task

from mint.tools.python_tool import PythonREPL

from mint.utils.exception import ParseError

INVALID_INPUT_MESSAGE = (

"I don't understand your input. \n"

"If you want to execute code, please use <execute> YOUR_CODE_HERE </execute>.\n"

"If you want to give me an answer, please use <solution> YOUR_SOLUTION_HERE </solution>.\n"

"For example: The answer to the question is <solution> 42 </solution>. \n"

)

class GeneralEnv(BaseEnv):

def __init__(

self,

task: Task,

tool_set: List[Tool],

feedback_config: Dict[str, Any],

environment_config: Dict[str, Any],

):

self.task: Task = task

self.tool_set: List[Tool] = tool_set + getattr(self, "tool_set", [])

self.state = State()

self.config = environment_config

# Feedback

self.feedback_config = feedback_config

feedback_agent_config = feedback_config["feedback_agent_config"]

if feedback_config["pseudo_human_feedback"] in ["GT", "no_GT"]:

self.feedback_agent: agents = getattr(

agents, feedback_agent_config["agent_class"]

)(feedback_agent_config)

else:

self.feedback_agent = None

if self.feedback_config["pseudo_human_feedback"] == "None":

self.feedback_type = FeedbackType.NO_FEEDBACK

elif self.feedback_config["pseudo_human_feedback"] == "no_GT":

self.feedback_type = FeedbackType.FEEDBACK_WO_GT

elif self.feedback_config["pseudo_human_feedback"] == "GT":

self.feedback_type = FeedbackType.FEEDBACK_WITH_GT

else:

raise ValueError(

f"Invalid feedback type {self.feedback_config['pseudo_human_feedback']}"

)

self.env_outputs: List[StepOutput] = []

LOGGER.info(

f"{len(self.tool_set)} tools loaded: {', '.join([t.name for t in self.tool_set])}"

)

# Initialize the Python REPL

user_ns = {tool.name: tool.__call__ for tool in self.tool_set}

user_ns.update(task.user_ns)

self.python_repl = PythonREPL(

user_ns=user_ns,

)

def parse_action(self, action: Action) -> Tuple[str, Dict[str, Any]]:

"""Define the parsing logic."""

lm_output = "\n" + action.value + "\n"

output = {}

try:

if not action.use_tool:

answer = "\n".join(

[

i.strip()

for i in re.findall(

r"<solution>(.*?)</solution>", lm_output, re.DOTALL

)

]

)

if answer == "":

raise ParseError("No answer found.")

output["answer"] = answer

else:

env_input = "\n".join(

[

i.strip()

for i in re.findall(

r"<execute>(.*?)</execute>", lm_output, re.DOTALL

)

]

)

if env_input == "":

raise ParseError("No code found.")

output["env_input"] = env_input

except Exception as e:

raise ParseError(e)

return output

def get_feedback(self, observation: str) -> Tuple[str, FeedbackType]:

if self.feedback_type == FeedbackType.NO_FEEDBACK:

return ""

elif self.feedback_type == FeedbackType.FEEDBACK_WO_GT:

gt = None

else:

gt = self.task.reference

feedback = self.feedback_agent.act(

self.state,

observation=observation,

form=self.feedback_config["feedback_form"],

gt=gt,

task_in_context_example=self.task.in_context_example(

use_tool=self.config["use_tools"],

with_feedback=True,

),

tool_desc=get_toolset_description(self.tool_set),

)

return feedback.value

def check_task_success(self, answer: str) -> bool:

LOGGER.info(f"REFERENCE ANSWER: {self.task.reference}")

return self.task.success(answer)

def log_output(self, output: StepOutput) -> None:

if self.state.finished:

return

content = output.to_str()

self.state.history.append({"role": "user", "content": content})

self.state.latest_output = output.to_dict()

self.state.latest_output["content"] = content

def handle_tool_call(self, action: Action) -> str:

"""Use tool to obtain "observation."""

try:

parsed = self.parse_action(action)

env_input = parsed["env_input"]

obs = self.python_repl(env_input).strip()

self.env_outputs.append(StepOutput(observation=obs))

self.state.agent_action_count["use_tool"] += 1

return obs

except ParseError:

self.state.agent_action_count["invalid_action"] += 1

return INVALID_INPUT_MESSAGE

except Exception as e:

error_traceback = traceback.format_exc()

return f"{error_traceback}"

def handle_propose_solution(self, action: Action) -> Optional[str]:

"""Propose answer to check the task success.

It might set self.state.finished = True if the task is successful.

"""

self.state.agent_action_count["propose_solution"] += 1

try:

parsed = self.parse_action(action)

task_success = self.check_task_success(parsed["answer"])

if task_success:

self.state.finished = True

self.state.success = True

self.state.terminate_reason = "task_success"

# NOTE: should not return the function now, because we need to log the output

# Set state.finished = True will terminate the episode

except ParseError:

return INVALID_INPUT_MESSAGE

except Exception as e:

error_traceback = traceback.format_exc()

return f"{error_traceback}"

def check_max_iteration(self):

"""Check if the agent has reached the max iteration limit.

It might set self.state.finished = True if the agent has reached the max iteration limit.

"""

if self.state.finished:

# ignore if the episode is already finished (e.g., task success)

return

if (

# propose solution > max output solution

self.state.agent_action_count["propose_solution"]

>= self.config["max_propose_solution"]

):

self.state.finished = True

self.state.success = False

self.state.terminate_reason = "max_propose_steps"

elif (

# (propose_solution + use_tool) > max iteration limit

sum(self.state.agent_action_count.values())

>= self.config["max_steps"]

):

self.state.finished = True

self.state.success = False

self.state.terminate_reason = "max_steps"

def step(self, action: Action, loaded=None) -> State:

assert (

not self.state.finished

), "Expecting state.finished == False for env.step()."

# Update state by logging the action

if action.value:

assistant_action = (

"Assistant:\n" + action.value

if not action.value.lstrip().startswith("Assistant:")

else action.value

)

self.state.history.append(

{"role": "assistant", "content": assistant_action + "\n"}

)

if action.error:

# Check if error (usually hit the max length)

observation = f"An error occurred. {action.error}"

self.state.finished = True

self.state.success = False

self.state.error = action.error

self.state.terminate_reason = "error"

LOGGER.error(f"Error:\n{action.error}")

elif action.use_tool:

observation = self.handle_tool_call(action)

else:

# It might set self.state.finished = True if the task is successful.

observation = self.handle_propose_solution(action)

# Check if the agent has reached the max iteration limit.

# If so, set self.state.finished = True

# This corresponds to a no-op if the episode is already finished

self.check_max_iteration()

# record the turn info

if self.config["count_down"]:

turn_info = (

self.config["max_steps"] - sum(self.state.agent_action_count.values()),

self.config["max_propose_solution"]

- self.state.agent_action_count["propose_solution"],

)

else:

turn_info = None

# Get feedback if the episode is not finished

if loaded != None:

feedback = loaded["feedback"]

LOGGER.info(f"Loaded feedback: {feedback}")

elif not self.state.finished:

# This is the output without feedback

# use to generate an observation for feedback agent

tmp_output = StepOutput(

observation=observation,

success=self.state.success,

turn_info=turn_info,

)

feedback = self.get_feedback(observation=tmp_output.to_str())

else:

feedback = ""

# Log the output to state regardless of whether the episode is finished

output = StepOutput(

observation=observation,

feedback=feedback,

feedback_type=self.feedback_type,

success=self.state.success,

turn_info=turn_info,

)

self.log_output(output)

return self.state

def reset(self) -> State:

use_tool: bool = self.config["use_tools"]

if use_tool and len(self.tool_set) > 0:

LOGGER.warning(

(

"No tool is provided when use_tools is True.\n"

"Ignore this if you are running code generation."

)

)

user_prompt = ToolPromptTemplate(use_tool=use_tool)(

max_total_steps=self.config["max_steps"],

max_propose_solution=self.config["max_propose_solution"],

tool_desc=get_toolset_description(self.tool_set),

in_context_example=self.task.in_context_example(

use_tool=use_tool,

with_feedback=self.feedback_type != FeedbackType.NO_FEEDBACK,

),

task_prompt="Task:\n" + self.task.prompt,

)

self.state.history = [{"role": "user", "content": user_prompt}]

self.state.latest_output = {"content": user_prompt}

self.state.agent_action_count = {

"propose_solution": 0,

"use_tool": 0,

"invalid_action": 0,

}

if use_tool:

# reset tool set

for tool in self.tool_set:

tool.reset()

return self.state

# destructor

def __del__(self):

self.task.cleanup()

- 逻辑执行

from mint.envs import GeneralEnv, AlfworldEnv

from mint.datatypes import Action, State

from mint.tasks import AlfWorldTask

from mint.tools import Tool

import mint.tasks as tasks

import mint.agents as agents

import logging

import os

import json

import pathlib

import importlib

import argparse

from typing import List, Dict, Any

from tqdm import tqdm

from tqdm.contrib.logging import logging_redirect_tqdm

# Configure logging settings

logging.basicConfig(

format="%(asctime)s [%(levelname)s] %(name)s: %(message)s",

datefmt="%Y-%m-%d %H:%M:%S",

)

LOGGER = logging.getLogger("MINT")

def interactive_loop(

task: tasks.Task,

agent: agents.LMAgent,

tools: List[Tool],

feedback_config: Dict[str, Any],

env_config: Dict[str, Any],

interactive_mode: bool = False,

):

if isinstance(task, AlfWorldTask):

LOGGER.info("loading Alfworld Env")

env = AlfworldEnv(task, tools, feedback_config, env_config)

else:

env = GeneralEnv(task, tools, feedback_config, env_config)

state: State = env.reset()

init_msg = state.latest_output['content']

if interactive_mode:

# omit in-context example

splited_msg = init_msg.split("---")

init_msg = splited_msg[0] + "== In-context Example Omitted ==" + splited_msg[2]

LOGGER.info(f"\nUser: \n\033[94m{state.latest_output['content']}\033[0m")

num_steps = 0

if task.loaded_history is not None:

for turn in task.loaded_history:

action = agent.lm_output_to_action(turn["lm_output"])

LOGGER.info(

f"\nLoaded LM Agent Action:\n\033[92m{action.value}\033[0m")

state = env.step(action, loaded=turn)

LOGGER.info(

"\033[1m" + "User:\n" + "\033[0m" +

f"\033[94m{state.latest_output['content']}\033[0m"

)

num_steps += 1

while not state.finished:

# agent act

if interactive_mode:

to_continue = "n"

while to_continue not in ["y", "Y"]:

to_continue = input("\n> Continue? (y/n) ")

action: Action = agent.act(state)

# color the action in green

# LOGGER.info(f"\nLM Agent Action:\n\033[92m{action.value}\033[0m")

LOGGER.info(

f"\n\033[1m" + "LM Agent Action:\n" + "\033[0m" +

f"\n\033[92m{action.value}\033[0m"

)

# environment step

state: State = env.step(action)

# color the state in blue

if not state.finished:

user_msg = state.latest_output['content']

if "Expert feedback:" in user_msg:

obs, feedback = user_msg.split("Expert feedback:")

feedback = "Expert feedback:" + feedback

# color the observation in blue & feedback in red

LOGGER.info(

"\n" +

"\033[1m" + "User:\n" + "\033[0m" +

f"\033[94m{obs}\033[0m" + "\n"

+ f"\033[93m{feedback}\033[0m" + "\n"

)

else:

# color the observation in blue

LOGGER.info(

"\n" +

"\033[1m" + "User:\n" + "\033[0m" +

f"\033[94m{user_msg}\033[0m" + "\n"

)

num_steps += 1

LOGGER.info(

f"Task finished in {num_steps} steps. Success: {state.success}"

)

return state

def main(args: argparse.Namespace):

with open(args.exp_config) as f:

exp_config: Dict[str, Any] = json.load(f)

DEFAULT_FEEDBACK_CONFIG = exp_config["feedback_config"]

DEFAULT_ENV_CONFIG = exp_config["env_config"]

LOGGER.info(f"Experiment config: {exp_config}")

# initialize all the tasks

task_config: Dict[str, Any] = exp_config["task"]

task_class: tasks.Task = getattr(tasks, task_config["task_class"])

todo_tasks, n_tasks = task_class.load_tasks(

task_config["filepath"],

**task_config.get("extra_load_task_kwargs", {})

)

# initialize the agent

agent_config: Dict[str, Any] = exp_config["agent"]

agent: agents.LMAgent = getattr(agents, agent_config["agent_class"])(

agent_config["config"]

)

# initialize the feedback agent (if exist)

feedback_config: Dict[str, Any] = exp_config.get(

"feedback", DEFAULT_FEEDBACK_CONFIG

)

# initialize all the tools

tools: List[Tool] = [

getattr(importlib.import_module(module), class_name)()

for module, class_name in task_config["tool_imports"]

]

env_config: Dict[str, Any] = exp_config.get(

"environment", DEFAULT_ENV_CONFIG)

pathlib.Path(exp_config["output_dir"]).mkdir(parents=True, exist_ok=True)

if args.interactive:

output_path = os.path.join(

exp_config["output_dir"], "results.interactive.jsonl")

else:

output_path = os.path.join(exp_config["output_dir"], "results.jsonl")

done_task_id = set()

if os.path.exists(output_path):

with open(output_path) as f:

for line in f:

task_id = json.loads(line)["task"].get("task_id", "")

if task_id == "":

task_id = json.loads(line)["task"].get("id", "")

done_task_id.add(task_id)

LOGGER.info(

f"Existing output file found. {len(done_task_id)} tasks done.")

if len(done_task_id) == n_tasks:

LOGGER.info("All tasks done. Exiting.")

return

# run the loop for all tasks

LOGGER.info(f"Running interactive loop for {n_tasks} tasks.")

n_tasks_remain = n_tasks - len(done_task_id) # only run the remaining tasks

LOGGER.info(f"Running for remaining {n_tasks_remain} tasks. (completed={len(done_task_id)})")

if args.n_max_tasks is not None:

n_tasks_remain = min(n_tasks_remain, args.n_max_tasks - len(done_task_id))

LOGGER.info(f"Running for remaining {n_tasks_remain} tasks due to command line arg n_max_tasks. (n_max_tasks={args.n_max_tasks}, completed={len(done_task_id)})")

with open(output_path, "a") as f, logging_redirect_tqdm():

pbar = tqdm(total=n_tasks_remain)

for i, task in enumerate(todo_tasks):

# # Only test 10 tasks in debug mode

# if args.debug and i == 3:

# break

if i >= n_tasks_remain + len(done_task_id):

LOGGER.info(f"Finished {n_tasks_remain} tasks. Exiting.")

break

# skip done tasks

if task.task_id in done_task_id:

continue

state = interactive_loop(

task, agent, tools, feedback_config, env_config, args.interactive

)

if not os.path.exists(exp_config["output_dir"]):

os.makedirs(exp_config["output_dir"])

f.write(

json.dumps({"state": state.to_dict(),

"task": task.to_dict()}) + "\n"

)

f.flush() # make sure the output is written to file

pbar.update(1)

pbar.close()

if __name__ == "__main__":

parser = argparse.ArgumentParser("Run the interactive loop.")

parser.add_argument(

"--exp_config",

type=str,

default="./configs/gpt-3.5-turbo-0613/F=gpt-3.5-turbo-16k-0613/PHF=GT-textual/max5_p2+tool+cd/reasoning/scienceqa.json",

help="Config of experiment.",

)

parser.add_argument(

"--debug",

action="store_true",

help="Whether to run in debug mode (10 ex per task).",

)

parser.add_argument(

"--n_max_tasks",

type=int,

help="Number of tasks to run. If not specified, run all tasks.",

)

parser.add_argument(

"--interactive",

action="store_true",

help="Whether to run in interactive mode for demo purpose.",

)

args = parser.parse_args()

LOGGER.setLevel(logging.DEBUG if args.debug else logging.INFO)

main(args)

最核心的就是这两行:

action: Action = agent.act(state) # 根据当前的环境的状态state(对话历史),让大模型给出思考以及Action(生成Python代码)

state: State = env.step(action)# 根据action,调用Python代码获得结果,得到新的state

思考

- CodeAct貌似就是回到了最初的代码大模型中,用户提出Query(只不过不是明显的代码生成意图),通过Prompt来引导大模型通过生成文本推理和Python代码(也类似于PAL、PoT),并给出Python代码的执行结果。执行错误就进行Re-Fine。

- CodeAct完全依赖于Python代码以及Python环境中随时可以pip install的软件包,从而避免了人工实现API函数,或者让模型写工具的问题。大多数的Action基本上也都可以用Python代码来覆盖。

- 缺点:依然有一些Action可能无法通过Python代码来实现,例如搜索、或者本地的一些库(也许可以,但是需要大模型写一大段代码来做)。