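Run the program. It automatically opens the camera, then detects and locates the faces and eyes in front of it.

Press q or Q (or Esc) to exit the program.

Alternatively, you can run detection on a single image, or on a text file that lists image paths (one per line). Pass the path as the program's filename argument, or modify the code yourself.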
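The program chooses its input from the optional filename argument. An empty argument or a single digit selects a camera index. Anything else is first tried as an image, then with `VideoCapture` (for example a video file), and finally treated as a text file listing images. The plain C++ sketch below isolates the camera-versus-file rule and the quit-key test so they can be checked without a camera. `InputKind`, `InputChoice`, `classifyInput` and `isQuitKey` are hypothetical names; they are not part of OpenCV or the original sample.

```cpp
#include <cctype>
#include <string>

// Hypothetical types mirroring the branching in the sample's main().
enum class InputKind { Camera, File };

struct InputChoice {
    InputKind kind;
    int cameraIndex;   // only meaningful when kind == InputKind::Camera
    std::string path;  // only meaningful when kind == InputKind::File
};

// Same rule as the sample: empty argument -> camera 0, a single digit -> that camera,
// anything else is handed on as a file path.
InputChoice classifyInput(const std::string& inputName) {
    if (inputName.empty())
        return {InputKind::Camera, 0, ""};
    if (inputName.size() == 1 && std::isdigit(static_cast<unsigned char>(inputName[0])))
        return {InputKind::Camera, inputName[0] - '0', ""};
    return {InputKind::File, -1, inputName};
}

// Keys that stop the capture loop in the sample: Esc (27), 'q' or 'Q'.
bool isQuitKey(int key) {
    char c = static_cast<char>(key);
    return c == 27 || c == 'q' || c == 'Q';
}
```

For example, `classifyInput("1")` selects camera 1. `classifyInput("12")` is treated as a file path, because the sample only recognises single-digit camera indices.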

Configure the project environment and build in Debug.

Fixing error C4996: 'fopen': This function or variable may be unsafe. Consider using fopen_s instead…

https://blog.csdn.net/muzihuaner/article/details/109886974
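MSVC raises C4996 for CRT functions such as `fopen`. With SDL checks (`/sdl`) enabled, it is reported as an error. The usual workarounds are:

- define `_CRT_SECURE_NO_WARNINGS` before any standard header,
- switch to the `_s` variant `fopen_s`, or
- avoid `fopen` altogether.

The sketch below takes the last route. It reads the image-list file with `std::ifstream` and `std::getline` and strips trailing whitespace the same way the sample's `fgets` loop does. `readImageList` is a hypothetical helper, not part of the original code.

```cpp
#include <cctype>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical replacement for the sample's fopen/fgets loop: returns one entry per
// line of the list file, with trailing whitespace (including a '\r') removed.
std::vector<std::string> readImageList(const std::string& listPath) {
    std::vector<std::string> paths;
    std::ifstream in(listPath);
    if (!in)
        return paths;  // unreadable file: nothing to process
    std::string line;
    while (std::getline(in, line)) {
        // Walk back from the end until a non-whitespace character is found,
        // mirroring the sample's isspace() loop.
        std::size_t len = line.size();
        while (len > 0 && std::isspace(static_cast<unsigned char>(line[len - 1])))
            --len;
        line.resize(len);
        paths.push_back(line);
    }
    return paths;
}
```

Unlike the `fgets` version, this has no 1000-character line limit. Each returned entry can be passed straight to `imread`.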

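Make sure the lib files, dll files and header files are all configured correctly.

Alternatively, add the folder containing the dll files to the system PATH environment variable, so you don't need to copy them around; this is the simplest option.

If you later package the exe on its own, copy the required dlls alongside it.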

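The OpenCV source code can be found at this path.

Put the required data files in the same folder as the .cpp file.

The data (the trained cascade files) is in OpenCV's sources folder. This step is required: place the data folder correctly, otherwise the program fails with an error.

The two paths are different. This step is optional: you can change the code to pick your own image; it is only used for testing.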
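The default cascade paths in the code, such as `data/haarcascades/haarcascade_frontalface_alt.xml`, are relative. They are resolved against the program's working directory. In Visual Studio the debugger's default working directory is the project directory, which is why putting the `data` folder next to the `.cpp` file works. A separately packaged exe needs the folder relative to whatever directory it is started from.

The C++17 sketch below reports which expected files are missing before the classifiers are loaded. `missingDataFiles` is a hypothetical helper, and its file list simply repeats the sample's default `--cascade` and `--nested-cascade` values.

```cpp
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical pre-flight check: returns every expected cascade file that does not
// exist under baseDir (pass "." to check the current working directory).
std::vector<std::string> missingDataFiles(const fs::path& baseDir) {
    // Defaults copied from the sample's CommandLineParser keys.
    const std::vector<std::string> expected = {
        "data/haarcascades/haarcascade_frontalface_alt.xml",
        "data/haarcascades/haarcascade_eye_tree_eyeglasses.xml",
    };
    std::vector<std::string> missing;
    for (const auto& rel : expected) {
        if (!fs::exists(baseDir / rel))
            missing.push_back(rel);
    }
    return missing;
}
```

Calling `missingDataFiles(".")` at the start of `main()` and printing the result gives a clearer message than the loader's error when the folder is misplaced.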

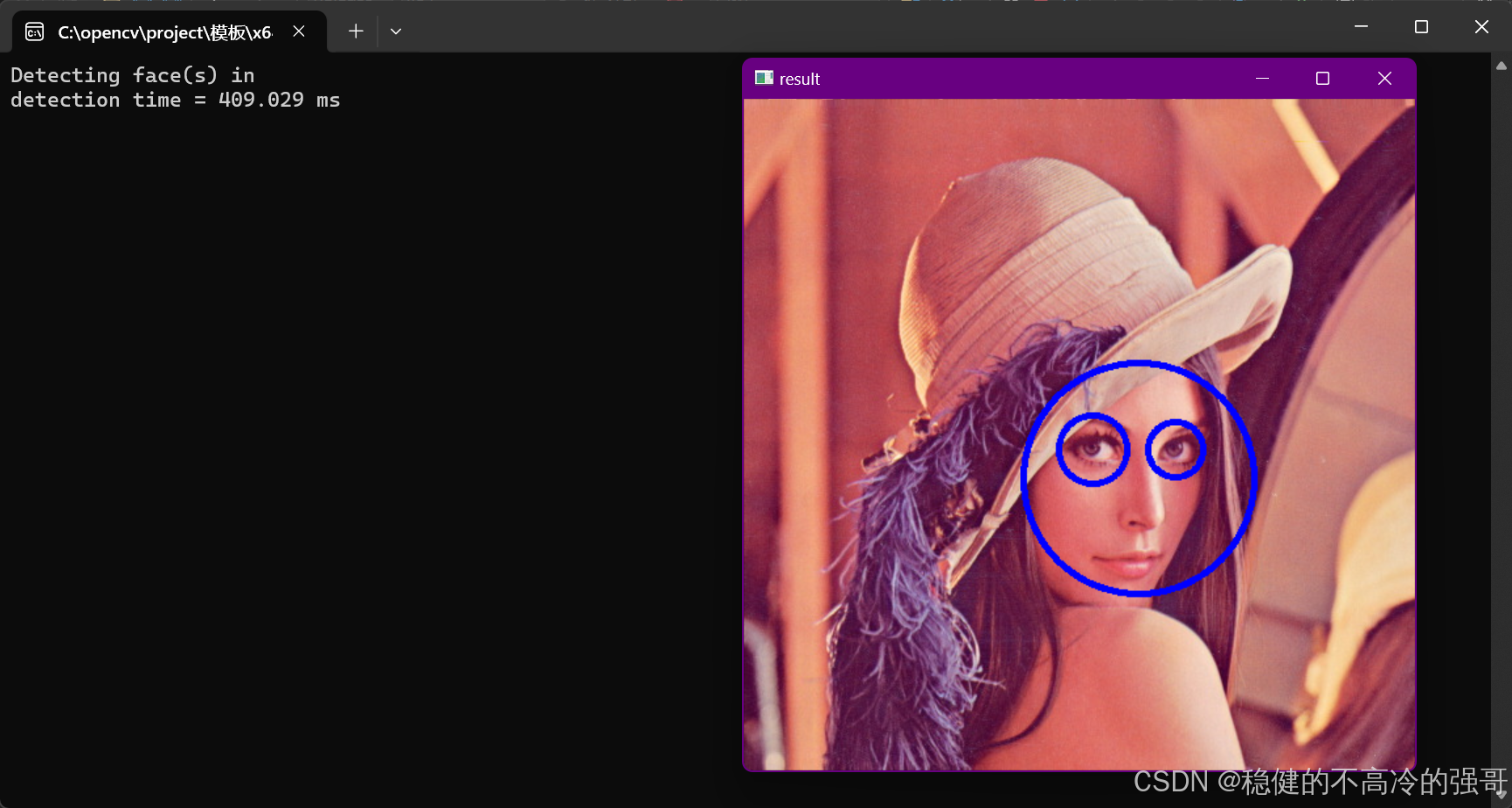

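With a small change to the code, detection on the default lena image looks like this:

Below is the source code. I added the comments myself and left the logic unchanged.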

```cpp
#include "opencv2/objdetect.hpp"
#include "opencv2/highgui.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/videoio.hpp"
#include <cctype>   // isdigit, isspace
#include <cstdio>   // fopen, fgets, fclose, printf
#include <cstring>  // strlen
#include <iostream>

using namespace std;
using namespace cv;

static void help(const char **argv) {
    cout << "\nThis program demonstrates the use of cv::CascadeClassifier class to detect objects (Face + eyes). You can use Haar or LBP features.\n"
            "This classifier can recognize many kinds of rigid objects, once the appropriate classifier is trained.\n"
            "Its best-known use is for faces.\n"
            "Usage:\n"
         << argv[0]
         << " [--cascade=<cascade_path> this is the primary trained classifier such as frontal face]\n"
            " [--nested-cascade[=nested_cascade_path this is an optional secondary classifier such as eyes]]\n"
            " [--scale=<image scale greater or equal to 1, try 1.3 for example>]\n"
            " [--try-flip]\n"
            " [filename|camera_index]\n\n"
            "example:\n"
         << argv[0]
         << " --cascade=\"data/haarcascades/haarcascade_frontalface_alt.xml\" --nested-cascade=\"data/haarcascades/haarcascade_eye_tree_eyeglasses.xml\" --scale=1.3\n\n"
            "During execution:\n\tHit any key to quit.\n"
            "\tUsing OpenCV version " << CV_VERSION << "\n" << endl;
}

void detectAndDraw(Mat &img, CascadeClassifier &cascade,
                   CascadeClassifier &nestedCascade,
                   double scale, bool tryflip);

string cascadeName;
string nestedCascadeName;

int main(int argc, const char **argv) {
    VideoCapture capture;
    Mat frame, image;
    string inputName;
    bool tryflip;
    CascadeClassifier cascade, nestedCascade;
    double scale;

    cv::CommandLineParser parser(argc, argv,
        "{help h||}"
        "{cascade|data/haarcascades/haarcascade_frontalface_alt.xml|}"
        "{nested-cascade|data/haarcascades/haarcascade_eye_tree_eyeglasses.xml|}"
        "{scale|1|}{try-flip||}{@filename||}"
    );
    if (parser.has("help")) {
        help(argv);
        return 0;
    }
    cascadeName = parser.get<string>("cascade");
    nestedCascadeName = parser.get<string>("nested-cascade");
    scale = parser.get<double>("scale");
    if (scale < 1)
        scale = 1;
    tryflip = parser.has("try-flip");
    inputName = parser.get<string>("@filename");
    if (!parser.check()) {
        parser.printErrors();
        return 0;
    }
    // findFileOrKeep() returns the path unchanged when the file is not found, so a missing
    // eye cascade only produces a warning. findFile() raises a cv::Exception instead,
    // which is the error you see when the data folder is misplaced.
    if (!nestedCascade.load(samples::findFileOrKeep(nestedCascadeName)))
        cerr << "WARNING: Could not load classifier cascade for nested objects" << endl;
    if (!cascade.load(samples::findFile(cascadeName))) {
        cerr << "ERROR: Could not load classifier cascade" << endl;
        help(argv);
        return -1;
    }

    // If inputName is empty or a single digit character, try to open the camera with that index.
    if (inputName.empty() || (isdigit(inputName[0]) && inputName.size() == 1)) {
        // With an Orbbec Gemini 2 connected: index 1 works, 0 and 2 do not, and 3 is the laptop's built-in camera.
        // With no external device connected: index 0 is the laptop's built-in camera.
        // If inputName is empty, use camera 0; otherwise convert its single character to the camera index.
        int camera = inputName.empty() ? 0 : inputName[0] - '0';
        if (!capture.open(camera)) {
            cout << "Capture from camera #" << camera << " didn't work" << endl;
            return 1;
        }
    } else if (!inputName.empty()) {
        // inputName is non-empty and not a single digit: try to read it as an image file.
        // If it cannot be read as an image, try to open it with VideoCapture (e.g. a video file).
        image = imread(samples::findFileOrKeep(inputName), IMREAD_COLOR);
        if (image.empty()) {
            if (!capture.open(samples::findFileOrKeep(inputName))) {
                cout << "Could not read " << inputName << endl;
                return 1;
            }
        }
    } else {
        // Read the default image lena.jpg.
        // Note: as written, this branch is never reached, because the two conditions above
        // already cover both an empty and a non-empty inputName.
        image = imread(samples::findFile("lena.jpg"), IMREAD_COLOR);
        if (image.empty()) {
            cout << "Couldn't read lena.jpg" << endl;
            return 1;
        }
    }

    if (capture.isOpened()) {
        cout << "Video capturing has been started ..." << endl;
        /* Read frames from the capture device.
           Run face and eye detection on each frame.
           Draw the detection results on the image.
           Keep running until a frame is empty or the user presses a quit key (Esc, 'q' or 'Q'). */
        for (;;) {
            capture >> frame;
            if (frame.empty())
                break;
            Mat frame1 = frame.clone();
            detectAndDraw(frame1, cascade, nestedCascade, scale, tryflip);
            char c = (char)waitKey(10);
            if (c == 27 || c == 'q' || c == 'Q')
                break;
        }
    } else {
        cout << "Detecting face(s) in " << inputName << endl;
        if (!image.empty()) {
            // A single image was loaded: detect faces directly and wait for a key press.
            detectAndDraw(image, cascade, nestedCascade, scale, tryflip);
            waitKey(0);
        } else if (!inputName.empty()) {
            /* assume it is a text file containing the
               list of the image filenames to be processed - one per line */
            // Read the file line by line, try to load the image named on each line and run face
            // detection on it; print an error message if an image cannot be read.
            // After each image, wait for a key press; Esc, 'q' or 'Q' stops processing.
            // On MSVC this fopen() call is what triggers C4996 unless that diagnostic is disabled.
            FILE *f = fopen(inputName.c_str(), "rt");
            if (f) {
                char buf[1000 + 1];
                while (fgets(buf, 1000, f)) {
                    // Strip trailing whitespace (including the newline): walk back from the end of buf
                    // until a non-whitespace character is found, then terminate the string after it.
                    // The unsigned char cast keeps isspace() well-defined for non-ASCII bytes
                    // (e.g. Chinese characters in a path).
                    int len = (int)strlen(buf);
                    while (len > 0 && isspace((unsigned char)buf[len - 1]))
                        len--;
                    buf[len] = '\0';
                    cout << "file " << buf << endl;
                    image = imread(buf, IMREAD_COLOR);
                    if (!image.empty()) {
                        detectAndDraw(image, cascade, nestedCascade, scale, tryflip);
                        char c = (char)waitKey(0);
                        if (c == 27 || c == 'q' || c == 'Q')
                            break;
                    } else {
                        cerr << "Aw snap, couldn't read image " << buf << endl;
                    }
                }
                fclose(f);
            }
        }
    }
    return 0;
}

void detectAndDraw(Mat &img, CascadeClassifier &cascade,
                   CascadeClassifier &nestedCascade,
                   double scale, bool tryflip) {
    /* t: used for timing.
       faces and faces2: store the detected face rectangles.
       colors: array of colors used to draw the detection results.
       gray and smallImg: hold the grayscale image and the downscaled image. */
    double t = 0;
    vector<Rect> faces, faces2;
    const static Scalar colors[] =
    {
        Scalar(255,0,0),
        Scalar(255,128,0),
        Scalar(255,255,0),
        Scalar(0,255,0),
        Scalar(0,128,255),
        Scalar(0,255,255),
        Scalar(0,0,255),
        Scalar(255,0,255)
    };
    Mat gray, smallImg;

    /* Convert the input image to grayscale.
       Compute the scaling factor fx.
       Resize the grayscale image by that factor.
       Apply histogram equalization to the resized image to improve contrast. */
    cvtColor(img, gray, COLOR_BGR2GRAY);
    double fx = 1 / scale;
    resize(gray, smallImg, Size(), fx, fx, INTER_LINEAR_EXACT);
    equalizeHist(smallImg, smallImg);

    // Record the start time of detection.
    // Detect faces with the primary classifier cascade; the results are stored in faces.
    // If tryflip is true, flip the image horizontally and detect again into faces2, then map
    // those rectangles back to the unflipped coordinate system and append them to faces.
    // Compute and print the detection time.
    t = (double)getTickCount();
    cascade.detectMultiScale(smallImg, faces,
        1.1, 2, 0
        //|CASCADE_FIND_BIGGEST_OBJECT
        //|CASCADE_DO_ROUGH_SEARCH
        | CASCADE_SCALE_IMAGE,
        Size(30, 30));
    if (tryflip) {
        // Note: smallImg is flipped in place and never flipped back, so with --try-flip the
        // eye detection below runs on the mirrored image.
        flip(smallImg, smallImg, 1);
        cascade.detectMultiScale(smallImg, faces2,
            1.1, 2, 0
            //|CASCADE_FIND_BIGGEST_OBJECT
            //|CASCADE_DO_ROUGH_SEARCH
            | CASCADE_SCALE_IMAGE,
            Size(30, 30));
        for (vector<Rect>::const_iterator r = faces2.begin(); r != faces2.end(); ++r) {
            // Mirror the x coordinate: x' = cols - x - width.
            faces.push_back(Rect(smallImg.cols - r->x - r->width, r->y, r->width, r->height));
        }
    }
    t = (double)getTickCount() - t;
    printf("detection time = %g ms\n", t * 1000 / getTickFrequency());

    /* Iterate over the detected face regions.
       Compute each region's aspect ratio: if it lies within a reasonable range, draw a circle;
       otherwise draw a rectangle. Coordinates are multiplied by scale to map them from
       smallImg back to the original image.
       If the nested classifier is loaded, use it to detect eyes and draw a circle at each one.
       Display the result image. */
    for (size_t i = 0; i < faces.size(); i++) {
        Rect r = faces[i];
        Mat smallImgROI;
        vector<Rect> nestedObjects;
        Point center;
        Scalar color = colors[i % 8];
        int radius;
        double aspect_ratio = (double)r.width / r.height;
        if (0.75 < aspect_ratio && aspect_ratio < 1.3) {
            center.x = cvRound((r.x + r.width * 0.5) * scale);
            center.y = cvRound((r.y + r.height * 0.5) * scale);
            radius = cvRound((r.width + r.height) * 0.25 * scale);
            circle(img, center, radius, color, 3, 8, 0);
        } else
            rectangle(img, Point(cvRound(r.x * scale), cvRound(r.y * scale)),
                      Point(cvRound((r.x + r.width - 1) * scale), cvRound((r.y + r.height - 1) * scale)),
                      color, 3, 8, 0);
        if (nestedCascade.empty())
            continue;
        smallImgROI = smallImg(r);
        nestedCascade.detectMultiScale(smallImgROI, nestedObjects,
            1.1, 2, 0
            //|CASCADE_FIND_BIGGEST_OBJECT
            //|CASCADE_DO_ROUGH_SEARCH
            //|CASCADE_DO_CANNY_PRUNING
            | CASCADE_SCALE_IMAGE,
            Size(30, 30));
        for (size_t j = 0; j < nestedObjects.size(); j++) {
            // Eye rectangles are relative to the face ROI, so add the face offset before scaling.
            Rect nr = nestedObjects[j];
            center.x = cvRound((r.x + nr.x + nr.width * 0.5) * scale);
            center.y = cvRound((r.y + nr.y + nr.height * 0.5) * scale);
            radius = cvRound((nr.width + nr.height) * 0.25 * scale);
            circle(img, center, radius, color, 3, 8, 0);
        }
    }
    imshow("result", img);
}
```
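`detectAndDraw()` runs the cascade on a copy shrunk by `1/scale`. Every rectangle it finds is therefore multiplied by `scale` before drawing. Rectangles found in the horizontally flipped copy are mirrored back with x' = cols - x - width. Faces whose width/height ratio lies in (0.75, 1.3) get a circle; all others get a rectangle.

The sketch below reproduces that arithmetic with a plain struct so it can be checked without OpenCV. `Box`, `Circle`, `mirrorX` and `faceMarker` are hypothetical names. `std::lrint` (round to nearest, ties to even under the default rounding mode) stands in for `cvRound` and may differ from it on some platforms for exact halves.

```cpp
#include <cmath>

// Hypothetical stand-in for cv::Rect so the arithmetic can be tested without OpenCV.
struct Box { int x, y, width, height; };

// Circle marker in original-image coordinates.
struct Circle { long cx, cy, radius; };

// A box found in the horizontally flipped image maps back to x' = cols - x - width.
Box mirrorX(const Box& r, int cols) {
    return {cols - r.x - r.width, r.y, r.width, r.height};
}

// Returns true and fills `out` when the aspect ratio lies in (0.75, 1.3), the range for
// which the sample draws a circle; returns false when it would draw a rectangle instead.
bool faceMarker(const Box& r, double scale, Circle& out) {
    double aspect = static_cast<double>(r.width) / r.height;
    if (!(0.75 < aspect && aspect < 1.3))
        return false;
    out.cx = std::lrint((r.x + r.width * 0.5) * scale);
    out.cy = std::lrint((r.y + r.height * 0.5) * scale);
    out.radius = std::lrint((r.width + r.height) * 0.25 * scale);
    return true;
}
```

For example, with `--scale=2` a 40x40 face at (10, 20) in the small image becomes a circle centred at (60, 80) with radius 40 in the original frame. Mirroring twice with the same `cols` returns the original box.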