Contents

- 4 Implementing a GPT model from scratch to generate text

- This chapter covers

- 4.1 Coding an LLM architecture

- Listing 4.1 A placeholder GPT model architecture class

- 4.2 Normalizing activations with layer normalization

- 4.3 Implementing a feed forward network with GELU activations

- 4.4 Adding shortcut connections

- 4.5 Connecting attention and linear layers in a transformer block

- 4.6 Coding the GPT model

- 4.7 Generating text

- 4.8 Summary

4 Implementing a GPT model from scratch to generate text

4 从零开始实现GPT模型以生成文本

This chapter covers

- Coding a GPT-like large language model (LLM) that can be trained to generate human-like text

- 编码一个类似GPT的大型语言模型(LLM),可以训练生成类似人类的文本

- Normalizing layer activations to stabilize neural network training

- 归一化层激活以稳定神经网络训练

- Adding shortcut connections in deep neural networks to train models more effectively

- 在深度神经网络中添加快捷连接以更有效地训练模型

- Implementing transformer blocks to create GPT models of various sizes

- 实现transformer块以创建各种规模的GPT模型

- Computing the number of parameters and storage requirements of GPT models

- 计算GPT模型的参数数量和存储需求

In the previous chapter, you learned and coded the multi-head attention mechanism, one of the core components of LLMs. In this chapter, we will now code the other building blocks of an LLM and assemble them into a GPT-like model that we will train in the next chapter to generate human-like text, as illustrated in Figure 4.1.

在上一章中,您学习并编码了多头注意力机制,这是LLM的核心组件之一。在本章中,我们将编写LLM的其他构建模块,并将它们组装成一个类似GPT的模型,我们将在下一章中训练它以生成类似人类的文本,如图4.1所示。

Figure 4.1 A mental model of the three main stages of coding an LLM, pretraining the LLM on a general text dataset, and finetuning it on a labeled dataset. This chapter focuses on implementing the LLM architecture, which we will train in the next chapter.

图4.1 一个关于编写LLM的三个主要阶段的心智模型,包括在通用文本数据集上预训练LLM,并在标注数据集上进行微调。本章重点在于实现LLM架构,我们将在下一章中训练它。

The LLM architecture, referenced in Figure 4.1, consists of several building blocks that we will implement throughout this chapter. We will begin with a top-down view of the model architecture in the next section before covering the individual components in more detail.

图4.1中提到的LLM架构由几个构建模块组成,我们将在本章中逐一实现这些模块。我们将从下一节中模型架构的自上而下视图开始,然后详细介绍各个组件。

4.1 Coding an LLM architecture

4.1 编写LLM架构

LLMs, such as GPT (which stands for Generative Pretrained Transformer), are large deep neural network architectures designed to generate new text one word (or token) at a time. However, despite their size, the model architecture is less complicated than you might think, since many of its components are repeated, as we will see later. Figure 4.2 provides a top-down view of a GPT-like LLM, with its main components highlighted.

LLM,例如GPT(生成预训练transformer),是大型深度神经网络架构,旨在一次生成一个单词(或词元)的新文本。然而,尽管它们很大,但模型架构并不像您想象的那样复杂,因为其许多组件是重复的,如我们稍后将看到的。图4.2提供了一个类似GPT的LLM的自上而下视图,突出了其主要组件。

Figure 4.2 A mental model of a GPT model. Next to the embedding layers, it consists of one or more transformer blocks containing the masked multi-head attention module we implemented in the previous chapter.

图4.2 GPT模型的心智模型。紧挨着嵌入层,它包含一个或多个transformer块,这些transformer块包含我们在上一章中实现的遮蔽多头注意力模块。

As you can see in Figure 4.2, we have already covered several aspects, such as input tokenization and embedding, as well as the masked multi-head attention module. The focus of this chapter will be on implementing the core structure of the GPT model, including its transformer blocks, which we will then train in the next chapter to generate human-like text.

如图4.2所示,我们已经涵盖了几个方面,例如输入分词和嵌入,以及遮蔽多头注意力模块。本章的重点是实现GPT模型的核心结构,包括其transformer块,我们将在下一章中训练这些模块以生成类似人类的文本。

In the previous chapters, we used smaller embedding dimensions for simplicity, ensuring that the concepts and examples could comfortably fit on a single page. Now, in this chapter, we are scaling up to the size of a small GPT-2 model, specifically the smallest version with 124 million parameters, as described in Radford et al.’s paper, “Language Models are Unsupervised Multitask Learners.” Note that while the original report mentions 117 million parameters, this was later corrected.

在前几章中,为了简单起见,我们使用了较小的嵌入维度,确保概念和示例可以轻松适应单页内容。现在,在本章中,我们将扩展到一个小型GPT-2模型的规模,特别是拥有1.24亿参数的最小版本,如Radford等人在其论文《语言模型是无监督的多任务学习者》中所述。请注意,尽管原始报告提到1.17亿参数,但后来更正为1.24亿。

Chapter 6 will focus on loading pretrained weights into our implementation and adapting it for larger GPT-2 models with 345, 762, and 1,542 million parameters. In the context of deep learning and LLMs like GPT, the term “parameters” refers to the trainable weights of the model. These weights are essentially the internal variables of the model that are adjusted and optimized during the training process to minimize a specific loss function. This optimization allows the model to learn from the training data.

第6章将重点介绍将预训练权重加载到我们的实现中,并将其适配于拥有3.45亿、7.62亿和15.42亿参数的更大型GPT-2模型。在深度学习和像GPT这样的LLM中,术语“参数”是指模型的可训练权重。这些权重本质上是模型的内部变量,在训练过程中进行调整和优化,以最小化特定的损失函数。这种优化使模型能够从训练数据中学习。

For example, in a neural network layer that is represented by a 2,048x2,048-dimensional matrix (or tensor) of weights, each element of this matrix is a parameter. Since there are 2,048 rows and 2,048 columns, the total number of parameters in this layer is 2,048 multiplied by 2,048, which equals 4,194,304 parameters.

例如,在一个由2,048x2,048维矩阵(或张量)表示的神经网络层中,该矩阵的每个元素都是一个参数。由于有2,048行和2,048列,因此该层中的参数总数为2,048乘以2,048,等于4,194,304个参数。
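The count above can be checked with a few lines of plain Python. The sketch below also estimates the storage this layer would need, assuming each parameter is stored as a 32-bit float (4 bytes); that data type is an assumption made for illustration, not something fixed by the text.

```python
# Parameter count for a layer represented by a 2,048 x 2,048 weight matrix.
rows, cols = 2048, 2048
num_params = rows * cols
print(f"{num_params:,} parameters")  # 4,194,304 parameters

# Assumption: each weight is stored as a 32-bit float, i.e. 4 bytes.
bytes_per_param = 4
size_mib = num_params * bytes_per_param / (1024 ** 2)
print(f"{size_mib:.1f} MiB")  # 16.0 MiB
```

The same arithmetic, summed over every weight tensor in a model, gives the model's total parameter count and memory footprint.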

GPT-2 VERSUS GPT-3

GPT-2对比GPT-3

Note that we are focusing on GPT-2 because OpenAI has made the weights of the pretrained model publicly available, which we will load into our implementation in chapter 6. GPT-3 is fundamentally the same in terms of model architecture, except that it is scaled up from 1.5 billion parameters in GPT-2 to 175 billion parameters in GPT-3, and it is trained on more data. As of this writing, the weights for GPT-3 are not publicly available. GPT-2 is also a better choice for learning how to implement LLMs, as it can be run on a single laptop computer, whereas GPT-3 requires a GPU cluster for training and inference. According to Lambda Labs, it would take 355 years to train GPT-3 on a single V100 datacenter GPU, and 665 years on a consumer RTX 8000 GPU.

请注意,我们关注的是GPT-2,因为OpenAI已公开发布了预训练模型的权重,我们将在第6章中将其加载到我们的实现中。GPT-3在模型架构方面基本相同,只是它从GPT-2的15亿参数扩展到了GPT-3的1750亿参数,并且在更多数据上进行了训练。截至撰写本文时,GPT-3的权重尚未公开。GPT-2也是学习如何实现LLM的更好选择,因为它可以在单台笔记本电脑上运行,而GPT-3则需要GPU集群进行训练和推理。根据Lambda Labs的数据,在单个V100数据中心GPU上训练GPT-3需要355年,而在消费级RTX 8000 GPU上则需要665年。

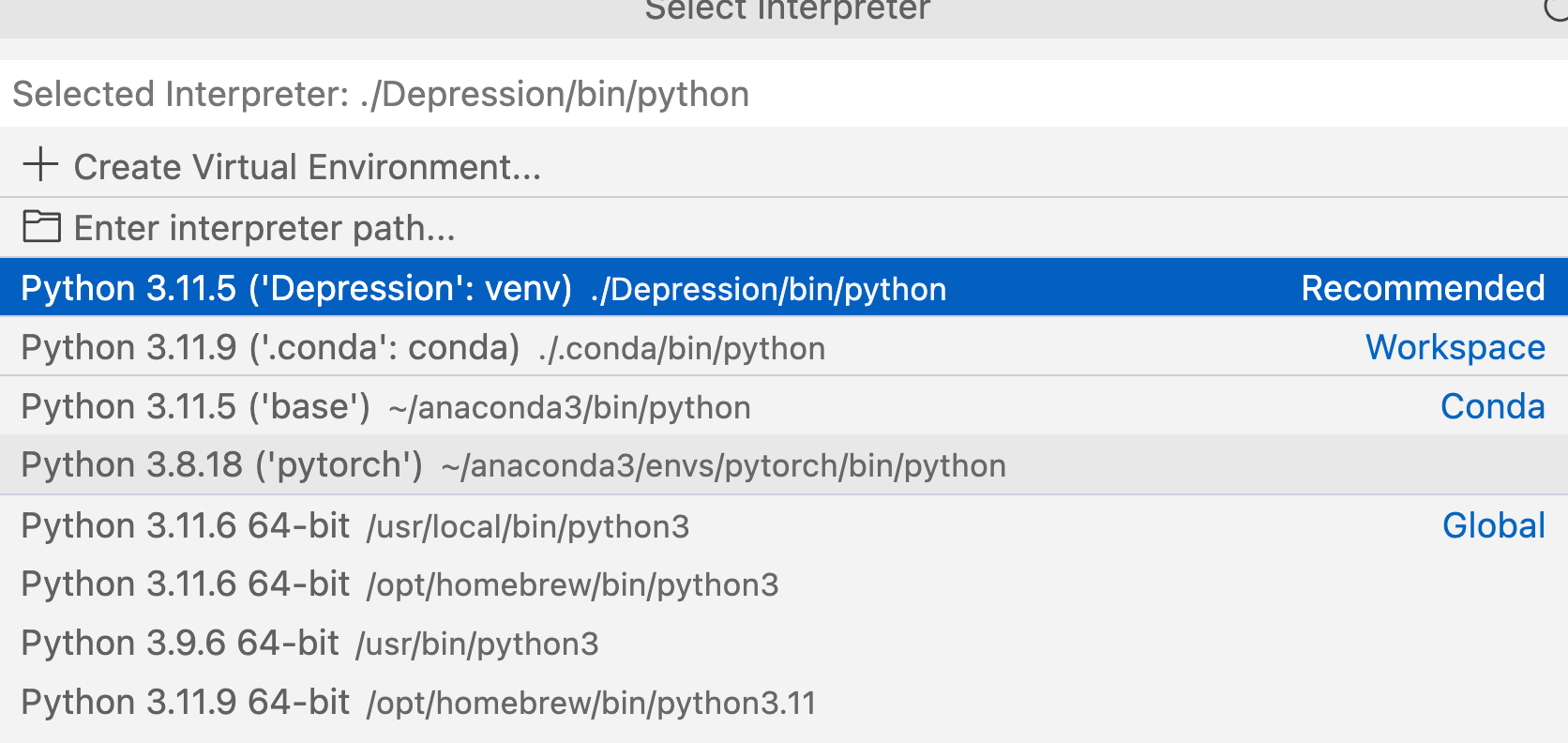

We specify the configuration of the small GPT-2 model via the following Python dictionary, which we will use in the code examples later:

我们通过以下Python字典指定小型GPT-2模型的配置,我们将在后面的代码示例中使用它:

GPT_CONFIG_124M = {
    "vocab_size": 50257,     # Vocabulary size
    "context_length": 1024,  # Context length
    "emb_dim": 768,          # Embedding dimension
    "n_heads": 12,           # Number of attention heads
    "n_layers": 12,          # Number of layers
    "drop_rate": 0.1,        # Dropout rate
    "qkv_bias": False        # Query-key-value bias
}

In the GPT_CONFIG_124M dictionary, we use concise variable names for clarity and to prevent long lines of code:

在GPT_CONFIG_124M字典中,我们使用简洁的变量名以清晰表达并防止代码行过长:

- “vocab_size” refers to a vocabulary of 50,257 tokens, as used by the BPE tokenizer from chapter 2.

- "vocab_size"指的是包含50,257个词元的词汇表,由第2章的BPE分词器使用。

- “context_length” denotes the maximum number of input tokens the model can handle, via the positional embeddings discussed in chapter 2.

- “context_length” 表示模型可以处理的最大输入词元数,通过第2章讨论的位置嵌入实现。

- “emb_dim” represents the embedding size, transforming each token into a 768-dimensional vector.

- “emb_dim” 表示嵌入大小,将每个词元转换为768维向量。

- “n_heads” indicates the count of attention heads in the multi-head attention mechanism, as implemented in chapter 3.

- “n_heads” 表示多头注意力机制中的注意力头数,如第3章实现。

- “n_layers” specifies the number of transformer blocks in the model, which will be elaborated on in upcoming sections.

- “n_layers” 指定模型中的transformer块的数量,这将在后续章节中详细说明。

- “drop_rate” indicates the intensity of the dropout mechanism (0.1 implies a 10% drop of hidden units) to prevent overfitting, as covered in chapter 3.

- “drop_rate” 表示丢弃机制的强度(0.1表示丢弃10%的隐藏单元)以防止过拟合,如第3章所述。

- “qkv_bias” determines whether to include a bias vector in the linear layers of the multi-head attention for query, key, and value computations. We will initially disable this, following the norms of modern LLMs, but will revisit it in chapter 6 when we load pretrained GPT-2 weights from OpenAI into our model.

- “qkv_bias” 决定是否在多头注意力的查询、键和值计算的线性层中包含偏置向量。我们最初将禁用此功能,遵循现代LLM的规范,但将在第6章中加载OpenAI预训练的GPT-2权重到我们的模型中时重新审视它。
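To make the configuration values more concrete, the following plain-Python sketch derives a few quantities from them. The names `head_dim`, `tok_emb_params`, and `pos_emb_params` are hypothetical helpers introduced here, and the even split of the embedding across heads is an assumption based on how multi-head attention is commonly implemented.

```python
# Configuration values copied from the dictionary in the text.
GPT_CONFIG_124M = {
    "vocab_size": 50257,
    "context_length": 1024,
    "emb_dim": 768,
    "n_heads": 12,
    "n_layers": 12,
    "drop_rate": 0.1,
    "qkv_bias": False,
}
cfg = GPT_CONFIG_124M

# Assumption: the embedding is split evenly across the attention heads.
assert cfg["emb_dim"] % cfg["n_heads"] == 0
head_dim = cfg["emb_dim"] // cfg["n_heads"]

# One embedding vector per vocabulary entry and one per position.
tok_emb_params = cfg["vocab_size"] * cfg["emb_dim"]
pos_emb_params = cfg["context_length"] * cfg["emb_dim"]

print("Dimensions per head:", head_dim)                      # 64
print(f"Token embedding weights: {tok_emb_params:,}")        # 38,597,376
print(f"Positional embedding weights: {pos_emb_params:,}")   # 786,432
```

The token embedding table alone accounts for roughly 38.6 million weights, a sizable share of the 124 million total.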

Using the configuration above, we will start this chapter by implementing a GPT placeholder architecture (DummyGPTModel) in this section, as shown in Figure 4.3. This will provide us with a big-picture view of how everything fits together and what other components we need to code in the upcoming sections to assemble the full GPT model architecture.

使用上述配置,我们将在本节开始实现一个GPT占位架构(DummyGPTModel),如图4.3所示。这将为我们提供一个整体视图,展示所有部分如何组合在一起,以及在接下来的部分中组装完整的GPT模型架构所需的其他组件。

Figure 4.3 A mental model outlining the order in which we code the GPT architecture. In this chapter, we will start with the GPT backbone, a placeholder architecture, before we get to the individual core pieces and eventually assemble them in a transformer block for the final GPT architecture.

图4.3 描述了我们编写GPT架构的顺序的心智模型。在本章中,我们将从GPT骨干开始,这是一个占位架构,然后再逐步实现各个核心部分,并最终将它们组装成transformer块以构建最终的GPT架构。

The numbered boxes shown in Figure 4.3 illustrate the order in which we tackle the individual concepts required to code the final GPT architecture. We will start with step 1, a placeholder GPT backbone we call DummyGPTModel:

图4.3中显示的编号框说明了我们处理编写最终GPT架构所需的各个概念的顺序。我们将从第1步开始,这是我们称之为DummyGPTModel的占位GPT骨干:

Listing 4.1 A placeholder GPT model architecture class

import torch
import torch.nn as nn


class DummyGPTModel(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        self.tok_emb = nn.Embedding(cfg["vocab_size"], cfg["emb_dim"])      # token embedding layer
        self.pos_emb = nn.Embedding(cfg["context_length"], cfg["emb_dim"])  # positional embedding layer
        self.drop_emb = nn.Dropout(cfg["drop_rate"])                        # dropout layer
        # Sequential container holding the placeholder transformer blocks
        self.trf_blocks = nn.Sequential(
            *[DummyTransformerBlock(cfg) for _ in range(cfg["n_layers"])])
        self.final_norm = DummyLayerNorm(cfg["emb_dim"])  # placeholder layer normalization
        # Linear output layer that maps embeddings to vocabulary-sized logits
        self.out_head = nn.Linear(
            cfg["emb_dim"], cfg["vocab_size"], bias=False)

    def forward(self, in_idx):
        batch_size, seq_len = in_idx.shape
        tok_embeds = self.tok_emb(in_idx)  # look up token embeddings
        pos_embeds = self.pos_emb(torch.arange(seq_len, device=in_idx.device))  # look up positional embeddings
        x = tok_embeds + pos_embeds  # combine both embeddings
        x = self.drop_emb(x)         # apply dropout
        x = self.trf_blocks(x)       # apply the transformer blocks
        x = self.final_norm(x)       # apply the final normalization
        logits = self.out_head(x)    # project to vocabulary size
        return logits


class DummyTransformerBlock(nn.Module):
    # Placeholder that will be replaced by the real transformer block later
    def __init__(self, cfg):
        super().__init__()

    def forward(self, x):
        return x  # returns the input unchanged


class DummyLayerNorm(nn.Module):
    # Placeholder that mimics the LayerNorm interface
    def __init__(self, normalized_shape, eps=1e-5):
        super().__init__()

    def forward(self, x):
        return x  # returns the input unchanged

The DummyGPTModel class in this code defines a simplified version of a GPT-like model using PyTorch’s neural network module (nn.Module). The model architecture in the DummyGPTModel class consists of token and positional embeddings, dropout, a series of transformer blocks (DummyTransformerBlock), a final layer normalization (DummyLayerNorm), and a linear output layer (out_head). The configuration is passed in via a Python dictionary, for instance, the GPT_CONFIG_124M dictionary we created earlier.

这段代码中的DummyGPTModel类定义了一个简化版的类似GPT的模型,使用了PyTorch的神经网络模块(nn.Module)。DummyGPTModel类中的模型架构包括词元和位置嵌入、丢弃层、一系列transformer块(DummyTransformerBlock)、最终层归一化(DummyLayerNorm)和线性输出层(out_head)。配置通过一个Python字典传入,例如我们之前创建的GPT_CONFIG_124M字典。

The forward method describes the data flow through the model: it computes token and positional embeddings for the input indices, applies dropout, processes the data through the transformer blocks, applies normalization, and finally produces logits with the linear output layer.

forward方法描述了数据在模型中的流动:它计算输入索引的词元和位置嵌入,应用丢弃层,通过transformer块处理数据,应用归一化,最后通过线性输出层生成logits。

The code above is already functional, as we will see later in this section after we prepare the input data. However, for now, note in the code above that we have used placeholders (DummyLayerNorm and DummyTransformerBlock) for the transformer block and layer normalization, which we will develop in later sections.

上述代码已经具备功能性,我们将在本节后面准备输入数据时看到这一点。然而,目前请注意,上述代码中我们使用了占位符(DummyLayerNorm和DummyTransformerBlock)来表示transformer块和层归一化,我们将在后面的章节中进行开发。

Next, we will prepare the input data and initialize a new GPT model to illustrate its usage. Building on the figures we have seen in chapter 2, where we coded the tokenizer, Figure 4.4 provides a high-level overview of how data flows in and out of a GPT model.

接下来,我们将准备输入数据并初始化一个新的GPT模型来说明其使用方法。基于我们在第2章中看到的代码编写分词器的图,图4.4提供了数据在GPT模型中进出流动的高级概述。

Figure 4.4 A big-picture overview showing how the input data is tokenized, embedded, and fed to the GPT model. Note that in our DummyGPTModel coded earlier, the token embedding is handled inside the GPT model. In LLMs, the embedded input token dimension typically matches the output dimension. The output embeddings here represent the context vectors we discussed in chapter 3.

图4.4 显示了输入数据如何被分词、嵌入并馈送到GPT模型的整体视图。请注意,在我们之前编写的DummyGPTModel中,词元嵌入在GPT模型内部处理。在LLM中,嵌入的输入词元维度通常与输出维度相匹配。这里的输出嵌入表示我们在第3章中讨论的上下文向量。

To implement the steps shown in Figure 4.4, we tokenize a batch consisting of two text inputs for the GPT model using the tokenizer introduced in chapter 2:

为了实现图4.4中显示的步骤,我们使用第2章中介绍的分词器对包含两个文本输入的批处理进行分词,以用于GPT模型:

import tiktoken

tokenizer = tiktoken.get_encoding("gpt2")  # GPT-2 BPE tokenizer
batch = []
txt1 = "Every effort moves you"
txt2 = "Every day holds a"
batch.append(torch.tensor(tokenizer.encode(txt1)))  # encode text 1 and add it to the batch list
batch.append(torch.tensor(tokenizer.encode(txt2)))  # encode text 2 and add it to the batch list
batch = torch.stack(batch, dim=0)  # stack the list into a single 2D tensor
print(batch)

The resulting token IDs for the two texts are as follows:

下面是两个文本对应的词元ID:

tensor([[ 6109, 3626, 6100, 345], #A

[ 6109, 1110, 6622, 257]]) #A

#A The first row corresponds to the first text, and the second row corresponds to the second text

#A 第一行对应第一个文本,第二行对应第二个文本

Next, we initialize a new 124 million parameter DummyGPTModel instance and feed it the tokenized batch:

接下来,我们初始化一个拥有1.24亿参数的DummyGPTModel实例,并将分词后的批处理输入其中:

torch.manual_seed(123)  # fix the random seed for reproducibility
model = DummyGPTModel(GPT_CONFIG_124M)
logits = model(batch)  # forward pass on the tokenized batch
print("Output shape:", logits.shape)
print(logits)

The model outputs, which are commonly referred to as logits, are as follows:

模型输出,即通常所说的logits如下:

Output shape: torch.Size([2, 4, 50257])

tensor([[[-1.2034, 0.3201, -0.7130, ..., -1.5548, -0.2390, -0.4667],

[-0.1192, 0.4539, -0.4432, ..., 0.2392, 1.3469, 1.2430],

[ 0.5307, 1.6720, -0.4695, ..., 1.1966, 0.0111, 0.5835],

[ 0.0139, 1.6755, -0.3388, ..., 1.1586, -0.0435, -1.0400]],

[[-1.9088, 0.1798, -0.9484, ..., -1.6047, 0.2439, -0.4530],

[-0.7860, 0.5581, -0.0610, ..., 0.4835, -0.0077, 1.6621],

[ 0.3567, 1.2698, -0.6398, ..., -0.0162, -0.1296, 0.3771],

[-0.2407, -0.7349, -0.5102, ..., 2.0057, -0.3694, 0.1814]]],

grad_fn=<UnsafeViewBackward0>)

The output tensor has two rows corresponding to the two text samples. Each text sample consists of 4 tokens; each token is a 50,257-dimensional vector, which matches the size of the tokenizer’s vocabulary.

输出张量有两行,对应于两个文本样本。每个文本样本由4个词元组成;每个词元是一个50257维的向量,这与分词器的词汇表大小相匹配。

Each output vector has 50,257 dimensions because each of these dimensions refers to a unique token in the vocabulary. At the end of this chapter, when we implement the postprocessing code, we will convert these 50,257-dimensional vectors back into token IDs, which we can then decode into words.

每个输出向量有50257个维度,因为这些维度中的每一个都对应于词汇表中的一个唯一词元。在本章末尾,当我们实现后处理代码时,我们将把这些50257维向量转换回词元ID,然后我们可以将其解码为单词。
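As a rough illustration of how a vocabulary-sized output vector can be turned back into a token ID, the sketch below picks the index of the largest value in each vector. This greedy selection is only one simple option and is assumed here for illustration; the toy logits and the 5-token vocabulary are made up, and the `argmax` helper is hypothetical.

```python
def argmax(values):
    """Return the index of the largest entry in a list of numbers."""
    return max(range(len(values)), key=lambda i: values[i])


# Toy logits for 3 token positions over a hypothetical 5-token vocabulary.
logits = [
    [-1.2, 0.3, 2.1, -0.7, 0.0],
    [0.5, 1.7, -0.4, 1.2, 0.6],
    [0.0, -0.3, -0.5, 2.0, 0.2],
]

# Each position maps to the vocabulary index with the highest score.
token_ids = [argmax(row) for row in logits]
print(token_ids)  # [2, 1, 3]
```

With the real model, each row would have 50,257 entries instead of 5, and the resulting IDs could be passed to the tokenizer's decode step.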

Now that we have taken a top-down look at the GPT architecture and its inputs and outputs, we will code the individual placeholders in the upcoming sections, starting with the real layer normalization class that will replace the DummyLayerNorm in the previous code.

现在我们已经从上到下看了一下GPT的架构及其输入和输出,我们将在接下来的部分中编写各个占位符,从真实的层归一化类开始,以替换之前代码中的DummyLayerNorm。

4.2 Normalizing activations with layer normalization

Training deep neural networks with many layers can sometimes prove challenging due to issues like vanishing or exploding gradients. These issues lead to unstable training dynamics and make it difficult for the network to effectively adjust its weights, which means the learning process struggles to find a set of parameters (weights) for the neural network that minimizes the loss function. In other words, the network has difficulty learning the underlying patterns in the data to a degree that would allow it to make accurate predictions or decisions. (If you are new to neural network training and the concepts of gradients, a brief introduction to these concepts can be found in Section A.4, Automatic Differentiation Made Easy in Appendix A: Introduction to PyTorch. However, a deep mathematical understanding of gradients is not required to follow the contents of this book.)

用许多层训练深度神经网络有时会因梯度消失或梯度爆炸等问题而变得具有挑战性。这些问题导致训练动态不稳定,并且难以使网络有效调整其权重,这意味着学习过程难以找到一组参数(权重),以使神经网络最小化损失函数。换句话说,网络难以学习数据中的基本模式,难以进行准确的预测或决策。(如果您是神经网络训练和梯度概念的新手,可以在附录A的第A.4节《自动微分简易》中找到这些概念的简要介绍。然而,要理解本书内容并不需要对梯度有深入的数学理解。)

In this section, we will implement layer normalization to improve the stability and efficiency of neural network training.

在本节中,我们将实现层归一化,以提高神经网络训练的稳定性和效率。

The main idea behind layer normalization is to adjust the activations (outputs) of a neural network layer to have a mean of 0 and a variance of 1, also known as unit variance. This adjustment speeds up the convergence to effective weights and ensures consistent, reliable training. As we have seen in the previous section, based on the DummyLayerNorm placeholder, in GPT-2 and modern transformer architectures, layer normalization is typically applied before and after the multi-head attention module and before the final output layer.

层归一化的主要思想是调整神经网络层的激活(输出),使其均值为0,方差为1,也称为单位方差。此调整加速了有效权重的收敛,并确保一致可靠的训练。正如我们在前一节中所看到的,基于DummyLayerNorm占位符,在GPT-2和现代transformer架构中,层归一化通常应用于多头注意力模块之前和之后以及最终输出层之前。

Before we implement layer normalization in code, Figure 4.5 provides a visual overview of how layer normalization functions.

在我们用代码实现层归一化之前,图4.5提供了层归一化功能的视觉概述。

Figure 4.5 An illustration of layer normalization where the 5 layer outputs, also called activations, are normalized such that they have a zero mean and variance of 1.

图4.5 层归一化的示意图,其中5个层输出(也称为激活)被归一化,使它们的均值为0,方差为1。

We can recreate the example shown in Figure 4.5 via the following code, where we implement a neural network layer with 5 inputs and 6 outputs that we apply to two input examples:

我们可以通过以下代码重现图4.5中显示的示例,其中我们实现了一个具有5个输入和6个输出的神经网络层,并将其应用于两个输入示例:

torch.manual_seed(123)  # fix the random seed for reproducibility
batch_example = torch.randn(2, 5)  # two input examples with 5 features each
layer = nn.Sequential(nn.Linear(5, 6), nn.ReLU())  # linear layer followed by a ReLU activation
out = layer(batch_example)
print(out)

This prints the following tensor, where the first row lists the layer outputs for the first input and the second row lists the layer outputs for the second input:

这将打印以下张量,其中第一行列出第一个输入的层输出,第二行列出第二个输入的层输出:

tensor([[0.2260, 0.3470, 0.0000, 0.2216, 0.0000, 0.0000],

[0.2133, 0.2394, 0.0000, 0.5198, 0.3297, 0.0000]],

grad_fn=<ReluBackward0>)

The neural network layer we have coded consists of a Linear layer followed by a non-linear activation function, ReLU (short for Rectified Linear Unit), which is a standard activation function in neural networks. If you are unfamiliar with ReLU, it simply thresholds negative inputs to 0, ensuring that a layer outputs only non-negative values, which explains why the resulting layer output does not contain any negative values. (Note that we will use another, more sophisticated activation function in GPT, which we will introduce in the next section.)

我们编写的神经网络层由一个线性层和一个非线性激活函数ReLU(整流线性单元的缩写)组成,这是神经网络中的标准激活函数。如果您不熟悉ReLU,它只是将负输入设为0,确保层只输出非负值,这解释了为什么生成的层输出不包含任何负值。(请注意,我们将在GPT中使用另一种更复杂的激活函数,我们将在下一节中介绍它。)

Before we apply layer normalization to these outputs, let’s examine the mean and variance:

在我们对这些输出应用层归一化之前,让我们检查一下均值和方差:

mean = out.mean(dim=-1, keepdim=True)  # mean of each row
var = out.var(dim=-1, keepdim=True)    # variance of each row
print("Mean:\n", mean)
print("Variance:\n", var)

The output is as follows:

输出如下:

Mean:

tensor([[0.1324],

[0.2170]], grad_fn=<MeanBackward1>)

Variance:

tensor([[0.0231],

[0.0398]], grad_fn=<VarBackward0>)

The first row in the mean tensor above contains the mean value for the first input row, and the second output row contains the mean for the second input row.

上面均值张量的第一行包含第一个输入行的均值,第二输出行包含第二个输入行的均值。

Using keepdim=True in operations like mean or variance calculation ensures that the output tensor retains the same number of dimensions as the input tensor, even though the operation reduces the tensor along the dimension specified via dim. For instance, without keepdim=True, the returned mean tensor would be a one-dimensional tensor with two elements, [0.1324, 0.2170], instead of a 2x1 matrix [[0.1324], [0.2170]].

在计算均值或方差等操作中使用keepdim=True可以确保输出张量保持与输入张量相同的维度数,即使操作在指定的维度上减少了张量。例如,如果不使用keepdim=True,返回的均值张量将是一个包含两个元素的一维张量[0.1324, 0.2170],而不是一个2x1的矩阵[[0.1324], [0.2170]]。

The dim parameter specifies the dimension along which the calculation of the statistic (here, mean or variance) should be performed in a tensor, as shown in Figure 4.6.

dim参数指定统计量(这里是均值或方差)应在张量的哪个维度上进行计算,如图4.6所示。

Figure 4.6 An illustration of the dim parameter when calculating the mean of a tensor. For instance, if we have a 2D tensor (matrix) with dimensions [rows, columns], using dim=0 will perform the operation across rows (vertically, as shown at the bottom), resulting in an output that aggregates the data for each column. Using dim=1 or dim=-1 will perform the operation across columns (horizontally, as shown at the top), resulting in an output aggregating the data for each row.

图4.6 计算张量均值时dim参数的示意图。例如,如果我们有一个二维张量(矩阵),其维度为[行,列],使用dim=0将在行之间执行操作(垂直,如底部所示),产生一个汇总每列数据的输出。使用dim=1或dim=-1将在列之间执行操作(水平,如顶部所示),产生一个汇总每行数据的输出。

As Figure 4.6 explains, for a 2D tensor (like a matrix), using dim=-1 for operations such as mean or variance calculation is the same as using dim=1. This is because -1 refers to the tensor’s last dimension, which corresponds to the columns in a 2D tensor. Later, when adding layer normalization to the GPT model, which produces 3D tensors with shape [batch_size, num_tokens, embedding_size], we can still use dim=-1 for normalization across the last dimension, avoiding a change from dim=1 to dim=2.

如图4.6所述,对于二维张量(如矩阵),使用dim=-1进行均值或方差计算等操作与使用dim=1相同。这是因为-1指的是张量的最后一个维度,对应于二维张量中的列。稍后,当将层归一化添加到GPT模型中时,该模型生成形状为[batch_size, num_tokens, embedding_size]的三维张量,我们仍然可以使用dim=-1进行最后一个维度的归一化,避免从dim=1变为dim=2。
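The effect of the dim and keepdim arguments can be mimicked with nested Python lists. The sketch below is only an analogue written without PyTorch; the small matrix and the `mean` helper are made up for illustration.

```python
# A 2 x 3 matrix: 2 rows, 3 columns.
matrix = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
]


def mean(values):
    """Arithmetic mean of a sequence of numbers."""
    return sum(values) / len(values)


# Analogue of dim=0: aggregate down the rows, giving one result per column.
mean_dim0 = [mean(col) for col in zip(*matrix)]

# Analogue of dim=1 or dim=-1: aggregate across the columns, one result per row.
mean_dim_last = [mean(row) for row in matrix]

# Analogue of keepdim=True: keep a length-1 inner list per row (2 x 1 layout).
mean_keepdim = [[m] for m in mean_dim_last]

print(mean_dim0)      # [2.5, 3.5, 4.5]
print(mean_dim_last)  # [2.0, 5.0]
print(mean_keepdim)   # [[2.0], [5.0]]
```

The 2 x 1 layout lines up with the rows of the original matrix, which is what makes row-wise subtraction of the mean straightforward.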

Next, let us apply layer normalization to the layer outputs we obtained earlier. The operation consists of subtracting the mean and dividing by the square root of the variance (also known as standard deviation):

接下来,让我们对先前获得的层输出应用层归一化。操作包括减去均值并除以方差的平方根(也称为标准差):

out_norm = (out - mean) / torch.sqrt(var)    # subtract the mean, divide by the standard deviation
mean = out_norm.mean(dim=-1, keepdim=True)   # mean of the normalized outputs
var = out_norm.var(dim=-1, keepdim=True)     # variance of the normalized outputs
print("Normalized layer outputs:\n", out_norm)
print("Mean:\n", mean)
print("Variance:\n", var)

As we can see based on the results, the normalized layer outputs, which now also contain negative values, have zero mean and a variance of 1:

根据结果,我们可以看到,标准化的层输出现在也包含负值,其均值为零,方差为1:

Normalized layer outputs:

tensor([[ 0.6159, 1.4126, -0.8719, 0.5872, -0.8719, -0.8719],

[-0.0189, 0.1121, -1.0876, 1.5173, 0.5647, -1.0876]],

grad_fn=<DivBackward0>)

Mean:

tensor([[2.9802e-08],

[3.9736e-08]], grad_fn=<MeanBackward1>)

Variance:

tensor([[1.],

[1.]], grad_fn=<VarBackward0>)

Note that the value 2.9802e-08 in the output tensor is the scientific notation for 2.9802 × 10^-8, which is 0.0000000298 in decimal form. This value is very close to 0, but it is not exactly 0 due to small numerical errors that can accumulate because of the finite precision with which computers represent numbers.

请注意,输出张量中的值2.9802e-08是2.9802 × 10^-8的科学记数法,即小数形式的0.0000000298。这个值非常接近0,但由于计算机表示数字的有限精度,会累积一些小的数值误差,因此不完全是0。
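A short plain-Python sketch can make the finite-precision point tangible. It assumes the tensors above use 32-bit floats (PyTorch's default floating-point type) and emulates that precision with the standard `struct` module; the `to_float32` helper is hypothetical.

```python
import struct


def to_float32(value):
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", value))[0]


# 0.1 has no exact binary representation, so rounding it to 32 bits
# gives a slightly different number than the 64-bit version.
print(to_float32(0.1) == 0.1)  # False

# Even in 64-bit arithmetic, small rounding errors show up.
print(0.1 + 0.2 == 0.3)  # False

# The residual 2.9802e-08 seen above coincides with 2 to the power of -25,
# a typical size for 32-bit rounding residue around values of order 1.
print(f"{2.0 ** -25:.4e}")  # 2.9802e-08
```

Residues of this size are expected and harmless for the normalization shown here.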

To improve readability, we can also turn off the scientific notation when printing tensor values by setting sci_mode to False:

为了提高可读性,我们也可以通过将sci_mode设置为False来关闭打印张量值时的科学记数法:

torch.set_printoptions(sci_mode=False)  # print without scientific notation
print("Mean:\n", mean)
print("Variance:\n", var)

The output is as follows:

输出如下:

Mean:

tensor([[0.0000],

[0.0000]], grad_fn=<MeanBackward1>)

Variance:

tensor([[1.],

[1.]], grad_fn=<VarBackward0>)
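The normalization can also be reproduced by hand for a single row, which is a useful sanity check. The sketch below uses only the standard library and takes the first row of the layer outputs printed earlier, rounded to four decimals, so the results agree with the tensors above only up to rounding.

```python
import math
import statistics

# First row of the layer outputs printed earlier (rounded to four decimals).
row = [0.2260, 0.3470, 0.0000, 0.2216, 0.0000, 0.0000]

mean = statistics.mean(row)
# statistics.variance divides by n - 1, which matches the default behavior
# of torch.var used in the step-by-step code above.
var = statistics.variance(row)

# Subtract the mean and divide by the standard deviation.
normalized = [(value - mean) / math.sqrt(var) for value in row]

print("Normalized:", [round(v, 4) for v in normalized])
print("Mean:", round(statistics.mean(normalized), 6))
print("Variance:", round(statistics.variance(normalized), 6))
```

After normalization the row has a mean of (numerically) zero and a variance of one, and its zero entries map to roughly -0.87, as in the tensor output.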

So far, in this section, we have coded and applied layer normalization in a step-by-step process. Let’s now encapsulate this process in a PyTorch module that we can use in the GPT model later:

到目前为止,在本节中,我们已经逐步编写和应用了层归一化。现在让我们将此过程封装在一个PyTorch模块中,以便我们稍后在GPT模型中使用:

Listing 4.2 A layer normalization class

清单 4.2 层标准化类

class LayerNorm(nn.Module):
    def __init__(self, emb_dim):
        super().__init__()
        self.eps = 1e-5  # small constant that prevents division by zero
        self.scale = nn.Parameter(torch.ones(emb_dim))   # trainable scale
        self.shift = nn.Parameter(torch.zeros(emb_dim))  # trainable shift

    def forward(self, x):
        mean = x.mean(dim=-1, keepdim=True)                # mean over the last dimension
        var = x.var(dim=-1, keepdim=True, unbiased=False)  # biased variance (divides by n)
        norm_x = (x - mean) / torch.sqrt(var + self.eps)   # normalize
        return self.scale * norm_x + self.shift            # scale and shift

This specific implementation of layer normalization operates on the last dimension of the input tensor x, which represents the embedding dimension (emb_dim). The variable eps is a small constant (epsilon) added to the variance to prevent division by zero during normalization. The scale and shift are two trainable parameters (of the same dimension as the input) that the LLM automatically adjusts during training if it is determined that doing so would improve the model’s performance on its training task. This allows the model to learn appropriate scaling and shifting that best suit the data it is processing.

这种具体的层归一化实现操作于输入张量x的最后一个维度,即嵌入维度(emb_dim)。变量eps是一个小常数(epsilon),在归一化期间添加到方差中以防止除零。scale和shift是两个可训练的参数(与输入维度相同),如果确定这样做可以提高模型在其训练任务上的性能,LLM会在训练期间自动调整这些参数。这允许模型学习适当的缩放和移位,以最佳适应其正在处理的数据。
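The role of eps, scale, and shift can be illustrated with a plain-Python analogue of the forward pass. This is a simplified sketch, not the LayerNorm class itself: it handles a single vector, and scalar `scale` and `shift` values stand in for the per-dimension trainable parameters.

```python
import math


def layer_norm(values, scale=1.0, shift=0.0, eps=1e-5):
    """Normalize one vector, then apply a scalar scale and shift."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased variance (divides by n)
    return [scale * (v - mean) / math.sqrt(var + eps) + shift for v in values]


# A constant vector has a variance of exactly 0.
constant = [2.0, 2.0, 2.0, 2.0]
print(layer_norm(constant))  # [0.0, 0.0, 0.0, 0.0]

# Without eps, the same input divides zero by zero. Plain Python raises an
# error here (PyTorch tensors would produce nan values instead).
try:
    layer_norm(constant, eps=0.0)
except ZeroDivisionError:
    print("eps=0.0 leads to a division by zero")

# scale and shift stretch and move the normalized values.
print(layer_norm([1.0, 2.0, 3.0], scale=2.0, shift=1.0))
```

With the default scale of 1 and shift of 0, the function reduces to pure normalization, which mirrors how the LayerNorm class initializes its parameters.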

BIASED VARIANCE

偏差方差

In our variance calculation, we set unbiased=False. For those curious about what this means: we divide by the number of inputs n in the variance formula. This approach does not apply Bessel’s correction, which uses n-1 instead of n in the denominator to adjust for bias in sample variance estimation, so it yields a so-called biased estimate of the variance. For large language models (LLMs), where the embedding dimension n is large, the difference between using n and n-1 is practically negligible. We chose this approach to ensure compatibility with the GPT-2 model’s normalization layers and because it reflects TensorFlow’s default behavior, which was used to implement the original GPT-2 model. Using a similar setting ensures our method is compatible with the pretrained weights we will load in chapter 6.

在我们的方差计算方法中,我们通过设置unbiased=False选择了一种实现细节。对于那些好奇这意味着什么的人,在方差计算中,我们在方差公式中除以输入数n。这种方法不适用贝塞尔校正,后者通常使用n-1而不是n作为分母来调整样本方差估计中的偏差。这个决定导致了所谓的偏差方差估计。对于大规模语言模型(LLM),其中嵌入维度n显著大,使用n和n-1之间的差异几乎可以忽略不计。我们选择这种方法是为了确保与GPT-2模型的归一化层兼容,并且因为它反映了实现原始GPT-2模型的TensorFlow的默认行为。使用类似设置可以确保我们的方法与我们将在第6章中加载的预训练权重兼容。
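To see how small the difference between the two variance estimates is at GPT-2's embedding size, the following sketch compares them on random data using the standard library. The random values are made up for illustration; only the dimension n = 768 comes from the configuration above.

```python
import random
import statistics

random.seed(123)
n = 768  # embedding dimension of the small GPT-2 configuration
values = [random.gauss(0.0, 1.0) for _ in range(n)]

biased = statistics.pvariance(values)   # divides by n (like unbiased=False)
unbiased = statistics.variance(values)  # divides by n - 1 (Bessel's correction)

print(f"Biased variance:   {biased:.6f}")
print(f"Unbiased variance: {unbiased:.6f}")
# The two estimates differ by exactly the factor (n - 1) / n.
print(f"Ratio: {biased / unbiased:.6f}")  # 0.998698
```

A relative difference of about 0.13% is far smaller than the variation between inputs, which is why the choice matters mainly for matching the pretrained weights.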

Let’s now try the LayerNorm module in practice and apply it to the batch input:

现在让我们实际尝试LayerNorm模块并将其应用于批处理输入:

ln = LayerNorm(emb_dim=5)
out_ln = ln(batch_example)  # apply LayerNorm to the batch example
mean = out_ln.mean(dim=-1, keepdim=True)                # mean of the normalized outputs
var = out_ln.var(dim=-1, unbiased=False, keepdim=True)  # variance of the normalized outputs
print("Mean:\n", mean)
print("Variance:\n", var)

As we can see based on the results, the layer normalization code works as expected and normalizes the values of each of the two inputs such that they have a mean of 0 and a variance of 1:

根据结果,我们可以看到,层归一化代码按预期工作,并且归一化了每个输入的值,使它们的均值为0,方差为1:

Mean:

tensor([[-0.0000],

[ 0.0000]], grad_fn=<MeanBackward1>)

Variance:

tensor([[1.0000],

[1.0000]], grad_fn=<VarBackward0>)

In this section, we covered one of the building blocks we will need to implement the GPT architecture, as shown in the mental model in Figure 4.7.

在本节中,我们介绍了实现GPT架构所需的一个构建模块,如图4.7中的心智模型所示。

Figure 4.7 A mental model listing the different building blocks we implement in this chapter to assemble the GPT architecture.

图4.7 列出了我们在本章中实现的不同构建模块,以组装GPT架构的心智模型。

In the next section, we will look at the GELU activation function, which is one of the activation functions used in LLMs, instead of the traditional ReLU function we used in this section.

在下一节中,我们将研究GELU激活函数,这是LLM中使用的激活函数之一,而不是我们在本节中使用的传统ReLU函数。

LAYER NORMALIZATION VERSUS BATCH NORMALIZATION

层归一化与批量归一化

If you are familiar with batch normalization, a common and traditional normalization method for neural networks, you may wonder how it compares to layer normalization. Unlike batch normalization, which normalizes across the batch dimension, layer normalization normalizes across the feature dimension. LLMs often require significant computational resources, and the available hardware or the specific use case can dictate the batch size during training or inference. Since layer normalization normalizes each input independently of the batch size, it offers more flexibility and stability in these scenarios. This is particularly beneficial for distributed training or when deploying models in environments where resources are constrained.

如果你熟悉批归一化,这是一种常见且传统的神经网络归一化方法,你可能会想知道它与层归一化相比如何。与批归一化不同,批归一化在批次维度上进行归一化,而层归一化在特征维度上进行归一化。LLM通常需要大量的计算资源,可用的硬件或特定的用例可以决定训练或推理期间的批大小。由于层归一化独立于批大小归一化每个输入,因此在这些情况下提供了更多的灵活性和稳定性。这对于分布式训练或在资源受限的环境中部署模型特别有利。
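The difference between the two normalization directions can be sketched in plain Python. The batch normalization below is deliberately simplified (batch statistics only, with no running averages or trainable parameters), and all function names and numbers are made up for illustration.

```python
import math


def normalize(values, eps=1e-5):
    """Shift to zero mean and scale to (approximately) unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def layer_norm(batch):
    # Normalize each row (one sample) across its features.
    return [normalize(row) for row in batch]


def batch_norm(batch):
    # Normalize each column (one feature) across the samples in the batch.
    columns = [normalize(list(col)) for col in zip(*batch)]
    return [list(row) for row in zip(*columns)]


sample = [1.0, 2.0, 6.0]
small_batch = [sample, [0.0, 4.0, 2.0]]
large_batch = small_batch + [[9.0, -3.0, 5.0]]

# Layer normalization of a sample does not depend on the rest of the batch.
print(layer_norm(small_batch)[0] == layer_norm(large_batch)[0])  # True

# Batch normalization of the same sample changes with the batch contents.
print(batch_norm(small_batch)[0] == batch_norm(large_batch)[0])  # False
```

Because each sample is normalized on its own, layer normalization behaves identically for a batch size of 1 and for large batches.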

4.3 Implementing a feed forward network with GELU activations

In this section, we implement a small neural network submodule that is used as part of the transformer block in LLMs. We begin with implementing the GELU activation function, which plays a crucial role in this neural network submodule. (For additional information on implementing neural networks in PyTorch, please see section A.5 Implementing multilayer neural networks in Appendix A.)

在本节中,我们实现了一个小型神经网络子模块,作为LLM中transformer块的一部分。我们从实现GELU激活函数开始,它在这个神经网络子模块中起着至关重要的作用。(有关在PyTorch中实现神经网络的更多信息,请参见附录A的第A.5节《实现多层神经网络》。)

Historically, the ReLU activation function has been commonly used in deep learning due to its simplicity and effectiveness across various neural network architectures. However, in LLMs, several other activation functions are employed beyond the traditional ReLU. Two notable examples are GELU (Gaussian Error Linear Unit) and SwiGLU (Swish-Gated Linear Unit).

历史上,ReLU激活函数由于其简单性和在各种神经网络架构中的有效性而被广泛用于深度学习。然而,在LLM中,除了传统的ReLU之外,还采用了其他几种激活函数。两个显著的例子是GELU(高斯误差线性单元)和SwiGLU(Swish门控线性单元)。

GELU and SwiGLU are more complex, smooth activation functions that incorporate Gaussian and sigmoid-gated linear units, respectively. Unlike the simpler ReLU, they can offer improved performance for deep learning models.

GELU和SwiGLU是更复杂和平滑的激活函数,分别结合了高斯和sigmoid门控线性单元。与简单的ReLU不同,它们为深度学习模型提供了更好的性能。

The GELU activation function can be implemented in several ways; the exact version is defined as GELU(x)=x·Φ(x), where Φ(x) is the cumulative distribution function of the standard Gaussian distribution. In practice, however, it’s common to implement a computationally cheaper approximation (the original GPT-2 model was also trained with this approximation):

GELU激活函数可以通过多种方式实现;其确切版本定义为GELU(x)=x·Φ(x),其中Φ(x)是标准高斯分布的累积分布函数。然而,在实践中,通常实现一种计算成本较低的近似(最初的GPT-2模型也是用这种近似训练的):

$$\text{GELU}(x) \approx 0.5 \cdot x \cdot \left(1 + \tanh\left[\sqrt{\frac{2}{\pi}} \cdot \left(x + 0.044715 \cdot x^3\right)\right]\right)$$

In code, we can implement this function as a PyTorch module as follows:

在代码中,我们可以将此函数实现为PyTorch模块,如下所示:

Listing 4.3 An implementation of the GELU activation function

清单 4.3 GELU 激活函数的实现

class GELU(nn.Module):  # GELU activation implemented as a PyTorch module
    def __init__(self):
        super().__init__()  # Call the parent class initializer

    def forward(self, x):
        # Tanh-based approximation of GELU
        return 0.5 * x * (1 + torch.tanh(
            torch.sqrt(torch.tensor(2.0 / torch.pi)) *
            (x + 0.044715 * torch.pow(x, 3))
        ))
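As a quick sanity check on how close the approximation is to the exact definition GELU(x)=x·Φ(x), the following sketch compares the two over the interval from -3 to 3. It uses only Python's `math` module rather than PyTorch; the function names `gelu_exact` and `gelu_tanh` are our own and not part of any library.

```python
import math

def gelu_exact(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_tanh(x):
    """Tanh approximation, the same formula used in Listing 4.3."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

xs = [i / 10 for i in range(-30, 31)]  # -3.0, -2.9, ..., 3.0
max_abs_diff = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in xs)
print(f"Largest absolute difference on [-3, 3]: {max_abs_diff:.6f}")
```

On this grid, the two versions should agree to within a small fraction of a percent of the function's range, which is why the cheaper formula is a reasonable substitute in practice.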

Next, to get an idea of what this GELU function looks like and how it compares to the ReLU function, let’s plot these functions side by side:

接下来,为了了解这个GELU函数的样子以及它与ReLU函数的比较,让我们将这些函数并排绘制出来:

import matplotlib.pyplot as plt

gelu, relu = GELU(), nn.ReLU()  # Create GELU and ReLU instances

x = torch.linspace(-3, 3, 100)  # Create 100 sample points in the range -3 to 3
y_gelu, y_relu = gelu(x), relu(x)  # Compute the GELU and ReLU outputs
plt.figure(figsize=(8, 3))
for i, (y, label) in enumerate(zip([y_gelu, y_relu], ["GELU", "ReLU"]), 1):
    plt.subplot(1, 2, i)  # One subplot per activation function
    plt.plot(x, y)
    plt.title(f"{label} activation function")
    plt.xlabel("x")
    plt.ylabel(f"{label}(x)")
    plt.grid(True)
plt.tight_layout()
plt.show()

As we can see in the resulting plot in Figure 4.8, ReLU is a piecewise linear function that outputs the input directly if it is positive; otherwise, it outputs zero. GELU is a smooth, non-linear function that approximates ReLU but with a non-zero gradient for negative values.

正如我们在图4.8的结果图中所见,ReLU是一个分段线性函数,如果输入为正,则直接输出输入;否则,输出为零。GELU是一个平滑的非线性函数,它近似于ReLU,但对于负值有一个非零梯度。

Figure 4.8 The output of the GELU and ReLU plots using matplotlib. The x-axis shows the function inputs and the y-axis shows the function outputs.

图4.8 使用matplotlib绘制的GELU和ReLU图的输出。x轴显示函数输入,y轴显示函数输出。

The smoothness of GELU, as shown in Figure 4.8, can lead to better optimization properties during training, as it allows for more nuanced adjustments to the model’s parameters. In contrast, ReLU has a sharp corner at zero, which can sometimes make optimization harder, especially in networks that are very deep or have complex architectures. Moreover, unlike ReLU, which outputs zero for any negative input, GELU allows for a small, non-zero output for negative values. This characteristic means that during the training process, neurons that receive negative input can still contribute to the learning process, albeit to a lesser extent than positive inputs.

如图4.8所示,GELU的平滑性在训练过程中可以导致更好的优化特性,因为它允许对模型参数进行更细致的调整。相比之下,ReLU在零处有一个尖锐的拐角,这有时会使优化变得更困难,尤其是在非常深或具有复杂架构的网络中。此外,与ReLU不同,ReLU对于任何负输入输出为零,GELU允许负值有一个小的非零输出。这一特性意味着在训练过程中,接收负输入的神经元仍然可以为学习过程做出贡献,尽管贡献比正输入小。
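The difference in behavior for negative inputs can be checked numerically. The sketch below is a plain-Python illustration (the `slope` helper is a hypothetical finite-difference estimate, not how PyTorch computes gradients) that compares the slopes of GELU and ReLU at a few points.

```python
import math

def gelu(x):
    """Tanh approximation of GELU, matching the formula in Listing 4.3."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

def relu(x):
    return max(0.0, x)

def slope(f, x, h=1e-5):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (-2.0, -1.0, -0.5, 0.5):
    print(f"x={x:+.1f}  GELU'={slope(gelu, x):+.4f}  ReLU'={slope(relu, x):+.4f}")
```

For the negative inputs, the ReLU slope is exactly zero, whereas the GELU slope is small but non-zero, so a weight feeding such a neuron still receives an update signal. Note that the GELU slope can be slightly negative for sufficiently negative inputs.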

Next, let’s use the GELU function to implement the small neural network module, FeedForward, that we will be using in the LLM’s transformer block later:

接下来,让我们使用GELU函数来实现一个小型神经网络模块FeedForward,我们将在LLM的transformer块中使用它:

Listing 4.4 A feed forward neural network module

清单 4.4 前馈神经网络模块

class FeedForward(nn.Module):  # Feed forward module used inside the transformer block
    def __init__(self, cfg):
        super().__init__()  # Call the parent class initializer
        self.layers = nn.Sequential(
            nn.Linear(cfg["emb_dim"], 4 * cfg["emb_dim"]),  # Expand by a factor of 4
            GELU(),  # GELU activation
            nn.Linear(4 * cfg["emb_dim"], cfg["emb_dim"]),  # Contract back to emb_dim
        )

    def forward(self, x):
        return self.layers(x)

As we can see in the preceding code, the FeedForward module is a small neural network consisting of two Linear layers and a GELU activation function. In the 124 million parameter GPT model, it receives the input batches with tokens that have an embedding size of 768 each via the GPT_CONFIG_124M dictionary where GPT_CONFIG_124M["emb_dim"] = 768.

如前面的代码所示,FeedForward模块是一个由两个线性层和一个GELU激活函数组成的小型神经网络。在拥有1.24亿参数的GPT模型中,它通过GPT_CONFIG_124M字典接收具有嵌入大小为768的词元输入批次,其中GPT_CONFIG_124M[“emb_dim”] = 768。

Figure 4.9 shows how the embedding size is manipulated inside this small feed forward neural network when we pass it some inputs.

图4.9 显示了当我们传递一些输入时,嵌入大小在这个小型前馈神经网络中的操作方式。

Figure 4.9 provides a visual overview of the connections between the layers of the feed forward neural network. It is important to note that this neural network can accommodate variable batch sizes and numbers of tokens in the input. However, the embedding size for each token is determined and fixed when initializing the weights.

图4.9 提供了前馈神经网络层之间连接的视觉概述。重要的是要注意,这个神经网络可以适应输入中的可变批大小和词元数量。然而,每个词元的嵌入大小在初始化权重时是确定和固定的。

Following the example in Figure 4.9, let’s initialize a new FeedForward module with a token embedding size of 768 and feed it a batch input with 2 samples and 3 tokens each:

按照图4.9中的示例,我们初始化一个新的FeedForward模块,其词元嵌入大小为768,并将其输入一个包含2个样本和每个样本3个词元的批处理输入:

ffn = FeedForward(GPT_CONFIG_124M)  # Initialize the FeedForward module
x = torch.rand(2, 3, 768)  # Batch of 2 samples with 3 tokens each, 768 values per token
out = ffn(x)
print(out.shape)

As we can see, the shape of the output tensor is the same as that of the input tensor:

正如我们所见,输出张量的形状与输入张量的形状相同:

torch.Size([2, 3, 768])

The FeedForward module we implemented in this section plays a crucial role in enhancing the model’s ability to learn from and generalize the data. Although the input and output dimensions of this module are the same, it internally expands the embedding dimension into a higher-dimensional space through the first linear layer as illustrated in Figure 4.10. This expansion is followed by a non-linear GELU activation, and then a contraction back to the original dimension with the second linear transformation. Such a design allows for the exploration of a richer representation space.

我们在本节中实现的FeedForward模块在增强模型从数据中学习和泛化数据的能力方面起着至关重要的作用。虽然这个模块的输入和输出维度是相同的,但它通过第一个线性层在内部将嵌入维度扩展到更高维空间,如图4.10所示。这种扩展之后是一个非线性的GELU激活,然后通过第二次线性变换收缩回原始维度。这种设计允许探索更丰富的表示空间。

Figure 4.10 An illustration of the expansion and contraction of the layer outputs in the feed forward neural network. First, the inputs expand by a factor of 4 from 768 to 3072 values. Then, the second layer compresses the 3072 values back into a 768-dimensional representation.

图4.10 图示了前馈神经网络中层输出的扩展和收缩。首先,输入从768值按4倍扩展到3072值。然后,第二层将3072值压缩回768维表示。

Moreover, the uniformity in input and output dimensions simplifies the architecture by enabling the stacking of multiple layers, as we will do later, without the need to adjust dimensions between them, thus making the model more scalable.

此外,输入和输出维度的一致性通过允许多个层的堆叠简化了架构,我们将在稍后进行,而无需调整它们之间的维度,从而使模型更具可扩展性。
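The expansion by a factor of 4 also determines how many parameters the FeedForward module holds. The following back-of-the-envelope sketch counts them for an embedding size of 768, assuming the standard `nn.Linear` layout of one weight matrix plus one bias vector per layer.

```python
emb_dim = 768              # GPT_CONFIG_124M["emb_dim"]
hidden_dim = 4 * emb_dim   # size after the first Linear layer

# Each Linear layer stores an (out x in) weight matrix plus an out-sized bias.
first_layer = emb_dim * hidden_dim + hidden_dim
second_layer = hidden_dim * emb_dim + emb_dim
total = first_layer + second_layer

print(f"Hidden size:       {hidden_dim:,}")
print(f"First Linear:      {first_layer:,}")
print(f"Second Linear:     {second_layer:,}")
print(f"FeedForward total: {total:,}")
```

A single FeedForward module therefore contributes roughly 4.7 million parameters, which adds up quickly once the module is repeated in every transformer block.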

As illustrated in Figure 4.11, we have now implemented most of the LLM’s building blocks.

如图4.11所示,我们现在已经实现了大部分LLM的构建模块。

Figure 4.11 A mental model showing the topics we cover in this chapter, with the black checkmarks indicating those that we have already covered.

图4.11 一个心智模型,显示了我们在本章中涵盖的主题,黑色对勾标记表示我们已经涵盖的部分。

In the next section, we will go over the concept of shortcut connections that we insert between different layers of a neural network, which are important for improving the training performance in deep neural network architectures.

在下一节中,我们将讨论在神经网络的不同层之间插入的shortcut connections的概念,这对于提高深度神经网络架构中的训练性能非常重要。

4.4 Adding shortcut connections

Next, let’s discuss the concept behind shortcut connections, also known as skip or residual connections. Originally, shortcut connections were proposed for deep networks in computer vision (specifically, in residual networks) to mitigate the challenge of vanishing gradients. The vanishing gradient problem refers to the issue where gradients (which guide weight updates during training) become progressively smaller as they propagate backward through the layers, making it difficult to effectively train earlier layers, as illustrated in Figure 4.12.

接下来,让我们讨论shortcut connections背后的概念,也称为跳跃连接或残差连接。最初,shortcut connections是为计算机视觉中的深度网络(特别是在残差网络中)提出的,以减轻梯度消失的挑战。梯度消失问题指的是梯度(在训练期间指导权重更新)在向后传播通过层时逐渐变小,使得很难有效地训练早期层,如图4.12所示。

Figure 4.12 A comparison between a deep neural network consisting of 5 layers without (on the left) and with shortcut connections (on the right). Shortcut connections involve adding the inputs of a layer to its outputs, effectively creating an alternate path that bypasses certain layers. The gradient values shown in the figure denote the mean absolute gradient at each layer, which we will compute in the code example that follows.

图4.12 对比了一个由5层组成的深度神经网络(左侧没有shortcut connections,右侧有shortcut connections)。shortcut connections通过将一层的输入添加到其输出,实际上创建了一条绕过某些层的替代路径。图中所示的梯度表示每层的平均绝对梯度,我们将在接下来的代码示例中计算。

As illustrated in Figure 4.12, a shortcut connection creates an alternative, shorter path for the gradient to flow through the network by skipping one or more layers, which is achieved by adding the output of one layer to the output of a later layer. This is why these connections are also known as skip connections. They play a crucial role in preserving the flow of gradients during the backward pass in training.

如图4.12所示,shortcut connections通过跳过一个或多个层,为梯度在网络中的流动创建了一条替代的、更短的路径,这是通过将一层的输出添加到后面一层的输出来实现的。这就是为什么这些连接也被称为跳跃连接。它们在训练期间的反向传播过程中保持梯度流动方面起着至关重要的作用。
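The effect on the gradient can be seen from the chain rule alone. If a layer computes y = f(x), its local derivative is f'(x), whereas with a shortcut it computes y = x + f(x) and the local derivative becomes 1 + f'(x). The sketch below multiplies these local derivatives through 5 toy one-dimensional layers; the `layer` function and its weight of 0.5 are hypothetical choices for illustration, not the network from Figure 4.12.

```python
import math

def layer(x, w=0.5):
    """A toy one-dimensional layer: scale the input, then squash with tanh."""
    return math.tanh(w * x)

def layer_grad(x, w=0.5):
    """Derivative of layer(x) with respect to x."""
    return w * (1.0 - math.tanh(w * x) ** 2)

def input_gradient(x, num_layers, use_shortcut):
    """Chain-rule product d(output)/d(input) through num_layers toy layers."""
    grad = 1.0
    for _ in range(num_layers):
        local = layer_grad(x)
        if use_shortcut:
            grad *= 1.0 + local   # y = x + f(x)  ->  dy/dx = 1 + f'(x)
            x = x + layer(x)
        else:
            grad *= local         # y = f(x)      ->  dy/dx = f'(x)
            x = layer(x)
    return grad

plain = input_gradient(1.0, num_layers=5, use_shortcut=False)
skip = input_gradient(1.0, num_layers=5, use_shortcut=True)
print(f"Without shortcuts: {plain:.6f}")
print(f"With shortcuts:    {skip:.6f}")
```

Because every local derivative of this toy layer is at most 0.5, the product without shortcuts shrinks geometrically with depth. With shortcuts, each factor is at least 1, so the gradient reaching the input cannot vanish in this simplified setting.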

In the code example below, we implement the neural network shown in Figure 4.12 to see how we can add shortcut connections in the forward method:

在下面的代码示例中,我们实现了图4.12中显示的神经网络,以查看如何在前向方法中添加shortcut connections:

Listing 4.5 A neural network to illustrate shortcut connections

清单 4.5 说明 shortcut connections的神经网络

class ExampleDeepNeuralNetwork(nn.Module):
    def __init__(self, layer_sizes, use_shortcut):
        super().__init__()  # Call the parent class initializer
        self.use_shortcut = use_shortcut  # Whether to add shortcut connections
        self.layers = nn.ModuleList([  # Five layers, each a Linear layer followed by GELU
            nn.Sequential(nn.Linear(layer_sizes[0], layer_sizes[1]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[1], layer_sizes[2]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[2], layer_sizes[3]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[3], layer_sizes[4]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[4], layer_sizes[5]), GELU())
        ])

    def forward(self, x):
        for layer in self.layers:
            layer_output = layer(x)  # Compute the output of the current layer
            # A shortcut can only be applied if input and output shapes match
            if self.use_shortcut and x.shape == layer_output.shape:
                x = x + layer_output
            else:
                x = layer_output
        return x

The code implements a deep neural network with 5 layers, each consisting of a Linear layer and a GELU activation function. In the forward pass, we iteratively pass the input through the layers and optionally add the shortcut connections depicted in Figure 4.12 if the self.use_shortcut attribute is set to True.

该代码实现了一个具有5层的深度神经网络,每层由一个线性层和一个GELU激活函数组成。在前向传播中,我们迭代地通过层传递输入,如果self.use_shortcut属性设置为True,则可以选择性地添加图4.12中描绘的shortcut connections。

Let’s use this code to first initialize a neural network without shortcut connections. Here, each layer will be initialized such that it accepts an example with 3 input values and returns 3 output values. The last layer returns a single output value:

让我们使用这段代码首先初始化一个没有shortcut connections的神经网络。在这里,每层将被初始化为接受一个具有3个输入值的示例并返回3个输出值。最后一层返回一个单一的输出值:

layer_sizes = [3, 3, 3, 3, 3, 1]  # Input size followed by the output size of each layer
sample_input = torch.tensor([[1., 0., -1.]])
torch.manual_seed(123)  # Seed for the initial weights so the results are reproducible
model_without_shortcut = ExampleDeepNeuralNetwork(
    layer_sizes, use_shortcut=False
)

Next, we implement a function that computes the gradients in the model’s backward pass:

接下来,我们实现一个函数来计算模型在反向传播中的梯度:

def print_gradients(model, x):
    # Forward pass
    output = model(x)
    target = torch.tensor([[0.]])

    # Calculate loss based on how close the target and output are
    loss = nn.MSELoss()
    loss = loss(output, target)

    # Backward pass to calculate the gradients
    loss.backward()

    for name, param in model.named_parameters():
        if 'weight' in name:
            # Print the mean absolute gradient of the weights
            print(f"{name} has gradient mean of {param.grad.abs().mean().item()}")

In the preceding code, we specify a loss function that computes how close the model output and a user-specified target (here, for simplicity, the value 0) are. Then, when calling loss.backward(), PyTorch computes the loss gradient for each layer in the model. We can iterate through the weight parameters via model.named_parameters(). Suppose we have a 3×3 weight parameter matrix for a given layer. In that case, this layer will have 3×3 gradient values, and we print the mean absolute gradient of these 3×3 gradient values to obtain a single gradient value per layer to compare the gradients between layers more easily.

在上述代码中,我们指定了一个计算模型输出与用户指定目标(这里为了简化,值为0)之间差距的损失函数。然后,当调用loss.backward()时,PyTorch会计算模型中每一层的损失梯度。我们可以通过model.named_parameters()迭代遍历权重参数。假设我们有一个3×3的权重参数矩阵。此时,这一层将有3×3的梯度值,我们打印这些3×3梯度值的平均绝对梯度,以便更容易比较各层之间的梯度。

In short, the .backward() method is a convenient method in PyTorch that computes loss gradients, which are required during model training, without implementing the math for the gradient calculation ourselves, thereby making working with deep neural networks much more accessible. If you are unfamiliar with the concept of gradients and neural network training, I recommend reading sections A.4, Automatic differentiation made easy, and A.7, A typical training loop, in Appendix A.

简而言之,.backward()方法是PyTorch中计算损失梯度的便捷方法,在模型训练期间需要这些梯度,而无需自己实现梯度计算的数学方法,从而使得使用深度神经网络更容易。如果你不熟悉梯度和神经网络训练的概念,我建议阅读附录A的A.4节《自动微分轻松实现》和A.7节《典型的训练循环》。

Let’s now use the print_gradients function and apply it to the model without skip connections:

现在让我们使用print_gradients函数并将其应用于没有跳跃连接的模型:

print_gradients(model_without_shortcut, sample_input)  # Gradients of the model without shortcuts

The output is as follows:

输出如下:

layers.0.0.weight has gradient mean of 0.0002017358786325169

layers.1.0.weight has gradient mean of 0.0001201116101583466

layers.2.0.weight has gradient mean of 0.0007512046153711182

layers.3.0.weight has gradient mean of 0.001398783664673078

layers.4.0.weight has gradient mean of 0.00504946366387606

As we can see based on the output of the print_gradients function, the gradients become smaller as we progress from the last layer (layers.4) to the first layer (layers.0), which is a phenomenon called the vanishing gradient problem.

正如我们在print_gradients函数的输出中所见,梯度从最后一层(layers.4)到第一层(layers.0)逐渐变小,这是一种称为梯度消失的问题。

Let’s now instantiate a model with skip connections and see how it compares:

现在让我们实例化一个带有跳跃连接的模型,看看它的比较结果如何:

torch.manual_seed(123)  # Same seed as before so both models start from the same weights
model_with_shortcut = ExampleDeepNeuralNetwork(
    layer_sizes, use_shortcut=True
)
print_gradients(model_with_shortcut, sample_input)  # Gradients of the model with shortcuts

The output is as follows:

输出如下:

layers.0.0.weight has gradient mean of 0.22169792652130127

layers.1.0.weight has gradient mean of 0.20694105327129364

layers.2.0.weight has gradient mean of 0.32896995544433594

layers.3.0.weight has gradient mean of 0.2665732502937317

layers.4.0.weight has gradient mean of 1.325841822433472

As we can see, based on the output, the last layer (layers.4) still has a larger gradient than the other layers. However, the gradient value stabilizes as we progress towards the first layer (layers.0) and doesn’t shrink to a vanishingly small value.

正如我们所见,根据输出,最后一层(layers.4)的梯度仍然比其他层大。然而,梯度值在接近第一层(layers.0)时趋于稳定,并没有缩小到一个极小的值。

In conclusion, shortcut connections are important for overcoming the limitations posed by the vanishing gradient problem in deep neural networks. Shortcut connections are a core building block of very large models such as LLMs, and they will help facilitate more effective training by ensuring consistent gradient flow across layers when we train the GPT model in the next chapter.

总之,shortcut connections对于克服深度神经网络中梯度消失问题的局限性非常重要。shortcut connections是像LLM这样的大型模型的核心构建块,它们将通过确保层间一致的梯度流动来帮助更有效的训练,在下一章中我们训练GPT模型时将会使用。

After introducing shortcut connections, we will now connect all of the previously covered concepts (layer normalization, GELU activations, feed forward module, and shortcut connections) in a transformer block in the next section, which is the final building block we need to code the GPT architecture.

在介绍了shortcut connections之后,我们现在将在下一节中将前面介绍的所有概念(层归一化、GELU激活、前馈模块和shortcut connections)连接到一个transformer块中,这是我们编码GPT架构所需的最后一个构建块。

4.5 Connecting attention and linear layers in a transformer block

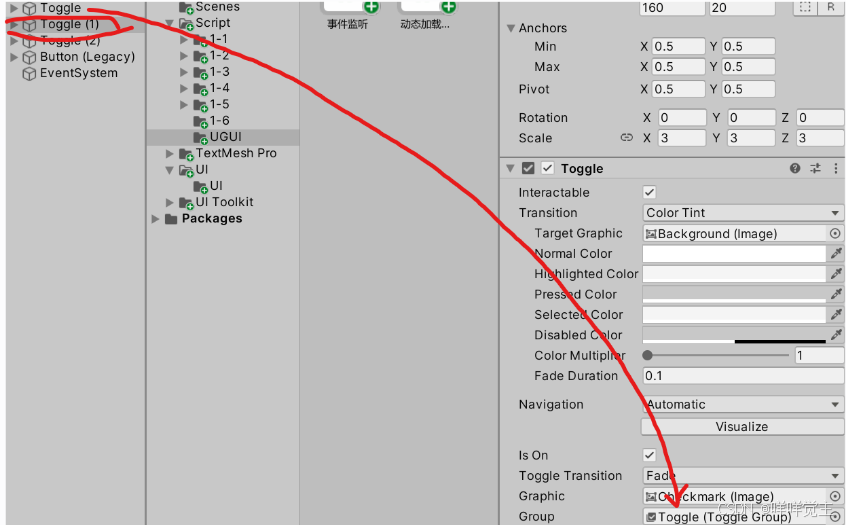

In this section, we are implementing the transformer block, a fundamental building block of GPT and other LLM architectures. This block, which is repeated a dozen times in the 124 million parameter GPT-2 architecture, combines several concepts we have previously covered: multi-head attention, layer normalization, dropout, feed forward layers, and GELU activations, as illustrated in Figure 4.13. In the next section, we will then connect this transformer block to the remaining parts of the GPT architecture.

在本节中,我们实现了transformer块,这是GPT和其他LLM架构的基本构建块。这个块在具有1.24亿参数的GPT-2架构中重复了十几次,结合了我们之前介绍的几个概念:多头注意力、层归一化、dropout、前馈层和GELU激活,如图4.13所示。在下一节中,我们将把这个transformer块连接到GPT架构的其余部分。

Figure 4.13 An illustration of a transformer block. The bottom of the diagram shows input tokens that have been embedded into 768-dimensional vectors. Each row corresponds to one token’s vector representation. The outputs of the transformer block are vectors of the same dimension as the input, which can then be fed into subsequent layers in an LLM.

图4.13 transformer块的图示。图的底部显示了已嵌入768维向量的输入词元。每一行对应一个词元的向量表示。transformer块的输出是与输入相同维度的向量,可以输入到LLM的后续层中。

As shown in Figure 4.13, the transformer block combines several components, including the masked multi-head attention module from chapter 3 and the FeedForward module we implemented in Section 4.3.

如图4.13所示,transformer块结合了几个组件,包括第3章的masked multi-head attention模块和第4.3节实现的FeedForward模块。

When a transformer block processes an input sequence, each element in the sequence (for example, a word or subword token) is represented by a fixed-size vector (in the case of Figure 4.13, 768 dimensions). The operations within the transformer block, including multi-head attention and feed forward layers, are designed to transform these vectors in a way that preserves their dimensionality.

当transformer块处理输入序列时,序列中的每个元素(例如,一个词或子词词元)由一个固定大小的向量表示(在图4.13中为768维)。transformer块内的操作,包括多头注意力和前馈层,旨在以保持其维度的方式转换这些向量。

The idea is that the self-attention mechanism in the multi-head attention block identifies and analyzes relationships between elements in the input sequence. In contrast, the feed forward network modifies the data individually at each position. This combination not only enables a more nuanced understanding and processing of the input but also enhances the model’s overall capacity for handling complex data patterns.

其思想是,多头注意力块中的自注意力机制识别并分析输入序列中元素之间的关系。相比之下,前馈网络在每个位置单独修改数据。这种组合不仅使对输入的理解和处理更为细致,而且增强了模型处理复杂数据模式的整体能力。
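To illustrate what it means for the feed forward network to work on each position individually, here is a minimal plain-Python sketch. All weights are made-up toy values, the sizes are shrunk to an embedding size of 2 and a hidden size of 4, and ReLU stands in for GELU to keep the arithmetic easy to follow.

```python
def linear(vec, weight, bias):
    """Apply y = W @ vec + b to a single token vector (plain Python lists)."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weight, bias)]

def relu(vec):
    # Stand-in nonlinearity to keep the sketch short; the chapter uses GELU.
    return [max(0.0, v) for v in vec]

def feed_forward(vec, w1, b1, w2, b2):
    """Expand, apply the nonlinearity, and contract a single token vector."""
    return linear(relu(linear(vec, w1, b1)), w2, b2)

# Hypothetical toy weights: embedding size 2, hidden size 4.
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]]
b1 = [0.0, 0.0, -1.0, 0.0]
w2 = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
b2 = [0.0, 0.5]

sequence = [[1.0, 2.0], [3.0, -1.0], [0.0, 0.0]]   # 3 tokens
outputs = [feed_forward(tok, w1, b1, w2, b2) for tok in sequence]

# The same weights handle a sequence with a different number of tokens,
# and the result for a token does not depend on its neighbors.
shorter = [feed_forward(tok, w1, b1, w2, b2) for tok in sequence[:2]]
print(outputs)
print(outputs[:2] == shorter)
```

Since each token is transformed on its own, any mixing of information between positions has to come from the attention module, which is the division of labor described above.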

In code, we can create the TransformerBlock as follows:

在代码中,我们可以创建TransformerBlock如下:

Listing 4.6 The transformer block component of GPT

清单 4.6 GPT 的transformer 块组件

from previous_chapters import MultiHeadAttention  # Multi-head attention from chapter 3

class TransformerBlock(nn.Module):
    def __init__(self, cfg):
        super().__init__()  # Call the parent class initializer
        self.att = MultiHeadAttention(
            d_in=cfg["emb_dim"],
            d_out=cfg["emb_dim"],
            context_length=cfg["context_length"],
            num_heads=cfg["n_heads"],
            dropout=cfg["drop_rate"],
            qkv_bias=cfg["qkv_bias"]
        )
        self.ff = FeedForward(cfg)
        self.norm1 = LayerNorm(cfg["emb_dim"])  # Applied before attention
        self.norm2 = LayerNorm(cfg["emb_dim"])  # Applied before the feed forward module
        self.drop_shortcut = nn.Dropout(cfg["drop_rate"])

    def forward(self, x):
        shortcut = x  # Shortcut connection for the attention block
        x = self.norm1(x)
        x = self.att(x)
        x = self.drop_shortcut(x)
        x = x + shortcut  # Add the original input back

        shortcut = x  # Shortcut connection for the feed forward block
        x = self.norm2(x)
        x = self.ff(x)
        x = self.drop_shortcut(x)
        x = x + shortcut  # Add the original input back
        return x

The given code defines a TransformerBlock class in PyTorch that includes a multi-head attention mechanism (MultiHeadAttention) and a feed forward network (FeedForward), both configured based on a provided configuration dictionary (cfg), such as GPT_CONFIG_124M.

给定的代码在PyTorch中定义了一个TransformerBlock类,其中包括多头注意力机制(MultiHeadAttention)和前馈网络(FeedForward),两者都根据提供的配置字典(cfg)进行配置,例如GPT_CONFIG_124M。

Layer normalization (LayerNorm) is applied before each of these two components, and dropout is applied after them to regularize the model and prevent overfitting. This is also known as Pre-LayerNorm. Older architectures, such as the original transformer model, applied layer normalization after the self-attention and feed-forward networks instead, known as Post-LayerNorm, which often leads to worse training dynamics.

层归一化(LayerNorm)在这两个组件之前应用,dropout在它们之后应用,以正则化模型并防止过拟合。这也被称为Pre-LayerNorm。较早的架构,如原始的transformer模型,在自注意力和前馈网络之后应用层归一化,称为是Post-LayerNorm,这通常会导致更差的训练动态。
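The two orderings differ only in where the normalization sits relative to the shortcut addition. The following plain-Python sketch contrasts them for a single token vector. The `sublayer` function is a hypothetical stand-in for attention or the feed forward network, dropout is omitted, and the normalization has no trainable scale and shift.

```python
import statistics

def layer_norm(vec, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance."""
    mean = statistics.fmean(vec)
    var = statistics.pvariance(vec, mu=mean)
    return [(v - mean) / (var + eps) ** 0.5 for v in vec]

def sublayer(vec):
    # Hypothetical stand-in for attention or the feed forward network.
    return [0.1 * v for v in vec]

def pre_ln_step(x):
    """Pre-LayerNorm: normalize, apply the sublayer, then add the raw input."""
    return [a + b for a, b in zip(x, sublayer(layer_norm(x)))]

def post_ln_step(x):
    """Post-LayerNorm: apply the sublayer, add the input, then normalize the sum."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])

x = [10.0, 20.0, 60.0]
pre = pre_ln_step(x)
post = post_ln_step(x)
print("Pre-LN mean: ", round(statistics.fmean(pre), 4))
print("Post-LN mean:", round(statistics.fmean(post), 4))
```

In the Pre-LayerNorm variant, the input travels along the shortcut untouched, so the output keeps the mean of the input. In the Post-LayerNorm variant, the shortcut path itself passes through the normalization, so the identity path is rescaled at every block.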

The class also implements the forward pass, where each component is followed by a shortcut connection that adds the input of the block to its output. This critical feature helps gradients flow through the network during training and improves the learning of deep models as explained in section 4.4.

该类还实现了前向传递,其中每个组件之后都有一个shortcut连接,将块的输入添加到其输出中。这个关键特性有助于梯度在训练期间通过网络流动,并改进了第4.4节中解释的深度模型的学习。

Using the GPT_CONFIG_124M dictionary we defined earlier, let’s instantiate a transformer block and feed it some sample data:

使用我们之前定义的GPT_CONFIG_124M字典,让我们实例化一个transformer块并为其提供一些示例数据:

torch.manual_seed(123)  # Fix the random seed for reproducibility
x = torch.rand(2, 4, 768)  # 2 samples, 4 tokens each, 768 values per token
block = TransformerBlock(GPT_CONFIG_124M)
output = block(x)

print("Input shape:", x.shape)
print("Output shape:", output.shape)

The output is as follows:

输出如下:

Input shape: torch.Size([2, 4, 768])

Output shape: torch.Size([2, 4, 768])

As we can see from the code output, the transformer block maintains the input dimensions in its output, indicating that the transformer architecture processes sequences of data without altering their shape throughout the network.

从代码输出中可以看到,transformer块在其输出中保持了输入的维度,这表明transformer架构在整个网络中处理数据序列时不改变它们的形状。

The preservation of shape throughout the transformer block architecture is not incidental but a crucial aspect of its design. This design enables its effective application across a wide range of sequence-to-sequence tasks, where each output vector directly corresponds to an input vector, maintaining a one-to-one relationship. However, the output is a context vector that encapsulates information from the entire input sequence, as we learned in chapter 3.

This means that while the physical dimensions of the sequence (length and feature size) remain unchanged as it passes through the transformer block, the content of each output vector is re-encoded to integrate contextual information from across the entire input sequence.

在整个transformer块架构中保持形状不是偶然的,而是其设计的关键方面。此设计使其能够有效应用于广泛的序列到序列任务中,其中每个输出向量直接对应于一个输入向量,保持一对一的关系。然而,输出是一个上下文向量,封装了整个输入序列的信息,如我们在第3章中所学。这意味着,尽管序列的物理维度(长度和特征大小)在通过transformer块时保持不变,但每个输出向量的内容都会重新编码,以整合来自整个输入序列的上下文信息。

With the transformer block implemented in this section, we now have all the building blocks, as shown in Figure 4.14, needed to implement the GPT architecture in the next section.

随着本节中transformer块的实现,我们现在拥有了实现下一节GPT架构所需的所有构建块,如图4.14所示。

Figure 4.14 A mental model of the different concepts we have implemented in this chapter so far.

图4.14我们在本章中迄今为止实现的不同概念的思维模型。

4.6 Coding the GPT model

We started this chapter with a big-picture overview of a GPT architecture that we called DummyGPTModel. In this DummyGPTModel code implementation, we showed the input and outputs to the GPT model, but its building blocks remained a black box using a DummyTransformerBlock and DummyLayerNorm class as placeholders.

我们从DummyGPTModel的GPT架构的大概概述开始了本章。在DummyGPTModel代码实现中,我们展示了GPT模型的输入和输出,但其构建块仍然是一个黑盒,使用DummyTransformerBlock和DummyLayerNorm类作为占位符。

In this section, we are now replacing the DummyTransformerBlock and DummyLayerNorm placeholders with the real TransformerBlock and LayerNorm classes we coded earlier in this chapter to assemble a fully working version of the original 124 million parameter version of GPT-2. In chapter 5, we will pretrain a GPT-2 model, and in chapter 6, we will load in the pretrained weights from OpenAI.

在本节中,我们现在用本章前面编写的真实TransformerBlock和LayerNorm类替换DummyTransformerBlock和DummyLayerNorm占位符,以组装一个完整工作的最初1.24亿参数版本的GPT-2。在第5章中,我们将预训练一个GPT-2模型,并在第6章中加载OpenAI的预训练权重。

Before we assemble the GPT-2 model in code, let’s look at its overall structure in Figure 4.15, which combines all the concepts we covered so far in this chapter.

在我们用代码组装GPT-2模型之前,让我们先看看图4.15中的总体结构,这结合了我们在本章到目前为止所涵盖的所有概念。

Figure 4.15 An overview of the GPT model architecture. This figure illustrates the flow of data through the GPT model. Starting from the bottom, tokenized text is first converted into token embeddings, which are then augmented with positional embeddings. This combined information forms a tensor that is passed through a series of transformer blocks shown in the center (each containing multi-head attention and feed forward neural network layers with dropout and layer normalization), which are stacked on top of each other and repeated 12 times.

图4.15 GPT模型架构概述。本图说明了数据通过GPT模型的流程。从底部开始,分词文本首先转换为分词嵌入,然后与位置嵌入进行增强。这些组合信息形成一个张量,传递通过中间显示的一系列Transformer块(每个块包含多头注意力和前馈神经网络层,具有dropout和层归一化),这些块彼此堆叠并重复12次。

As shown in Figure 4.15, the transformer block we coded in Section 4.5 is repeated many times throughout a GPT model architecture. In the case of the 124 million parameter GPT-2 model, it’s repeated 12 times, which we specify via the "n_layers" entry in the GPT_CONFIG_124M dictionary. In the case of the largest GPT-2 model with 1,542 million parameters, this transformer block is repeated 48 times.

如图4.15所示,我们在4.5节中编写的Transformer块在整个GPT模型架构中重复多次。对于具有1.24亿参数的GPT-2模型,该块重复12次,我们通过GPT_CONFIG_124M字典中的"n_layers"项指定。在具有15.42亿参数的最大GPT-2模型中,此Transformer块重复48次。

As shown in Figure 4.15, the output from the final transformer block then goes through a final layer normalization step before reaching the linear output layer. This layer maps the transformer’s output to a high-dimensional space (in this case, 50,257 dimensions, corresponding to the model’s vocabulary size) to predict the next token in the sequence.

如图4.15所示,最终Transformer块的输出在到达线性输出层之前经过最终的层归一化步骤。该层将Transformer的输出映射到一个高维空间(在这种情况下,为50,257维,对应模型的词汇量大小),以预测序列中的下一个词。

Let’s now implement the architecture we see in Figure 4.15 in code:

现在让我们用代码实现图4.15中看到的架构:

class GPTModel(nn.Module):
    def __init__(self, cfg):  # cfg is the configuration dictionary
        super().__init__()  # Call the parent class initializer
        self.tok_emb = nn.Embedding(cfg["vocab_size"], cfg["emb_dim"])  # Token embeddings
        self.pos_emb = nn.Embedding(cfg["context_length"], cfg["emb_dim"])  # Positional embeddings
        self.drop_emb = nn.Dropout(cfg["drop_rate"])

        self.trf_blocks = nn.Sequential(  # Stack of n_layers transformer blocks
            *[TransformerBlock(cfg) for _ in range(cfg["n_layers"])]
        )

        self.final_norm = LayerNorm(cfg["emb_dim"])  # Final layer normalization
        self.out_head = nn.Linear(cfg["emb_dim"], cfg["vocab_size"], bias=False)  # Output layer

    def forward(self, in_idx):
        batch_size, seq_len = in_idx.shape  # Batch size and sequence length of the input
        tok_embeds = self.tok_emb(in_idx)
        pos_embeds = self.pos_emb(torch.arange(seq_len, device=in_idx.device))
        x = tok_embeds + pos_embeds  # Add token and positional embeddings
        x = self.drop_emb(x)
        x = self.trf_blocks(x)
        x = self.final_norm(x)
        logits = self.out_head(x)  # Project to vocabulary size
        return logits

Thanks to the TransformerBlock class we implemented in Section 4.5, the GPTModel class is relatively small and compact.

感谢我们在4.5节中实现的TransformerBlock类,GPTModel类相对小巧紧凑。

The __init__ constructor of this GPTModel class initializes the token and positional embedding layers using the configurations passed in via a Python dictionary, cfg. These embedding layers are responsible for converting input token indices into dense vectors and adding positional information, as discussed in chapter 2.

GPTModel类的__init__构造函数使用通过Python字典cfg传递的配置初始化词元和位置嵌入层。这些嵌入层负责将输入的词元索引转换为密集向量,并添加位置信息,如第2章所讨论的。

Next, the __init__ method creates a sequential stack of TransformerBlock modules equal to the number of layers specified in cfg. Following the transformer blocks, a LayerNorm layer is applied, standardizing the outputs from the transformer blocks to stabilize the learning process. Finally, a linear output head without bias is defined, which projects the transformer’s output into the vocabulary space of the tokenizer to generate logits for each token in the vocabulary.

接下来,__init__方法创建了一个顺序堆栈的TransformerBlock模块,其数量等于cfg中指定的层数。在transformer块之后,应用了LayerNorm层,标准化transformer块的输出以稳定学习过程。最后,定义了一个没有偏差的线性输出头,将transformer的输出投射到词汇表的词元空间中,以生成词汇中每个词元的logits。

The forward method takes a batch of input token indices, computes their embeddings, applies the positional embeddings, passes the sequence through the transformer blocks, normalizes the final output, and then computes the logits, representing the next token’s unnormalized probabilities. We will convert these logits into tokens and text outputs in the next section.

forward方法接收一批输入词元索引,计算它们的嵌入,应用位置嵌入,将序列传递通过transformer块,规范化最终输出,然后计算logits,表示下一个词元的非规范化概率。我们将在下一节中将这些logits转换为词元和文本输出。
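As a small preview of what "unnormalized probabilities" means, the sketch below turns a handful of logits into probabilities with the softmax function and picks the most likely token. The logit values and the 5-token vocabulary are hypothetical; the real model emits 50,257 values per position, and the next section covers how the chapter itself converts logits into text.

```python
import math

def softmax(logits):
    """Convert unnormalized scores into probabilities that sum to 1."""
    peak = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for one position over a toy 5-token vocabulary.
logits = [0.36, 0.42, -0.07, 1.25, -0.28]
probs = softmax(logits)

# Index of the most probable token (greedy choice).
next_token_id = max(range(len(probs)), key=probs.__getitem__)
print([round(p, 3) for p in probs])
print("Most likely token ID:", next_token_id)
```

Because softmax preserves the ordering of its inputs, the token with the largest logit is also the token with the largest probability.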

Let’s now initialize the 124 million parameter GPT model using the GPT_CONFIG_124M dictionary we pass into the cfg parameter and feed it with the batch text input we created at the beginning of this chapter:

现在,让我们使用传递到cfg参数中的GPT_CONFIG_124M字典初始化124百万参数的GPT模型,并使用我们在本章开头创建的批量文本输入来喂养它:

torch.manual_seed(123)  # Fix the random seed for reproducibility
model = GPTModel(GPT_CONFIG_124M)  # Initialize the GPT model

out = model(batch)
print("Input batch:\n", batch)
print("\nOutput shape:", out.shape)
print(out)

The preceding code prints the contents of the input batch followed by the output tensor:

上面的代码打印了输入批次的内容,然后打印输出张量:

Input batch:

输入批次:

tensor([[ 6109, 3626, 6100, 345], # 文本1的词元ID

[ 6109, 1110, 6622, 257]]) # 文本2的词元ID

Output shape: torch.Size([2, 4, 50257])

输出形状:torch.Size([2, 4, 50257])

tensor([[[ 0.3613, 0.4222, -0.0711, ..., 0.3483, 0.4661, -0.2838], # 文本1的输出

[-0.1792, -0.5666, -0.9485, ..., 0.0477, 0.5181, -0.3168],

[ 0.7120, 0.0332, 0.1085, ..., -0.1018, -0.4327, -0.2553],

[-1.0076, 0.3418, -0.1190, ..., 0.7195, 0.4023, -0.0532]],

[[-0.2564, 0.0900, 0.0335, ..., 0.2659, 0.4454, -0.6806], # 文本2的输出

[ 0.1230, 0.3653, -0.2074, ..., 0.7705, 0.2710, 0.2246],

[ 1.0558, 1.0318, -0.2800, ..., 0.6936, 0.3295, -0.3178],

[-0.1565, 0.3926, 0.3288, ..., 1.2630, -0.1858, 0.0388]]],

grad_fn=<UnsafeViewBackward0>)

As we can see, the output tensor has the shape [2, 4, 50257], since we passed in 2 input texts with 4 tokens each. The last dimension, 50,257, corresponds to the vocabulary size of the tokenizer. In the next section, we will see how to convert each of these 50,257-dimensional output vectors back into tokens.

正如我们所见,输出张量的形状为[2, 4, 50257],因为我们传入了2个输入文本,每个文本包含4个词元。最后一个维度50,257对应于词汇表的大小。在下一节中,我们将看到如何将这些50,257维的输出向量转换回词元。

Before we move on to the next section and code the function that converts the model outputs into text, let’s spend a bit more time with the model architecture itself and analyze its size.

在进入下一节并编写将模型输出转换为文本的函数之前,让我们花一点时间来了解模型架构本身并分析其大小。

Using the numel() method, short for “number of elements,” we can collect the total number of parameters in the model’s parameter tensors:

使用numel()方法,numel是“元素数量”的缩写,我们可以收集模型参数张量中的参数总数:

total_params = sum(p.numel() for p in model.parameters())  # Sum the element counts of all parameter tensors
print(f"Total number of parameters: {total_params:,}")

The result is as follows:

结果如下:

Total number of parameters: 163,009,536

Now, a curious reader might notice a discrepancy. Earlier, we spoke of initializing a 124 million parameter GPT model, so why is the actual number of parameters 163 million, as shown in the preceding code output?

现在,好奇的读者可能会注意到一个差异。之前我们提到初始化一个1.24亿参数的GPT模型,那么为什么实际参数数量是1.63亿,如上面的代码输出所示?

The reason is a concept called weight tying, which is used in the original GPT-2 architecture: the output layer reuses the weights of the token embedding layer. To understand what this means, let’s take a look at the shapes of the token embedding layer and the linear output layer that GPTModel initialized on the model earlier:

原因是原始GPT-2架构中使用的一个名为权重共享的概念,这意味着原始GPT-2架构在其输出层中重用了词元嵌入层的权重。要理解这意味着什么,让我们看看我们早些时候通过GPTModel在模型上初始化的词元嵌入层和线性输出层的形状:

print("Token embedding layer shape:", model.tok_emb.weight.shape)  # shape of the token embedding weights

print("Output layer shape:", model.out_head.weight.shape)  # shape of the output layer weights

As we can see based on the print outputs, the weight tensors for both these layers have the same shape:

正如我们从打印输出中看到的,这两个层的权重张量具有相同的形状:

Token embedding layer shape: torch.Size([50257, 768])

Output layer shape: torch.Size([50257, 768])

The token embedding and output layers are very large because each contains one row for every one of the 50,257 tokens in the tokenizer’s vocabulary. Let’s subtract the output layer’s parameter count from the total GPT-2 model count, in line with weight tying:

词元嵌入层和输出层非常大,因为词汇表中有50257行。让我们根据权重共享从GPT-2模型的总参数中移除输出层的参数数量:

total_params_gpt2 = total_params - sum(p.numel() for p in model.out_head.parameters())  # subtract the output layer's parameters

print(f"Number of trainable parameters considering weight tying: {total_params_gpt2:,}")  # print the count with weight tying taken into account

The output is as follows:

输出如下:

Number of trainable parameters considering weight tying: 124,412,160

As we can see, the parameter count now comes to only 124 million, matching the size of the original GPT-2 model.

正如我们所见,模型现在只有1.24亿参数,与原始GPT-2模型的大小相匹配。

Weight tying reduces the overall memory footprint and computational complexity of the model. However, in my experience, using separate token embedding and output layers results in better training and model performance; hence, we are using separate layers in our GPTModel implementation. The same is true for modern LLMs. However, we will revisit and implement the weight tying concept later in chapter 6 when we load the pretrained weights from OpenAI.

权重共享减少了模型的总体内存占用和计算复杂性。然而,根据我的经验,使用单独的词元嵌入和输出层会带来更好的训练和模型性能;因此,我们在GPTModel实现中使用了单独的层。现代LLM也是如此。不过,我们将在第6章中重新讨论并实现权重共享概念,当时我们将从OpenAI加载预训练权重。
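The effect of weight tying on the parameter count can be sketched on a toy scale. The class below is a hypothetical stand-in, not the chapter's GPTModel: `TinyLM`, its `tie_weights` flag, and the tiny sizes (100 tokens, 8 embedding dimensions) are assumptions chosen for illustration. It relies on the fact that `nn.Embedding(vocab, dim)` and `nn.Linear(dim, vocab, bias=False)` store weights of the same shape, so one tensor can serve both layers.

```python
import torch
import torch.nn as nn

class TinyLM(nn.Module):
    """Hypothetical toy model: an embedding layer followed by an output head."""
    def __init__(self, vocab_size, emb_dim, tie_weights):
        super().__init__()
        self.tok_emb = nn.Embedding(vocab_size, emb_dim)              # weight: [vocab_size, emb_dim]
        self.out_head = nn.Linear(emb_dim, vocab_size, bias=False)    # weight: [vocab_size, emb_dim]
        if tie_weights:
            # Weight tying: both layers now share one and the same parameter tensor.
            self.out_head.weight = self.tok_emb.weight

    def forward(self, idx):
        return self.out_head(self.tok_emb(idx))

def count_params(module):
    # parameters() yields each shared tensor only once, so tied weights are not double-counted.
    return sum(p.numel() for p in module.parameters())

untied = TinyLM(vocab_size=100, emb_dim=8, tie_weights=False)
tied = TinyLM(vocab_size=100, emb_dim=8, tie_weights=True)

print(count_params(untied))  # 1600 (two separate 100 x 8 weight matrices)
print(count_params(tied))    # 800  (one shared 100 x 8 weight matrix)
```

In the tied variant, any update to the embedding weights also changes the output layer, because both names refer to the same tensor.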

EXERCISE 4.1 NUMBER OF PARAMETERS IN FEED FORWARD AND ATTENTION MODULES

练习4.1 前馈和注意力模块中的参数数量

Calculate and compare the number of parameters that are contained in the feed forward module and those that are contained in the multi-head attention module.

计算并比较前馈模块中的参数数量与多头注意力模块中的参数数量。

Lastly, let us compute the memory requirements of the 163 million parameters in our GPTModel object:

最后,让我们计算GPTModel对象中1.63亿参数的内存需求:

total_size_bytes = total_params * 4  # total size in bytes (assuming float32, 4 bytes per parameter)

total_size_mb = total_size_bytes / (1024 * 1024)  # convert bytes to megabytes

print(f"Total size of the model: {total_size_mb:.2f} MB")  # print the model size

The result is as follows:

结果如下:

Total size of the model: 621.83 MB

In conclusion, assuming that each of the 163 million parameters in our GPTModel object is a 32-bit float taking up 4 bytes, the total size of the model amounts to 621.83 MB. This illustrates the relatively large storage capacity required to accommodate even relatively small LLMs.

总之,通过计算GPTModel对象中1.63亿参数的内存需求,并假设每个参数是32位浮点数,占用4字节,我们发现模型的总大小为621.83 MB,说明了即使是相对较小的LLM也需要相对较大的存储容量。
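The same arithmetic can be wrapped in a small helper to see how the estimate changes with the number of bytes per parameter. This is a sketch under stated assumptions: the helper name `model_size_mb` is hypothetical, the two parameter counts are the ones printed earlier in this section, and the 2-byte case assumes the weights were stored in a 16-bit format, which this chapter does not do.

```python
def model_size_mb(num_params, bytes_per_param):
    """Storage needed for the parameters alone, in MB (1 MB = 1024 * 1024 bytes)."""
    return num_params * bytes_per_param / (1024 * 1024)

total_params = 163_009_536        # separate embedding and output layers
total_params_gpt2 = 124_412_160   # count with weight tying taken into account

for label, num_params in [("untied", total_params), ("tied", total_params_gpt2)]:
    for dtype_name, nbytes in [("32-bit", 4), ("16-bit", 2)]:
        size = model_size_mb(num_params, nbytes)
        print(f"{label:>6} | {dtype_name} | {size:.2f} MB")

# The untied 32-bit row reproduces the 621.83 MB computed in the text.
```

Note that this estimate covers only the stored parameters; memory needed for activations or for training is not included.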

In this section, we implemented the GPTModel architecture and saw that it outputs numeric tensors of shape [batch_size, num_tokens, vocab_size]. In the next section, we will write the code to convert these output tensors into text.

在本节中,我们实现了GPTModel架构,并看到它输出形状为[batch_size, num_tokens, vocab_size]的数值张量。在下一节中,我们将编写代码将这些输出张量转换为文本。

EXERCISE 4.2 INITIALIZING LARGER GPT MODELS

练习 4.2 初始化更大的 GPT 模型

In this chapter, we initialized a 124 million parameter GPT model, which is known as “GPT-2 small.” Without making any code modifications besides updating the configuration file, use the GPTModel class to implement GPT-2 medium (using 1024-dimensional embeddings, 24 transformer blocks, 16 multi-head attention heads), GPT-2 large (1280-dimensional embeddings, 36 transformer blocks, 20 multi-head attention heads), and GPT-2 XL (1600-dimensional embeddings, 48 transformer blocks, 25 multi-head attention heads). As a bonus, calculate the total number of parameters in each GPT model.

在本章中,我们初始化了一个具有 1.24 亿参数的 GPT 模型,这被称为“GPT-2 small”。在不修改任何代码的情况下,除了更新配置文件,使用 GPTModel 类实现 GPT-2 medium(使用 1024 维嵌入、24 个 transformer 块、16 个多头注意力头)、GPT-2 large(1280 维嵌入、36 个 transformer 块、20 个多头注意力头)和 GPT-2 XL(1600 维嵌入、48 个 transformer 块、25 个多头注意力头)。作为附加任务,计算每个 GPT 模型中的参数总数。

4.7 Generating text

4.7 生成文本

In this final section of this chapter, we will implement the code that converts the tensor outputs of the GPT model back into text. Before we get started, let’s briefly review how a generative model like an LLM generates text one word (or token) at a time, as shown in Figure 4.16.

在本章的最后一节中,我们将实现将 GPT 模型的张量输出转换回文本的代码。在开始之前,让我们简要回顾一下生成模型(如 LLM)如何一次生成一个单词(或词元)的文本,如图 4.16 所示。

Figure 4.16 This diagram illustrates the step-by-step process by which an LLM generates text, one token at a time. Starting with an initial input context (“Hello, I am”), the model predicts a subsequent token during each iteration, appending it to the input context for the next round of prediction. As shown, the first iteration adds “a”, the second “model”, and the third “ready”, progressively building the sentence.

图 4.16 该图展示了 LLM 一次生成一个词元的逐步过程。从初始输入上下文(“Hello, I am”)开始,模型在每次迭代期间预测下一个词元,并将其附加到下一轮预测的输入上下文中。如图所示,第一次迭代添加了“a”,第二次迭代添加了“model”,第三次迭代添加了“ready”,逐步构建句子。

Figure 4.16 illustrates, at a big-picture level, the step-by-step process by which a GPT model generates text given an input context, such as “Hello, I am.” With each iteration, the input context grows, allowing the model to generate coherent and contextually appropriate text. By the sixth iteration, the model has constructed a complete sentence: “Hello, I am a model ready to help.”

图 4.16 说明了在大局上,GPT 模型在给定输入上下文(例如“Hello, I am”)的情况下生成文本的逐步过程。随着每次迭代,输入上下文会增加,使模型能够生成连贯且上下文合适的文本。在第六次迭代时,模型已经构建了一个完整的句子:“Hello, I am a model ready to help”。

In the previous section, we saw that our current GPTModel implementation outputs tensors with shape [batch_size, num_tokens, vocab_size]. Now, the question is, how does a GPT model go from these output tensors to the generated text shown in Figure 4.16?

在上一节中,我们看到当前的GPTModel实现输出形状为 [batch_size, num_tokens, vocab_size] 的张量。现在的问题是,GPT 模型如何从这些输出张量生成图 4.16 所示的文本?

The process by which a GPT model goes from output tensors to generated text involves several steps, as illustrated in Figure 4.17. These steps include decoding the output tensors, selecting tokens based on a probability distribution, and converting these tokens into human-readable text.

GPT 模型从输出张量到生成文本的过程涉及几个步骤,如图 4.17 所示。这些步骤包括解码输出张量、根据概率分布选择词元以及将这些词元转换成人类可读的文本。

Figure 4.17 details the mechanics of text generation in a GPT model by showing a single iteration in the token generation process. The process begins by encoding the input text into token IDs, which are then fed into the GPT model. The outputs of the model are then converted back into text and appended to the original input text.

图 4.17 通过展示词元生成过程中的单次迭代,详细说明了 GPT 模型中文本生成的机制。该过程从将输入文本编码为词元 ID 开始,这些 ID 然后被输入到 GPT 模型中。模型的输出随后被转换回文本,并附加到原始输入文本中。

The next-token generation process detailed in Figure 4.17 illustrates a single step where the GPT model generates the next token given its input.

图 4.17 详细说明的下一个词元生成过程展示了 GPT 模型在给定输入的情况下生成下一个词元的单个步骤。

In each step, the model outputs a matrix with vectors representing potential next tokens. The vector corresponding to the next token is extracted and converted into a probability distribution via the softmax function. Within the vector containing the resulting probability scores, the index of the highest value is located, which translates to the token ID. This token ID is then decoded back into text, producing the next token in the sequence. Finally, this token is appended to the previous inputs, forming a new input sequence for the subsequent iteration. This step-by-step process enables the model to generate text sequentially, building coherent phrases and sentences from the initial input context.

在每一步中,模型输出一个包含表示潜在下一个词元的向量的矩阵。对应于下一个词元的向量通过 softmax 函数被提取并转换为概率分布。在包含结果概率分数的向量中,找到最高值的索引,这对应于词元 ID。然后将此词元 ID 解码回文本,生成序列中的下一个词元。最后,这个词元被附加到之前的输入中,形成下一个迭代的新输入序列。这个逐步过程使模型能够顺序生成文本,从初始输入上下文中构建连贯的短语和句子。
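The single step described above can be traced by hand on a toy scale. The following plain-Python sketch assumes a hypothetical four-token vocabulary (`toy_vocab`) and made-up logits for the last position; none of these values come from a real GPT model.

```python
import math

# Hypothetical 4-token vocabulary and made-up scores for the last position.
toy_vocab = [" a", " the", " model", " ready"]
context = ["Hello", ",", " I", " am"]
last_logits = [2.0, 1.0, 0.5, -1.0]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probas = softmax(last_logits)                                       # logits -> probabilities
next_id = max(range(len(probas)), key=probas.__getitem__)           # index of the highest probability
next_token = toy_vocab[next_id]                                     # token ID -> text
context = context + [next_token]                                    # append for the next iteration

print(next_id)           # 0
print("".join(context))  # Hello, I am a
```

Repeating these four operations with the extended context as the new input yields the next token, and so on.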

In practice, we repeat this process over many iterations, as shown earlier in Figure 4.16, until we reach a user-specified number of generated tokens.

在实践中,我们会在多次迭代中重复这个过程,如前面的图 4.16 所示,直到我们达到用户指定的生成词元数量。

In code, we can implement the token-generation process as follows:

在代码中,我们可以按如下方式实现词元生成过程:

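import torch

def generate_text_simple(model, idx, max_new_tokens, context_size):
    # idx is a [batch_size, num_tokens] tensor of token IDs in the current context
    for _ in range(max_new_tokens):  # generate one new token per iteration
        idx_cond = idx[:, -context_size:]  # crop the context to the last context_size tokens
        with torch.no_grad():  # disable gradient tracking during inference
            logits = model(idx_cond)  # [batch_size, num_tokens, vocab_size]
        logits = logits[:, -1, :]  # keep only the last time step: [batch_size, vocab_size]
        probas = torch.softmax(logits, dim=-1)  # convert logits to a probability distribution
        idx_next = torch.argmax(probas, dim=-1, keepdim=True)  # token ID with the highest probability: [batch_size, 1]
        idx = torch.cat([idx, idx_next], dim=1)  # append the new token ID: [batch_size, num_tokens + 1]
    return idx  # return the extended token ID sequence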

In the preceding code, the generate_text_simple function uses a softmax function to convert the logits into a probability distribution, from which we identify the position with the highest value via torch.argmax. The softmax function is monotonic, meaning it preserves the order of its inputs when transforming them into outputs. So, in practice, the softmax step is redundant, since the position with the highest score in the softmax output tensor is the same position in the logits tensor. In other words, we could apply the torch.argmax function to the logits tensor directly and get identical results. However, we coded the conversion to illustrate the full process of transforming logits into probabilities, which can add intuition: the model generates the most likely next token, an approach known as greedy decoding.

在前面的代码中,generate_text_simple函数使用softmax函数将logits转换为概率分布,然后通过torch.argmax确定具有最高值的位置。softmax函数是单调的,意味着在转换为输出时它保持输入的顺序。因此,在实践中,softmax步骤是多余的,因为softmax输出张量中得分最高的位置与logit张量中的位置相同。换句话说,我们可以直接对logits张量应用torch.argmax函数,并得到相同的结果。然而,我们编写了转换过程以说明从logits到概率的完整过程,这可以增加额外的直观性,使模型生成最可能的下一个词元,这称为贪婪解码。
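The redundancy of the softmax step for greedy decoding can be checked directly. The sketch below assumes a small hand-picked logits tensor (two rows over a hypothetical four-token vocabulary) rather than real model output.

```python
import torch

# Hand-picked logits: 2 sequences, hypothetical vocabulary of 4 tokens.
logits = torch.tensor([[1.0, 3.5, -2.0, 0.3],
                       [0.1, -0.4, 2.2, 2.1]])

probas = torch.softmax(logits, dim=-1)

ids_from_probas = torch.argmax(probas, dim=-1, keepdim=True)
ids_from_logits = torch.argmax(logits, dim=-1, keepdim=True)

print(ids_from_probas)  # tensor([[1], [2]])
print(ids_from_logits)  # tensor([[1], [2]])
print(torch.equal(ids_from_probas, ids_from_logits))  # True
```

Softmax changes the values (each row now sums to 1) but not their ranking, so the selected token IDs are the same either way.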

In the next chapter, when we implement the GPT training code, we will also introduce additional sampling techniques that modify the softmax outputs so that the model doesn’t always select the most likely token, which introduces variability and creativity in the generated text.

在下一章中,当我们实现GPT训练代码时,我们还将介绍额外的采样技术,我们会修改softmax输出,使模型不总是选择最可能的词元,从而在生成的文本中引入变化和创造性。

This process of generating one token ID at a time and appending it to the context using the generate_text_simple function is further illustrated in Figure 4.18. (The token ID generation process for each iteration is detailed in Figure 4.17.)

使用generate_text_simple函数一次生成一个词元ID并将其附加到上下文中的过程在图4.18中进一步说明。(每次迭代的词元ID生成过程在图4.17中详细说明。)

Figure 4.18 An illustration showing six iterations of a token prediction cycle, where the model takes a sequence of initial token IDs as input, predicts the next token, and appends this token to the input sequence for the next iteration. (The token IDs are also translated into their corresponding text for better understanding.)

图4.18展示了词元预测循环的六次迭代,其中模型将一系列初始词元ID作为输入,预测下一个词元,并将该词元附加到下一次迭代的输入序列中。(为了更好地理解,词元ID也被翻译成相应的文本。)

As shown in Figure 4.18, we generate the token IDs in an iterative fashion. For instance, in iteration 1, the model is provided with the tokens corresponding to “Hello, I am”, predicts the next token (with ID 257, which is “a”), and appends it to the input. This process is repeated until the model produces the complete sentence “Hello, I am a model ready to help.” after six iterations.

如图4.18所示,我们以迭代方式生成词元ID。例如,在第1次迭代中,模型被提供了与“Hello, I am”对应的词元,预测下一个词元(ID为257,即“a”),并将其附加到输入中。这个过程重复进行,直到模型在六次迭代后生成完整的句子“Hello, I am a model ready to help”。
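The iterative loop can be exercised end to end without a trained model. The following sketch repeats the generate_text_simple function so that the block is self-contained, and pairs it with two hypothetical stand-ins: a ten-token `toy_vocab` whose list indices serve as token IDs (these are not GPT-2 token IDs), and a `NextIdModel` that always assigns the highest score to the ID following the current one. Both are assumptions made purely so that the output is predictable.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def generate_text_simple(model, idx, max_new_tokens, context_size):
    for _ in range(max_new_tokens):
        idx_cond = idx[:, -context_size:]          # crop to the supported context size
        with torch.no_grad():
            logits = model(idx_cond)
        logits = logits[:, -1, :]                  # scores for the last position only
        probas = torch.softmax(logits, dim=-1)
        idx_next = torch.argmax(probas, dim=-1, keepdim=True)
        idx = torch.cat([idx, idx_next], dim=1)
    return idx

# Hypothetical toy vocabulary; the list index plays the role of the token ID.
toy_vocab = ["Hello", ",", " I", " am", " a", " model", " ready", " to", " help", "."]

class NextIdModel(nn.Module):
    """Stand-in model: for each input ID i, the highest logit is at ID i + 1."""
    def __init__(self, vocab_size):
        super().__init__()
        self.vocab_size = vocab_size

    def forward(self, idx):
        next_ids = (idx + 1) % self.vocab_size
        # Output shape: [batch_size, num_tokens, vocab_size], like GPTModel.
        return F.one_hot(next_ids, num_classes=self.vocab_size).float()

model = NextIdModel(vocab_size=len(toy_vocab))
start = torch.tensor([[0, 1, 2, 3]])               # "Hello, I am"

out_ids = generate_text_simple(model, start, max_new_tokens=6, context_size=4)
decoded = "".join(toy_vocab[i] for i in out_ids[0].tolist())

print(out_ids)   # tensor([[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]])
print(decoded)   # Hello, I am a model ready to help.
```

Because context_size is 4 here, each iteration feeds only the four most recent token IDs to the model, while the returned sequence keeps growing by one ID per iteration.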