Table of Contents

- 1. What
- 2. Why
  - 2.1 Introduction
  - 2.2 Related work and background
- 3. How: Multiresolution hash encoding
  - 3.1 Structure
  - 3.2 Input coordinate
  - 3.3 Hash mapping
  - 3.4 Interpolation
  - 3.5 Performance vs. quality
  - 3.6 Hash collision
- 4. Experiment on NeRF

1. What

To reduce the cost of training and evaluating fully connected networks, this paper augments a small network with a multiresolution hash table of trainable feature vectors, which permits the use of a smaller network without sacrificing quality.

2. Why

2.1 Introduction

Computer graphics primitives are fundamentally represented by mathematical functions. Functions represented by multi-layer perceptrons (MLPs), used as neural graphics primitives, have been shown to capture high-frequency, local detail. This relies on an input encoding that maps the network inputs to a higher-dimensional space, which is key to extracting high approximation quality from compact models.

The most successful of these encodings are trainable, task-specific data structures. However, such data structures rely on heuristics and structural modifications (such as pruning, splitting, or merging) that may complicate training, limit the method to a specific task, or limit performance on GPUs.

The proposed method uses a hash encoding whose structure is never updated during training, and each table lookup costs $O(1)$. These two properties give the method its adaptivity and its efficiency, respectively.

2.2 Related work and background

- Encoding: Frequency encodings, which pass the inputs through sin and cos functions at several frequencies, are a common choice. Recently, state-of-the-art results have been achieved by parametric encodings, which blur the line between classical data structures and neural approaches. Grids and trees are the common data structures for such encodings, but trees incur a greater computational cost.

- Dense grids are wasteful in two ways. First, the number of parameters grows as $O(N^3)$, while the visible surface of interest has a surface area that grows only as $O(N^2)$, so a dense grid allocates as many features to empty space as to the regions near the surface. Second, natural scenes exhibit smoothness, which a single fine-resolution grid does not exploit. This motivates the use of a multiresolution decomposition.
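For contrast with the parametric encodings discussed here, a fixed frequency encoding has no trainable parameters at all. The sketch below assumes the NeRF-style form with octave frequencies $2^k\pi$. The function name `frequency_encoding` and the feature ordering are illustrative choices and are not taken from the paper.

```python
import math

def frequency_encoding(x, num_frequencies):
    """Map each coordinate of x to sin/cos features at octave frequencies.

    For every coordinate p and every k in 0..num_frequencies-1 this emits
    sin(2^k * pi * p) followed by cos(2^k * pi * p).
    """
    features = []
    for p in x:
        for k in range(num_frequencies):
            angle = (2.0 ** k) * math.pi * p
            features.append(math.sin(angle))
            features.append(math.cos(angle))
    return features

# A 3D point encoded with 4 frequencies gives 3 * 4 * 2 = 24 features.
enc = frequency_encoding([0.1, 0.5, 0.9], num_frequencies=4)
print(len(enc))  # 24
```

Nothing in this mapping is learned, which is the key difference from the grid and hash encodings that follow.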

3. How: Multiresolution hash encoding

3.1 Structure

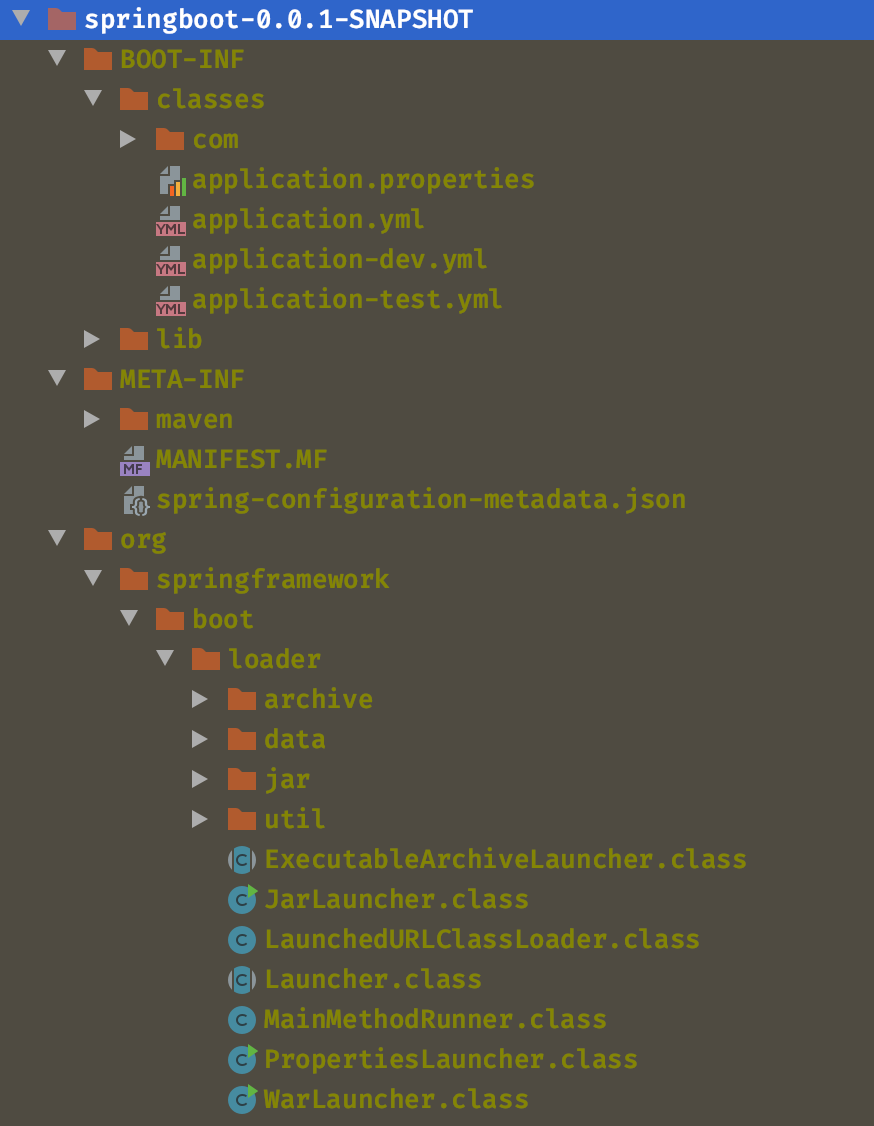

Given a fully connected neural network $m(\mathbf{y};\Phi)$, we are interested in an encoding of its inputs $\mathbf{y}=\mathrm{enc}(\mathbf{x};\theta)$. The parameters $\theta$ are trainable, and the encoding is arranged into $L$ levels, each containing up to $T$ feature vectors of dimensionality $F$.

Each level is independent and conceptually stores feature vectors at the vertices of a grid. The grid resolutions are chosen as a geometric progression between the coarsest and finest resolutions $[N_{\min}, N_{\max}]$:

$$
N_l := \left\lfloor N_{\min}\cdot b^{l}\right\rfloor, \qquad
b := \exp\left(\frac{\ln N_{\max}-\ln N_{\min}}{L-1}\right).
$$

With the hyperparameters used in the paper, the growth factor lies in $b \in [1.26, 2]$.
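The growth factor can be checked numerically. The settings below ($N_{\min}=16$, $L=16$, $N_{\max}\in\{512, 524288\}$) are assumed example values that reproduce the two ends of the range above. `level_resolutions` is a hypothetical helper.

```python
import math

def level_resolutions(n_min, n_max, num_levels):
    """Return the growth factor b and the per-level resolutions N_l.

    b = exp((ln N_max - ln N_min) / (L - 1)), N_l = floor(N_min * b**l).
    Note: floor() of a floating-point product may land one below an exact
    power of b, so a production implementation might guard against that.
    """
    b = math.exp((math.log(n_max) - math.log(n_min)) / (num_levels - 1))
    resolutions = [math.floor(n_min * b ** l) for l in range(num_levels)]
    return b, resolutions

b_small, res_small = level_resolutions(16, 512, 16)
b_large, res_large = level_resolutions(16, 524288, 16)
print(round(b_small, 2), round(b_large, 2))  # 1.26 2.0
```

A small $b$ means neighbouring levels differ only slightly in resolution, so many levels are needed to span a large $N_{\max}/N_{\min}$ ratio.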

3.2 Input coordinate

A normalized input $\mathbf{x}\in\mathbb{R}^{d}$ is scaled by the level's grid resolution before rounding down and up:

$$
\lfloor\mathbf{x}_l\rfloor := \lfloor\mathbf{x}\cdot N_l\rfloor, \qquad
\lceil\mathbf{x}_l\rceil := \lceil\mathbf{x}\cdot N_l\rceil.
$$

$\lfloor\mathbf{x}_l\rfloor$ and $\lceil\mathbf{x}_l\rceil$ span a voxel with $2^d$ integer vertices in $\mathbb{Z}^d$.
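The sketch below enumerates the $2^d$ voxel corners for one level by choosing, on each axis, either the floor or the ceiling of the scaled coordinate. The helper name `voxel_corners` and the example point are illustrative assumptions.

```python
import itertools
import math

def voxel_corners(x, resolution):
    """Return the 2^d integer vertices of the voxel containing x at one level.

    x is scaled by the level resolution N_l. Each axis then contributes either
    its floor or its ceiling. If a coordinate lies exactly on a grid line,
    floor == ceil and some corners coincide. That axis then has interpolation
    weight 0, so the interpolated result is unaffected.
    """
    scaled = [xi * resolution for xi in x]
    lo = [math.floor(s) for s in scaled]
    hi = [math.ceil(s) for s in scaled]
    return [tuple(h if bit else l for l, h, bit in zip(lo, hi, bits))
            for bits in itertools.product((0, 1), repeat=len(x))]

corners = voxel_corners([0.30, 0.55, 0.71], resolution=16)
print(len(corners))  # 8
```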

3.3 Hash mapping

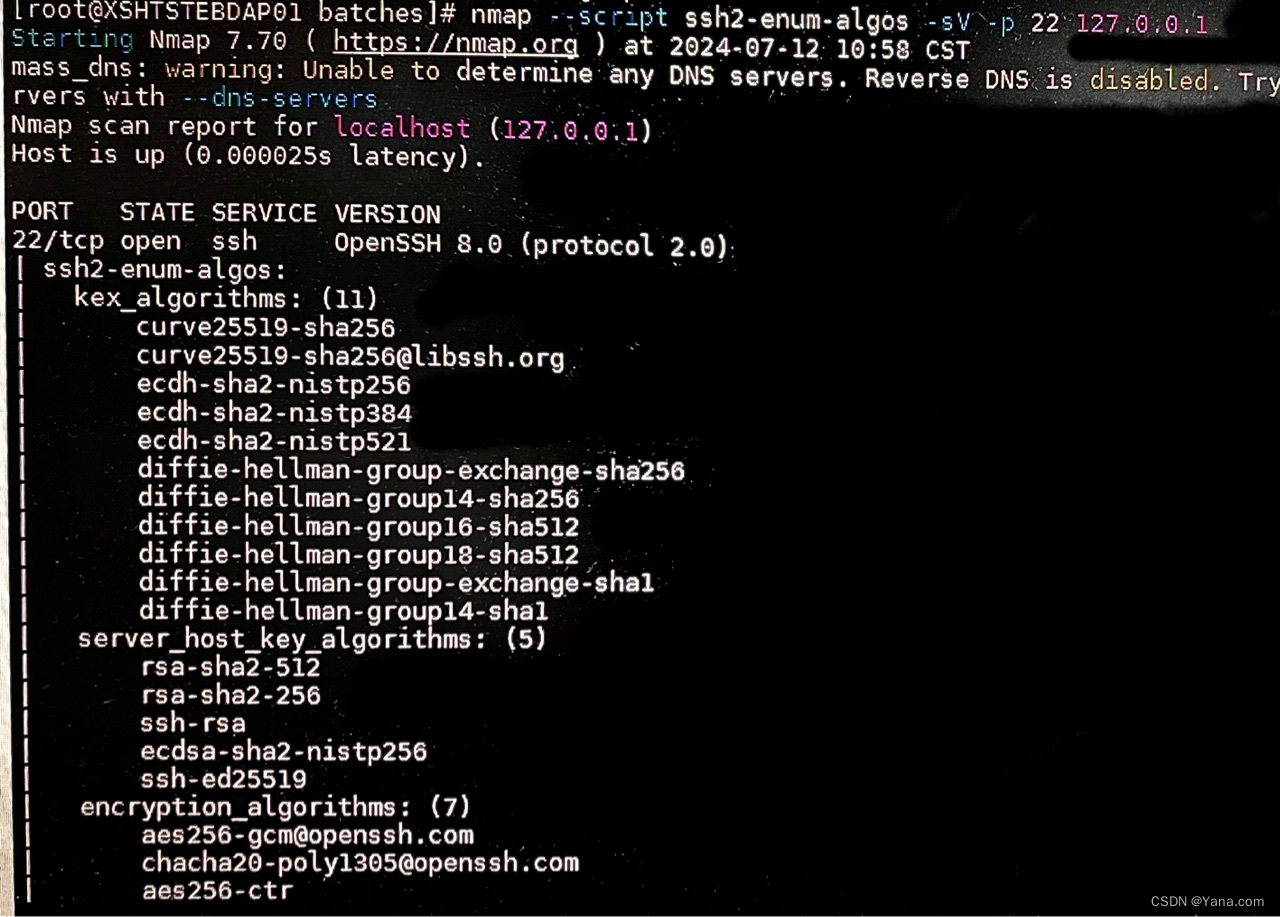

Each integer vertex of the grid corresponds to an entry in the level's hash table. The index of that entry is computed by:

$$
h(\mathbf{x}) = \left(\bigoplus_{i=1}^{d} x_i\pi_i\right) \bmod T
$$

where we choose $\pi_1 := 1$, $\pi_2 := 2654435761$, $\pi_3 := 805459861$, and $\bigoplus$ denotes bitwise XOR.

After this transformation, each integer vertex is mapped to an integer index in the hash table. That entry stores an $F$-dimensional feature vector ($F = 2$ here).

For coarse levels, where a dense grid requires fewer than $T$ parameters, i.e. $(N_l+1)^d \le T$, this mapping is 1:1. At finer levels, we use a hash function $h:\mathbb{Z}^d\to\mathbb{Z}_T$ to index into the array. This effectively treats the array as a hash table, although there is no explicit collision handling.
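The following is a minimal sketch of the per-level index computation: dense 1:1 indexing when $(N_l+1)^d \le T$, and the XOR spatial hash otherwise. Two points are assumptions not stated in the text. First, products are wrapped to unsigned 32 bits, because Python integers are unbounded while a GPU implementation would overflow. Second, the dense layout puts the first axis fastest. The function names are hypothetical.

```python
PRIMES = (1, 2654435761, 805459861)  # pi_1, pi_2, pi_3 from the text

def spatial_hash(vertex, table_size):
    """h(x) = (XOR_i x_i * pi_i) mod T, with each product wrapped to 32 bits."""
    assert len(vertex) <= len(PRIMES), "only d <= 3 constants are defined here"
    h = 0
    for xi, pi in zip(vertex, PRIMES):
        h ^= (xi * pi) & 0xFFFFFFFF  # emulate unsigned 32-bit overflow (assumption)
    return h % table_size

def table_index(vertex, resolution, table_size):
    """Dense 1:1 index on coarse levels, hashed index on fine levels."""
    d = len(vertex)
    side = resolution + 1  # number of vertices per axis
    if side ** d <= table_size:
        index, stride = 0, 1
        for xi in vertex:  # first axis varies fastest (assumed layout)
            index += xi * stride
            stride *= side
        return index
    return spatial_hash(vertex, table_size)

T = 2 ** 19
print(table_index((1, 1, 1), resolution=16, table_size=T))   # dense: 1 + 17 + 289 = 307
print(table_index((1, 1, 1), resolution=512, table_size=T))  # hashed index in [0, T)
```

Because $\pi_1 = 1$, the first coordinate passes through the hash unchanged. The other two coordinates are scrambled by the large constants before the XOR.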

3.4 Interpolation

Lastly, the feature vectors at the $2^d$ corners are $d$-linearly interpolated according to the relative position of $\mathbf{x}$ within its hypercube, i.e. the interpolation weight is $\mathbf{w}_l := \mathbf{x}_l - \lfloor\mathbf{x}_l\rfloor$, where $\mathbf{x}_l := \mathbf{x}\cdot N_l$.
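A sketch of $d$-linear interpolation using the weight $\mathbf{w}_l$ defined above. Each corner's weight is the product over axes of $w_i$ (upper corner) or $1-w_i$ (lower corner). Here the upper corner is taken as $\lfloor x\rfloor + 1$, which agrees with the ceiling except on grid lines, where its weight is 0 anyway. The function name and the `corner_value` callback are assumptions for illustration.

```python
import itertools
import math

def d_linear_interpolate(x_scaled, corner_value):
    """d-linearly interpolate values stored at the 2^d corners of a voxel.

    x_scaled is x_l = x * N_l. corner_value(vertex) returns the stored
    value (a list of F floats) at an integer vertex.
    """
    lo = [math.floor(s) for s in x_scaled]
    w = [s - l for s, l in zip(x_scaled, lo)]  # w_l = x_l - floor(x_l)
    result = None
    for bits in itertools.product((0, 1), repeat=len(x_scaled)):
        vertex = tuple(l + b for l, b in zip(lo, bits))
        weight = 1.0
        for wi, b in zip(w, bits):
            weight *= wi if b else 1.0 - wi  # upper corner: w_i, lower corner: 1 - w_i
        value = corner_value(vertex)
        if result is None:
            result = [0.0] * len(value)
        for k, v in enumerate(value):
            result[k] += weight * v
    return result

# d-linear interpolation reproduces a linear field such as sum(v) exactly.
x_l = [4.8, 8.8, 11.36]
value = d_linear_interpolate(x_l, lambda v: [float(sum(v))])
print(value)  # close to [24.96]
```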

Recall that this process takes place independently for each of the $L$ levels. The interpolated feature vectors of each level, as well as auxiliary inputs $\xi\in\mathbb{R}^E$ (such as the encoded view direction and textures in neural radiance caching), are concatenated to produce $\mathbf{y}\in\mathbb{R}^{LF+E}$. This is the encoded input $\mathrm{enc}(\mathbf{x};\theta)$ to the MLP $m(\mathbf{y};\Phi)$.
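Putting the steps together, the following is a minimal, unoptimized sketch of the full encoding. For each level it scales the input, finds the voxel corners, looks up each corner (dense or hashed), interpolates, and concatenates; the auxiliary inputs are appended at the end. The configuration ($L=4$, $T=2^{12}$, $F=2$, the four resolutions) and the small uniform initialization are assumed for illustration. Upper corners are clamped to $N_l$ so that an input of exactly 1.0 stays inside the grid, which is also an assumption. Function names are hypothetical.

```python
import itertools
import math
import random

PRIMES = (1, 2654435761, 805459861)

def table_index(vertex, resolution, table_size):
    side = resolution + 1
    if side ** len(vertex) <= table_size:  # coarse level: dense 1:1 indexing
        index, stride = 0, 1
        for xi in vertex:
            index += xi * stride
            stride *= side
        return index
    h = 0
    for xi, pi in zip(vertex, PRIMES):  # fine level: spatial hash, 32-bit wrap assumed
        h ^= (xi * pi) & 0xFFFFFFFF
    return h % table_size

def hash_encode(x, tables, resolutions, aux=()):
    """Concatenate the interpolated F-dim feature of every level, then the aux inputs."""
    out = []
    for table, n_l in zip(tables, resolutions):
        scaled = [xi * n_l for xi in x]
        lo = [math.floor(s) for s in scaled]
        w = [s - l for s, l in zip(scaled, lo)]
        feat = [0.0] * len(table[0])
        for bits in itertools.product((0, 1), repeat=len(x)):
            vertex = tuple(min(l + b, n_l) for l, b in zip(lo, bits))
            weight = math.prod(wi if b else 1.0 - wi for wi, b in zip(w, bits))
            entry = table[table_index(vertex, n_l, len(table))]
            for k in range(len(feat)):
                feat[k] += weight * entry[k]
        out.extend(feat)
    out.extend(aux)
    return out

# Hypothetical small configuration: L = 4 levels, T = 2**12 entries, F = 2, d = 3.
rng = random.Random(0)
L, T, F = 4, 2 ** 12, 2
resolutions = [16, 32, 64, 128]
tables = [[[rng.uniform(-1e-4, 1e-4) for _ in range(F)] for _ in range(T)]
          for _ in range(L)]
y = hash_encode([0.3, 0.55, 0.71], tables, resolutions, aux=[0.0, 1.0, 0.0])
print(len(y))  # L * F + E = 4 * 2 + 3 = 11
```

In a real system the tables would be trained jointly with the MLP by backpropagating through the interpolation weights.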

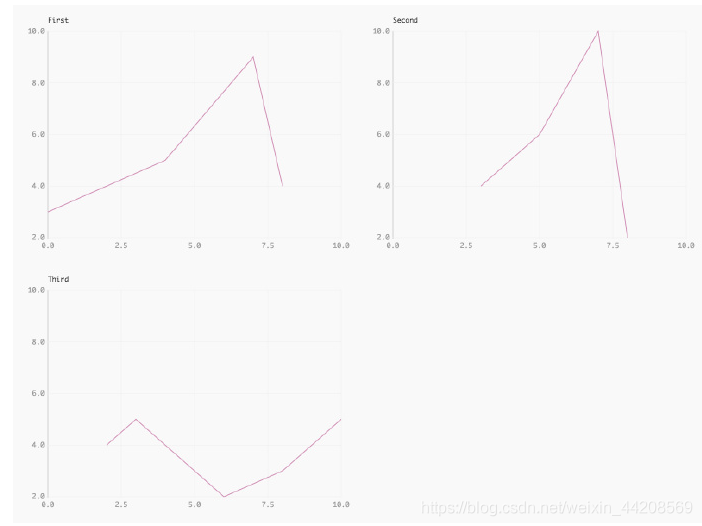

3.5 Performance vs. quality

The hyperparameters $L$ (number of levels), $F$ (number of feature dimensions), and $T$ (table size) trade off quality and performance.
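One way to see the trade-off is to count trainable encoding parameters. Every level stores at most $T$ entries of $F$ values, so $L\cdot T\cdot F$ is an upper bound; coarse levels need only $(N_l+1)^d$ entries. The settings below ($L=16$, $T=2^{19}$, $F=2$, $N_{\min}=16$, $N_{\max}=512$) and the 2-byte storage per parameter are assumptions for illustration.

```python
import math

def encoding_param_count(resolutions, table_size, feature_dim, d=3):
    """Each level stores min((N_l + 1)^d, T) entries of F values."""
    per_level = [min((n + 1) ** d, table_size) for n in resolutions]
    return sum(per_level) * feature_dim

L, T, F = 16, 2 ** 19, 2
b = math.exp((math.log(512) - math.log(16)) / (L - 1))
resolutions = [math.floor(16 * b ** l) for l in range(L)]

upper_bound = L * T * F  # every level full: 16 * 524288 * 2 = 16,777,216
actual = encoding_param_count(resolutions, T, F)
print(upper_bound, actual)
print(f"{upper_bound * 2 / 2**20:.0f} MiB at 2 bytes per parameter")  # 32 MiB
```

Raising $T$ or $F$ scales memory and lookup bandwidth roughly linearly. Raising $L$ also adds $F$ inputs per level to the MLP.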

3.6 Hash collision

At finer levels, where $(N_l+1)^d > T$, distinct grid vertices can hash to the same table entry. This is a hash collision.

When training samples collide, their gradients average. Samples rarely have equal importance to the final reconstruction. A point on a visible surface of a radiance field strongly contributes to the image, causing large changes to its table entries. In contrast, a point in empty space referring to the same entry has a smaller weight. Thus, the gradients of more important samples dominate, optimizing the aliased table entry to reflect the needs of the higher-weighted point.
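The effect described above can be illustrated with a toy one-parameter model. This is not the paper's training setup. Two colliding samples share one table entry, and each sample's weight stands in for its contribution to the final image. With a weighted squared loss, gradient descent drives the shared entry to the weighted mean of the targets, so the high-weight sample dominates. The weights, targets, and function name are hypothetical.

```python
def train_shared_entry(samples, steps=2000, lr=0.01):
    """Gradient descent on one table entry shared by colliding samples.

    samples: list of (weight, target). Loss = sum_i weight_i * (theta - target_i)^2.
    The gradient on the shared entry is the weighted average of the
    per-sample gradients.
    """
    theta = 0.0
    total = sum(w for w, _ in samples)
    for _ in range(steps):
        grad = sum(2.0 * w * (theta - t) for w, t in samples) / total
        theta -= lr * grad
    return theta

# A visible-surface sample (weight 0.95) and an empty-space sample (weight 0.05)
# collide in the same entry; their hypothetical targets are 1.0 and -1.0.
theta = train_shared_entry([(0.95, 1.0), (0.05, -1.0)])
print(round(theta, 3))  # 0.9
```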

4. Experiment on NeRF

- Model architecture: Informed by the analysis in Figure 10, our results were generated with a 1-hidden-layer density MLP and a 2-hidden-layer color MLP, both 64 neurons wide.

- Accelerated ray marching: We concentrate samples near surfaces by maintaining an occupancy grid that coarsely marks empty vs. nonempty space.

  We use three techniques with imperceptible error to optimize our implementation:

  (1) exponential stepping for large scenes,

  (2) skipping of empty space and occluded regions, and

  (3) compaction of samples into dense buffers for efficient execution.

  For more details, refer to Appendix E of the paper.
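The following is a simplified sketch of occupancy-guided ray marching. The step size grows with distance ($\Delta t = \mathrm{clamp}(t\cdot c, \Delta t_{\min}, \Delta t_{\max})$), which makes $t$ grow geometrically and serves as a stand-in for exponential stepping. Samples in cells marked empty are skipped. The kept samples are appended to one list, which acts as the compacted dense buffer. The constants `dt_min`, `dt_max` and `cone`, the set-based occupancy grid, and the function name are hypothetical and are not the paper's values. Skipping of occluded regions (e.g. early ray termination) is not modeled.

```python
def march_ray(origin, direction, occupied, grid_res, t_max,
              dt_min=1e-3, dt_max=5e-2, cone=1.0 / 256):
    """Collect sample positions along a ray inside [0, 1)^3, skipping empty cells.

    occupied: set of integer cell coordinates marked nonempty.
    Only samples in occupied cells are kept, so the returned list is
    already compacted.
    """
    samples = []
    t = 0.0
    while t < t_max:
        p = [o + t * d for o, d in zip(origin, direction)]
        if all(0.0 <= c < 1.0 for c in p):
            cell = tuple(int(c * grid_res) for c in p)
            if cell in occupied:
                samples.append(p)
        # Step grows with t (clamped), so t increases roughly geometrically.
        t += min(max(t * cone, dt_min), dt_max)
    return samples

occupied = {(4, 4, 4)}  # hypothetical 8^3 occupancy grid with one occupied cell
samples = march_ray((0.0, 0.5, 0.5), (1.0, 0.0, 0.0), occupied, grid_res=8, t_max=1.0)
print(len(samples) > 0)  # True
```

Only the kept samples would be passed to the MLP, which is where the savings come from.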
