💡💡💡All code in this column has been tested and runs successfully💡💡💡

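Visual feature pyramids have proven effective and efficient across a wide range of applications. However, existing methods concentrate on inter-layer feature interaction and neglect intra-layer feature regulation, which has been shown empirically to be beneficial. Although some methods try to learn compact intra-layer feature representations with attention mechanisms or vision transformers, they overlook the corner regions that matter for dense prediction tasks. To address this, the paper proposes the Centralized Feature Pyramid (CFP) for object detection, built on a globally explicit centralized feature regulation. Specifically, it first proposes a spatially explicit visual center scheme, in which a lightweight MLP captures global long-range dependencies and a parallel learnable visual center mechanism captures the local corner regions of the input image. On top of this, it proposes a globally centralized regulation for the commonly used feature pyramid in a top-down fashion, where the explicit visual center information obtained from the deepest intra-layer features is used to regulate the shallower features.

After introducing the main principles, this article walks step by step through adding and modifying the module code, and shares the complete modified code at the end so that it can be run directly. Beginners should be able to follow along. The aim is to help you get to grips with the YOLO family of object detectors.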

Contents

1. Principle

2. Adding EVC to YOLOv5

2.1 EVC code implementation

2.2 Add a new yaml file

2.3 Register the module

2.4 Run the program

3. Complete code

4. GFLOPs

5. Going further

6. Summary

1. Principle

Paper: Centralized Feature Pyramid for Object Detection

Official code: official code repository

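The main ideas behind Explicit Visual Center (EVC) are:

- Overall architecture: EVC consists of two modules connected in parallel. A lightweight multi-layer perceptron (MLP) captures the global long-range dependencies (the global information) of the top-level features, and a learnable visual center mechanism aggregates local regional features within the layer. The feature maps produced by the two modules are concatenated along the channel dimension and form the EVC output used for downstream recognition.

- Input feature processing: the input image first passes through a backbone (such as Modified CSP v5) that extracts a five-level feature pyramid (X0, X1, X2, X3, X4), whose spatial sizes are 1/2, 1/4, 1/8, 1/16 and 1/32 of the input image. EVC is applied to the top level of the pyramid (X4).

- Lightweight MLP module: this module consists of two residual sub-modules, one based on depthwise convolution and one based on a channel MLP. The depthwise convolution sub-module applies group normalization and a depthwise convolution to the input features, then channel scaling and DropPath, and finally adds the result back through a residual connection. The channel MLP sub-module follows the same pattern with a channel MLP in place of the depthwise convolution.

- Visual center mechanism: the learnable visual center mechanism aggregates local features by relating every pixel of the feature map to a set of learnable visual words (a codebook). It computes the distance between each pixel and each visual word, predicts features that highlight the key classes through a fully connected layer and a 1x1 convolution, and then applies channel-wise multiplication and channel-wise addition with the input features from the Stem block.

- Output feature integration: finally, the output feature maps of the two parallel modules are concatenated along the channel dimension to form the EVC output, which feeds the downstream classification and regression tasks.

By combining global and local information, EVC strengthens the representational power of the visual features, which matters for dense prediction tasks such as object detection.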
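Read off the Encoding and LVCBlock code in section 2.1, the visual center branch can be written compactly as below. The notation is introduced here for illustration and is not taken from the article: $x_i \in \mathbb{R}^C$ is the feature at pixel $i$ of the $N = H \times W$ positions, $c_k$ is the $k$-th of $K$ learnable codewords, $s_k$ is its learnable scale, and $W$, $\beta$ are the weights and bias of the fully connected layer.

```latex
\begin{aligned}
a_{ik} &= \frac{\exp\left(s_k \lVert x_i - c_k \rVert^2\right)}
               {\sum_{j=1}^{K} \exp\left(s_j \lVert x_i - c_j \rVert^2\right)}, \\
e_k &= \sum_{i=1}^{N} a_{ik}\,(x_i - c_k), \\
e &= \frac{1}{K} \sum_{k=1}^{K} \mathrm{SiLU}\left(\mathrm{BN}(e_k)\right), \\
\gamma &= \sigma\left(W e + \beta\right), \\
Z &= \mathrm{ReLU}\left(X + X \odot \gamma\right).
\end{aligned}
```

In the code the scales $s_k$ are initialized in $(-1, 0)$, so a pixel close to a codeword gets a larger assignment weight $a_{ik}$ for it. The last line is the channel-wise multiplication followed by the channel-wise addition described above.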

2. Adding EVC to YOLOv5

2.1 EVC code implementation

Key step 1: paste the code below into /yolov5-6.1/models/common.py

from functools import partial

import torch.nn.functional as F
# Assumption: timm is installed; it provides DropPath and trunc_normal_ used below.
from timm.models.layers import DropPath, trunc_normal_


class Encoding(nn.Module):
    """Soft-assigns every pixel to learnable codewords and aggregates the residuals."""

    def __init__(self, in_channels, num_codes):
        super(Encoding, self).__init__()
        # init codewords and smoothing factor
        self.in_channels, self.num_codes = in_channels, num_codes
        std = 1. / ((num_codes * in_channels) ** 0.5)
        # [num_codes, channels]
        self.codewords = nn.Parameter(
            torch.empty(num_codes, in_channels, dtype=torch.float).uniform_(-std, std), requires_grad=True)
        # [num_codes]
        self.scale = nn.Parameter(torch.empty(num_codes, dtype=torch.float).uniform_(-1, 0), requires_grad=True)

    @staticmethod
    def scaled_l2(x, codewords, scale):
        num_codes, in_channels = codewords.size()
        b = x.size(0)
        # x: (b, N, c) -> (b, N, num_codes, c)
        expanded_x = x.unsqueeze(2).expand((b, x.size(1), num_codes, in_channels))
        # codebook: (num_codes, c) -> (1, 1, num_codes, c)
        reshaped_codewords = codewords.view((1, 1, num_codes, in_channels))
        # scale: (num_codes,) -> (1, 1, num_codes), broadcast over (b, N, num_codes)
        reshaped_scale = scale.view((1, 1, num_codes))
        # scaled squared distance between every pixel and every codeword: (b, N, num_codes)
        scaled_l2_norm = reshaped_scale * (expanded_x - reshaped_codewords).pow(2).sum(dim=3)
        return scaled_l2_norm

    @staticmethod
    def aggregate(assignment_weights, x, codewords):
        num_codes, in_channels = codewords.size()
        # codebook: (num_codes, c) -> (1, 1, num_codes, c)
        reshaped_codewords = codewords.view((1, 1, num_codes, in_channels))
        b = x.size(0)
        # x: (b, N, c) -> (b, N, num_codes, c)
        expanded_x = x.unsqueeze(2).expand((b, x.size(1), num_codes, in_channels))
        # weights: (b, N, num_codes) -> (b, N, num_codes, 1)
        assignment_weights = assignment_weights.unsqueeze(3)
        # weighted residuals summed over the N pixels: (b, num_codes, c)
        encoded_feat = (assignment_weights * (expanded_x - reshaped_codewords)).sum(1)
        return encoded_feat

    def forward(self, x):
        assert x.dim() == 4 and x.size(1) == self.in_channels
        b = x.size(0)
        # [batch_size, height x width, channels]
        x = x.view(b, self.in_channels, -1).transpose(1, 2).contiguous()
        # assignment_weights: [batch_size, height x width, num_codes]
        assignment_weights = torch.softmax(self.scaled_l2(x, self.codewords, self.scale), dim=2)
        # aggregate
        encoded_feat = self.aggregate(assignment_weights, x, self.codewords)
        return encoded_feat

class Mlp(nn.Module):
    """
    Implementation of MLP with 1*1 convolutions. Input: tensor with shape [B, C, H, W]
    """

    def __init__(self, in_features, hidden_features=None,
                 out_features=None, act_layer=nn.GELU, drop=0.):
        super().__init__()
        out_features = out_features or in_features
        hidden_features = hidden_features or in_features
        self.fc1 = nn.Conv2d(in_features, hidden_features, 1)
        self.act = act_layer()
        self.fc2 = nn.Conv2d(hidden_features, out_features, 1)
        self.drop = nn.Dropout(drop)
        self.apply(self._init_weights)

    def _init_weights(self, m):
        if isinstance(m, nn.Conv2d):
            trunc_normal_(m.weight, std=.02)
            if m.bias is not None:
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        x = self.fc1(x)
        x = self.act(x)
        x = self.drop(x)
        x = self.fc2(x)
        x = self.drop(x)
        return x

# 1*1 3*3 1*1
class ConvBlock(nn.Module):
    """Bottleneck of 1x1 -> 3x3 -> 1x1 convolutions with an optional projected residual."""

    def __init__(self, in_channels, out_channels, stride=1, res_conv=False, act_layer=nn.SiLU, groups=1,
                 norm_layer=partial(nn.BatchNorm2d, eps=1e-6)):
        super(ConvBlock, self).__init__()
        self.in_channels = in_channels
        expansion = 4
        c = out_channels // expansion
        self.conv1 = Conv(in_channels, c, act=nn.SiLU())
        self.conv2 = Conv(c, c, k=3, s=stride, g=groups, act=nn.SiLU())
        self.conv3 = Conv(c, out_channels, 1, act=False)
        self.act3 = act_layer(inplace=True)
        if res_conv:
            self.residual_conv = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=1, padding=0, bias=False)
            self.residual_bn = norm_layer(out_channels)
        self.res_conv = res_conv

    def zero_init_last_bn(self):
        # conv3 is a YOLOv5 Conv wrapper, so its BatchNorm lives at conv3.bn (there is no self.bn3)
        nn.init.zeros_(self.conv3.bn.weight)

    def forward(self, x, return_x_2=True):
        residual = x
        x = self.conv1(x)
        x2 = self.conv2(x)  # if x_t_r is None else self.conv2(x + x_t_r)
        x = self.conv3(x2)
        if self.res_conv:
            residual = self.residual_conv(residual)
            residual = self.residual_bn(residual)
        x += residual
        x = self.act3(x)
        if return_x_2:
            return x, x2
        else:
            return x

class Mean(nn.Module):
    def __init__(self, dim, keep_dim=False):
        super(Mean, self).__init__()
        self.dim = dim
        self.keep_dim = keep_dim

    def forward(self, input):
        return input.mean(self.dim, self.keep_dim)

class LVCBlock(nn.Module):
    """Learnable visual center: codebook encoding that yields a per-channel gate for the input."""

    def __init__(self, in_channels, out_channels, num_codes, channel_ratio=0.25, base_channel=64):
        super(LVCBlock, self).__init__()
        self.out_channels = out_channels
        self.num_codes = num_codes
        self.conv_1 = ConvBlock(in_channels=in_channels, out_channels=in_channels, res_conv=True, stride=1)
        self.LVC = nn.Sequential(
            Conv(in_channels, in_channels, 1, act=nn.SiLU()),
            Encoding(in_channels=in_channels, num_codes=num_codes),  # (b, num_codes, c)
            nn.BatchNorm1d(num_codes),
            nn.SiLU(inplace=True),
            Mean(dim=1))  # average over codewords -> (b, c)
        self.fc = nn.Sequential(nn.Linear(in_channels, in_channels), nn.Sigmoid())

    def forward(self, x):
        x = self.conv_1(x, return_x_2=False)
        en = self.LVC(x)
        gam = self.fc(en)  # per-channel scaling factor in (0, 1)
        b, in_channels, _, _ = x.size()
        y = gam.view(b, in_channels, 1, 1)
        x = F.relu_(x + x * y)  # channel-wise multiplication, then channel-wise addition
        return x

class GroupNorm(nn.GroupNorm):
    """
    Group Normalization with 1 group.
    Input: tensor in shape [B, C, H, W]
    """

    def __init__(self, num_channels, **kwargs):
        super().__init__(1, num_channels, **kwargs)

class DWConv_LMLP(nn.Module):
    """Depthwise Conv + Conv"""

    def __init__(self, in_channels, out_channels, ksize, stride=1, act="silu"):
        super().__init__()
        self.dconv = Conv(
            in_channels,
            in_channels,
            k=ksize,
            s=stride,
            g=in_channels,
        )
        self.pconv = Conv(
            in_channels, out_channels, k=1, s=1, g=1
        )

    def forward(self, x):
        x = self.dconv(x)
        return self.pconv(x)

# LightMLPBlock
class LightMLPBlock(nn.Module):
    """Two residual sub-blocks: depthwise conv, then channel MLP. Needs in_channels == out_channels."""

    def __init__(self, in_channels, out_channels, ksize=1, stride=1, act="silu",
                 mlp_ratio=4., drop=0., act_layer=nn.GELU,
                 use_layer_scale=True, layer_scale_init_value=1e-5, drop_path=0.,
                 norm_layer=GroupNorm):  # act_layer=nn.GELU,
        super().__init__()
        # note: the depthwise conv is built with ksize=1 regardless of the ksize argument
        self.dw = DWConv_LMLP(in_channels, out_channels, ksize=1, stride=1, act="silu")
        self.linear = nn.Linear(out_channels, out_channels)  # learnable position embedding (not used in forward)
        self.out_channels = out_channels
        self.norm1 = norm_layer(in_channels)
        self.norm2 = norm_layer(in_channels)
        mlp_hidden_dim = int(in_channels * mlp_ratio)
        self.mlp = Mlp(in_features=in_channels, hidden_features=mlp_hidden_dim, act_layer=nn.GELU,
                       drop=drop)
        self.drop_path = DropPath(drop_path) if drop_path > 0. \
            else nn.Identity()
        self.use_layer_scale = use_layer_scale
        if use_layer_scale:
            self.layer_scale_1 = nn.Parameter(
                layer_scale_init_value * torch.ones(out_channels), requires_grad=True)
            self.layer_scale_2 = nn.Parameter(
                layer_scale_init_value * torch.ones(out_channels), requires_grad=True)

    def forward(self, x):
        if self.use_layer_scale:
            x = x + self.drop_path(self.layer_scale_1.unsqueeze(-1).unsqueeze(-1) * self.dw(self.norm1(x)))
            x = x + self.drop_path(self.layer_scale_2.unsqueeze(-1).unsqueeze(-1) * self.mlp(self.norm2(x)))
        else:
            x = x + self.drop_path(self.dw(self.norm1(x)))
            x = x + self.drop_path(self.mlp(self.norm2(x)))
        return x

# EVCBlock
class EVCBlock(nn.Module):
    """Stem, then LVCBlock and LightMLPBlock in parallel, concatenated and fused by a 1x1 conv."""

    def __init__(self, in_channels, out_channels, channel_ratio=4, base_channel=16):
        super().__init__()
        expansion = 2
        ch = out_channels * expansion
        # Stem stage: get the feature maps by conv block (copied from resnet.py), applied before the two branches
        self.conv1 = Conv(in_channels, in_channels, k=3, act=nn.SiLU())
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)  # stride 1, keeps the spatial size
        # LVC
        self.lvc = LVCBlock(in_channels=in_channels, out_channels=out_channels, num_codes=64)  # c1 value not settled yet
        # LightMLPBlock
        self.l_MLP = LightMLPBlock(in_channels, out_channels, ksize=3, stride=1, act="silu", act_layer=nn.GELU,
                                   mlp_ratio=4., drop=0.,
                                   use_layer_scale=True, layer_scale_init_value=1e-5, drop_path=0.,
                                   norm_layer=GroupNorm)
        self.cnv1 = nn.Conv2d(ch, out_channels, kernel_size=1, stride=1, padding=0)

    def forward(self, x):
        x1 = self.maxpool((self.conv1(x)))
        # LVCBlock
        x_lvc = self.lvc(x1)
        # LightMLPBlock
        x_lmlp = self.l_MLP(x1)
        # concat
        x = torch.cat((x_lvc, x_lmlp), dim=1)
        x = self.cnv1(x)
        return x

EVCBlock processes an image as follows:

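- Input image processing: the input image first passes through a backbone (such as Modified CSP v5) that extracts a five-level feature pyramid (X0, X1, X2, X3, X4), whose spatial sizes are 1/2, 1/4, 1/8, 1/16 and 1/32 of the input image.

- Lightweight MLP module: the top-level feature map (X4) is fed to the lightweight MLP module, which consists of a depthwise-convolution residual sub-module and a channel-MLP residual sub-module. The depthwise convolution sub-module applies group normalization and a depthwise convolution to the input features, then channel scaling and DropPath, and finally adds the result back through a residual connection.

- Visual center mechanism: the top-level feature map (X4) is fed to the visual center mechanism at the same time. It aggregates local features by relating every pixel of the feature map to a set of learnable visual words, in three steps:

  - Compute the distance between each pixel and each visual word.

  - Predict features that highlight the key classes through a fully connected layer and a 1x1 convolution.

  - Apply channel-wise multiplication and channel-wise addition with the input features from the Stem block.

- Feature integration: the output feature maps of the lightweight MLP module and the visual center mechanism are concatenated along the channel dimension to form the EVC output features.

- Downstream task processing: the final EVC output feature map is used for the downstream classification and regression tasks. It strengthens the representational power of the visual features and improves performance on dense prediction tasks such as object detection.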
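The distance and aggregation steps of the visual center mechanism can be tried on a toy example without PyTorch. The sketch below is a plain-Python illustration, not the code used in the model: `soft_assign` and `encode` are names chosen here, and the feature vectors, codewords and scales are made-up values.

```python
import math


def soft_assign(x, codewords, scale):
    """Softmax over codewords of scale[k] * ||x - codewords[k]||^2 for one feature vector."""
    logits = [s * sum((xi - ci) ** 2 for xi, ci in zip(x, c))
              for c, s in zip(codewords, scale)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def encode(features, codewords, scale):
    """Aggregate weighted residuals: e_k = sum_i a_ik * (x_i - c_k)."""
    dim = len(codewords[0])
    encoded = [[0.0] * dim for _ in codewords]
    for x in features:
        weights = soft_assign(x, codewords, scale)
        for k, (c, w) in enumerate(zip(codewords, weights)):
            for d in range(dim):
                encoded[k][d] += w * (x[d] - c[d])
    return encoded


# Toy data (made up for illustration): 3 pixels with 2 channels, 2 codewords.
features = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
codewords = [[0.0, 0.0], [1.0, 1.0]]
scale = [-1.0, -1.0]  # negative, so nearer codewords receive larger weights

weights = soft_assign(features[0], codewords, scale)
encoded = encode(features, codewords, scale)
print("weights for pixel 0:", weights)
print("encoded residuals:", encoded)
```

Pixel 0 coincides with the first codeword, so most of its weight goes there. The Encoding class in section 2.1 does the same computation for all pixels and codewords at once with broadcasting.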

2.2 Add a new yaml file

Key step 2: create a new file yolov5_EVC.yaml under /yolov5-6.1/models and copy the code below into it

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
# EVCBlock is inserted as layer 12, so every later layer sits one index higher than in the stock
# yolov5 head. The absolute references below (15 and 18, 21, 24) account for that shift.
head:
  [[-1, 1, Conv, [512, 1, 1]],  # 10
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # 11
   [-1, 1, EVCBlock, [512]],  # 12
   [[-1, 6], 1, Concat, [1]],  # 13 cat backbone P4
   [-1, 3, C3, [512, False]],  # 14

   [-1, 1, Conv, [256, 1, 1]],  # 15
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # 16
   [[-1, 4], 1, Concat, [1]],  # 17 cat backbone P3
   [-1, 3, C3, [256, False]],  # 18 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],  # 19
   [[-1, 15], 1, Concat, [1]],  # 20 cat head P4
   [-1, 3, C3, [512, False]],  # 21 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],  # 22
   [[-1, 10], 1, Concat, [1]],  # 23 cat head P5
   [-1, 3, C3, [1024, False]],  # 24 (P5/32-large)

   [[18, 21, 24], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]

Note: this article only adds the module on top of the base yolov5 configuration. To add it to yolov5n/s/m/l/x, you only need to set the corresponding depth_multiple and width_multiple.

# YOLOv5n
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.25  # layer channel multiple

# YOLOv5s
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

# YOLOv5m
depth_multiple: 0.67  # model depth multiple
width_multiple: 0.75  # layer channel multiple

# YOLOv5l
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple

# YOLOv5x
depth_multiple: 1.33  # model depth multiple
width_multiple: 1.25  # layer channel multiple

2.3 Register the module

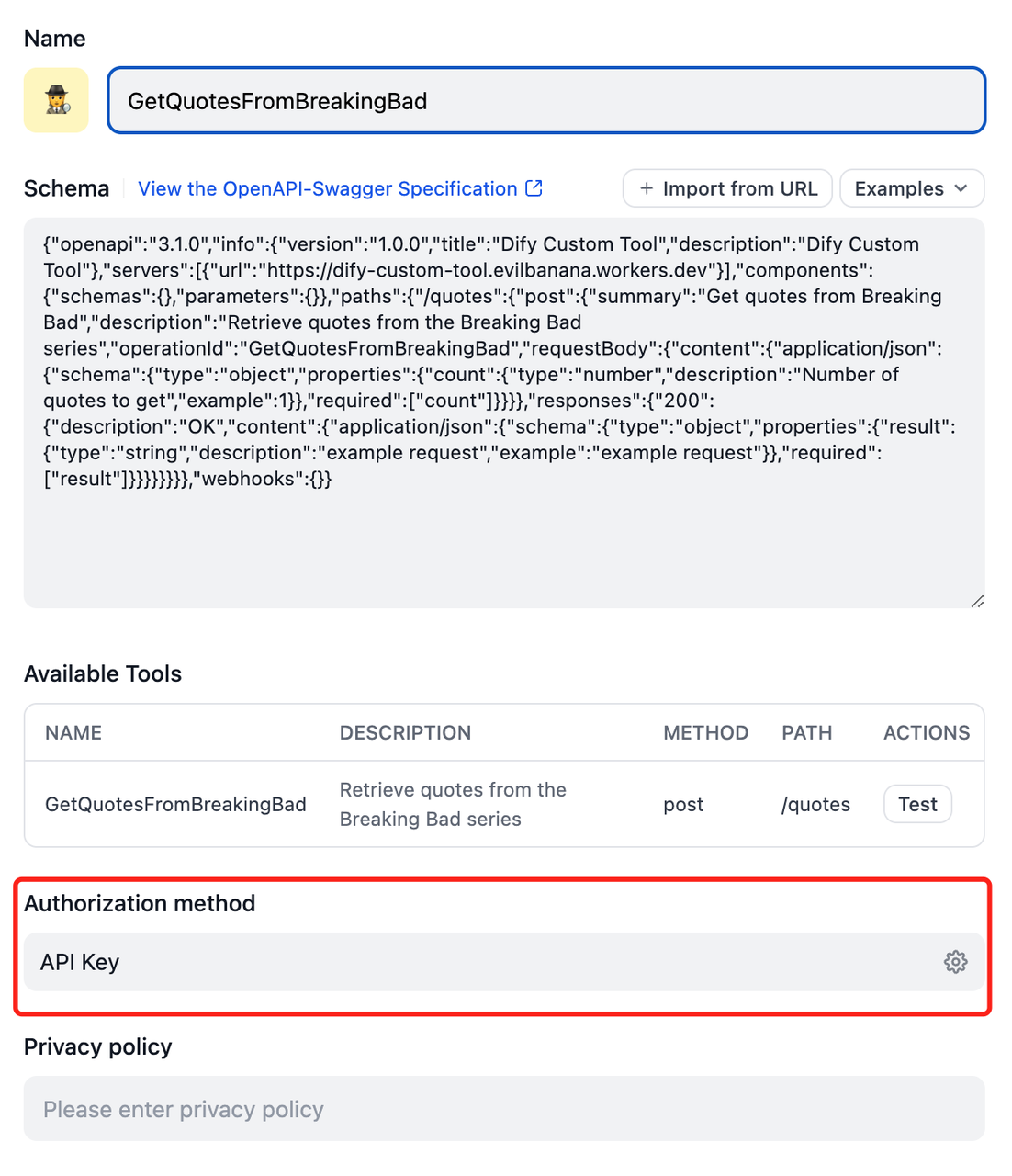

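Key step 3: register the module in the parse_model function of yolo.py by adding an "EVCBlock" branch:

elif m is EVCBlock:
    c1, c2 = ch[f], args[0]
    if c2 != no:  # scale by width_multiple like the stock blocks, so c2 still equals c1 on n/s/m/x
        c2 = make_divisible(c2 * gw, 8)
    args = [c1, c2]

2.4 Run the program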
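Before launching a scaled variant, it can be worth checking the channel counts that EVCBlock will see. The block adds its branches back onto the input and concatenates two maps before a 1x1 convolution that expects `2 * out_channels`, so it only works when its input and output channel counts are equal. The sketch below is a standalone approximation: `make_divisible` re-implements the round-up-to-a-multiple-of-8 rule that YOLOv5 applies in parse_model, while `scaled_channels`, `VARIANTS` and `YAML_CHANNELS` are names made up for this example.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor (same rounding rule as YOLOv5's helper)."""
    return math.ceil(x / divisor) * divisor


def scaled_channels(channels, width_multiple):
    """Channel count a layer ends up with after width scaling."""
    return make_divisible(channels * width_multiple, 8)


# width_multiple per variant, taken from the settings listed in section 2.2
VARIANTS = {"n": 0.25, "s": 0.50, "m": 0.75, "l": 1.0, "x": 1.25}

YAML_CHANNELS = 512  # value written for both Conv (layer 10) and EVCBlock in yolov5_EVC.yaml

report = {}
for name, gw in VARIANTS.items():
    c1 = scaled_channels(YAML_CHANNELS, gw)  # what EVCBlock receives from the previous layers
    report[name] = {
        "c1": c1,
        "raw_c2_ok": c1 == YAML_CHANNELS,                          # c2 passed through unscaled
        "scaled_c2_ok": c1 == scaled_channels(YAML_CHANNELS, gw),  # c2 scaled the same way as c1
    }

for name, row in report.items():
    print(name, row)
```

With an unscaled c2 only the l variant lines up. Scaling c2 by width_multiple inside the EVCBlock branch of parse_model keeps c1 and c2 equal for every variant.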

In train.py, set the cfg argument to the path of yolov5_EVC.yaml.

An absolute path is recommended so that the file is always found.
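As an alternative to editing the default inside train.py, the same path can be passed on the command line through the `--cfg` option. A minimal sketch, assuming the checkout lives in a folder named `yolov5-6.1` relative to the current directory (a placeholder location; adjust it to your machine):

```python
from pathlib import Path

# Placeholder location of the YOLOv5 checkout; replace with your own.
repo_root = Path("yolov5-6.1")

# resolve() turns the relative path into an absolute one, whatever the working directory is.
cfg_path = (repo_root / "models" / "yolov5_EVC.yaml").resolve()

# Command line equivalent to changing the cfg default in train.py.
command = ["python", "train.py", "--cfg", str(cfg_path)]
print(" ".join(command))
```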

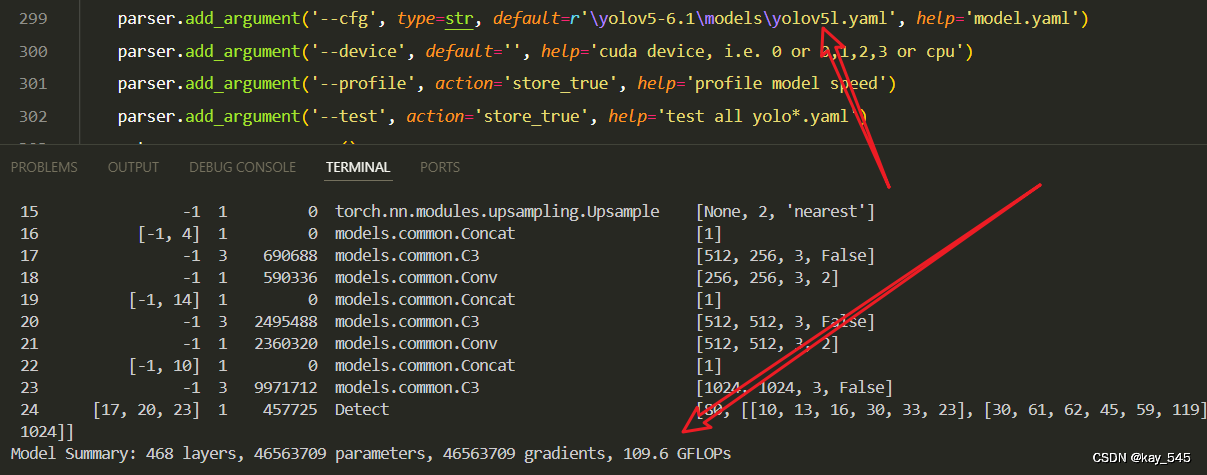

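🚀Run the program. If the printed model summary lists the EVCBlock layer and training starts, the module was added successfully🚀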

3. Complete code

https://pan.baidu.com/s/1NMCYG0Wh9lmo8mFdnhiqsw?pwd=ojhm

Extraction code: ojhm. If the link has expired, leave a comment and it will be updated as soon as the comment is seen.

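4. GFLOPs

For how GFLOPs are calculated, see: 100 Algorithm Engineer Interview Questions | Convolution Basics: Convolution

GFLOPs before the improvement

GFLOPs after the improvement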
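To get a feel for where the extra cost comes from, the convolutions inside EVCBlock can be counted by hand. The sketch below uses the usual estimate of one multiply-accumulate (MAC) per kernel weight per output position and 2 FLOPs per MAC, and it ignores bias, normalization and activation. `conv_macs` and `gflops` are hypothetical helper names, and the 640x640 input size is an assumption; the numbers are per-layer arithmetic, not the totals YOLOv5 prints.

```python
def conv_macs(c_in, c_out, kernel, h_out, w_out, groups=1):
    """Multiply-accumulate count of one 2D convolution, ignoring bias, BN and activation."""
    return c_out * h_out * w_out * (c_in // groups) * kernel * kernel


def gflops(macs):
    """Convert MACs to GFLOPs, counting one multiply plus one add per MAC."""
    return 2 * macs / 1e9


# Assumed setting: 640x640 input, so the stride-16 map where EVCBlock sits is 40x40.
H = W = 640 // 16

fuse_macs = conv_macs(c_in=1024, c_out=512, kernel=1, h_out=H, w_out=W)  # cnv1 in EVCBlock
stem_macs = conv_macs(c_in=512, c_out=512, kernel=3, h_out=H, w_out=W)   # conv1 in EVCBlock

print(f"cnv1:  {gflops(fuse_macs):.2f} GFLOPs")
print(f"conv1: {gflops(stem_macs):.2f} GFLOPs")
```

The 3x3 stem convolution dominates here because its cost grows with the square of the kernel size, which is one reason EVC is applied to a single pyramid level rather than to all of them.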

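5. Going further

EVC can be combined with changes to the loss function or the convolution modules for multiple stacked improvements.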

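6. Summary

Explicit Visual Center (EVC) is a visual feature extraction mechanism that combines global and local information. It processes the top-level image features with two parallel modules: a lightweight multi-layer perceptron (MLP) module that captures global long-range dependencies, and a learnable visual center mechanism that aggregates local regional features. The lightweight MLP module consists of residual sub-modules based on depthwise convolution and on a channel MLP, while the visual center mechanism highlights the features of key classes by computing the distance between every pixel of the feature map and a set of learnable visual words. Finally, the output feature maps of the two modules are concatenated along the channel dimension, and the resulting feature map is used for downstream recognition. This strengthens the representational power of the visual features, particularly for dense prediction tasks.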