Kubernetes Cluster Performance Testing: Setting Up a kubemark Cluster

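Kubemark is the official Kubernetes tool for performance-testing K8s clusters. It simulates a K8s cluster (the Kubemark cluster) without being constrained by physical resources, so the cluster size it can test is much larger than that of a real cluster. In this cluster the master runs on real machines, while all nodes are Hollow nodes. Hollow nodes run the real K8s programs but never call Docker. A test therefore exercises the complete K8s API call path without actually creating pods.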

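Kubemark runs E2E tests against the simulated Kubemark cluster to obtain cluster performance metrics. Data from a Kubemark cluster deviates slightly from that of a real cluster, but it is still representative of real-cluster behavior. For specific figures, see "Updates to Performance and Scalability in Kubernetes 1.3 – 2,000 node 60,000 pod clusters". Kubemark can therefore be used to run E2E tests directly against a real cluster, and so to performance-test our own real clusters.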

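kubemark consists of two parts:

- A real kubemark master control plane, which can be single-node or multi-node.

- A set of Hollow nodes registered with the kubemark cluster. These are usually simulated by Pods running in another k8s cluster (the external cluster). Each Pod's IP becomes the IP of the corresponding Hollow node in the kubemark cluster.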

Note:

The external cluster and the kubemark cluster above can also be one and the same cluster.

Compiling kubemark and Building the Image

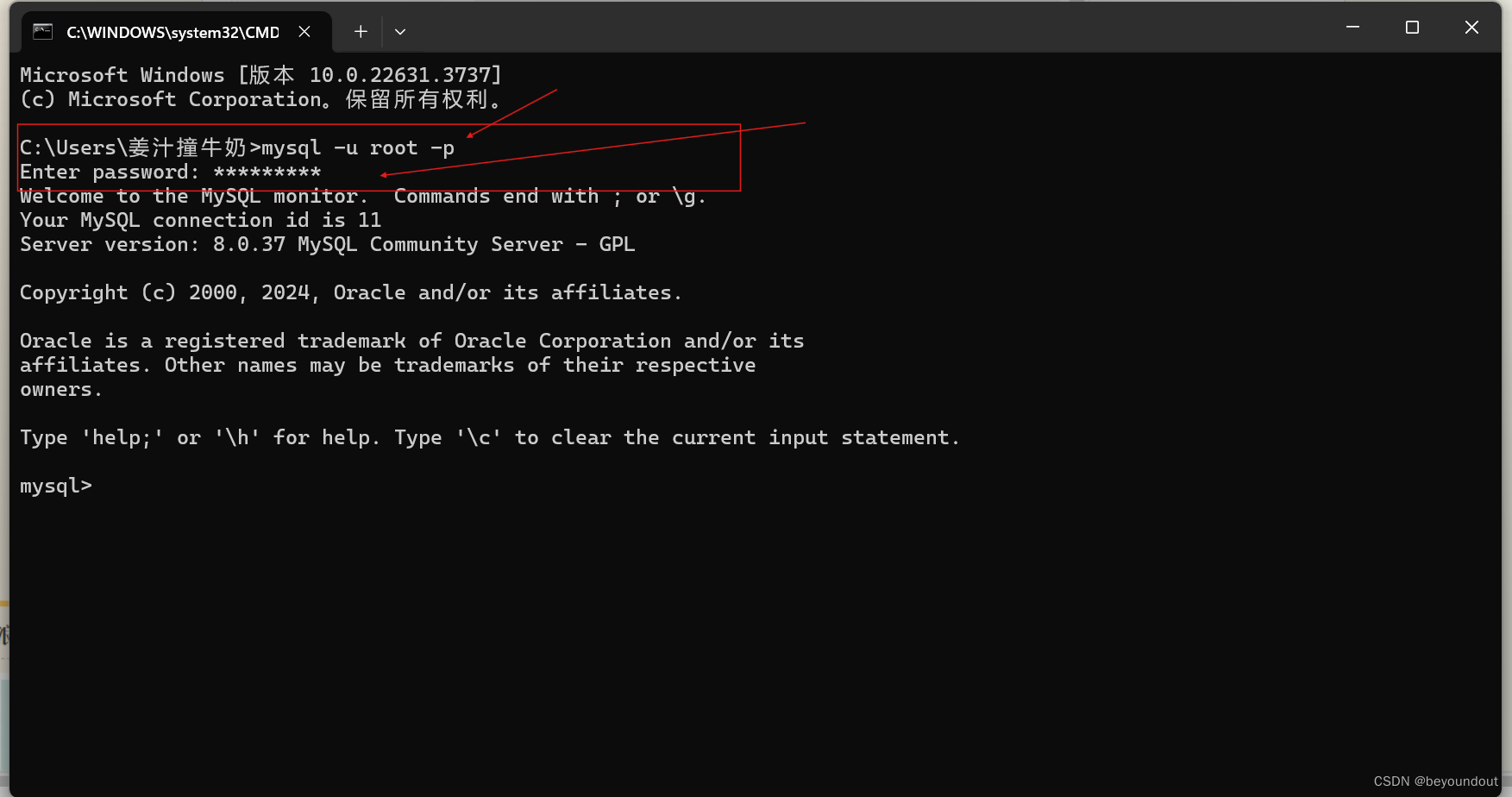

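You can use a prebuilt kubemark image published online or build your own. The kubemark source lives in the kubernetes project, and compiling it and building the kubemark image is the first step in setting up a kubemark cluster.

Prepare a Go environment with docker installed. If you are on a network in mainland China, configure a GOPROXY and a docker registry mirror yourself. Then run: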

mkdir -p go/src/k8s.io/
cd go/src/k8s.io/
# Clone the kubernetes source
git clone https://github.com/kubernetes/kubernetes.git
cd kubernetes
# Check out the target branch/tag you need
git checkout v1.19.0 -f
# Compile
make WHAT='cmd/kubemark'
cp _output/bin/kubemark cluster/images/kubemark/
cd cluster/images/kubemark/
# Build the image: this just copies the compiled kubemark binary into a base image; edit the Dockerfile as needed
sudo make build

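Note:

By default, the kubemark image build pulls its base image from the gcr.io registry. That registry cannot be reached from mainland China without a proxy. In testing, the base image also turned out to be heavily stripped down, which makes it inconvenient to exec into the container for troubleshooting later. You can switch to another base image instead, for example centos:7.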

The resulting image is staging-k8s.gcr.io/kubemark, which you can see with docker images:

test@ubuntu:~$ sudo docker images
REPOSITORY                    TAG      IMAGE ID       CREATED       SIZE
lldhsds/kubemark              1.19.0   e38978301036   3 hours ago   116MB
staging-k8s.gcr.io/kubemark   1.19.0   e38978301036   3 hours ago   116MB
staging-k8s.gcr.io/kubemark   latest   e38978301036   3 hours ago   116MB

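This image is used to run the pod containers that simulate k8s nodes. Once it is built, package it as a tar archive and import it on every node of the external k8s cluster.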

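Because the build pulls its base image from gcr by default, and that image cannot be pulled from within China, you can edit the Dockerfile to use a different base image: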

[root@k8s-master kubemark]# pwd
/root/go/src/k8s.io/kubernetes/cluster/images/kubemark
# Edit the Dockerfile to build the kubemark image on a CentOS 7.0 base image
[root@k8s-master kubemark]# cat Dockerfile
FROM centos:centos7.0.1406
COPY kubemark /kubemark
# Build the image
[root@k8s-master kubemark]# IMAGE_TAG=v1.19.0 make build
[root@k8s-master kubemark]# docker images | grep kubemark
staging-k8s.gcr.io/kubemark   v1.19.0   b3c808cf091c   3 hours ago   309MB

Hands-on: Setting Up the kubemark Cluster

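Prepare two K8S environments:

- kubemark cluster: the cluster under performance test; hollow nodes will show up in it.

- external cluster: the cluster that runs pods named hollow-node-*. These pods register as nodes of the kubemark cluster using the kubemark cluster's kubeconfig file.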

The steps to create the hollow nodes are as follows:

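Note:

Some of the configuration files used below come from the source path kubernetes/test/kubemark/resources/, and they may differ between versions. The deployment in this article trims parts of those upstream files.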

Create the kubemark namespace in the external cluster:

[root@k8s-master ~]# kubectl create ns kubemark

Create the configuration in the external cluster:

# Create the configmap in the external cluster
[root@k8s-master ~]# kubectl create configmap node-configmap -n kubemark --from-literal=content.type="test-cluster"

Prepare the kubemark cluster's kubeconfig file, kubeconfig.kubemark:

# Create a secret in the external cluster; kubeconfig.kubemark is the kubemark cluster's kubeconfig file
[root@k8s-master ~]# kubectl create secret generic kubeconfig --type=Opaque --namespace=kubemark --from-file=kubelet.kubeconfig=kubeconfig.kubemark --from-file=kubeproxy.kubeconfig=kubeconfig.kubemark
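To see what the secret above contains, the following Python sketch builds an equivalent Secret manifest by hand. Both keys hold the same base64-encoded kubemark kubeconfig, and the hollow-kubelet and hollow-proxy containers later read them from /kubeconfig. The function name `kubeconfig_secret` and the script layout are illustrative assumptions, not part of kubemark. `kubectl create secret ... --dry-run=client -o yaml` prints a comparable manifest without writing any code.

```python
import base64
import json


def kubeconfig_secret(kubeconfig_text: str, namespace: str = "kubemark") -> dict:
    """Build a Secret manifest with the same content as the
    `kubectl create secret generic kubeconfig --from-file=...` command."""
    encoded = base64.b64encode(kubeconfig_text.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "kubeconfig", "namespace": namespace},
        "type": "Opaque",
        # The same kubemark kubeconfig under both keys: hollow-kubelet uses
        # kubelet.kubeconfig, hollow-proxy uses kubeproxy.kubeconfig.
        "data": {
            "kubelet.kubeconfig": encoded,
            "kubeproxy.kubeconfig": encoded,
        },
    }


if __name__ == "__main__":
    # Reads kubeconfig.kubemark from the current directory if present,
    # otherwise falls back to a placeholder so the script still runs.
    try:
        with open("kubeconfig.kubemark", encoding="utf-8") as f:
            text = f.read()
    except FileNotFoundError:
        text = "apiVersion: v1\nkind: Config\n"  # placeholder, not a usable kubeconfig
    print(json.dumps(kubeconfig_secret(text), indent=2))
```

kubectl accepts JSON manifests, so the output can be redirected to a file and applied with `kubectl apply -f`.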

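On every node of the external k8s cluster, import the images kubemark needs. These are the kubemark image built above plus the busybox image, which the init container uses: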

# Docker runtime
docker load -i busybox.tar
docker load -i kubemark-v1.19.0.tar
# containerd runtime (here importing the v1.27.6 kubemark build)
ctr -n=k8s.io images import busybox.tar
ctr -n=k8s.io images import kubemark-v1.27.6.tar
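Docker and containerd nodes need different import commands. A small Python sketch can print the right command lines for a given runtime, so the same list of tarballs can be reused across mixed external nodes. The helper `import_commands` and the `IMPORT_CMD` table are hypothetical; the commands themselves are exactly the ones listed above.

```python
import shlex

# Image-import commands used above, keyed by container runtime.
# For containerd, images must land in the k8s.io namespace, which is
# the namespace the CRI plugin (and therefore the kubelet) reads from.
IMPORT_CMD = {
    "docker": ["docker", "load", "-i"],
    "containerd": ["ctr", "-n=k8s.io", "images", "import"],
}


def import_commands(runtime: str, tarballs: list) -> list:
    """Return one shell command line per image tarball for the given runtime."""
    try:
        base = IMPORT_CMD[runtime]
    except KeyError:
        raise ValueError(f"unsupported runtime: {runtime!r}") from None
    return [shlex.join(base + [tar]) for tar in tarballs]


if __name__ == "__main__":
    # Run the printed commands on every node of the external cluster.
    for line in import_commands("containerd", ["busybox.tar", "kubemark-v1.27.6.tar"]):
        print(line)
```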

Prepare hollow-node.yaml, the configuration file that creates the hollow nodes:

The following YAML was tested and works on k8s 1.19.0 + flannel:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hollow-node
  namespace: kubemark
  labels:
    name: hollow-node
spec:
  replicas: 2
  selector:
    matchLabels:
      name: hollow-node
  template:
    metadata:
      labels:
        name: hollow-node
    spec:
      nodeSelector:
        name: hollow-node
      initContainers:
      - name: init-inotify-limit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ['sysctl', '-w', 'fs.inotify.max_user_instances=524288']
        securityContext:
          privileged: true
      volumes:
      - name: kubeconfig-volume
        secret:
          secretName: kubeconfig
      containers:
      - name: hollow-kubelet
        image: staging-k8s.gcr.io/kubemark:v1.19.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 4194
        - containerPort: 10250
        - containerPort: 10255
        env:
        - name: CONTENT_TYPE
          valueFrom:
            configMapKeyRef:
              name: node-configmap
              key: content.type
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        command:
        - /bin/sh
        - -c
        - /kubemark --morph=kubelet --name=$(NODE_NAME) --kubeconfig=/kubeconfig/kubelet.kubeconfig --alsologtostderr --v=2
        volumeMounts:
        - name: kubeconfig-volume
          mountPath: /kubeconfig
          readOnly: true
        securityContext:
          privileged: true
      - name: hollow-proxy
        image: staging-k8s.gcr.io/kubemark:v1.19.0
        imagePullPolicy: IfNotPresent
        env:
        - name: CONTENT_TYPE
          valueFrom:
            configMapKeyRef:
              name: node-configmap
              key: content.type
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        command:
        - /bin/sh
        - -c
        - /kubemark --morph=proxy --name=$(NODE_NAME) --use-real-proxier=false --kubeconfig=/kubeconfig/kubeproxy.kubeconfig --alsologtostderr --v=2
        volumeMounts:
        - name: kubeconfig-volume
          mountPath: /kubeconfig
          readOnly: true
      tolerations:
      - key: key
        value: value
        effect: NoSchedule
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: name
                operator: In
                values:
                - hollow-node

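Note:

In the kubemark command lines above, the $(CONTENT_TYPE) argument was removed: kubemark did not recognize it and the pods failed to start. The CONTENT_TYPE environment variable is still defined but is no longer used.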
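Kubernetes expands `$(VAR)` references in a container's command before `/bin/sh` ever sees the string. The Python sketch below mimics that substitution. It assumes the original command line ended with `$(CONTENT_TYPE)`, as the upstream template appears to do, and shows how the configmap value test-cluster then reaches kubemark as a bare extra argument. That is one plausible reason kubemark rejected it. The helper `expand` is hypothetical and simplified: it does not handle the `$$` escape that Kubernetes also supports.

```python
import re

# $(NAME) references of the kind Kubernetes expands in command/args.
# Simplified: only C-identifier-style names, and no "$$" escape handling.
_VAR_REF = re.compile(r"\$\(([A-Za-z_][A-Za-z0-9_]*)\)")


def expand(command: str, env: dict) -> str:
    """Substitute $(NAME) with env[NAME]; references to undefined variables
    are left unchanged, as Kubernetes documents for dependent variables."""
    return _VAR_REF.sub(lambda m: env.get(m.group(1), m.group(0)), command)


if __name__ == "__main__":
    env = {"NODE_NAME": "hollow-node-7b9b96674c-4kp7r", "CONTENT_TYPE": "test-cluster"}
    # Hypothetical command line that still contains $(CONTENT_TYPE).
    cmd = ("/kubemark --morph=kubelet --name=$(NODE_NAME) "
           "--kubeconfig=/kubeconfig/kubelet.kubeconfig $(CONTENT_TYPE) --v=2")
    print(expand(cmd, env))
    # prints: /kubemark --morph=kubelet --name=hollow-node-7b9b96674c-4kp7r --kubeconfig=/kubeconfig/kubelet.kubeconfig test-cluster --v=2
```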

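If the cluster is accessed through a domain name, add the following to the pod template spec (spec.template.spec) in hollow-node.yaml:

spec:
  hostAliases:
  - ip: "10.233.0.1"      # for a high-availability cluster, use the cluster VIP
    hostnames:
    - "vip.sanyi.com"     # the cluster domain name
  nodeSelector:
    name: hollow-node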
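hostAliases is a PodSpec field, so it must sit under the Deployment's pod template rather than the Deployment's top-level spec. The kubelet writes these entries into each pod's /etc/hosts. The Python sketch below shows where the entry lands when patching a manifest loaded as a dict. The helper name `add_host_alias` is hypothetical, and the IP and domain are the example values above.

```python
def add_host_alias(deployment: dict, ip: str, hostnames: list) -> dict:
    """Append a hostAliases entry to a Deployment's pod template
    (spec.template.spec), creating intermediate keys if missing."""
    pod_spec = (deployment.setdefault("spec", {})
                .setdefault("template", {})
                .setdefault("spec", {}))
    pod_spec.setdefault("hostAliases", []).append(
        {"ip": ip, "hostnames": list(hostnames)})
    return deployment


if __name__ == "__main__":
    import json
    manifest = {"spec": {"template": {"spec": {"nodeSelector": {"name": "hollow-node"}}}}}
    # Example values from the snippet above; substitute your own VIP and domain.
    add_host_alias(manifest, "10.233.0.1", ["vip.sanyi.com"])
    print(json.dumps(manifest["spec"]["template"]["spec"], indent=2))
```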

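The following YAML was tested and works on k8s 1.27.6 + flannel. The main differences are the extra host volumes mounted (/run/containerd and /var/log) and the kubemark arguments (--alsologtostderr is dropped):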

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hollow-node
  namespace: kubemark
  labels:
    name: hollow-node
spec:
  replicas: 3
  selector:
    matchLabels:
      name: hollow-node
  template:
    metadata:
      labels:
        name: hollow-node
    spec:
      nodeSelector:
        name: hollow-node
      initContainers:
      - name: init-inotify-limit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ['sysctl', '-w', 'fs.inotify.max_user_instances=524288']
        securityContext:
          privileged: true
      volumes:
      - name: kubeconfig-volume
        secret:
          secretName: kubeconfig
      - name: containerd
        hostPath:
          path: /run/containerd
      - name: logs-volume
        hostPath:
          path: /var/log
      containers:
      - name: hollow-kubelet
        image: staging-k8s.gcr.io/kubemark:v1.27.6
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 4194
        - containerPort: 10250
        - containerPort: 10255
        env:
        - name: CONTENT_TYPE
          valueFrom:
            configMapKeyRef:
              name: node-configmap
              key: content.type
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        command:
        - /bin/sh
        - -c
        - /kubemark --morph=kubelet --name=$(NODE_NAME) --kubeconfig=/kubeconfig/kubelet.kubeconfig --v=2
        volumeMounts:
        - name: kubeconfig-volume
          mountPath: /kubeconfig
          readOnly: true
        - name: logs-volume
          mountPath: /var/log
        - name: containerd
          mountPath: /run/containerd
        securityContext:
          privileged: true
      - name: hollow-proxy
        image: staging-k8s.gcr.io/kubemark:v1.27.6
        imagePullPolicy: IfNotPresent
        env:
        - name: CONTENT_TYPE
          valueFrom:
            configMapKeyRef:
              name: node-configmap
              key: content.type
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        command:
        - /bin/sh
        - -c
        - /kubemark --morph=proxy --name=$(NODE_NAME) --use-real-proxier=false --kubeconfig=/kubeconfig/kubeproxy.kubeconfig --v=2
        volumeMounts:
        - name: kubeconfig-volume
          mountPath: /kubeconfig
          readOnly: true
        - name: logs-volume
          mountPath: /var/log
        - name: containerd
          mountPath: /run/containerd
      tolerations:
      - key: key
        value: value
        effect: NoSchedule
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: name
                operator: In
                values:
                - hollow-node
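The two manifests differ only in the image tag, replica count, log flags and host volumes, so one option is to generate them from a single template. The Python sketch below rebuilds the structure of both YAML files as a dict and prints it as JSON, which kubectl also accepts.

A few parts are assumptions. The function name `hollow_node_deployment` is hypothetical. The switch to the newer layout at 1.27 is assumed, since only 1.19.0 and 1.27.6 were tested. The nodeAffinity block is omitted because it repeats the nodeSelector.

```python
import json

LABELS = {"name": "hollow-node"}


def _env() -> list:
    # CONTENT_TYPE comes from node-configmap, NODE_NAME from the pod's own name.
    return [
        {"name": "CONTENT_TYPE",
         "valueFrom": {"configMapKeyRef": {"name": "node-configmap", "key": "content.type"}}},
        {"name": "NODE_NAME",
         "valueFrom": {"fieldRef": {"fieldPath": "metadata.name"}}},
    ]


def hollow_node_deployment(version: str, replicas: int) -> dict:
    """Build the hollow-node Deployment for an image tag such as 'v1.19.0' or 'v1.27.6'."""
    major, minor = (int(x) for x in version.lstrip("v").split(".")[:2])
    newer = (major, minor) >= (1, 27)  # assumed cut-over; only 1.19.0 and 1.27.6 were tested
    image = f"staging-k8s.gcr.io/kubemark:{version}"
    log_flags = "--v=2" if newer else "--alsologtostderr --v=2"

    volumes = [{"name": "kubeconfig-volume", "secret": {"secretName": "kubeconfig"}}]
    mounts = [{"name": "kubeconfig-volume", "mountPath": "/kubeconfig", "readOnly": True}]
    if newer:
        # The 1.27.6 variant also mounts /run/containerd and /var/log from the host.
        volumes += [{"name": "containerd", "hostPath": {"path": "/run/containerd"}},
                    {"name": "logs-volume", "hostPath": {"path": "/var/log"}}]
        mounts += [{"name": "logs-volume", "mountPath": "/var/log"},
                   {"name": "containerd", "mountPath": "/run/containerd"}]

    def container(name: str, cmd: str, **extra) -> dict:
        c = {"name": name, "image": image, "imagePullPolicy": "IfNotPresent",
             "env": _env(), "command": ["/bin/sh", "-c", cmd],
             "volumeMounts": [dict(m) for m in mounts]}
        c.update(extra)  # container-specific fields such as ports/securityContext
        return c

    kubelet = container(
        "hollow-kubelet",
        "/kubemark --morph=kubelet --name=$(NODE_NAME) "
        f"--kubeconfig=/kubeconfig/kubelet.kubeconfig {log_flags}",
        ports=[{"containerPort": p} for p in (4194, 10250, 10255)],
        securityContext={"privileged": True},
    )
    proxy = container(
        "hollow-proxy",
        "/kubemark --morph=proxy --name=$(NODE_NAME) --use-real-proxier=false "
        f"--kubeconfig=/kubeconfig/kubeproxy.kubeconfig {log_flags}",
    )
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "hollow-node", "namespace": "kubemark", "labels": dict(LABELS)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(LABELS)},
            "template": {
                "metadata": {"labels": dict(LABELS)},
                "spec": {
                    "nodeSelector": dict(LABELS),
                    "initContainers": [{
                        "name": "init-inotify-limit",
                        "image": "busybox",
                        "imagePullPolicy": "IfNotPresent",
                        "command": ["sysctl", "-w", "fs.inotify.max_user_instances=524288"],
                        "securityContext": {"privileged": True},
                    }],
                    "volumes": volumes,
                    "containers": [kubelet, proxy],
                    "tolerations": [{"key": "key", "value": "value", "effect": "NoSchedule"}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Save the output as hollow-node.json and create it with kubectl create -f.
    print(json.dumps(hollow_node_deployment("v1.27.6", 3), indent=2))
```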

Create the hollow pods and nodes:

# Label the nodes; the label must match the name=hollow-node selector in the configuration file above
[root@k8s-master1 kubemark]# kubectl label node k8s-master1 name=hollow-node
node/k8s-master1 labeled
[root@k8s-master1 kubemark]# kubectl label node k8s-node1 name=hollow-node
node/k8s-node1 labeled
[root@k8s-master1 kubemark]# kubectl label node k8s-node2 name=hollow-node
node/k8s-node2 labeled
# Create the hollow-node pods in the external cluster
[root@k8s-master ~]# kubectl create -f hollow-node.yaml

# Check the hollow-node pods in the external cluster
[root@k8s-master ~]# kubectl get pod -n kubemark
NAME                           READY   STATUS    RESTARTS   AGE
hollow-node-7b9b96674c-4kp7r   2/2     Running   0          23m
hollow-node-7b9b96674c-wzzf2   2/2     Running   0          23m

# Check the hollow nodes in the kubemark cluster
[root@k8s-master ~]# kubectl get node
NAME                           STATUS   ROLES    AGE     VERSION
hollow-node-7b9b96674c-4kp7r   Ready    <none>   22m     v1.19.0-dirty
hollow-node-7b9b96674c-wzzf2   Ready    <none>   23m     v1.19.0-dirty
k8s-master                     Ready    master   7d22h   v1.19.0
k8s-node1                      Ready    <none>   7d22h   v1.19.0
k8s-node2                      Ready    <none>   7d22h   v1.19.0
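When scaling to many replicas, counting Ready hollow nodes by eye stops working. The Python sketch below reads the JSON form of the node list, saved with `kubectl get node -o json > nodes.json`, instead of the column output, whose layout can shift. It returns the hollow nodes whose Ready condition is True. The function name and the `hollow-node-` prefix default are assumptions based on the Deployment name used here.

```python
import json


def ready_hollow_nodes(nodes_json: str, prefix: str = "hollow-node-") -> list:
    """Return names of nodes starting with `prefix` whose Ready condition
    is "True", parsed from `kubectl get node -o json` output."""
    ready = []
    for node in json.loads(nodes_json).get("items", []):
        name = node["metadata"]["name"]
        conditions = node.get("status", {}).get("conditions", [])
        is_ready = any(c.get("type") == "Ready" and c.get("status") == "True"
                       for c in conditions)
        if name.startswith(prefix) and is_ready:
            ready.append(name)
    return ready
```

Comparing `len(ready_hollow_nodes(...))` with the Deployment's replica count gives a quick check that every hollow node registered.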

# Take a closer look at the pod that simulates the hollow node and at the pods running on that hollow node (the flannel pod is not running, probably because kubemark does not support this CNI plugin)
[root@k8s-master ~]# kubectl get pod -A -o wide | grep hollow-node-7b9b96674c-4kp7r
kube-flannel   kube-flannel-ds-bvh8b          0/1   Init:0/2   0   34m   192.168.192.168   hollow-node-7b9b96674c-4kp7r   <none>   <none>
kube-system    kube-proxy-c92nr               1/1   Running    0   34m   192.168.192.168   hollow-node-7b9b96674c-4kp7r   <none>   <none>
kubemark       hollow-node-7b9b96674c-4kp7r   2/2   Running    0   34m   10.244.2.105      k8s-node2                      <none>   <none>
monitoring     node-exporter-cdck9            2/2   Running    0   34m   192.168.192.168   hollow-node-7b9b96674c-4kp7r   <none>   <none>

Note: for the pods that simulate hollow nodes, the official recommendation is 0.1 CPU core and 220MB of memory per pod.
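These per-pod figures make it easy to size the external cluster before choosing a replica count. The Python sketch below multiplies them out and estimates how many hollow-node pods fit on one external node, limited by whichever resource runs out first. It uses only the recommendation quoted above. It ignores system daemons, kubelet reservations and other workloads, so treat the results as upper bounds. The function names are hypothetical.

```python
# Per-hollow-node recommendation quoted above: 0.1 CPU core, 220 MB memory.
CPU_MILLICORES_PER_HOLLOW_NODE = 100
MEMORY_MB_PER_HOLLOW_NODE = 220


def required_capacity(hollow_nodes: int) -> tuple:
    """Total (CPU millicores, memory MB) needed in the external cluster."""
    return (hollow_nodes * CPU_MILLICORES_PER_HOLLOW_NODE,
            hollow_nodes * MEMORY_MB_PER_HOLLOW_NODE)


def hollow_nodes_per_host(allocatable_millicores: int, allocatable_mb: int) -> int:
    """Upper bound on hollow-node pods per external node: the scarcer
    of CPU and memory decides."""
    return min(allocatable_millicores // CPU_MILLICORES_PER_HOLLOW_NODE,
               allocatable_mb // MEMORY_MB_PER_HOLLOW_NODE)


if __name__ == "__main__":
    print(required_capacity(1000))          # (100000, 220000): 100 cores, 220000 MB
    print(hollow_nodes_per_host(4000, 8192))  # 37: memory-bound on a 4-core/8192 MB node
```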

Test by starting pods on the hollow nodes:

# Add a label to the hollow nodes:
[root@k8s-master ~]# kubectl label node hollow-node-7b9b96674c-4kp7r app=nginx
[root@k8s-master ~]# kubectl label node hollow-node-7b9b96674c-wzzf2 app=nginx
# Apply the YAML to start pods on the hollow nodes:
kubectl apply -f deploy-pod.yaml
# Contents of deploy-pod.yaml:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      nodeSelector:
        app: nginx
      containers:
      - name: nginx-deploy
        image: nginx:latest
        imagePullPolicy: IfNotPresent

# Check the deployed pods
[root@k8s-master ~]# kubectl get pod -A -o wide | grep nginx-deploy
default   nginx-deploy-9654cffc5-l8c2w   1/1   Running   0   12m   192.168.192.168   hollow-node-7b9b96674c-wzzf2   <none>   <none>
default   nginx-deploy-9654cffc5-smqpj   1/1   Running   0   12m   192.168.192.168   hollow-node-7b9b96674c-wzzf2   <none>   <none>
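To confirm that test pods really landed on hollow nodes without grepping column output, the Python sketch below reads `kubectl get pod -A -o json` output. It maps each matching pod to its `spec.nodeName`, then checks that every match is on a hollow node. The function names and the `hollow-node-` prefix are assumptions tied to this article's naming.

```python
import json


def pods_by_node(pods_json: str, name_prefix: str) -> dict:
    """Map pod name -> node name for pods whose name starts with
    `name_prefix`, parsed from `kubectl get pod -A -o json` output."""
    placement = {}
    for pod in json.loads(pods_json).get("items", []):
        name = pod["metadata"]["name"]
        if name.startswith(name_prefix):
            # spec.nodeName stays empty until the scheduler binds the pod.
            placement[name] = pod.get("spec", {}).get("nodeName", "")
    return placement


def all_on_hollow_nodes(placement: dict, hollow_prefix: str = "hollow-node-") -> bool:
    """True if at least one pod matched and every match sits on a hollow node."""
    return bool(placement) and all(node.startswith(hollow_prefix)
                                   for node in placement.values())
```

A pending pod has an empty node name, so it makes the check fail rather than pass silently.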

References

Kubernetes performance testing tool kubemark (CSDN blog)

Kubernetes cluster performance testing, 乐金明's blog | Robin Blog (supereagle.github.io)

Kubernetes Community
