1、安装Ranger

参照上一个文章

2、修改配置

把各种plugin转到统一目录(源码编译的target目录下拷贝过来),比如 tar zxvf ranger-2.4.0-hdfs-plugin.tar.gz

tar zxvf ranger-2.4.0-hdfs-plugin.tar.gz

vim install.properties

POLICY_MGR_URL==http://localhost:6080

REPOSITORY_NAME=hdfs_repo

COMPONENT_INSTALL_DIR_NAME=/BigData/run/hadoop

CUSTOM_USER=hadoop

CUSTOM_GROUP=hadoop3、生效配置

./enable-hdfs-plugin.sh

出现下面关键字代表安装plugin成功,需要重启所有namenode服务

Ranger Plugin for hadoop has been enabled. Please restart hadoop to ensure that changes are effective.4、 相关报错

4.1 hadoop common冲突

[root@tv3-hadoop-01 ranger-2.4.0-hdfs-plugin]# ./enable-hdfs-plugin.sh

Custom user and group is available, using custom user and group.

+ Sun Jun 30 16:22:24 CST 2024 : hadoop: lib folder=/BigData/run/hadoop/share/hadoop/hdfs/lib conf folder=/BigData/run/hadoop/etc/hadoop

+ Sun Jun 30 16:22:24 CST 2024 : Saving current config file: /BigData/run/hadoop/etc/hadoop/hdfs-site.xml to /BigData/run/hadoop/etc/hadoop/.hdfs-site.xml.20240630-162224 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving current config file: /BigData/run/hadoop/etc/hadoop/ranger-hdfs-audit.xml to /BigData/run/hadoop/etc/hadoop/.ranger-hdfs-audit.xml.20240630-162224 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving current config file: /BigData/run/hadoop/etc/hadoop/ranger-hdfs-security.xml to /BigData/run/hadoop/etc/hadoop/.ranger-hdfs-security.xml.20240630-162224 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving current config file: /BigData/run/hadoop/etc/hadoop/ranger-policymgr-ssl.xml to /BigData/run/hadoop/etc/hadoop/.ranger-policymgr-ssl.xml.20240630-162224 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving lib file: /BigData/run/hadoop/share/hadoop/hdfs/lib/ranger-hdfs-plugin-impl to /BigData/run/hadoop/share/hadoop/hdfs/lib/.ranger-hdfs-plugin-impl.20240630162225 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving lib file: /BigData/run/hadoop/share/hadoop/hdfs/lib/ranger-hdfs-plugin-shim-2.4.0.jar to /BigData/run/hadoop/share/hadoop/hdfs/lib/.ranger-hdfs-plugin-shim-2.4.0.jar.20240630162225 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving lib file: /BigData/run/hadoop/share/hadoop/hdfs/lib/ranger-plugin-classloader-2.4.0.jar to /BigData/run/hadoop/share/hadoop/hdfs/lib/.ranger-plugin-classloader-2.4.0.jar.20240630162225 ...

+ Sun Jun 30 16:22:25 CST 2024 : Saving current JCE file: /etc/ranger/hdfs_repo/cred.jceks to /etc/ranger/hdfs_repo/.cred.jceks.20240630162225 ...

Unable to store password in non-plain text format. Error: [SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/thirdparty/com/google/common/collect/Interners

at org.apache.hadoop.util.StringInterner.<clinit>(StringInterner.java:40)

at org.apache.hadoop.conf.Configuration$Parser.handleEndElement(Configuration.java:3335)

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3417)

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3191)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3084)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:3045)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2923)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2905)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1413)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1385)

at org.apache.ranger.credentialapi.CredentialReader.getDecryptedString(CredentialReader.java:55)

at org.apache.ranger.credentialapi.buildks.createCredential(buildks.java:87)

at org.apache.ranger.credentialapi.buildks.main(buildks.java:41)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.thirdparty.com.google.common.collect.Interners

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 13 more]

Exiting plugin installation解决方案:

确保打包的pom.xml文件中的hadoop.version和ranger部署的hadoop环境版本保持一致(如果冲突,建议保留源码版本)

4.2 其他报错

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/thirdparty/com/google/common/base/Preconditions

at org.apache.hadoop.conf.Configuration$DeprecationDelta.<init>(Configuration.java:430)

at org.apache.hadoop.conf.Configuration$DeprecationDelta.<init>(Configuration.java:443)

at org.apache.hadoop.conf.Configuration.<clinit>(Configuration.java:525)

at org.apache.ranger.credentialapi.CredentialReader.getDecryptedString(CredentialReader.java:39)

at org.apache.ranger.credentialapi.buildks.createCredential(buildks.java:87)

at org.apache.ranger.credentialapi.buildks.main(buildks.java:41)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.thirdparty.com.google.common.base.Preconditions

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 6 more]解决方案:

确保打包的pom.xml文件中的hadoop.version和ranger部署的hadoop环境版本保持一致(如果冲突,建议保留源码版本)

4.3 ranger 集成kerbos hadoop报错

2024-06-30 12:20:53,246 [timed-executor-pool-0] INFO [BaseClient.java:122] Init Login: using username/password

2024-06-30 12:20:53,282 [timed-executor-pool-0] ERROR [HdfsResourceMgr.java:49] <== HdfsResourceMgr.testConnection Error: Unable to login to Hadoop environment [hdfs_repo]

org.apache.ranger.plugin.client.HadoopException: Unable to login to Hadoop environment [hdfs_repo]

at org.apache.ranger.plugin.client.BaseClient.createException(BaseClient.java:153)

at org.apache.ranger.plugin.client.BaseClient.createException(BaseClient.java:149)

at org.apache.ranger.plugin.client.BaseClient.login(BaseClient.java:140)

at org.apache.ranger.plugin.client.BaseClient.<init>(BaseClient.java:61)

at org.apache.ranger.services.hdfs.client.HdfsClient.<init>(HdfsClient.java:50)

at org.apache.ranger.services.hdfs.client.HdfsClient.connectionTest(HdfsClient.java:217)

at org.apache.ranger.services.hdfs.client.HdfsResourceMgr.connectionTest(HdfsResourceMgr.java:47)

at org.apache.ranger.services.hdfs.RangerServiceHdfs.validateConfig(RangerServiceHdfs.java:74)

at org.apache.ranger.biz.ServiceMgr$ValidateCallable.actualCall(ServiceMgr.java:655)

at org.apache.ranger.biz.ServiceMgr$ValidateCallable.actualCall(ServiceMgr.java:642)

at org.apache.ranger.biz.ServiceMgr$TimedCallable.call(ServiceMgr.java:603)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.io.IOException: Login failure for hadoop using password ****

at org.apache.hadoop.security.SecureClientLogin.loginUserWithPassword(SecureClientLogin.java:82)

at org.apache.ranger.plugin.client.BaseClient.login(BaseClient.java:123)

... 12 common frames omitted

Caused by: javax.security.auth.login.LoginException: Client not found in Kerberos database (6) - CLIENT_NOT_FOUND

at com.sun.security.auth.module.Krb5LoginModule.attemptAuthentication(Krb5LoginModule.java:804)

at com.sun.security.auth.module.Krb5LoginModule.login(Krb5LoginModule.java:596)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at javax.security.auth.login.LoginContext.invoke(LoginContext.java:755)

at javax.security.auth.login.LoginContext.access$000(LoginContext.java:195)

at javax.security.auth.login.LoginContext$4.run(LoginContext.java:682)

at javax.security.auth.login.LoginContext$4.run(LoginContext.java:680)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.login.LoginContext.invokePriv(LoginContext.java:680)

at javax.security.auth.login.LoginContext.login(LoginContext.java:587)

at org.apache.hadoop.security.SecureClientLogin.loginUserWithPassword(SecureClientLogin.java:79)

... 13 common frames omitted

Caused by: sun.security.krb5.KrbException: Client not found in Kerberos database (6) - CLIENT_NOT_FOUND

at sun.security.krb5.KrbAsRep.<init>(KrbAsRep.java:82)

at sun.security.krb5.KrbAsReqBuilder.send(KrbAsReqBuilder.java:316)

at sun.security.krb5.KrbAsReqBuilder.action(KrbAsReqBuilder.java:361)

at com.sun.security.auth.module.Krb5LoginModule.attemptAuthentication(Krb5LoginModule.java:766)

... 26 common frames omitted

Caused by: sun.security.krb5.Asn1Exception: Identifier doesn't match expected value (906)

at sun.security.krb5.internal.KDCRep.init(KDCRep.java:140)

at sun.security.krb5.internal.ASRep.init(ASRep.java:64)

at sun.security.krb5.internal.ASRep.<init>(ASRep.java:59)

at sun.security.krb5.KrbAsRep.<init>(KrbAsRep.java:60)

... 29 common frames omitted如果ranger同时集成kerbos的话需要增加如下操作

增加证书

addprinc -randkey rangeradmin/tv3-hadoop-01@AB.ELONG.COM

addprinc -randkey rangerlookup/tv3-hadoop-01@AB.ELONG.COM

addprinc -randkey rangerusersync/tv3-hadoop-01@AB.ELONG.COM

addprinc -randkey HTTP/tv3-hadoop-01@AB.ELONG.COM

HTTP如果之前已经有了,可以不重新生成

导出证书

xst -k /BigData/run/hadoop/keytab/ranger.keytab rangeradmin/tv3-hadoop-01@AB.ELONG.COM rangerlookup/tv3-hadoop-01@AB.ELONG.COM rangerusersync/tv3-hadoop-01@AB.ELONG.COM HTTP/tv3-hadoop-01@AB.ELONG.COM

赋权限:

chown ranger:ranger /BigData/run/hadoop/keytab/ranger.keytab

进行证书初始化

su - ranger

kinit -kt /BigData/run/hadoop/keytab/ranger.keytab HTTP/$HOSTNAME@AB.ELONG.COM

kinit -kt /BigData/run/hadoop/keytab/ranger.keytab rangeradmin/$HOSTNAME@AB.ELONG.COM

kinit -kt /BigData/run/hadoop/keytab/ranger.keytab rangerlookup/$HOSTNAME@AB.ELONG.COM

kinit -kt /BigData/run/hadoop/keytab/ranger.keytab rangerusersync/$HOSTNAME@AB.ELONG.COM

安装前可以提前配置:

spnego_principal=HTTP/tv3-hadoop-01@AB.ELONG.COM

spnego_keytab=/BigData/run/hadoop/keytab/ranger.keytab

admin_principal=rangeradmin/tv3-hadoop-01@AB.ELONG.COM

admin_keytab=/BigData/run/hadoop/keytab/ranger.keytab

lookup_principal=rangerlookup/tv3-hadoop-01@AB.ELONG.COM

lookup_keytab=/BigData/run/hadoop/keytab/ranger.keytab

hadoop_conf=/BigData/run/hadoop/etc/hadoop/

ranger.service.host=tv3-hadoop-01

4.4 SSL 报错

2024-06-30 13:01:43,237 [timed-executor-pool-0] ERROR [HdfsResourceMgr.java:49] <== HdfsResourceMgr.testConnection Error: listFilesInternal: Unable to get listing of files for directory /null] from Hadoop environment [hdfs_repo].

org.apache.ranger.plugin.client.HadoopException: listFilesInternal: Unable to get listing of files for directory /null] from Hadoop environment [hdfs_repo].

at org.apache.ranger.services.hdfs.client.HdfsClient.listFilesInternal(HdfsClient.java:138)

at org.apache.ranger.services.hdfs.client.HdfsClient.access$000(HdfsClient.java:44)

at org.apache.ranger.services.hdfs.client.HdfsClient$1.run(HdfsClient.java:167)

at org.apache.ranger.services.hdfs.client.HdfsClient$1.run(HdfsClient.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.ranger.services.hdfs.client.HdfsClient.listFiles(HdfsClient.java:170)

at org.apache.ranger.services.hdfs.client.HdfsClient.connectionTest(HdfsClient.java:221)

at org.apache.ranger.services.hdfs.client.HdfsResourceMgr.connectionTest(HdfsResourceMgr.java:47)

at org.apache.ranger.services.hdfs.RangerServiceHdfs.validateConfig(RangerServiceHdfs.java:74)

at org.apache.ranger.biz.ServiceMgr$ValidateCallable.actualCall(ServiceMgr.java:655)

at org.apache.ranger.biz.ServiceMgr$ValidateCallable.actualCall(ServiceMgr.java:642)

at org.apache.ranger.biz.ServiceMgr$TimedCallable.call(ServiceMgr.java:603)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.io.IOException: DestHost:destPort tv3-hadoop-06:15820 , LocalHost:localPort tv3-hadoop-01/10.177.42.98:0. Failed on local exception: java.io.IOException: javax.security.sasl.SaslException: No common protection layer between client and server

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:837)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:812)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1566)

at org.apache.hadoop.ipc.Client.call(Client.java:1508)

at org.apache.hadoop.ipc.Client.call(Client.java:1405)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:234)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:119)

at com.sun.proxy.$Proxy107.getListing(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getListing(ClientNamenodeProtocolTranslatorPB.java:687)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy108.getListing(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.listPaths(DFSClient.java:1694)

at org.apache.hadoop.hdfs.DFSClient.listPaths(DFSClient.java:1678)

at org.apache.hadoop.hdfs.DistributedFileSystem.listStatusInternal(DistributedFileSystem.java:1093)

at org.apache.hadoop.hdfs.DistributedFileSystem.access$600(DistributedFileSystem.java:144)

at org.apache.hadoop.hdfs.DistributedFileSystem$24.doCall(DistributedFileSystem.java:1157)

at org.apache.hadoop.hdfs.DistributedFileSystem$24.doCall(DistributedFileSystem.java:1154)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.listStatus(DistributedFileSystem.java:1164)

at org.apache.ranger.services.hdfs.client.HdfsClient.listFilesInternal(HdfsClient.java:83)

... 16 common frames omitted

Caused by: java.io.IOException: javax.security.sasl.SaslException: No common protection layer between client and server

at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:778)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1845)

at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:732)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:835)

at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:413)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1636)

at org.apache.hadoop.ipc.Client.call(Client.java:1452)

... 40 common frames omitted

Caused by: javax.security.sasl.SaslException: No common protection layer between client and server

at com.sun.security.sasl.gsskerb.GssKrb5Client.doFinalHandshake(GssKrb5Client.java:251)

at com.sun.security.sasl.gsskerb.GssKrb5Client.evaluateChallenge(GssKrb5Client.java:186)

at org.apache.hadoop.security.SaslRpcClient.saslEvaluateToken(SaslRpcClient.java:481)

at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:423)

at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:622)

at org.apache.hadoop.ipc.Client$Connection.access$2300(Client.java:413)

at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:822)

at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1845)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:818)

... 43 common frames omitted解决方案:

hadoop.rpc.protection需要设置和集群保持一致,比如当前设置为

<property>

<name>hadoop.rpc.protection</name>

<value>authentication</value>

<description>authentication : authentication only (default); integrity : integrity check in addition to authentication; privacy : data encryption in addition to integrity</description>

</property>

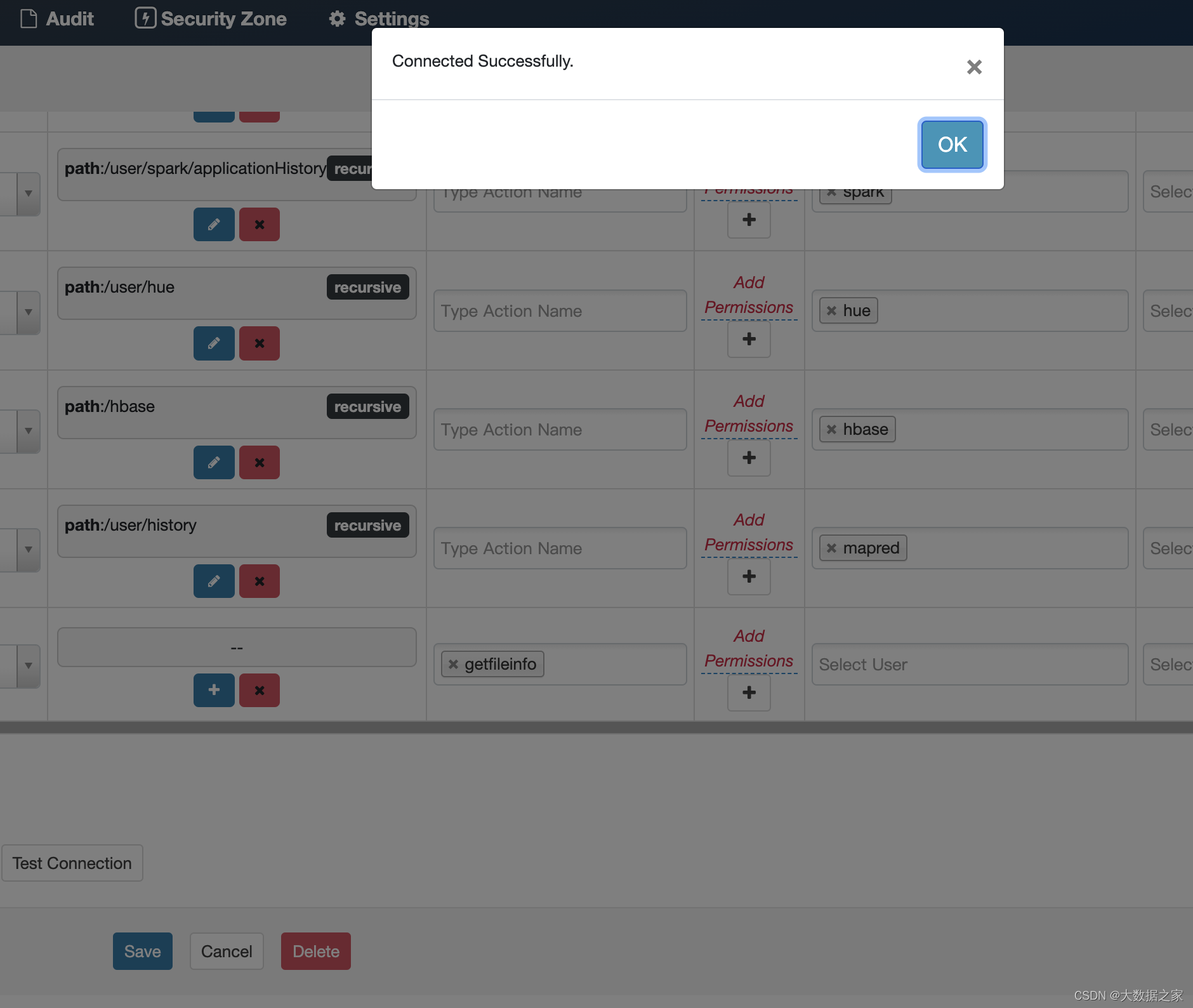

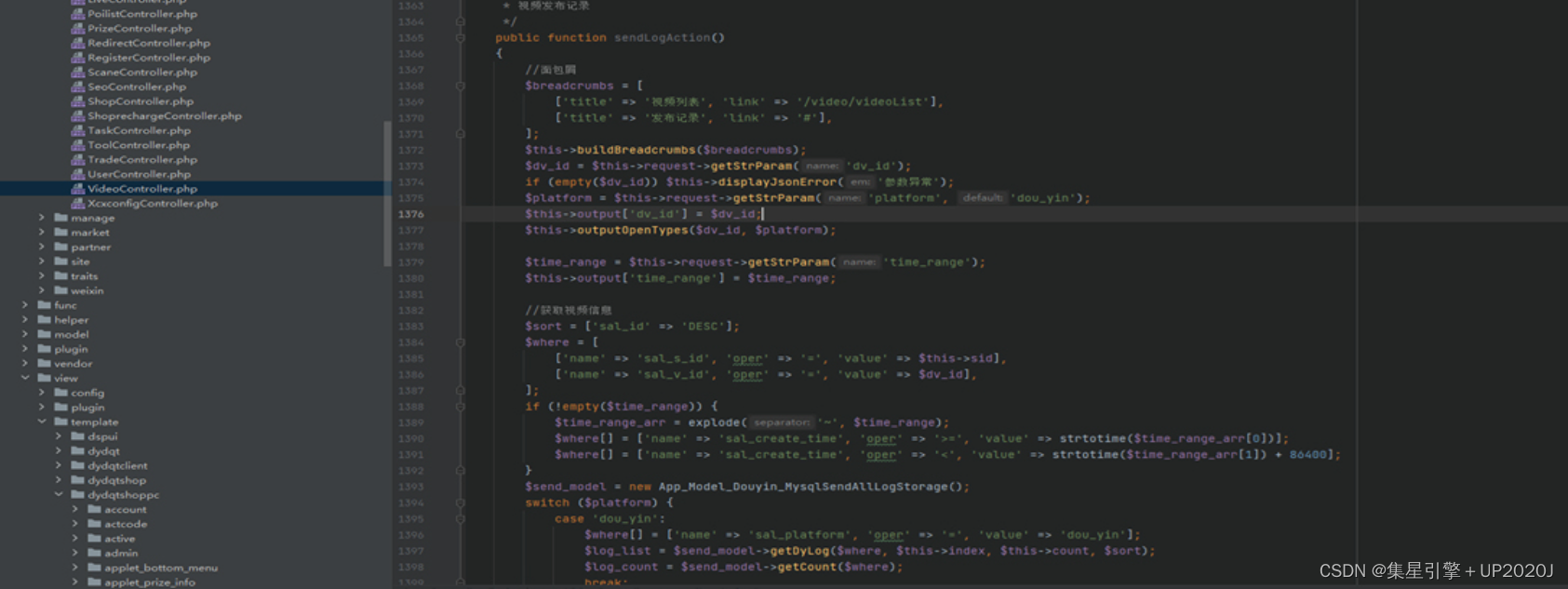

5、验证连接是否成功

Service Name hdfs_repo

Display Name hdfs_repo

Description --

Active Status Enabled

Tag Service --

Username hadoop

Password *****

Namenode URL hdfs://localhost:15820

Authorization Enabled true

Authentication Type kerberos

hadoop.security.auth_to_local RULE:[2:$1@$0](hadoop@.*EXAMPLE.COM)s/.*/hadoop/ RULE:[2:$1@$0](HTTP@.*EXAMPLE.COM)s/.*/hadoop/ DEFAULT

dfs.datanode.kerberos.principal hadoop/_HOST@CC.LOCAL

dfs.namenode.kerberos.principal hadoop/_HOST@CC.LOCAL

dfs.secondary.namenode.kerberos.principal --

RPC Protection Type authentication

Common Name for Certificate --

policy.download.auth.users hadoop

tag.download.auth.users hadoop

dfs.journalnode.kerberos.principal hadoop/_HOST@CC.LOCAL