(My knowledge is limited; if you find any errors in this article, corrections and discussion are welcome.)

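1 Introduction

When network data is received or sent, it passes through traffic control on its way between the network device (NIC) and L3 (the network layer).

Source: 《BPF之巅.洞悉Linux系统和应用性能》 (BPF Performance Tools), p. 413

The optional qdisc layer can be used to manage traffic classification (tc), scheduling, manipulation, filtering, and shaping of network packets.

Source: 《BPF之巅.洞悉Linux系统和应用性能》 (BPF Performance Tools), p. 417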

Traffic control is the name given to the sets of queuing systems and mechanisms by which packets are received and transmitted on a router. This includes deciding (if and) which packets to accept at what rate on the input of an interface and determining which packets to transmit in what order at what rate on the output of an interface.

Source: Traffic Control HOWTO, section 2, "Overview of Concepts"

When the kernel has several packets to send out over a network device, it has to decide which ones to send first, which ones to delay, and which ones to drop. This is the job of the queueing disciplines, several different algorithms for how to do this "fairly" have been proposed.

This is useful for example if some of your network devices are real time devices that need a certain minimum data flow rate, or if you need to limit the maximum data flow rate for traffic which matches specified criteria. This code is considered to be experimental.

Source: <kernel_src>/net/sched/Kconfig

2 Functional components

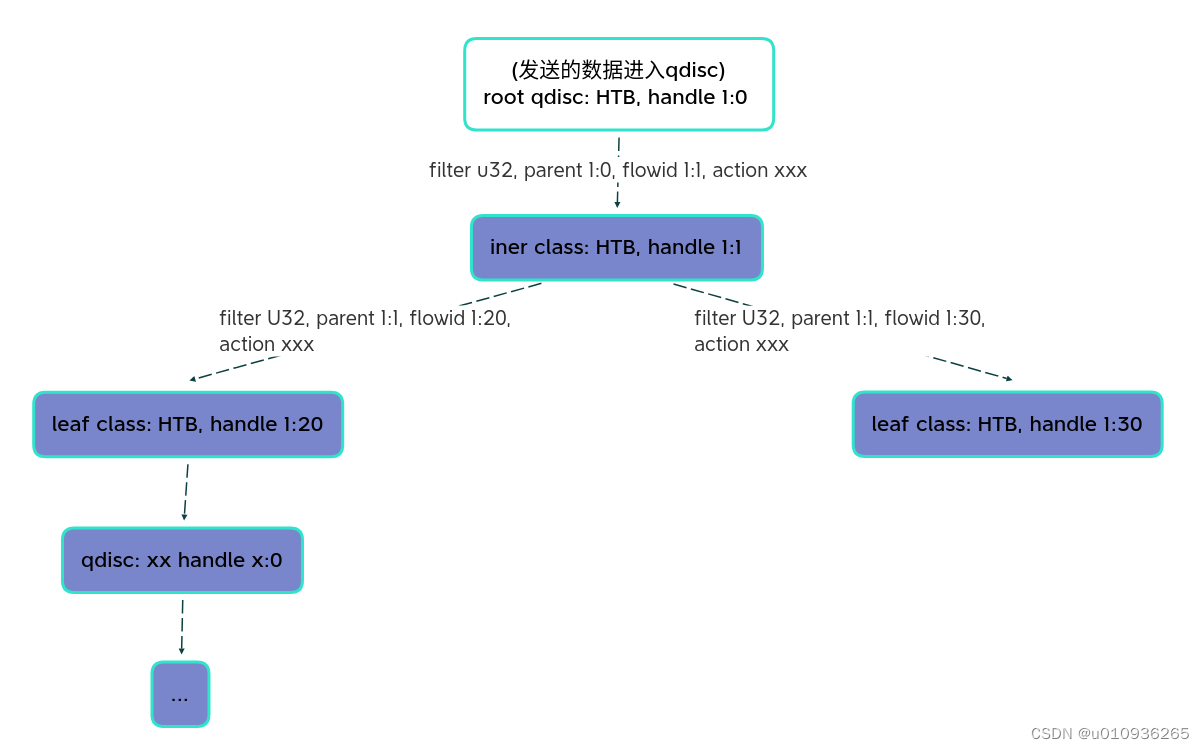

2.1 Tree diagram of traffic control

The diagram uses HTB (qdisc) and U32 (filter) as an example.

2.2 qdisc (queueing discipline)

2.2.1 Introduction

A qdisc is a scheduler. Every output interface needs a scheduler of some kind, and the default scheduler is a FIFO. Other qdiscs available under Linux will rearrange the packets entering the scheduler's queue in accordance with that scheduler's rules.

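Source: Traffic Control HOWTO, section 4, "Components of Linux Traffic Control"

Almost all devices use queues to schedule egress traffic, and the kernel can use algorithms known as queuing disciplines to arrange frames so that they are transmitted in the most efficient order.

Source: 《深入理解LINUX网络技术内幕》 (Understanding Linux Network Internals), pp. 251-252

The kernel source files that implement the qdiscs are:

# ls net/sched/sch_*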

sch_api.c sch_cbs.c sch_etf.c sch_gred.c sch_mq.c sch_plug.c sch_sfq.c

sch_atm.c sch_choke.c sch_fifo.c sch_hfsc.c sch_mqprio.c sch_prio.c sch_skbprio.c

sch_blackhole.c sch_codel.c sch_fq.c sch_hhf.c sch_multiq.c sch_qfq.c sch_taprio.c

sch_cake.c sch_drr.c sch_fq_codel.c sch_htb.c sch_netem.c sch_red.c sch_tbf.c

sch_cbq.c sch_dsmark.c sch_generic.c sch_ingress.c sch_pie.c sch_sfb.c sch_teql.c

2.2.2 Classful qdisc

2.2.2.1 Introduction

The flexibility and control of Linux traffic control can be unleashed through the agency of the classful qdiscs. Remember that the classful queuing disciplines can have filters attached to them, allowing packets to be directed to particular classes and subqueues.

Source: Traffic Control HOWTO, section 7, "Classful Queuing Disciplines (qdiscs)"

2.2.2.2 Classful qdiscs

CBQ, HTB, HFSC, PRIO, WRR, ATM, DRR, DSMARK, QFQ, and others.

Related material:

https://linux-tc-notes.sourceforge.net/tc/doc/sch_cbq.txt

https://linux-tc-notes.sourceforge.net/tc/doc/sch_hfsc.txt

https://linux-tc-notes.sourceforge.net/tc/doc/sch_prio.txt

Source: Traffic Control HOWTO, section 7, "Classful Queuing Disciplines (qdiscs)"

2.2.3 Classless qdisc

2.2.3.1 Introduction

The classless qdiscs can contain no classes, nor is it possible to attach filter to a classless qdisc. Because a classless qdisc contains no children of any kind, there is no utility to classifying. This means that no filter can be attached to a classless qdisc.

Source: Traffic Control HOWTO, section 4, "Components of Linux Traffic Control"

Each of these queuing disciplines can be used as the primary qdisc on an interface, or can be used inside a leaf class of a classful qdiscs. These are the fundamental schedulers used under Linux. Note that the default scheduler is the pfifo_fast.

Source: Traffic Control HOWTO, section 6, "Classless Queuing Disciplines (qdiscs)"

2.2.3.2 Classless qdiscs

SFQ, ESFQ, RED, GRED, TBF, and others.

Related material:

https://linux-tc-notes.sourceforge.net/tc/doc/sch_tbf.txt

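Source: Traffic Control HOWTO, section 6, "Classless Queuing Disciplines (qdiscs)"

2.2.4 The qdisc that controls received traffic: ingress

The qdiscs described in the previous sections control traffic for outgoing data. The qdisc that controls incoming (received) traffic is ingress.

Code: <kernel_src>/net/sched/sch_ingress.c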

2.3 class

Classes only exist inside a classful qdisc (e.g., HTB and CBQ). Classes are immensely flexible and can always contain either multiple children classes or a single child qdisc. There is no prohibition against a class containing a classful qdisc itself, which facilitates tremendously complex traffic control scenarios.

Any class can also have an arbitrary number of filters attached to it, which allows the selection of a child class or the use of a filter to reclassify or drop traffic entering a particular class.

A leaf class is a terminal class in a qdisc. It contains a qdisc (default FIFO) and will never contain a child class. Any class which contains a child class is an inner class (or root class) and not a leaf class.

Source: Traffic Control HOWTO, section 4, "Components of Linux Traffic Control"

2.4 Handles of qdiscs and classes

Every class and classful qdisc requires a unique identifier within the traffic control structure. This unique identifier is known as a handle and has two constituent members, a major number and a minor number.

The numbering of handles for classes and qdiscs

major

This parameter is completely free of meaning to the kernel. The user may use an arbitrary numbering scheme, however all objects in the traffic control structure with the same parent must share a major handle number. Conventional numbering schemes start at 1 for objects attached directly to the root qdisc.

minor

This parameter unambiguously identifies the object as a qdisc if minor is 0. Any other value identifies the object as a class. All classes sharing a parent must have unique minor numbers.

The special handle ffff:0 is reserved for the ingress qdisc.

Source: Traffic Control HOWTO, section 4, "Components of Linux Traffic Control"
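As a rough illustration of the major:minor scheme quoted above, the following Python sketch parses a handle written the way tc prints it (both parts hexadecimal, an omitted minor meaning 0) and packs it into a 32-bit value with the major number in the upper 16 bits. The function names are hypothetical helpers invented for this example; they are not part of tc or the kernel.

```python
def parse_handle(text):
    """Split a tc-style handle such as '1:20' or 'ffff:' into (major, minor)."""
    major_text, sep, minor_text = text.partition(":")
    if not sep:
        raise ValueError(f"handle {text!r} has no ':' separator")
    # tc reads both halves as hexadecimal; an empty half counts as 0.
    major = int(major_text, 16) if major_text else 0
    minor = int(minor_text, 16) if minor_text else 0
    if not (0 <= major <= 0xFFFF and 0 <= minor <= 0xFFFF):
        raise ValueError(f"handle {text!r} does not fit in 16 bits per part")
    return major, minor


def pack_handle(major, minor):
    """Combine major and minor into one 32-bit value (major in the high half)."""
    return (major << 16) | minor


def kind(text):
    """A minor number of 0 identifies a qdisc; any other value a class."""
    _, minor = parse_handle(text)
    return "qdisc" if minor == 0 else "class"


for handle in ("1:", "1:1", "1:20", "ffff:0"):
    major, minor = parse_handle(handle)
    print(f"{handle:>7} -> {kind(handle):5} 0x{pack_handle(major, minor):08x}")
```

Note that because the parts are hexadecimal, the class written as 1:20 has minor number 0x20, not decimal 20.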

2.5 filter

2.5.1 Introduction

The filter is the most complex component in the Linux traffic control system. The filter provides a convenient mechanism for gluing together several of the key elements of traffic control. The simplest and most obvious role of the filter is to classify (see Section 3.3, “Classifying”) packets. Linux filters allow the user to classify packets into an output queue with either several different filters or a single filter.

- A filter must contain a classifier phrase.

- A filter may contain a policer phrase.

Filters can be attached either to classful qdiscs or to classes, however the enqueued packet always enters the root qdisc first. After the filter attached to the root qdisc has been traversed, the packet may be directed to any subclasses (which can have their own filters) where the packet may undergo further classification.

Source: Traffic Control HOWTO, section 4, "Components of Linux Traffic Control"

2.5.2 classifier

2.5.2.1 Introduction

Filter objects

The classifiers are tools which can be used as part of a filter to identify characteristics of a packet or a packet's metadata. The Linux classifier object is a direct analogue to the basic operation and elemental mechanism of traffic control classifying.

Source: Traffic Control HOWTO, section 4, "Components of Linux Traffic Control"

2.5.2.2 Classifiers in the kernel

# ls net/sched/cls_*

cls_api.c cls_bpf.c cls_flow.c cls_fw.c cls_route.c cls_rsvp.c cls_tcindex.c

cls_basic.c cls_cgroup.c cls_flower.c cls_matchall.c cls_rsvp6.c cls_rsvp.h cls_u32.c

2.6 action

2.6.1 Introduction

Actions get attached to classifiers and are invoked after a successful classification. They are used to overwrite the classification result, instantly drop or redirect packets, etc.

Source: <kernel_src>/net/sched/Kconfig

2.6.2 Actions that modify network data: skbedit, pedit, and skbmod

2.6.3 The action that mirrors (copies) received data: mirred

The mirred action allows packet mirroring (copying) or redirecting (stealing) the packet it receives. Mirroring is what is sometimes referred to as Switch Port Analyzer (SPAN) and is commonly used to analyze and/or debug flows.

Source: man tc-mirred

2.6.4 The action that rate-limits received data: police

The police action allows to limit bandwidth of traffic matched by the filter it is attached to.

A typical application of the police action is to enforce ingress traffic rate by dropping exceeding packets.

Source: man tc-police
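To make the idea of dropping traffic that exceeds a configured rate more concrete, here is a minimal token-bucket sketch in Python. It is a simplified single-bucket model under stated assumptions, not the kernel's police implementation: the class name `TokenBucketPolicer` is hypothetical, the bucket starts full, and `burst 100k` is taken to mean 100 * 1024 bytes.

```python
class TokenBucketPolicer:
    """Simplified single token bucket: tokens are bytes, refilled at a fixed rate."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s
        self.burst = burst_bytes
        self.tokens = burst_bytes  # assumption: the bucket starts full
        self.last = 0.0            # time of the previous packet, in seconds

    def allow(self, now, packet_len):
        """Return True if the packet conforms, False if it exceeds the rate."""
        elapsed = now - self.last
        self.last = now
        # Refill for the elapsed time, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if packet_len <= self.tokens:
            self.tokens -= packet_len
            return True
        return False  # a policer drops here instead of queueing


# Roughly "police rate 1mbit burst 100k": 1 Mbit/s is 125000 bytes/s.
policer = TokenBucketPolicer(rate_bytes_per_s=125_000, burst_bytes=100 * 1024)

# Offer 1500-byte packets every 1.2 ms (about 10 Mbit/s) for about one second.
passed = sum(policer.allow(i * 0.0012, 1500) for i in range(833))
print(f"passed {passed} of 833 packets ({passed * 1500} bytes)")
```

The initial burst lets a short spike through untouched; after that, only about one packet per refill interval conforms and the rest are dropped.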

2.6.5 Actions in the kernel

# ls net/sched/act_*

act_api.c act_ct.c act_ipt.c act_mirred.c act_police.c act_skbmod.c

act_bpf.c act_ctinfo.c act_meta_mark.c act_mpls.c act_sample.c act_tunnel_key.c

act_connmark.c act_gact.c act_meta_skbprio.c act_nat.c act_simple.c act_vlan.c

act_csum.c act_ife.c act_meta_skbtcindex.c act_pedit.c act_skbedit.c

3 Ways to configure traffic control on Linux

3.1 Using the tc command

Section 4 gives examples.

3.2 Using scripts (tcng)

Reference: Traffic Control using tcng and HTB HOWTO

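4 Configuring traffic control for outgoing data with tc

4.1 Introduction

The dev_queue_xmit function is the interface between the send path of the network layer in the TCP/IP stack and the device driver.

Source: 《嵌入式Linux网络体系结构设计与TCP/IP协议栈》, section 6.5.4, "The dev_queue_xmit function"

Traffic control is invoked from inside dev_queue_xmit.

Source: 《深入理解LINUX网络技术内幕》 (Understanding Linux Network Internals), p. 260

4.2 tc usage demonstration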

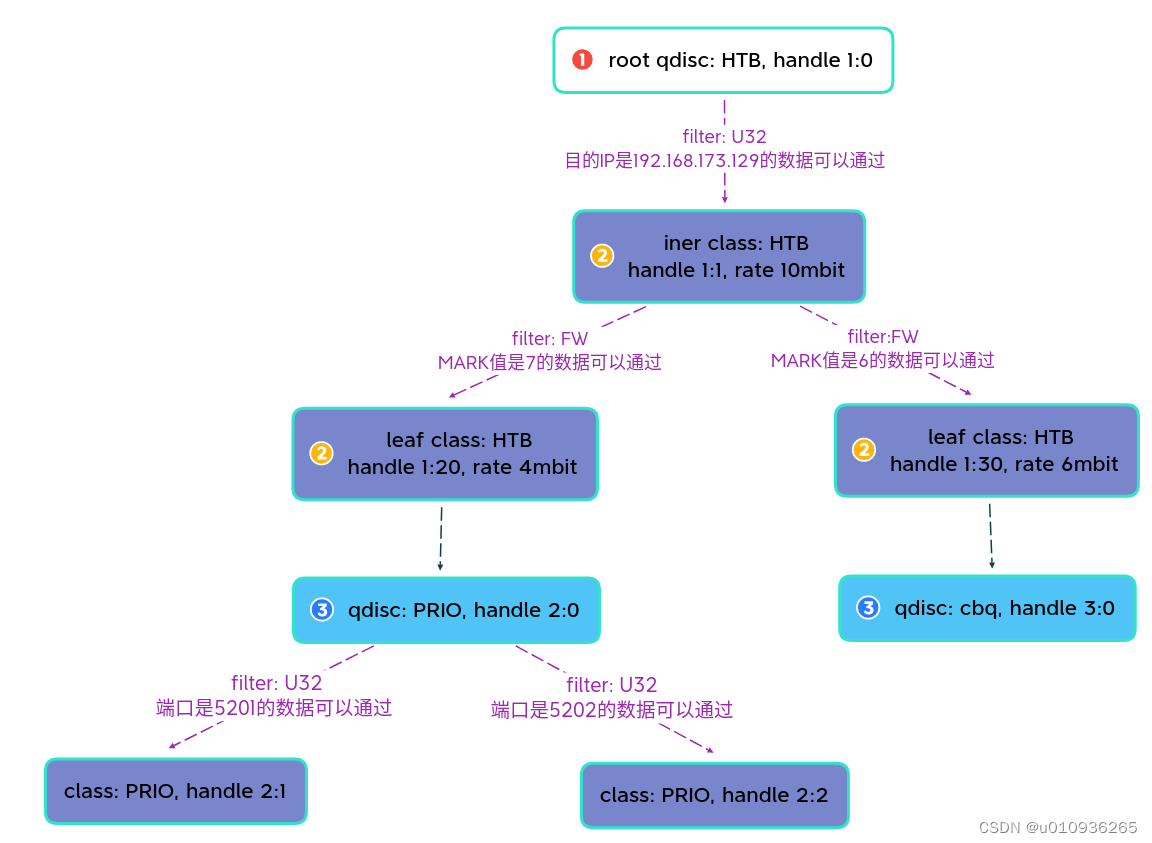

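4.2.1 Diagram of traffic control on the sending side

4.2.2 tc commands

To implement what the figure in section 4.2.1 shows, run the following tc commands on the sending machine: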

tc qdisc add dev vmnet8 root handle 1: htb default 1

tc class add dev vmnet8 parent 1: classid 1:1 htb rate 10mbit

tc class add dev vmnet8 parent 1:1 classid 1:20 htb rate 4mbit

tc class add dev vmnet8 parent 1:1 classid 1:30 htb rate 6mbit

tc qdisc add dev vmnet8 parent 1:20 handle 2: prio

tc qdisc add dev vmnet8 parent 1:30 handle 3: cbq avpkt 1500 bandwidth 1mbit

tc filter add dev vmnet8 protocol ip parent 1:0 prio 1 u32 match ip dst 192.168.173.129 flowid 1:1 action skbedit mark 7

tc filter add dev vmnet8 parent 1:1 handle 7 fw flowid 1:20

tc filter add dev vmnet8 parent 1:1 handle 6 fw flowid 1:30

tc filter add dev vmnet8 protocol ip parent 2:0 prio 1 u32 match ip dport 5201 0xffff flowid 2:1

tc filter add dev vmnet8 protocol ip parent 2:0 prio 1 u32 match ip dport 5202 0xffff flowid 2:2

4.2.3 Statistics when the mark is 7

4.2.3.1 Sending and receiving data with iperf

On the receiving machine, run: iperf -s -p 5201

On the sending machine, run:

# iperf -c 192.168.173.129 -p 5201

------------------------------------------------------------

Client connecting to 192.168.173.129, TCP port 5201

TCP window size: 128 KByte (default)

------------------------------------------------------------

[ 3] local 192.168.173.1 port 42760 connected with 192.168.173.129 port 5201

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 4.88 MBytes 4.08 Mbits/sec

4.2.3.2 Viewing qdisc statistics

# tc -s qdisc ls dev vmnet8

qdisc htb 1: root refcnt 2 r2q 10 default 0x1 direct_packets_stat 9 direct_qlen 1000

Sent 5346774 bytes 3543 pkt (dropped 0, overlimits 2259 requeues 0)

backlog 0b 0p requeues 0

qdisc prio 2: parent 1:20 bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1

Sent 5345060 bytes 3534 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc cbq 3: parent 1:30 rate 1Mbit (bounded,isolated) prio no-transmit

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

borrowed 0 overactions 0 avgidle 187500 undertime 0

4.2.3.3 Viewing class statistics

# tc -s -d class ls dev vmnet8

class htb 1:1 root rate 10Mbit ceil 10Mbit linklayer ethernet burst 1600b/1 mpu 0b cburst 1600b/1 mpu 0b level 7

Sent 5345060 bytes 3534 pkt (dropped 0, overlimits 1057 requeues 0)

backlog 0b 0p requeues 0

lended: 0 borrowed: 0 giants: 0

tokens: 19175 ctokens: 19175

class htb 1:20 parent 1:1 leaf 2: prio 0 quantum 50000 rate 4Mbit ceil 4Mbit linklayer ethernet burst 1600b/1 mpu 0b cburst 1600b/1 mpu 0b level 0

Sent 5345060 bytes 3534 pkt (dropped 0, overlimits 1202 requeues 0)

backlog 0b 0p requeues 0

lended: 1204 borrowed: 0 giants: 0

tokens: -688 ctokens: -688

class htb 1:30 parent 1:1 leaf 3: prio 0 quantum 75000 rate 6Mbit ceil 6Mbit linklayer ethernet burst 1599b/1 mpu 0b cburst 1599b/1 mpu 0b level 0

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

lended: 0 borrowed: 0 giants: 0

tokens: 33328 ctokens: 33328

class prio 2:1 parent 2:

Sent 5345060 bytes 3534 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class prio 2:2 parent 2:

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class prio 2:3 parent 2:

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class cbq 3: root rate 1Mbit linklayer ethernet cell 16b (bounded,isolated) prio no-transmit/8 weight 1Mbit allot 1514b

level 0 ewma 5 avpkt 1500b maxidle 374us

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

borrowed 0 overactions 0 avgidle 187500 undertime 0

In section 4.2.2 the skbedit action sets the mark to 7, so the transmitted data ends up in the prio qdisc.
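The path a packet takes through the filters configured in section 4.2.2 can be sketched as a small Python model. This is only an illustration of the filter chain (u32 on the root qdisc, fw on class 1:1, u32 on the prio qdisc 2:), not how the kernel implements classification; the `Packet` class and the `classify` function are hypothetical names, and what happens to packets that match no filter is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    dst: str
    dport: int
    mark: int = 0


def classify(pkt, skbedit_mark=7):
    """Return the class IDs the packet is steered through, in order."""
    path = []
    # Root qdisc 1: -> u32 match ip dst 192.168.173.129, flowid 1:1,
    # followed by "action skbedit mark N".
    if pkt.dst != "192.168.173.129":
        return path  # no filter matched; not modelled in this sketch
    pkt.mark = skbedit_mark
    path.append("1:1")
    # Class 1:1 -> fw filters pick a leaf class by mark value.
    leaf = {7: "1:20", 6: "1:30"}.get(pkt.mark)
    if leaf is None:
        return path
    path.append(leaf)
    # Leaf 1:20 holds prio qdisc 2:, whose u32 filters match the destination port.
    if leaf == "1:20":
        band = {5201: "2:1", 5202: "2:2"}.get(pkt.dport)
        if band is not None:
            path.append(band)
    return path


print(classify(Packet("192.168.173.129", 5201), skbedit_mark=7))
print(classify(Packet("192.168.173.129", 5202), skbedit_mark=6))
```

With mark 7 and port 5201 the model ends at 2:1, which is the prio class that shows non-zero counters in the statistics above.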

4.2.4 Statistics when the mark is 6

4.2.4.1 Changing the mark to 6

After running the tc commands in section 4.2.2, change the mark of the skbedit action to 6 with the following command:

# tc actions change skbedit mark 6 index 1

# tc actions list action skbedit

total acts 1

action order 0: skbedit mark 6 pipe

index 1 ref 1 bind 1

Besides the skbedit action, the mark can also be set with iptables, for example:

iptables -t mangle -A POSTROUTING -o vmnet8 -j MARK --set-mark 6

4.2.4.2 Sending and receiving data with iperf

On the receiving machine, run: iperf -s -p 5202

On the sending machine, run:

# iperf -c 192.168.173.129 -p 5202

------------------------------------------------------------

Client connecting to 192.168.173.129, TCP port 5202

TCP window size: 128 KByte (default)

------------------------------------------------------------

[ 3] local 192.168.173.1 port 57256 connected with 192.168.173.129 port 5202

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.2 sec 7.38 MBytes 6.04 Mbits/sec

4.2.4.3 Viewing qdisc statistics

# tc -s qdisc ls dev vmnet8

qdisc htb 1: root refcnt 2 r2q 10 default 0x1 direct_packets_stat 5 direct_qlen 1000

Sent 8086838 bytes 5349 pkt (dropped 0, overlimits 2159 requeues 0)

backlog 0b 0p requeues 0

qdisc prio 2: parent 1:20 bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

qdisc cbq 3: parent 1:30 rate 1Mbit (bounded,isolated) prio no-transmit

Sent 8085960 bytes 5344 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

borrowed 0 overactions 0 avgidle -2.52893e+07 undertime 0

4.2.4.4 Viewing class statistics

# tc -s -d class ls dev vmnet8

class htb 1:1 root rate 10Mbit ceil 10Mbit linklayer ethernet burst 1600b/1 mpu 0b cburst 1600b/1 mpu 0b level 7

Sent 8085960 bytes 5344 pkt (dropped 0, overlimits 1075 requeues 0)

backlog 0b 0p requeues 0

lended: 0 borrowed: 0 giants: 0

tokens: 19175 ctokens: 19175

class htb 1:20 parent 1:1 leaf 2: prio 0 quantum 50000 rate 4Mbit ceil 4Mbit linklayer ethernet burst 1600b/1 mpu 0b cburst 1600b/1 mpu 0b level 0

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

lended: 0 borrowed: 0 giants: 0

tokens: 50000 ctokens: 50000

class htb 1:30 parent 1:1 leaf 3: prio 0 quantum 75000 rate 6Mbit ceil 6Mbit linklayer ethernet burst 1599b/1 mpu 0b cburst 1599b/1 mpu 0b level 0

Sent 8085960 bytes 5344 pkt (dropped 0, overlimits 1084 requeues 0)

backlog 0b 0p requeues 0

lended: 1087 borrowed: 0 giants: 0

tokens: 31953 ctokens: 31953

class prio 2:1 parent 2:

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class prio 2:2 parent 2:

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class prio 2:3 parent 2:

Sent 0 bytes 0 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

class cbq 3: root rate 1Mbit linklayer ethernet cell 16b (bounded,isolated) prio no-transmit/8 weight 1Mbit allot 1514b

level 0 ewma 5 avpkt 1500b maxidle 374us

Sent 8085894 bytes 1086 pkt (dropped 0, overlimits 0 requeues 0)

backlog 0b 0p requeues 0

borrowed 0 overactions 0 avgidle -2.52893e+07 undertime 2.50558e+07
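As a sanity check on the two iperf runs, the short Python sketch below recomputes the throughput from the reported transfer size and duration and prints it next to the rate of the HTB class the traffic was steered into. It assumes iperf counts one MByte as 2**20 bytes and one Mbit as 10**6 bits; the helper name `mbits_per_sec` is made up for this example.

```python
def mbits_per_sec(mbytes, seconds):
    """Convert a transfer in MBytes (assumed 2**20 bytes) to Mbit/s (10**6 bits)."""
    return mbytes * 2**20 * 8 / seconds / 1e6


# (label, transfer in MBytes, duration in s, configured HTB class rate in Mbit/s)
runs = [
    ("mark 7 -> class 1:20", 4.88, 10.0, 4.0),
    ("mark 6 -> class 1:30", 7.38, 10.2, 6.0),
]

for label, mbytes, seconds, class_rate in runs:
    measured = mbits_per_sec(mbytes, seconds)
    print(f"{label}: about {measured:.2f} Mbit/s measured, "
          f"{class_rate:.0f} Mbit/s configured")
```

The recomputed values differ slightly from the bandwidth iperf printed because the displayed size and interval are rounded, but both runs stay close to the 4mbit and 6mbit rates of classes 1:20 and 1:30.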

5 Configuring traffic control for incoming data with tc

5.1 Using police

A typical application of the police action is to enforce ingress traffic rate by dropping exceeding packets. Although better done on the sender's side, especially in scenarios with lack of peer control (e.g. with dial-up providers) this is often the best one can do in order to keep latencies low under high load. The following establishes input bandwidth policing to 1mbit/s using the ingress qdisc and u32 filter:

# tc qdisc add dev eth0 handle ffff: ingress

# tc filter add dev eth0 parent ffff: u32 \
match u32 0 0 \
police rate 1mbit burst 100k

Reference: man tc-police

5.2 Using mirred

Limit ingress bandwidth on eth0 to 1mbit/s, redirect exceeding traffic to lo for debugging purposes:

# tc qdisc add dev eth0 handle ffff: ingress

# tc filter add dev eth0 parent ffff: u32 \
match u32 0 0 \
action police rate 1mbit burst 100k conform-exceed pipe \
action mirred egress redirect dev lo

6 References

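Linux TC traffic control and queueing disciplines: the qdisc tree structure explained (using HTB and RED as examples) - CSDN blog

Traffic Control HOWTO

Traffic control · GitBook

How the Linux TC (Traffic Control) framework works - CSDN blog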

Index of /tc/doc

https://www.cnblogs.com/acool/p/7779159.html