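Background

The previous post integrated Kyuubi with Spark and submitted Spark SQL to a k8s cluster, but Kyuubi and Spark themselves ran on a single local server. For high availability, this post packages them into an image and deploys it to k8s.

In practice this means packaging the local Kyuubi, Spark, and JDK into an image and setting the environment variables. The process is simple; see the Dockerfile below.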

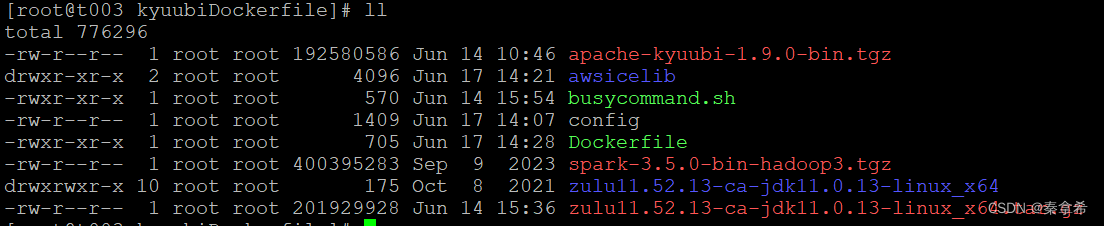

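Dockerfile directory

Prepare the Kyuubi package, the Spark package, the JDK package, and the AWS jars emphasized in the previous post (the jars under the awsicelib directory). The k8s config file must also be packaged into the image; otherwise authentication against k8s fails and no jobs can be submitted.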
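Before building, it can help to confirm that the build context really contains everything listed above. The following is a minimal sketch, not part of the original setup: the function name `check_build_context` is hypothetical, and the file names are copied from the Dockerfile in this post, so adjust them if your versions differ.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the Docker build context holds every
# file or directory that the Dockerfile in this post ADDs or COPYs.

check_build_context() {
  local dir="${1:-.}"   # build context directory, defaults to the current one
  local missing=0
  local item
  for item in \
    config \
    apache-kyuubi-1.9.0-bin.tgz \
    spark-3.5.0-bin-hadoop3.tgz \
    zulu11.52.13-ca-jdk11.0.13-linux_x64.tar.gz \
    busycommand.sh \
    awsicelib
  do
    if [ ! -e "$dir/$item" ]; then
      echo "missing: $item" >&2
      missing=1
    fi
  done
  # 0 when everything is present, 1 when at least one item is missing
  return "$missing"
}
```

A typical call would be `check_build_context . && echo "build context looks complete"`, run from the directory that holds the Dockerfile.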

Dockerfile

FROM centos

MAINTAINER 682556

RUN mkdir -p /opt

RUN mkdir -p /root/.kube

# Add the k8s config

ADD config /root/.kube

ADD apache-kyuubi-1.9.0-bin.tgz /opt

ADD spark-3.5.0-bin-hadoop3.tgz /opt

ADD zulu11.52.13-ca-jdk11.0.13-linux_x64.tar.gz /opt/java

# Keep-alive script: once Kyuubi has started, it keeps the container running so the pod does not end up Completed

ADD busycommand.sh /opt/rcommand.sh

RUN ls /opt/java

RUN ln -s /opt/apache-kyuubi-1.9.0-bin /opt/kyuubi

RUN ln -s /opt/spark-3.5.0-bin-hadoop3 /opt/spark

# Add the AWS jars needed to access MinIO

COPY awsicelib/ /opt/spark/jars

ENV JAVA_HOME /opt/java/zulu11.52.13-ca-jdk11.0.13-linux_x64

ENV SPARK_HOME /opt/spark

ENV KYUUBI_HOME /opt/kyuubi

ENV PATH $PATH:$JAVA_HOME/bin:$SPARK_HOME/bin:$KYUUBI_HOME/bin

ENV AWS_REGION=us-east-1

EXPOSE 10009

#RUN chmod -R 777 /opt

ENTRYPOINT ["/opt/rcommand.sh"]

My busycommand script checks every 10 seconds and restarts Kyuubi if the process is gone.

#!/bin/bash

# Start Kyuubi once, then check every 10 seconds and restart it if no Java process is found.

log() { echo "$(date '+%Y-%m-%d %H:%M:%S') $*" >> /opt/kyuubi.log; }

"$KYUUBI_HOME/bin/kyuubi" start

log "first started"

while true; do

  sleep 10

  # Space-separated PIDs of all running Java processes (empty if none)

  PID=$(ps -e | grep java | awk '{printf "%s ", $1}')

  if [ -n "$PID" ]; then

    log "process id: $PID"

  else

    log "process kyuubi-server does not exist"

    "$KYUUBI_HOME/bin/kyuubi" start

    log "process kyuubi-server started"

  fi

done

Build the image as 10.38.199.203:1443/fhc/kyuubi:v1.0
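The post does not show the build commands themselves. A minimal sketch of building and pushing the image under that tag might look like the following; the function name `build_and_push` and the `DOCKER` override variable are assumptions added for illustration, while `docker build -t` and `docker push` are the standard Docker CLI commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the image from a context directory and push it.
# DOCKER can be overridden (for example with podman); it defaults to docker.
DOCKER="${DOCKER:-docker}"

build_and_push() {
  local image="$1"          # full reference: registry/repository:tag
  local context="${2:-.}"   # directory containing the Dockerfile
  "$DOCKER" build -t "$image" "$context" && "$DOCKER" push "$image"
}
```

For the image in this post the call would be `build_and_push 10.38.199.203:1443/fhc/kyuubi:v1.0 .`. If the registry requires authentication, a `docker login 10.38.199.203:1443` is probably needed first.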

Write the YAML files for deployment

kyuubi-condfigMap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

  name: kyuubi-config-cm

  #namespace: ingress-nginx

  labels:

    app: kyuubi

data:

  kyuubi-env.sh: |-

    export JAVA_HOME=/opt/java/zulu11.52.13-ca-jdk11.0.13-linux_x64

    export SPARK_HOME=/opt/spark

    #export KYUUBI_JAVA_OPTS="-Xmx10g -XX:MaxMetaspaceSize=512m -XX:MaxDirectMemorySize=1024m -XX:+UseG1GC -XX:+UseStringDeduplication -XX:+UnlockDiagnosticVMOptions -XX:+UseCondCardMark -XX:+UseGCOverheadLimit -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=./logs -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -verbose:gc -Xloggc:./logs/kyuubi-server-gc-%t.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=20M"

    #export KYUUBI_BEELINE_OPTS="-Xmx2g -XX:+UseG1GC -XX:+UnlockDiagnosticVMOptions -XX:+UseCondCardMark"

  kyuubi-defaults.conf: |-

    spark.master=k8s://https://10.38.199.201:443/k8s/clusters/c-m-l7gflsx7

    spark.home=/opt/spark

    spark.hadoop.fs.s3a.access.key=apPeWWr5KpXkzEW2jNKW

    spark.hadoop.fs.s3a.secret.key=cRt3inWAhDYtuzsDnKGLGg9EJSbJ083ekuW7PejM

    spark.hadoop.fs.s3a.endpoint=http://wuxdihadl01b.seagate.com:30009

    spark.hadoop.fs.s3a.path.style.access=true

    spark.hadoop.fs.s3a.aws.region=us-east-1

    spark.hadoop.fs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSystem

    spark.sql.catalog.default=spark_catalog

    spark.sql.catalog.spark_catalog=org.apache.iceberg.spark.SparkCatalog

    spark.sql.catalog.spark_catalog.type=rest

    #spark.sql.catalog.spark_catalog.catalog-impl=org.apache.iceberg.rest.RESTCatalog

    spark.sql.catalog.spark_catalog.uri=http://10.40.8.42:31000

    spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions

    spark.sql.catalog.spark_catalog.io-impl=org.apache.iceberg.aws.s3.S3FileIO

    spark.sql.catalog.spark_catalog.warehouse=s3a://wux-hoo-dev-01/ice_warehouse

    spark.sql.catalog.spark_catalog.s3.endpoint=http://wuxdihadl01b.seagate.com:30009

    spark.sql.catalog.spark_catalog.s3.path-style-access=true

    spark.sql.catalog.spark_catalog.s3.access-key-id=apPeWWr5KpXkzEW2jNKW

    spark.sql.catalog.spark_catalog.s3.secret-access-key=cRt3inWAhDYtuzsDnKGLGg9EJSbJ083ekuW7PejM

    spark.sql.catalog.spark_catalog.region=us-east-1

    spark.kubernetes.container.image=10.38.199.203:1443/fhc/spark350:v1.0

    spark.kubernetes.namespace=default

    spark.kubernetes.authenticate.driver.serviceAccountName=spark

    spark.ssl.kubernetes.enabled=false

spark-configMap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

  name: spark-config-cm

  #namespace: ingress-nginx

  labels:

    app: kyuubi

data:

  spark-env.sh: |-

  spark-defaults.conf: |-

    spark.master=k8s://https://10.38.199.201:443/k8s/clusters/c-m-l7gflsx7

    spark.home=/opt/spark

    spark.hadoop.fs.s3a.access.key=apPeWWr5KpXkzEW2jNKW

    spark.hadoop.fs.s3a.secret.key=cRt3inWAhDYtuzsDnKGLGg9EJSbJ083ekuW7PejM

    spark.hadoop.fs.s3a.endpoint=http://wuxdihadl01b.seagate.com:30009

    spark.hadoop.fs.s3a.path.style.access=true

    spark.hadoop.fs.s3a.aws.region=us-east-1

    spark.hadoop.fs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSystem

    spark.sql.catalog.default=spark_catalog

    spark.sql.catalog.spark_catalog=org.apache.iceberg.spark.SparkCatalog

    spark.sql.catalog.spark_catalog.type=rest

    #spark.sql.catalog.spark_catalog.catalog-impl=org.apache.iceberg.rest.RESTCatalog

    spark.sql.catalog.spark_catalog.uri=http://10.40.8.42:31000

    spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions

    spark.sql.catalog.spark_catalog.io-impl=org.apache.iceberg.aws.s3.S3FileIO

    spark.sql.catalog.spark_catalog.warehouse=s3a://wux-hoo-dev-01/ice_warehouse

    spark.sql.catalog.spark_catalog.s3.endpoint=http://wuxdihadl01b.seagate.com:30009

    spark.sql.catalog.spark_catalog.s3.path-style-access=true

    spark.sql.catalog.spark_catalog.s3.access-key-id=apPeWWr5KpXkzEW2jNKW

    spark.sql.catalog.spark_catalog.s3.secret-access-key=cRt3inWAhDYtuzsDnKGLGg9EJSbJ083ekuW7PejM

    spark.sql.catalog.spark_catalog.region=us-east-1

    spark.kubernetes.container.image=10.38.199.203:1443/fhc/spark350:v1.0

    spark.kubernetes.namespace=default

    spark.kubernetes.authenticate.driver.serviceAccountName=spark

    spark.ssl.kubernetes.enabled=false

  log4j2.properties: |-

    # Set everything to be logged to the console

    rootLogger.level = info

    rootLogger.appenderRef.stdout.ref = console

    appender.console.type = Console

    appender.console.name = console

    appender.console.target = SYSTEM_ERR

    appender.console.layout.type = PatternLayout

    appender.console.layout.pattern = %d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n%ex

    logger.repl.name = org.apache.spark.repl.Main

    logger.repl.level = warn

    logger.thriftserver.name = org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver

    logger.thriftserver.level = warn

    logger.jetty1.name = org.sparkproject.jetty

    logger.jetty1.level = warn

    logger.jetty2.name = org.sparkproject.jetty.util.component.AbstractLifeCycle

    logger.jetty2.level = error

    logger.replexprTyper.name = org.apache.spark.repl.SparkIMain$exprTyper

    logger.replexprTyper.level = info

    logger.replSparkILoopInterpreter.name = org.apache.spark.repl.SparkILoop$SparkILoopInterpreter

    logger.replSparkILoopInterpreter.level = info

    logger.parquet1.name = org.apache.parquet

    logger.parquet1.level = error

    logger.parquet2.name = parquet

    logger.parquet2.level = error

    logger.RetryingHMSHandler.name = org.apache.hadoop.hive.metastore.RetryingHMSHandler

    logger.RetryingHMSHandler.level = fatal

    logger.FunctionRegistry.name = org.apache.hadoop.hive.ql.exec.FunctionRegistry

    logger.FunctionRegistry.level = error

    appender.console.filter.1.type = RegexFilter

    appender.console.filter.1.regex = .*Thrift error occurred during processing of message.*

    appender.console.filter.1.onMatch = deny

    appender.console.filter.1.onMismatch = neutral

kyuubi-dev.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: kyuubi

  #namespace: ingress-nginx

spec:

  replicas: 1

  revisionHistoryLimit: 1

  selector:

    matchLabels:

      app: kyuubi

  template:

    metadata:

      labels:

        app: kyuubi

    spec:

      #nodeSelector:

      #type: lab1

      #hostNetwork: true

      containers:

      - name: kyuubi

        imagePullPolicy: Always

        image: 10.38.199.203:1443/fhc/kyuubi:v1.0

        resources:

          requests:

            cpu: 1000m

            memory: 5000Mi

          limits:

            cpu: 2000m

            memory: 20240Mi

        ports:

        - containerPort: 10009

        env:

        - name: COORDINATOR_NODE

          value: "true"

        volumeMounts:

        - name: kyuubi-config-volume

          mountPath: /opt/kyuubi/conf

          readOnly: false

        - name: spark-config-volume

          mountPath: /opt/spark/conf

          readOnly: false

      volumes:

      - name: kyuubi-config-volume

        configMap:

          name: kyuubi-config-cm

      - name: spark-config-volume

        configMap:

          name: spark-config-cm

      - name: kyuubi-data-volume

        emptyDir: {}

      imagePullSecrets:

      - name: harbor

kyuubi-service.yaml

kind: Service

apiVersion: v1

metadata:

labels:

app: kyuubi

name: kyuubi-service

#namespace: ingress-nginx

spec:

type: NodePort

#sessionAffinity: ClientIP

ports:

- port: 10009

targetPort: 10009

name: http

#protocol: TCP

nodePort: 30009

selector:

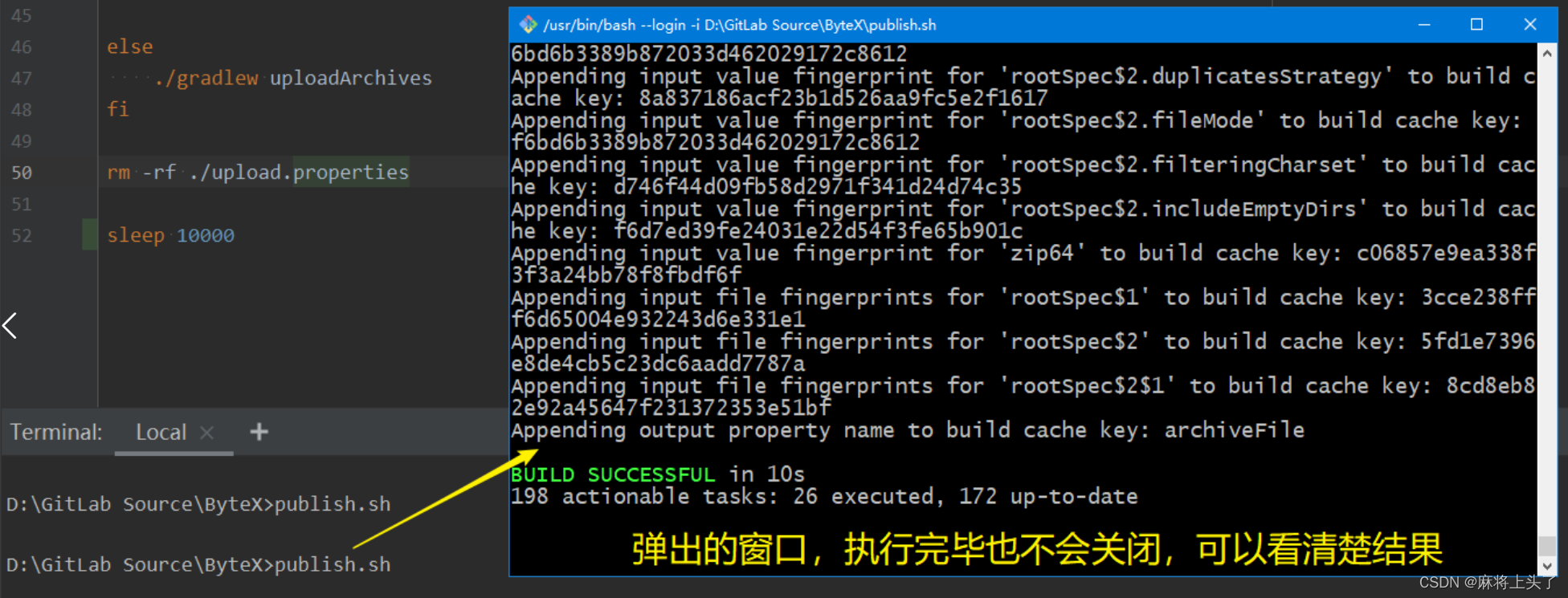

app: kyuubi发布yaml

kubectl apply -f kyuubi-condfigMap.yaml

kubectl apply -f spark-configMap.yaml

kubectl apply -f kyuubi-dev.yaml

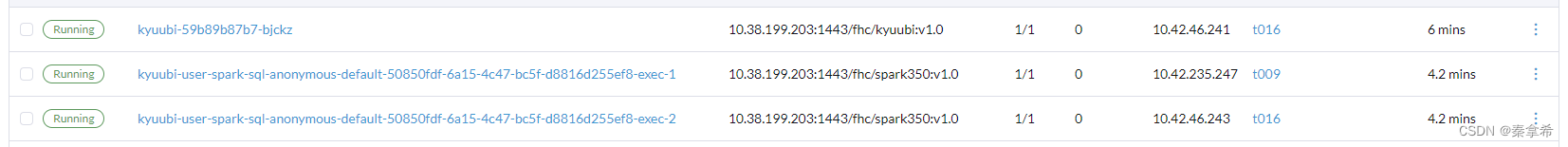

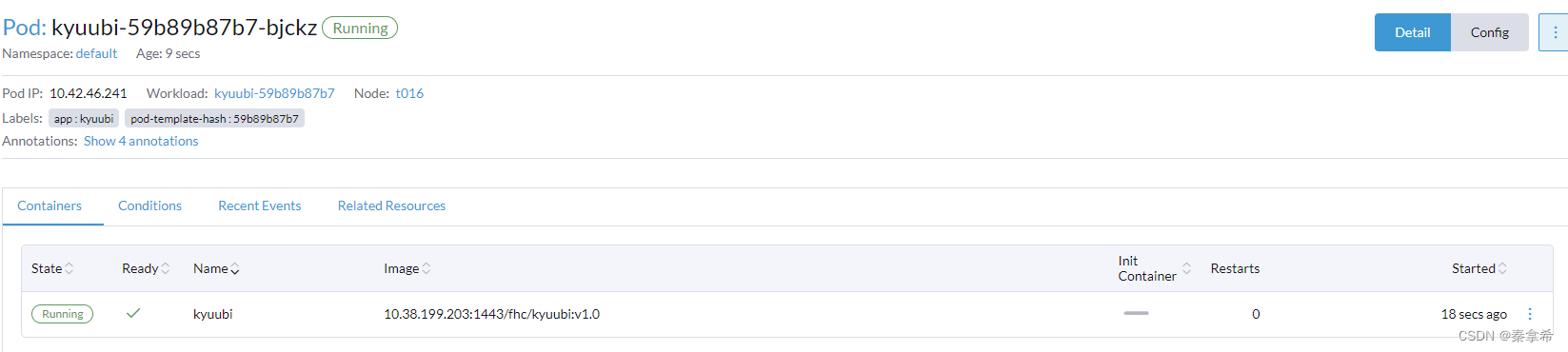

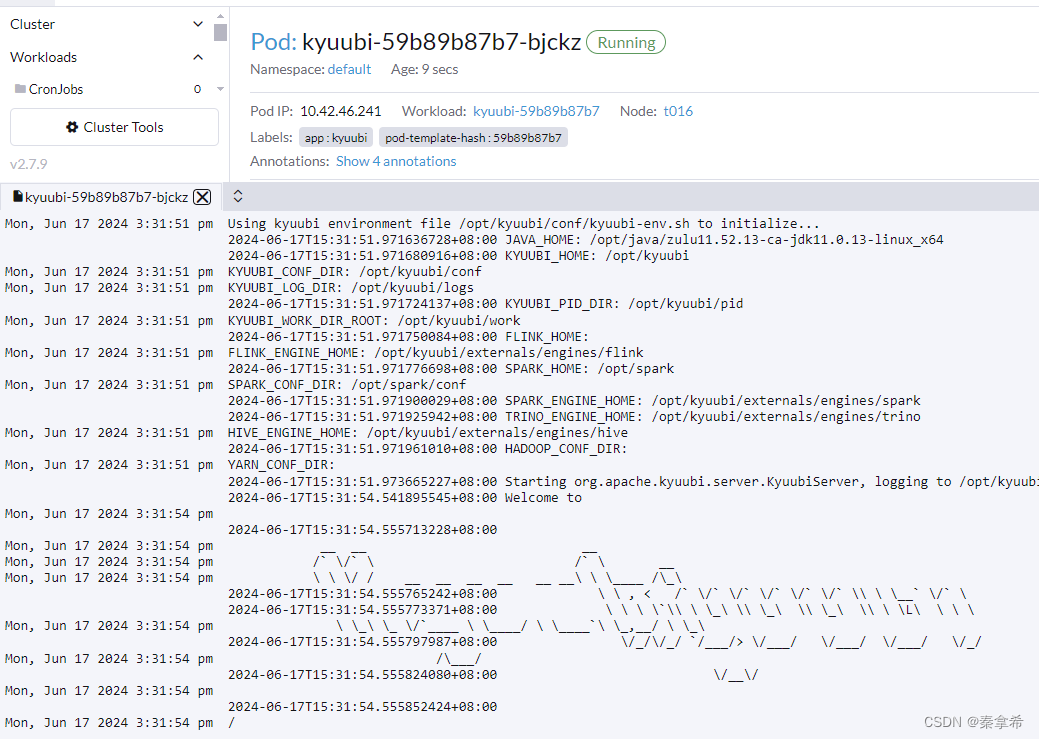

kubectl apply -f kyuubi-service.yaml查看k8s pod
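The pod status can also be checked from the command line. The sketch below assumes the resources live in the `default` namespace (the `namespace` fields in the manifests are commented out) and reuses the names from the YAML files: label `app=kyuubi`, Deployment `kyuubi`, Service `kyuubi-service`. The function name `kyuubi_status` and the `KUBECTL` override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for the Kyuubi Deployment to roll out, then
# list its pods and the NodePort Service.
KUBECTL="${KUBECTL:-kubectl}"

kyuubi_status() {
  local ns="${1:-default}"   # namespace, assumed to be "default"
  # Block until the Deployment is ready, or give up after two minutes
  "$KUBECTL" rollout status deployment/kyuubi -n "$ns" --timeout=120s
  # Pods carrying the app=kyuubi label, with node and IP columns
  "$KUBECTL" get pods -n "$ns" -l app=kyuubi -o wide
  # The Service that exposes port 10009 on nodePort 30009
  "$KUBECTL" get svc -n "$ns" kyuubi-service
}
```

Calling `kyuubi_status` with no argument checks the `default` namespace; pass another namespace name if the manifests were applied elsewhere.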

Connect to Kyuubi over JDBC from a local machine and run SQL

[root@t001 bin]# ./beeline -u 'jdbc:hive2://10.38.199.201:30009'

Connecting to jdbc:hive2://10.38.199.201:30009

2024-06-17 07:33:45.668 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.operation.LaunchEngine: Processing anonymous's query[c612b8d0-8bc7-4efa-acc8-e6c477fbda20]: PENDING_STATE -> RUNNING_STATE, statement:

LaunchEngine

2024-06-17 07:33:45.673 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.shaded.curator.framework.imps.CuratorFrameworkImpl: Starting

2024-06-17 07:33:45.673 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.shaded.zookeeper.ZooKeeper: Initiating client connection, connectString=10.42.46.241:2181 sessionTimeout=60000 watcher=org.apache.kyuubi.shaded.curator.ConnectionState@59e92870

2024-06-17 07:33:45.676 INFO KyuubiSessionManager-exec-pool: Thread-54-SendThread(kyuubi-59b89b87b7-bjckz:2181) org.apache.kyuubi.shaded.zookeeper.ClientCnxn: Opening socket connection to server kyuubi-59b89b87b7-bjckz/10.42.46.241:2181. Will not attempt to authenticate using SASL (unknown error)

2024-06-17 07:33:45.678 INFO KyuubiSessionManager-exec-pool: Thread-54-SendThread(kyuubi-59b89b87b7-bjckz:2181) org.apache.kyuubi.shaded.zookeeper.ClientCnxn: Socket connection established to kyuubi-59b89b87b7-bjckz/10.42.46.241:2181, initiating session

2024-06-17 07:33:45.702 INFO KyuubiSessionManager-exec-pool: Thread-54-SendThread(kyuubi-59b89b87b7-bjckz:2181) org.apache.kyuubi.shaded.zookeeper.ClientCnxn: Session establishment complete on server kyuubi-59b89b87b7-bjckz/10.42.46.241:2181, sessionid = 0x101113150210001, negotiated timeout = 60000

2024-06-17 07:33:45.703 INFO KyuubiSessionManager-exec-pool: Thread-54-EventThread org.apache.kyuubi.shaded.curator.framework.state.ConnectionStateManager: State change: CONNECTED

2024-06-17 07:33:45.728 WARN KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.shaded.curator.utils.ZKPaths: The version of ZooKeeper being used doesn't support Container nodes. CreateMode.PERSISTENT will be used instead.

2024-06-17 07:33:45.829 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.engine.ProcBuilder: Creating anonymous's working directory at /opt/kyuubi/work/anonymous

2024-06-17 07:33:45.852 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.engine.ProcBuilder: Logging to /opt/kyuubi/work/anonymous/kyuubi-spark-sql-engine.log.0

2024-06-17 07:33:45.860 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.Utils: Loading Kyuubi properties from /opt/spark/conf/spark-defaults.conf

2024-06-17 07:33:45.869 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.engine.EngineRef: Launching engine:

/opt/spark-3.5.0-bin-hadoop3/bin/spark-submit \

--class org.apache.kyuubi.engine.spark.SparkSQLEngine \

--conf spark.hive.server2.thrift.resultset.default.fetch.size=1000 \

--conf spark.kyuubi.client.ipAddress=10.38.199.201 \

--conf spark.kyuubi.client.version=1.9.0 \

--conf spark.kyuubi.engine.engineLog.path=/opt/kyuubi/work/anonymous/kyuubi-spark-sql-engine.log.0 \

--conf spark.kyuubi.engine.submit.time=1718609625821 \

--conf spark.kyuubi.ha.addresses=10.42.46.241:2181 \

--conf spark.kyuubi.ha.engine.ref.id=50850fdf-6a15-4c47-bc5f-d8816d255ef8 \

--conf spark.kyuubi.ha.namespace=/kyuubi_1.9.0_USER_SPARK_SQL/anonymous/default \

--conf spark.kyuubi.ha.zookeeper.auth.type=NONE \

--conf spark.kyuubi.server.ipAddress=10.42.46.241 \

--conf spark.kyuubi.session.connection.url=kyuubi-59b89b87b7-bjckz:10009 \

--conf spark.kyuubi.session.real.user=anonymous \

--conf spark.app.name=kyuubi_USER_SPARK_SQL_anonymous_default_50850fdf-6a15-4c47-bc5f-d8816d255ef8 \

--conf spark.hadoop.fs.s3a.access.key=apPeWWr5KpXkzEW2jNKW \

--conf spark.hadoop.fs.s3a.aws.region=us-east-1 \

--conf spark.hadoop.fs.s3a.endpoint=http://wuxdihadl01b.seagate.com:30009 \

--conf spark.hadoop.fs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSystem \

--conf spark.hadoop.fs.s3a.path.style.access=true \

--conf spark.hadoop.fs.s3a.secret.key=cRt3inWAhDYtuzsDnKGLGg9EJSbJ083ekuW7PejM \

--conf spark.home=/opt/spark \

--conf spark.kubernetes.authenticate.driver.oauthToken=kubeconfig-user-8g2wl8jd6v:t6fm8dphfzr8r2dhhz9f9m57nzcmbcjrmmx6txj4vpfvv799c4sj84 \

--conf spark.kubernetes.authenticate.driver.serviceAccountName=spark \

--conf spark.kubernetes.container.image=10.38.199.203:1443/fhc/spark350:v1.0 \

--conf spark.kubernetes.driver.label.kyuubi-unique-tag=50850fdf-6a15-4c47-bc5f-d8816d255ef8 \

--conf spark.kubernetes.executor.podNamePrefix=kyuubi-user-spark-sql-anonymous-default-50850fdf-6a15-4c47-bc5f-d8816d255ef8 \

--conf spark.kubernetes.namespace=default \

--conf spark.master=k8s://https://10.38.199.201:443/k8s/clusters/c-m-l7gflsx7 \

--conf spark.sql.catalog.default=spark_catalog \

--conf spark.sql.catalog.spark_catalog=org.apache.iceberg.spark.SparkCatalog \

--conf spark.sql.catalog.spark_catalog.io-impl=org.apache.iceberg.aws.s3.S3FileIO \

--conf spark.sql.catalog.spark_catalog.region=us-east-1 \

--conf spark.sql.catalog.spark_catalog.s3.access-key-id=apPeWWr5KpXkzEW2jNKW \

--conf spark.sql.catalog.spark_catalog.s3.endpoint=http://wuxdihadl01b.seagate.com:30009 \

--conf spark.sql.catalog.spark_catalog.s3.path-style-access=true \

--conf spark.sql.catalog.spark_catalog.s3.secret-access-key=cRt3inWAhDYtuzsDnKGLGg9EJSbJ083ekuW7PejM \

--conf spark.sql.catalog.spark_catalog.type=rest \

--conf spark.sql.catalog.spark_catalog.uri=http://10.40.8.42:31000 \

--conf spark.sql.catalog.spark_catalog.warehouse=s3a://wux-hoo-dev-01/ice_warehouse \

--conf spark.sql.extensions=org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions \

--conf spark.ssl.kubernetes.enabled=false \

--conf spark.kubernetes.driverEnv.SPARK_USER_NAME=anonymous \

--conf spark.executorEnv.SPARK_USER_NAME=anonymous \

--proxy-user anonymous /opt/apache-kyuubi-1.9.0-bin/externals/engines/spark/kyuubi-spark-sql-engine_2.12-1.9.0.jar

24/06/17 07:33:48 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

24/06/17 07:33:49 INFO SignalRegister: Registering signal handler for TERM

24/06/17 07:33:49 INFO SignalRegister: Registering signal handler for HUP

24/06/17 07:33:49 INFO SignalRegister: Registering signal handler for INT

24/06/17 07:33:49 INFO HiveConf: Found configuration file null

24/06/17 07:33:49 INFO SparkContext: Running Spark version 3.5.0

24/06/17 07:33:49 INFO SparkContext: OS info Linux, 3.10.0-1160.114.2.el7.x86_64, amd64

24/06/17 07:33:49 INFO SparkContext: Java version 11.0.13

24/06/17 07:33:49 INFO ResourceUtils: ==============================================================

24/06/17 07:33:49 INFO ResourceUtils: No custom resources configured for spark.driver.

24/06/17 07:33:49 INFO ResourceUtils: ==============================================================

24/06/17 07:33:49 INFO SparkContext: Submitted application: kyuubi_USER_SPARK_SQL_anonymous_default_50850fdf-6a15-4c47-bc5f-d8816d255ef8

24/06/17 07:33:49 INFO ResourceProfile: Default ResourceProfile created, executor resources: Map(cores -> name: cores, amount: 1, script: , vendor: , memory -> name: memory, amount: 1024, script: , vendor: , offHeap -> name: offHeap, amount: 0, script: , vendor: ), task resources: Map(cpus -> name: cpus, amount: 1.0)

24/06/17 07:33:49 INFO ResourceProfile: Limiting resource is cpus at 1 tasks per executor

24/06/17 07:33:49 INFO ResourceProfileManager: Added ResourceProfile id: 0

24/06/17 07:33:49 INFO SecurityManager: Changing view acls to: root,anonymous

24/06/17 07:33:49 INFO SecurityManager: Changing modify acls to: root,anonymous

24/06/17 07:33:49 INFO SecurityManager: Changing view acls groups to:

24/06/17 07:33:49 INFO SecurityManager: Changing modify acls groups to:

24/06/17 07:33:49 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: root, anonymous; groups with view permissions: EMPTY; users with modify permissions: root, anonymous; groups with modify permissions: EMPTY

24/06/17 07:33:50 INFO Utils: Successfully started service 'sparkDriver' on port 38583.

24/06/17 07:33:50 INFO SparkEnv: Registering MapOutputTracker

24/06/17 07:33:50 INFO SparkEnv: Registering BlockManagerMaster

24/06/17 07:33:50 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

24/06/17 07:33:50 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

24/06/17 07:33:50 INFO SparkEnv: Registering BlockManagerMasterHeartbeat

24/06/17 07:33:50 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-533c82c5-bfd4-40d1-b8f1-0a348b15a1cf

24/06/17 07:33:50 INFO MemoryStore: MemoryStore started with capacity 434.4 MiB

24/06/17 07:33:50 INFO SparkEnv: Registering OutputCommitCoordinator

24/06/17 07:33:50 INFO JettyUtils: Start Jetty 0.0.0.0:0 for SparkUI

24/06/17 07:33:50 INFO Utils: Successfully started service 'SparkUI' on port 42553.

24/06/17 07:33:50 INFO SparkContext: Added JAR file:/opt/apache-kyuubi-1.9.0-bin/externals/engines/spark/kyuubi-spark-sql-engine_2.12-1.9.0.jar at spark://10.42.46.241:38583/jars/kyuubi-spark-sql-engine_2.12-1.9.0.jar with timestamp 1718609629708

24/06/17 07:33:50 INFO SparkKubernetesClientFactory: Auto-configuring K8S client using current context from users K8S config file

24/06/17 07:33:51 INFO ExecutorPodsAllocator: Going to request 2 executors from Kubernetes for ResourceProfile Id: 0, target: 2, known: 0, sharedSlotFromPendingPods: 2147483647.

24/06/17 07:33:52 INFO KubernetesClientUtils: Spark configuration files loaded from Some(/opt/spark/conf) : spark-env.sh,log4j2.properties

24/06/17 07:33:52 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

24/06/17 07:33:52 INFO KubernetesClientUtils: Spark configuration files loaded from Some(/opt/spark/conf) : spark-env.sh,log4j2.properties

24/06/17 07:33:52 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 39580.

24/06/17 07:33:52 INFO NettyBlockTransferService: Server created on 10.42.46.241:39580

24/06/17 07:33:52 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

24/06/17 07:33:52 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 10.42.46.241, 39580, None)

24/06/17 07:33:52 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.46.241:39580 with 434.4 MiB RAM, BlockManagerId(driver, 10.42.46.241, 39580, None)

24/06/17 07:33:52 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 10.42.46.241, 39580, None)

24/06/17 07:33:52 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 10.42.46.241, 39580, None)

24/06/17 07:33:52 INFO KubernetesClientUtils: Spark configuration files loaded from Some(/opt/spark/conf) : spark-env.sh,log4j2.properties

24/06/17 07:33:52 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

24/06/17 07:33:57 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: No executor found for 10.42.235.247:52602

24/06/17 07:33:57 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (10.42.235.247:52608) with ID 1, ResourceProfileId 0

24/06/17 07:33:57 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: No executor found for 10.42.46.243:51040

24/06/17 07:33:57 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.235.247:46359 with 413.9 MiB RAM, BlockManagerId(1, 10.42.235.247, 46359, None)

24/06/17 07:33:58 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (10.42.46.243:51042) with ID 2, ResourceProfileId 0

24/06/17 07:33:58 INFO KubernetesClusterSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

24/06/17 07:33:58 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.46.243:34050 with 413.9 MiB RAM, BlockManagerId(2, 10.42.46.243, 34050, None)

24/06/17 07:33:58 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir.

24/06/17 07:33:58 INFO SharedState: Warehouse path is 'file:/opt/apache-kyuubi-1.9.0-bin/work/anonymous/spark-warehouse'.

24/06/17 07:34:01 INFO CatalogUtil: Loading custom FileIO implementation: org.apache.iceberg.aws.s3.S3FileIO

24/06/17 07:34:02 INFO CodeGenerator: Code generated in 293.979616 ms

24/06/17 07:34:02 INFO CodeGenerator: Code generated in 10.25986 ms

24/06/17 07:34:03 INFO CodeGenerator: Code generated in 12.699915 ms

24/06/17 07:34:03 INFO SparkContext: Starting job: isEmpty at KyuubiSparkUtil.scala:49

24/06/17 07:34:03 INFO DAGScheduler: Got job 0 (isEmpty at KyuubiSparkUtil.scala:49) with 1 output partitions

24/06/17 07:34:03 INFO DAGScheduler: Final stage: ResultStage 0 (isEmpty at KyuubiSparkUtil.scala:49)

24/06/17 07:34:03 INFO DAGScheduler: Parents of final stage: List()

24/06/17 07:34:03 INFO DAGScheduler: Missing parents: List()

24/06/17 07:34:03 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[2] at isEmpty at KyuubiSparkUtil.scala:49), which has no missing parents

24/06/17 07:34:03 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 7.0 KiB, free 434.4 MiB)

24/06/17 07:34:03 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 3.7 KiB, free 434.4 MiB)

24/06/17 07:34:03 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.42.46.241:39580 (size: 3.7 KiB, free: 434.4 MiB)

24/06/17 07:34:03 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1580

24/06/17 07:34:03 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[2] at isEmpty at KyuubiSparkUtil.scala:49) (first 15 tasks are for partitions Vector(0))

24/06/17 07:34:03 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks resource profile 0

24/06/17 07:34:03 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0) (10.42.46.243, executor 2, partition 0, PROCESS_LOCAL, 10848 bytes)

24/06/17 07:34:03 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.42.46.243:34050 (size: 3.7 KiB, free: 413.9 MiB)

24/06/17 07:34:04 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1115 ms on 10.42.46.243 (executor 2) (1/1)

24/06/17 07:34:04 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

24/06/17 07:34:04 INFO DAGScheduler: ResultStage 0 (isEmpty at KyuubiSparkUtil.scala:49) finished in 1.414 s

24/06/17 07:34:04 INFO DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job

24/06/17 07:34:04 INFO TaskSchedulerImpl: Killing all running tasks in stage 0: Stage finished

24/06/17 07:34:04 INFO DAGScheduler: Job 0 finished: isEmpty at KyuubiSparkUtil.scala:49, took 1.522348 s

24/06/17 07:34:04 INFO BlockManagerInfo: Removed broadcast_0_piece0 on 10.42.46.241:39580 in memory (size: 3.7 KiB, free: 434.4 MiB)

24/06/17 07:34:04 INFO Utils: Loading Kyuubi properties from /opt/kyuubi/conf/kyuubi-defaults.conf

24/06/17 07:34:04 INFO BlockManagerInfo: Removed broadcast_0_piece0 on 10.42.46.243:34050 in memory (size: 3.7 KiB, free: 413.9 MiB)

24/06/17 07:34:04 INFO ThreadUtils: SparkSQLSessionManager-exec-pool: pool size: 100, wait queue size: 100, thread keepalive time: 60000 ms

24/06/17 07:34:04 INFO SparkSQLOperationManager: Service[SparkSQLOperationManager] is initialized.

24/06/17 07:34:04 INFO SparkSQLSessionManager: Service[SparkSQLSessionManager] is initialized.

24/06/17 07:34:04 INFO SparkSQLBackendService: Service[SparkSQLBackendService] is initialized.

24/06/17 07:34:05 INFO SparkTBinaryFrontendService: Initializing SparkTBinaryFrontend on kyuubi-59b89b87b7-bjckz:42178 with [9, 999] worker threads

24/06/17 07:34:05 INFO CuratorFrameworkImpl: Starting

24/06/17 07:34:05 INFO ZooKeeper: Client environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT

24/06/17 07:34:05 INFO ZooKeeper: Client environment:host.name=kyuubi-59b89b87b7-bjckz

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.version=11.0.13

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.vendor=Azul Systems, Inc.

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.home=/opt/java/zulu11.52.13-ca-jdk11.0.13-linux_x64

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.class.path=/opt/spark/conf/:/opt/spark/jars/HikariCP-2.5.1.jar:/opt/spark/jars/JLargeArrays-1.5.jar:/opt/spark/jars/JTransforms-3.1.jar:/opt/spark/jars/RoaringBitmap-0.9.45.jar:/opt/spark/jars/ST4-4.0.4.jar:/opt/spark/jars/activation-1.1.1.jar:/opt/spark/jars/aircompressor-0.25.jar:/opt/spark/jars/algebra_2.12-2.0.1.jar:/opt/spark/jars/annotations-17.0.0.jar:/opt/spark/jars/antlr-runtime-3.5.2.jar:/opt/spark/jars/antlr4-runtime-4.9.3.jar:/opt/spark/jars/aopalliance-repackaged-2.6.1.jar:/opt/spark/jars/arpack-3.0.3.jar:/opt/spark/jars/arpack_combined_all-0.1.jar:/opt/spark/jars/arrow-format-12.0.1.jar:/opt/spark/jars/arrow-memory-core-12.0.1.jar:/opt/spark/jars/arrow-memory-netty-12.0.1.jar:/opt/spark/jars/arrow-vector-12.0.1.jar:/opt/spark/jars/audience-annotations-0.5.0.jar:/opt/spark/jars/avro-1.11.2.jar:/opt/spark/jars/avro-ipc-1.11.2.jar:/opt/spark/jars/avro-mapred-1.11.2.jar:/opt/spark/jars/blas-3.0.3.jar:/opt/spark/jars/bonecp-0.8.0.RELEASE.jar:/opt/spark/jars/breeze-macros_2.12-2.1.0.jar:/opt/spark/jars/breeze_2.12-2.1.0.jar:/opt/spark/jars/cats-kernel_2.12-2.1.1.jar:/opt/spark/jars/chill-java-0.10.0.jar:/opt/spark/jars/chill_2.12-0.10.0.jar:/opt/spark/jars/commons-cli-1.5.0.jar:/opt/spark/jars/commons-codec-1.16.0.jar:/opt/spark/jars/commons-collections-3.2.2.jar:/opt/spark/jars/commons-collections4-4.4.jar:/opt/spark/jars/commons-compiler-3.1.9.jar:/opt/spark/jars/commons-compress-1.23.0.jar:/opt/spark/jars/commons-crypto-1.1.0.jar:/opt/spark/jars/commons-dbcp-1.4.jar:/opt/spark/jars/commons-io-2.13.0.jar:/opt/spark/jars/commons-lang-2.6.jar:/opt/spark/jars/commons-lang3-3.12.0.jar:/opt/spark/jars/commons-logging-1.1.3.jar:/opt/spark/jars/commons-math3-3.6.1.jar:/opt/spark/jars/commons-pool-1.5.4.jar:/opt/spark/jars/commons-text-1.10.0.jar:/opt/spark/jars/compress-lzf-1.1.2.jar:/opt/spark/jars/curator-client-2.13.0.jar:/opt/spark/jars/curator-framework-2.13.0.jar:/opt/spark/jars/curator-recipes-2.13.
0.jar:/opt/spark/jars/datanucleus-api-jdo-4.2.4.jar:/opt/spark/jars/datanucleus-core-4.1.17.jar:/opt/spark/jars/datanucleus-rdbms-4.1.19.jar:/opt/spark/jars/datasketches-java-3.3.0.jar:/opt/spark/jars/datasketches-memory-2.1.0.jar:/opt/spark/jars/derby-10.14.2.0.jar:/opt/spark/jars/dropwizard-metrics-hadoop-metrics2-reporter-0.1.2.jar:/opt/spark/jars/flatbuffers-java-1.12.0.jar:/opt/spark/jars/gson-2.2.4.jar:/opt/spark/jars/guava-14.0.1.jar:/opt/spark/jars/hadoop-client-api-3.3.4.jar:/opt/spark/jars/hadoop-client-runtime-3.3.4.jar:/opt/spark/jars/hadoop-shaded-guava-1.1.1.jar:/opt/spark/jars/hadoop-yarn-server-web-proxy-3.3.4.jar:/opt/spark/jars/hive-beeline-2.3.9.jar:/opt/spark/jars/hive-cli-2.3.9.jar:/opt/spark/jars/hive-common-2.3.9.jar:/opt/spark/jars/hive-exec-2.3.9-core.jar:/opt/spark/jars/hive-jdbc-2.3.9.jar:/opt/spark/jars/hive-llap-common-2.3.9.jar:/opt/spark/jars/hive-metastore-2.3.9.jar:/opt/spark/jars/hive-serde-2.3.9.jar:/opt/spark/jars/hive-service-rpc-3.1.3.jar:/opt/spark/jars/hive-shims-0.23-2.3.9.jar:/opt/spark/jars/hive-shims-2.3.9.jar:/opt/spark/jars/hive-shims-common-2.3.9.jar:/opt/spark/jars/hive-shims-scheduler-2.3.9.jar:/opt/spark/jars/hive-storage-api-2.8.1.jar:/opt/spark/jars/hk2-api-2.6.1.jar:/opt/spark/jars/hk2-locator-2.6.1.jar:/opt/spark/jars/hk2-utils-2.6.1.jar:/opt/spark/jars/httpclient-4.5.14.jar:/opt/spark/jars/httpcore-4.4.16.jar:/opt/spark/jars/istack-commons-runtime-3.0.8.jar:/opt/spark/jars/ivy-2.5.1.jar:/opt/spark/jars/jackson-annotations-2.15.2.jar:/opt/spark/jars/jackson-core-2.15.2.jar:/opt/spark/jars/jackson-core-asl-1.9.13.jar:/opt/spark/jars/jackson-databind-2.15.2.jar:/opt/spark/jars/jackson-dataformat-yaml-2.15.2.jar:/opt/spark/jars/jackson-datatype-jsr310-2.15.2.jar:/opt/spark/jars/jackson-mapper-asl-1.9.13.jar:/opt/spark/jars/jackson-module-scala_2.12-2.15.2.jar:/opt/spark/jars/jakarta.annotation-api-1.3.5.jar:/opt/spark/jars/jakarta.inject-2.6.1.jar:/opt/spark/jars/jakarta.servlet-api-4.0.3.jar:/opt/spark/jars/jakarta
.validation-api-2.0.2.jar:/opt/spark/jars/jakarta.ws.rs-api-2.1.6.jar:/opt/spark/jars/jakarta.xml.bind-api-2.3.2.jar:/opt/spark/jars/janino-3.1.9.jar:/opt/spark/jars/javassist-3.29.2-GA.jar:/opt/spark/jars/jpam-1.1.jar:/opt/spark/jars/javax.jdo-3.2.0-m3.jar:/opt/spark/jars/javolution-5.5.1.jar:/opt/spark/jars/jaxb-runtime-2.3.2.jar:/opt/spark/jars/jcl-over-slf4j-2.0.7.jar:/opt/spark/jars/jdo-api-3.0.1.jar:/opt/spark/jars/jersey-client-2.40.jar:/opt/spark/jars/jersey-common-2.40.jar:/opt/spark/jars/jersey-container-servlet-2.40.jar:/opt/spark/jars/jersey-container-servlet-core-2.40.jar:/opt/spark/jars/jersey-hk2-2.40.jar:/opt/spark/jars/jersey-server-2.40.jar:/opt/spark/jars/jline-2.14.6.jar:/opt/spark/jars/joda-time-2.12.5.jar:/opt/spark/jars/jodd-core-3.5.2.jar:/opt/spark/jars/json-1.8.jar:/opt/spark/jars/json4s-ast_2.12-3.7.0-M11.jar:/opt/spark/jars/json4s-core_2.12-3.7.0-M11.jar:/opt/spark/jars/json4s-jackson_2.12-3.7.0-M11.jar:/opt/spark/jars/json4s-scalap_2.12-3.7.0-M11.jar:/opt/spark/jars/jsr305-3.0.0.jar:/opt/spark/jars/jta-1.1.jar:/opt/spark/jars/jul-to-slf4j-2.0.7.jar:/opt/spark/jars/kryo-shaded-4.0.2.jar:/opt/spark/jars/kubernetes-client-6.7.2.jar:/opt/spark/jars/kubernetes-client-api-6.7.2.jar:/opt/spark/jars/kubernetes-httpclient-okhttp-6.7.2.jar:/opt/spark/jars/kubernetes-model-admissionregistration-6.7.2.jar:/opt/spark/jars/kubernetes-model-apiextensions-6.7.2.jar:/opt/spark/jars/kubernetes-model-apps-6.7.2.jar:/opt/spark/jars/kubernetes-model-autoscaling-6.7.2.jar:/opt/spark/jars/kubernetes-model-batch-6.7.2.jar:/opt/spark/jars/kubernetes-model-certificates-6.7.2.jar:/opt/spark/jars/kubernetes-model-common-6.7.2.jar:/opt/spark/jars/kubernetes-model-coordination-6.7.2.jar:/opt/spark/jars/kubernetes-model-core-6.7.2.jar:/opt/spark/jars/kubernetes-model-discovery-6.7.2.jar:/opt/spark/jars/kubernetes-model-events-6.7.2.jar:/opt/spark/jars/kubernetes-model-extensions-6.7.2.jar:/opt/spark/jars/kubernetes-model-flowcontrol-6.7.2.jar:/opt/spark/jars/kubernete
s-model-gatewayapi-6.7.2.jar:/opt/spark/jars/kubernetes-model-metrics-6.7.2.jar:/opt/spark/jars/kubernetes-model-networking-6.7.2.jar:/opt/spark/jars/kubernetes-model-node-6.7.2.jar:/opt/spark/jars/kubernetes-model-policy-6.7.2.jar:/opt/spark/jars/kubernetes-model-rbac-6.7.2.jar:/opt/spark/jars/kubernetes-model-resource-6.7.2.jar:/opt/spark/jars/kubernetes-model-scheduling-6.7.2.jar:/opt/spark/jars/kubernetes-model-storageclass-6.7.2.jar:/opt/spark/jars/lapack-3.0.3.jar:/opt/spark/jars/leveldbjni-all-1.8.jar:/opt/spark/jars/libfb303-0.9.3.jar:/opt/spark/jars/libthrift-0.12.0.jar:/opt/spark/jars/log4j-1.2-api-2.20.0.jar:/opt/spark/jars/log4j-api-2.20.0.jar:/opt/spark/jars/log4j-core-2.20.0.jar:/opt/spark/jars/log4j-slf4j2-impl-2.20.0.jar:/opt/spark/jars/logging-interceptor-3.12.12.jar:/opt/spark/jars/lz4-java-1.8.0.jar:/opt/spark/jars/mesos-1.4.3-shaded-protobuf.jar:/opt/spark/jars/metrics-core-4.2.19.jar:/opt/spark/jars/metrics-graphite-4.2.19.jar:/opt/spark/jars/metrics-jmx-4.2.19.jar:/opt/spark/jars/metrics-json-4.2.19.jar:/opt/spark/jars/metrics-jvm-4.2.19.jar:/opt/spark/jars/minlog-1.3.0.jar:/opt/spark/jars/netty-all-4.1.96.Final.jar:/opt/spark/jars/netty-buffer-4.1.96.Final.jar:/opt/spark/jars/netty-codec-4.1.96.Final.jar:/opt/spark/jars/netty-codec-http-4.1.96.Final.jar:/opt/spark/jars/netty-codec-http2-4.1.96.Final.jar:/opt/spark/jars/netty-codec-socks-4.1.96.Final.jar:/opt/spark/jars/netty-common-4.1.96.Final.jar:/opt/spark/jars/netty-handler-4.1.96.Final.jar:/opt/spark/jars/netty-handler-proxy-4.1.96.Final.jar:/opt/spark/jars/netty-resolver-4.1.96.Final.jar:/opt/spark/jars/netty-transport-4.1.96.Final.jar:/opt/spark/jars/netty-transport-classes-epoll-4.1.96.Final.jar:/opt/spark/jars/netty-transport-classes-kqueue-4.1.96.Final.jar:/opt/spark/jars/netty-transport-native-epoll-4.1.96.Final-linux-aarch_64.jar:/opt/spark/jars/netty-transport-native-epoll-4.1.96.Final-linux-x86_64.jar:/opt/spark/jars/netty-transport-native-kqueue-4.1.96.Final-osx-aarch_64.jar:/op
t/spark/jars/netty-transport-native-kqueue-4.1.96.Final-osx-x86_64.jar:/opt/spark/jars/netty-transport-native-unix-common-4.1.96.Final.jar:/opt/spark/jars/objenesis-3.3.jar:/opt/spark/jars/okhttp-3.12.12.jar:/opt/spark/jars/okio-1.15.0.jar:/opt/spark/jars/opencsv-2.3.jar:/opt/spark/jars/orc-core-1.9.1-shaded-protobuf.jar:/opt/spark/jars/orc-shims-1.9.1.jar:/opt/spark/jars/orc-mapreduce-1.9.1-shaded-protobuf.jar:/opt/spark/jars/oro-2.0.8.jar:/opt/spark/jars/osgi-resource-locator-1.0.3.jar:/opt/spark/jars/paranamer-2.8.jar:/opt/spark/jars/parquet-column-1.13.1.jar:/opt/spark/jars/parquet-common-1.13.1.jar:/opt/spark/jars/parquet-encoding-1.13.1.jar:/opt/spark/jars/parquet-format-structures-1.13.1.jar:/opt/spark/jars/parquet-hadoop-1.13.1.jar:/opt/spark/jars/parquet-jackson-1.13.1.jar:/opt/spark/jars/pickle-1.3.jar:/opt/spark/jars/py4j-0.10.9.7.jar:/opt/spark/jars/rocksdbjni-8.3.2.jar:/opt/spark/jars/scala-collection-compat_2.12-2.7.0.jar:/opt/spark/jars/scala-compiler-2.12.18.jar:/opt/spark/jars/scala-library-2.12.18.jar:/opt/spark/jars/scala-parser-combinators_2.12-2.3.0.jar:/opt/spark/jars/scala-reflect-2.12.18.jar:/opt/spark/jars/scala-xml_2.12-2.1.0.jar:/opt/spark/jars/shims-0.9.45.jar:/opt/spark/jars/slf4j-api-2.0.7.jar:/opt/spark/jars/snakeyaml-2.0.jar:/opt/spark/jars/snakeyaml-engine-2.6.jar:/opt/spark/jars/snappy-java-1.1.10.3.jar:/opt/spark/jars/spark-catalyst_2.12-3.5.0.jar:/opt/spark/jars/spark-common-utils_2.12-3.5.0.jar:/opt/spark/jars/spark-core_2.12-3.5.0.jar:/opt/spark/jars/spark-graphx_2.12-3.5.0.jar:/opt/spark/jars/spark-hive-thriftserver_2.12-3.5.0.jar:/opt/spark/jars/spark-hive_2.12-3.5.0.jar:/opt/spark/jars/spark-kubernetes_2.12-3.5.0.jar:/opt/spark/jars/spark-kvstore_2.12-3.5.0.jar:/opt/spark/jars/spark-launcher_2.12-3.5.0.jar:/opt/spark/jars/spark-mesos_2.12-3.5.0.jar:/opt/spark/jars/spark-mllib-local_2.12-3.5.0.jar:/opt/spark/jars/spark-mllib_2.12-3.5.0.jar:/opt/spark/jars/spark-network-common_2.12-3.5.0.jar:/opt/spark/jars/spark-network-shuffl
e_2.12-3.5.0.jar:/opt/spark/jars/spark-repl_2.12-3.5.0.jar:/opt/spark/jars/spark-sketch_2.12-3.5.0.jar:/opt/spark/jars/spark-sql-api_2.12-3.5.0.jar:/opt/spark/jars/spark-sql_2.12-3.5.0.jar:/opt/spark/jars/spark-streaming_2.12-3.5.0.jar:/opt/spark/jars/spark-tags_2.12-3.5.0.jar:/opt/spark/jars/spark-unsafe_2.12-3.5.0.jar:/opt/spark/jars/spark-yarn_2.12-3.5.0.jar:/opt/spark/jars/spire-macros_2.12-0.17.0.jar:/opt/spark/jars/spire-platform_2.12-0.17.0.jar:/opt/spark/jars/spire-util_2.12-0.17.0.jar:/opt/spark/jars/spire_2.12-0.17.0.jar:/opt/spark/jars/stax-api-1.0.1.jar:/opt/spark/jars/stream-2.9.6.jar:/opt/spark/jars/super-csv-2.2.0.jar:/opt/spark/jars/threeten-extra-1.7.1.jar:/opt/spark/jars/tink-1.9.0.jar:/opt/spark/jars/transaction-api-1.1.jar:/opt/spark/jars/univocity-parsers-2.9.1.jar:/opt/spark/jars/xbean-asm9-shaded-4.23.jar:/opt/spark/jars/xz-1.9.jar:/opt/spark/jars/zjsonpatch-0.3.0.jar:/opt/spark/jars/zookeeper-3.6.3.jar:/opt/spark/jars/zookeeper-jute-3.6.3.jar:/opt/spark/jars/zstd-jni-1.5.5-4.jar:/opt/spark/jars/apache-client-2.25.65.jar:/opt/spark/jars/auth-2.25.65.jar:/opt/spark/jars/aws-core-2.25.65.jar:/opt/spark/jars/aws-java-sdk-bundle-1.12.262.jar:/opt/spark/jars/aws-json-protocol-2.25.65.jar:/opt/spark/jars/aws-query-protocol-2.25.65.jar:/opt/spark/jars/aws-xml-protocol-2.25.65.jar:/opt/spark/jars/checksums-2.25.65.jar:/opt/spark/jars/checksums-spi-2.25.65.jar:/opt/spark/jars/dynamodb-2.25.65.jar:/opt/spark/jars/endpoints-spi-2.25.65.jar:/opt/spark/jars/glue-2.25.65.jar:/opt/spark/jars/hadoop-aws-3.3.4.jar:/opt/spark/jars/http-auth-2.25.65.jar:/opt/spark/jars/http-auth-aws-2.25.65.jar:/opt/spark/jars/http-auth-spi-2.25.65.jar:/opt/spark/jars/http-client-spi-2.25.65.jar:/opt/spark/jars/iceberg-spark-runtime-3.5_2.12-1.5.0.jar:/opt/spark/jars/identity-spi-2.25.65.jar:/opt/spark/jars/json-utils-2.25.65.jar:/opt/spark/jars/kms-2.25.65.jar:/opt/spark/jars/metrics-spi-2.25.65.jar:/opt/spark/jars/profiles-2.25.65.jar:/opt/spark/jars/protocol-core-2.25.65.jar:
/opt/spark/jars/reactive-streams-1.0.4.jar:/opt/spark/jars/regions-2.25.65.jar:/opt/spark/jars/s3-2.25.65.jar:/opt/spark/jars/sdk-core-2.25.65.jar:/opt/spark/jars/sts-2.25.65.jar:/opt/spark/jars/third-party-jackson-core-2.25.65.jar:/opt/spark/jars/utils-2.25.65.jar

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.library.path=/usr/java/packages/lib:/usr/lib64:/lib64:/lib:/usr/lib

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.io.tmpdir=/tmp

24/06/17 07:34:05 INFO ZooKeeper: Client environment:java.compiler=<NA>

24/06/17 07:34:05 INFO ZooKeeper: Client environment:os.name=Linux

24/06/17 07:34:05 INFO ZooKeeper: Client environment:os.arch=amd64

24/06/17 07:34:05 INFO ZooKeeper: Client environment:os.version=3.10.0-1160.114.2.el7.x86_64

24/06/17 07:34:05 INFO ZooKeeper: Client environment:user.name=root

24/06/17 07:34:05 INFO ZooKeeper: Client environment:user.home=/root

24/06/17 07:34:05 INFO ZooKeeper: Client environment:user.dir=/opt/apache-kyuubi-1.9.0-bin/work/anonymous

24/06/17 07:34:05 INFO ZooKeeper: Initiating client connection, connectString=10.42.46.241:2181 sessionTimeout=60000 watcher=org.apache.kyuubi.shaded.curator.ConnectionState@13482361

24/06/17 07:34:05 INFO EngineServiceDiscovery: Service[EngineServiceDiscovery] is initialized.

24/06/17 07:34:05 INFO SparkTBinaryFrontendService: Service[SparkTBinaryFrontend] is initialized.

24/06/17 07:34:05 INFO SparkSQLEngine: Service[SparkSQLEngine] is initialized.

24/06/17 07:34:05 INFO ClientCnxn: Opening socket connection to server kyuubi-59b89b87b7-bjckz/10.42.46.241:2181. Will not attempt to authenticate using SASL (unknown error)

24/06/17 07:34:05 INFO ClientCnxn: Socket connection established to kyuubi-59b89b87b7-bjckz/10.42.46.241:2181, initiating session

24/06/17 07:34:05 INFO SparkSQLOperationManager: Service[SparkSQLOperationManager] is started.

24/06/17 07:34:05 INFO SparkSQLSessionManager: Service[SparkSQLSessionManager] is started.

24/06/17 07:34:05 INFO SparkSQLBackendService: Service[SparkSQLBackendService] is started.

24/06/17 07:34:05 INFO ClientCnxn: Session establishment complete on server kyuubi-59b89b87b7-bjckz/10.42.46.241:2181, sessionid = 0x101113150210002, negotiated timeout = 60000

24/06/17 07:34:05 INFO ConnectionStateManager: State change: CONNECTED

24/06/17 07:34:05 INFO ZookeeperDiscoveryClient: Zookeeper client connection state changed to: CONNECTED

24/06/17 07:34:05 INFO ZookeeperDiscoveryClient: Created a /kyuubi_1.9.0_USER_SPARK_SQL/anonymous/default/serverUri=10.42.46.241:42178;version=1.9.0;spark.driver.memory=;spark.executor.memory=;kyuubi.engine.id=spark-a13ae55c985c44168bd3fa6476c1ac1f;kyuubi.engine.url=10.42.46.241:42553;refId=50850fdf-6a15-4c47-bc5f-d8816d255ef8;sequence=0000000000 on ZooKeeper for KyuubiServer uri: 10.42.46.241:42178

24/06/17 07:34:05 INFO EngineServiceDiscovery: Registered EngineServiceDiscovery in namespace /kyuubi_1.9.0_USER_SPARK_SQL/anonymous/default.

24/06/17 07:34:05 INFO EngineServiceDiscovery: Service[EngineServiceDiscovery] is started.

24/06/17 07:34:05 INFO SparkTBinaryFrontendService: Service[SparkTBinaryFrontend] is started.

24/06/17 07:34:05 INFO SparkSQLEngine: Service[SparkSQLEngine] is started.

24/06/17 07:34:05 INFO SparkSQLEngine:

Spark application name: kyuubi_USER_SPARK_SQL_anonymous_default_50850fdf-6a15-4c47-bc5f-d8816d255ef8

application ID: spark-a13ae55c985c44168bd3fa6476c1ac1f

application tags:

application web UI: http://10.42.46.241:42553

master: k8s://https://10.38.199.201:443/k8s/clusters/c-m-l7gflsx7

version: 3.5.0

driver: [cpu: 1, mem: 1g]

executor: [cpu: 2, mem: 1g, maxNum: 2]

Start time: Mon Jun 17 07:33:49 UTC 2024

User: anonymous (shared mode: USER)

State: STARTED

2024-06-17 07:34:05.979 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.ha.client.zookeeper.ZookeeperDiscoveryClient: Get service instance:10.42.46.241:42178 engine id:spark-a13ae55c985c44168bd3fa6476c1ac1f and version:1.9.0 under /kyuubi_1.9.0_USER_SPARK_SQL/anonymous/default

24/06/17 07:34:06 INFO SparkTBinaryFrontendService: Client protocol version: HIVE_CLI_SERVICE_PROTOCOL_V10

24/06/17 07:34:06 INFO SparkSQLSessionManager: Opening session for anonymous@10.42.46.241

24/06/17 07:34:06 INFO CatalogUtil: Loading custom FileIO implementation: org.apache.iceberg.aws.s3.S3FileIO

24/06/17 07:34:06 INFO HiveUtils: Initializing HiveMetastoreConnection version 2.3.9 using Spark classes.

24/06/17 07:34:06 INFO HiveClientImpl: Warehouse location for Hive client (version 2.3.9) is file:/opt/apache-kyuubi-1.9.0-bin/work/anonymous/spark-warehouse

24/06/17 07:34:06 WARN HiveConf: HiveConf of name hive.stats.jdbc.timeout does not exist

24/06/17 07:34:06 WARN HiveConf: HiveConf of name hive.stats.retries.wait does not exist

24/06/17 07:34:06 INFO HiveMetaStore: 0: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

24/06/17 07:34:06 INFO ObjectStore: ObjectStore, initialize called

24/06/17 07:34:07 INFO Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

24/06/17 07:34:07 INFO Persistence: Property datanucleus.cache.level2 unknown - will be ignored

24/06/17 07:34:12 INFO ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

24/06/17 07:34:18 INFO MetaStoreDirectSql: Using direct SQL, underlying DB is DERBY

24/06/17 07:34:18 INFO ObjectStore: Initialized ObjectStore

24/06/17 07:34:19 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 2.3.0

24/06/17 07:34:19 WARN ObjectStore: setMetaStoreSchemaVersion called but recording version is disabled: version = 2.3.0, comment = Set by MetaStore UNKNOWN@10.42.46.241

24/06/17 07:34:19 WARN ObjectStore: Failed to get database default, returning NoSuchObjectException

24/06/17 07:34:19 INFO HiveMetaStore: Added admin role in metastore

24/06/17 07:34:19 INFO HiveMetaStore: Added public role in metastore

24/06/17 07:34:20 INFO HiveMetaStore: No user is added in admin role, since config is empty

24/06/17 07:34:20 INFO HiveMetaStore: 0: get_database: default

24/06/17 07:34:20 INFO audit: ugi=anonymous ip=unknown-ip-addr cmd=get_database: default

24/06/17 07:34:20 INFO HiveMetaStore: 0: get_database: global_temp

24/06/17 07:34:20 INFO audit: ugi=anonymous ip=unknown-ip-addr cmd=get_database: global_temp

24/06/17 07:34:20 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

24/06/17 07:34:20 INFO HiveMetaStore: 0: get_database: default

24/06/17 07:34:20 INFO audit: ugi=anonymous ip=unknown-ip-addr cmd=get_database: default

2024-06-17 07:34:21.585 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.session.KyuubiSessionImpl: [anonymous:10.38.199.201] SessionHandle [50850fdf-6a15-4c47-bc5f-d8816d255ef8] - Connected to engine [10.42.46.241:42178]/[spark-a13ae55c985c44168bd3fa6476c1ac1f] with SessionHandle [50850fdf-6a15-4c47-bc5f-d8816d255ef8]]

2024-06-17 07:34:21.588 INFO Curator-Framework-0 org.apache.kyuubi.shaded.curator.framework.imps.CuratorFrameworkImpl: backgroundOperationsLoop exiting

2024-06-17 07:34:21.607 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.shaded.zookeeper.ZooKeeper: Session: 0x101113150210001 closed

2024-06-17 07:34:21.607 INFO KyuubiSessionManager-exec-pool: Thread-54-EventThread org.apache.kyuubi.shaded.zookeeper.ClientCnxn: EventThread shut down for session: 0x101113150210001

2024-06-17 07:34:21.613 INFO KyuubiSessionManager-exec-pool: Thread-54 org.apache.kyuubi.operation.LaunchEngine: Processing anonymous's query[c612b8d0-8bc7-4efa-acc8-e6c477fbda20]: RUNNING_STATE -> FINISHED_STATE, time taken: 35.942 seconds

24/06/17 07:34:21 INFO SparkSQLSessionManager: anonymous's SparkSessionImpl with SessionHandle [50850fdf-6a15-4c47-bc5f-d8816d255ef8] is opened, current opening sessions 1

Connected to: Spark SQL (version 3.5.0)

Driver: Kyuubi Project Hive JDBC Client (version 1.9.0)

Beeline version 1.9.0 by Apache Kyuubi

0: jdbc:hive2://10.38.199.201:30009>

SQL execution log

0: jdbc:hive2://10.38.199.201:30009> select * from p530_cimarronbp.attr_vals limit 10;

2024-06-17 06:31:19.099 INFO KyuubiSessionManager-exec-pool: Thread-63 org.apache.kyuubi.operation.ExecuteStatement: Processing anonymous's query[65d78d0c-0e89-4f2f-a41c-7a584520bc81]: PENDING_STATE -> RUNNING_STATE, statement:

select * from p530_cimarronbp.attr_vals limit 10

24/06/17 06:31:19 INFO ExecuteStatement: Processing anonymous's query[65d78d0c-0e89-4f2f-a41c-7a584520bc81]: PENDING_STATE -> RUNNING_STATE, statement:

select * from p530_cimarronbp.attr_vals limit 10

24/06/17 06:31:19 INFO ExecuteStatement:

Spark application name: kyuubi_USER_SPARK_SQL_anonymous_default_6474780a-72b2-439a-82f0-79131d583346

application ID: spark-6b4ff4ba67df455d94af062c51825801

application web UI: http://10.42.235.246:45076

master: k8s://https://10.38.199.201:443/k8s/clusters/c-m-l7gflsx7

deploy mode: client

version: 3.5.0

Start time: 2024-06-17T06:30:04.865

User: anonymous

24/06/17 06:31:19 INFO ExecuteStatement: Execute in full collect mode

24/06/17 06:31:19 INFO V2ScanRelationPushDown:

Output: serial_num#96, trans_seq#97, attr_name#98, pre_attr_value#99, post_attr_value#100, in_run_file#101, event_date#102, family#103, operation#104

24/06/17 06:31:19 INFO SnapshotScan: Scanning table spark_catalog.p530_cimarronbp.attr_vals snapshot 4278818967903043345 created at 2024-06-15T05:35:09.281+00:00 with filter true

24/06/17 06:31:19 INFO BaseDistributedDataScan: Planning file tasks locally for table spark_catalog.p530_cimarronbp.attr_vals

24/06/17 06:31:23 INFO LoggingMetricsReporter: Received metrics report: ScanReport{tableName=spark_catalog.p530_cimarronbp.attr_vals, snapshotId=4278818967903043345, filter=true, schemaId=0, projectedFieldIds=[1, 2, 3, 4, 5, 6, 7, 8, 9], projectedFieldNames=[serial_num, trans_seq, attr_name, pre_attr_value, post_attr_value, in_run_file, event_date, family, operation], scanMetrics=ScanMetricsResult{totalPlanningDuration=TimerResult{timeUnit=NANOSECONDS, totalDuration=PT4.375705985S, count=1}, resultDataFiles=CounterResult{unit=COUNT, value=53367}, resultDeleteFiles=CounterResult{unit=COUNT, value=0}, totalDataManifests=CounterResult{unit=COUNT, value=97}, totalDeleteManifests=CounterResult{unit=COUNT, value=0}, scannedDataManifests=CounterResult{unit=COUNT, value=97}, skippedDataManifests=CounterResult{unit=COUNT, value=0}, totalFileSizeInBytes=CounterResult{unit=BYTES, value=2867530435}, totalDeleteFileSizeInBytes=CounterResult{unit=BYTES, value=0}, skippedDataFiles=CounterResult{unit=COUNT, value=0}, skippedDeleteFiles=CounterResult{unit=COUNT, value=0}, scannedDeleteManifests=CounterResult{unit=COUNT, value=0}, skippedDeleteManifests=CounterResult{unit=COUNT, value=0}, indexedDeleteFiles=CounterResult{unit=COUNT, value=0}, equalityDeleteFiles=CounterResult{unit=COUNT, value=0}, positionalDeleteFiles=CounterResult{unit=COUNT, value=0}}, metadata={engine-version=3.5.0, iceberg-version=Apache Iceberg 1.5.0 (commit 2519ab43d654927802cc02e19c917ce90e8e0265), app-id=spark-6b4ff4ba67df455d94af062c51825801, engine-name=spark}}

24/06/17 06:31:23 INFO SparkPartitioningAwareScan: Reporting UnknownPartitioning with 13356 partition(s) for table spark_catalog.p530_cimarronbp.attr_vals

24/06/17 06:31:23 INFO MemoryStore: Block broadcast_7 stored as values in memory (estimated size 32.0 KiB, free 434.4 MiB)

24/06/17 06:31:23 INFO MemoryStore: Block broadcast_7_piece0 stored as bytes in memory (estimated size 3.8 KiB, free 434.4 MiB)

24/06/17 06:31:23 INFO SparkContext: Created broadcast 7 from broadcast at SparkBatch.java:79

24/06/17 06:31:23 INFO MemoryStore: Block broadcast_8 stored as values in memory (estimated size 32.0 KiB, free 434.3 MiB)

24/06/17 06:31:23 INFO MemoryStore: Block broadcast_8_piece0 stored as bytes in memory (estimated size 3.8 KiB, free 434.3 MiB)

24/06/17 06:31:23 INFO SparkContext: Created broadcast 8 from broadcast at SparkBatch.java:79

24/06/17 06:31:23 INFO SparkContext: Starting job: collect at ExecuteStatement.scala:85

24/06/17 06:31:23 INFO SQLOperationListener: Query [65d78d0c-0e89-4f2f-a41c-7a584520bc81]: Job 3 started with 1 stages, 1 active jobs running

24/06/17 06:31:23 INFO SQLOperationListener: Query [65d78d0c-0e89-4f2f-a41c-7a584520bc81]: Stage 3.0 started with 1 tasks, 1 active stages running

2024-06-17 06:31:24.103 INFO KyuubiSessionManager-exec-pool: Thread-63 org.apache.kyuubi.operation.ExecuteStatement: Query[65d78d0c-0e89-4f2f-a41c-7a584520bc81] in RUNNING_STATE

24/06/17 06:31:24 INFO SQLOperationListener: Finished stage: Stage(3, 0); Name: 'collect at ExecuteStatement.scala:85'; Status: succeeded; numTasks: 1; Took: 343 msec

24/06/17 06:31:24 INFO DAGScheduler: Job 3 finished: collect at ExecuteStatement.scala:85, took 0.362979 s

24/06/17 06:31:24 INFO StatsReportListener: task runtime:(count: 1, mean: 326.000000, stdev: 0.000000, max: 326.000000, min: 326.000000)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 326.0 ms 326.0 ms 326.0 ms 326.0 ms 326.0 ms 326.0 ms 326.0 ms 326.0 ms 326.0 ms

24/06/17 06:31:24 INFO StatsReportListener: shuffle bytes written:(count: 1, mean: 0.000000, stdev: 0.000000, max: 0.000000, min: 0.000000)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B

24/06/17 06:31:24 INFO StatsReportListener: fetch wait time:(count: 1, mean: 0.000000, stdev: 0.000000, max: 0.000000, min: 0.000000)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 0.0 ms 0.0 ms 0.0 ms 0.0 ms 0.0 ms 0.0 ms 0.0 ms 0.0 ms 0.0 ms

24/06/17 06:31:24 INFO StatsReportListener: remote bytes read:(count: 1, mean: 0.000000, stdev: 0.000000, max: 0.000000, min: 0.000000)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B 0.0 B

24/06/17 06:31:24 INFO StatsReportListener: task result size:(count: 1, mean: 5057.000000, stdev: 0.000000, max: 5057.000000, min: 5057.000000)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 4.9 KiB 4.9 KiB 4.9 KiB 4.9 KiB 4.9 KiB 4.9 KiB 4.9 KiB 4.9 KiB 4.9 KiB

24/06/17 06:31:24 INFO StatsReportListener: executor (non-fetch) time pct: (count: 1, mean: 84.969325, stdev: 0.000000, max: 84.969325, min: 84.969325)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 85 % 85 % 85 % 85 % 85 % 85 % 85 % 85 % 85 %

24/06/17 06:31:24 INFO StatsReportListener: fetch wait time pct: (count: 1, mean: 0.000000, stdev: 0.000000, max: 0.000000, min: 0.000000)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 0 % 0 % 0 % 0 % 0 % 0 % 0 % 0 % 0 %

24/06/17 06:31:24 INFO StatsReportListener: other time pct: (count: 1, mean: 15.030675, stdev: 0.000000, max: 15.030675, min: 15.030675)

24/06/17 06:31:24 INFO StatsReportListener: 0% 5% 10% 25% 50% 75% 90% 95% 100%

24/06/17 06:31:24 INFO StatsReportListener: 15 % 15 % 15 % 15 % 15 % 15 % 15 % 15 % 15 %

24/06/17 06:31:24 INFO SQLOperationListener: Query [65d78d0c-0e89-4f2f-a41c-7a584520bc81]: Job 3 succeeded, 0 active jobs running

24/06/17 06:31:24 INFO ExecuteStatement: Processing anonymous's query[65d78d0c-0e89-4f2f-a41c-7a584520bc81]: RUNNING_STATE -> FINISHED_STATE, time taken: 5.241 seconds

24/06/17 06:31:24 INFO ExecuteStatement: statementId=65d78d0c-0e89-4f2f-a41c-7a584520bc81, operationRunTime=0.3 s, operationCpuTime=77 ms

2024-06-17 06:31:24.344 INFO KyuubiSessionManager-exec-pool: Thread-63 org.apache.kyuubi.operation.ExecuteStatement: Query[65d78d0c-0e89-4f2f-a41c-7a584520bc81] in FINISHED_STATE

2024-06-17 06:31:24.344 INFO KyuubiSessionManager-exec-pool: Thread-63 org.apache.kyuubi.operation.ExecuteStatement: Processing anonymous's query[65d78d0c-0e89-4f2f-a41c-7a584520bc81]: RUNNING_STATE -> FINISHED_STATE, time taken: 5.244 seconds

| serial_num | trans_seq | attr_name | pre_attr_value | post_attr_value | in_run_file | event_date | family | operation |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| WRQ2NM0H | 19 | STATE_NAME | END | END | NULL | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | TEST_DATE | 04/27/2024 09:55:40 | 04/27/2024 09:55:40 | NULL | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | FILE_TYPE | NTR | NTR | NULL | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | CUM_TEST_TIME | 162301.49 | 212097.50 | 1 | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | PCBA_COMP_ID5 | 70437 | 70437 | 1 | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | CCVTEST | NONE | NONE | 1 | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | PCBA_COMP_ID3 | 78810 | 78810 | 1 | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | PCBA_COMP_ID2 | 57706 | 57706 | 1 | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | PCBA_COMP_ID1 | 15229 | 15229 | 1 | 20240427 | 2TJ | CAL2 |
| WRQ2NM0H | 19 | FTFC_APC_DATE | "0001-01-0100:00:00" | "0001-01-0100:00:00" | 1 | 20240427 | 2TJ | CAL2 |

10 rows selected (5.287 seconds)
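
The log also shows roughly where those 5 seconds went. The Iceberg `ScanReport` gives `totalPlanningDuration=PT4.375705985S`, the `DAGScheduler` line reports that the job took 0.362979 s, and the engine reports a total of 5.241 seconds for the statement. The snippet below is only a rough reading of those three figures, not a profiler: the helper `share_pct` is a name made up for this example, and the numbers are copied by hand from the log.

```shell
#!/bin/bash
# share_pct PART TOTAL: print PART as a percentage of TOTAL, one decimal place.
# (Hypothetical helper for this example; it is not part of Kyuubi or Spark.)
share_pct() {
  awk -v part="$1" -v total="$2" 'BEGIN { printf "%.1f\n", 100 * part / total }'
}

# Values copied from the log above, in seconds.
planning=4.375705985   # ScanReport totalPlanningDuration
job=0.362979           # DAGScheduler: "Job 3 finished ... took 0.362979 s"
total=5.241            # ExecuteStatement: "time taken: 5.241 seconds"

echo "scan planning: $(share_pct "$planning" "$total")% of statement time"
echo "spark job:     $(share_pct "$job" "$total")% of statement time"
```

With these values, scan planning accounts for about 83% of the statement time and the Spark job itself for about 7%. In other words, for this `limit 10` query most of the wait is spent planning the scan over the 53367 data files listed in the report, not reading rows.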

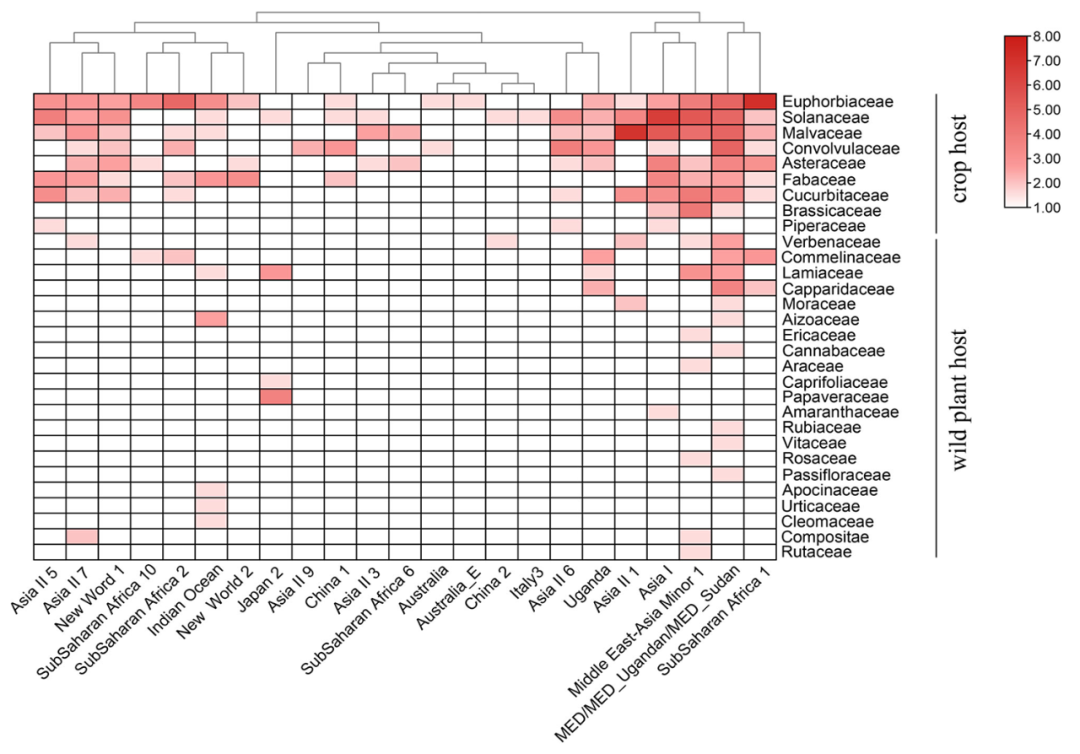

Two executors are created in k8s.
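
The same check can be done from the command line. This is a minimal sketch, assuming `kubectl` is already configured for the cluster and that the executors run in the `default` namespace (the namespace is an assumption, adjust it to your setup). Spark on Kubernetes labels its executor pods with `spark-role=executor` and `spark-app-selector=<application ID>`; the application ID is the one printed in the engine log above. The function name `list_executors` is made up for this example.

```shell
#!/bin/bash
# list_executors APP_ID [NAMESPACE]
# List the executor pods that belong to one Spark application.
# NAMESPACE defaults to "default", which is an assumption for this sketch.
list_executors() {
  local app_id="$1"
  local ns="${2:-default}"
  kubectl get pods -n "$ns" \
    -l "spark-role=executor,spark-app-selector=${app_id}" \
    -o wide
}
```

For the query shown above, the call would be `list_executors spark-6b4ff4ba67df455d94af062c51825801`. Since the engine log reports `executor: [cpu: 2, mem: 1g, maxNum: 2]`, at most two executor pods should be listed.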