参考:

https://github.com/chunhuizhang/pytorch_distribute_tutorials/blob/main/tutorials/amp_autocast_mixed_precision_training.ipynb

from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda" # the device to load the model onto

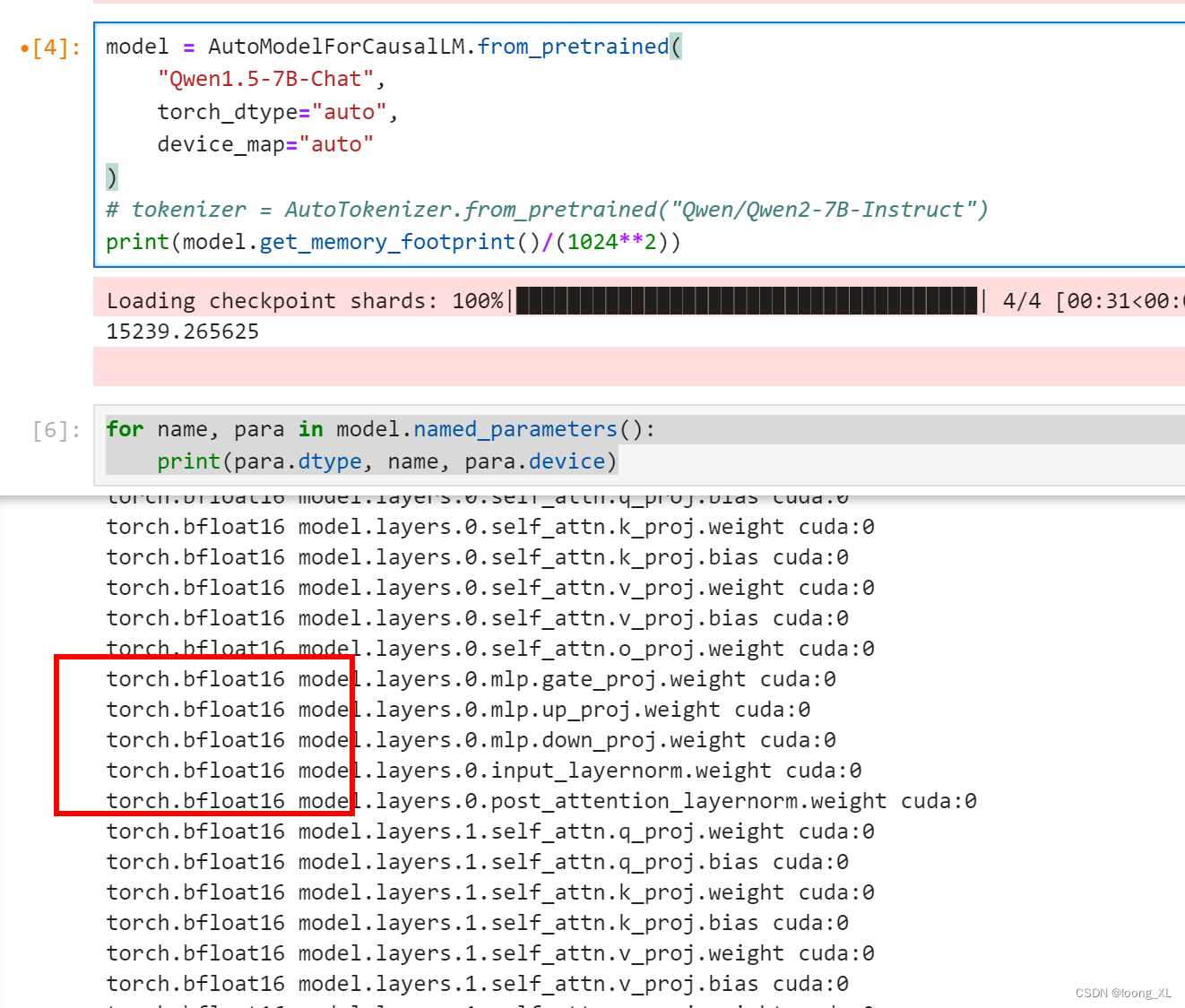

model = AutoModelForCausalLM.from_pretrained(

"Qwen1.5-7B-Chat",

torch_dtype="auto",

device_map="auto"

)

# tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2-7B-Instruct")

print(model.get_memory_footprint()/(1024**2))

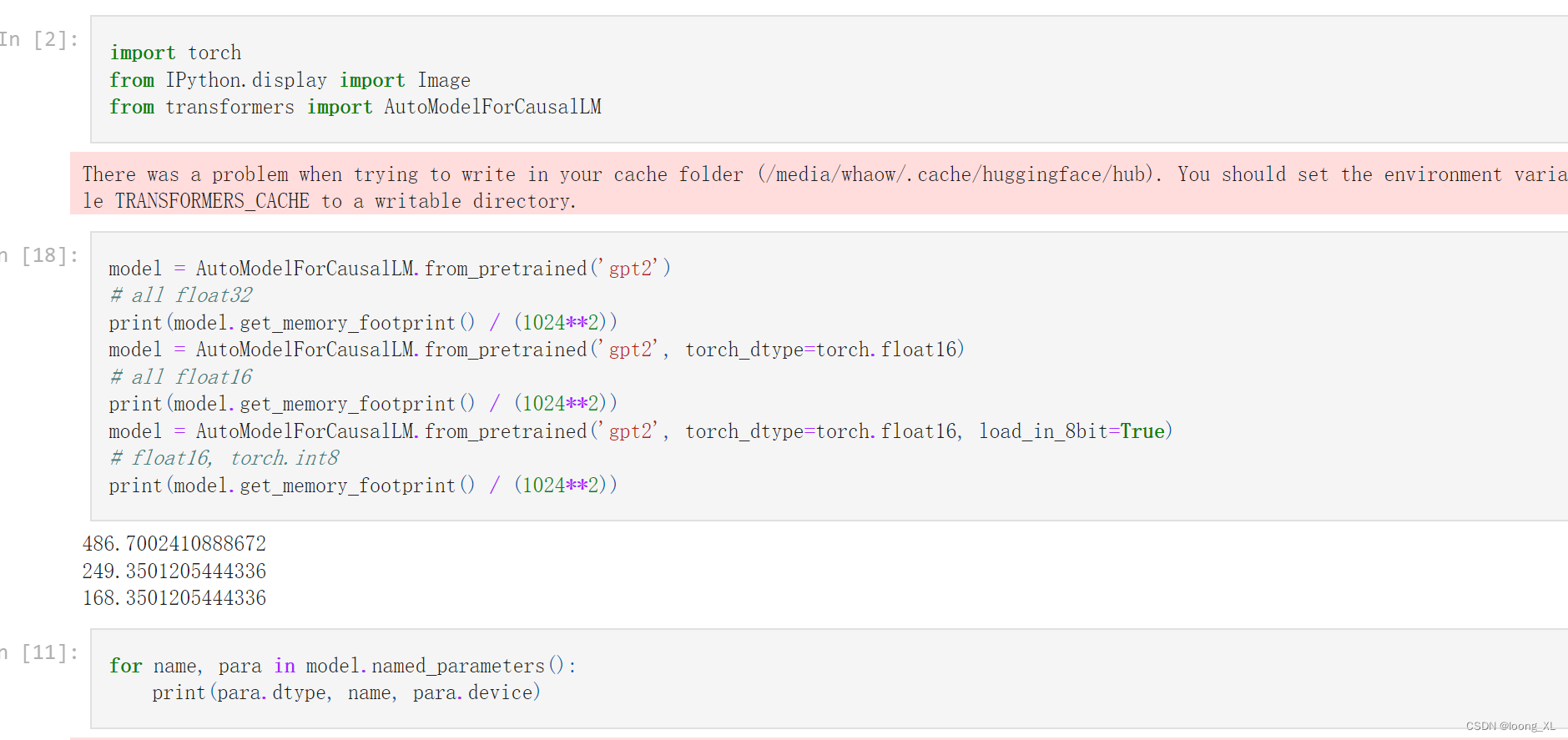

for name, para in model.named_parameters():

print(para.dtype, name, para.device)

默认bfloat16

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

device = "cuda" # the device to load the model onto

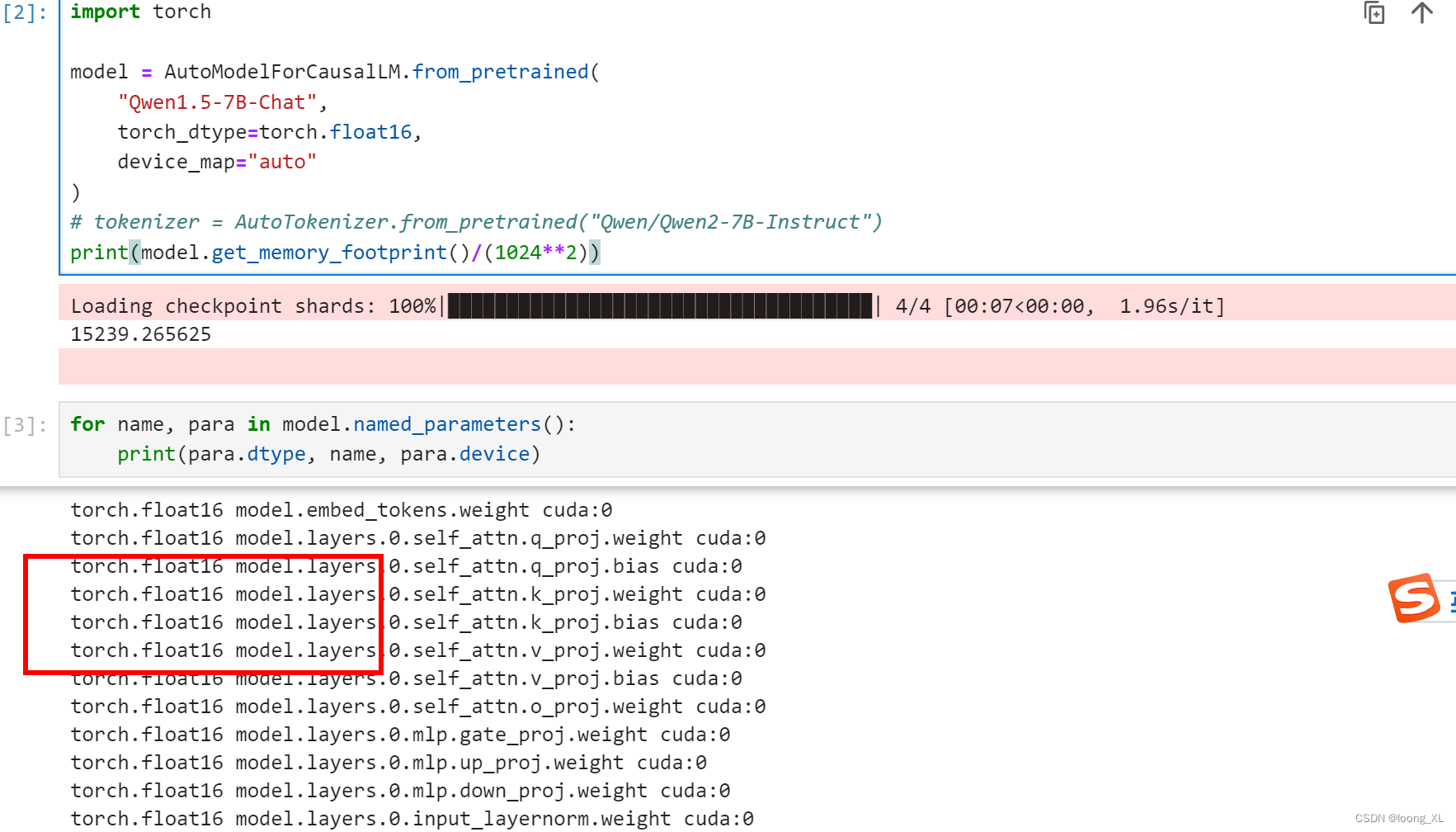

model = AutoModelForCausalLM.from_pretrained(

"Qwen1.5-7B-Chat",

torch_dtype=torch.float16,,

device_map="auto"

)

# tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2-7B-Instruct")

print(model.get_memory_footprint()/(1024**2))

for name, para in model.named_parameters():

print(para.dtype, name, para.device)

float16与bfloat16加载空间需要差不多,差不多GPU需要15G多

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

device = "cuda" # the device to load the model onto

model = AutoModelForCausalLM.from_pretrained(

"Qwen1.5-7B-Chat",

torch_dtype=torch.float32,,

device_map="auto"

)

# tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2-7B-Instruct")

print(model.get_memory_footprint()/(1024**2))

for name, para in model.named_parameters():

print(para.dtype, name, para.device)

GPU需要19G多,精度会高些32bit,空间大些