I. Data Annotation

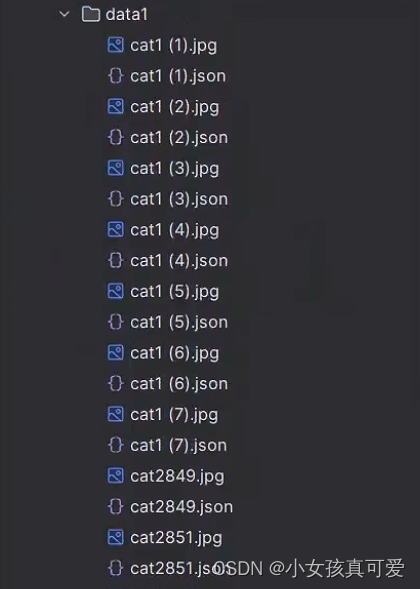

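(1) Use labelme to draw the segmentation annotations. When annotation is finished, labelme saves one JSON file per image. The code in this post reads that file's `shapes` list, where each shape carries a `label` and a list of polygon `points`.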
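As a rough illustration of that JSON layout, the sketch below parses a trimmed-down annotation. The file name, labels, coordinates and version string are made up for the example, and the exact set of top-level fields can vary between labelme versions. Only `shapes`, `label` and `points` are relied on by the code in this post.

```python
import json

# Hypothetical, shortened labelme annotation (values invented for illustration).
sample = """
{
  "version": "5.0.1",
  "flags": {},
  "shapes": [
    {
      "label": "person",
      "points": [[10.0, 20.0], [60.0, 20.0], [60.0, 90.0], [10.0, 90.0]],
      "group_id": null,
      "shape_type": "polygon",
      "flags": {}
    },
    {
      "label": "car",
      "points": [[100.5, 40.0], [180.0, 42.5], [150.0, 95.0]],
      "group_id": null,
      "shape_type": "polygon",
      "flags": {}
    }
  ],
  "imagePath": "0001.jpg",
  "imageData": null,
  "imageHeight": 256,
  "imageWidth": 512
}
"""

data = json.loads(sample)

# Each shape is one annotated region: a class name plus its polygon vertices (x, y).
summary = [(shape["label"], len(shape["points"])) for shape in data["shapes"]]
print(summary)
```

Note that `points` are stored as floating-point `(x, y)` pairs, which is why the loading code later converts them to `np.int32` before filling the polygon.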

II. Getting the Data

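Read the JSON file to get the label information, and read the JPG file to get the image. Semantic segmentation needs only two things: the original image and the mask generated from the annotation. The data-loading code therefore only has to return the image and its label (the mask).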
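Before anything is read, each annotation file has to be matched with its image. A minimal sketch of that pairing step, assuming the JSON and JPG share a file stem and sit in the same folder (the helper name `pair_annotations` and the demo file names are hypothetical):

```python
import os
import os.path as osp
import tempfile


def pair_annotations(folder):
    """Return [image_path, json_path] pairs for every JSON whose JPG exists."""
    pairs = []
    for name in sorted(os.listdir(folder)):
        stem, ext = osp.splitext(name)
        if ext.lower() != ".json":
            continue
        img_path = osp.join(folder, stem + ".jpg")
        # Skip annotations whose image is missing instead of failing later.
        if osp.isfile(img_path):
            pairs.append([img_path, osp.join(folder, name)])
    return pairs


# Demo on a throwaway folder with made-up file names.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("a.jpg", "a.json", "b.json", "notes.txt"):
        open(osp.join(tmp, name), "w").close()
    pairs = pair_annotations(tmp)
    found = [(osp.basename(img), osp.basename(ann)) for img, ann in pairs]

print(found)
```

Using `osp.splitext` rather than splitting on the first dot keeps file names such as `scene.v2.json` paired with `scene.v2.jpg`.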

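    def get_label(self, img_path, labelme_json_path, img_size):
        # h, w are the height and width of the original image
        img = ut.p2i(img_path)
        h, w = img.shape[:2]
        # One single-channel layer per class: (num_classes, h, w, 1)
        mask_array = np.zeros([self.num_classes, h, w, 1], dtype=np.uint8)
        with open(labelme_json_path, "r") as f:
            json_data = json.load(f)
        shapes = json_data["shapes"]
        for shape in shapes:
            category = shape["label"]
            # Look up the index of this class
            category_idx = self.category_types.index(category)
            points = shape["points"]
            points_array = np.array(points, dtype=np.int32)
            temp = mask_array[category_idx, ...]  # the layer of mask_array for this class
            # Join the annotated points into a region and fill the region with 255
            mask_array[category_idx, ...] = cv2.fillPoly(temp, [points_array], 255)
        # Each layer can be written out here to inspect the mask image
        # Swap dimensions: (num_classes, h, w, 1) -> (h, w, num_classes)
        mask_array = np.transpose(mask_array, (1, 2, 0, 3)).squeeze(axis=-1)
        # Convert the mask to a tensor
        mask_tensor = ut.i2t(mask_array, False)
        return mask_tensor

If an image contains only one class, the mask layers of all the other classes are entirely black.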
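To make the "one layer per class" idea concrete without OpenCV, here is a small dependency-free sketch. It swaps `cv2.fillPoly` for a plain even-odd ray-casting test on pixel centres, so boundary pixels are treated differently from OpenCV's rasterizer. The function names and the tiny 4x6 example are assumptions for illustration only.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd ray casting: is (x, y) inside the polygon given as [(px, py), ...]?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def build_masks(shapes, category_types, h, w):
    """Return masks[class][row][col]: 255 inside that class's polygons, else 0."""
    masks = [[[0] * w for _ in range(h)] for _ in category_types]
    for shape in shapes:
        idx = category_types.index(shape["label"])
        for row in range(h):
            for col in range(w):
                # Test the centre of each pixel.
                if point_in_polygon(col + 0.5, row + 0.5, shape["points"]):
                    masks[idx][row][col] = 255
    return masks


category_types = ["background", "person", "car", "road"]
shapes = [{"label": "person", "points": [[1, 1], [4, 1], [4, 3], [1, 3]]}]
masks = build_masks(shapes, category_types, h=4, w=6)

person_pixels = sum(v == 255 for row in masks[1] for v in row)
other_pixels = sum(v for c in (0, 2, 3) for row in masks[c] for v in row)
print(person_pixels, other_pixels)
```

Only the `person` layer receives any non-zero pixels. The `background`, `car` and `road` layers stay at zero, which is the all-black mask described above.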

The complete data-loading code, dataloader_labelme.py, is as follows:

    import base64
    import json
    import os
    import os.path as osp
    import random

    import cv2
    import numpy as np
    import torch
    from torch.utils.data import Dataset
    from torchvision.transforms.functional import adjust_brightness
    from torchvision.transforms.functional import rotate as tensor_rotate
    from torchvision.transforms.functional import vflip, hflip

    from utils_func import seg_utils as ut

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


    class train_data(Dataset):
        def __init__(self, image_folder, img_size, category_types):
            self.image_folder = image_folder
            self.img_size = img_size
            self.category_types = category_types
            self.num_classes = len(category_types)
            self.data_list = self.generate_mask()
            # print(self.data_list)
            # Load and preprocess every sample up front and keep it in memory
            self.img_and_mask = []
            for img_path, json_path in self.data_list:
                img_tensor, mask_tensor = self.get_image_and_label(img_path, json_path, self.img_size)
                self.img_and_mask.append([img_tensor, mask_tensor])

        def __len__(self):
            return len(self.img_and_mask)

        def __getitem__(self, index):
            img_tensor = self.img_and_mask[index][0].to(device)
            mask_tensor = self.img_and_mask[index][1].to(device)
            # If data augmentation is used, apply it here
            return img_tensor, mask_tensor

        def data_augment(self, img_tensor, mask_tensor, aug_flag):
            # aug_flag[0] selects the augmentation, aug_flag[1] holds its parameters
            if aug_flag[0] == 0:
                # Rotation: the same random angle for image and mask
                angle = random.choice(aug_flag[1])
                img_tensor = tensor_rotate(img_tensor, int(angle))
                mask_tensor = tensor_rotate(mask_tensor, int(angle))
            elif aug_flag[0] == 1:
                # Brightness: changes the image only, the mask stays as it is
                factor = aug_flag[1]
                img_tensor = adjust_brightness(img_tensor, factor)
            elif aug_flag[0] == 2:
                # Flip: 1 means vertical, anything else means horizontal
                flip_type = random.choice(aug_flag[1])
                if flip_type == 1:
                    img_tensor = vflip(img_tensor)
                    mask_tensor = vflip(mask_tensor)
                else:
                    img_tensor = hflip(img_tensor)
                    mask_tensor = hflip(mask_tensor)
            return img_tensor, mask_tensor

        def generate_mask(self):
            # Collect [img_path, json_path] pairs; the image shares the JSON file's stem
            data_lists = []
            for file_name in os.listdir(self.image_folder):
                if file_name.endswith(".json"):
                    json_path = os.path.join(self.image_folder, file_name)
                    img_path = osp.join(self.image_folder, "%s.jpg" % osp.splitext(file_name)[0])
                    data_lists.append([img_path, json_path])
            return data_lists

        def get_image_and_label(self, img_path, labelme_json_path, img_size):
            # print(img_path)
            img = ut.p2i(img_path)
            h, w = img.shape[:2]
            # Note: cv2.resize takes its target size as (width, height)
            img = cv2.resize(img, (img_size[0], img_size[1]))
            # cv2.imwrite(r"C:\Users\HJ\Desktop\test\%s.jpg"%img_path.split(".")[0][-7:], img)
            img_tensor = ut.i2t(img)

            # Build the mask at the original resolution, one layer per class
            mask_array = np.zeros([self.num_classes, h, w, 1], dtype=np.uint8)
            with open(labelme_json_path, "r") as f:
                json_data = json.load(f)
            shapes = json_data["shapes"]
            for shape in shapes:
                category = shape["label"]
                category_idx = self.category_types.index(category)
                points = shape["points"]
                points_array = np.array(points, dtype=np.int32)
                temp = mask_array[category_idx, ...]
                mask_array[category_idx, ...] = cv2.fillPoly(temp, [points_array], 255)
            # (num_classes, h, w, 1) -> (h, w, num_classes)
            mask_array = np.transpose(mask_array, (1, 2, 0, 3)).squeeze(axis=-1)
            # Nearest-neighbour interpolation keeps mask values at exactly 0 or 255
            # (the default bilinear mode would blur the region edges)
            mask_array = cv2.resize(
                mask_array, (img_size[0], img_size[1]), interpolation=cv2.INTER_NEAREST
            ).astype(np.uint8)
            mask_tensor = ut.i2t(mask_array, False)
            return img_tensor, mask_tensor


    if __name__ == '__main__':
        img_folder = r"D:\finish_code\SegmentationProject\datasets\data2"
        img_size1 = [256, 512]
        category_types = ["background", "person", "car", "road"]
        t = train_data(img_folder, img_size1, category_types)
        t[1]
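The `data_augment` method follows one rule worth spelling out: geometric transforms (rotation, flips) must be applied identically to the image and its mask, while brightness changes touch only the image. The sketch below shows that rule on plain nested lists. The helpers `hflip_rows`, `vflip_rows`, `scale_brightness` and `augment_pair` are hypothetical stand-ins for the torchvision functions used in the real code, not part of it.

```python
def hflip_rows(grid):
    """Mirror a 2-D list left to right (horizontal flip)."""
    return [list(reversed(row)) for row in grid]


def vflip_rows(grid):
    """Mirror a 2-D list top to bottom (vertical flip)."""
    return [list(row) for row in reversed(grid)]


def scale_brightness(grid, factor):
    """Multiply every pixel by factor and clamp the result to 0..255."""
    return [[min(255, max(0, round(v * factor))) for v in row] for row in grid]


def augment_pair(image, mask, kind, factor=1.0):
    """Apply one augmentation, keeping image and mask spatially aligned."""
    if kind == "hflip":
        return hflip_rows(image), hflip_rows(mask)
    if kind == "vflip":
        return vflip_rows(image), vflip_rows(mask)
    if kind == "brightness":
        # Photometric change: the labels do not move, so the mask is untouched.
        return scale_brightness(image, factor), mask
    raise ValueError("unknown augmentation: %s" % kind)


image = [[10, 20, 30],
         [40, 50, 60]]
mask = [[0, 0, 255],
        [0, 255, 255]]

flipped_img, flipped_mask = augment_pair(image, mask, "hflip")
bright_img, bright_mask = augment_pair(image, mask, "brightness", factor=1.5)
print(flipped_img, flipped_mask)
print(bright_img, bright_mask)
```

If only the image were flipped, every labelled region would end up on the wrong side of the picture, which is why the real method passes both tensors through `hflip`, `vflip` and `tensor_rotate`.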