PART 1 - 基础知识

一、文件读取

a. 二进制文件

mmap https://stackoverflow.com/questions/44553907/mmap-sigbus-error-and-initializing-the-file

fread fwrite

//read

FILE* fi;

if(fi = fopen("input.bin", "rb")){

fread(&p, sizeof(int), 1, fi);

fread(&n, sizeof(int), 1, fi);

int * a = (int *)malloc((n+1)*sizeof(int));

fread(a ,sizeof(int), n, fi);

fclose(fi);

free(a);

}

// write

FILE* fo;

if (fo = fopen("output.bin", "wb")) {

fwrite(&x, 1, sizeof(int), fo);

fwrite(a,1,sizeof(int)*n,fo);

fclose(fo);

}

b. 文本文件

// read

FILE *f1,*f2;

f1 = fopen("input.txt","r");

fscanf(f1,"%d",&xi);

float *input = (float *)malloc((xi+1)*sizeof(float));

for(int i=0;i<xi;i++){

fscanf(f1,"%f",&input[i]);

}

fclose(f1);

free(input);

//writ

FILE *out;

out = fopen("output.dat","w");

fprintf(out,"%.12g\n",answer);

fclose(out);

c. 命令行读取参数

int N = atoi(argv[1]); // 从命令行读取第一个参数

unsigned int N2 = strtoul(argv[2], NULL, 10); // unsigned int 第二个参数

二、sbatch使用

a. 脚本编写

- CPU编译运行(mpi程序 cpi.c 为例)

#!/bin/bash

module load mpi/2021.8.0

mpicc cpi.c -lm -o cpi

# compile.sh

#!/bin/bash

module load mpi/2021.8.0

export I_MPI_PIN_RESPECT_CPUSET=0;

# mpirun ./cpi

I_MPI_OFI_PROVIDER=tcp mpirun -genv I_MPI_FABRICS=shm:ofi -iface eno2 ./cpi

# job.sh

#!/bin/bash

#run.sh

sbatch --cpus-per-task=1 --nodes=5 --ntasks-per-node=2 --partition=compute --qos=normal --output=output.txt ./job.sh

# run.sh

编译:./compile.sh

提交作业:./run.sh

- GPU编译运行(cuda为例)

#!/bin/bash

sbatch -p GPU --gres=gpu:1 --gpus=1 -t 00:00:30 -o output.txt -e error.txt compile-job.sh

# compile.sh

#!/bin/bash

sbatch -p GPU --gres=gpu:1 --gpus=1 -t 00:20:00 -o output.txt -e error.txt run-job.sh

# run.sh

compile-job.sh、run-job.sh 需实现

编译:./compile.sh

提交作业:./run.sh

b. 作业管理

- 查看作业状态:

squeue

- 取消作业:

scancel XXXX(作业编号)

ps.

srun 直接执行可执行程序

sbatch 提交批处理脚本进行任务计算

三、数据生成器

#!/usr/bin/php

<?php

if ($argc != 4) {

die("USAGE: {$argv[0]} <OUTPUT_PATH> <P> <N_RANGE>\n");

}

function i32_to_bytes(int $n): array

{

$rslt = [];

for ($i = 0; $i < 4; ++$i) {

$rslt[] = $n & 255;

$n >>= 8;

}

return $rslt;

}

function bytes_to_string(array $n): string

{

$a = array_map(fn (int $num) => chr($num), $n);

return join('', $a);

}

function i32_to_string(int $n): string

{

return bytes_to_string(i32_to_bytes($n));

}

$f = fopen($argv[1], "w");

$p = $argv[2];

$n = (1 << ((int) trim($argv[3])));

$n += $n + rand(0, $n / 2);

$part = 1;

$n = floor($n / $part) * $part;

echo "p={$p}, n={$n}" . PHP_EOL;

fwrite($f, i32_to_string($p));

fwrite($f, i32_to_string($n));

for ($i = 0; $i < ($n / $part); ++$i) {

$m = i32_to_string(rand(0, 1 << 30));

fwrite($f, $m);

}

fclose($f);

四、Attention

高精度题目注意:

不能直接 double _N = 1.0 /N; 因为1.0默认是float会损失精度

double dif = 1.0;

double _N = dif/N;

//or

double _N = (double)1.0/N;

运算次序的改变可能会导致精度损失

大数据数组开辟用 malloc

变量初始化记得 赋值

在#define里开omp: https://www.thinbug.com/q/56717411

不能在#pragma内使用#define,但是可以在宏定义内将pragma运算符用作_pragma(“omp parallel for”)

#define resize2d_bilinear_kernel(typename) \

_Pragma("omp parallel for schedule(dynamic)") \

for(){ .... }

PATR 2 - 优化小结

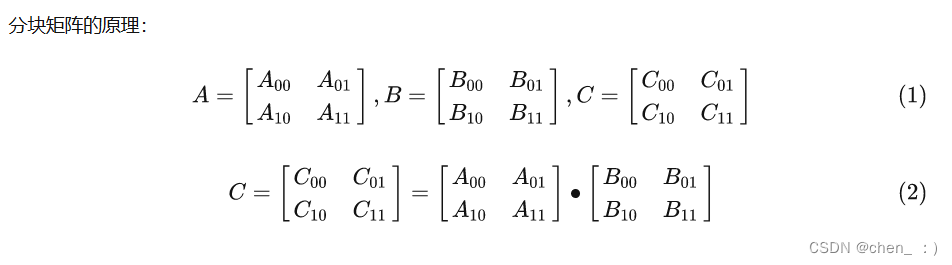

一、矩阵乘法

a. 矩阵分块+访存优化:

#define BLOCK_SIZE 64

void matMultCPU_Block(const float* a, const float* b, float* c, int n)

{

#pragma omp parallel for schedule(dynamic)

for (int ii = 0; ii < n; ii +=BLOCK_SIZE)

for (int jj = 0; jj < n; jj += BLOCK_SIZE)

for (int kk = 0; kk < n; kk += BLOCK_SIZE)

for (int i = ii; i < std::min(ii + BLOCK_SIZE,n); i++)

for (int k = kk; k < std::min(kk+ BLOCK_SIZE,n); k++)

{

float s = a[i * n + k];

for (int j = jj; j < std::min(jj + BLOCK_SIZE, n); j++)

c[i * n + j] += s * b[k * n + j];

}

}

// from https://zhuanlan.zhihu.com/p/371893547

b. 向量化

void matMult_avx(double *A, double *B, double *C, size_t n)

{

for (size_t i = 0; i < n; i += 4) {

for (size_t j = 0; j < n; j++) {

__m256d c0 = _mm256_load_pd(C+i+j*n); /* c0 = C[i][j] */

for (size_t k = 0; k < n; k++) {

c0 = _mm256_add_pd(c0,

_mm256_mul_pd(_mm256_load_pd(A+i+k*n),

_mm256_broadcast_sd(B+k+j*n)));

}

_mm256_store_pd(C+i+j*n, c0); /* C[i][j] = c0 */;

}

}

}

// from https://zhuanlan.zhihu.com/p/76347262

c. Cache Blocking+AVX

#define UNROLL 4

#define BLOCKSIZE 32

static inline void do_block(int n, int si, int sj, int sk,

double *A, double *B, double *C)

{

for (int i = si; i < si + BLOCKSIZE; i += UNROLL*4) {

for (int j = sj; j < sj + BLOCKSIZE; j++) {

__m256d c[UNROLL];

for (int x = 0; x < UNROLL; x++) {

c[x] = _mm256_load_pd(C+i+x*4+j*n);

}

for (int k = sk; k < sk + BLOCKSIZE; k++) {

__m256d b = _mm256_broadcast_sd(B+k+j*n);

for (int x = 0; x < UNROLL; x++) {

c[x] = _mm256_add_pd(c[x],

_mm256_mul_pd(

_mm256_load_pd(A+n*k+x*4+i), b));

}

}

for (int x = 0; x < UNROLL; x++) {

_mm256_store_pd(C+i+x*4+j*n, c[x]);

}

}

}

}

void dgemm_avx_unroll_blk_omp(size_t n, double *A, double *B, double *C)

{

#pragma omp parallel for

for (int sj = 0; sj < n; sj += BLOCKSIZE) {

for (int si = 0; si < n; si += BLOCKSIZE) {

for (int sk = 0; sk < n; sk += BLOCKSIZE) {

do_block(n, si, sj, sk, A, B, C);

}

}

}

}

//from https://zhuanlan.zhihu.com/p/76347262

d. 算法优化

- Coppersmith Winograd algorithm

Introduction: Coppersmith–Winograd algorithm

时间复杂度: O(n2.375477)

算法核心:

// 算法核心

* matA M*K

* matB K*N

* matC M*N

* matC = matA * matB

* S1 = A21 + A22 T1 = B12 - B11

* S2 = S1 - A11 T2 = B22 - T1

* S3 = A11 - A21 T3 = B22 - B12

* S4 = A12 - S2 T4 = T2 - B21

* M1 = A11 * B11 U1 = M1 + M2

* M2 = A12 * B21 U2 = M1 + M6

* M3 = S4 * B22 U3 = U2 + M7

* M4 = A22 * T4 U4 = U2 + M5

* M5 = S1 * T1 U5 = U4 + M3

* M6 = S2 * T2 U6 = U3 - U4

* M7 = S3 * T3 U7 = U3 + M5

* C11 = U1

* C12 = U5

* C21 = U6

* C22 = U7

代码:https://github.com/YYYYYW/Matrix-Multiplication

二、并行排序

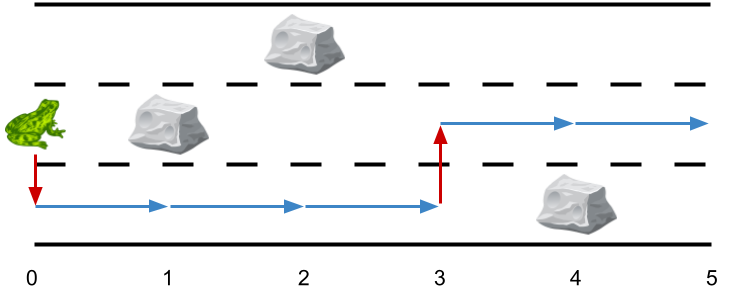

a. Radix Sort

- Diverting LSD radix sort :https://axelle.me/2022/04/19/diverting-lsd-sort/

思想1. 先基数排序,后桶排序

Through an example

let mut array = vec![97, 57, 17, 2, 87, 56, 33, 30, 40, 21, 27, 71];

// In binary it is: [01100001, 00111001, 00010001, 00000010, 01010111, 00111000,

// 00100001, 00011110, 00101000, 00010101, 00011011, 01000111]

Let’s choose a radix equals 2, et let’s sort only the two leftmost levels in a LSD fashion way. The first pass outputs:

assert_eq!(array, vec![2, 71, 17, 87, 30, 21, 27, 97, 33, 40, 57, 56]);

// [00000010, 01000111, 00010001, 01010111, 00011110, 00010101,

// |__________________||_______________________________________

// **00**** **01****

// 00011011, 01100001, 00100001, 00101000, 00111001, 00111000]

// _________||____________________________||__________________|

// **10**** **11****

And the second pass outputs:

assert_eq!(array, vec![2, 17, 30, 21, 27, 33, 40, 57, 56, 71, 87, 97]);

// [00000010, 00010001, 00011110, 00010101, 00011011, 00100001,

// |___________________________________________________________

// 00******

// 00101000, 00111001, 00111000, 01000111, 01010111, 01100001]

// _____________________________||____________________________|

// 01******

With this example, the second pass has only 2 buckets 00 and 01.

For the first bucket 00:

let mut array = [2, 17, 30, 21, 27, 33, 40, 57, 56]

// swap 1 [2, 17, 21, 30, 27, 33, 40, 57, 56]

// swap 2 [2, 17, 21, 27, 30, 33, 40, 57, 56]

// swpa 3 [2, 17, 21, 27, 30, 33, 40, 56, 57]

end.

-

Parallel Radix Sort(OpenMP):https://github.com/iwiwi/parallel-radix-sort

use parallel_radix_sort.h -

Voracious Radix Sort (Rust):https://github.com/lakwet/voracious_sort

cargo add voracious_radix_sort

b. QuickSort

- 并行快排(归并):

#include<omp.h>

data_t Partition(data_t* data, int start, int end) //閸掓帒鍨庨弫鐗堝祦

{

data_t temp = data[start]; //娴犮儳顑囨稉鈧稉顏勫帗缁辩姳璐熼崺鍝勫櫙

while (start < end) {

while (start < end && data[end] >= temp)end--; //閹垫儳鍩岀粭顑跨娑擃亝鐦崺鍝勫櫙鐏忓繒娈戦弫?

data[start] = data[end];

while (start < end && data[start] <= temp)start++; //閹垫儳鍩岀粭顑跨娑擃亝鐦崺鍝勫櫙婢堆呮畱閺?

data[end] = data[start];

}

data[start] = temp; //娴犮儱鐔€閸戝棔缍旀稉鍝勫瀻閻e瞼鍤?

return start;

}

void quickSort(data_t* data, int start, int end) //骞惰蹇帓

{

if (start < end) {

data_t pos = Partition(data, start, end);

#pragma omp parallel sections //璁剧疆骞惰鍖哄煙

{

#pragma omp section //璇ュ尯鍩熷鍓嶉儴鍒嗘暟鎹繘琛屾帓搴?

quickSort(data, start, pos - 1);

#pragma omp section //璇ュ尯鍩熷鍚庨儴鍒嗘暟鎹繘琛屾帓搴?

quickSort(data, pos + 1, end);

}

}

}

quickSort(a , 0, n-1); // main

ps. 用openmp并行快排效果不佳,受限于sections子句,用mpi并行效果不错

c. 标准库

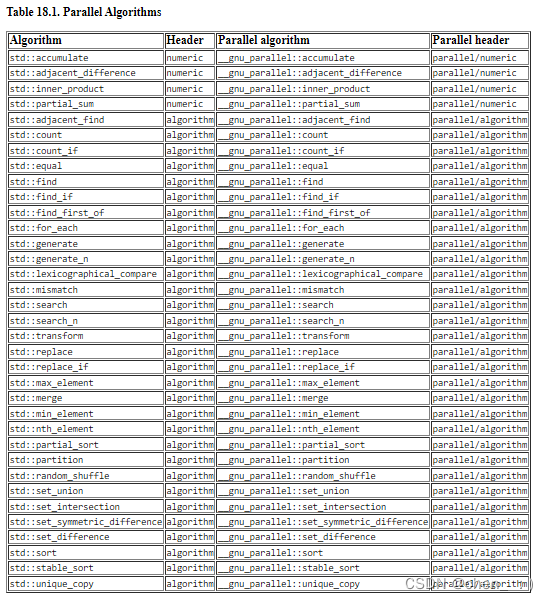

- GNU parallel stl

#include <vector>

#include <parallel/algorithm>

int main()

{

std::vector<int> v(100);

// ...

// Explicitly force a call to parallel sort.

__gnu_parallel::sort(v.begin(), v.end());

return 0;

}

https://gcc.gnu.org/onlinedocs/libstdc++/manual/parallel_mode_using.html

https://www.modernescpp.com/index.php/parallel-algorithms-of-the-stl-with-gcc

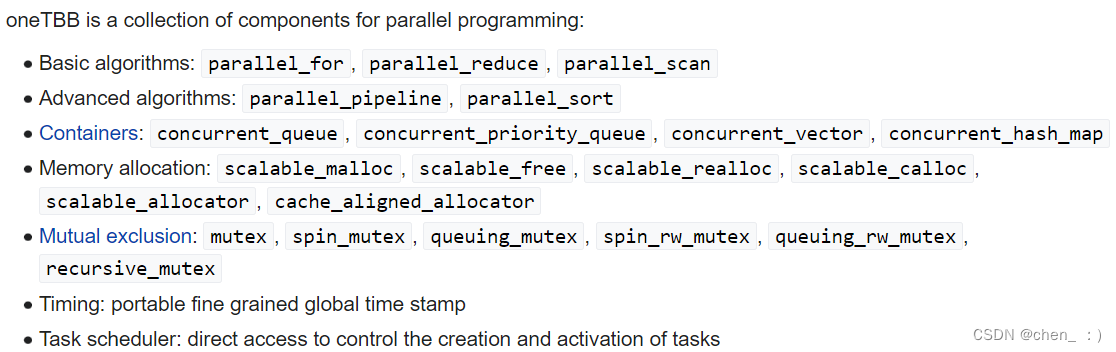

- Intel TBB

Simplified Development for Parallel Applications

https://github.com/oneapi-src/oneTBB

- C++17标准STL库

三、高精度求 π

a. 改进的幂级数法

精度高,n取20000(还可以更小)轻松求得15位有效数字

#include<stdio.h>

#include<mpi.h>

#include<stdlib.h>

#include<math.h>

double f(double x) { // inline

double y = 2 * x + 1;

double z = pow(-1, x);

double h1 = 4.0;

double h2 = 5.0;

double h3 = 239.0;

return h1 * z / y*(h1 / pow(h2, y) - 1 / pow(h3, y));

}

int main(int argc, char* argv[])

{

int myid, numprocs, namelen;

double pi, sum, x, *temp;

long long n;

char processor_name[MPI_MAX_PROCESSOR_NAME];

char* pi_norm = "3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117067982148086513282306647093844609550582231725359408128481117450284102701938521105559644622948954930381964428810975665933446128475648233786783165271201909145648566923460348610454326648213393607260249141273724587006606315588174881520920962829254091715364367892590360011330530548820466521384146951941511609433057270365759591953092186117381932611793105118548074462379962749567351885752724891227938183011949129833673362440656643086021394946395224737190702179860943702770539217176293176752384674818467669405132000568127145263560827785771342757789609173637178721468440901224953430146549585371050792279689258923542019956112129021960864034418159813629774771309960518707211349999998372978049951059731732816096318595024459455346908302642522308253344685035261931188171010003137838752886587533208381420617177669147303598253490428755468731159562863882353787593751957781857780532171226806613001927876611195909216420198938";

MPI_Init(&argc, &argv); // starts MPI

MPI_Comm_rank(MPI_COMM_WORLD, &myid); // get current process id

MPI_Comm_size(MPI_COMM_WORLD, &numprocs); // get number of processes

MPI_Get_processor_name(processor_name, &namelen);

n = 20000;

if (myid == 0) {

temp = (double*)malloc(sizeof(double)*numprocs);

}

MPI_Bcast(&n, 1, MPI_LONG_LONG, 0, MPI_COMM_WORLD); //靠

sum = 0.0, pi = 0.0;

for (long long i = myid; i <= n; i += numprocs) {

sum += f(i);

}

MPI_Gather(&sum, sizeof(sum), MPI_BYTE, temp, sizeof(sum), MPI_BYTE, 0, MPI_COMM_WORLD);

if (myid == 0) {

for (int i = 0; i < numprocs; i++) {

pi += temp[i];

}

FILE *out;

out = fopen("output.txt","w");

fprintf(out,"%.15g\n",pi);

fclose(out);

free(temp);

}

MPI_Finalize();

return 0;

}

- 五种方式 MPICH2 并行计算π: https://github.com/lang22/MPI-PI

四、二维卷积(AVX)

a. Conv2D-AVX512

float *input = (float *)aligned_alloc(64, N*sizeof(float));

float *kernel = (float *)aligned_alloc(64, M*sizeof(float));

float *ans = (float *)aligned_alloc(64, N*sizeof(float));

for(int i=0; i<xi-xk+1;i++){

for(int j=0;j<yi-yk+1;j++){

//float temp = 0.0;

__m512 tmp = _mm512_setzero_ps();

for(int m=0;m<xk;m++){

for(int n=0;n<yk;n+=16){

tmp = _mm512_add_ps(tmp,_mm512_mul_ps(_mm512_loadu_ps(&kernel[m*yk+n]),_mm512_loadu_ps(&input[(i+m)*yi+j+n])));

//temp += kernel[m*yk+n] * input[(i+m)*yi+j+n];

}

}

ans[i*(ya)+j] = tmp[0]+tmp[1]+tmp[2]+tmp[3]+tmp[4]+tmp[5]+tmp[6]+tmp[7]+tmp[8]+tmp[9]+tmp[10]+tmp[11]+tmp[12]+tmp[13]+tmp[14]+tmp[15];

//ans[i*(ya)+j] = temp;

}

}

------ 补充 ------

b. 内存对齐

- aligned 原理

void* aligned_malloc(size_t required_bytes, size_t alignment)

{

int offset = alignment - 1 + sizeof(void*);

void* p1 = (void*)malloc(required_bytes + offset);

if (p1 == NULL)

return NULL;

void** p2 = (void**)( ( (size_t)p1 + offset ) & ~(alignment - 1) );

p2[-1] = p1;

return p2;

}

void aligned_free(void *p2)

{

void* p1 = ((void**)p2)[-1];

free(p1);

}

- 数据对齐以辅助向量化说明(Intel) Data Alignment to Assist Vectorization

c. SIMD

- 玩转SIMD指令编程 :https://zhuanlan.zhihu.com/p/591900754

- AVX512:Intrinsics for Intel® Advanced Vector Extensions 512 (Intel® AVX-512) Instructions

d. Xsimd

- https://github.com/xtensor-stack/xsimd

xsimd provides a unified means for using these features for library authors. Namely, it enables manipulation of batches of numbers with the same arithmetic operators as for single values. It also provides accelerated implementation of common mathematical functions operating on batches.

- https://xsimd.readthedocs.io/en/latest/

五、CUDA(C)

a. 基本模式

// init

malloc(...);

cudaMalloc((void **)&, n*sizeof( ));

cudaMemcpy(GPU, CPU, sizeof, cudaMemcpyHostToDevice);

dim3 block();

dim3 grid();

<<<grid, block>>>

cudaThreadSynchronize();

cudaMemcpy(CPU, GPU, sizeof, cudaMemcpyDeviceToHost);

cudaFree();

// f

__global__ void f(){

// GPU CODE

}

b. 循环代码并行

- CPU code

// cpu

for(int i=0;i<n;i++){

for(int j=0;j<m;j++){

// code

}

}

- GPU code

一维

__global__ void fGPU(){

int index = blockIdx.x * blockDim.x + threadIdx.x;

int i = index / nn;

int j = index % nn;

// code

}

// main

const int dimx = 128;

fGPU<<<n*m/128, 128>>>();

二维

__global__ void fGPU(){

int i = blockIdx.x * blockDim.x + threadIdx.x;

int j = blockIdx.y * blockDim.y + threadIdx.y;

if((i< n && j<m){

// code

}

}

int dimx = 16;

int dimy = 16;

dim3 block(dimx,dimy);

dim3 grid((n+block.x-1)/block.x,(my+block.y-1)/block.y);

fGPU<<<grid, block>>>();

c. 优化原子操作

__global void histogram_kernel_v1(unsigned char *arry,unsigned int *bins){

int tid = blockIdx.x * blockDim.x + threadIdx.x;

// we do not need to check boundary here as ARRSZ is a power of 2.

atomicAdd(&bins[arry[tid]],1u);

}

void compute(){

const int block_size = 256;

histogram_kernel_v1<<<ARRSZ/block_size,block_size>>>(arr_gpu,bin_gpu);

assert(cudaSuccess == cudaDevicechronize());

}

- 利用 shared memery

__shared__ unsigned int bins_shared[256];

__global void histogram_kernel_v3_5(unsigned char *arry,unsigned int *bins){

int tid = blockIdx.x * blockDim.x + threadIdx.x;

bins_shared[threadIdx.x] = 0; // block size assumed to just 256

__syncthtreads();

atomicAdd(&bins[arry[tid]],1u);

__syncthtreads();

atomicAdd(&bins[threadIdx.x],bins_shared[threadIdx.x]);

}

void compute(){

const int block_size = 256; // do not change this

histogram_kernel_v1<<<ARRSZ/block_size,block_size>>>(arr_gpu,bin_gpu);

assert(cudaSuccess == cudaDevicechronize());

}

d. cuFFT库

cuFFT库提供了一个优化且基于CUDA实现的快速傅里叶变换(FFT)

https://docs.nvidia.com/cuda/cufft/

// 3D FFT

#include <cufft.h>

#include <complex>

void my_fft(int c, int r, std::complex<double> *in, std::complex<double> *out) {

cufftHandle plan;

cufftPlan3d(&plan, c, c, c, CUFFT_Z2Z);

cufftExecZ2Z(plan, reinterpret_cast<cufftDoubleComplex*>(in),

reinterpret_cast<cufftDoubleComplex*>(out),

CUFFT_INVERSE);

cufftDestroy(plan);

}

nvcc -lcufft cufft.cu -o cufft

References

slurm作业管理系统怎么用?

矩阵乘法的并行优化(3):共享内存多核CPU优化

矩阵乘法优化过程(DGEMM)

Data Alignment to Assist Vectorization

玩转SIMD指令编程

Intrinsics for Intel® Advanced Vector Extensions 512 (Intel® AVX-512) Instructions

Links

mmap:https://stackoverflow.com/questions/44553907/mmap-sigbus-error-and-initializing-the-file

#define: https://www.thinbug.com/q/56717411

Coppersmith Winograd algorithm:https://github.com/YYYYYW/Matrix-Multiplication

Diverting LSD radix sort :https://axelle.me/2022/04/19/diverting-lsd-sort/

Parallel Radix Sort(OpenMP):https://github.com/iwiwi/parallel-radix-sort

Voracious Radix Sort (Rust):https://github.com/lakwet/voracious_sort

GNU parallel stl:https://gcc.gnu.org/onlinedocs/libstdc++/manual/parallel_mode_using.html

https://www.modernescpp.com/index.php/parallel-algorithms-of-the-stl-with-gcc

Intel TBB:https://github.com/oneapi-src/oneTBB

并行求 π: https://github.com/lang22/MPI-PI

Xsimd: https://github.com/xtensor-stack/xsimd

cuFFT:https://docs.nvidia.com/cuda/cufft/