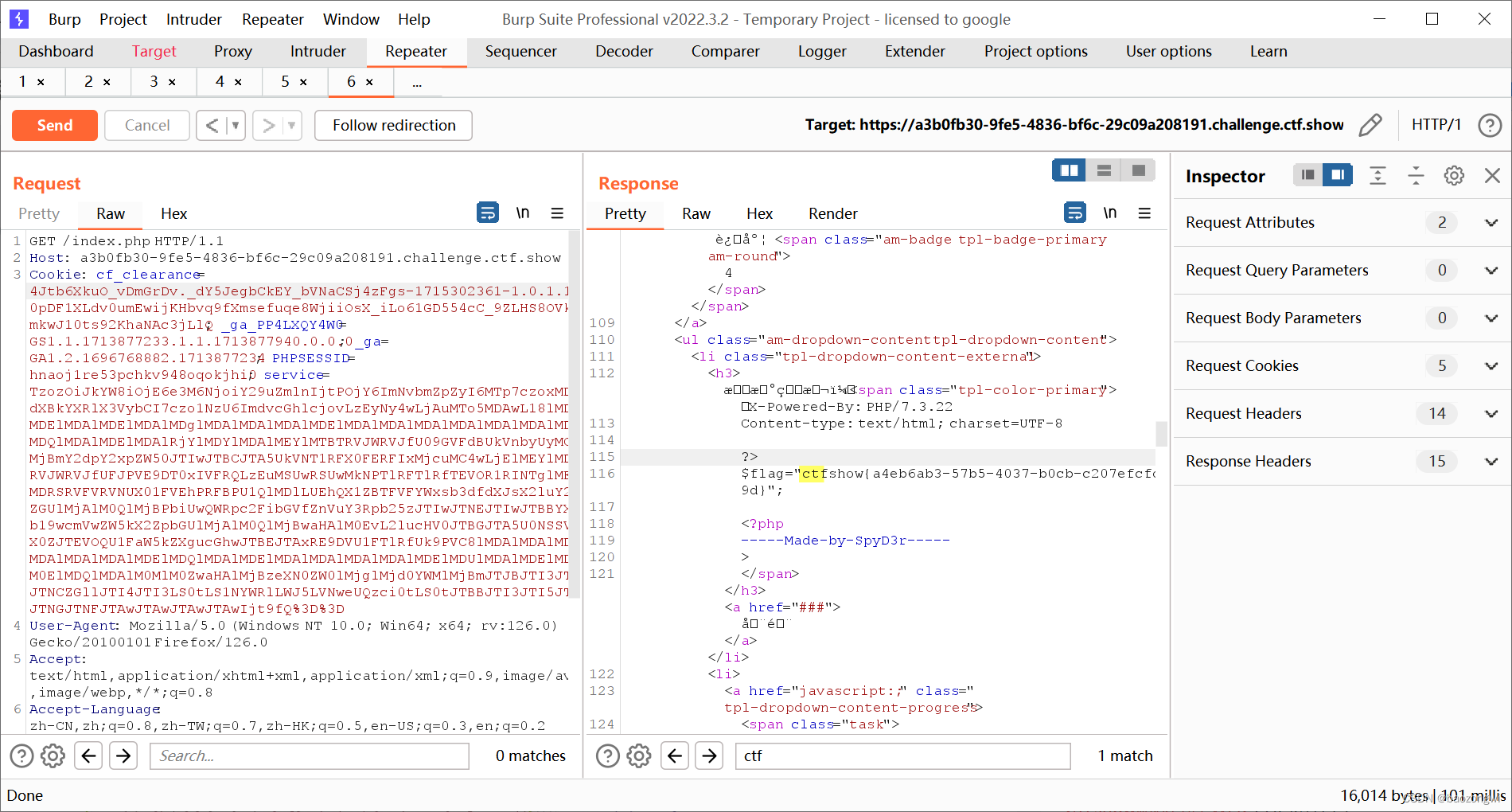

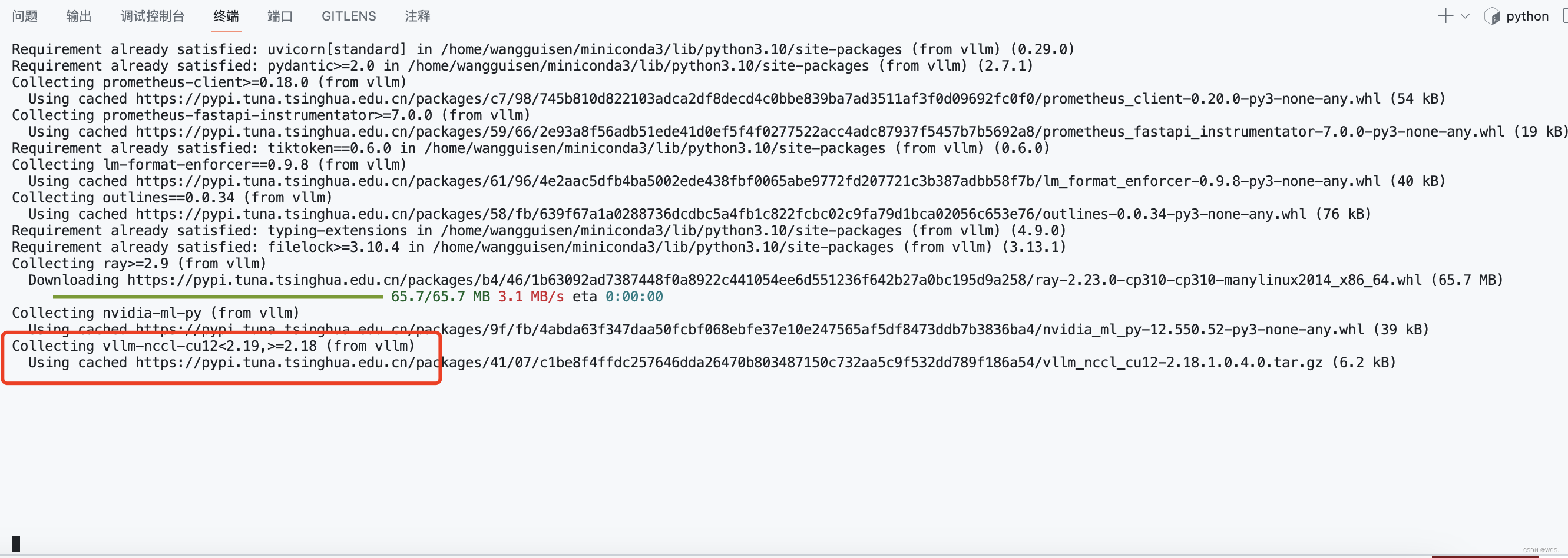

Installing vllm hangs partway through:

...

Requirement already satisfied: typing-extensions in /home/wangguisen/miniconda3/lib/python3.10/site-packages (from vllm) (4.9.0)

Requirement already satisfied: filelock>=3.10.4 in /home/wangguisen/miniconda3/lib/python3.10/site-packages (from vllm) (3.13.1)

Collecting ray>=2.9 (from vllm)

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/b4/46/1b63092ad7387448f0a8922c441054ee6d551236f642b27a0bc195d9a258/ray-2.23.0-cp310-cp310-manylinux2014_x86_64.whl (65.7 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 65.7/65.7 MB 3.1 MB/s eta 0:00:00

Collecting nvidia-ml-py (from vllm)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/9f/fb/4abda63f347daa50fcbf068ebfe37e10e247565af5df8473ddb7b3836ba4/nvidia_ml_py-12.550.52-py3-none-any.whl (39 kB)

Collecting vllm-nccl-cu12<2.19,>=2.18 (from vllm)

Using cached https://pypi.tuna.tsinghua.edu.cn/packages/41/07/c1be8f4ffdc257646dda26470b803487150c732aa5c9f532dd789f186a54/vllm_nccl_cu12-2.18.1.0.4.0.tar.gz (6.2 kB)

The install hangs at this step.

While searching for a fix, I found an issue saying that with CUDA 11.7, vllm must be version 0.2.0 or earlier.

So:

pip install vllm==0.2.0 -i https://pypi.tuna.tsinghua.edu.cn/simple
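The version choice above can be sketched as a small helper. This is only an illustration: the function names `parse_cuda_version` and `vllm_requirement` are hypothetical, the only rule encoded is the one from the issue (CUDA 11.7 needs vllm 0.2.0 or earlier), and the sample string assumes the usual `release X.Y` line printed by `nvcc --version`.

```python
import re


def parse_cuda_version(nvcc_output):
    """Extract (major, minor) from `nvcc --version` text, or None if not found."""
    match = re.search(r"release\s+(\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def vllm_requirement(cuda_version):
    """Return a pip requirement string for vllm given a CUDA (major, minor) tuple."""
    # Rule from the issue mentioned above: CUDA 11.7 needs vllm <= 0.2.0.
    if cuda_version == (11, 7):
        return "vllm<=0.2.0"
    # Assumption: no pin is known for other CUDA versions here,
    # so fall back to an unpinned requirement and check the official docs.
    return "vllm"


# Sample line in the format printed by `nvcc --version` (illustrative input).
sample = "Cuda compilation tools, release 11.7, V11.7.99"
print(vllm_requirement(parse_cuda_version(sample)))
```

The returned string can be passed to `pip install` directly. For any CUDA version other than 11.7 the helper makes no claim, so the installation page is still the place to confirm the right version.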

Each vllm version is tied to specific CUDA versions, so choose a version according to the installation guide:

https://docs.vllm.ai/en/latest/getting_started/installation.html

https://github.com/modelscope/swift/blob/main/docs/source/LLM/VLLM%E6%8E%A8%E7%90%86%E5%8A%A0%E9%80%9F%E4%B8%8E%E9%83%A8%E7%BD%B2.md