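Handwritten digit recognition is often used as an introductory exercise in machine learning: it is relatively simple, yet it touches on many fundamental concepts. It can be regarded as the "Hello World" of machine learning, because it walks through the basic steps of a machine learning project (data collection, feature extraction, model selection, training, and evaluation). That makes it a good starting point.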

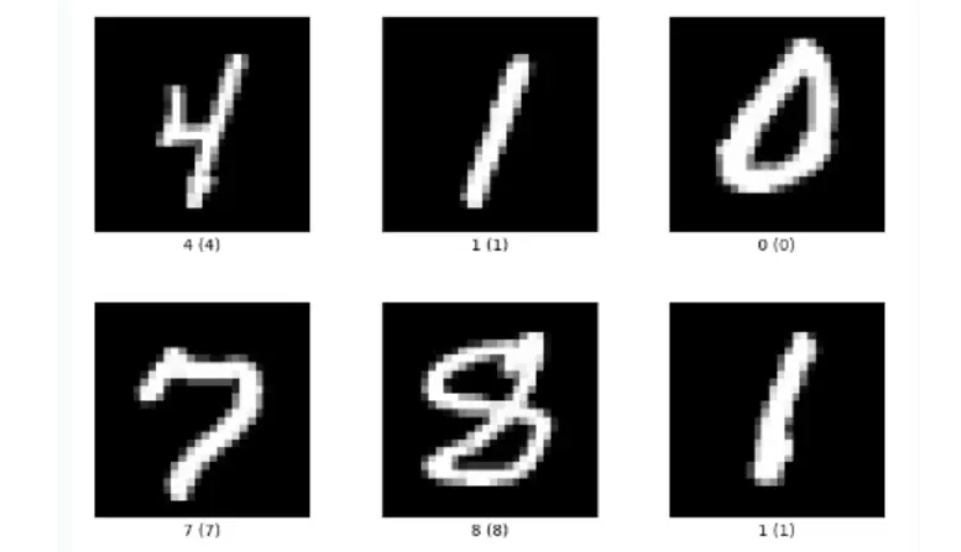

我们的要做的是,训练出一个人工神经网络,使它能够识别手写数字(如下图所示):

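The following simple example shows how to build a handwritten digit recognition model with PyTorch on the MNIST dataset. It covers dataset loading, training, and testing.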

```python
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Load the MNIST dataset: ToTensor() scales pixels to [0, 1],
# then Normalize(mean=0.5, std=0.5) maps them to [-1, 1]
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (0.5,))
])

train_dataset = torchvision.datasets.MNIST(root='./data', train=True, download=True, transform=transform)
test_dataset = torchvision.datasets.MNIST(root='./data', train=False, download=True, transform=transform)

# Each dataset item is an (image, label) pair
print(f"Shape of the 1st training image = {train_dataset[0][0].shape}")
print(f"Label of the 1st training image = {train_dataset[0][1]}")
print(f"Shape of the 1st test image = {test_dataset[0][0].shape}")
print(f"Label of the 1st test image = {test_dataset[0][1]}")

# Wrap the datasets in data loaders that yield mini-batches of 64 samples
train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False)
```
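As a quick check on the preprocessing and batching above, the plain-Python sketch below reproduces two pieces of arithmetic. It does not need PyTorch. First, `transforms.Normalize((0.5,), (0.5,))` computes `(x - mean) / std` for every pixel, so the `[0, 1]` values produced by `ToTensor()` end up in `[-1, 1]`. Second, with `batch_size=64` and the `DataLoader` default `drop_last=False`, the last batch of each pass is smaller than 64. The split sizes 60,000 and 10,000 are the standard MNIST training and test sets. `normalize` and `batch_layout` are hypothetical helper names.

```python
import math

def normalize(x, mean=0.5, std=0.5):
    """Per-pixel formula applied by transforms.Normalize: (x - mean) / std."""
    return (x - mean) / std

def batch_layout(num_samples, batch_size):
    """Number of batches and size of the last batch when drop_last=False."""
    num_batches = math.ceil(num_samples / batch_size)
    last_batch = num_samples - (num_batches - 1) * batch_size
    return num_batches, last_batch

# ToTensor() gives pixels in [0, 1]; Normalize then maps them to [-1, 1]
print(normalize(0.0), normalize(0.5), normalize(1.0))  # -1.0 0.0 1.0

# Standard MNIST split sizes: 60,000 training images, 10,000 test images
print(batch_layout(60000, 64))  # (938, 32)
print(batch_layout(10000, 64))  # (157, 16)
```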

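```python
# Define the neural network model and move it to the GPU (if available)
class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # A fully connected network: 784 -> 128 -> 64 -> 10
        self.fc = nn.Sequential(
            nn.Linear(28 * 28, 128),
            nn.ReLU(),
            nn.Linear(128, 64),
            nn.ReLU(),
            nn.Linear(64, 10)  # one output score (logit) per digit class 0-9
        )

    def forward(self, x):
        # Flatten each 1x28x28 image into a 784-dimensional vector
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x

model = Net().to(device)
```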
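The model is a small multilayer perceptron, so its size can be counted by hand. Each `nn.Linear(in_features, out_features)` layer stores an `out_features × in_features` weight matrix plus `out_features` biases, and `nn.ReLU` has no parameters. The sketch below does this count in plain Python. `linear_params` is a hypothetical helper. With PyTorch installed, `sum(p.numel() for p in model.parameters())` should report the same total.

```python
def linear_params(in_features, out_features):
    """Parameters in nn.Linear(in_features, out_features): weights + biases."""
    return in_features * out_features + out_features

# Layer sizes used by Net: 784 -> 128 -> 64 -> 10 (ReLU layers add no parameters)
layer_sizes = [28 * 28, 128, 64, 10]
per_layer = [linear_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [100480, 8256, 650]
print(total)      # 109386
```

Almost all of the parameters sit in the first layer, which connects every one of the 784 pixels to each of the 128 hidden units.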

```python
# Define the loss function and the optimizer
criterion = nn.CrossEntropyLoss()  # takes raw logits; applies log-softmax internally
optimizer = optim.Adam(model.parameters(), lr=0.001)
```
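`nn.CrossEntropyLoss` expects two inputs: the raw scores (logits) from the last `nn.Linear` layer and integer class labels. This is why `Net` ends without a softmax. For one sample, the loss is the negative log of the softmax probability assigned to the true class. With the default `reduction='mean'`, these per-sample values are averaged over the batch. The plain-Python sketch below reproduces that formula to show what the loss measures. It is not PyTorch's implementation, and the function names are hypothetical.

```python
import math

def cross_entropy(logits, target):
    """-log(softmax(logits)[target]), computed stably via log-sum-exp."""
    m = max(logits)  # subtract the largest logit to avoid overflow in exp()
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def mean_cross_entropy(batch_logits, targets):
    """Batch loss with the default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

# With all 10 logits equal, every digit gets probability 1/10, so the loss is ln(10)
print(cross_entropy([0.0] * 10, 3))  # ≈ 2.3026

# A confident, correct prediction gives a loss close to 0
confident = [0.0] * 10
confident[7] = 10.0
print(cross_entropy(confident, 7))
```

A model that guesses uniformly over the 10 digits therefore scores about 2.30. That value is a useful baseline when reading the loss printed during training.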

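```python
# Train the model, printing the loss once per epoch
num_epochs = 5
for epoch in range(num_epochs):
    model.train()
    for images, labels in train_loader:
        images, labels = images.to(device), labels.to(device)  # move the batch to the GPU
        optimizer.zero_grad()              # clear gradients from the previous step
        outputs = model(images)            # forward pass: logits of shape (batch, 10)
        loss = criterion(outputs, labels)  # mean cross-entropy over the batch
        loss.backward()                    # backpropagate to compute gradients
        optimizer.step()                   # update the weights
    # This prints the loss of the epoch's last mini-batch, not the epoch average
    print(f'Epoch [{epoch+1}/{num_epochs}], Loss: {loss.item()}')
```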
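Each call to `optimizer.step()` updates every weight with the Adam rule, using the gradients computed by `loss.backward()`. The sketch below applies that rule to a single scalar parameter in plain Python. It uses the `lr=0.001` from the code above and PyTorch's default `betas=(0.9, 0.999)` and `eps=1e-8`. This is a simplified illustration with no weight decay or AMSGrad option, and `ScalarAdam` is a hypothetical name.

```python
import math

class ScalarAdam:
    """Adam update for one scalar parameter (illustrative sketch)."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # running average of gradients (first moment)
        self.v = 0.0  # running average of squared gradients (second moment)
        self.t = 0    # number of steps taken so far

    def step(self, param, grad):
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad * grad
        # Bias correction compensates for m and v starting at zero
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return param - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)

# On the first step m_hat = g and v_hat = g**2, so the parameter moves by about lr
opt = ScalarAdam()
w = opt.step(1.0, grad=2.0)
print(w)  # ≈ 0.999
```

The update divides by the square root of the running squared gradient. As a result, the first step moves the parameter by about `lr` regardless of the gradient's magnitude.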

```python
# Test the model and print the digit recognition accuracy
model.eval()
with torch.no_grad():
    correct = 0
    total = 0
    for images, labels in test_loader:
        images, labels = images.to(device), labels.to(device)  # move the batch to the GPU
        outputs = model(images)
        # The predicted digit is the index of the largest logit in each row
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
    print(f'Accuracy on the test set: {100 * correct / total}%')
```
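In the test loop, `torch.max(outputs, 1)` returns both the largest logit in each row and its index. Only the index is kept, because the index of the largest logit is the predicted digit. Accuracy is then the share of predictions that match the labels. The plain-Python sketch below performs the same bookkeeping on a few made-up logits, using three classes instead of ten purely for illustration. `argmax` and `accuracy` are hypothetical helpers.

```python
def argmax(values):
    """Index of the largest value (the first one in case of ties)."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(batch_logits, labels):
    """Percentage of samples whose highest-scoring class equals the label."""
    predicted = [argmax(z) for z in batch_logits]
    correct = sum(p == y for p, y in zip(predicted, labels))
    return 100 * correct / len(labels)

# Toy 3-class logits for four samples (illustrative numbers, not MNIST outputs)
logits = [[2.0, 0.1, -1.0],
          [0.3, 1.5, 0.2],
          [0.0, 0.2, 3.1],
          [1.2, 0.4, 0.9]]
labels = [0, 1, 2, 2]
print(accuracy(logits, labels))  # 75.0
```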

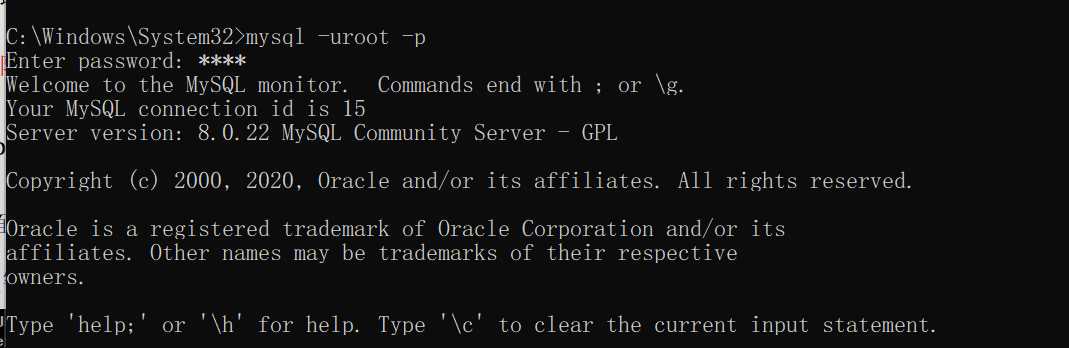

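After the program runs, it prints the output below. The loss values and the final accuracy will differ slightly from run to run, because the weights are randomly initialized and the training data is shuffled.

```text
Shape of the 1st training image = torch.Size([1, 28, 28])
Label of the 1st training image = 5
Shape of the 1st test image = torch.Size([1, 28, 28])
Label of the 1st test image = 7
Epoch [1/5], Loss: 0.3935443162918091
Epoch [2/5], Loss: 0.1757822483778
Epoch [3/5], Loss: 0.1337398886680603
Epoch [4/5], Loss: 0.03868262842297554
Epoch [5/5], Loss: 0.025882571935653687
Accuracy on the test set: 96.85%

Process finished with exit code 0
```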
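The per-epoch `Loss` values in this output come from each epoch's last mini-batch only. They are therefore noisy snapshots rather than summaries of the whole epoch. A common alternative is to report the average per-sample loss over the entire epoch. The plain-Python sketch below shows that bookkeeping with made-up batch losses. `epoch_average` is a hypothetical helper. In the PyTorch loop, the same effect could be had by adding `loss.item() * labels.size(0)` to a running total inside the inner loop, then dividing by `len(train_loader.dataset)` after it.

```python
def epoch_average(batch_losses, batch_sizes):
    """Average per-sample loss over an epoch, given each batch's mean loss."""
    # Weight each batch's mean loss by its size, since the last batch can be smaller
    total_loss = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return total_loss / sum(batch_sizes)

# Made-up mean losses for three batches; the last batch is smaller
losses = [0.50, 0.30, 0.10]
sizes = [64, 64, 32]
print(losses[-1])                    # what printing only the last batch would show
print(epoch_average(losses, sizes))  # size-weighted average over the epoch
```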