Training a decision tree on the wine dataset

Full code:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

from sklearn import tree, datasets

from sklearn.model_selection import train_test_split

# Prepare the data; only the first two features are used here

data = datasets.load_wine()

X, y = data.data[:,:2], data.target

X_train, X_test, y_train, y_test = train_test_split(X, y)

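# Train the model

clf = tree.DecisionTreeClassifier(max_depth=1)

# Note: fit() receives the full data set, so the tree has already seen the test rows

# and the score printed below is optimistic; fit on (X_train, y_train) for a held-out estimate

clf.fit(X, y)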

print(clf.score(X_test, y_test))

Output:

0.7555555555555555
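Because the listing above fits the tree on all of `X` and then scores it on rows taken from that same data, the printed number is not a true held-out accuracy. A minimal sketch of a held-out evaluation follows. It assumes the same two features; `random_state=0` is an arbitrary value chosen here so that the split is repeatable, and it does not appear in the original code.

```python
from sklearn import datasets, tree
from sklearn.model_selection import train_test_split

# Same data as above: the first two features of the wine data set
data = datasets.load_wine()
X, y = data.data[:, :2], data.target

# random_state=0 is an assumption made for repeatability, not part of the original listing
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

clf = tree.DecisionTreeClassifier(max_depth=1, random_state=0)
clf.fit(X_train, y_train)  # the test rows are never seen during training

train_acc = clf.score(X_train, y_train)
test_acc = clf.score(X_test, y_test)
print(f"train accuracy: {train_acc:.3f}")
print(f"test accuracy:  {test_acc:.3f}")
```

With the default `test_size`, `train_test_split` holds out a quarter of the 178 samples, so the test score is computed on 45 rows and can move noticeably from one split to another.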

Plotting the decision tree's classification regions

Full code:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

from sklearn import tree, datasets

from sklearn.model_selection import train_test_split

# Prepare the data; only the first two features are used here

data = datasets.load_wine()

X, y = data.data[:,:2], data.target

X_train, X_test, y_train, y_test = train_test_split(X, y)

# Train the model

clf = tree.DecisionTreeClassifier(max_depth=1)

clf.fit(X, y)

# Plot the predicted regions and the samples

cmap_light = ListedColormap(["#FFAAAA", "#AAFFAA", "#AAAAFF"])

cmap_bold = ListedColormap(["#FF0000", "#00FF00", "#0000FF"])

x_min, x_max = X_train[:,0].min() - 1, X_train[:,0].max() + 1

y_min, y_max = X_train[:,1].min() - 1, X_train[:,1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, .02), np.arange(y_min, y_max, .02))

z = clf.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

plt.figure()

plt.pcolormesh(xx, yy, z, cmap=cmap_light)

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold, edgecolor="k", s=20)

plt.xlim(xx.min(), xx.max())

plt.ylim(yy.min(), yy.max())

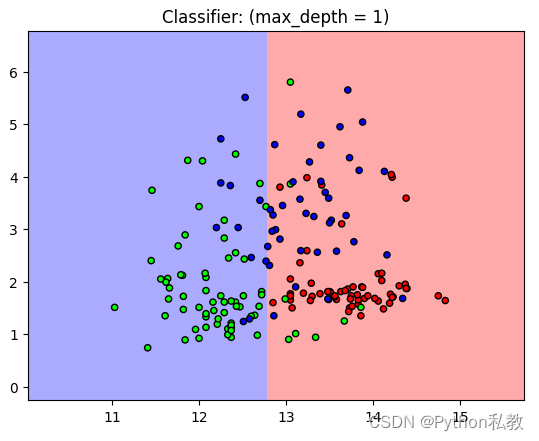

plt.title("Classifier: (max_depth = 1)")

plt.show()

输出:

从结果来看,分类器的表现并不是特别好,我们可以加大深度试试。
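The limitation of a depth-1 tree can be checked directly. The sketch below is an illustration rather than part of the original walkthrough: it fits the same kind of tree (the name `stump` and `random_state=0` are choices made here) and counts its leaves and the distinct classes it ever predicts.

```python
import numpy as np
from sklearn import datasets, tree

data = datasets.load_wine()
X, y = data.data[:, :2], data.target

# A depth-1 tree (a "stump"): one split, two leaves
stump = tree.DecisionTreeClassifier(max_depth=1, random_state=0).fit(X, y)

n_leaves = stump.get_n_leaves()
predicted_classes = np.unique(stump.predict(X))

print("leaves:", n_leaves)
print("classes present in y:", np.unique(y))
print("classes the stump predicts:", predicted_classes)
```

Since each leaf outputs a single class, two leaves cannot cover three classes, whatever threshold the split uses.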

Adjusting the depth of the decision tree

Full code:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

from sklearn import tree, datasets

from sklearn.model_selection import train_test_split

# Prepare the data; only the first two features are used here

data = datasets.load_wine()

X, y = data.data[:,:2], data.target

X_train, X_test, y_train, y_test = train_test_split(X, y)

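# Train the model

clf = tree.DecisionTreeClassifier(max_depth=3)

# Note: fit() receives the full data set, so the tree has already seen the test rows

# and the score printed below is optimistic; fit on (X_train, y_train) for a held-out estimate

clf.fit(X, y)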

print(clf.score(X_test, y_test))

# Plot the predicted regions and the samples

cmap_light = ListedColormap(["#FFAAAA", "#AAFFAA", "#AAAAFF"])

cmap_bold = ListedColormap(["#FF0000", "#00FF00", "#0000FF"])

x_min, x_max = X_train[:,0].min() - 1, X_train[:,0].max() + 1

y_min, y_max = X_train[:,1].min() - 1, X_train[:,1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, .02), np.arange(y_min, y_max, .02))

z = clf.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

plt.figure()

plt.pcolormesh(xx, yy, z, cmap=cmap_light)

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold, edgecolor="k", s=20)

plt.xlim(xx.min(), xx.max())

plt.ylim(yy.min(), yy.max())

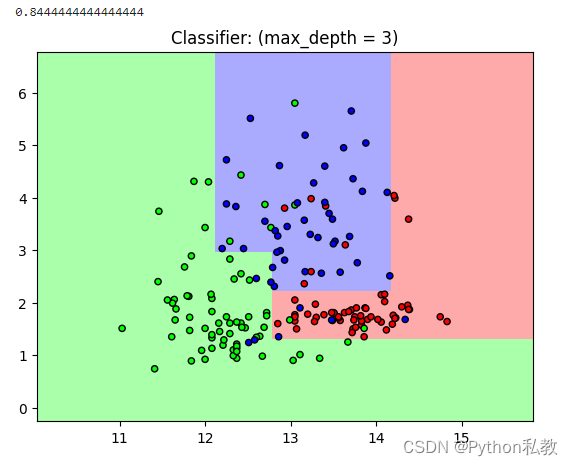

plt.title("Classifier: (max_depth = 3)")

plt.show()

输出:

从结果来看,分数变成了0.84,已经是一个比较能够接受的分数了。

另外,从图像来看,不同的点大致都能落入到自己的区域中,相比深度为1的时候更加的准确一点。
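The rectangular regions in the plot come from axis-aligned thresholds on the two features. As a rough way to see those thresholds, the sketch below prints the rules of a depth-3 tree with `tree.export_text`. It refits its own tree (with `random_state=0`, an arbitrary choice made here), so the printed thresholds need not match the figure above exactly.

```python
from sklearn import datasets, tree

data = datasets.load_wine()
X, y = data.data[:, :2], data.target

# Names of the two features used throughout this walkthrough
feature_names = list(data.feature_names[:2])

clf = tree.DecisionTreeClassifier(max_depth=3, random_state=0).fit(X, y)

# export_text renders the fitted tree as indented if/else rules
rules = tree.export_text(clf, feature_names=feature_names)
print(rules)
```

Each `<=` / `>` line in the output corresponds to one vertical or horizontal boundary in the region plot.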

Increasing the tree depth further

Full code:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

from sklearn import tree, datasets

from sklearn.model_selection import train_test_split

# Prepare the data; only the first two features are used here

data = datasets.load_wine()

X, y = data.data[:,:2], data.target

X_train, X_test, y_train, y_test = train_test_split(X, y)

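# Train the model

clf = tree.DecisionTreeClassifier(max_depth=5)

# Note: fit() receives the full data set, so the tree has already seen the test rows

# and the score printed below is optimistic; fit on (X_train, y_train) for a held-out estimate

clf.fit(X, y)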

print(clf.score(X_test, y_test))

# Plot the predicted regions and the samples

cmap_light = ListedColormap(["#FFAAAA", "#AAFFAA", "#AAAAFF"])

cmap_bold = ListedColormap(["#FF0000", "#00FF00", "#0000FF"])

x_min, x_max = X_train[:,0].min() - 1, X_train[:,0].max() + 1

y_min, y_max = X_train[:,1].min() - 1, X_train[:,1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, .02), np.arange(y_min, y_max, .02))

z = clf.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

plt.figure()

plt.pcolormesh(xx, yy, z, cmap=cmap_light)

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap_bold, edgecolor="k", s=20)

plt.xlim(xx.min(), xx.max())

plt.ylim(yy.min(), yy.max())

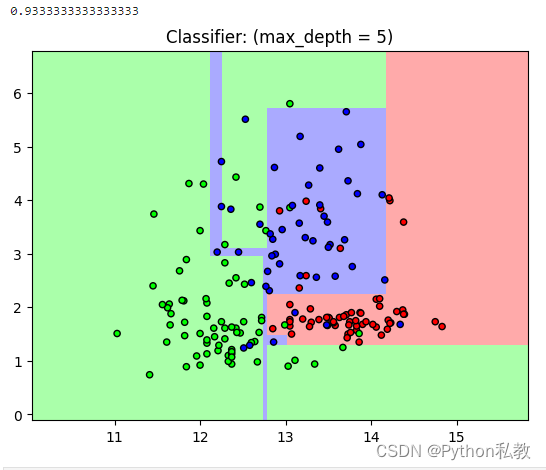

plt.title("Classifier: (max_depth = 5)")

plt.show()

输出:

从结果来看,分数从0.84变成了0.93,明显更加的准确了。
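A deeper tree will usually fit the data it was trained on more closely, so it is worth checking whether the gain also holds on rows the tree has not seen. The sketch below is one way to compare the three depths used in this walkthrough on a fixed held-out split. It trains on `X_train` only, and `random_state=0` is an assumption made here for repeatability, so its numbers will differ from the scores quoted above.

```python
from sklearn import datasets, tree
from sklearn.model_selection import train_test_split

data = datasets.load_wine()
X, y = data.data[:, :2], data.target

# One fixed split shared by all depths, so the comparison is like for like
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

results = {}
for depth in (1, 3, 5):
    clf = tree.DecisionTreeClassifier(max_depth=depth, random_state=0)
    clf.fit(X_train, y_train)  # test rows are held out
    results[depth] = (clf.score(X_train, y_train), clf.score(X_test, y_test))
    train_acc, test_acc = results[depth]
    print(f"max_depth={depth}: train={train_acc:.3f}  test={test_acc:.3f}")
```

If the training accuracy keeps climbing while the test accuracy stalls or drops, the extra depth is mostly memorizing the training rows rather than improving the classifier.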
