References:

https://docs.vllm.ai/en/latest/serving/deploying_with_docker.html

https://hub.docker.com/r/vllm/vllm-openai

https://blog.csdn.net/weixin_42357472/article/details/136165481

Pull the image:

docker pull vllm/vllm-openai

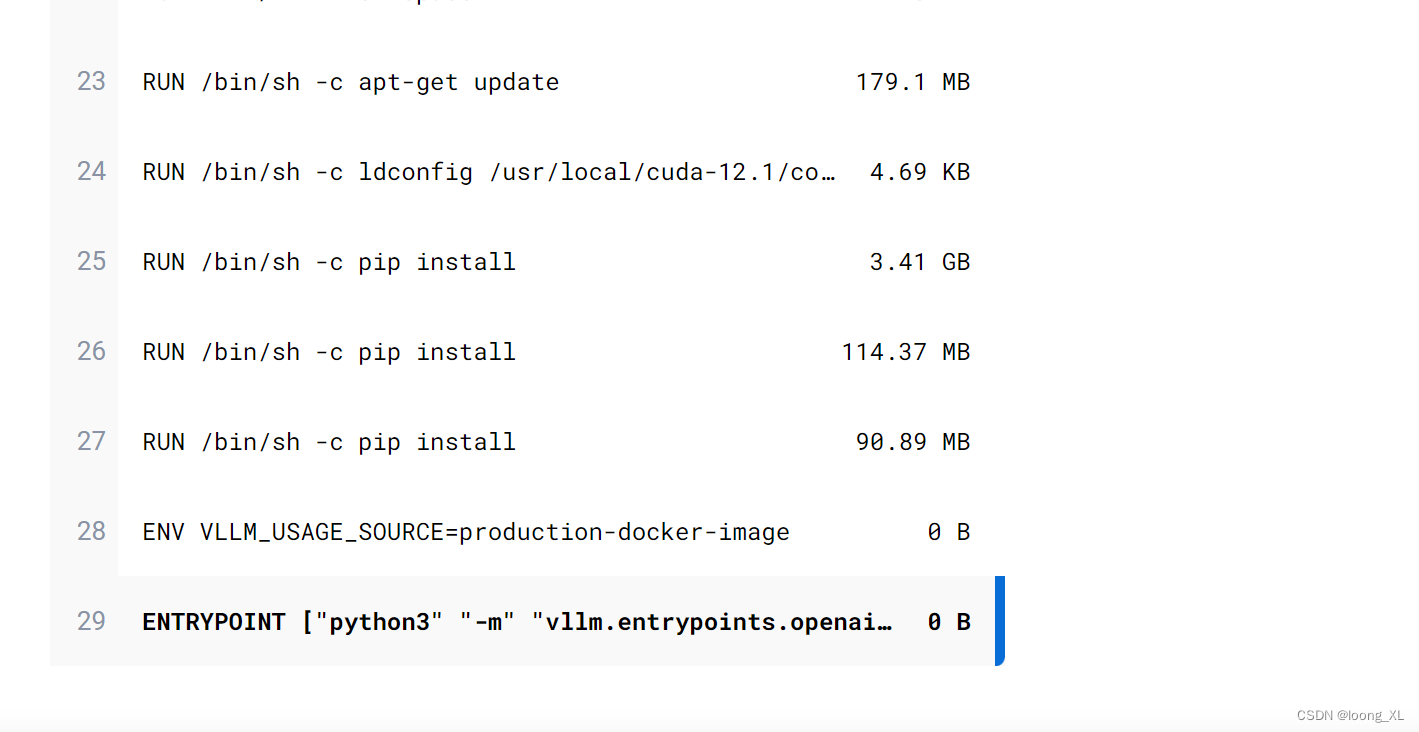

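By default, the last layer of the image is python -m vllm.entrypoints.openai.api_server. The container starts vLLM's OpenAI-compatible API server, and any arguments placed after the image name in docker run are passed on to that server.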
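Because the container serves an OpenAI-compatible API, a client can query it with a plain HTTP POST to /v1/chat/completions. The sketch below uses only the Python standard library. It assumes the server is published on localhost:8000 (vLLM's default port) and that the model is served under the name /qwen, which would be the case if the container-side mount path were passed as --model. Both values are assumptions and are not taken from these notes. build_chat_request and chat are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions, not from the original notes: host/port of the published
# container port, and the served model name (vLLM defaults the served name
# to the --model value, e.g. the mount path "/qwen").
BASE_URL = "http://localhost:8000"
MODEL_NAME = "/qwen"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,  # cap on generated tokens for this request
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request to the running server and return the reply text."""
    req = build_chat_request(BASE_URL, MODEL_NAME, prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style response: first choice, assistant message content
    return body["choices"][0]["message"]["content"]
```

Once the container is up, call chat("Hello, who are you?") to get a reply. Keeping request construction separate from sending lets you inspect the exact URL and JSON body before the server is reachable.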

Run Qwen:

docker run -d --gpus all -v /ai/Qwen1.5-7B-Chat:/qwen