ECO Classification Model

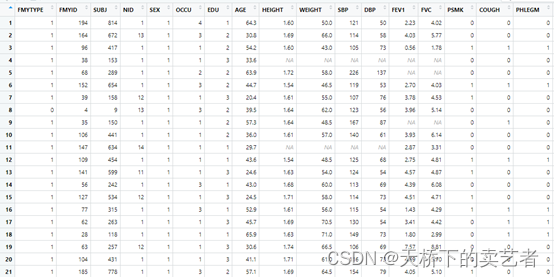

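The ECO classification model classifies videos. A video is a sequence of still images played back in quick succession, which the human eye perceives as continuous motion. The playback rate is measured in FPS (frames per second), the number of images displayed each second. Could we simply tile the frames into one large image and feed it to an image classifier? Not really: a static image cannot express time, so the movement and speed of the people in the video are lost. One way to recover this information is optical flow, which researchers began feeding to deep video classification networks around 2014. Optical flow describes how fast objects move between two frames: the faster the motion, the longer the flow vector. By observing how the flow changes, we can tell when an object in the image starts and stops moving and how fast it moves. ECO is one approach to capturing this kind of temporal information. Rather than feeding the raw frames directly into a C3D network, it first uses 2D convolutions to produce smaller feature maps and then passes those features to the C3D network for video processing.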
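The relationship between motion speed and flow-vector length can be illustrated with a small sketch. It assumes a single tracked point whose pixel position is already known in two consecutive frames; the helper names `flow_vector` and `speed_px_per_second` are hypothetical and only serve this illustration. Real optical flow is estimated densely for every pixel.

```python
import math


def flow_vector(pos_prev, pos_next):
    """Displacement (dx, dy) in pixels of one point between two consecutive frames."""
    return (pos_next[0] - pos_prev[0], pos_next[1] - pos_prev[1])


def speed_px_per_second(flow, fps):
    """The flow length is in pixels per frame; multiplying by FPS gives pixels per second."""
    return math.hypot(flow[0], flow[1]) * fps


# Assumed example positions: the same starting point, moving slowly vs. quickly.
slow = flow_vector((100, 50), (103, 54))   # 3 px right, 4 px down
fast = flow_vector((100, 50), (130, 90))   # 30 px right, 40 px down

print(math.hypot(*slow), math.hypot(*fast))   # 5.0 50.0
print(speed_px_per_second(slow, fps=30))      # 150.0
```

The faster point produces a flow vector ten times longer, which is the property the paragraph above describes.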

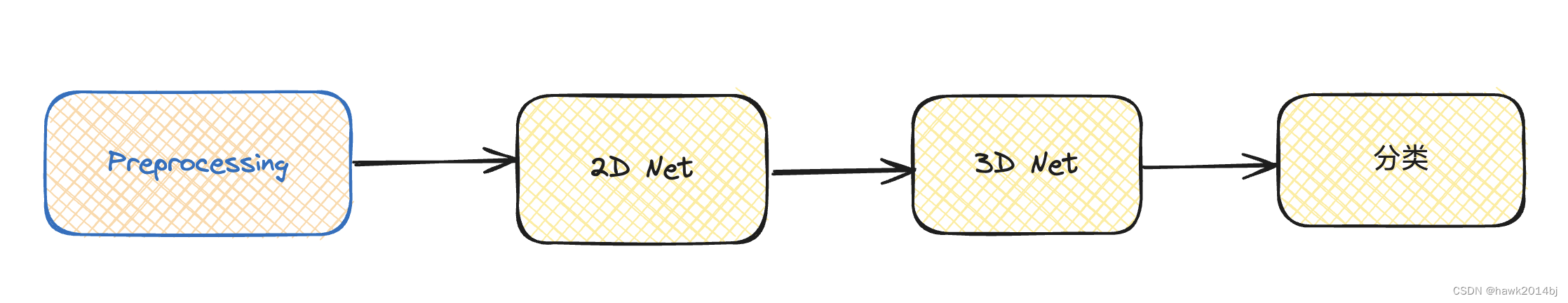

Network Structure

The ECO network consists of the following four parts, of which the 2D Net and the 3D Net are the core.

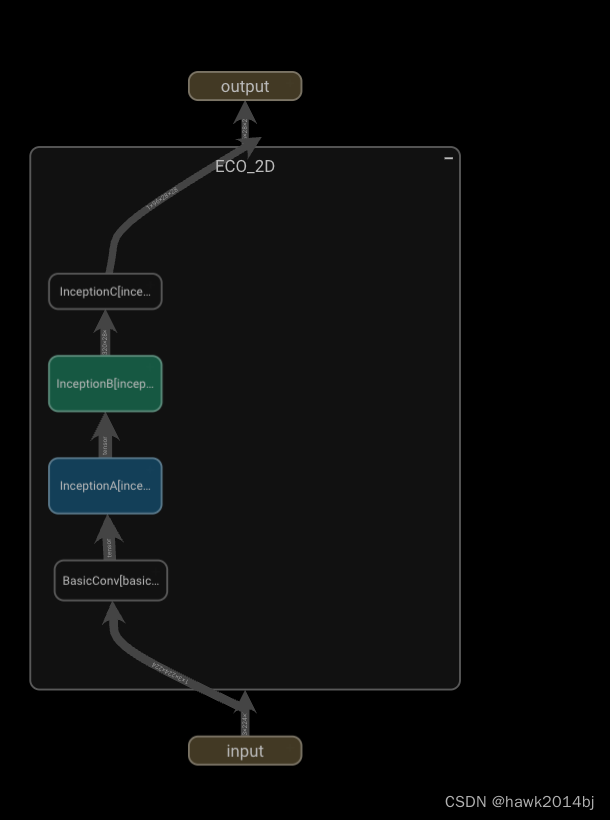
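As a rough sense of scale for the idea of running 2D convolutions first, the sketch below compares the data before and after the 2D Net. It uses the shapes quoted in this article (16 frames, a 3 × 224 × 224 input per frame, and a 96 × 28 × 28 feature map per frame); the variable names are illustrative only.

```python
import math

frames = 16
raw_frame = (3, 224, 224)       # channels, height, width of one input frame
feature_frame = (96, 28, 28)    # channels, height, width after the 2D Net

# Number of space-time positions a 3D convolution would have to slide over.
raw_positions = frames * raw_frame[1] * raw_frame[2]
feature_positions = frames * feature_frame[1] * feature_frame[2]

print(raw_positions, feature_positions)       # 802816 12544
print(raw_positions // feature_positions)     # 64

# Total number of values in each tensor.
print(frames * math.prod(raw_frame), frames * math.prod(feature_frame))  # 2408448 1204224
```

The 3D Net therefore operates on 64 times fewer positions than it would on raw frames, although each position carries more channels.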

2D Net

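The 2D Net consists of four submodules: BasicConv, InceptionA, InceptionB, and InceptionC. BasicConv is a stack of convolutional layers that transforms a 3 × 224 × 224 input into a 192 × 28 × 28 feature map. InceptionA through InceptionC transform the features further, with output sizes of 256 × 28 × 28, 320 × 28 × 28, and 96 × 28 × 28 respectively. The code is as follows: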

BasicConv

    import torch
    import torch.nn as nn


    class BasicConv(nn.Module):
        '''First module of ECO's 2D Net: (3, 224, 224) -> (192, 28, 28).'''

        def __init__(self):
            super(BasicConv, self).__init__()

            # 7x7 convolution with stride 2, then max pooling
            self.conv1_7x7_s2 = nn.Conv2d(
                3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
            self.conv1_7x7_s2_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.conv1_relu_7x7 = nn.ReLU(inplace=True)
            self.pool1_3x3_s2 = nn.MaxPool2d(
                kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

            # 1x1 channel reduction followed by a 3x3 convolution, then max pooling
            self.conv2_3x3_reduce = nn.Conv2d(
                64, 64, kernel_size=(1, 1), stride=(1, 1))
            self.conv2_3x3_reduce_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.conv2_relu_3x3_reduce = nn.ReLU(inplace=True)
            self.conv2_3x3 = nn.Conv2d(
                64, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.conv2_3x3_bn = nn.BatchNorm2d(
                192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.conv2_relu_3x3 = nn.ReLU(inplace=True)
            self.pool2_3x3_s2 = nn.MaxPool2d(
                kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

        def forward(self, x):
            out = self.conv1_7x7_s2(x)
            out = self.conv1_7x7_s2_bn(out)
            out = self.conv1_relu_7x7(out)
            out = self.pool1_3x3_s2(out)
            out = self.conv2_3x3_reduce(out)
            out = self.conv2_3x3_reduce_bn(out)
            out = self.conv2_relu_3x3_reduce(out)
            out = self.conv2_3x3(out)
            out = self.conv2_3x3_bn(out)
            out = self.conv2_relu_3x3(out)
            out = self.pool2_3x3_s2(out)
            return out
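The reduction from 224 × 224 to 28 × 28 in BasicConv can be checked by hand with the usual output-size formula for convolution and pooling layers. The sketch below applies it to the kernel, stride, and padding values of the layers above. The helper `out_size` is a hypothetical name, and it ignores the extra PyTorch rule that drops a final pooling window starting outside the input, which does not come into play for these sizes.

```python
import math


def out_size(size, kernel, stride=1, padding=0, ceil_mode=False):
    """Output length along one spatial axis of a convolution or pooling layer."""
    span = (size + 2 * padding - kernel) / stride
    steps = math.ceil(span) if ceil_mode else math.floor(span)
    return steps + 1


trace = [224]
trace.append(out_size(trace[-1], kernel=7, stride=2, padding=3))       # conv1_7x7_s2
trace.append(out_size(trace[-1], kernel=3, stride=2, ceil_mode=True))  # pool1_3x3_s2
trace.append(out_size(trace[-1], kernel=1))                            # conv2_3x3_reduce
trace.append(out_size(trace[-1], kernel=3, padding=1))                 # conv2_3x3
trace.append(out_size(trace[-1], kernel=3, stride=2, ceil_mode=True))  # pool2_3x3_s2

print(trace)  # [224, 112, 56, 56, 56, 28]
```

Only the stride-2 convolution and the two pooling layers change the spatial size; `ceil_mode=True` is what rounds 54.5 and 26.5 up so that the sizes land on 56 and 28.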

InceptionA

    class InceptionA(nn.Module):
        '''InceptionA'''

        def __init__(self):
            super(InceptionA, self).__init__()

            # Branch 1: 1x1 convolution
            self.inception_3a_1x1 = nn.Conv2d(
                192, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3a_1x1_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_1x1 = nn.ReLU(inplace=True)

            # Branch 2: 1x1 reduction followed by a 3x3 convolution
            self.inception_3a_3x3_reduce = nn.Conv2d(
                192, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3a_3x3_reduce_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_3x3_reduce = nn.ReLU(inplace=True)
            self.inception_3a_3x3 = nn.Conv2d(
                64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3a_3x3_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_3x3 = nn.ReLU(inplace=True)

            # Branch 3: 1x1 reduction followed by two 3x3 convolutions
            self.inception_3a_double_3x3_reduce = nn.Conv2d(
                192, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3a_double_3x3_reduce_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_double_3x3_reduce = nn.ReLU(inplace=True)
            self.inception_3a_double_3x3_1 = nn.Conv2d(
                64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3a_double_3x3_1_bn = nn.BatchNorm2d(
                96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_double_3x3_1 = nn.ReLU(inplace=True)
            self.inception_3a_double_3x3_2 = nn.Conv2d(
                96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3a_double_3x3_2_bn = nn.BatchNorm2d(
                96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_double_3x3_2 = nn.ReLU(inplace=True)

            # Branch 4: average pooling followed by a 1x1 projection
            self.inception_3a_pool = nn.AvgPool2d(
                kernel_size=3, stride=1, padding=1)
            self.inception_3a_pool_proj = nn.Conv2d(
                192, 32, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3a_pool_proj_bn = nn.BatchNorm2d(
                32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3a_relu_pool_proj = nn.ReLU(inplace=True)

        def forward(self, x):
            out1 = self.inception_3a_1x1(x)
            out1 = self.inception_3a_1x1_bn(out1)
            out1 = self.inception_3a_relu_1x1(out1)

            out2 = self.inception_3a_3x3_reduce(x)
            out2 = self.inception_3a_3x3_reduce_bn(out2)
            out2 = self.inception_3a_relu_3x3_reduce(out2)
            out2 = self.inception_3a_3x3(out2)
            out2 = self.inception_3a_3x3_bn(out2)
            out2 = self.inception_3a_relu_3x3(out2)

            out3 = self.inception_3a_double_3x3_reduce(x)
            out3 = self.inception_3a_double_3x3_reduce_bn(out3)
            out3 = self.inception_3a_relu_double_3x3_reduce(out3)
            out3 = self.inception_3a_double_3x3_1(out3)
            out3 = self.inception_3a_double_3x3_1_bn(out3)
            out3 = self.inception_3a_relu_double_3x3_1(out3)
            out3 = self.inception_3a_double_3x3_2(out3)
            out3 = self.inception_3a_double_3x3_2_bn(out3)
            out3 = self.inception_3a_relu_double_3x3_2(out3)

            out4 = self.inception_3a_pool(x)
            out4 = self.inception_3a_pool_proj(out4)
            out4 = self.inception_3a_pool_proj_bn(out4)
            out4 = self.inception_3a_relu_pool_proj(out4)

            # Concatenate the four branches along the channel dimension
            outputs = [out1, out2, out3, out4]
            return torch.cat(outputs, 1)

InceptionB

    class InceptionB(nn.Module):
        '''InceptionB'''

        def __init__(self):
            super(InceptionB, self).__init__()

            # Branch 1: 1x1 convolution
            self.inception_3b_1x1 = nn.Conv2d(
                256, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3b_1x1_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_1x1 = nn.ReLU(inplace=True)

            # Branch 2: 1x1 reduction followed by a 3x3 convolution
            self.inception_3b_3x3_reduce = nn.Conv2d(
                256, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3b_3x3_reduce_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_3x3_reduce = nn.ReLU(inplace=True)
            self.inception_3b_3x3 = nn.Conv2d(
                64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3b_3x3_bn = nn.BatchNorm2d(
                96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_3x3 = nn.ReLU(inplace=True)

            # Branch 3: 1x1 reduction followed by two 3x3 convolutions
            self.inception_3b_double_3x3_reduce = nn.Conv2d(
                256, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3b_double_3x3_reduce_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_double_3x3_reduce = nn.ReLU(inplace=True)
            self.inception_3b_double_3x3_1 = nn.Conv2d(
                64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3b_double_3x3_1_bn = nn.BatchNorm2d(
                96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_double_3x3_1 = nn.ReLU(inplace=True)
            self.inception_3b_double_3x3_2 = nn.Conv2d(
                96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3b_double_3x3_2_bn = nn.BatchNorm2d(
                96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_double_3x3_2 = nn.ReLU(inplace=True)

            # Branch 4: average pooling followed by a 1x1 projection
            self.inception_3b_pool = nn.AvgPool2d(
                kernel_size=3, stride=1, padding=1)
            self.inception_3b_pool_proj = nn.Conv2d(
                256, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3b_pool_proj_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3b_relu_pool_proj = nn.ReLU(inplace=True)

        def forward(self, x):
            out1 = self.inception_3b_1x1(x)
            out1 = self.inception_3b_1x1_bn(out1)
            out1 = self.inception_3b_relu_1x1(out1)

            out2 = self.inception_3b_3x3_reduce(x)
            out2 = self.inception_3b_3x3_reduce_bn(out2)
            out2 = self.inception_3b_relu_3x3_reduce(out2)
            out2 = self.inception_3b_3x3(out2)
            out2 = self.inception_3b_3x3_bn(out2)
            out2 = self.inception_3b_relu_3x3(out2)

            out3 = self.inception_3b_double_3x3_reduce(x)
            out3 = self.inception_3b_double_3x3_reduce_bn(out3)
            out3 = self.inception_3b_relu_double_3x3_reduce(out3)
            out3 = self.inception_3b_double_3x3_1(out3)
            out3 = self.inception_3b_double_3x3_1_bn(out3)
            out3 = self.inception_3b_relu_double_3x3_1(out3)
            out3 = self.inception_3b_double_3x3_2(out3)
            out3 = self.inception_3b_double_3x3_2_bn(out3)
            out3 = self.inception_3b_relu_double_3x3_2(out3)

            out4 = self.inception_3b_pool(x)
            out4 = self.inception_3b_pool_proj(out4)
            out4 = self.inception_3b_pool_proj_bn(out4)
            out4 = self.inception_3b_relu_pool_proj(out4)

            # Concatenate the four branches along the channel dimension
            outputs = [out1, out2, out3, out4]
            return torch.cat(outputs, 1)

InceptionC

    class InceptionC(nn.Module):
        '''InceptionC'''

        def __init__(self):
            super(InceptionC, self).__init__()

            # Single branch: 1x1 reduction followed by a 3x3 convolution
            self.inception_3c_double_3x3_reduce = nn.Conv2d(
                320, 64, kernel_size=(1, 1), stride=(1, 1))
            self.inception_3c_double_3x3_reduce_bn = nn.BatchNorm2d(
                64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3c_relu_double_3x3_reduce = nn.ReLU(inplace=True)
            self.inception_3c_double_3x3_1 = nn.Conv2d(
                64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            self.inception_3c_double_3x3_1_bn = nn.BatchNorm2d(
                96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.inception_3c_relu_double_3x3_1 = nn.ReLU(inplace=True)

        def forward(self, x):
            out = self.inception_3c_double_3x3_reduce(x)
            out = self.inception_3c_double_3x3_reduce_bn(out)
            out = self.inception_3c_relu_double_3x3_reduce(out)
            out = self.inception_3c_double_3x3_1(out)
            out = self.inception_3c_double_3x3_1_bn(out)
            out = self.inception_3c_relu_double_3x3_1(out)
            return out
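The channel counts of the three Inception modules follow from the layer definitions above: InceptionA and InceptionB concatenate four parallel branches along the channel axis, so their output width is the sum of the branch widths, while InceptionC is a single branch. The following bookkeeping sketch (dictionary and variable names are illustrative) checks that each module's output matches the input width the next one expects.

```python
# Output channels of the last convolution in each parallel branch.
inception_a_branches = {"1x1": 64, "3x3": 64, "double_3x3": 96, "pool_proj": 32}
inception_b_branches = {"1x1": 64, "3x3": 96, "double_3x3": 96, "pool_proj": 64}

# in_channels of the first convolutions in each module, as written in the code.
expected_in = {"InceptionA": 192, "InceptionB": 256, "InceptionC": 320}

basic_conv_out = 192
a_out = sum(inception_a_branches.values())   # torch.cat along dim 1 adds channels
b_out = sum(inception_b_branches.values())
c_out = 96                                   # last Conv2d of InceptionC

print(a_out, b_out, c_out)  # 256 320 96

# Each stage must produce exactly what the next stage consumes.
assert basic_conv_out == expected_in["InceptionA"]
assert a_out == expected_in["InceptionB"]
assert b_out == expected_in["InceptionC"]
```

The spatial size stays at 28 × 28 throughout, because every convolution and pooling layer in these modules uses stride 1 with padding that preserves the size.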

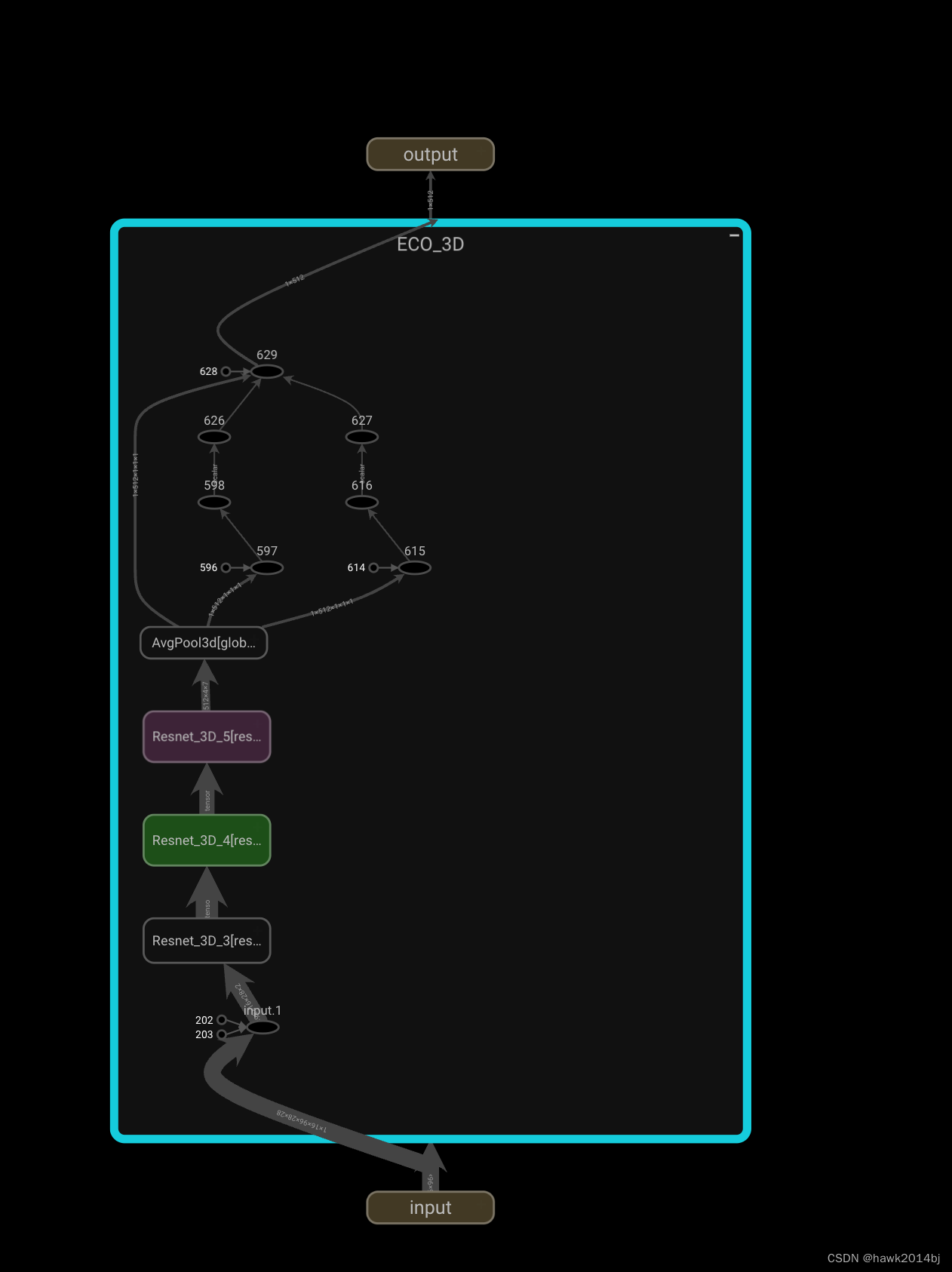

3D Net

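The output of the 2D Net (16 × 96 × 28 × 28) is passed to the 3D Net. The data is first rearranged so that the 16 frames form a time axis alongside height and width, giving 96 × 16 × 28 × 28 (channels, time, height, width). The 3D Net contains four submodules. The three residual submodules shown here, Resnet_3D_3, Resnet_3D_4, and Resnet_3D_5, transform the features in turn, producing outputs of 128 × 16 × 28 × 28, 256 × 8 × 14 × 14, and 512 × 4 × 7 × 7, which are finally reduced to a 512-dimensional feature vector. The code is as follows: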

Resnet_3D_3

    class Resnet_3D_3(nn.Module):
        '''Resnet_3D_3'''

        def __init__(self):
            super(Resnet_3D_3, self).__init__()

            self.res3a_2 = nn.Conv3d(
                96, 128, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res3a_bn = nn.BatchNorm3d(
                128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res3a_relu = nn.ReLU(inplace=True)

            self.res3b_1 = nn.Conv3d(
                128, 128, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res3b_1_bn = nn.BatchNorm3d(
                128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res3b_1_relu = nn.ReLU(inplace=True)
            self.res3b_2 = nn.Conv3d(
                128, 128, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res3b_bn = nn.BatchNorm3d(
                128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res3b_relu = nn.ReLU(inplace=True)

        def forward(self, x):
            # The output of the first convolution doubles as the shortcut
            residual = self.res3a_2(x)
            out = self.res3a_bn(residual)
            out = self.res3a_relu(out)

            out = self.res3b_1(out)
            out = self.res3b_1_bn(out)
            out = self.res3b_1_relu(out)
            out = self.res3b_2(out)

            out += residual

            out = self.res3b_bn(out)
            out = self.res3b_relu(out)
            return out

Resnet_3D_4

    class Resnet_3D_4(nn.Module):
        '''Resnet_3D_4'''

        def __init__(self):
            super(Resnet_3D_4, self).__init__()

            self.res4a_1 = nn.Conv3d(
                128, 256, kernel_size=(3, 3, 3), stride=(2, 2, 2), padding=(1, 1, 1))
            self.res4a_1_bn = nn.BatchNorm3d(
                256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res4a_1_relu = nn.ReLU(inplace=True)
            self.res4a_2 = nn.Conv3d(
                256, 256, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))

            # Stride-2 convolution on the shortcut so its shape matches the main path
            self.res4a_down = nn.Conv3d(
                128, 256, kernel_size=(3, 3, 3), stride=(2, 2, 2), padding=(1, 1, 1))

            self.res4a_bn = nn.BatchNorm3d(
                256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res4a_relu = nn.ReLU(inplace=True)

            self.res4b_1 = nn.Conv3d(
                256, 256, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res4b_1_bn = nn.BatchNorm3d(
                256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res4b_1_relu = nn.ReLU(inplace=True)
            self.res4b_2 = nn.Conv3d(
                256, 256, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res4b_bn = nn.BatchNorm3d(
                256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res4b_relu = nn.ReLU(inplace=True)

        def forward(self, x):
            residual = self.res4a_down(x)

            out = self.res4a_1(x)
            out = self.res4a_1_bn(out)
            out = self.res4a_1_relu(out)
            out = self.res4a_2(out)

            out += residual

            residual2 = out

            out = self.res4a_bn(out)
            out = self.res4a_relu(out)

            out = self.res4b_1(out)
            out = self.res4b_1_bn(out)
            out = self.res4b_1_relu(out)
            out = self.res4b_2(out)

            out += residual2

            out = self.res4b_bn(out)
            out = self.res4b_relu(out)
            return out

Resnet_3D_5

    class Resnet_3D_5(nn.Module):
        '''Resnet_3D_5'''

        def __init__(self):
            super(Resnet_3D_5, self).__init__()

            self.res5a_1 = nn.Conv3d(
                256, 512, kernel_size=(3, 3, 3), stride=(2, 2, 2), padding=(1, 1, 1))
            self.res5a_1_bn = nn.BatchNorm3d(
                512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res5a_1_relu = nn.ReLU(inplace=True)
            self.res5a_2 = nn.Conv3d(
                512, 512, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))

            # Stride-2 convolution on the shortcut so its shape matches the main path
            self.res5a_down = nn.Conv3d(
                256, 512, kernel_size=(3, 3, 3), stride=(2, 2, 2), padding=(1, 1, 1))

            self.res5a_bn = nn.BatchNorm3d(
                512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res5a_relu = nn.ReLU(inplace=True)

            self.res5b_1 = nn.Conv3d(
                512, 512, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res5b_1_bn = nn.BatchNorm3d(
                512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res5b_1_relu = nn.ReLU(inplace=True)
            self.res5b_2 = nn.Conv3d(
                512, 512, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(1, 1, 1))
            self.res5b_bn = nn.BatchNorm3d(
                512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            self.res5b_relu = nn.ReLU(inplace=True)

        def forward(self, x):
            residual = self.res5a_down(x)

            out = self.res5a_1(x)
            out = self.res5a_1_bn(out)
            out = self.res5a_1_relu(out)
            out = self.res5a_2(out)

            out += residual  # res5a

            residual2 = out

            out = self.res5a_bn(out)
            out = self.res5a_relu(out)

            out = self.res5b_1(out)
            out = self.res5b_1_bn(out)
            out = self.res5b_1_relu(out)
            out = self.res5b_2(out)

            out += residual2  # res5b

            out = self.res5b_bn(out)
            out = self.res5b_relu(out)
            return out
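The shapes quoted for the 3D Net can be traced with plain arithmetic. The sketch below first reorders the 2D Net output from (frames, channels, height, width) to (channels, time, height, width), then applies the convolution size formula once per stage, using the stride of each stage's first convolution (1, 2, 2). The helper `conv3d_out` and the `stages` table are hypothetical names for this illustration; the remaining convolutions in each stage use kernel 3, stride 1, padding 1 and leave the size unchanged.

```python
def conv3d_out(dims, kernel=3, stride=1, padding=1):
    """Output (time, height, width) of a 3D convolution applied to dims."""
    return tuple((d + 2 * padding - kernel) // stride + 1 for d in dims)


features_2d = (16, 96, 28, 28)                  # (frames, channels, height, width)
order = (1, 0, 2, 3)                            # swap the frame and channel axes
features_3d = tuple(features_2d[i] for i in order)
print(features_3d)                              # (96, 16, 28, 28)

# (stage name, output channels, stride of the first convolution in the stage)
stages = [("Resnet_3D_3", 128, 1), ("Resnet_3D_4", 256, 2), ("Resnet_3D_5", 512, 2)]

dims = features_3d[1:]                          # (time, height, width)
shapes = {}
for name, out_channels, stride in stages:
    dims = conv3d_out(dims, stride=stride)
    shapes[name] = (out_channels,) + dims

print(shapes["Resnet_3D_3"])  # (128, 16, 28, 28)
print(shapes["Resnet_3D_4"])  # (256, 8, 14, 14)
print(shapes["Resnet_3D_5"])  # (512, 4, 7, 7)
```

Because the stride-2 stages halve time, height, and width together, the shortcut path in Resnet_3D_4 and Resnet_3D_5 needs its own stride-2 convolution (`res4a_down`, `res5a_down`) before it can be added to the main path.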

If you are interested, you can download the model from GitHub and run inference: https://github.com/mzolfaghari/ECO-efficient-video-understanding