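Hardware requirements (model inference):

INT4: 1× RTX 3090, 24 GB VRAM, 32 GB RAM, 200 GB system disk

INT4: 1× RTX 4090 or 2× RTX 3090, 24 GB VRAM, 32 GB RAM, 200 GB system disk

Fine-tuning the model needs considerably more hardware and is generally not recommended on a personal machine.

To run the official web UI (streamlit run composite_demo/main.py) you need more than 40 GB of VRAM, which means at least two RTX 3090 cards. Otherwise the demo is essentially unusable.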
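Before going further it can help to check what the machine actually has. The following is a minimal sketch, assuming `nvidia-smi` is on the PATH; the helper names and the idea of summing VRAM across cards are illustrative assumptions, not part of CogVLM.

```python
import subprocess


def parse_gpu_memory(csv_text):
    """Parse 'name, memory.total' CSV rows from nvidia-smi into (name, MiB) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so GPU names containing commas stay intact.
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def query_gpus():
    """Return the GPUs reported by nvidia-smi, or [] if it is unavailable."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=name,memory.total",
        "--format=csv,noheader,nounits",
    ]
    try:
        out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        return []
    return parse_gpu_memory(out)


def enough_for_web_demo(gpus, required_gib=40):
    """Rough check: total VRAM across all cards against the 40 GB figure above."""
    total_mib = sum(mem for _, mem in gpus)
    return total_mib >= required_gib * 1024
```

Calling `enough_for_web_demo(query_gpus())` gives a quick yes/no answer. It only compares totals, so it does not say how the demo will distribute the models across cards.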

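Environment setup

Model preparation

Download the following models manually (you do not need all of them just to try things out)

Download from: https://hf-mirror.com/THUDM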

lmsys/vicuna-7b-v1.5

THUDM/cogagent-chat-hf

THUDM/cogvlm-chat-hf

THUDM/cogvlm-grounding-generalist-hf
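The downloads can also be scripted. This is a sketch only, assuming the `huggingface_hub` package is installed and that the mirror is selected through the `HF_ENDPOINT` environment variable; the target directory mirrors the paths used later in this post, and the helper names are made up for illustration.

```python
import os

# Assumption: route huggingface_hub through the mirror. This has to be set
# before huggingface_hub is imported, which is why the import below is lazy.
os.environ.setdefault("HF_ENDPOINT", "https://hf-mirror.com")

MODELS_ROOT = "/u01/workspace/cogvlm/models"  # same root as used later in this post

REPOS = [
    "lmsys/vicuna-7b-v1.5",
    "THUDM/cogagent-chat-hf",
    "THUDM/cogvlm-chat-hf",
    "THUDM/cogvlm-grounding-generalist-hf",
]


def local_dir_for(repo_id, root=MODELS_ROOT):
    """Map 'org/name' to '<root>/name'."""
    return f"{root.rstrip('/')}/{repo_id.split('/')[-1]}"


def download_all(repos=REPOS, root=MODELS_ROOT):
    """Download each repository snapshot into its own folder under root."""
    from huggingface_hub import snapshot_download

    for repo_id in repos:
        snapshot_download(repo_id=repo_id, local_dir=local_dir_for(repo_id, root))
```

Pass a shorter list to `download_all` if you only want to try one of the models; the full set is large.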

Download the model source code

git clone https://github.com/THUDM/CogVLM.git

cd CogVLM

Create the conda environment

conda create -n cogvlm python=3.11 -y

conda activate cogvlm

Switch pip to a mirror in mainland China

pip config set global.index-url http://mirrors.aliyun.com/pypi/simple

pip config set install.trusted-host mirrors.aliyun.com

Install dependencies

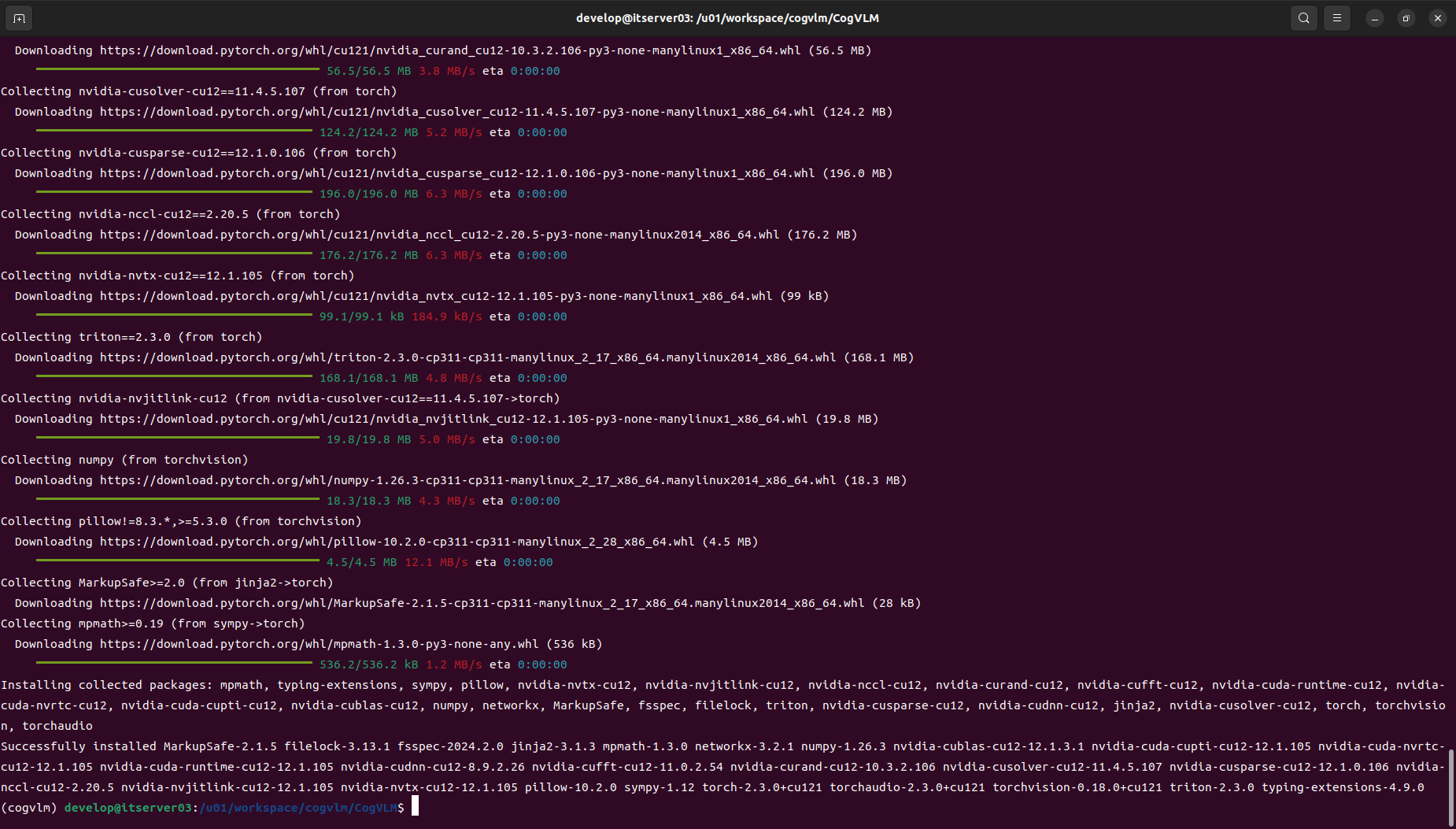

Install torch, torchvision, and torchaudio

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

Install cuda-runtime

(cogvlm) develop@itserver03:/u01/workspace/cogvlm/CogVLM$: conda install -y -c "nvidia/label/cuda-12.1.0" cuda-runtime

The following NEW packages will be INSTALLED:

cuda-cudart nvidia/label/cuda-12.1.0/linux-64::cuda-cudart-12.1.55-0

cuda-libraries nvidia/label/cuda-12.1.0/linux-64::cuda-libraries-12.1.0-0

cuda-nvrtc nvidia/label/cuda-12.1.0/linux-64::cuda-nvrtc-12.1.55-0

cuda-opencl nvidia/label/cuda-12.1.0/linux-64::cuda-opencl-12.1.56-0

cuda-runtime nvidia/label/cuda-12.1.0/linux-64::cuda-runtime-12.1.0-0

libcublas nvidia/label/cuda-12.1.0/linux-64::libcublas-12.1.0.26-0

libcufft nvidia/label/cuda-12.1.0/linux-64::libcufft-11.0.2.4-0

libcufile nvidia/label/cuda-12.1.0/linux-64::libcufile-1.6.0.25-0

libcurand nvidia/label/cuda-12.1.0/linux-64::libcurand-10.3.2.56-0

libcusolver nvidia/label/cuda-12.1.0/linux-64::libcusolver-11.4.4.55-0

libcusparse nvidia/label/cuda-12.1.0/linux-64::libcusparse-12.0.2.55-0

libnpp nvidia/label/cuda-12.1.0/linux-64::libnpp-12.0.2.50-0

libnvjitlink nvidia/label/cuda-12.1.0/linux-64::libnvjitlink-12.1.55-0

libnvjpeg nvidia/label/cuda-12.1.0/linux-64::libnvjpeg-12.1.0.39-0

Downloading and Extracting Packages:

libcublas-12.1.0.26 | 329.0 MB | | 0%

libcusparse-12.0.2.5 | 163.0 MB | | 0%

libnpp-12.0.2.50 | 139.8 MB | | 0%

libcufft-11.0.2.4 | 102.9 MB | | 0%

libcusolver-11.4.4.5 | 98.3 MB | | 0%

libcurand-10.3.2.56 | 51.7 MB | | 0%

cuda-nvrtc-12.1.55 | 19.7 MB | | 0%

libnvjitlink-12.1.55 | 16.9 MB | | 0%

libnvjpeg-12.1.0.39 | 2.5 MB | | 0%

libcufile-1.6.0.25 | 763 KB | | 0%

cuda-cudart-12.1.55 | 189 KB | | 0%

cuda-opencl-12.1.56 | 11 KB | | 0%

cuda-libraries-12.1. | 2 KB | | 0%

cuda-runtime-12.1.0 | 1 KB | | 0%

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

(cogvlm) develop@itserver03:/u01/workspace/cogvlm/CogVLM$

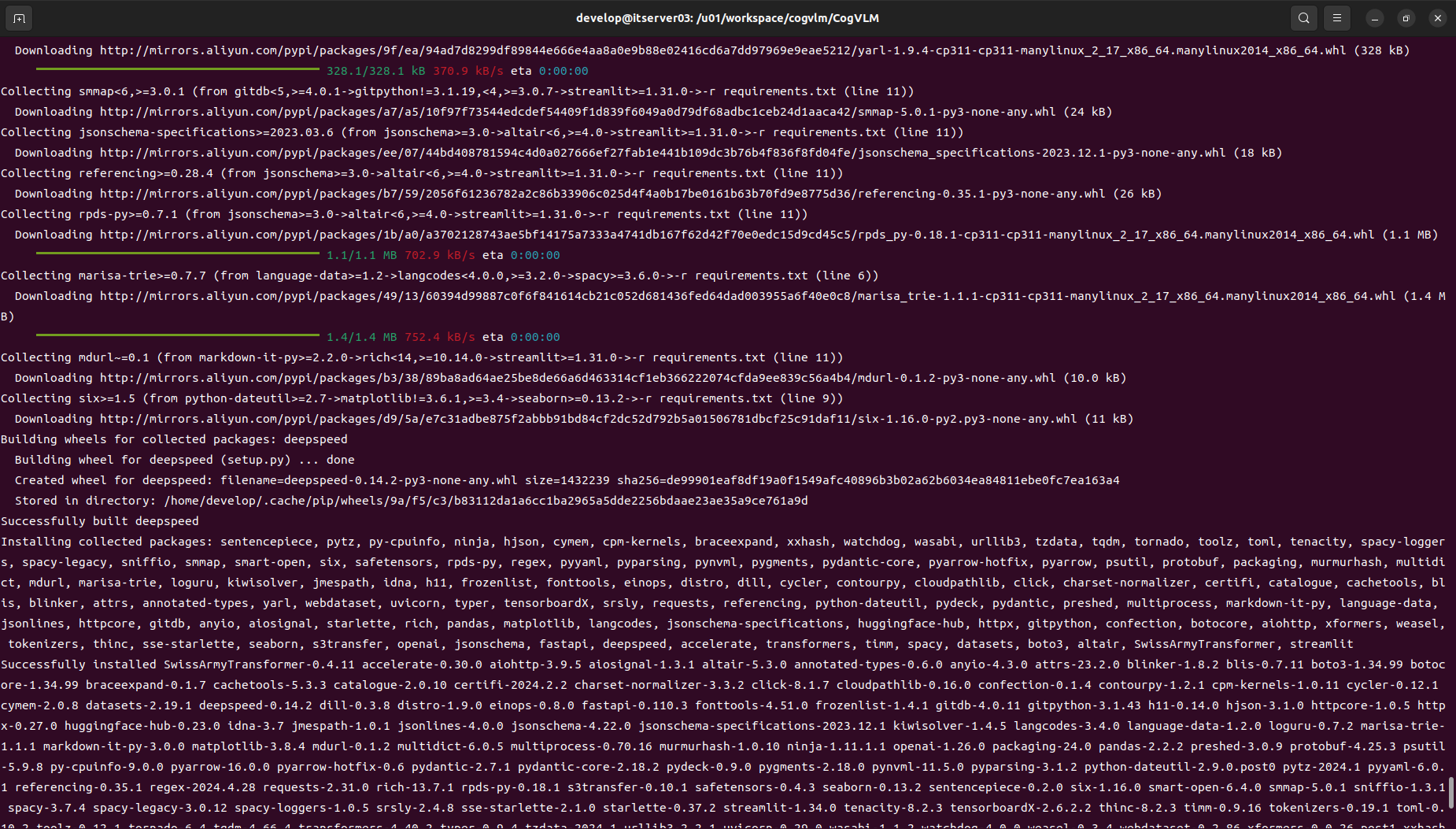
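After installing torch and the CUDA runtime, a quick sanity check can confirm that PyTorch sees the GPUs. This is a small sketch; the function name and the returned dictionary layout are illustrative assumptions.

```python
def cuda_report():
    """Summarize the installed torch build and the GPUs it can see."""
    try:
        import torch
    except ImportError:
        # torch is not installed in this environment.
        return {"torch": None, "cuda_available": False, "cuda_build": None, "devices": []}

    available = torch.cuda.is_available()
    devices = []
    if available:
        devices = [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
    return {
        "torch": torch.__version__,
        "cuda_available": available,
        "cuda_build": torch.version.cuda,  # CUDA version the wheel was built against
        "devices": devices,
    }


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If `cuda_available` is False even though the cards are present, the torch wheel and the driver or runtime versions are the first things to compare.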

Install the CogVLM dependencies

pip install -r requirements.txt

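After installation, starting the web UI may still fail because of package dependency problems. Do not use the latest huggingface_hub; pin it to huggingface_hub==0.21.4. bitsandbytes and chardet may also need to be installed separately once more. At least, I ran into errors with both.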

pip install bitsandbytes

pip install chardet

pip install huggingface_hub==0.21.4
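To confirm that the pins took effect, the installed versions can be read back with the standard library. A minimal sketch, where the `EXPECTED` table and function name are assumptions made for illustration:

```python
from importlib import metadata

# None means "any version is fine, it just has to be installed".
EXPECTED = {
    "huggingface_hub": "0.21.4",
    "bitsandbytes": None,
    "chardet": None,
}


def check_packages(expected=EXPECTED):
    """Return {name: (installed_version_or_None, ok)} for each expected package."""
    result = {}
    for name, pinned in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        ok = installed is not None and (pinned is None or installed == pinned)
        result[name] = (installed, ok)
    return result


if __name__ == "__main__":
    for name, (installed, ok) in check_packages().items():
        print(f"{name}: {installed} ({'ok' if ok else 'check this one'})")
```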

Install the spaCy language model (optional)

python -m spacy download en_core_web_sm

Running

Run the web UI

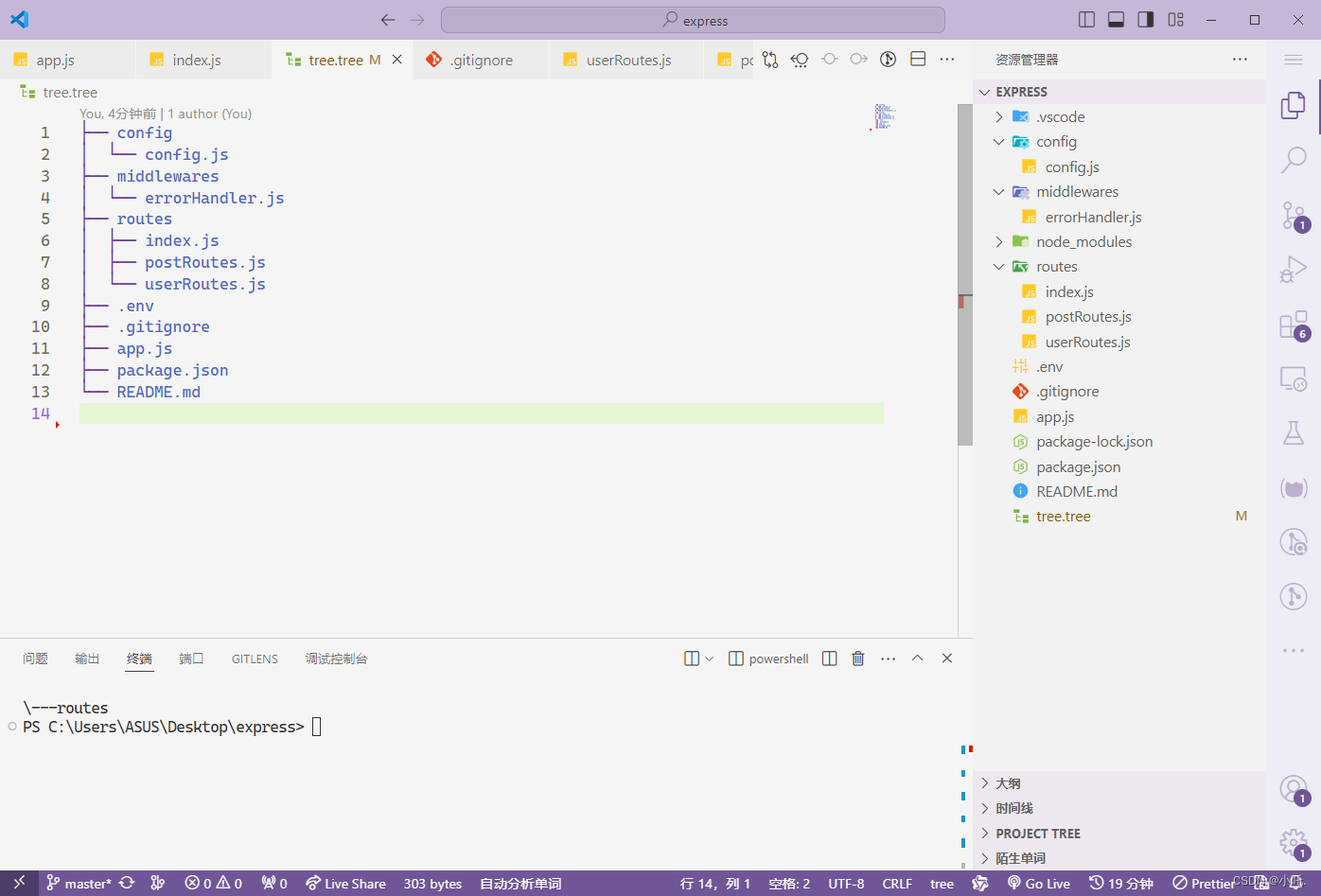

Before running, change the model paths: edit the default model paths in composite_demo/client.py

models_info = {
    'tokenizer': {
        #'path': os.environ.get('TOKENIZER_PATH', 'lmsys/vicuna-7b-v1.5'),
        'path': os.environ.get('TOKENIZER_PATH', '/u01/workspace/cogvlm/models/vicuna-7b-v1.5'),
    },
    'agent_chat': {
        #'path': os.environ.get('MODEL_PATH_AGENT_CHAT', 'THUDM/cogagent-chat-hf'),
        'path': os.environ.get('MODEL_PATH_AGENT_CHAT', '/u01/workspace/cogvlm/models/cogagent-chat-hf'),
        'device': ['cuda:0']
    },
    'vlm_chat': {
        #'path': os.environ.get('MODEL_PATH_VLM_CHAT', 'THUDM/cogvlm-chat-hf'),
        'path': os.environ.get('MODEL_PATH_VLM_CHAT', '/u01/workspace/cogvlm/models/cogvlm-chat-hf'),
        'device': ['cuda:0']
    },
    'vlm_grounding': {
        #'path': os.environ.get('MODEL_PATH_VLM_GROUNDING', 'THUDM/cogvlm-grounding-generalist-hf'),
        'path': os.environ.get('MODEL_PATH_VLM_GROUNDING', '/u01/workspace/cogvlm/models/cogvlm-grounding-generalist-hf'),
        'device': ['cuda:']
    }
}

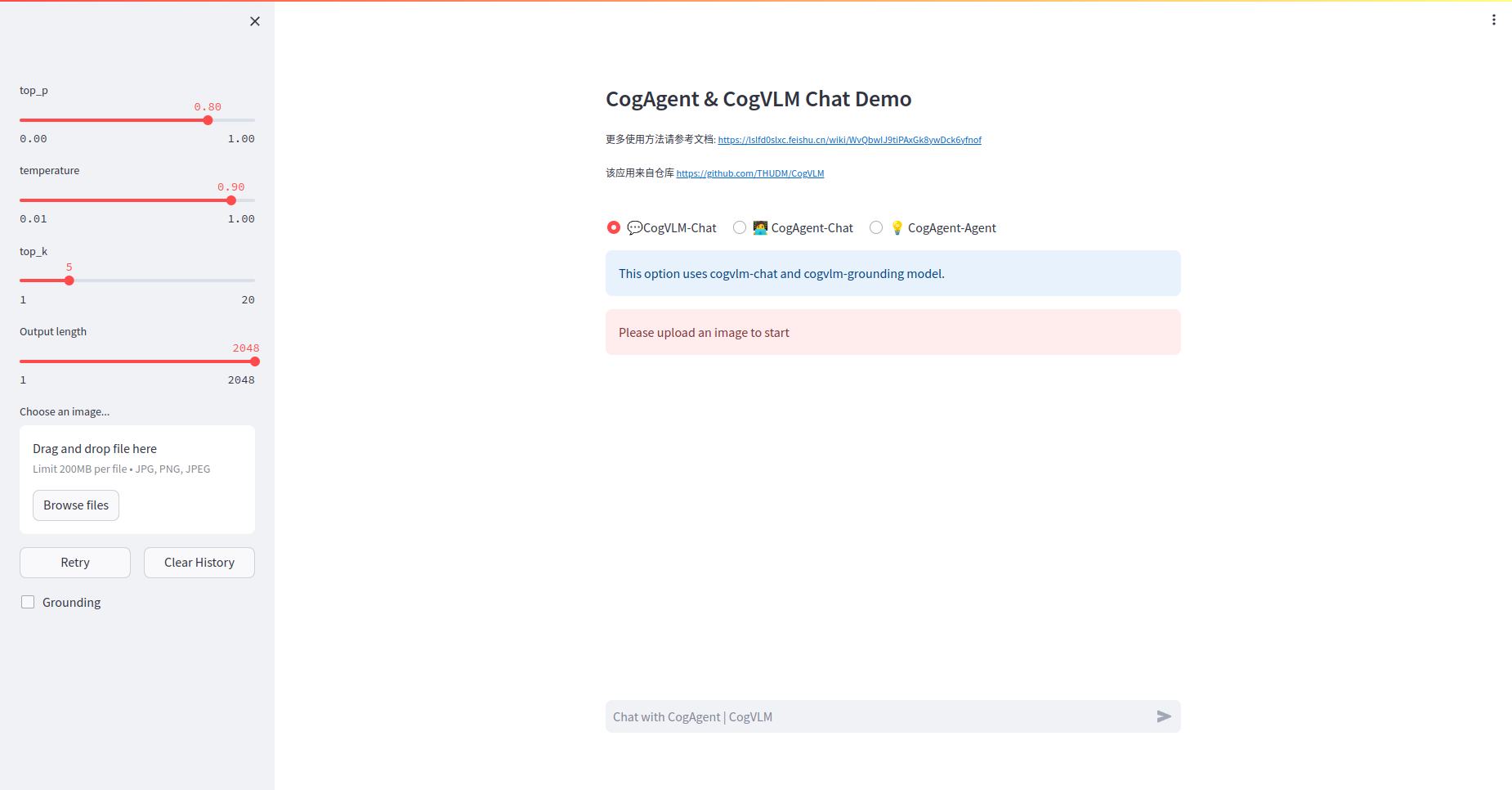
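Because each path in the snippet above is read through `os.environ.get`, it should also be possible to leave client.py untouched and supply the paths as environment variables. The sketch below assumes exactly that; the variable names come from the snippet, while `build_env` and `launch_web_demo` are hypothetical helpers.

```python
import os
import subprocess

# Environment variable -> model folder name, taken from the client.py snippet.
MODEL_ENV = {
    "TOKENIZER_PATH": "vicuna-7b-v1.5",
    "MODEL_PATH_AGENT_CHAT": "cogagent-chat-hf",
    "MODEL_PATH_VLM_CHAT": "cogvlm-chat-hf",
    "MODEL_PATH_VLM_GROUNDING": "cogvlm-grounding-generalist-hf",
}


def build_env(models_root, base_env=None):
    """Copy the environment and add the four model path variables."""
    env = dict(os.environ if base_env is None else base_env)
    root = models_root.rstrip("/")
    for var, folder in MODEL_ENV.items():
        env[var] = f"{root}/{folder}"
    return env


def launch_web_demo(models_root):
    """Start the Streamlit demo from the CogVLM checkout with the paths set."""
    return subprocess.run(
        ["streamlit", "run", "composite_demo/main.py"],
        env=build_env(models_root),
        check=True,
    )
```

For example, `launch_web_demo("/u01/workspace/cogvlm/models")` run from the CogVLM directory. The device assignments still live in client.py, so this only covers the paths.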

Run the launch command

streamlit run composite_demo/main.py

Once it starts successfully, you can open the UI

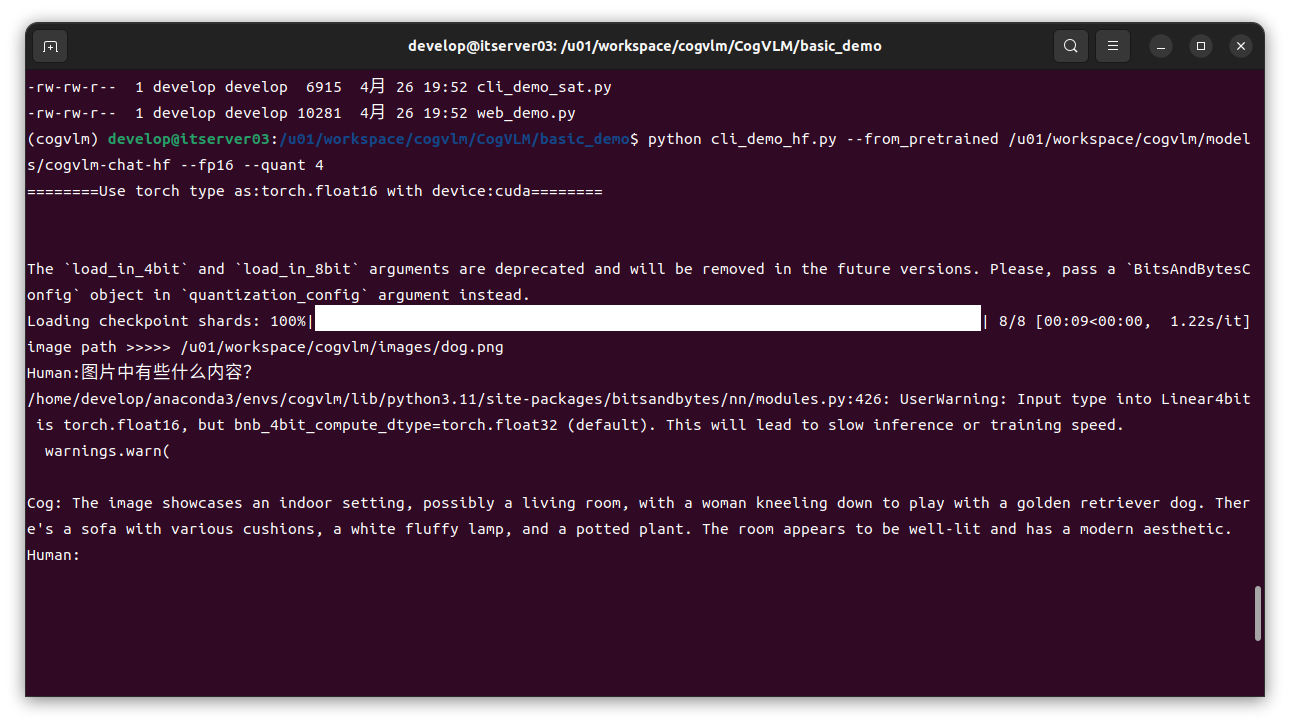

Interactive console mode

Run basic_demo/cli_demo_hf.py, replacing the model path with your own

python basic_demo/cli_demo_hf.py --from_pretrained /u01/workspace/cogvlm/models/cogvlm-chat-hf --fp16 --quant 4

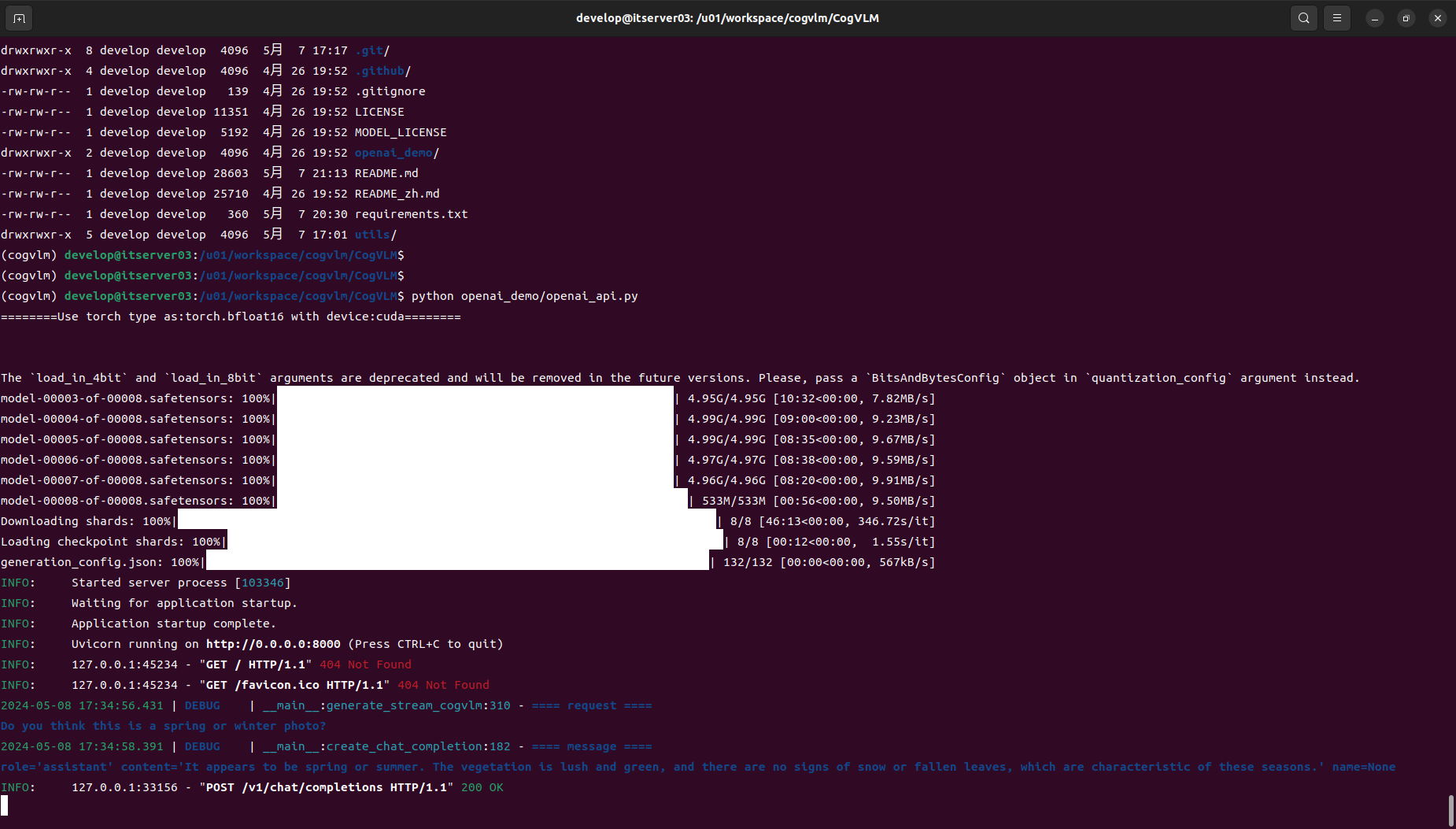
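The `--quant 4` flag matters on a 24 GB card, and a back-of-the-envelope calculation shows why. The sketch below assumes a parameter count of about 17 billion for the chat model (an assumption, not a figure from this post) and counts weights only, ignoring activations and other runtime overhead.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3


# Assumption: roughly 17 billion parameters.
PARAMS = 17e9

fp16_gib = weight_memory_gib(PARAMS, 16)
int4_gib = weight_memory_gib(PARAMS, 4)

if __name__ == "__main__":
    print(f"FP16 weights: {fp16_gib:.1f} GiB")
    print(f"INT4 weights: {int4_gib:.1f} GiB")
```

Under these assumptions the weights come to roughly 32 GiB in FP16 and roughly 8 GiB in INT4, so only the quantized variant fits on a single 24 GB card.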

OpenAI-style RESTful API

Start the server

python openai_demo/openai_api.py
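Loading the model takes a while, so a client script may want to wait until the server accepts connections. A minimal sketch using only the standard library; the host and port in the usage note (127.0.0.1:8000) are assumptions, so check what openai_api.py actually binds to.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Poll until a TCP connection to host:port succeeds or timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Not listening yet (or unreachable); wait and retry.
            time.sleep(interval)
    return False
```

For example, `wait_for_port("127.0.0.1", 8000, timeout=600)` returns True as soon as the port opens. An open port only shows that the process is listening, not that the model has finished loading.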

Client request

Edit the image path and the question you want to ask in openai_demo/openai_api_request.py, for example

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "text",
                "text": "What’s in this image?",
            },
            {
                "type": "image_url",
                "image_url": {
                    "url": img_url
                },
            },
        ],
    },
    {
        "role": "assistant",
        "content": "The image displays a wooden boardwalk extending through a vibrant green grassy wetland. The sky is partly cloudy with soft, wispy clouds, indicating nice weather. Vegetation is seen on either side of the boardwalk, and trees are present in the background, suggesting that this area might be a natural reserve or park designed for ecological preservation and outdoor recreation. The boardwalk allows visitors to explore the area without disturbing the natural habitat.",
    },
    {
        "role": "user",
        "content": "Do you think this is a spring or winter photo?"
    },
]

if __name__ == "__main__":

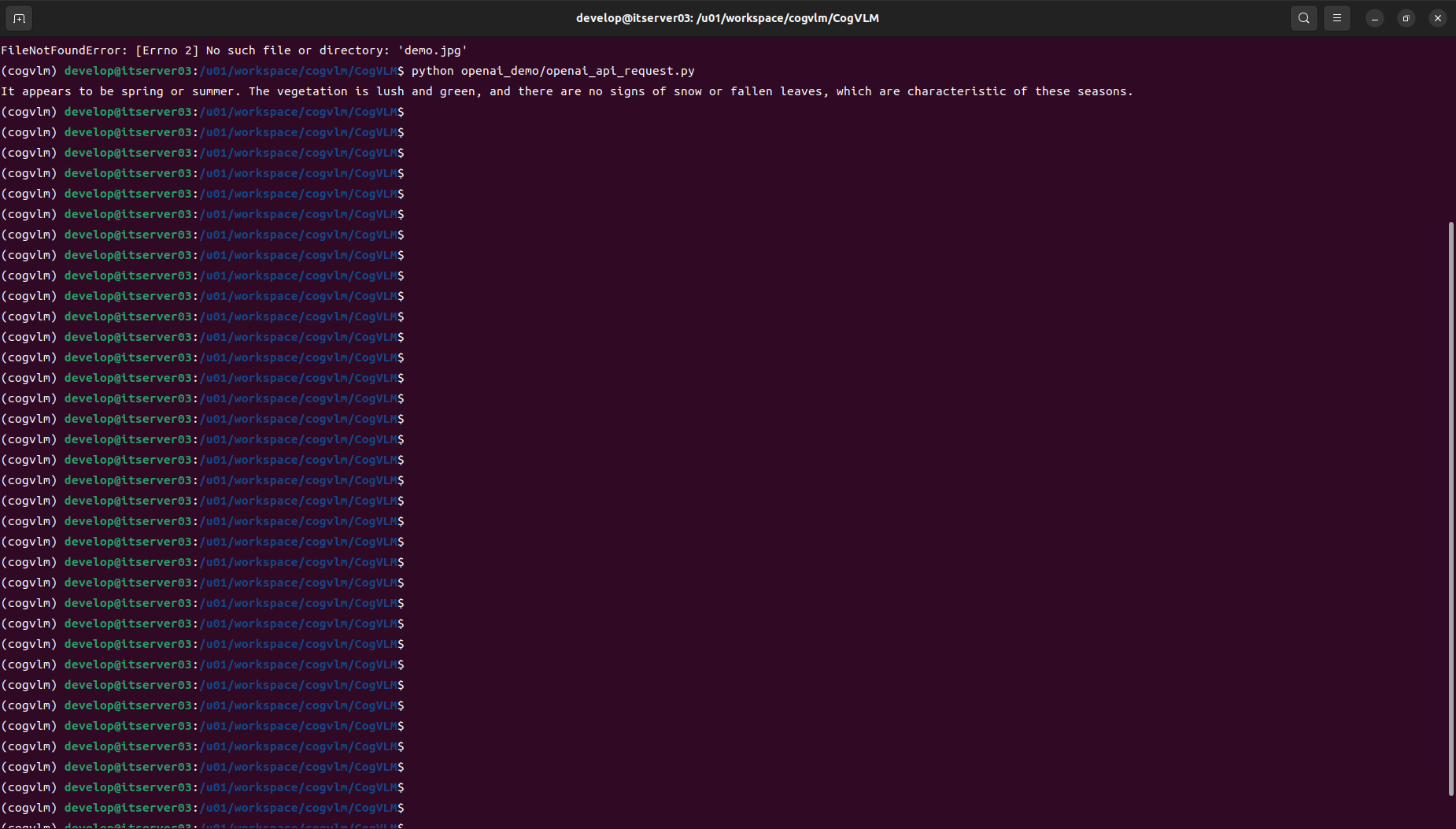

simple_image_chat(use_stream=False, img_path="/u01/workspace/cogvlm/CogVLM/openai_demo/demo.jpg")

Run the client request command

python openai_demo/openai_api_request.py
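For readers who want to call the API from their own code, the request can be sketched with the standard library alone. Several details here are assumptions rather than facts from this post: the base URL `http://127.0.0.1:8000`, the `/v1/chat/completions` path, the model name `cogvlm-chat-17b`, and sending the image as a base64 data URL. Compare them with openai_api_request.py before relying on them.

```python
import base64
import json
import urllib.request


def bytes_to_data_url(data, mime="image/jpeg"):
    """Encode raw image bytes as a base64 data URL."""
    return f"data:{mime};base64,{base64.b64encode(data).decode('utf-8')}"


def image_to_data_url(path, mime="image/jpeg"):
    """Read an image file and encode it as a base64 data URL."""
    with open(path, "rb") as f:
        return bytes_to_data_url(f.read(), mime)


def build_payload(question, img_url, model="cogvlm-chat-17b"):
    """Build a single-turn chat request with one text part and one image part."""
    return {
        "model": model,  # assumed model name
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": img_url}},
                ],
            }
        ],
        "stream": False,
    }


def post_chat(payload, base_url="http://127.0.0.1:8000"):
    """POST the payload to the (assumed) chat completions endpoint."""
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

A call would look like `post_chat(build_payload("What's in this image?", image_to_data_url("openai_demo/demo.jpg")))`, run from the CogVLM directory with the server already started.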
