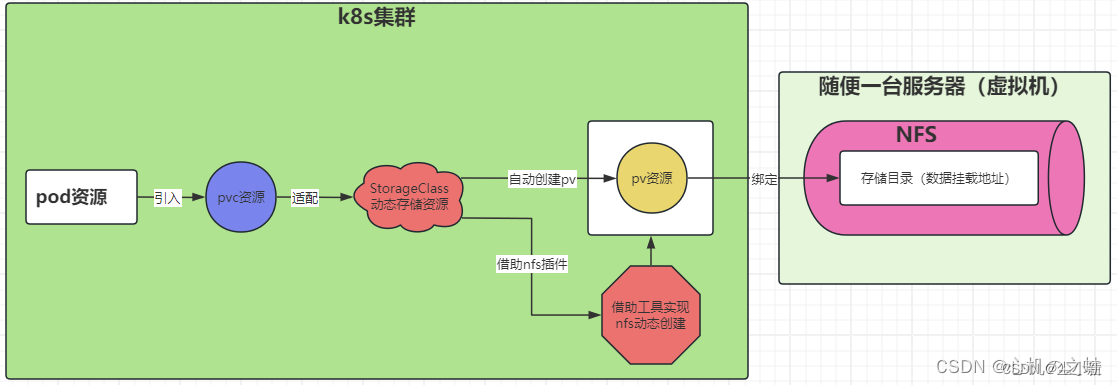

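StorageClass (dynamic storage) resource, abbreviated as sc.

A StorageClass provisions PVs dynamically: PVs are created automatically, so you no longer have to create them by hand.

However, NFS has no built-in support for StorageClass dynamic provisioning, so we need an external plugin (a provisioner) to make NFS work with an sc resource.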

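Overall plan:

1. Set up the NFS environment (the NFS storage server, plus the NFS tools on the cluster nodes)

2. Configure the NFS dynamic provisioning plugin

3. Create the StorageClass resource

4. Create a PVC that uses the StorageClass

5. Create the application pod that references the PVC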

1 Prepare the NFS environment

Install the NFS tools on all nodes

[root@master ~]# yum -y install nfs-utils

[root@node1 ~]# yum -y install nfs-utils

[root@node2 ~]# yum -y install nfs-utils

On the master node (which acts as the NFS server here), edit the NFS exports file

[root@master ~]# mkdir -p /data/kubernetes

[root@master ~]# cat /etc/exports

/data/kubernetes *(rw,no_root_squash)

# Reload the exports file
[root@master ~]# exportfs -r

# Check that the export is in effect
[root@master ~]# exportfs
/data/kubernetes
		<world>

[root@master ~]# systemctl enable --now nfs
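Before running exportfs -r, you can sanity-check the exports line with a small script. The sketch below is minimal and assumes the simple `path client(options)` format shown above. The helpers `parse_exports_line` and `check_export` are hypothetical. They ignore quoted paths, line continuations and `-` default options. The required options are the two this guide relies on: rw, and no_root_squash, since the provisioner creates directories on the share, typically as root.

```python
import re

# One "client(options)" token, e.g. "*(rw,no_root_squash)" or "192.168.190.0/24(ro)".
CLIENT_RE = re.compile(r"^([^()\s]+)\((.*)\)$")


def parse_exports_line(line):
    """Parse 'path client(opts) [client(opts) ...]' into (path, {client: [opts]}).

    Hypothetical helper: no quoted paths, line continuations or '-' default options.
    Returns None for blank or comment-only lines.
    """
    line = line.split("#", 1)[0].strip()  # drop trailing comments
    if not line:
        return None
    path, *tokens = line.split()
    clients = {}
    for token in tokens:
        m = CLIENT_RE.match(token)
        if m is None:
            raise ValueError(f"unsupported client spec: {token!r}")
        host, opts = m.groups()
        clients[host] = [o.strip() for o in opts.split(",") if o.strip()]
    return path, clients


def check_export(line, path, required=("rw", "no_root_squash")):
    """Return the required options missing for `path`, sorted (empty list means OK)."""
    parsed = parse_exports_line(line)
    if parsed is None or parsed[0] != path or not parsed[1]:
        return sorted(required)
    missing = set()
    for opts in parsed[1].values():  # every client entry must carry the options
        missing.update(r for r in required if r not in opts)
    return sorted(missing)


entry = "/data/kubernetes *(rw,no_root_squash)"
print(parse_exports_line(entry))  # ('/data/kubernetes', {'*': ['rw', 'no_root_squash']})
print(check_export(entry, "/data/kubernetes"))  # []
```

An entry such as `/data/kubernetes *(ro)` would report both options as missing.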

On the master node, create the directory that will hold the dynamically provisioned volumes

[root@master ~]# mkdir -p /data/kubernetes/storageclass

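2 Deploy and configure the NFS dynamic provisioning plugin

Note: the plugin package contains manifests for a sample pod, a sample PVC, the NFS plugin itself (deployment.yaml) and the StorageClass (class.yaml).

So the package covers everything we need. Strictly speaking, only deployment.yaml is the plugin. The other files are ordinary resources we would have to create anyway, and the plugin's author simply bundled those manifests together for convenience.

You can also create each of them yourself.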

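2.1 Modify the cluster's kube-apiserver so that it works with the plugin's image

The quay.io/external_storage/nfs-client-provisioner image still relies on the selfLink field, which Kubernetes stops populating by default starting with 1.20. The feature gate added below turns that removal off again. It only helps on versions where the gate can still be disabled (it is locked on from 1.24). Once you save the manifest, the kubelet recreates the kube-apiserver static pod automatically.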

[root@master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

...........

  containers:
  - command:
    - kube-apiserver
    - --service-node-port-range=3000-50000
    # Add the following line
    - --feature-gates=RemoveSelfLink=false
.............
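To confirm the flag actually ended up in the apiserver command, you can parse the feature gates out of it. The sketch below is minimal and assumes you already have the `command` list from the static pod manifest, for example via a YAML parser, which is not shown. The function `feature_gates` and the sample list are hypothetical. It treats a later `--feature-gates` flag as overriding an earlier one, which is a simplification.

```python
def feature_gates(command):
    """Collect the --feature-gates=Name=bool,... values from an apiserver command list.

    Later occurrences override earlier ones (a simplifying assumption of this sketch).
    """
    gates = {}
    for arg in command:
        if not arg.startswith("--feature-gates="):
            continue
        for pair in arg.split("=", 1)[1].split(","):
            name, _, value = pair.partition("=")
            if name.strip():
                gates[name.strip()] = value.strip().lower() == "true"
    return gates


command = [  # the relevant part of the manifest above, as a list of strings
    "kube-apiserver",
    "--service-node-port-range=3000-50000",
    "--feature-gates=RemoveSelfLink=false",
]
gates = feature_gates(command)
print(gates)  # {'RemoveSelfLink': False}
print("selfLink kept:", gates.get("RemoveSelfLink") is False)  # selfLink kept: True
```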

2.2 Edit the nfs-client-provisioner.yaml file

This creates the provisioner that the StorageClass will use

[root@master storageclass]# cat nfs-client-provisioner.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: dolphin-provisioner
            - name: NFS_SERVER
              value: 192.168.190.200
            - name: NFS_PATH
              value: /data/kubernetes/storageclass
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.190.200
            path: /data/kubernetes/storageclass

[root@master storageclass]# kubectl apply -f nfs-client-provisioner.yaml

deployment.apps/nfs-client-provisioner created

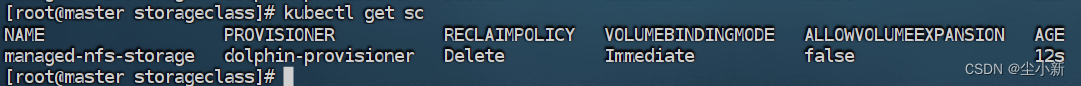
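The NFS server and path appear twice in this Deployment, once in env and once in the nfs volume, so it is easy to change one and forget the other. The sketch below is minimal and checks that the two agree. It takes the manifest as the dict a YAML parser would produce, trimmed to the fields it reads. The function `check_provisioner` is hypothetical. So is the `EXPECTED_MOUNT` constant, which assumes the provisioner writes under /persistentvolumes inside the container, matching the mountPath above.

```python
import copy

# Assumption: the provisioner writes under this path inside the container,
# matching the mountPath used in the manifest above.
EXPECTED_MOUNT = "/persistentvolumes"


def check_provisioner(deployment):
    """Return a list of problems in an nfs-client-provisioner Deployment (as a dict)."""
    pod_spec = deployment["spec"]["template"]["spec"]
    container = pod_spec["containers"][0]
    env = {e["name"]: e.get("value") for e in container.get("env", [])}
    mounts = {m["name"]: m["mountPath"] for m in container.get("volumeMounts", [])}
    problems = []
    if not env.get("PROVISIONER_NAME"):
        problems.append("PROVISIONER_NAME is not set")
    nfs_volumes = [v for v in pod_spec.get("volumes", []) if "nfs" in v]
    if not nfs_volumes:
        problems.append("no nfs volume defined")
    for vol in nfs_volumes:
        nfs = vol["nfs"]
        if mounts.get(vol["name"]) != EXPECTED_MOUNT:
            problems.append(f"volume {vol['name']} is not mounted at {EXPECTED_MOUNT}")
        if nfs.get("server") != env.get("NFS_SERVER"):
            problems.append("NFS_SERVER does not match volume nfs.server")
        if nfs.get("path") != env.get("NFS_PATH"):
            problems.append("NFS_PATH does not match volume nfs.path")
    return problems


# The Deployment above as a dict (only the fields this check reads).
DEPLOYMENT = {
    "spec": {"template": {"spec": {
        "containers": [{
            "name": "nfs-client-provisioner",
            "volumeMounts": [{"name": "nfs-client-root", "mountPath": "/persistentvolumes"}],
            "env": [
                {"name": "PROVISIONER_NAME", "value": "dolphin-provisioner"},
                {"name": "NFS_SERVER", "value": "192.168.190.200"},
                {"name": "NFS_PATH", "value": "/data/kubernetes/storageclass"},
            ],
        }],
        "volumes": [{
            "name": "nfs-client-root",
            "nfs": {"server": "192.168.190.200", "path": "/data/kubernetes/storageclass"},
        }],
    }}}
}

print(check_provisioner(DEPLOYMENT))  # []

broken = copy.deepcopy(DEPLOYMENT)
broken["spec"]["template"]["spec"]["volumes"][0]["nfs"]["path"] = "/data/kubernetes"
print(check_provisioner(broken))  # ['NFS_PATH does not match volume nfs.path']
```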

2.3 Create managed-nfs-storage.yaml

[root@master storageclass]# cat managed-nfs-storage.yaml

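apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
# Must equal the value of the PROVISIONER_NAME env var in the plugin's Deployment (nfs-client-provisioner.yaml here)
provisioner: dolphin-provisioner
parameters:
  # What happens to a PV's data when the PV is deleted:
  # "true" keeps the data by renaming the directory with an "archived-" prefix,
  # "false" removes the directory.
  # Note: this only takes effect when the reclaim policy is Delete;
  # with Retain the PV and its directory are left as they are.
  archiveOnDelete: "true"
# PV reclaim policy (defaults to Delete if not set); a top-level field, not a parameter
reclaimPolicy: Retain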

[root@master storageclass]# kubectl apply -f managed-nfs-storage.yaml

storageclass.storage.k8s.io/managed-nfs-storage created

Check the sc resource with kubectl get sc.
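The interaction between reclaimPolicy and archiveOnDelete described in the comments above can be written down as a small decision function. The sketch below is minimal and models what happens to a provisioned directory once its PVC is deleted. It is not the provisioner's code. `on_pvc_deleted` and `parse_bool` are hypothetical, and the sketch assumes a missing archiveOnDelete behaves like "true". Verify that against the provisioner version you run.

```python
def parse_bool(text):
    """Rough stand-in for Go's strconv.ParseBool (the provisioner is written in Go)."""
    value = text.lower()
    if value in ("1", "t", "true"):
        return True
    if value in ("0", "f", "false"):
        return False
    raise ValueError(f"invalid boolean: {text!r}")


def on_pvc_deleted(reclaim_policy, parameters, dir_name):
    """Model what happens to a provisioned directory after its PVC is deleted.

    Returns (outcome, directory name left on the NFS share or None).
    """
    if reclaim_policy == "Retain":
        # The PV is only released; the provisioner is never asked to delete it,
        # so archiveOnDelete is not consulted.
        return ("kept", dir_name)
    if reclaim_policy != "Delete":
        raise ValueError(f"unexpected reclaimPolicy: {reclaim_policy!r}")
    # Assumption of this sketch: a missing archiveOnDelete behaves like "true".
    if parse_bool(parameters.get("archiveOnDelete", "true")):
        return ("archived", "archived-" + dir_name)
    return ("deleted", None)


params = {"archiveOnDelete": "true"}
print(on_pvc_deleted("Retain", params, "default-test-pvc-claim-pvc-123"))
# ('kept', 'default-test-pvc-claim-pvc-123')
print(on_pvc_deleted("Delete", params, "default-test-pvc-claim-pvc-123"))
# ('archived', 'archived-default-test-pvc-claim-pvc-123')
```

With the StorageClass above (reclaimPolicy: Retain), the first case applies, so archiveOnDelete has no visible effect.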

2.4 Edit test-pvc-claim.yaml to create a test PVC

This checks whether the StorageClass works properly

[root@master storageclass]# cat test-pvc-claim.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-pvc-claim
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
  storageClassName: managed-nfs-storage

[root@master storageclass]# kubectl apply -f test-pvc-claim.yaml

persistentvolumeclaim/test-pvc-claim created

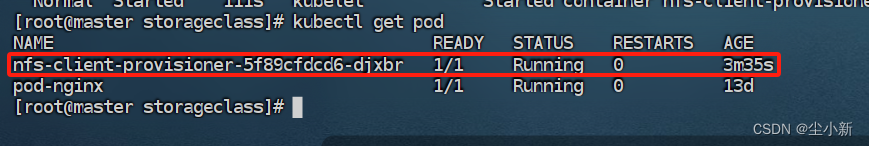

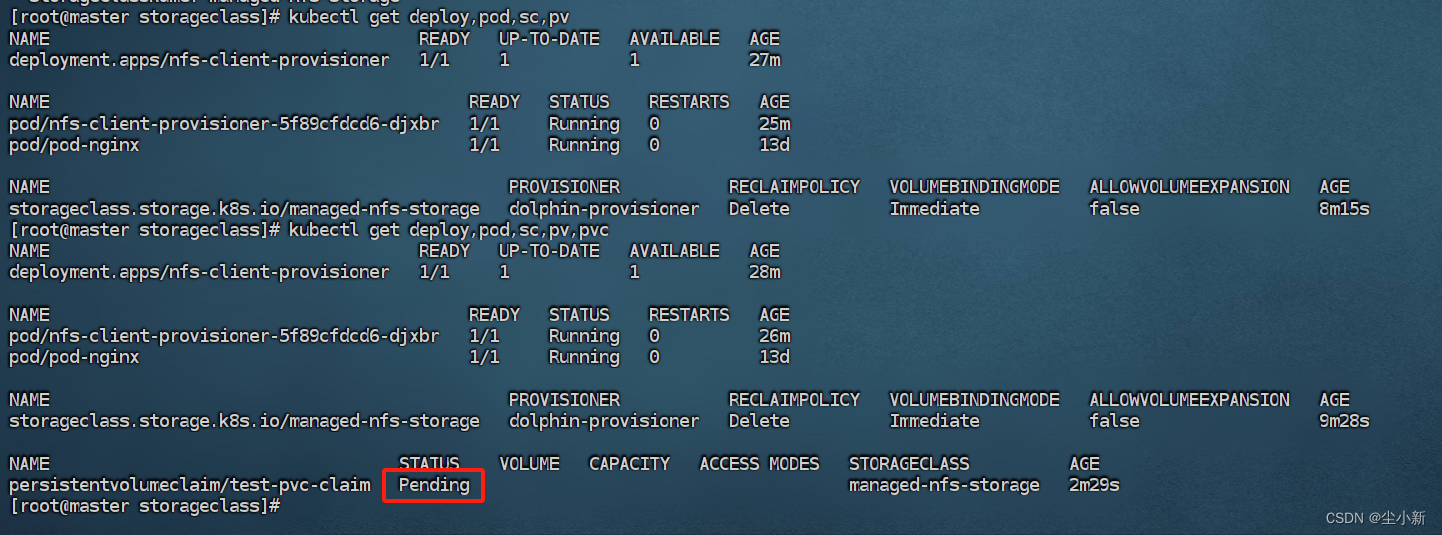
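The size in `storage: 1Mi` is a Kubernetes quantity. Binary suffixes (Ki, Mi, Gi, ...) are powers of 1024, while decimal suffixes (k, M, G, ...) are powers of 1000. So 1Mi is 1,048,576 bytes, not 1,000,000. The sketch below is a simplified converter that only handles these storage suffixes, not the full quantity grammar (for example, no milli "m"). `quantity_to_bytes` is a hypothetical name.

```python
from decimal import Decimal

BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def quantity_to_bytes(q):
    """Convert a storage quantity such as '1Mi' or '500M' to bytes (simplified)."""
    # Check binary suffixes first so that "Mi" is not mistaken for "M".
    for table in (BINARY, DECIMAL):
        for suffix, factor in table.items():
            if q.endswith(suffix):
                return int(Decimal(q[: -len(suffix)]) * factor)
    return int(Decimal(q))  # plain number of bytes


print(quantity_to_bytes("1Mi"))   # 1048576
print(quantity_to_bytes("500M"))  # 500000000
```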

2.5 Check the deploy, pod, sc, pv and pvc information

The PVC stays in the Pending state

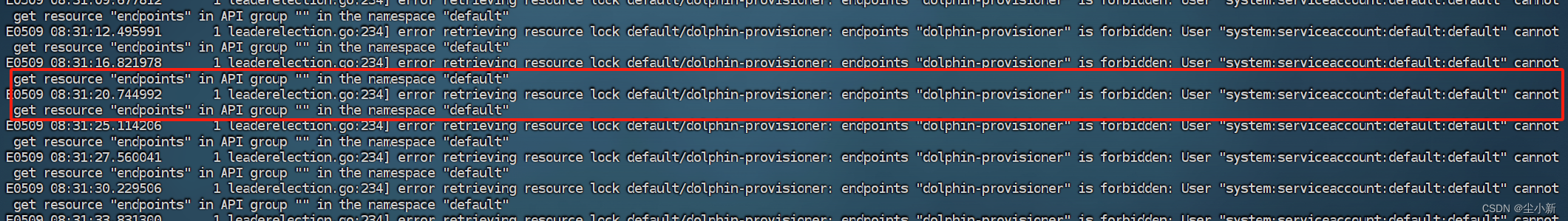
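A dynamically provisioned PVC can also stay Pending if the names do not line up. The PVC's storageClassName has to name an existing StorageClass, and that class's provisioner has to equal the PROVISIONER_NAME of a running provisioner. The sketch below is minimal and runs through these checks in order. `why_pending` and its input shapes are hypothetical, and in a real cluster you would fill them from kubectl output. In this walkthrough the names match, and the logs turn out to show a permission problem.

```python
def why_pending(pvc, storage_classes, provisioner_names):
    """Return a hint for why a dynamically provisioned PVC might stay Pending.

    pvc: dict with spec.storageClassName
    storage_classes: {StorageClass name: provisioner field}
    provisioner_names: PROVISIONER_NAME values of the running provisioners
    """
    sc_name = pvc["spec"].get("storageClassName")
    if not sc_name:
        return "PVC sets no storageClassName"
    if sc_name not in storage_classes:
        return f"StorageClass {sc_name!r} does not exist"
    provisioner = storage_classes[sc_name]
    if provisioner not in provisioner_names:
        return f"no running provisioner named {provisioner!r}"
    return "names match; check the provisioner logs (e.g. for RBAC errors)"


pvc = {"spec": {"storageClassName": "managed-nfs-storage"}}
print(why_pending(pvc, {"managed-nfs-storage": "dolphin-provisioner"}, {"dolphin-provisioner"}))
# names match; check the provisioner logs (e.g. for RBAC errors)
```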

Check the provisioner's logs; they show a permission error:

[root@master storageclass]# kubectl logs -f nfs-client-provisioner-5f89cfdcd6-djxbr

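The logs show that the default ServiceAccount in the default namespace has no permission to access the dolphin-provisioner object. The provisioner pod runs as that account because its Deployment sets no serviceAccountName.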

2.6 Edit nfs-provisioner-runner.yaml to create the nfs-provisioner-runner ClusterRole and bind it to that ServiceAccount

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "update"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: default
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

[root@master storageclass]# kubectl apply -f nfs-provisioner-runner.yaml

clusterrole.rbac.authorization.k8s.io/nfs-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-provisioner created

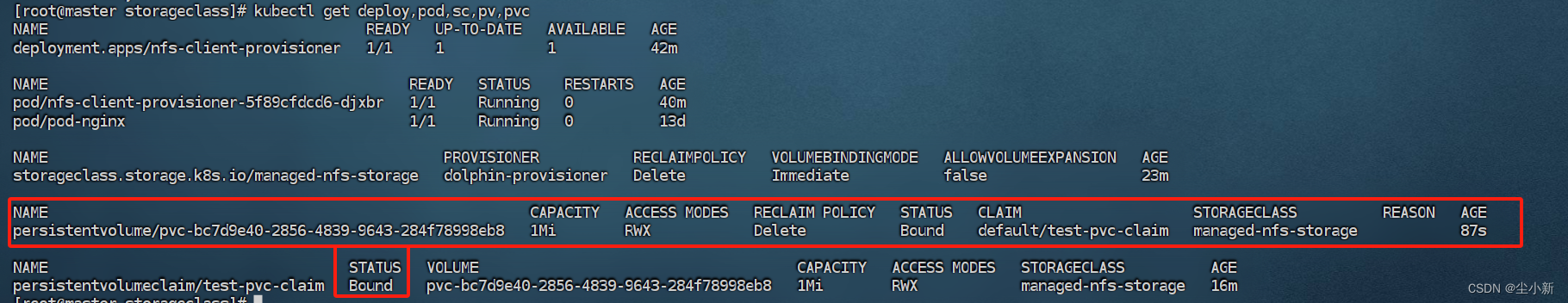
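To see exactly what this ClusterRole grants, here is a simplified model of RBAC rule matching. A request is allowed if any rule matches its API group, resource and verb, with `*` as a wildcard. If the rule lists resourceNames, the object name must also be one of them. The sketch ignores subresources, nonResourceURLs and role aggregation. `rule_allows` and `allowed` are hypothetical names, and `RULES` is the rule list above rewritten as Python dicts.

```python
def rule_allows(rule, api_group, resource, verb, name=None):
    """Check one RBAC rule (as a dict) against a request (simplified model)."""
    def matches(values, item):
        return "*" in values or item in values

    if not matches(rule.get("apiGroups", []), api_group):
        return False
    if not matches(rule.get("resources", []), resource):
        return False
    if not matches(rule.get("verbs", []), verb):
        return False
    names = rule.get("resourceNames")
    if names:  # resourceNames restricts the rule to the listed objects
        return name in names
    return True


def allowed(rules, api_group, resource, verb, name=None):
    # RBAC is purely additive: any matching rule grants the request.
    return any(rule_allows(r, api_group, resource, verb, name) for r in rules)


RULES = [
    {"apiGroups": [""], "resources": ["persistentvolumes"], "verbs": ["get", "list", "watch", "create", "update"]},
    {"apiGroups": [""], "resources": ["persistentvolumeclaims"], "verbs": ["get", "list", "watch", "update"]},
    {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["events"], "verbs": ["watch", "create", "update", "patch"]},
    {"apiGroups": [""], "resources": ["services"], "verbs": ["get"]},
    {"apiGroups": ["extensions"], "resources": ["podsecuritypolicies"],
     "resourceNames": ["nfs-provisioner"], "verbs": ["use"]},
    {"apiGroups": [""], "resources": ["endpoints"], "verbs": ["get", "list", "watch", "create", "update", "patch"]},
]

print(allowed(RULES, "", "persistentvolumes", "create"))  # True
print(allowed(RULES, "", "persistentvolumes", "delete"))  # False
```

The second check shows that this role does not grant delete on persistentvolumes. That is harmless with reclaimPolicy: Retain as used here. If you switch the StorageClass to Delete, the provisioner will likely need that verb as well.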

2.7 Check the deploy, pod, sc, pv and pvc information again

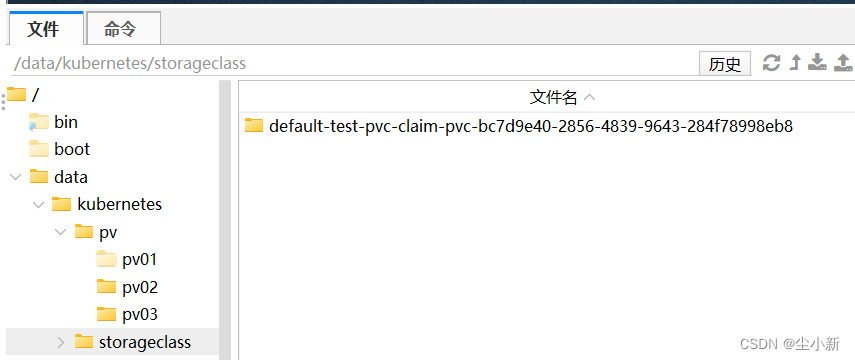

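The PVC is now Bound. A PV named pvc-bc7d9e40-2856-4839-9643-284f78998eb8 was created automatically and bound to it.

Once the PVC is created, the provisioner behind the sc also creates a directory for it under the NFS export (/data/kubernetes/storageclass) for the PVC to use.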

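The names seen here follow a visible pattern. The PV is called `pvc-<id>`, and the directory on the share is `<namespace>-<pvcName>-<pvName>`, which is the path used in the next step. The sketch below is minimal and rebuilds that path. The helpers are hypothetical. It assumes the `pvc-<PVC UID>` naming convention for dynamically provisioned PVs, so the UID below is inferred from the PV name rather than read from the cluster.

```python
import posixpath

NFS_PATH = "/data/kubernetes/storageclass"  # NFS_PATH from the provisioner Deployment


def pv_name(claim_uid):
    # Assumed convention: dynamically provisioned PVs are named "pvc-<PVC UID>".
    return "pvc-" + claim_uid


def provisioned_dir(namespace, pvc_name, pv):
    # Directory pattern observed on the share: <namespace>-<pvcName>-<pvName>
    return "-".join([namespace, pvc_name, pv])


def server_path(namespace, pvc_name, claim_uid, export_root=NFS_PATH):
    """Full path of the PVC's directory on the NFS server."""
    return posixpath.join(export_root, provisioned_dir(namespace, pvc_name, pv_name(claim_uid)))


print(server_path("default", "test-pvc-claim", "bc7d9e40-2856-4839-9643-284f78998eb8"))
# /data/kubernetes/storageclass/default-test-pvc-claim-pvc-bc7d9e40-2856-4839-9643-284f78998eb8
```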

2.8 Edit test-pvc-pod.yaml to create a pod that uses the PVC, as a test

[root@master storageclass]# cat test-pvc-pod.yaml

kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: busybox
      args:
        - /bin/sh
        - -c
        - sleep 3000
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-pvc-claim

[root@master storageclass]# kubectl apply -f test-pvc-pod.yaml

pod/test-pod created

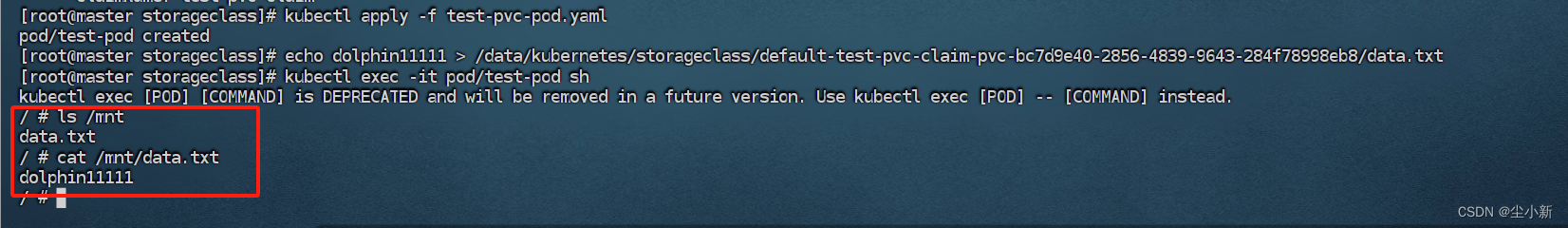

Write a file into the provisioned directory on the NFS server (the master node)

[root@master storageclass]# echo dolphin11111 > /data/kubernetes/storageclass/default-test-pvc-claim-pvc-bc7d9e40-2856-4839-9643-284f78998eb8/data.txt

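Then check from inside the pod created above that the file is visible. For example, kubectl exec test-pod -- cat /mnt/data.txt should print dolphin11111.

Done.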