Contents

- 1.CrushMap core concepts

- 2.Analysis of the cluster's default CrushMap rule

- 2.1.Reading the CrushMap listing

- 2.2.In-depth analysis of the default CrushMap rule

- 2.3.The complete CrushMap definition

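1.CrushMap core concepts

Official CrushMap documentation: https://docs.ceph.com/en/pacific/rados/operations/crush-map/

In Ceph's write path, data is ultimately placed onto OSDs by the Crush algorithm. Crush acts as an intelligent data distribution mechanism: with it, Ceph can compute exactly where a piece of data should be stored, and equally where to read it from.

The Crush algorithm routes data to its storage location according to the configured Crush Map rules.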

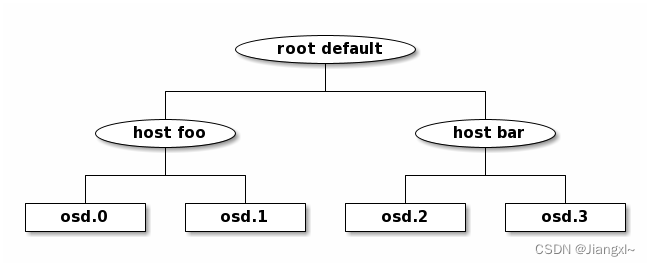

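As the figure shows, a piece of data is split into multiple Objects. When these Objects are written from a PG to OSDs, the Crush algorithm (under the default rule) selects OSDs by node: it does not place multiple replicas of the same data on several OSDs of one node, because losing that node would then compromise the data. Instead, it stores the replicas on OSDs of different nodes to keep the data safe.

The Crush algorithm decides how data is distributed and stored according to the CrushMap rules. The CrushMap defines both the concrete rules and the failure domains used to protect the data.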
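The placement behaviour described above can be illustrated with a toy model. This is a minimal sketch, not Ceph's implementation: the `CLUSTER` layout, the `draw` and `place` helpers, and the use of SHA-256 are all assumptions made for illustration (Ceph uses its own hash and retry logic). It borrows only the straw2 idea of giving every candidate a weighted pseudo-random draw and keeping the winners, and it picks at most one OSD per host.

```python
import hashlib
import math

# Hypothetical two-level hierarchy: host -> {osd name: weight}.
CLUSTER = {
    "ceph-node-1": {"osd.0": 1.0, "osd.3": 1.0, "osd.6": 1.0},
    "ceph-node-2": {"osd.1": 1.0, "osd.4": 1.0, "osd.7": 1.0},
    "ceph-node-3": {"osd.2": 1.0},
}


def draw(key, item, weight):
    """Straw2-style draw: ln(u) / weight, with u in (0, 1] derived from a hash."""
    digest = hashlib.sha256(f"{key}/{item}".encode()).digest()
    u = (int.from_bytes(digest[:8], "big") + 1) / 2**64
    return math.log(u) / weight


def place(pg, replicas):
    """Pick one OSD from each of up to `replicas` distinct hosts for a PG."""
    host_weights = {h: sum(osds.values()) for h, osds in CLUSTER.items()}
    # Rank hosts by their draw; the highest draws win (simplified top-N choice).
    ranked = sorted(host_weights,
                    key=lambda h: draw(pg, h, host_weights[h]),
                    reverse=True)
    chosen = []
    for host in ranked[:replicas]:
        osds = CLUSTER[host]
        # Inside the winning host, the OSD with the highest draw is used.
        chosen.append(max(osds, key=lambda o: draw(pg, o, osds[o])))
    return chosen


print(place("1.2a", 3))
```

Because the draws depend only on the PG identifier and the item names, any client running the same function over the same map computes the same OSD list, which is why no central lookup table is needed.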

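If the data stored in Ceph has high safety requirements, a disaster-tolerance mechanism is indispensable.

Disaster tolerance is defined through the CrushMap, which supports many schemes:

- OSD level: the replicas of a piece of data are stored on different OSDs.

- Host level: the replicas of a piece of data are stored on OSDs of different nodes.

When data safety requirements are higher, the following levels can be used:

- Row level: replicas are spread across rows of racks in a machine room.

- Datacenter level: the cluster spans data centers, giving highly reliable storage.

- Zone level: the cluster spans several zones within the same region.

- Region level: the cluster spans regions, for example Shanghai and Beijing, giving highly reliable storage.

- root: the top of the hierarchy.

And so on.

All of these mechanisms are implemented through the CrushMap. Once defined, the rules are associated with Pools, which is what ultimately produces the distributed placement of data.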

2.Analysis of the cluster's default CrushMap rule

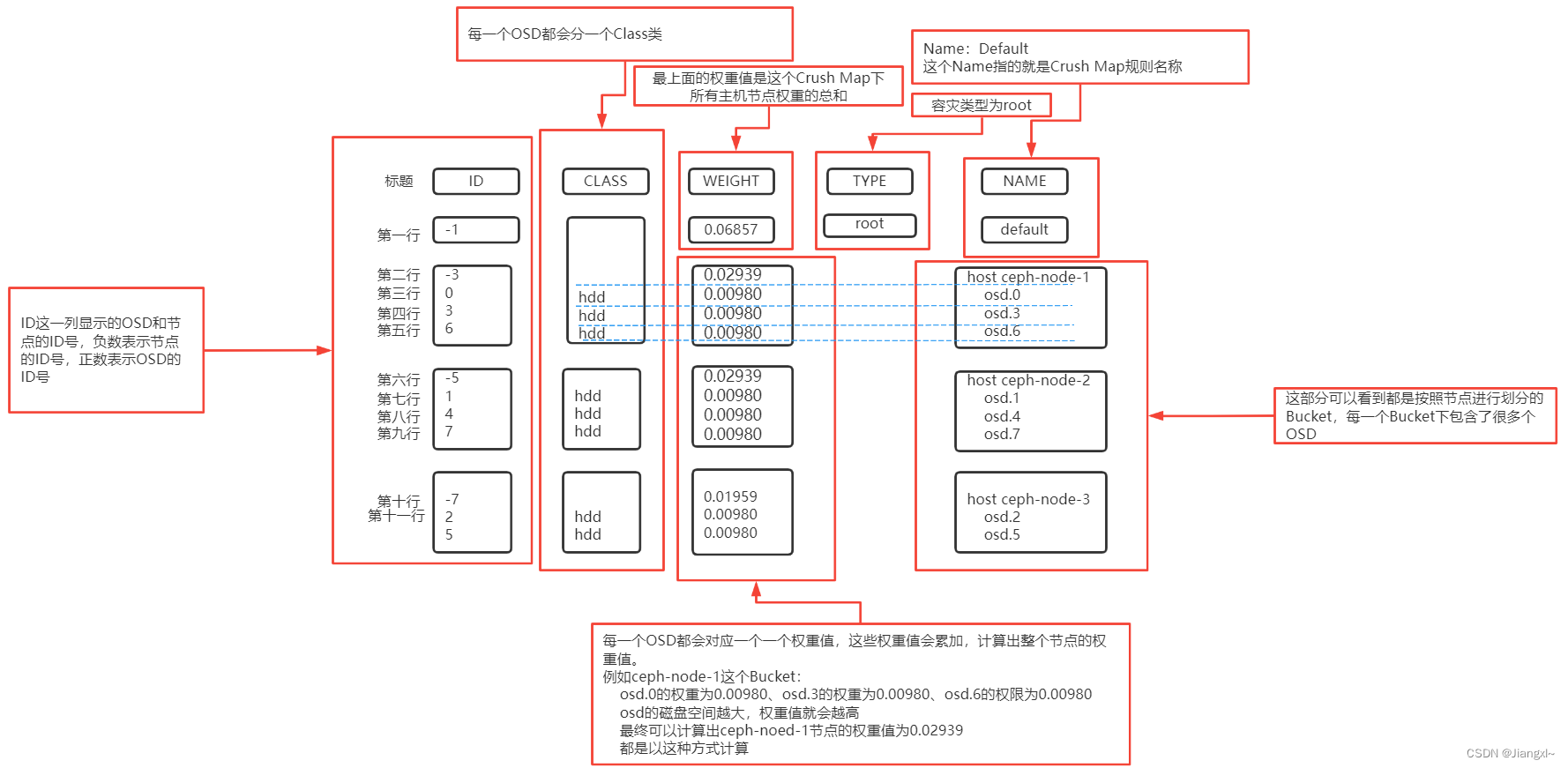

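2.1.Reading the CrushMap listing

Either ceph osd tree or ceph osd crush tree shows how the cluster's OSDs are arranged in the CrushMap. Both print the same hierarchy; they differ in the extra columns they display.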

[root@ceph-node-1 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.06857 root default

-3 0.02939 host ceph-node-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

6 hdd 0.00980 osd.6 up 1.00000 1.00000

-5 0.02939 host ceph-node-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

7 hdd 0.00980 osd.7 up 1.00000 1.00000

-7 0.00980 host ceph-node-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

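1)In the NAME column, the first row is default. This is the name of the root bucket of the Crush hierarchy, which the default rule starts from.

2)In the TYPE column its value is root, meaning this bucket is of type root, the top level of the hierarchy.

3)The NAME column also lists each node of the cluster. This shows that the Crush Map is divided into Buckets by Host: every node acts as one Bucket, and each Bucket contains several OSDs.

4)Every OSD is also assigned a device Class (hdd here).

5)Every OSD has a weight; the larger the OSD's disk, the higher the weight. The weight on a node's row is the sum of the weights of all OSDs under that node, and the weight on the first row is the total weight of the whole hierarchy.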
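The weight roll-up in point 5 can be checked with a few lines of arithmetic. This is a small sketch using the numbers from the `ceph osd tree` output above; the `TREE` structure, the `check_weights` helper, and the tolerance are illustrative choices, the tolerance being needed because the CLI rounds to five decimals.

```python
# Weights copied from the `ceph osd tree` output above.
TREE = {
    "ceph-node-1": {"weight": 0.02939, "osds": [0.00980, 0.00980, 0.00980]},
    "ceph-node-2": {"weight": 0.02939, "osds": [0.00980, 0.00980, 0.00980]},
    "ceph-node-3": {"weight": 0.00980, "osds": [0.00980]},
}
ROOT_WEIGHT = 0.06857
TOLERANCE = 1e-4  # allows for the rounding in the displayed values


def check_weights(tree, root_weight, tol=TOLERANCE):
    """Return True if every host weight matches its OSDs and the root matches its hosts."""
    for info in tree.values():
        if abs(sum(info["osds"]) - info["weight"]) > tol:
            return False
    host_total = sum(info["weight"] for info in tree.values())
    return abs(host_total - root_weight) <= tol


print(check_weights(TREE, ROOT_WEIGHT))
```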

Annotated diagram of the output:

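2.2.In-depth analysis of the default CrushMap rule

The ceph osd crush dump command expands every configuration item of the Crush Map.

A Crush Map contains five main sections:

- devices lists all OSD devices in the cluster.

- types lists the bucket types that can be chosen as the data protection level, that is, the disaster-tolerance mechanism.

- buckets describes the hierarchy over which data is distributed.

- rules defines the concrete placement rules; every pool is associated with one of them.

- tunables defines a number of algorithm parameters that rarely need attention.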

[root@ceph-node-1 ~]# ceph osd crush dump

{

"devices": [

],

"types": [

],

"buckets": [

],

"rules": [

],

"tunables": {

},

"choose_args": {}

}
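The dump is plain JSON, so its top-level sections are easy to inspect programmatically. This is a minimal sketch: `summarize_crush_dump` and the tiny `SAMPLE` document are hypothetical and stand in for real command output.

```python
import json

# A tiny stand-in for real `ceph osd crush dump` output (illustrative only).
SAMPLE = """
{
  "devices": [{"id": 0, "name": "osd.0", "class": "hdd"}],
  "types": [{"type_id": 0, "name": "osd"}, {"type_id": 1, "name": "host"}],
  "buckets": [],
  "rules": [],
  "tunables": {"choose_total_tries": 50},
  "choose_args": {}
}
"""

SECTIONS = ("devices", "types", "buckets", "rules", "tunables")


def summarize_crush_dump(raw):
    """Count the entries in each of the five main sections of a crush dump."""
    dump = json.loads(raw)
    return {section: len(dump.get(section, [])) for section in SECTIONS}


print(summarize_crush_dump(SAMPLE))
```

On a node with the ceph CLI available, the same function could be fed the real output, for example the `stdout` of `subprocess.run(["ceph", "osd", "crush", "dump"], capture_output=True, text=True)`.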

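1)devices configuration in detail

The devices section describes every OSD device in the cluster: the OSD's ID, its name, and the class it is assigned to.

"devices": [

{

"id": 0, #ID of the OSD

"name": "osd.0", #name of the OSD

"class": "hdd" #device class of the OSD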

},

{

"id": 1,

"name": "osd.1",

"class": "hdd"

},

·················

],

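2)types configuration in detail

The types section lists the 12 bucket types (IDs 0 to 11) available for data protection. Whichever type is chosen later is referred to by its ID and name.

"types": [

{

"type_id": 0, #ID of the type

"name": "osd" #name of the type, here the osd type

},

{

"type_id": 1,

"name": "host" #host type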

},

{

"type_id": 2,

"name": "chassis"

},

{

"type_id": 3,

"name": "rack"

},

{

"type_id": 4,

"name": "row" #row of racks

},

{

"type_id": 5,

"name": "pdu"

},

{

"type_id": 6,

"name": "pod"

},

{

"type_id": 7,

"name": "room"

},

{

"type_id": 8,

"name": "datacenter" #data center

},

{

"type_id": 9,

"name": "zone" #zone

},

{

"type_id": 10,

"name": "region" #region

},

{

"type_id": 11,

"name": "root" #top level

}

],

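3)Buckets configuration in detail

The Buckets section contains the Crush hierarchy itself: each entry gives a Bucket's type, its weight, and the child items it contains.

"buckets": [

{

"id": -1, #ID of the bucket, generated automatically

"name": "default", #name of the bucket

"type_id": 11, #bucket type; ID 11 corresponds to root in types

"type_name": "root", #name of the type

"weight": 4494, #weight

"alg": "straw2",

"hash": "rjenkins1",

"items": [ #items lists the child buckets, here every node of the cluster

{

"id": -3, #ID of the node's bucket

"weight": 1926, #weight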

"pos": 0

},

{

"id": -5,

"weight": 1926,

"pos": 1

},

{

"id": -7,

"weight": 642,

"pos": 2

}

]

},

····················
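The integer weights in the dump look unrelated to the decimals printed by `ceph osd tree`, but they are the same values in 16.16 fixed-point form, where 65536 (0x10000) stands for a weight of 1.0. A short sketch of the conversion follows; the helper name `crush_weight` is made up for illustration.

```python
FIXED_POINT_ONE = 0x10000  # 65536 represents a weight of 1.0


def crush_weight(raw):
    """Convert a fixed-point weight from the crush dump to a decimal weight."""
    return raw / FIXED_POINT_ONE


# Values taken from the buckets section above.
for name, raw in [("default", 4494), ("host -3", 1926), ("host -7", 642)]:
    print(f"{name}: {crush_weight(raw):.5f}")
```

Rounded to five decimals these give 0.06857, 0.02939 and 0.00980, matching the WEIGHT column of the tree listing in 2.1.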

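4)Rules configuration in detail

A Pool must be associated with a Rule; the Rule defines how the pool's data is distributed and stored.

"rules": [

{

"rule_id": 0,

"rule_name": "replicated_rule", #name of the rule; a pool's properties show which rule it uses

"ruleset": 0,

"type": 1, #rule type; 1 denotes a replicated rule (it is not an ID from types)

"min_size": 1, #smallest replica count the rule applies to

"max_size": 10, #largest replica count the rule applies to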

"steps": [

{

"op": "take",

"item": -1,

"item_name": "default" #the root bucket the rule starts from

},

{

"op": "chooseleaf_firstn",

"num": 0,

"type": "host"

},

{

"op": "emit"

}

]

}

],
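The three steps read more naturally in the text form used by a decompiled CRUSH map. This is a small sketch, with `render_steps` as a hypothetical helper that covers only the ops appearing in this rule: `take` selects the starting bucket, `chooseleaf firstn 0 type host` picks as many distinct hosts as the pool has replicas (a `num` of 0 means "use the pool's replica count") and descends to one OSD in each, and `emit` outputs the result.

```python
RULE = {
    "rule_name": "replicated_rule",
    "steps": [
        {"op": "take", "item": -1, "item_name": "default"},
        {"op": "chooseleaf_firstn", "num": 0, "type": "host"},
        {"op": "emit"},
    ],
}


def render_steps(rule):
    """Render the JSON steps of a rule as text-map style step lines."""
    lines = []
    for step in rule["steps"]:
        op = step["op"]
        if op == "take":
            lines.append(f"step take {step['item_name']}")
        elif op.startswith(("choose_", "chooseleaf_")):
            verb, mode = op.split("_", 1)  # e.g. "chooseleaf", "firstn"
            lines.append(f"step {verb} {mode} {step['num']} type {step['type']}")
        elif op == "emit":
            lines.append("step emit")
        else:
            lines.append(f"# unhandled op: {op}")
    return lines


print("\n".join(render_steps(RULE)))
```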

5)tunables configuration in detail

The tunables section defines parameters of the Crush algorithm; they rarely need attention.

"tunables": {

"choose_local_tries": 0,

"choose_local_fallback_tries": 0,

"choose_total_tries": 50,

"chooseleaf_descend_once": 1,

"chooseleaf_vary_r": 1,

"chooseleaf_stable": 1,

"straw_calc_version": 1,

"allowed_bucket_algs": 54,

"profile": "jewel",

"optimal_tunables": 1,

"legacy_tunables": 0,

"minimum_required_version": "jewel",

"require_feature_tunables": 1,

"require_feature_tunables2": 1,

"has_v2_rules": 0,

"require_feature_tunables3": 1,

"has_v3_rules": 0,

"has_v4_buckets": 1,

"require_feature_tunables5": 1,

"has_v5_rules": 0

},

2.3.The complete CrushMap definition

[root@ceph-node-1 ~]# ceph osd crush dump

{

"devices": [

{

"id": 0,

"name": "osd.0",

"class": "hdd"

},

{

"id": 1,

"name": "osd.1",

"class": "hdd"

},

{

"id": 2,

"name": "osd.2",

"class": "hdd"

},

{

"id": 3,

"name": "osd.3",

"class": "hdd"

},

{

"id": 4,

"name": "osd.4",

"class": "hdd"

},

{

"id": 5,

"name": "device5"

},

{

"id": 6,

"name": "osd.6",

"class": "hdd"

},

{

"id": 7,

"name": "osd.7",

"class": "hdd"

}

],

"types": [

{

"type_id": 0,

"name": "osd"

},

{

"type_id": 1,

"name": "host"

},

{

"type_id": 2,

"name": "chassis"

},

{

"type_id": 3,

"name": "rack"

},

{

"type_id": 4,

"name": "row"

},

{

"type_id": 5,

"name": "pdu"

},

{

"type_id": 6,

"name": "pod"

},

{

"type_id": 7,

"name": "room"

},

{

"type_id": 8,

"name": "datacenter"

},

{

"type_id": 9,

"name": "zone"

},

{

"type_id": 10,

"name": "region"

},

{

"type_id": 11,

"name": "root"

}

],

"buckets": [

{

"id": -1,

"name": "default",

"type_id": 11,

"type_name": "root",

"weight": 4494,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": -3,

"weight": 1926,

"pos": 0

},

{

"id": -5,

"weight": 1926,

"pos": 1

},

{

"id": -7,

"weight": 642,

"pos": 2

}

]

},

{

"id": -2,

"name": "default~hdd",

"type_id": 11,

"type_name": "root",

"weight": 4494,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": -4,

"weight": 1926,

"pos": 0

},

{

"id": -6,

"weight": 1926,

"pos": 1

},

{

"id": -8,

"weight": 642,

"pos": 2

}

]

},

{

"id": -3,

"name": "ceph-node-1",

"type_id": 1,

"type_name": "host",

"weight": 1926,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": 0,

"weight": 642,

"pos": 0

},

{

"id": 3,

"weight": 642,

"pos": 1

},

{

"id": 6,

"weight": 642,

"pos": 2

}

]

},

{

"id": -4,

"name": "ceph-node-1~hdd",

"type_id": 1,

"type_name": "host",

"weight": 1926,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": 0,

"weight": 642,

"pos": 0

},

{

"id": 3,

"weight": 642,

"pos": 1

},

{

"id": 6,

"weight": 642,

"pos": 2

}

]

},

{

"id": -5,

"name": "ceph-node-2",

"type_id": 1,

"type_name": "host",

"weight": 1926,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": 1,

"weight": 642,

"pos": 0

},

{

"id": 4,

"weight": 642,

"pos": 1

},

{

"id": 7,

"weight": 642,

"pos": 2

}

]

},

{

"id": -6,

"name": "ceph-node-2~hdd",

"type_id": 1,

"type_name": "host",

"weight": 1926,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": 1,

"weight": 642,

"pos": 0

},

{

"id": 4,

"weight": 642,

"pos": 1

},

{

"id": 7,

"weight": 642,

"pos": 2

}

]

},

{

"id": -7,

"name": "ceph-node-3",

"type_id": 1,

"type_name": "host",

"weight": 642,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": 2,

"weight": 642,

"pos": 0

}

]

},

{

"id": -8,

"name": "ceph-node-3~hdd",

"type_id": 1,

"type_name": "host",

"weight": 642,

"alg": "straw2",

"hash": "rjenkins1",

"items": [

{

"id": 2,

"weight": 642,

"pos": 0

}

]

}

],

"rules": [

{

"rule_id": 0,

"rule_name": "replicated_rule",

"ruleset": 0,

"type": 1,

"min_size": 1,

"max_size": 10,

"steps": [

{

"op": "take",

"item": -1,

"item_name": "default"

},

{

"op": "chooseleaf_firstn",

"num": 0,

"type": "host"

},

{

"op": "emit"

}

]

}

],

"tunables": {

"choose_local_tries": 0,

"choose_local_fallback_tries": 0,

"choose_total_tries": 50,

"chooseleaf_descend_once": 1,

"chooseleaf_vary_r": 1,

"chooseleaf_stable": 1,

"straw_calc_version": 1,

"allowed_bucket_algs": 54,

"profile": "jewel",

"optimal_tunables": 1,

"legacy_tunables": 0,

"minimum_required_version": "jewel",

"require_feature_tunables": 1,

"require_feature_tunables2": 1,

"has_v2_rules": 0,

"require_feature_tunables3": 1,

"has_v3_rules": 0,

"has_v4_buckets": 1,

"require_feature_tunables5": 1,

"has_v5_rules": 0

},

"choose_args": {}

}
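The tree printed by `ceph osd tree` can be rebuilt from this dump by following each bucket's `items`. The sketch below rests on one stated assumption: buckets whose names contain `~` (such as `default~hdd`) are treated as per-device-class shadow copies of the hierarchy and skipped. `build_tree` and the reduced `DUMP` are illustrative, not part of Ceph.

```python
# Reduced dump with the same shape as the full output above (illustrative only).
DUMP = {
    "devices": [{"id": 2, "name": "osd.2", "class": "hdd"}],
    "buckets": [
        {"id": -1, "name": "default", "type_name": "root",
         "items": [{"id": -7, "weight": 642, "pos": 0}]},
        {"id": -2, "name": "default~hdd", "type_name": "root",
         "items": [{"id": -8, "weight": 642, "pos": 0}]},
        {"id": -7, "name": "ceph-node-3", "type_name": "host",
         "items": [{"id": 2, "weight": 642, "pos": 0}]},
        {"id": -8, "name": "ceph-node-3~hdd", "type_name": "host",
         "items": [{"id": 2, "weight": 642, "pos": 0}]},
    ],
}


def build_tree(dump):
    """Return indented lines describing the hierarchy, skipping '~' shadow buckets."""
    names = {d["id"]: d["name"] for d in dump["devices"]}
    buckets = {b["id"]: b for b in dump["buckets"] if "~" not in b["name"]}
    lines = []

    def walk(node_id, depth):
        indent = "  " * depth
        if node_id in buckets:  # negative IDs are buckets
            bucket = buckets[node_id]
            lines.append(f"{indent}{bucket['type_name']} {bucket['name']}")
            for item in bucket["items"]:
                walk(item["id"], depth + 1)
        else:  # non-negative IDs are OSD devices
            lines.append(indent + names.get(node_id, f"osd.{node_id}"))

    # A top-level bucket is one that no other bucket lists as an item.
    child_ids = {i["id"] for b in buckets.values() for i in b["items"]}
    for bucket_id in sorted(buckets, reverse=True):
        if bucket_id not in child_ids:
            walk(bucket_id, 0)
    return lines


print("\n".join(build_tree(DUMP)))
```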
