Installing flash_attn

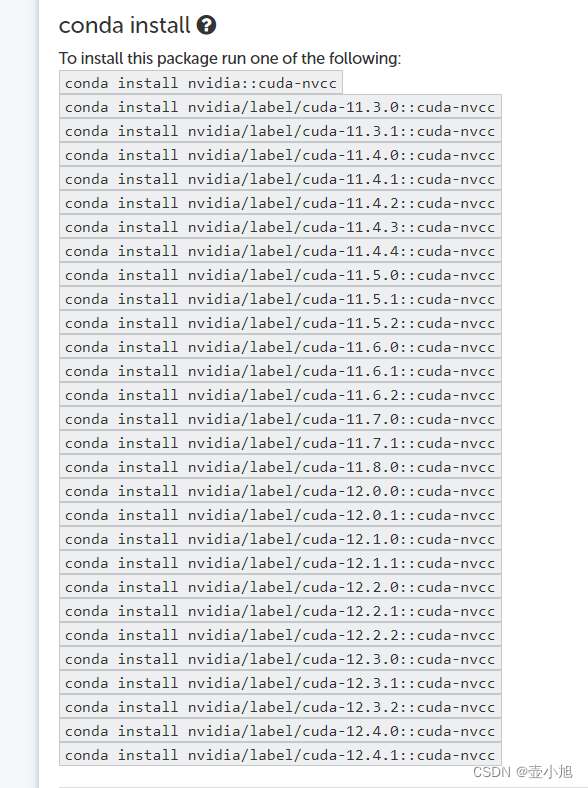

1. Install cuda-nvcc

https://anaconda.org/nvidia/cuda-nvcc
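The package on this page is normally installed with conda from NVIDIA's channel (conda install -c nvidia cuda-nvcc). Afterwards, it is worth confirming that this nvcc is the one on your PATH and that it reports the CUDA release you expect. The following is a minimal sketch, not part of the original steps. It assumes nvcc's version text contains a line such as "Cuda compilation tools, release 12.1, V12.1.105". The helper names parse_nvcc_release and local_nvcc_release are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_nvcc_release(output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from `nvcc --version` text.

    Assumes a line like 'Cuda compilation tools, release 12.1, V12.1.105'.
    """
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def local_nvcc_release() -> Optional[Tuple[int, int]]:
    """Run nvcc if it is on PATH and return its CUDA release, otherwise None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    # Prints None when nvcc is not on PATH or its output has an unexpected format.
    print("nvcc CUDA release:", local_nvcc_release())
```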

2. Install torch

# https://pytorch.org/

# Find the torch build that matches your CUDA version and install it

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
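The cu121 suffix in the index URL selects a build for CUDA 12.1. After installing, a quick check can report which CUDA version the installed torch was built for and whether a GPU is visible. For CPU-only builds, torch.version.cuda is None. This is a sketch. The helper names are hypothetical, and cuda_wheel_tag only assumes the "cu" + major + minor pattern seen in the URL above.

```python
from typing import Optional


def cuda_wheel_tag(cuda_version: str) -> str:
    """Turn a CUDA version such as '12.1' into a tag like 'cu121' (assumed URL pattern)."""
    major, minor = cuda_version.split(".")[:2]
    return f"cu{major}{minor}"


def report_torch_build() -> Optional[str]:
    """Describe the installed torch build, or return None if torch is missing."""
    try:
        import torch
    except ImportError:
        return None
    cuda = torch.version.cuda  # None for CPU-only builds
    tag = cuda_wheel_tag(cuda) if cuda else "cpu"
    return f"torch {torch.__version__}, built for {tag}, GPU visible: {torch.cuda.is_available()}"


if __name__ == "__main__":
    print(report_torch_build() or "torch is not installed")
```

If the reported tag differs from the one in the index URL you used, the wrong wheel was probably picked up, for example from a cached or previously installed torch.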

3. Install flash_attn

Visit the page below, find the flash_attn wheel that matches your torch, Python, and CUDA versions, download it, and upload it to the server.

https://github.com/Dao-AILab/flash-attention/releases/

# For example, Python 3.8, torch 2.3, CUDA 12:

pip install flash_attn-2.5.8+cu122torch2.3cxx11abiFALSE-cp38-cp38-linux_x86_64.whl
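The wheel filename encodes five things: the flash_attn version, the CUDA version, the torch major.minor version, a C++ ABI flag, and the Python tag. One way to pick the right file is to build the expected name from your environment and then look for it on the releases page.

This sketch assumes the naming pattern of the example above. The helpers flash_attn_wheel_name and local_wheel_name are hypothetical. Reading the ABI flag relies on torch._C._GLIBCXX_USE_CXX11_ABI, which is a private PyTorch attribute.

The example pairs a torch built for cu121 with a cu122 wheel. For that reason, the wheel's CUDA version is passed in separately rather than copied from torch.

```python
import sys
from typing import Optional, Tuple


def flash_attn_wheel_name(flash_version: str, cuda_version: str, torch_version: str,
                          cxx11_abi: bool, py_version: Tuple[int, int],
                          platform: str = "linux_x86_64") -> str:
    """Build a wheel filename following the pattern of the release example (assumed)."""
    cuda_tag = "cu" + "".join(cuda_version.split(".")[:2])
    # Only torch major.minor appears in the name; drop local suffixes such as '+cu121'.
    torch_tag = "torch" + ".".join(torch_version.split("+")[0].split(".")[:2])
    abi_tag = "cxx11abi" + ("TRUE" if cxx11_abi else "FALSE")
    py_tag = f"cp{py_version[0]}{py_version[1]}"
    return f"flash_attn-{flash_version}+{cuda_tag}{torch_tag}{abi_tag}-{py_tag}-{py_tag}-{platform}.whl"


def local_wheel_name(flash_version: str, wheel_cuda: str) -> Optional[str]:
    """Fill in torch, ABI, and Python parts from the current environment, if torch is installed."""
    try:
        import torch
    except ImportError:
        return None
    # Private attribute, assumed to exist in the installed torch build.
    abi = bool(torch._C._GLIBCXX_USE_CXX11_ABI)
    return flash_attn_wheel_name(flash_version, wheel_cuda, torch.__version__, abi, sys.version_info[:2])


if __name__ == "__main__":
    # Hypothetical inputs: take the flash_attn version and the wheel's CUDA version from the releases page.
    print(local_wheel_name("2.5.8", "12.2") or "torch is not installed")
```

If no asset matches the printed name exactly, check which component (CUDA, torch, ABI, or Python) differs from what is available.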

4. Install transformers

If you get the error cannot import name 'is_flash_attn_available' from 'transformers.utils', try installing an older version of transformers:

pip install transformers==4.34.1
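Before or after downgrading, it can help to check which flash-attention helper your installed transformers actually exposes. The sketch below assumes that newer transformers releases provide is_flash_attn_2_available in transformers.utils in place of the older name from the error message. flash_attn_helpers is a hypothetical helper that simply tests for both names.

```python
from typing import List

# Assumed helper names: the one from the error message and its newer counterpart.
CANDIDATES = ("is_flash_attn_available", "is_flash_attn_2_available")


def flash_attn_helpers(utils_module: object) -> List[str]:
    """Return which candidate helper names a transformers.utils-like module exposes."""
    return [name for name in CANDIDATES if hasattr(utils_module, name)]


if __name__ == "__main__":
    try:
        import transformers
        import transformers.utils as utils
    except ImportError:
        print("transformers is not installed")
    else:
        print(transformers.__version__, flash_attn_helpers(utils))
```

If the first name is missing from the list, code that imports it will raise the error above with this transformers version.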
