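Table of Contents

- Preparation

- 1. Upgrade the OS kernel

- 1.1 Check the OS and kernel versions

- 1.2 Download the offline kernel upgrade packages

- 1.3 Upgrade the kernel

- 1.4 Verify the kernel version

- 2. Set hostnames and the hosts file

- 2.1 Set the hostname

- 2.2 Edit the hosts file

- 3. Disable the firewall

- 4. Disable SELinux

- 5. Time synchronization

- 5.1 Download NTP

- 5.2 Uninstall

- 5.3 Install

- 5.4 Configuration

- 5.4.1 Server node configuration

- 5.4.2 Client node configuration

- 6. Enable kernel IP forwarding and bridge filtering

- 7. Install ipset and ipvsadm

- 7.1 Download

- 7.2 Install

- 8. Disable swap

- 9. Configure passwordless SSH login

- 10. Install the etcd cluster

- 10.1 Download

- 10.2 Create the systemd unit files

- 10.3 Start

- 11. Install docker-ce/cri-dockerd

- 11.1 Install docker-ce/containerd.io

- 11.1.1 Download

- 11.1.2 Install

- 11.2 Install cri-dockerd

- 11.2.1 Download

- 11.2.2 Install

- 12. Install docker-compose

- 12.1 Download

- 12.2 Install

- 13. Install nginx + keepalived

- 13.1 Install nginx

- 13.1.1 Download the nginx image

- 13.1.2 Install

- Create docker-compose.yml

- 13.2 Install keepalived

- 13.2.1 Download keepalived

- 13.2.2 Download gcc (skip if already downloaded)

- 13.2.3 Download openssl

- 13.2.4 Install gcc

- 13.2.5 Install openssl

- 13.2.6 Install keepalived

- 14. Install Kubernetes

- 14.1 Download kubelet, kubeadm and kubectl

- 14.2 Install kubelet, kubeadm and kubectl

- 14.3 Install tab completion (optional)

- 14.4 Download the images Kubernetes depends on

- 14.5 Install docker registry and related configuration

- 14.5.1 Download docker-registry

- 14.5.2 Install docker-registry

- 14.5.3 Push the Kubernetes images into docker-registry

- 14.5.4 Point cri-docker's pause image at docker-registry

- 14.6 Install Kubernetes

- 14.6.1 Install on k8s-master01

- 14.6.2 Install on k8s-master02/3

- 14.6.3 Install on k8s-node01/2

- 14.7 Install the Calico network plugin

- 14.7.1 Download the images

- 14.7.2 Install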

Preparation

| IP | Role |
|---|---|
| 192.168.115.11 | k8s-master01 |
| 192.168.115.12 | k8s-master02 |
| 192.168.115.13 | k8s-master03 |
| 192.168.115.101 | k8s-node01 |
| 192.168.115.102 | k8s-node02 |
| 192.168.115.10 | VIP |

1. Upgrade the OS kernel

Run on every machine.

1.1 Check the OS and kernel versions

Check the kernel:

[root@localhost ~]# uname -r

3.10.0-1160.71.1.el7.x86_64

[root@localhost ~]#

[root@localhost ~]# cat /proc/version

Linux version 3.10.0-1160.71.1.el7.x86_64 (mockbuild@kbuilder.bsys.centos.org) (gcc version 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC) ) #1 SMP Tue Jun 28 15:37:28 UTC 2022

Check the OS release:

[root@localhost ~]# cat /etc/*release

CentOS Linux release 7.9.2009 (Core)

NAME="CentOS Linux"

VERSION="7 (Core)"

ID="centos"

ID_LIKE="rhel fedora"

VERSION_ID="7"

PRETTY_NAME="CentOS Linux 7 (Core)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:centos:centos:7"

HOME_URL="https://www.centos.org/"

BUG_REPORT_URL="https://bugs.centos.org/"

CENTOS_MANTISBT_PROJECT="CentOS-7"

CENTOS_MANTISBT_PROJECT_VERSION="7"

REDHAT_SUPPORT_PRODUCT="centos"

REDHAT_SUPPORT_PRODUCT_VERSION="7"

CentOS Linux release 7.9.2009 (Core)

CentOS Linux release 7.9.2009 (Core)

[root@localhost ~]#

1.2 Download the offline kernel upgrade packages

Download URL: https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

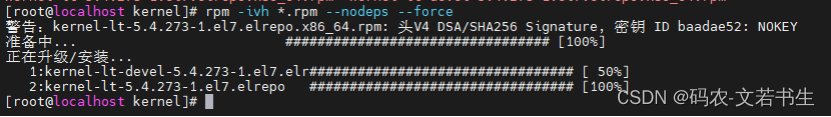

1.3 Upgrade the kernel

Upload the downloaded kernel RPMs to the machine and run:

rpm -ivh *.rpm --nodeps --force


List the GRUB boot menu entries:

awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg
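GRUB_DEFAULT takes the zero-based index of a menu entry. A small variant of the awk command above prints that index in front of each title, so you can read the right value off directly. This is a sketch: `list_grub_entries` is a hypothetical helper name, and it assumes the usual `menuentry '<title>' ...` lines in grub.cfg.

```shell
#!/usr/bin/env bash
# List GRUB menu entries with their zero-based index (the value GRUB_DEFAULT takes).
# list_grub_entries is a hypothetical helper; the default path matches the command above.
list_grub_entries() {
    local cfg="${1:-/etc/grub2.cfg}"
    # Split on single quotes: $1 is "menuentry " and $2 is the entry title.
    # i starts uninitialized, so the first entry is printed with index 0.
    awk -F\' '$1=="menuentry " {print i++ " : " $2}' "$cfg"
}

# Usage: list_grub_entries /etc/grub2.cfg
```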


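Edit /etc/default/grub:

Change GRUB_DEFAULT=saved to GRUB_DEFAULT=0 (index 0 is the first entry in the list above, which should be the newly installed kernel), then save and exit.

Regenerate the GRUB configuration: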

grub2-mkconfig -o /boot/grub2/grub.cfg

Reboot the machine:

reboot

1.4 Verify the kernel version

[root@localhost ~]# uname -r

5.4.273-1.el7.elrepo.x86_64

[root@localhost ~]# cat /proc/version

Linux version 5.4.273-1.el7.elrepo.x86_64 (mockbuild@Build64R7) (gcc version 9.3.1 20200408 (Red Hat 9.3.1-2) (GCC)) #1 SMP Wed Mar 27 15:58:08 EDT 2024

[root@localhost ~]#

2. Set hostnames and the hosts file

2.1 Set the hostname

On 192.168.115.11:

hostnamectl set-hostname k8s-master01

On 192.168.115.12:

hostnamectl set-hostname k8s-master02

On 192.168.115.13:

hostnamectl set-hostname k8s-master03

On 192.168.115.101:

hostnamectl set-hostname k8s-node01

On 192.168.115.102:

hostnamectl set-hostname k8s-node02
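If you would rather run one identical command on every machine, you can put the IP-to-hostname mapping from the preparation table into a small function. This is a sketch: `hostname_for_ip` and `set_hostname_from_ip` are hypothetical helper names, and the second function assumes that `hostname -I` lists the node's 192.168.115.x address.

```shell
#!/usr/bin/env bash
# Map a node IP to its hostname, following the table in the preparation section.
hostname_for_ip() {
    case "$1" in
        192.168.115.11)  echo k8s-master01 ;;
        192.168.115.12)  echo k8s-master02 ;;
        192.168.115.13)  echo k8s-master03 ;;
        192.168.115.101) echo k8s-node01 ;;
        192.168.115.102) echo k8s-node02 ;;
        *) return 1 ;;   # not a cluster IP
    esac
}

# Run as root on any node: use the first local address that appears in the table.
set_hostname_from_ip() {
    local ip name
    for ip in $(hostname -I); do
        if name=$(hostname_for_ip "$ip"); then
            hostnamectl set-hostname "$name"
            return 0
        fi
    done
    echo "no cluster IP found on this host" >&2
    return 1
}
```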

2.2 Edit the hosts file

Run on every machine:

cat >> /etc/hosts << EOF

192.168.115.11 k8s-master01

192.168.115.12 k8s-master02

192.168.115.13 k8s-master03

192.168.115.101 k8s-node01

192.168.115.102 k8s-node02

EOF
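Running the `cat >> /etc/hosts` step a second time appends duplicate lines. The sketch below is an idempotent alternative that appends an entry only when its hostname is not already in the file. `add_hosts_entries` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Append "IP hostname" entries to a hosts file, skipping hostnames already present,
# so the step can be re-run safely.
add_hosts_entries() {
    local file="$1" entry name
    shift
    for entry in "$@"; do
        name="${entry##* }"   # hostname = last space-separated field
        # -w: whole-word match, so k8s-node01 does not match k8s-node010
        if ! grep -qw -- "$name" "$file"; then
            printf '%s\n' "$entry" >> "$file"
        fi
    done
}

# Usage:
#   add_hosts_entries /etc/hosts "192.168.115.11 k8s-master01" "192.168.115.12 k8s-master02" \
#       "192.168.115.13 k8s-master03" "192.168.115.101 k8s-node01" "192.168.115.102 k8s-node02"
```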

3. Disable the firewall

Run on every machine:

systemctl stop firewalld.service

systemctl disable firewalld.service

systemctl status firewalld.service

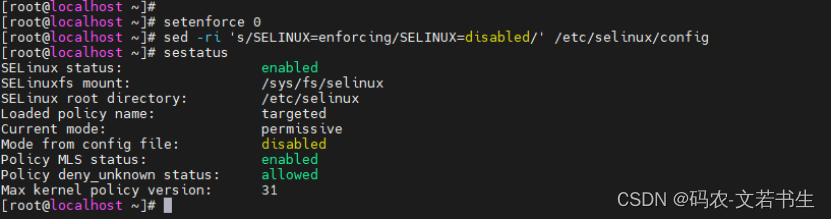

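4. Disable SELinux

Run on every machine. `setenforce 0` switches SELinux to permissive mode immediately; SELINUX=disabled in the config file takes effect after the next reboot: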

setenforce 0

sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

sestatus
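The sed command above only rewrites `SELINUX=enforcing`, so a host that is already set to `permissive` keeps that value. The sketch below is a slightly more general variant that sets the value to `disabled` whatever it currently is. `disable_selinux_config` is a hypothetical helper name, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Set SELINUX=disabled in an SELinux config file, whatever the current mode.
disable_selinux_config() {
    local file="${1:-/etc/selinux/config}"
    # Anchored at line start: comment lines and SELINUXTYPE= are left unchanged.
    sed -ri 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Usage: disable_selinux_config /etc/selinux/config
```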

5. Time synchronization

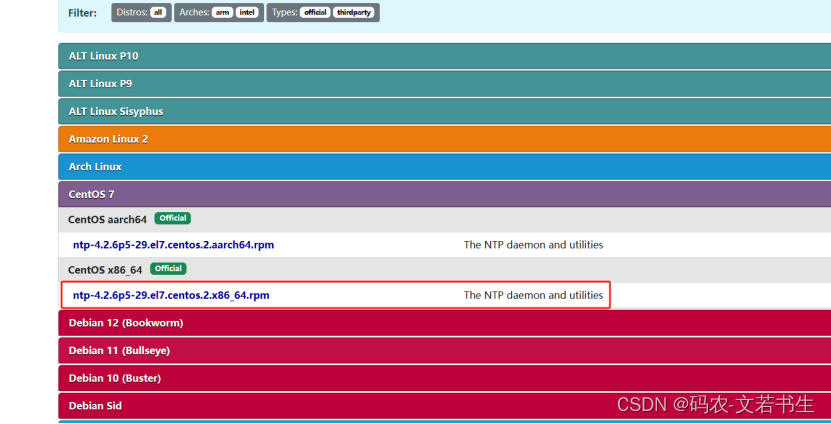

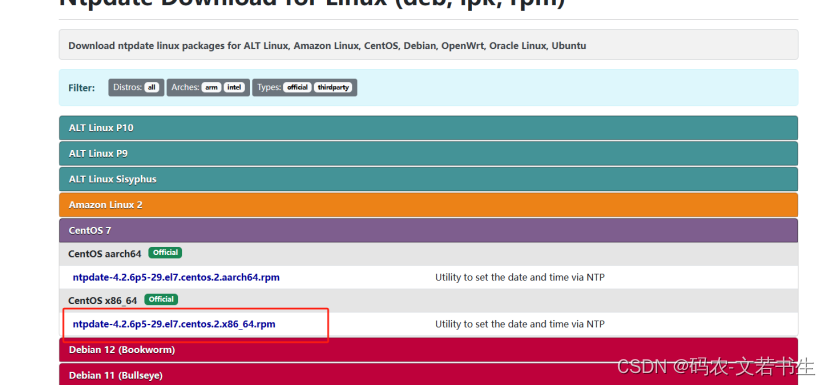

5.1 Download NTP

Download URLs: https://pkgs.org/download/ntp

https://pkgs.org/download/ntpdate

https://pkgs.org/download/libopts.so.25()(64bit)

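5.2 Uninstall

Run on every machine.

If ntp is already installed, check its version; if it is not the version you want, uninstall it.

Query the installed ntp packages: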

rpm -qa | grep ntp

Uninstall (replace ntp-xxxx with the package name reported above):

rpm -e --nodeps ntp-xxxx

5.3 Install

Run on every machine.

Upload the ntp, ntpdate and libopts RPMs to each machine and install them:

rpm -ivh *.rpm

Start ntpd and enable it at boot:

systemctl start ntpd

systemctl enable ntpd

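5.4 Configuration

Make one machine the NTP server (192.168.115.11 here); the other machines are its clients.

5.4.1 Server node configuration

vi /etc/ntp.conf

Edit the file to match the configuration below: comment out the default pool servers and add or change the lines next to the explanatory comments. 192.168.115.0 is the subnet these machines are on.

Full configuration: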

# For more information about this file, see the man pages

# ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).

driftfile /var/lib/ntp/drift

# Permit time synchronization with our time source, but do not

# permit the source to query or modify the service on this system.

restrict default nomodify notrap nopeer noquery

# Permit all access over the loopback interface. This could

# be tightened as well, but to do so would effect some of

# the administrative functions.

restrict 127.0.0.1

restrict ::1

# Hosts on local network are less restricted.

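# Allow hosts on the internal network to sync time from this server. Without this line, the "restrict default" rule above applies, which still lets any IP use the time service.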

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

restrict 192.168.115.0 mask 255.255.255.0 nomodify notrap

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

# Synchronize with upstream reference time servers

server 210.72.145.44 # National Time Service Center, China

server 133.100.11.8 # Japan (Fukuoka University)

server 0.cn.pool.ntp.org

server 1.cn.pool.ntp.org

server 2.cn.pool.ntp.org

server 3.cn.pool.ntp.org

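# Restrictions for the upstream time servers: they may serve time to this host (the internal NTP server), but may not modify, trap or query it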

restrict 210.72.145.44 nomodify notrap noquery

restrict 133.100.11.8 nomodify notrap noquery

restrict 0.cn.pool.ntp.org nomodify notrap noquery

restrict 1.cn.pool.ntp.org nomodify notrap noquery

restrict 2.cn.pool.ntp.org nomodify notrap noquery

restrict 3.cn.pool.ntp.org nomodify notrap noquery

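# Make sure localhost has sufficient privileges by using the syntax without any restriction keywords.

# When the external time servers are unavailable, serve the local clock as the time source.

# Note: do not change this; it must be 127.127.1.0. Otherwise, running ntpdate serverIP on an NTP client

# fails with "no server suitable for synchronization found".

# Running ntpdate -d serverIP on the client then shows "Server dropped: strata too high" and "stratum 16", whereas a normal stratum is in the range 0-15.

# This happens because the NTP server has not yet synchronized with itself or with its upstream servers.

# The lines below make the NTP server synchronize with itself: if none of the servers defined in ntp.conf is available, it serves its local clock to NTP clients.

# Enable the lines below on the NTP server but keep them disabled on clients: on a client, ntpd picks the most suitable nearby source, which could be LOCAL, and it would then not sync with the remote server.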

server 127.127.1.0 iburst

fudge 127.127.1.0 stratum 10

#broadcast 192.168.1.255 autokey # broadcast server

#broadcastclient # broadcast client

#broadcast 224.0.1.1 autokey # multicast server

#multicastclient 224.0.1.1 # multicast client

#manycastserver 239.255.254.254 # manycast server

#manycastclient 239.255.254.254 autokey # manycast client

# Enable public key cryptography.

#crypto

includefile /etc/ntp/crypto/pw

# Key file containing the keys and key identifiers used when operating

# with symmetric key cryptography.

keys /etc/ntp/keys

# Specify the key identifiers which are trusted.

#trustedkey 4 8 42

# Specify the key identifier to use with the ntpdc utility.

#requestkey 8

# Specify the key identifier to use with the ntpq utility.

#controlkey 8

# Enable writing of statistics records.

#statistics clockstats cryptostats loopstats peerstats

# Disable the monitoring facility to prevent amplification attacks using ntpdc

# monlist command when default restrict does not include the noquery flag. See

# CVE-2013-5211 for more details.

# Note: Monitoring will not be disabled with the limited restriction flag.

disable monitor

Restart ntpd:

systemctl restart ntpd

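5.4.2 Client node configuration

vi /etc/ntp.conf

Edit the file to match the configuration below: comment out the default pool servers and add or change the lines next to the explanatory comments. 192.168.115.11 is the IP of the NTP server node.

Full configuration: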

# For more information about this file, see the man pages

# ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).

driftfile /var/lib/ntp/drift

# Permit time synchronization with our time source, but do not

# permit the source to query or modify the service on this system.

restrict default nomodify notrap nopeer noquery

# Permit all access over the loopback interface. This could

# be tightened as well, but to do so would effect some of

# the administrative functions.

restrict 127.0.0.1

restrict ::1

# Hosts on local network are less restricted.

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

# Use the internal ntpd server as the upstream time server

server 192.168.115.11 iburst

# Restrictions for the upstream server: it may serve time to this host, but may not modify, trap or query it

restrict 192.168.115.11 nomodify notrap noquery

# Enable the lines below on the NTP server but keep them disabled on clients: on a client, ntpd picks the most suitable nearby source, which could be LOCAL, and it would then not sync with the remote server.

#server 127.127.1.0

#fudge 127.127.1.0 stratum 10

#broadcast 192.168.1.255 autokey # broadcast server

#broadcastclient # broadcast client

#broadcast 224.0.1.1 autokey # multicast server

#multicastclient 224.0.1.1 # multicast client

#manycastserver 239.255.254.254 # manycast server

#manycastclient 239.255.254.254 autokey # manycast client

# Enable public key cryptography.

#crypto

includefile /etc/ntp/crypto/pw

# Key file containing the keys and key identifiers used when operating

# with symmetric key cryptography.

keys /etc/ntp/keys

# Specify the key identifiers which are trusted.

#trustedkey 4 8 42

# Specify the key identifier to use with the ntpdc utility.

#requestkey 8

# Specify the key identifier to use with the ntpq utility.

#controlkey 8

# Enable writing of statistics records.

#statistics clockstats cryptostats loopstats peerstats

# Disable the monitoring facility to prevent amplification attacks using ntpdc

# monlist command when default restrict does not include the noquery flag. See

# CVE-2013-5211 for more details.

# Note: Monitoring will not be disabled with the limited restriction flag.

disable monitor

Restart ntpd:

systemctl restart ntpd

Check the ntpd service status:

[root@localhost ntp]# systemctl status ntpd

● ntpd.service - Network Time Service

Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2024-04-08 21:36:18 CST; 3min 42s ago

Process: 9129 ExecStart=/usr/sbin/ntpd -u ntp:ntp $OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 9130 (ntpd)

CGroup: /system.slice/ntpd.service

└─9130 /usr/sbin/ntpd -u ntp:ntp -g

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listen and drop on 0 v4wildcard 0.0.0.0 UDP 123

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listen and drop on 1 v6wildcard :: UDP 123

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listen normally on 2 lo 127.0.0.1 UDP 123

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listen normally on 3 ens33 192.168.115.12 UDP 123

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listen normally on 4 ens33 fe80::20c:29ff:febe:19d4 UDP 123

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listen normally on 5 lo ::1 UDP 123

Apr 08 21:36:18 k8s-master02 ntpd[9130]: Listening on routing socket on fd #22 for interface updates

Apr 08 21:36:18 k8s-master02 ntpd[9130]: 0.0.0.0 c016 06 restart

Apr 08 21:36:18 k8s-master02 ntpd[9130]: 0.0.0.0 c012 02 freq_set kernel 0.000 PPM

Apr 08 21:36:18 k8s-master02 ntpd[9130]: 0.0.0.0 c011 01 freq_not_set

[root@localhost ntp]#

Check whether ntpd has synchronized with its upstream server:

[root@localhost ntp]# ntpstat

unsynchronised

time server re-starting

polling server every 8 s

[root@localhost ntp]#

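Check the state of the upstream NTP peers. Right after a restart, the peer shows refid .INIT. and stratum 16. It usually takes a few minutes before ntpd selects a peer and marks it with *: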

[root@localhost ntp]# ntpq -p

remote refid st t when poll reach delay offset jitter

=============================================================================

k8s-master01 .INIT. 16 u 32 64 0 0.000 0.000 0.000

[root@localhost ntp]#
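In `ntpq -p` output, the peer ntpd has actually selected for synchronization is marked with `*` in the first column. The `.INIT.` / stratum 16 line above means no peer has been selected yet. The sketch below extracts the selected peer so you can script the check, for example by waiting for it before starting etcd. `ntp_sync_peer` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `ntpq -p` output on stdin and print the selected sync peer (the line marked '*').
# Exits non-zero when no peer has been selected yet.
ntp_sync_peer() {
    awk '/^\*/ { print substr($1, 2); found = 1 } END { exit !found }'
}

# Usage: ntpq -p | ntp_sync_peer || echo "ntpd has not selected a sync peer yet"
```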

6. Enable kernel IP forwarding and bridge filtering

# Create a sysctl config file for bridge filtering and IP forwarding

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.swappiness=0

EOF

# Load the br_netfilter module

modprobe br_netfilter

# Check that it is loaded

[root@localhost ntp]# lsmod | grep br_netfilter

br_netfilter 28672 0

# Apply the settings

sysctl --system
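`modprobe` loads br_netfilter only until the next reboot, and the net.bridge.* keys above exist only while that module is loaded. On a systemd host such as CentOS 7, one common way to load the module at every boot is to add a file under /etc/modules-load.d. The sketch below does this. The helper name and the file name `k8s.conf` are assumptions.

```shell
#!/usr/bin/env bash
# Register br_netfilter with systemd-modules-load so it is loaded at every boot.
# persist_br_netfilter is a hypothetical helper; the directory defaults to the systemd location.
persist_br_netfilter() {
    local dir="${1:-/etc/modules-load.d}"
    mkdir -p "$dir"
    printf '%s\n' br_netfilter > "$dir/k8s.conf"
}

# After a reboot, check with:
#   lsmod | grep br_netfilter
#   sysctl net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward
```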

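7. Install ipset and ipvsadm

ipset is already installed in the OS image used here, so only ipvsadm is installed.

7.1 Download

On a machine with internet access running the same OS, download the packages:

yum -y install --downloadonly --downloaddir /opt/software/ipset_ipvsadm ipset ipvsadm

7.2 Install

Install on every machine.

Upload the ipvsadm RPM to each server.

Install: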

rpm -ivh ipvsadm-1.27-8.el7.x86_64.rpm

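8. Disable swap

# Disable swap immediately (until the next reboot)

swapoff -a

# Disable swap permanently by commenting out the swap entries in /etc/fstab

sed -ri 's/.*swap.*/#&/' /etc/fstab

# Check the result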

grep swap /etc/fstab
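The sed command above comments out every line that contains "swap", and running it a second time turns `#` into `##`. The sketch below is stricter: it only touches lines that are not already commented and that contain swap as a whitespace-separated field. `comment_out_swap` is a hypothetical helper name, and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-format file; safe to run more than once.
comment_out_swap() {
    local file="${1:-/etc/fstab}"
    # Skip lines that are already comments; on the others, prefix '#' when "swap"
    # appears as a whole whitespace-separated field (mount point or fs type).
    sed -ri '/^[[:space:]]*#/!{/[[:space:]]swap([[:space:]]|$)/s/^/#/;}' "$file"
}

# Usage: comment_out_swap /etc/fstab
```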

9. Configure passwordless SSH login

Generate a key pair on one machine:

[root@k8s-master01 ~]# ssh-keygen

Generating public/private rsa key pair.

# Press Enter

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

# Press Enter

Enter passphrase (empty for no passphrase):

# Press Enter

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:wljf8M0hYRw4byXHnwgQpZcVCGA8R0+FmzXfHYpSzE8 root@k8s-master01

The key's randomart image is:

+---[RSA 2048]----+

| .oo=BO*+. |

| .o +=*B*E . |

| .ooo*O==.oo|

| + . *==.++ o|

| . o S.+ o |

| . |

| |

| |

| |

+----[SHA256]-----+

[root@k8s-master01 ~]#

Copy id_rsa.pub to authorized_keys:

[root@k8s-master01 ~]# cd /root/.ssh

[root@k8s-master01 .ssh]# ls

id_rsa id_rsa.pub

# Copy

[root@k8s-master01 .ssh]# cp id_rsa.pub authorized_keys

[root@k8s-master01 .ssh]# ll

total 12

-rw-r--r--. 1 root root 399 Apr 8 22:34 authorized_keys

-rw-------. 1 root root 1766 Apr 8 22:31 id_rsa

-rw-r--r--. 1 root root 399 Apr 8 22:31 id_rsa.pub

[root@k8s-master01 .ssh]#

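Create /root/.ssh on the other machines:

mkdir -p /root/.ssh

Copy the contents of /root/.ssh, including the private key, to the other machines so every node can log in to every other node: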

scp -r /root/.ssh/* 192.168.115.12:/root/.ssh/

scp -r /root/.ssh/* 192.168.115.13:/root/.ssh/

scp -r /root/.ssh/* 192.168.115.101:/root/.ssh/

scp -r /root/.ssh/* 192.168.115.102:/root/.ssh/

Verify passwordless login from each machine:

[root@k8s-node01 ~]# ssh root@192.168.115.11

The authenticity of host '192.168.115.11 (192.168.115.11)' can't be established.

ECDSA key fingerprint is SHA256:DmSlU9aS8ikfAB9IHc6N7HMY/X/Z4qc6QGA0/TrhRo8.

ECDSA key fingerprint is MD5:6d:08:b2:e4:18:d0:78:eb:9a:92:2b:1e:4d:a4:e6:28.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.115.11' (ECDSA) to the list of known hosts.

Last login: Mon Apr 8 22:42:08 2024 from k8s-master03

[root@k8s-master01 ~]# exit

logout

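10. Install the etcd cluster

10.1 Download

Download URL:

https://github.com/coreos/etcd/releases/download/v3.5.11/etcd-v3.5.11-linux-amd64.tar.gz

On each of the three master nodes, extract the archive and move the binaries to /usr/local/bin: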

tar xzvf etcd-v3.5.11-linux-amd64.tar.gz

cd etcd-v3.5.11-linux-amd64/

mv etcd* /usr/local/bin

10.2 Create the systemd unit files

Create an etcd.service unit file on each of the three master nodes.

k8s-master01:

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd \

--name=k8s-master01 \

--data-dir=/var/lib/etcd/default.etcd \

--listen-peer-urls=http://192.168.115.11:2380 \

--listen-client-urls=http://192.168.115.11:2379,http://127.0.0.1:2379 \

--advertise-client-urls=http://192.168.115.11:2379 \

--initial-advertise-peer-urls=http://192.168.115.11:2380 \

--initial-cluster=k8s-master01=http://192.168.115.11:2380,k8s-master02=http://192.168.115.12:2380,k8s-master03=http://192.168.115.13:2380 \

--initial-cluster-token=smartgo \

--initial-cluster-state=new

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

k8s-master02:

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd \

--name=k8s-master02 \

--data-dir=/var/lib/etcd/default.etcd \

--listen-peer-urls=http://192.168.115.12:2380 \

--listen-client-urls=http://192.168.115.12:2379,http://127.0.0.1:2379 \

--advertise-client-urls=http://192.168.115.12:2379 \

--initial-advertise-peer-urls=http://192.168.115.12:2380 \

--initial-cluster=k8s-master01=http://192.168.115.11:2380,k8s-master02=http://192.168.115.12:2380,k8s-master03=http://192.168.115.13:2380 \

--initial-cluster-token=smartgo \

--initial-cluster-state=new

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

k8s-master03:

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd \

--name=k8s-master03 \

--data-dir=/var/lib/etcd/default.etcd \

--listen-peer-urls=http://192.168.115.13:2380 \

--listen-client-urls=http://192.168.115.13:2379,http://127.0.0.1:2379 \

--advertise-client-urls=http://192.168.115.13:2379 \

--initial-advertise-peer-urls=http://192.168.115.13:2380 \

--initial-cluster=k8s-master01=http://192.168.115.11:2380,k8s-master02=http://192.168.115.12:2380,k8s-master03=http://192.168.115.13:2380 \

--initial-cluster-token=smartgo \

--initial-cluster-state=new

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF
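The three unit files differ only in `--name` and the node's own IP. The sketch below generates all of them from one template. `etcd_unit` and `ETCD_CLUSTER` are hypothetical names, and every flag value is copied from the files above.

```shell
#!/usr/bin/env bash
# Print an etcd.service unit for one member of the three-node cluster.
ETCD_CLUSTER="k8s-master01=http://192.168.115.11:2380,k8s-master02=http://192.168.115.12:2380,k8s-master03=http://192.168.115.13:2380"

etcd_unit() {
    local name="$1" ip="$2"
    # Unquoted heredoc: ${name}/${ip} are expanded, and "\\" at line end
    # becomes a single "\" so systemd sees one continued ExecStart line.
    cat <<EOF
[Unit]
Description=Etcd Server
After=network.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd \\
  --name=${name} \\
  --data-dir=/var/lib/etcd/default.etcd \\
  --listen-peer-urls=http://${ip}:2380 \\
  --listen-client-urls=http://${ip}:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls=http://${ip}:2379 \\
  --initial-advertise-peer-urls=http://${ip}:2380 \\
  --initial-cluster=${ETCD_CLUSTER} \\
  --initial-cluster-token=smartgo \\
  --initial-cluster-state=new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Usage on k8s-master02 (adjust name and IP per node):
#   etcd_unit k8s-master02 192.168.115.12 > /usr/lib/systemd/system/etcd.service
```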

10.3 Start

Run on all three master nodes:

systemctl enable --now etcd

Verify that etcd started:

# Check the etcd status

[root@k8s-master01 etcd-v3.5.11-linux-amd64]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2024-04-08 23:23:31 CST; 26s ago

Main PID: 9623 (etcd)

CGroup: /system.slice/etcd.service

└─9623 /usr/local/bin/etcd --name=k8s-master01 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=http://192.168.115.11:2380 --listen-client-urls=http://192.168.115.11:2379,http://127.0.0.1:2379 --advertise-client-urls=http://192.168.115.11:2379 --initial-advertise-...

Apr 08 23:23:33 k8s-master01 etcd[9623]: {"level":"info","ts":"2024-04-08T23:23:33.169835+0800","caller":"rafthttp/stream.go:249","msg":"set message encoder","from":"9dabc06b927824f3","to":"1e93e73748d8f538","stream-type":"stream MsgApp v2"}

Apr 08 23:23:33 k8s-master01 etcd[9623]: {"level":"info","ts":"2024-04-08T23:23:33.169847+0800","caller":"rafthttp/stream.go:274","msg":"established TCP streaming connection with remote peer","stream-writer-type":"stream MsgApp v2","local-member-id":"9dabc06b9...d":"1e93e73748d8f538"}

Apr 08 23:23:33 k8s-master01 etcd[9623]: {"level":"info","ts":"2024-04-08T23:23:33.169911+0800","caller":"rafthttp/stream.go:412","msg":"established TCP streaming connection with remote peer","stream-reader-type":"stream Message","local-member-id":"9dabc06b927...d":"1e93e73748d8f538"}

Apr 08 23:23:35 k8s-master01 etcd[9623]: {"level":"info","ts":"2024-04-08T23:23:35.599089+0800","caller":"etcdserver/server.go:2580","msg":"updating cluster version using v2 API","from":"3.0","to":"3.5"}

Apr 08 23:23:35 k8s-master01 etcd[9623]: {"level":"info","ts":"2024-04-08T23:23:35.61736+0800","caller":"membership/cluster.go:576","msg":"updated cluster version","cluster-id":"7d449573da26fc1a","local-member-id":"9dabc06b927824f3","from":"3.0","to":"3.5"}

Apr 08 23:23:35 k8s-master01 etcd[9623]: {"level":"info","ts":"2024-04-08T23:23:35.617467+0800","caller":"etcdserver/server.go:2599","msg":"cluster version is updated","cluster-version":"3.5"}

Apr 08 23:23:37 k8s-master01 etcd[9623]: {"level":"warn","ts":"2024-04-08T23:23:37.046053+0800","caller":"rafthttp/probing_status.go:82","msg":"prober found high clock drift","round-tripper-name":"ROUND_TRIPPER_RAFT_MESSAGE","remote-peer-id":"1e93e73748d8f538"...","rtt":"14.778195ms"}

Apr 08 23:23:37 k8s-master01 etcd[9623]: {"level":"warn","ts":"2024-04-08T23:23:37.046076+0800","caller":"rafthttp/probing_status.go:82","msg":"prober found high clock drift","round-tripper-name":"ROUND_TRIPPER_RAFT_MESSAGE","remote-peer-id":"9c555681cd4d45b4"…421s","rtt":"172.218µs"}

Apr 08 23:23:37 k8s-master01 etcd[9623]: {"level":"warn","ts":"2024-04-08T23:23:37.0461+0800","caller":"rafthttp/probing_status.go:82","msg":"prober found high clock drift","round-tripper-name":"ROUND_TRIPPER_SNAPSHOT","remote-peer-id":"1e93e73748d8f538","cloc...","rtt":"14.779498ms"}

Apr 08 23:23:37 k8s-master01 etcd[9623]: {"level":"warn","ts":"2024-04-08T23:23:37.046036+0800","caller":"rafthttp/probing_status.go:82","msg":"prober found high clock drift","round-tripper-name":"ROUND_TRIPPER_SNAPSHOT","remote-peer-id":"9c555681cd4d45b4","cl…3306s","rtt":"121.37µs"}

Hint: Some lines were ellipsized, use -l to show in full.

# Check the etcd version

[root@k8s-master01 etcd-v3.5.11-linux-amd64]# etcd --version

etcd Version: 3.5.11

Git SHA: 3b252db4f

Go Version: go1.20.12

Go OS/Arch: linux/amd64

# List the etcd members

[root@k8s-master01 etcd-v3.5.11-linux-amd64]# etcdctl member list

1e93e73748d8f538, started, k8s-master03, http://192.168.115.13:2380, http://192.168.115.13:2379, false

9c555681cd4d45b4, started, k8s-master02, http://192.168.115.12:2380, http://192.168.115.12:2379, false

9dabc06b927824f3, started, k8s-master01, http://192.168.115.11:2380, http://192.168.115.11:2379, false
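`etcdctl member list` only reports cluster membership. To confirm that each member is actually serving, you can query every endpoint with the etcdctl v3 subcommands `endpoint health` and `endpoint status`. The "prober found high clock drift" warnings in the log above mean the members' clocks disagreed. They should clear once ntpd on every node has selected a sync peer (section 5). The sketch below builds the `--endpoints` value. `etcd_endpoints` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Join member IPs into the comma-separated --endpoints value etcdctl expects (client port 2379).
etcd_endpoints() {
    local ip out=""
    for ip in "$@"; do
        out="${out:+$out,}http://${ip}:2379"
    done
    printf '%s\n' "$out"
}

# On any master node:
#   EP=$(etcd_endpoints 192.168.115.11 192.168.115.12 192.168.115.13)
#   etcdctl --endpoints="$EP" endpoint health
#   etcdctl --endpoints="$EP" endpoint status --write-out=table
```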

11. Install docker-ce/cri-dockerd

11.1 Install docker-ce/containerd.io

11.1.1 Download

On a machine with internet access that runs the same OS as the cluster VMs, download the packages and their dependencies.

(1) Switch to the Aliyun mirror

cd /etc/yum.repos.d/

# Back up the default repo files

mkdir bak && mv *.repo bak

# Download the Aliyun yum repo file

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# Clean and rebuild the yum cache

yum clean all && yum makecache

(2) Remove any existing Docker packages

yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

(3) Reinstall the EPEL repository (recommended)

rpm -qa | grep epel

yum remove epel-release

yum -y install epel-release

(4) Install yum-utils

yum install -y yum-utils

(5) Add the Docker CE repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(6) Refresh the package index

yum makecache fast

(7) Download the RPM packages

# List the available docker-ce versions; 25.0.5 is used here

yum list docker-ce --showduplicates | sort -r

# List the available containerd.io versions; 1.6.31 is used here

yum list containerd.io --showduplicates | sort -r

# Download the packages into /tmp/docker

mkdir -p /tmp/docker

yum install -y docker-ce-25.0.5 docker-ce-cli-25.0.5 containerd.io-1.6.31 --downloadonly --downloaddir=/tmp/docker

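11.1.2 Install

Install on every machine.

Upload the downloaded packages to each VM and install them:

rpm -ivh *.rpm

Start Docker:

systemctl daemon-reload # reload systemd unit files

systemctl start docker # start Docker

systemctl enable docker.service # start Docker at boot

Check the Docker version: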

[root@k8s-master01 docker-ce]# docker --version

Docker version 25.0.5, build 5dc9bcc

[root@k8s-master01 docker-ce]#

11.2 Install cri-dockerd

Kubernetes v1.23 and earlier could use Docker Engine through a built-in Kubernetes component called dockershim. dockershim was removed in Kubernetes v1.24; a third-party replacement, cri-dockerd, is available instead. The cri-dockerd adapter lets Kubernetes use Docker Engine through the Container Runtime Interface (CRI).

11.2.1 Download

Download page: [https://github.com/Mirantis/cri-dockerd/releases](https://github.com/Mirantis/cri-dockerd/releases)

Pick the package that matches your architecture and OS; this guide uses: [https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.8/cri-dockerd-0.3.8-3.el7.x86_64.rpm](https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.8/cri-dockerd-0.3.8-3.el7.x86_64.rpm)

11.2.2 Install

Install on every machine.

Upload the RPM package to each machine.

# Install

rpm -ivh cri-dockerd-0.3.8-3.el7.x86_64.rpm

# Edit the ExecStart line in /usr/lib/systemd/system/cri-docker.service to specify the Pod infrastructure (pause) container image

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.k8s.io/pause:3.9 --container-runtime-endpoint fd://
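Instead of editing the unit file by hand on every machine, you can rewrite the ExecStart line with sed. The sketch below does this and assumes the unit contains exactly one line that starts with `ExecStart=`. `set_pause_image` is a hypothetical helper name. Run `systemctl daemon-reload` afterwards so systemd picks up the change.

```shell
#!/usr/bin/env bash
# Replace the ExecStart line of a cri-docker.service unit so it pins the pause image.
set_pause_image() {
    local unit="$1" image="$2"
    # '#' is used as the sed delimiter because the image reference contains '/' and ':'.
    sed -i "s#^ExecStart=.*#ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=${image} --container-runtime-endpoint fd://#" "$unit"
}

# Usage:
#   set_pause_image /usr/lib/systemd/system/cri-docker.service registry.k8s.io/pause:3.9
```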

Reload systemd (the unit file was just edited), then start cri-dockerd:

systemctl daemon-reload

systemctl enable --now cri-docker

Check the status:

[root@k8s-master01 cri-dockerd]# systemctl status cri-docker

● cri-docker.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/cri-docker.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2024-04-09 03:20:54 CST; 16s ago

Docs: https://docs.mirantis.com

Main PID: 11598 (cri-dockerd)

Tasks: 8

Memory: 14.3M

CGroup: /system.slice/cri-docker.service

└─11598 /usr/bin/cri-dockerd --pod-infra-container-image=registry.k8s.io/pause:3.9 --container-runtime-endpoint fd://

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Hairpin mode is set to none"

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="The binary conntrack is not installed, this can cause failures in network connection cleanup."

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="The binary conntrack is not installed, this can cause failures in network connection cleanup."

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Loaded network plugin cni"

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Docker cri networking managed by network plugin cni"

Apr 09 03:20:54 k8s-master01 systemd[1]: Started CRI Interface for Docker Application Container Engine.

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Setting cgroupDriver systemd"

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Docker cri received runtime config &RuntimeConfig{NetworkConfig:&NetworkConfig{PodCidr:,},}"

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Starting the GRPC backend for the Docker CRI interface."

Apr 09 03:20:54 k8s-master01 cri-dockerd[11598]: time="2024-04-09T03:20:54+08:00" level=info msg="Start cri-dockerd grpc backend"

[root@k8s-master01 cri-dockerd]#

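12. Install docker-compose

12.1 Download

Download page: https://github.com/docker/compose/releases

This guide uses v2.24.7; the full URL is: https://github.com/docker/compose/releases/download/v2.24.7/docker-compose-linux-x86_64

12.2 Install

Install on every machine.

Upload the binary to each machine.

# Rename the binary and make it executable

mv docker-compose-linux-x86_64 docker-compose

chmod +x docker-compose

# Add its directory to PATH

vi /etc/profile

Append this line at the end:

export PATH=<directory containing docker-compose>:$PATH

# Reload the environment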

source /etc/profile
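Another option is to copy the binary into a directory that is already on root's PATH, such as /usr/local/bin. This avoids editing /etc/profile. The sketch below uses coreutils `install` to copy, rename and set the mode in one step. `install_compose` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Copy a docker-compose binary into a directory on PATH, named docker-compose, mode 0755.
install_compose() {
    local src="$1" dest_dir="${2:-/usr/local/bin}"
    install -m 0755 "$src" "$dest_dir/docker-compose"
}

# Usage: install_compose docker-compose-linux-x86_64 && docker-compose version
```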

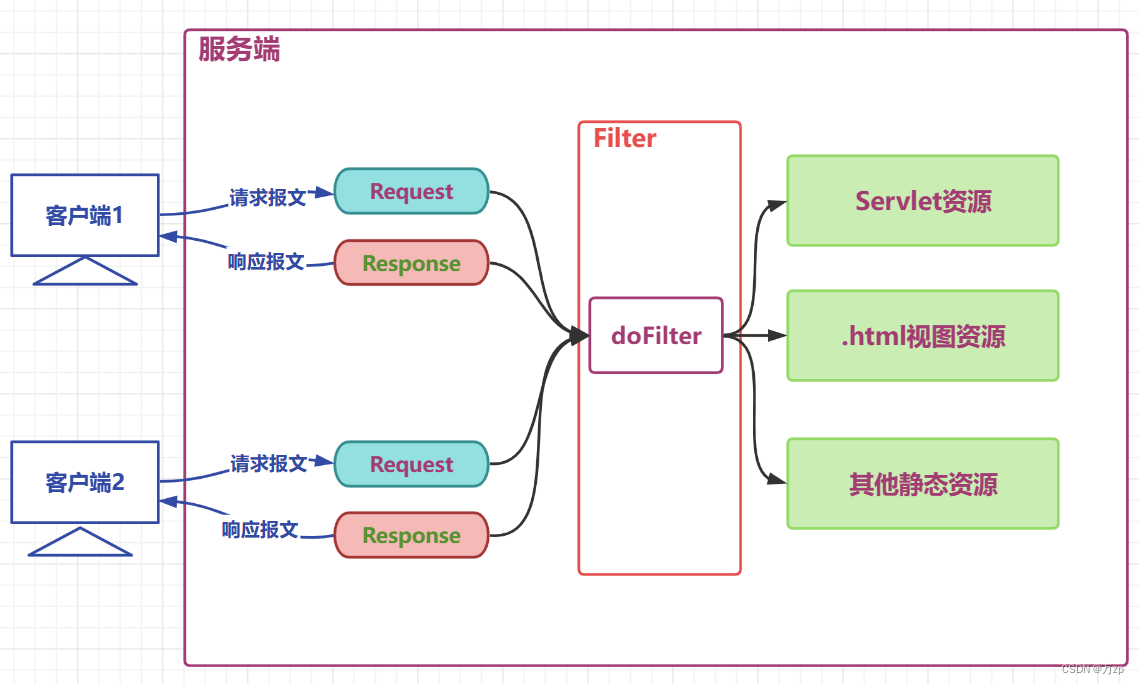

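13. Install nginx + keepalived

keepalived + nginx provide high availability and reverse proxying. To save servers, both are deployed on the master nodes here.

keepalived manages a virtual IP (VIP, 192.168.115.10) that is bound to one of the master nodes at a time, and nginx reverse-proxies to the three master nodes.

When initializing the Kubernetes cluster, use the VIP as the API server address. Once the cluster is installed, kubectl clients connect to VIP:16443, the port nginx listens on, and nginx forwards the traffic to port 6443 on the three master nodes.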

13.1 Install nginx

13.1.1 Download the nginx image

On a machine with internet access:

# Pull the image

docker pull nginx

# Save the image as a tar archive

docker save -o nginx.tar nginx:latest

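13.1.2 Install

Install on the three master nodes.

# Run on all three machines

mkdir -p /opt/software/nginx/{conf,html,cert,logs}

# Run only the matching line on each machine, so each node's index page shows its own IP

echo '192.168.115.11' > /opt/software/nginx/html/index.html # on k8s-master01

echo '192.168.115.12' > /opt/software/nginx/html/index.html # on k8s-master02

echo '192.168.115.13' > /opt/software/nginx/html/index.html # on k8s-master03

Write the nginx configuration file; in the upstream block, set the servers to the IPs of the three master nodes:

vi /opt/software/nginx/conf/nginx.conf

# Content: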

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.115.11:6443;

server 192.168.115.12:6443;

server 192.168.115.13:6443;

}

server {

listen 16443;

proxy_pass k8s-apiserver;

}

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

#gzip on;

include /etc/nginx/conf.d/*.conf;

}

Upload nginx.tar to the three master nodes and load the image:

docker load -i nginx.tar

Deploy nginx with docker-compose (on all three master nodes).

On 192.168.115.11:

# Create the directory

mkdir -p /opt/software/nginx/docker-compose

cd /opt/software/nginx/docker-compose

Create docker-compose.yml:

vi docker-compose.yml

# Content:

version: '3'

services:

  nginx:

    image: nginx:latest

    restart: always

    hostname: nginx

    container_name: nginx

    privileged: true

    ports:

      - 80:80

      - 443:443

      - 16443:16443

    volumes:

      - /usr/share/zoneinfo/Asia/Shanghai:/etc/localtime:ro

      - /opt/software/nginx/conf/nginx.conf:/etc/nginx/nginx.conf # mounted over nginx's main config file, /etc/nginx/nginx.conf

      - /opt/software/nginx/html/:/usr/share/nginx/html/ # default index page

      #- /home/admin/software/docker/nginx/cert/:/etc/nginx/cert

      - /opt/software/nginx/logs/:/var/log/nginx/ # log files

On 192.168.115.12 and 192.168.115.13, repeat the same steps with an identical docker-compose.yml:

mkdir -p /opt/software/nginx/docker-compose

cd /opt/software/nginx/docker-compose

vi docker-compose.yml # same content as on 192.168.115.11

Start nginx on all three master nodes:

# Run in the directory containing docker-compose.yml

docker-compose up -d

Test:

# On each master node, run docker-compose ps in the directory containing docker-compose.yml

[root@k8s-master01 docker-compose]# docker-compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

nginx nginx:latest "/docker-entrypoint.…" nginx 13 minutes ago Up 13 minutes 0.0.0.0:80->80/tcp, :::80->80/tcp, 0.0.0.0:443->443/tcp, :::443->443/tcp

[root@k8s-master01 docker-compose]#

# Test each master node

# On 192.168.115.11

[root@k8s-master01 docker-compose]# curl 127.0.0.1

192.168.115.11

# On 192.168.115.12

[root@k8s-master02 docker-compose]# curl 127.0.0.1

192.168.115.12

# On 192.168.115.13

[root@k8s-master03 docker-compose]# curl 127.0.0.1

192.168.115.13

13.2 Install keepalived

13.2.1 Download keepalived

Download page: https://www.keepalived.org/download.html

Pick a version; the package used here is: https://www.keepalived.org/software/keepalived-2.2.8.tar.gz

13.2.2 Download gcc (skip if already downloaded)

On a machine with internet access:

yum install -y --downloadonly --downloaddir=/opt/software/gcc/ gcc-c++

The RPMs are saved in /opt/software/gcc.

13.2.3 Download openssl

On a machine with internet access, download openssl-devel together with the other build dependencies:

yum -y install --downloadonly --downloaddir=/opt/software/openssl make openssl-devel libnfnetlink-devel libnl3-devel net-snmp-devel

The RPMs are saved in /opt/software/openssl.

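13.2.4 Install gcc

Install on all three master nodes.

Upload the gcc packages to the three master nodes and install them:

rpm -ivh *.rpm

13.2.5 Install openssl

Install on all three master nodes.

Upload the openssl packages to the three master nodes and install them:

rpm -Uvh --force *.rpm

13.2.6 Install keepalived

Install on all three master nodes.

Upload the keepalived tarball to the three master nodes.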

# Extract

tar -zvxf keepalived-2.2.8.tar.gz

cd keepalived-2.2.8

./configure --prefix=/opt/software/keepalived --sysconfdir=/etc

make && make install

Create the health-check script:

vi /etc/keepalived/check_apiserver.sh

# Content:

#!/bin/bash

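# Check whether nginx is running

# If it is not, try to restart the nginx container

if [ "$(ps -ef | grep "nginx: master process"| grep -v grep )" == "" ];then

# Restart nginx

docker restart nginx

sleep 5

# If nginx is still not running, stop keepalived so that the VIP moves to another node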

if [ "$(ps -ef | grep "nginx: master process"| grep -v grep )" == "" ];then

systemctl stop keepalived

fi

fi

# Make it executable

chmod +x /etc/keepalived/check_apiserver.sh

Configure keepalived on each of the three machines (192.168.115.10 is the VIP):

cd /etc/keepalived

cp keepalived.conf.sample keepalived.conf

Edit keepalived.conf on each machine:

192.168.115.11:

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict # commented out: strict VRRP mode is commonly reported to block traffic to the VIP

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh" # health-check script

interval 5 # check interval, in seconds

weight -5 # lower this node's priority by 5 while the check is failing

fall 2

rise 1

}

vrrp_instance VI_1 {

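state MASTER # MASTER on the primary node, BACKUP on the other nodes

interface ens33 # network interface the instance binds to

mcast_src_ip 192.168.115.11 # source address of the VRRP advertisements (this node's IP): k8s-master01: 192.168.115.11, k8s-master02: 192.168.115.12, k8s-master03: 192.168.115.13

virtual_router_id 51 # must be identical on every node of the same instance

priority 100 # the node with the highest priority is elected MASTER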

advert_int 2

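authentication { # authentication settings

auth_type PASS # authentication type: PASS or AH

auth_pass K8SHA_KA_AUTH # shared password

}

virtual_ipaddress { # the VIP; more than one address may be listed

192.168.115.10

}

track_script { # health-check scripts to track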

chk_apiserver

}

}

192.168.115.12:

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh" # health-check script

interval 5 # check interval, in seconds

weight -5 # lower this node's priority by 5 while the check is failing

fall 2

rise 1

}

vrrp_instance VI_1 {

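state BACKUP # MASTER on the primary node, BACKUP on the other nodes

interface ens33 # network interface the instance binds to

mcast_src_ip 192.168.115.12 # source address of the VRRP advertisements (this node's IP): k8s-master01: 192.168.115.11, k8s-master02: 192.168.115.12, k8s-master03: 192.168.115.13

virtual_router_id 51 # must be identical on every node of the same instance

priority 100 # the node with the highest priority is elected MASTER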

advert_int 2

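authentication { # authentication settings

auth_type PASS # authentication type: PASS or AH

auth_pass K8SHA_KA_AUTH # shared password

}

virtual_ipaddress { # the VIP; more than one address may be listed

192.168.115.10

}

track_script { # health-check scripts to track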

chk_apiserver

}

}

192.168.115.13:

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh" # health-check script

interval 5 # check interval, in seconds

weight -5 # lower this node's priority by 5 while the check is failing

fall 2

rise 1

}

vrrp_instance VI_1 {

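state BACKUP # MASTER on the primary node, BACKUP on the other nodes

interface ens33 # network interface the instance binds to

mcast_src_ip 192.168.115.13 # source address of the VRRP advertisements (this node's IP): k8s-master01: 192.168.115.11, k8s-master02: 192.168.115.12, k8s-master03: 192.168.115.13

virtual_router_id 51 # must be identical on every node of the same instance

priority 100 # the node with the highest priority is elected MASTER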

advert_int 2

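authentication { # authentication settings

auth_type PASS # authentication type: PASS or AH

auth_pass K8SHA_KA_AUTH # shared password

}

virtual_ipaddress { # the VIP; more than one address may be listed

192.168.115.10

}

track_script { # health-check scripts to track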

chk_apiserver

}

}
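The three files above differ only in state and mcast_src_ip. As a minimal sketch (not part of the original procedure), a bash function can render the file for each node so the copies cannot drift apart. The function name render_keepalived_conf is hypothetical, the sample notification_email/smtp lines are left out, and priority is an argument so you can keep 100 on every node as above or give each node its own value.

```bash
#!/usr/bin/env bash
# Sketch: print keepalived.conf for one master node (hypothetical helper).
# Values mirror the hand-written files above; the sample mail settings are omitted.
# Arguments: STATE NODE_IP PRIORITY [INTERFACE] [VIP]
render_keepalived_conf() {
  local state="$1" node_ip="$2" priority="$3"
  local iface="${4:-ens33}" vip="${5:-192.168.115.10}"
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    mcast_src_ip ${node_ip}
    virtual_router_id 51
    priority ${priority}
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        chk_apiserver
    }
}
EOF
}

# Example on k8s-master01 (as root):
#   render_keepalived_conf MASTER 192.168.115.11 100 > /etc/keepalived/keepalived.conf
```

On k8s-master02 and k8s-master03 the call would use BACKUP and the node's own IP.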

Start keepalived on all three machines:

# Reload the systemd unit files

systemctl daemon-reload

# Enable at boot and start immediately

systemctl enable --now keepalived

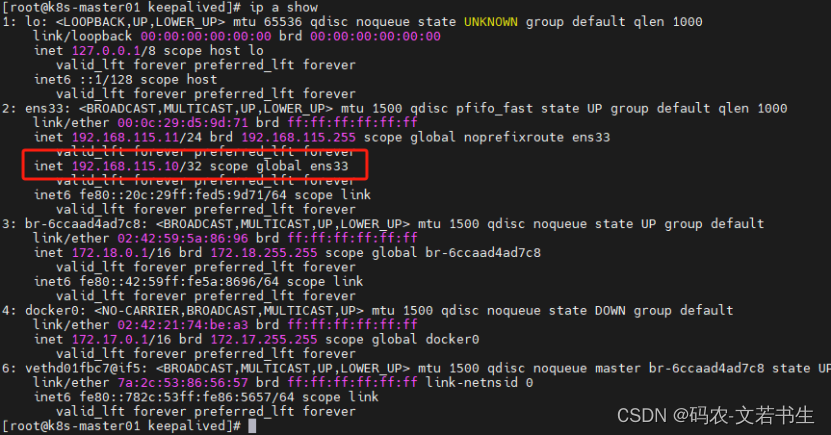

On k8s-master01 (192.168.115.11), ip a show now lists one extra address: the VIP.

# Run on any node

[root@k8s-master01 keepalived]# curl 192.168.115.10

192.168.115.11

To simulate a failure, stop keepalived on the MASTER node: systemctl stop keepalived

Run ip a show on the other two masters: the VIP has moved to one of them.

# Run on any node

[root@k8s-master01 keepalived]# curl 192.168.115.10

192.168.115.12

# Requests to the VIP are now answered by .12 instead of .11, so the keepalived failover works
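Instead of reading ip a show by eye on each master, a small check can report whether the current node holds the VIP. This is a sketch that reads the output of ip -o -4 addr show on stdin, so it can be tried without a live cluster; the helper name holds_vip is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: succeed if the address list on stdin contains the VIP.
# Expects `ip -o -4 addr show` output, where each address appears as
# "inet A.B.C.D/prefix"; the trailing "/" avoids matching 192.168.115.100.
holds_vip() {
  local vip="${1:-192.168.115.10}"
  grep -qF " inet ${vip}/"
}

# Usage on each master:
#   if ip -o -4 addr show | holds_vip; then echo "$(hostname) holds the VIP"; fi
```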

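14、Install Kubernetes

14.1、Download kubelet, kubeadm and kubectl

On a machine with Internet access:

# Configure the package repository

# Kubernetes community yum repo (the URL is tied to a minor version, v1.30 here)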

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/repodata/repomd.xml.key

# exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

Download the RPM packages:

# List the available versions and pick one; 1.30.0-150500.1.1 is used here

yum list kubeadm.x86_64 --showduplicates |sort -r

yum list kubelet.x86_64 --showduplicates |sort -r

yum list kubectl.x86_64 --showduplicates |sort -r

# Download only, do not install

yum -y install --downloadonly --downloaddir=/opt/software/k8s-package kubeadm-1.30.0-150500.1.1 kubelet-1.30.0-150500.1.1 kubectl-1.30.0-150500.1.1

14.2、Install kubelet, kubeadm and kubectl

Run on every machine.

Upload the packages to each machine.

# Install

rpm -ivh *.rpm

Set Docker's cgroup driver to systemd:

vi /etc/docker/daemon.json

# Add or modify the following

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

# Restart Docker

systemctl daemon-reload

systemctl restart docker

systemctl status docker

Configure kubelet to use the same cgroup driver as Docker:

# Back up the original file

cp /etc/sysconfig/kubelet{,.bak}

# Edit the kubelet file

vi /etc/sysconfig/kubelet

# Set the following

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
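Because the same edit has to be repeated on every machine, it can also be scripted. A minimal sketch with GNU sed, assuming the file either already has a KUBELET_EXTRA_ARGS= line (which is replaced) or has none (one is appended); the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: set KUBELET_EXTRA_ARGS in /etc/sysconfig/kubelet without an editor.
# Replaces an existing KUBELET_EXTRA_ARGS= line, or appends one if absent,
# so running it twice leaves a single line.
set_kubelet_extra_args() {
  local file="${1:-/etc/sysconfig/kubelet}"
  local value='KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"'
  if grep -q '^KUBELET_EXTRA_ARGS=' "$file"; then
    sed -i "s|^KUBELET_EXTRA_ARGS=.*|${value}|" "$file"   # GNU sed, in place
  else
    echo "$value" >> "$file"
  fi
}

# Usage (as root, after the backup above): set_kubelet_extra_args
```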

Enable kubelet at boot (it keeps restarting until kubeadm init or kubeadm join has run, which is expected):

systemctl enable kubelet

14.3、Install tab completion (optional)

Download on a machine with Internet access (already done):

yum install -y --downloadonly --downloaddir=/opt/software/command-tab/ bash-completion

Install:

rpm -ivh bash-completion-2.1-8.el7.noarch.rpm

source /usr/share/bash-completion/bash_completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

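14.4、Download the images Kubernetes depends on

Download them on a machine with Internet access that already has Docker installed.

List the images required by Kubernetes 1.30: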

[root@k8s-master01 ~]# kubeadm config images list

registry.k8s.io/kube-apiserver:v1.30.0

registry.k8s.io/kube-controller-manager:v1.30.0

registry.k8s.io/kube-scheduler:v1.30.0

registry.k8s.io/kube-proxy:v1.30.0

registry.k8s.io/coredns/coredns:v1.11.1

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.12-0

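etcd does not need to be downloaded: it was installed earlier as an external cluster and is not run from an image here.

registry.k8s.io is usually unreachable from mainland China without a proxy, so the Alibaba Cloud mirror is used instead: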

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

docker pull registry.aliyuncs.com/google_containers/coredns:1.11.1

docker pull registry.aliyuncs.com/google_containers/pause:3.9

Save the images as tar archives for offline use:

docker save -o kube-apiserver-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

docker save -o kube-controller-manager-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

docker save -o kube-scheduler-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

docker save -o kube-proxy-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

docker save -o coredns-1.11.1.tar registry.aliyuncs.com/google_containers/coredns:1.11.1

docker save -o pause-3.9.tar registry.aliyuncs.com/google_containers/pause:3.9
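The pull and save commands above follow a fixed pattern, so they can be generated from the kubeadm config images list output. This is a sketch, assuming the Alibaba Cloud google_containers mirror and the tag conventions used above (coredns tagged 1.11.1 on the mirror, without the leading v); the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: generate docker pull/save commands for the Aliyun mirror from
# `kubeadm config images list` output. Helper names are hypothetical.
MIRROR="registry.aliyuncs.com/google_containers"

# Map an upstream image reference to the mirror reference used above.
to_mirror_ref() {
  local ref="$1" name tag
  name="${ref##*/}"     # last path component, e.g. coredns:v1.11.1
  tag="${name##*:}"     # v1.11.1
  name="${name%%:*}"    # coredns
  if [ "$name" = "coredns" ]; then
    tag="${tag#v}"      # the mirror tag used above is 1.11.1, without the v
  fi
  echo "${MIRROR}/${name}:${tag}"
}

# Read image references on stdin and print pull/save commands.
# etcd is skipped because this cluster uses the external etcd from section 10.
print_pull_save_cmds() {
  local ref mirror_ref archive
  while read -r ref; do
    [ -z "$ref" ] && continue
    case "$ref" in */etcd:*) continue ;; esac
    mirror_ref="$(to_mirror_ref "$ref")"
    archive="${mirror_ref##*/}"       # e.g. kube-proxy:v1.30.0
    archive="${archive/:/-}.tar"      # e.g. kube-proxy-v1.30.0.tar
    echo "docker pull ${mirror_ref}"
    echo "docker save -o ${archive} ${mirror_ref}"
  done
}

# Review the generated script before running it:
#   kubeadm config images list | print_pull_save_cmds > pull-images.sh
```

Printing the commands instead of running them keeps the step reviewable, which matters because mirror tags can differ from upstream ones.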

14.5、Install a Docker registry and related configuration

14.5.1、Download docker-registry

On a machine with Internet access and Docker installed:

# Pull the image

docker pull docker.io/registry

# Save it as a tar archive for offline use

docker save -o docker-registry.tar docker.io/registry

14.5.2、Install docker-registry

Upload the docker-registry archive to one machine; k8s-master01 is used here.

# Load the image

docker load -i docker-registry.tar

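# Run the registry: host port 81 maps to the registry's port 5000, and image data is stored in /opt/software/registry-data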

mkdir -p /opt/software/registry-data

docker run -d --name registry --restart=always -v /opt/software/registry-data:/var/lib/registry -p 81:5000 docker.io/registry

Check that it is running:

[root@k8s-master01 docker-registry]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

72b1ee0dd35d registry "/entrypoint.sh /etc…" 17 seconds ago Up 15 seconds 0.0.0.0:81->5000/tcp, :::81->5000/tcp registry

14.5.3、Push the Kubernetes images to docker-registry

Upload the Kubernetes image archives to k8s-master01, then load and retag them:

docker load -i kube-apiserver-v1.30.0.tar

docker load -i kube-controller-manager-v1.30.0.tar

docker load -i kube-scheduler-v1.30.0.tar

docker load -i kube-proxy-v1.30.0.tar

docker load -i coredns-1.11.1.tar

docker load -i pause-3.9.tar

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0 192.168.115.11:81/kube-apiserver:v1.30.0

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0 192.168.115.11:81/kube-controller-manager:v1.30.0

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0 192.168.115.11:81/kube-scheduler:v1.30.0

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0 192.168.115.11:81/kube-proxy:v1.30.0

docker tag registry.aliyuncs.com/google_containers/coredns:1.11.1 192.168.115.11:81/coredns:v1.11.1

docker tag registry.aliyuncs.com/google_containers/pause:3.9 192.168.115.11:81/pause:3.9

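On every machine, add the local registry (and the other registries listed) to insecure-registries in /etc/docker/daemon.json. The local registry serves plain HTTP, so Docker refuses to push to or pull from it otherwise.

vi /etc/docker/daemon.json

Add the following entry, separated from the existing exec-opts entry by a comma: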

"insecure-registries":["192.168.115.11:81", "quay.io", "k8s.gcr.io", "gcr.io"]

The complete file then looks like this (shown on k8s-master02):

[root@k8s-master02 ~]# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"insecure-registries":["192.168.115.11:81", "quay.io", "k8s.gcr.io", "gcr.io"]

}

# Restart Docker

systemctl daemon-reload

systemctl restart docker
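Adding the insecure-registries entry by hand is easy to get wrong: a missing comma makes daemon.json invalid JSON, and Docker then fails to start. A minimal sketch that writes the complete file in one step, assuming nothing else in daemon.json needs to be preserved (it overwrites the file); the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: write the complete daemon.json used in this guide.
# Overwrites the target file; merge by hand if it holds other settings.
write_docker_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  cat > "$file" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "insecure-registries": ["192.168.115.11:81", "quay.io", "k8s.gcr.io", "gcr.io"]
}
EOF
}

# Usage (as root):
#   write_docker_daemon_json && systemctl daemon-reload && systemctl restart docker
```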

On k8s-master01, push the images to the registry:

docker push 192.168.115.11:81/kube-apiserver:v1.30.0

docker push 192.168.115.11:81/kube-controller-manager:v1.30.0

docker push 192.168.115.11:81/kube-scheduler:v1.30.0

docker push 192.168.115.11:81/kube-proxy:v1.30.0

docker push 192.168.115.11:81/coredns:v1.11.1

docker push 192.168.115.11:81/pause:3.9
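The tag and push commands above can likewise be derived in one place. A sketch that prints them for a list of loaded mirror images; it only handles the flat Kubernetes image names (not calico/...), and the helper names are hypothetical. The coredns rule restores the leading v so the result matches the manual tag above (192.168.115.11:81/coredns:v1.11.1), which should be the name kubeadm looks for when imageRepository is set to the local registry.

```bash
#!/usr/bin/env bash
# Sketch: print docker tag/push commands that copy loaded mirror images
# into the local registry. Helper names are hypothetical.
REGISTRY="192.168.115.11:81"

to_local_ref() {
  local ref="$1" name tag
  name="${ref##*/}"; tag="${name##*:}"; name="${name%%:*}"
  # Re-add the leading v to the coredns tag, matching the manual tag above.
  if [ "$name" = "coredns" ] && [ "${tag#v}" = "$tag" ]; then
    tag="v${tag}"
  fi
  echo "${REGISTRY}/${name}:${tag}"
}

# Read image references on stdin; print one tag and one push per image.
print_tag_push_cmds() {
  local ref local_ref
  while read -r ref; do
    [ -z "$ref" ] && continue
    local_ref="$(to_local_ref "$ref")"
    echo "docker tag ${ref} ${local_ref}"
    echo "docker push ${local_ref}"
  done
}

# Example (review the output before piping it to sh):
#   docker images --format '{{.Repository}}:{{.Tag}}' \
#     | grep '^registry.aliyuncs.com/google_containers/' | print_tag_push_cmds
```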

14.5.4、Point cri-dockerd's pause image at docker-registry

Run on every machine.

vi /usr/lib/systemd/system/cri-docker.service

# In the ExecStart line, change --pod-infra-container-image=registry.k8s.io/pause:3.9 to --pod-infra-container-image=192.168.115.11:81/pause:3.9

# Restart cri-dockerd

systemctl daemon-reload

systemctl restart cri-docker
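The same change can be made non-interactively, which is handy when repeating it on five machines. A sketch with GNU sed, assuming the ExecStart line already carries a --pod-infra-container-image=... flag as described above; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: replace the value of --pod-infra-container-image in the
# cri-docker unit file (GNU sed, in place). Hypothetical helper.
set_pause_image() {
  local unit="${1:-/usr/lib/systemd/system/cri-docker.service}"
  local image="${2:-192.168.115.11:81/pause:3.9}"
  # Replace everything after "=" up to the next whitespace.
  sed -i -E "s#--pod-infra-container-image=[^[:space:]]+#--pod-infra-container-image=${image}#" "$unit"
}

# Usage (as root):
#   set_pause_image && systemctl daemon-reload && systemctl restart cri-docker
```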

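14.6、Install Kubernetes

14.6.1、Install k8s-master01

On the first master node, k8s-master01:

Generate the kubeadm-config.yaml configuration file

# Print the default InitConfiguration and ClusterConfiguration together with the default KubeletConfiguration, and save them as a starting point

kubeadm config print init-defaults --component-configs KubeletConfiguration > kubeadm-config.yaml

# --component-configs only accepts component configs (KubeProxyConfiguration, KubeletConfiguration); InitConfiguration and ClusterConfiguration are always included in the init-defaults output

# To also print the default KubeProxyConfiguration:

kubeadm config print init-defaults --component-configs KubeProxyConfiguration,KubeletConfiguration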

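Edit kubeadm-config.yaml so that it matches the file below. The changes and additions are:

(1) set advertiseAddress to the IP of k8s-master01;

(2) add or modify the nodeRegistration settings (criSocket must point at cri-dockerd);

(3) add certSANs containing the keepalived VIP;

(4) replace the etcd section with the endpoints of the external etcd cluster;

(5) set imageRepository to the local registry;

(6) add controlPlaneEndpoint, set to VIP:16443;

(7) append a KubeProxyConfiguration with mode: ipvs.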

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.115.11

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/cri-dockerd.sock

imagePullPolicy: IfNotPresent

name: k8s-master01

taints: null

---

apiServer:

certSANs:

- 192.168.115.10

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

external:

endpoints:

- http://192.168.115.11:2379

- http://192.168.115.12:2379

- http://192.168.115.13:2379

imageRepository: 192.168.115.11:81

kind: ClusterConfiguration

kubernetesVersion: 1.30.0

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/12

controlPlaneEndpoint: "192.168.115.10:16443"

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

containerRuntimeEndpoint: ""

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMaximumGCAge: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging:

flushFrequency: 0

options:

json:

infoBufferSize: "0"

text:

infoBufferSize: "0"

verbosity: 0

memorySwap: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

Run on k8s-master01 (--v=9 turns on very verbose logging; drop it for normal output):

kubeadm init --config kubeadm-config.yaml --upload-certs --v=9

When it succeeds, kubeadm prints output like the following:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.115.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3c85f66540e67437ba4db122a736ba3aafb53443961be2605fbc0f9900196ef0 \

--control-plane --certificate-key 3e9843a94c319853455ff67515b84345066363395622438f8a06d10ca75b81b8

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.115.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3c85f66540e67437ba4db122a736ba3aafb53443961be2605fbc0f9900196ef0

Save both kubeadm join commands for the following steps.

Then run the three commands suggested in the output:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
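If the init output is saved, for example with kubeadm init --config kubeadm-config.yaml --upload-certs | tee kubeadm-init.log (the log file name is only an example), the two join commands can be extracted instead of copied by hand. A sketch in awk that joins the backslash-continued lines into one command per output line; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: extract the "kubeadm join" commands from saved kubeadm init output,
# joining backslash-continued lines so each command ends up on one line.
extract_join_cmds() {
  awk '
    /^[ \t]*kubeadm join / { in_cmd = 1; cmd = "" }
    in_cmd {
      line = $0
      sub(/^[ \t]+/, "", line)              # drop indentation
      cont = sub(/[ \t]*\\$/, "", line)     # 1 if the line ended with a backslash
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; in_cmd = 0 }
    }
  '
}

# Usage:
#   extract_join_cmds < kubeadm-init.log
# Append the CRI socket needed by the join steps that follow:
#   extract_join_cmds < kubeadm-init.log | sed 's|$| --cri-socket unix:///var/run/cri-dockerd.sock|'
```

The first line printed is the control-plane join (it contains --control-plane), the second the worker join.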

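14.6.2、Install k8s-master02/03

On k8s-master02 and k8s-master03, join the cluster as control-plane nodes.

Take the command with --control-plane printed by kubeadm init on k8s-master01 and append --cri-socket unix:///var/run/cri-dockerd.sock (kubeadm will not pick a runtime by itself when both the containerd and the cri-dockerd sockets are present):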

kubeadm join 192.168.115.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3c85f66540e67437ba4db122a736ba3aafb53443961be2605fbc0f9900196ef0 \

--control-plane --certificate-key 3e9843a94c319853455ff67515b84345066363395622438f8a06d10ca75b81b8 \

--cri-socket unix:///var/run/cri-dockerd.sock

Afterwards, run the same three commands:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

14.6.3、Install k8s-node01/02

On k8s-node01 and k8s-node02, join the cluster as worker nodes.

Take the command without --control-plane printed by kubeadm init on k8s-master01 and append --cri-socket unix:///var/run/cri-dockerd.sock:

kubeadm join 192.168.115.10:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3c85f66540e67437ba4db122a736ba3aafb53443961be2605fbc0f9900196ef0 \

--cri-socket unix:///var/run/cri-dockerd.sock

All five nodes have now joined. Check their status from any master node; they stay NotReady until a pod network add-on is installed:

[root@k8s-master01 kubeadm-config]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 34m v1.30.0

k8s-master02 NotReady control-plane 27m v1.30.0

k8s-master03 NotReady control-plane 18m v1.30.0

k8s-node01 NotReady <none> 10m v1.30.0

k8s-node02 NotReady <none> 10m v1.30.0

[root@k8s-master01 kubeadm-config]#

14.7、Install the Calico network add-on

14.7.1、Download the images

Pull the images on a machine with Internet access:

docker pull docker.io/calico/node:v3.27.3

docker pull docker.io/calico/kube-controllers:v3.27.3

docker pull docker.io/calico/cni:v3.27.3

docker save -o calico-node.tar docker.io/calico/node:v3.27.3

docker save -o calico-kube-controllers.tar docker.io/calico/kube-controllers:v3.27.3

docker save -o calico-cni.tar docker.io/calico/cni:v3.27.3

# If pulling is slow or fails, download release-v3.27.3.tgz from https://github.com/projectcalico/calico/releases/tag/v3.27.3, extract it, and take the three images from its image directory

Download calico.yaml: https://github.com/projectcalico/calico/blob/v3.27.3/manifests/calico.yaml

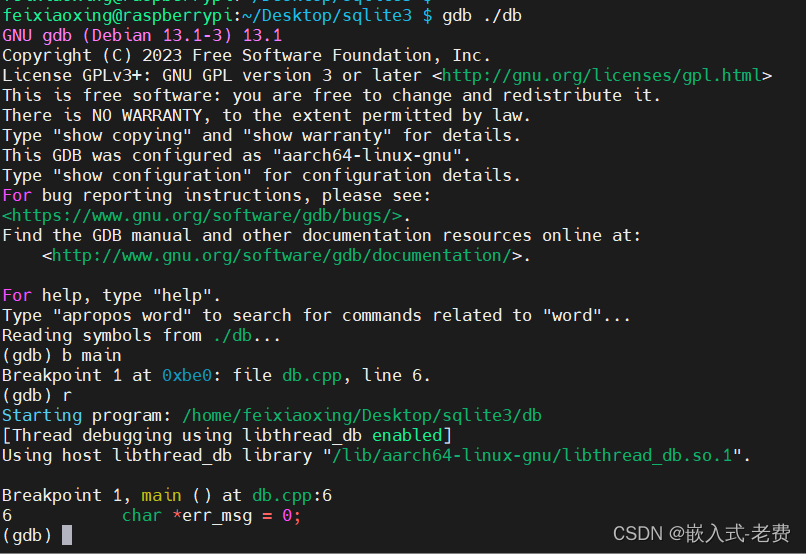

14.7.2、Install

Upload the Calico image archives and calico.yaml to k8s-master01, then load, retag and push the images:

docker load -i calico-cni.tar

docker load -i calico-kube-controllers.tar

docker load -i calico-node.tar

docker tag calico/node:v3.27.3 192.168.115.11:81/calico/node:v3.27.3

docker tag calico/kube-controllers:v3.27.3 192.168.115.11:81/calico/kube-controllers:v3.27.3

docker tag docker.io/calico/cni:v3.27.3 192.168.115.11:81/calico/cni:v3.27.3

docker push 192.168.115.11:81/calico/node:v3.27.3

docker push 192.168.115.11:81/calico/kube-controllers:v3.27.3

docker push 192.168.115.11:81/calico/cni:v3.27.3

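Upload calico.yaml to a master node.

Change every image reference in it to the three images in the local registry: 192.168.115.11:81/calico/node:v3.27.3, 192.168.115.11:81/calico/kube-controllers:v3.27.3 and 192.168.115.11:81/calico/cni:v3.27.3.

Set the pod network: uncomment CALICO_IPV4POOL_CIDR (it is commented out in the stock manifest) and make its value match podSubnet in kubeadm-config.yaml: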

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"
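Both calico.yaml edits can be applied with sed instead of an editor. This is a sketch under two assumptions worth checking against your copy of the manifest: images are referenced as image: docker.io/calico/NAME:TAG, and the CIDR entry ships commented out as "# - name: CALICO_IPV4POOL_CIDR" followed by "#   value: ...". The function name is hypothetical; inspect the result before running kubectl apply.

```bash
#!/usr/bin/env bash
# Sketch: apply both calico.yaml edits with GNU sed (in place).
patch_calico_manifest() {
  local manifest="${1:-calico.yaml}"
  local registry="${2:-192.168.115.11:81}"
  local cidr="${3:-10.244.0.0/16}"

  # 1) Point every docker.io/calico/... image at the local registry.
  sed -i "s#image: docker.io/calico/#image: ${registry}/calico/#" "$manifest"

  # 2) Uncomment the CALICO_IPV4POOL_CIDR entry and the line after it,
  #    then set that value line to the pod subnet. Removing "# " keeps the
  #    YAML indentation of both lines correct.
  sed -i -E "/^[[:space:]]*#?[[:space:]]*- name: CALICO_IPV4POOL_CIDR/{
s/^([[:space:]]*)# /\1/
n
s/^([[:space:]]*)# /\1/
s#value: \".*\"#value: \"${cidr}\"#
}" "$manifest"
}

# Usage: patch_calico_manifest calico.yaml && grep -n -A1 'CALICO_IPV4POOL_CIDR' calico.yaml
```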

Apply the manifest:

kubectl apply -f calico.yaml

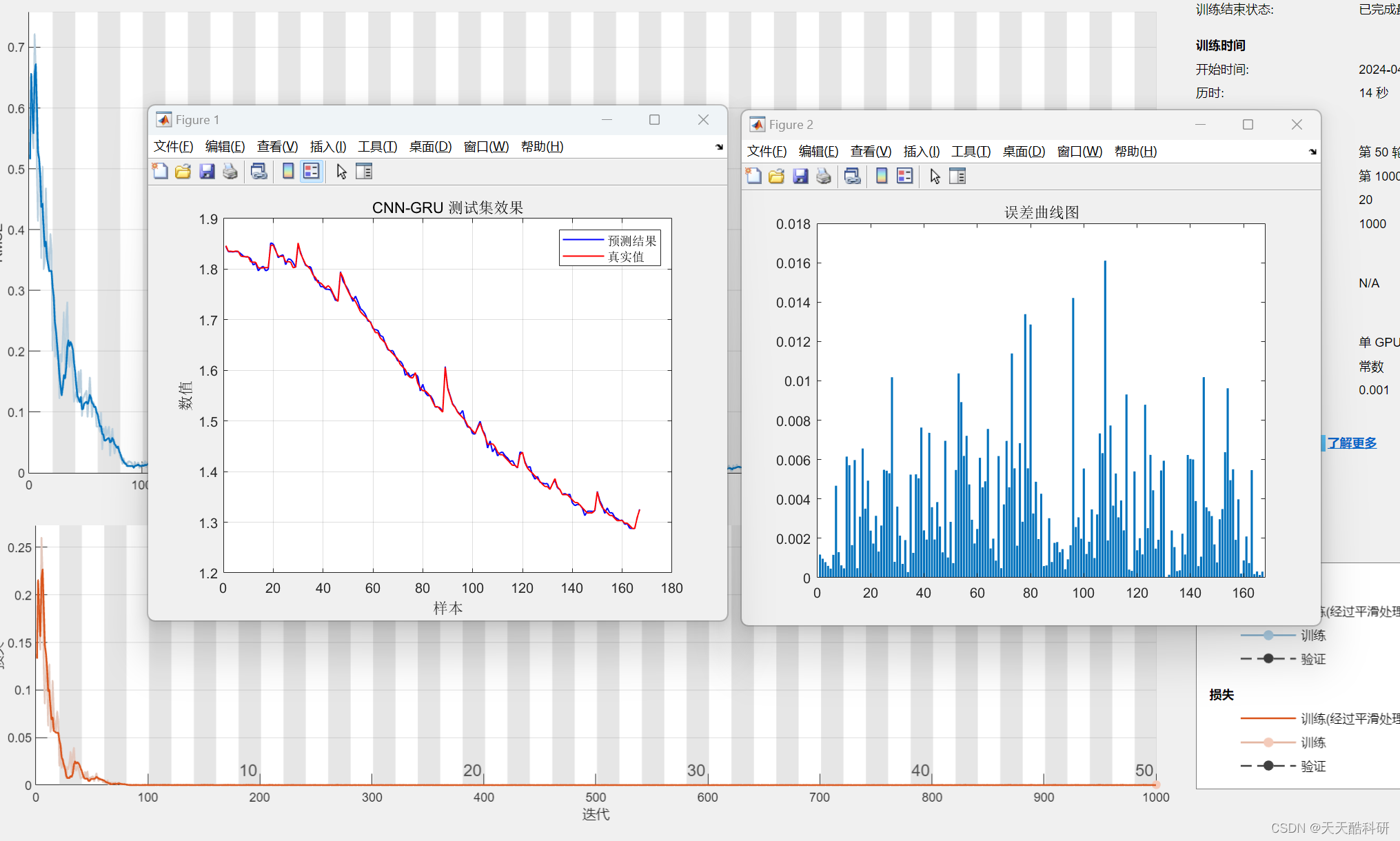

After a few minutes, check the pods; all Calico pods are Running:

[root@k8s-master01 calico]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5f87f7fc98-84wpm 1/1 Running 0 2m55s

calico-node-bxns7 1/1 Running 0 2m55s

calico-node-dpvhb 1/1 Running 0 2m55s

calico-node-gzncb 1/1 Running 0 2m55s

calico-node-j62nt 1/1 Running 0 2m55s

calico-node-np695 1/1 Running 0 2m55s

coredns-7b9565c6c-f865r 1/1 Running 0 104m

coredns-7b9565c6c-g9df5 1/1 Running 0 104m

kube-apiserver-k8s-master01 1/1 Running 10 105m

kube-apiserver-k8s-master02 1/1 Running 0 98m

kube-apiserver-k8s-master03 1/1 Running 0 89m

kube-controller-manager-k8s-master01 1/1 Running 4 105m

kube-controller-manager-k8s-master02 1/1 Running 0 98m

kube-controller-manager-k8s-master03 1/1 Running 0 89m

kube-proxy-2j9t2 1/1 Running 0 89m

kube-proxy-4l48v 1/1 Running 0 81m

kube-proxy-cf4mb 1/1 Running 0 104m

kube-proxy-gs2ph 1/1 Running 0 81m

kube-proxy-lgtxw 1/1 Running 0 98m

kube-scheduler-k8s-master01 1/1 Running 4 105m

kube-scheduler-k8s-master02 1/1 Running 0 98m

kube-scheduler-k8s-master03 1/1 Running 0 89m
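Scanning this list by eye gets tedious as the cluster grows. A sketch that reads kubectl get pods output on stdin and fails if any pod is not Running with all of its containers ready; the function name is hypothetical, and pods that legitimately finish (Completed jobs) would be reported too.

```bash
#!/usr/bin/env bash
# Sketch: fail if any pod in `kubectl get pods` output (on stdin) is not
# Running with every container ready; prints the offending pod names.
all_pods_ready() {
  awk 'NR > 1 {
         split($2, r, "/")   # READY column, e.g. 1/1
         if ($3 != "Running" || r[1] != r[2]) { print "not ready: " $1; bad = 1 }
       }
       END { exit bad }'
}

# Usage:
#   kubectl get pods -n kube-system | all_pods_ready && echo "kube-system pods are ready"
```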

Check the nodes again; all of them are now Ready:

[root@k8s-master01 calico]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane 106m v1.30.0

k8s-master02 Ready control-plane 99m v1.30.0

k8s-master03 Ready control-plane 90m v1.30.0

k8s-node01 Ready <none> 82m v1.30.0

k8s-node02 Ready <none> 82m v1.30.0

[root@k8s-master01 calico]#
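Rather than re-running kubectl get node until every node turns Ready, the wait can be scripted. A sketch that polls kubectl get nodes; the timeout and interval are arbitrary illustrative defaults, the helper names are hypothetical, and a STATUS such as Ready,SchedulingDisabled counts as not Ready because only an exact "Ready" is accepted.

```bash
#!/usr/bin/env bash
# Sketch: wait until every node reports STATUS exactly "Ready".

# Print the names of nodes that are not Ready, given `kubectl get nodes` output.
nodes_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

wait_for_nodes() {
  local timeout="${1:-600}" interval=10 waited=0 out pending
  while :; do
    out="$(kubectl get nodes 2>/dev/null)" || out=""
    pending="$(printf '%s\n' "$out" | nodes_not_ready)"
    # Succeed only if kubectl answered and no node is pending.
    if [ -n "$out" ] && [ -z "$pending" ]; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "nodes not Ready after ${timeout}s: ${pending:-kubectl unavailable}" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Usage: wait_for_nodes 900 && kubectl get nodes
```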