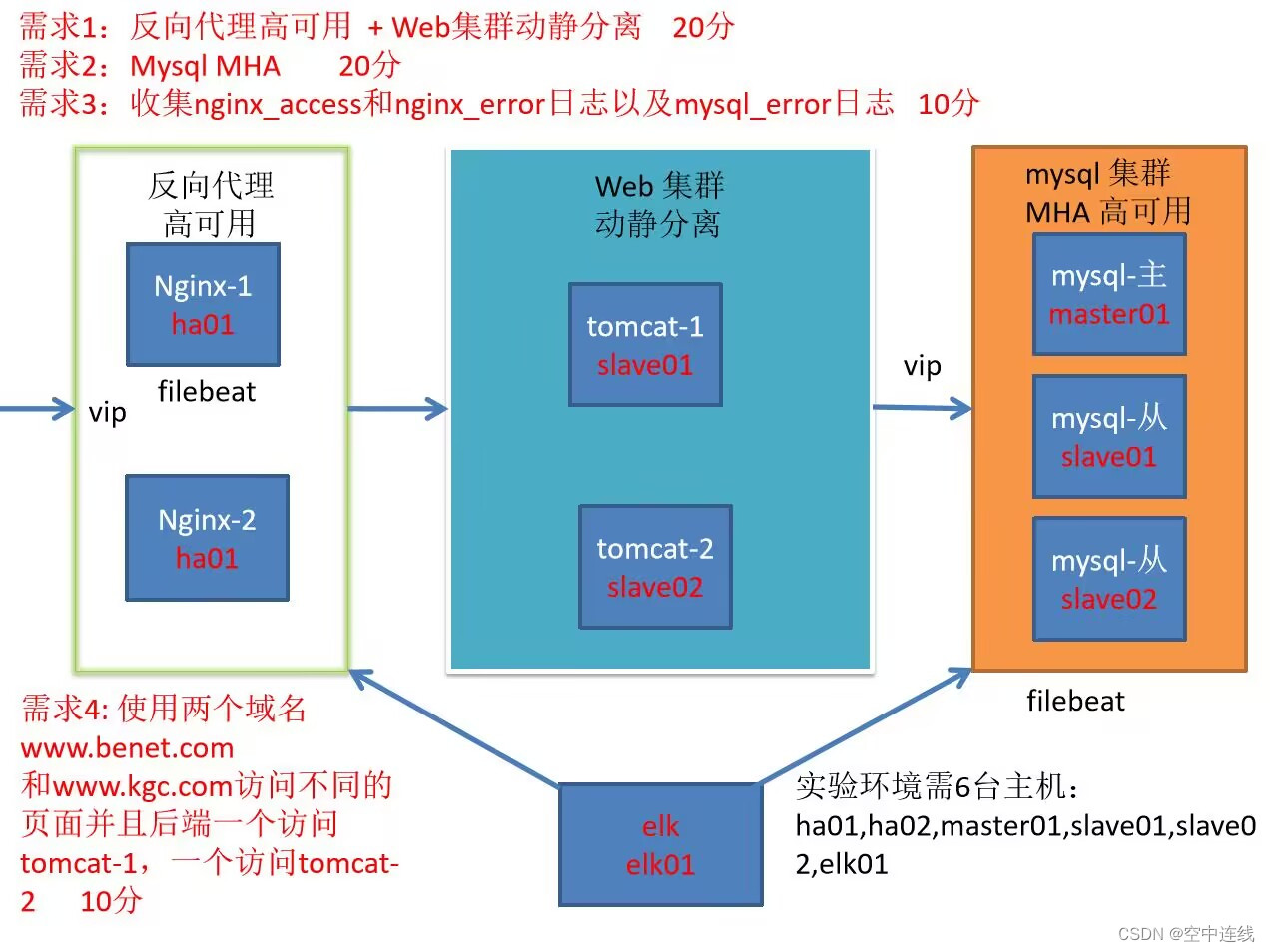

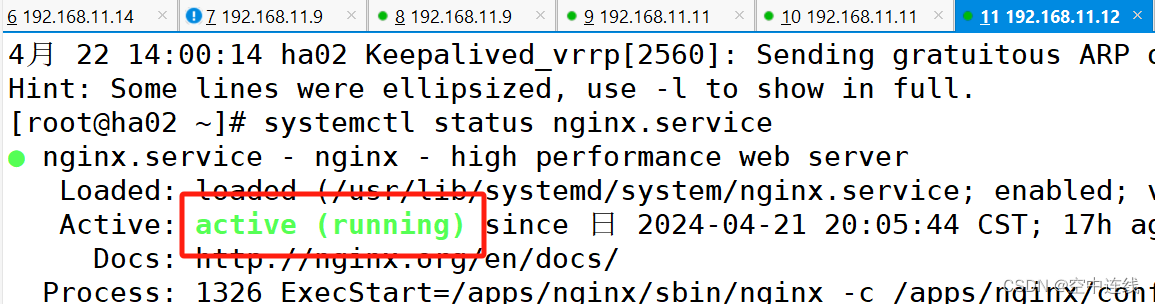

I. Keepalived high availability

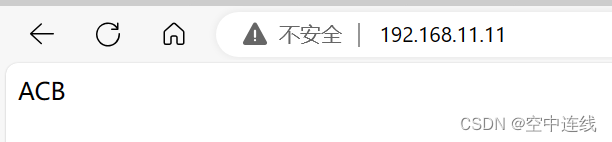

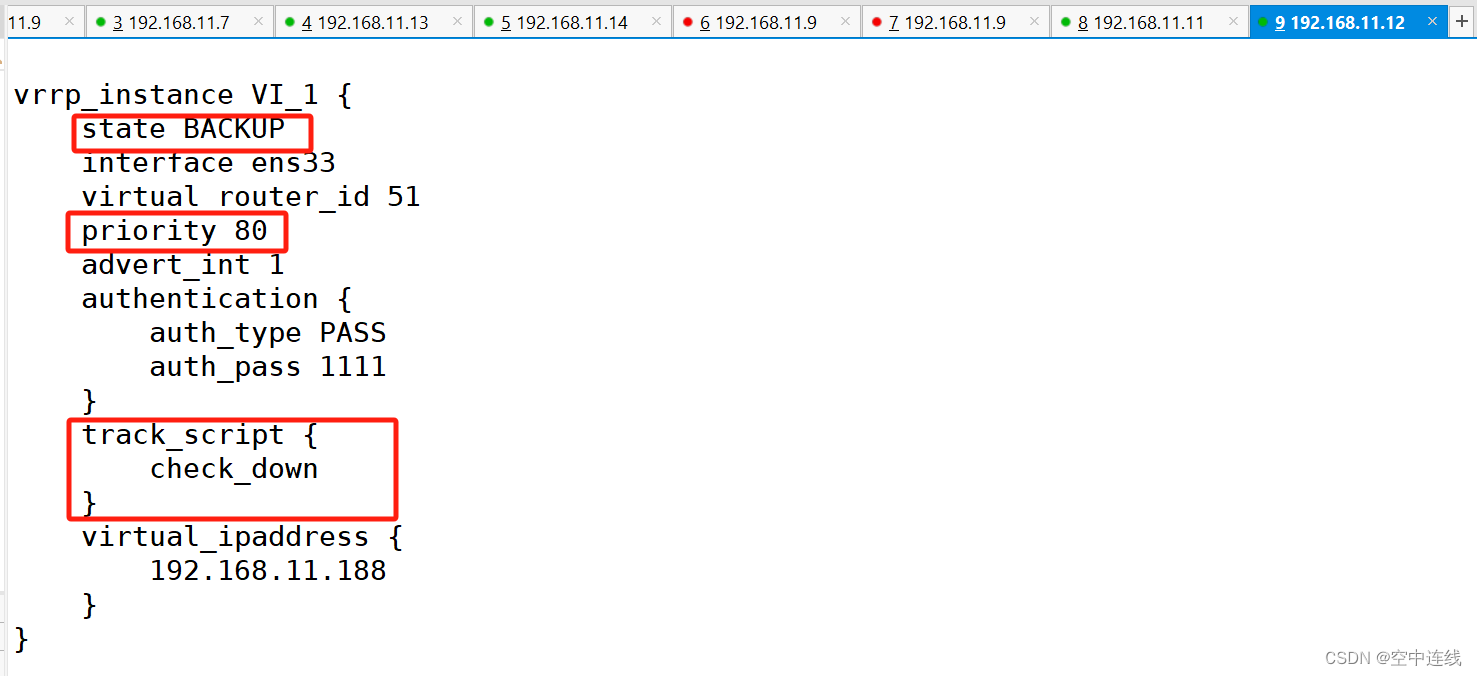

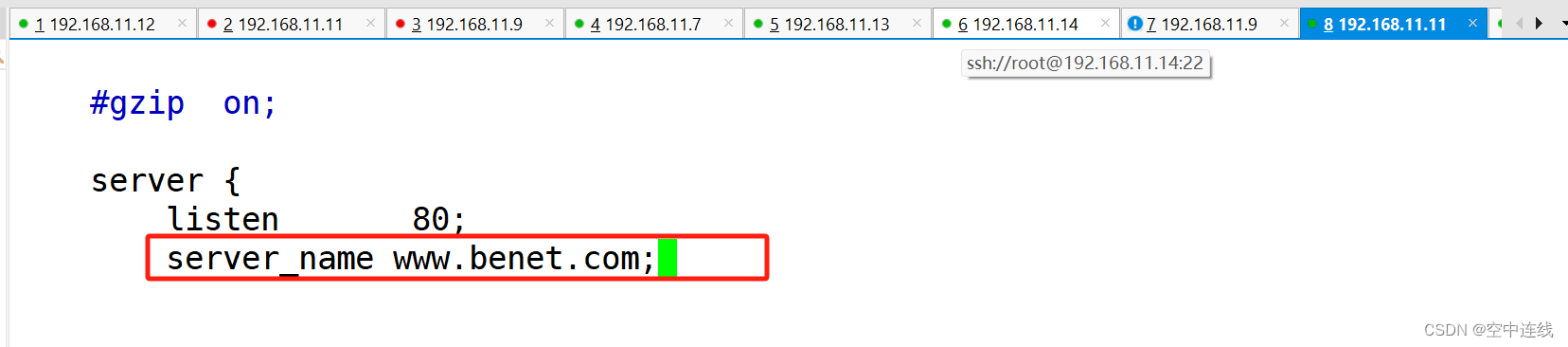

| 192.168.11.11 | nginx keeplived |

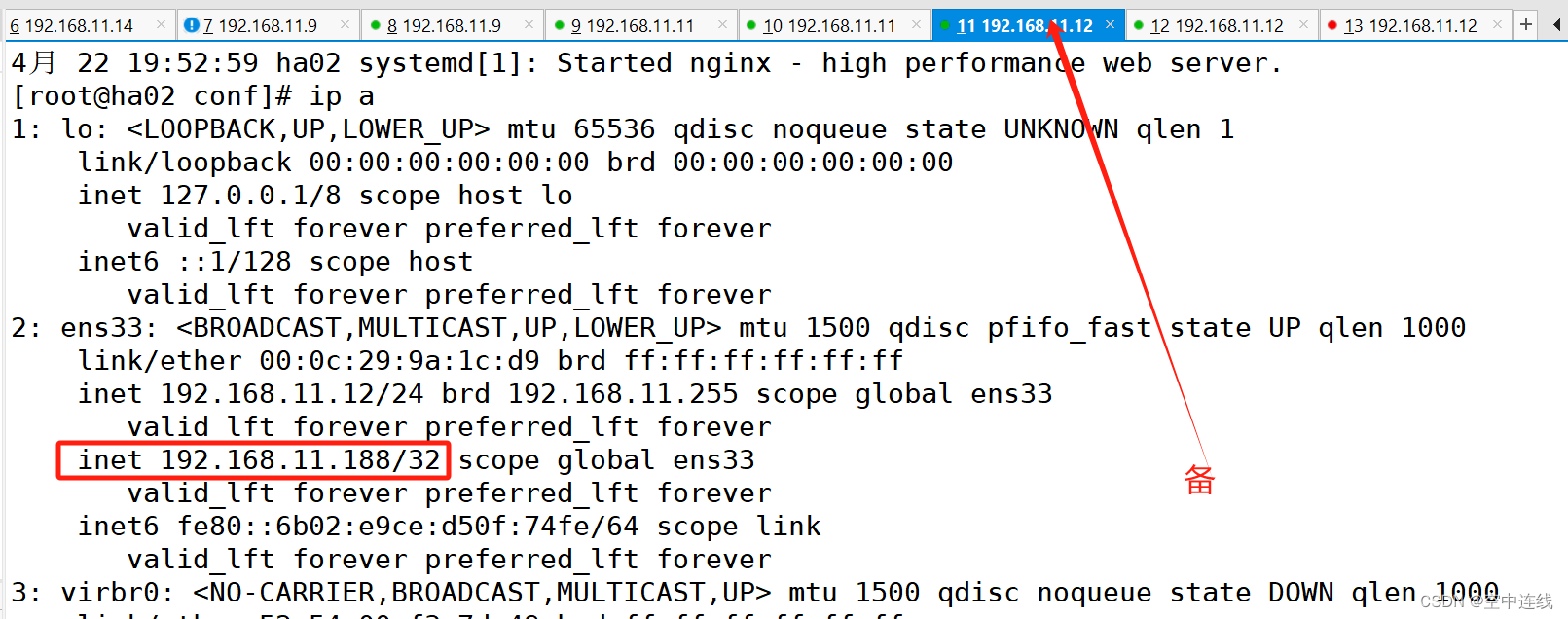

| 192.168.11.12 | nginx keeplived |

Compile and install nginx and keepalived on both servers.

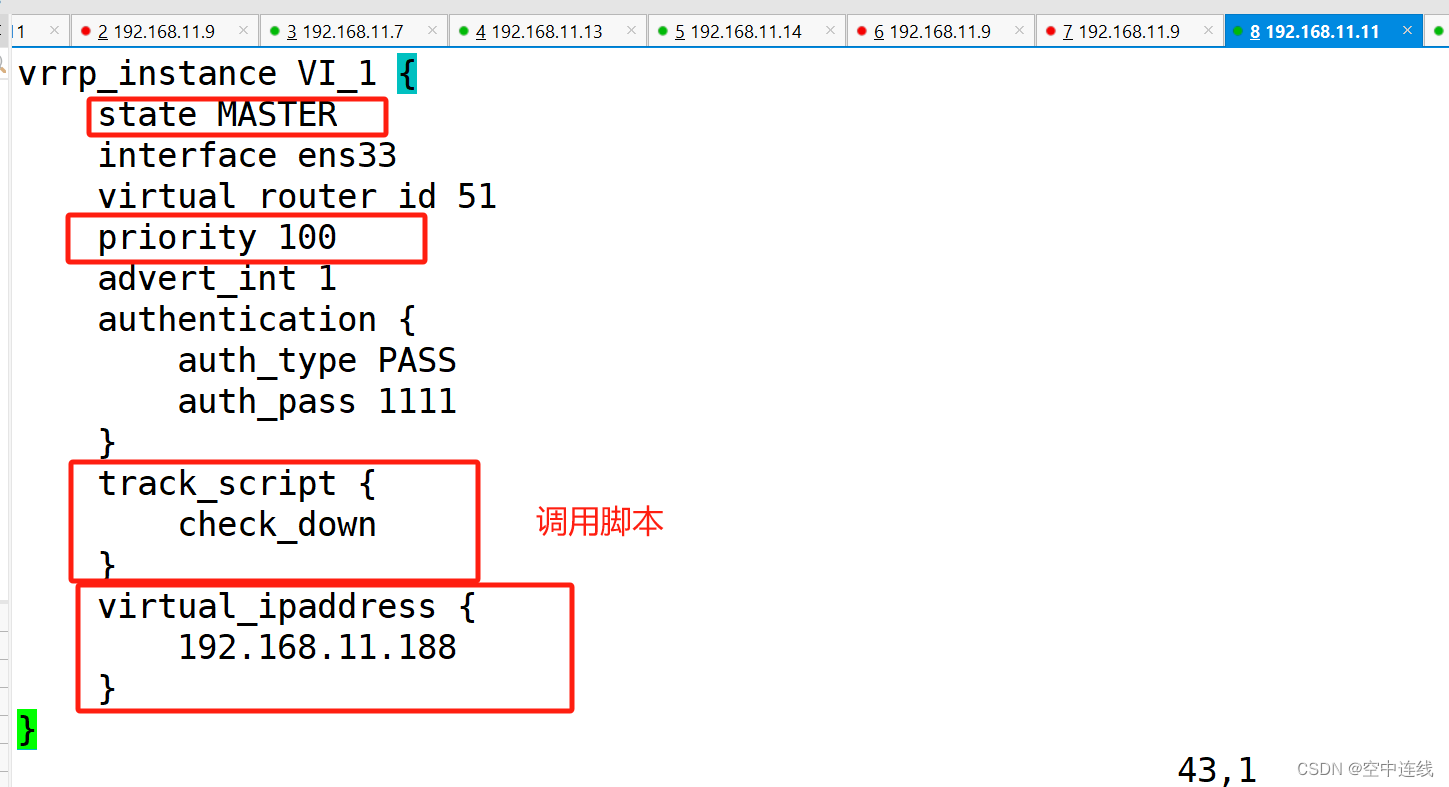

1. Edit the configuration file on the master server:

2. Copy the configuration to the backup server and edit it there:

```
scp keepalived.conf 192.168.11.12:/etc/keepalived/
```

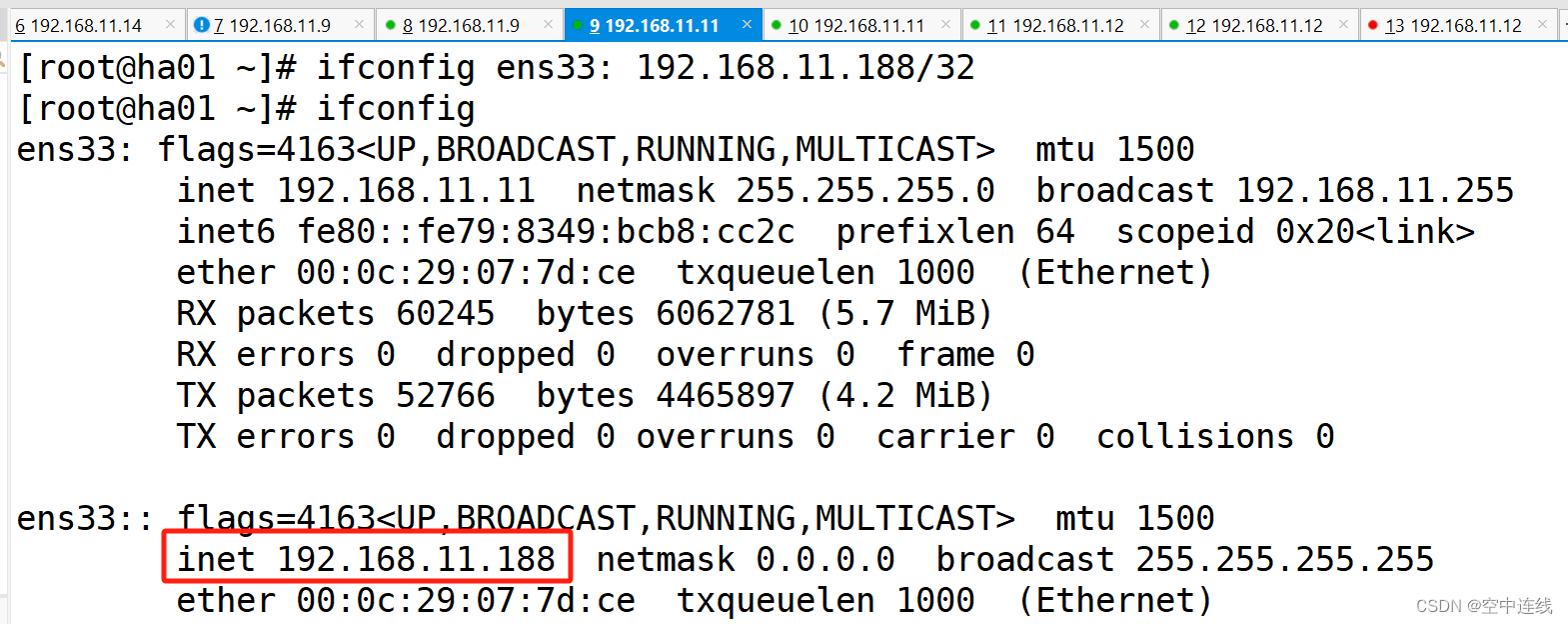
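The keepalived configuration itself only appears in screenshots. As a minimal sketch under stated assumptions, the function below writes a keepalived.conf for either node. The VIP 192.168.11.188 and interface ens33 come from this lab; `router_id`, `virtual_router_id 51`, the `auth_pass` value, the priorities and the script path `/etc/keepalived/ng.sh` are assumptions to adapt.

```bash
# Sketch: write a keepalived.conf for one node of the nginx HA pair.
# Assumed (not taken from the screenshots): router_id, virtual_router_id 51,
# auth_pass 1111 and the health-check script path /etc/keepalived/ng.sh.
write_keepalived_conf() {
    local state=$1 priority=$2 out=$3
    cat > "$out" <<EOF
global_defs {
    router_id NGINX_${state}
}

vrrp_script check_nginx {
    script "/etc/keepalived/ng.sh"
    interval 2
}

vrrp_instance VI_1 {
    state ${state}
    interface ens33
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.11.188
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

Usage: `write_keepalived_conf MASTER 100 /etc/keepalived/keepalived.conf` on 192.168.11.11 and `write_keepalived_conf BACKUP 90 /etc/keepalived/keepalived.conf` on 192.168.11.12. Both nodes must share `virtual_router_id` and `auth_pass`; only `state` and `priority` differ, and the node with the higher priority holds the VIP.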

3. Add the virtual IP on the master server:

```
ifconfig ens33:0 192.168.11.188 netmask 255.255.255.255
# or, equivalently, with a CIDR prefix:
ifconfig ens33:0 192.168.11.188/32
```

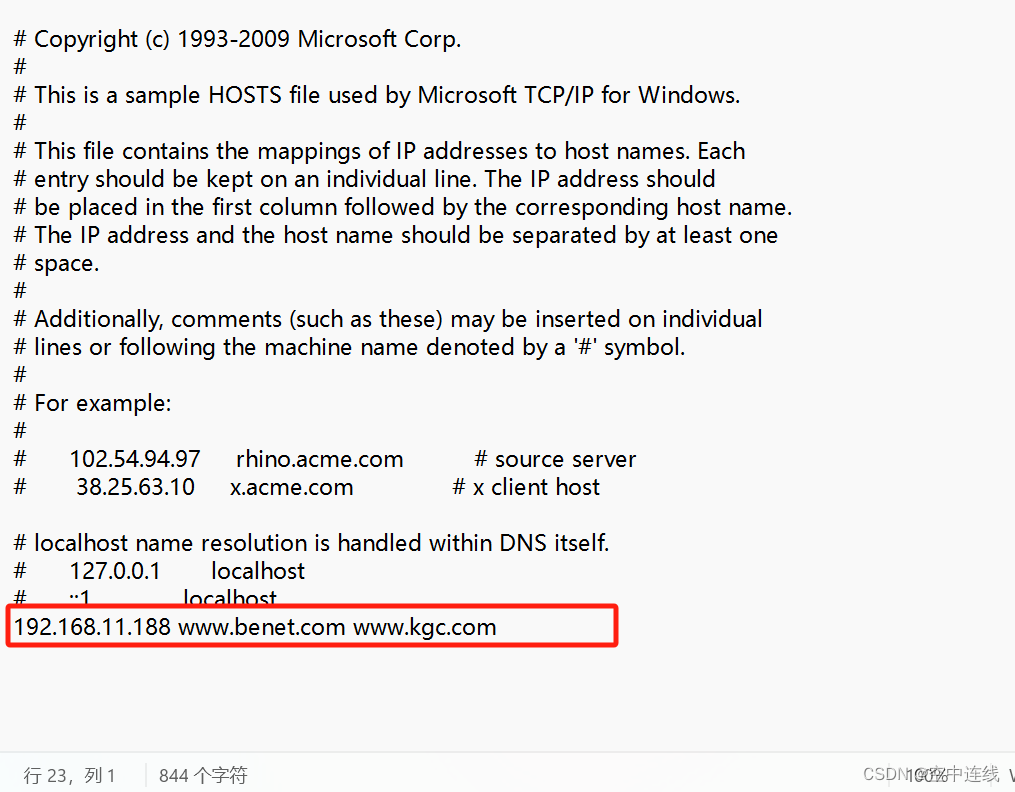

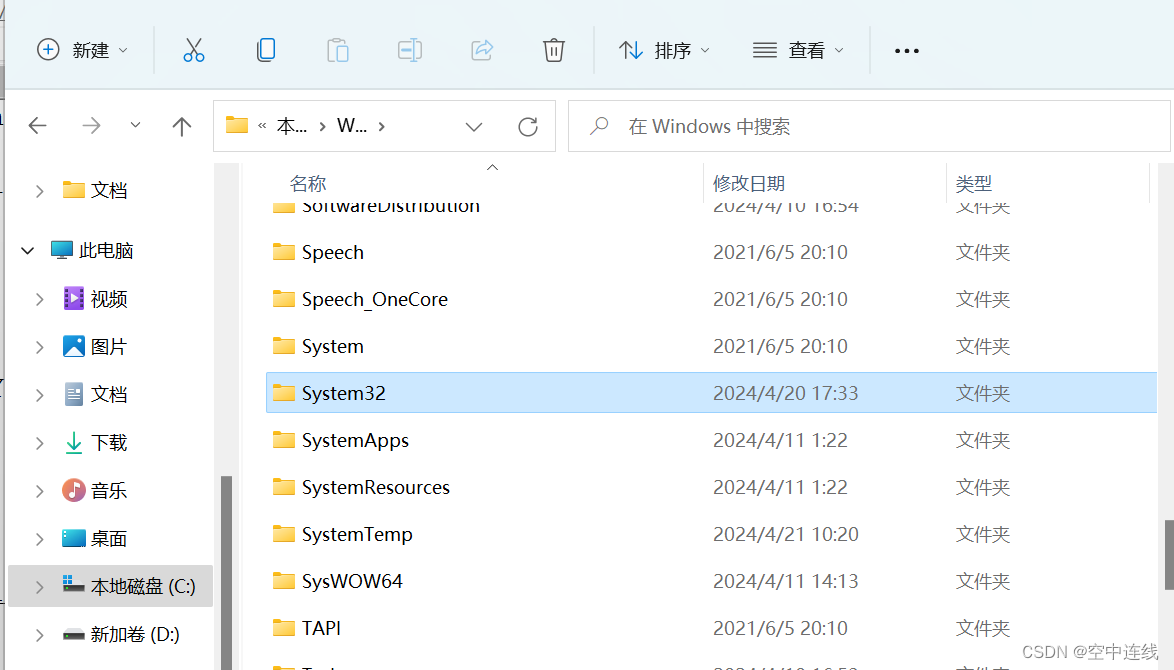

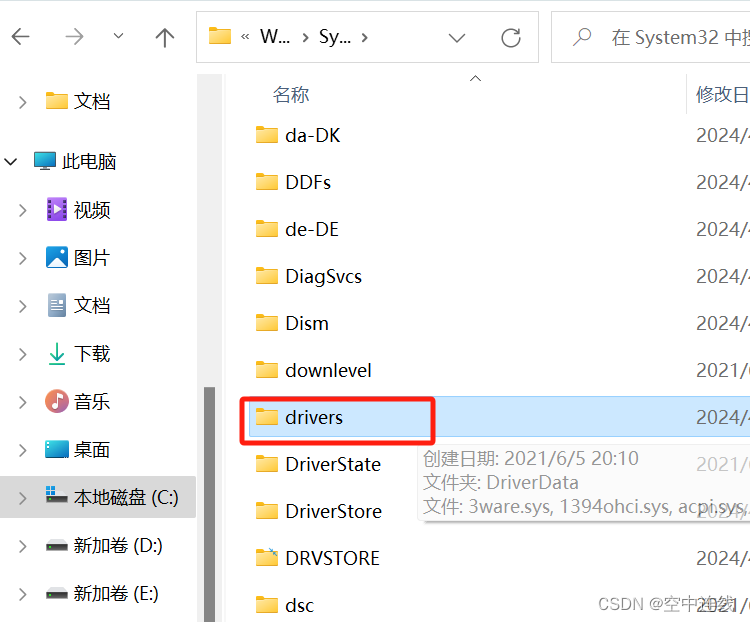

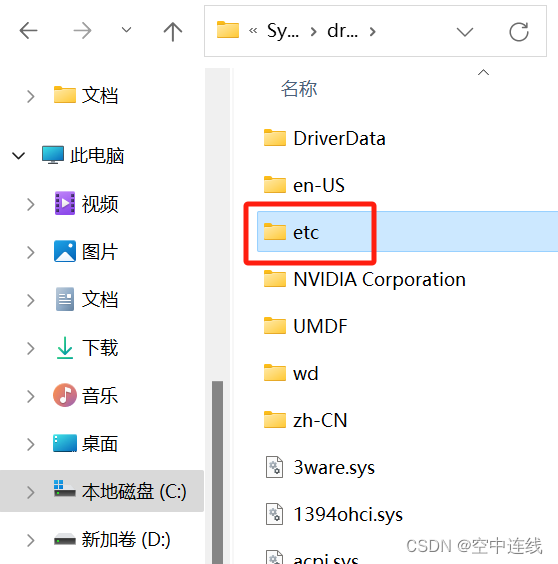

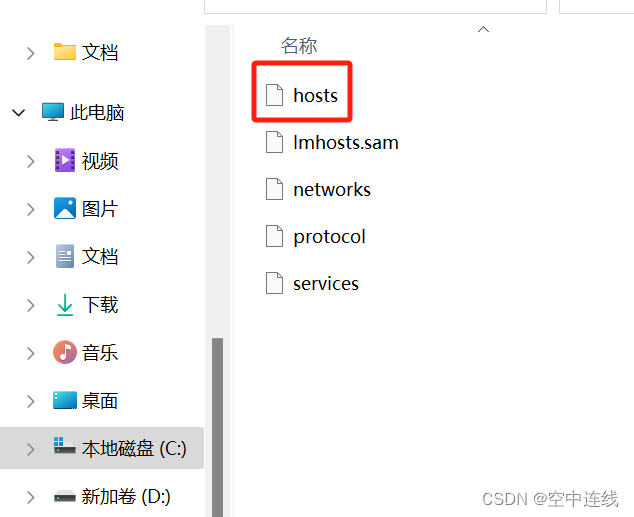

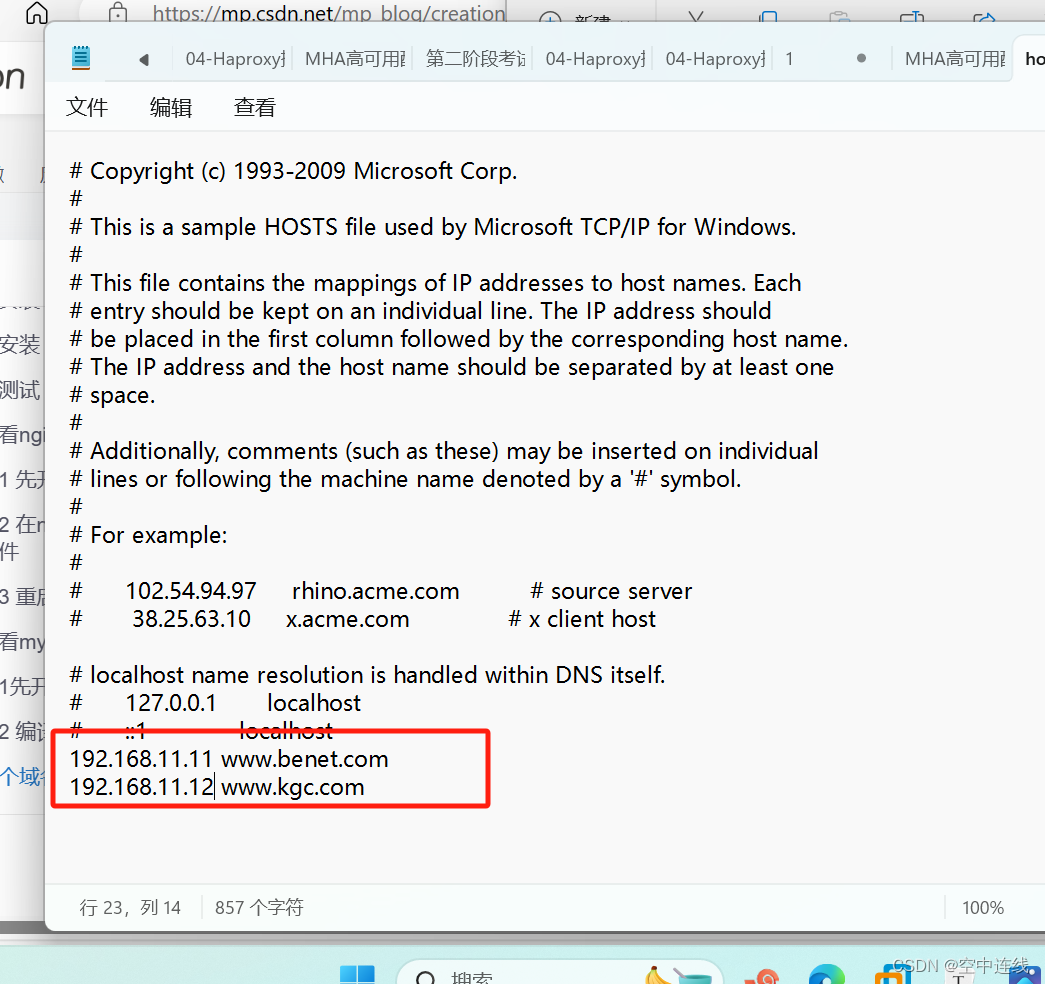

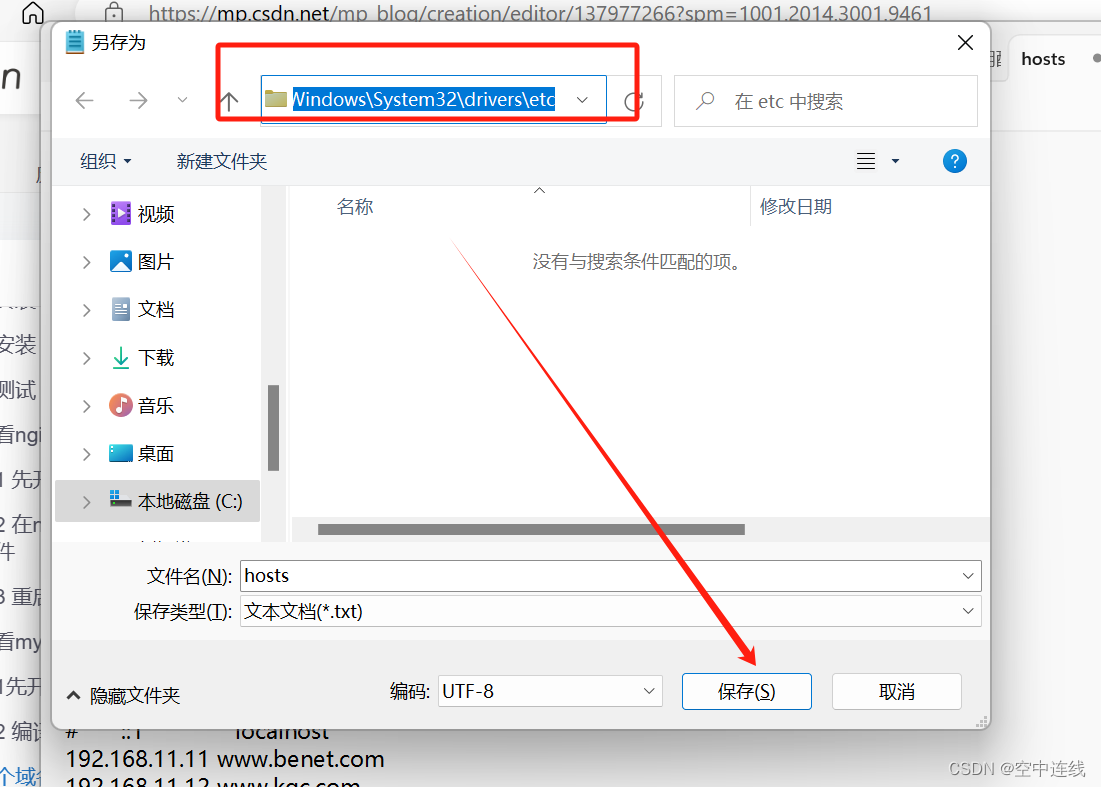

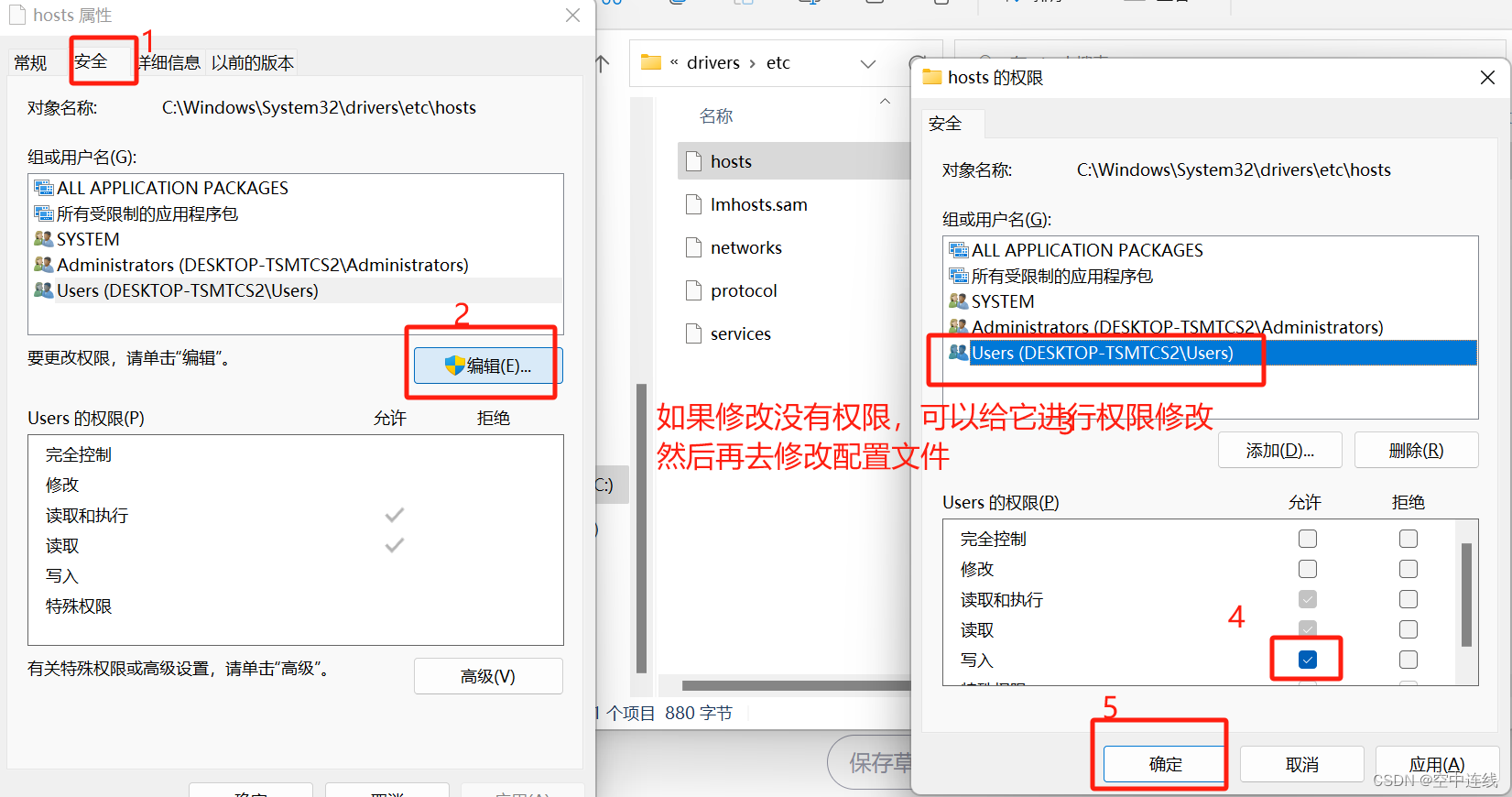
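To check from the command line which node currently holds the VIP, here is a small sketch (the function name `vip_bound` is hypothetical). It reads `ip -4 -o addr show` output on stdin, where the fourth field of each line is `ADDRESS/PREFIX`, so it can also be run on the backup server when testing failover.

```bash
# Sketch: succeed if the given VIP appears in `ip -4 -o addr show` output.
# Reads stdin, so it also works on saved output.
vip_bound() {
    local vip=$1
    # One-line (-o) format: "2: ens33    inet 192.168.11.188/32 scope global ens33:0 ..."
    # Field 4 is ADDRESS/PREFIX; compare only the address part.
    awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Usage: `ip -4 -o addr show dev ens33 | vip_bound 192.168.11.188 && echo "VIP is on this node"`.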

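4. On the physical Windows host, map the VIP to the test domains in the hosts file (C:\Windows\System32\drivers\etc\hosts):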


```
192.168.11.188 www.benet.com www.kgc.com
```
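If the test client is a Linux machine instead of Windows, the same mapping goes into `/etc/hosts`. The sketch below (the function name `add_hosts_entry` is hypothetical) appends the line only if it is not already present, so re-running it does not create duplicates.

```bash
# Sketch: append a hosts-file line only if that exact line is not there yet.
add_hosts_entry() {
    local file=$1; shift
    local line="$*"          # e.g. "192.168.11.188 www.benet.com www.kgc.com"
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Usage: `add_hosts_entry /etc/hosts 192.168.11.188 www.benet.com www.kgc.com`. To test without editing any hosts file, `curl --resolve www.benet.com:80:192.168.11.188 http://www.benet.com/` sends the request straight to the VIP.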

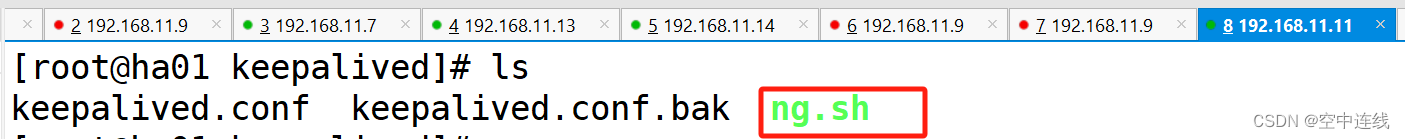

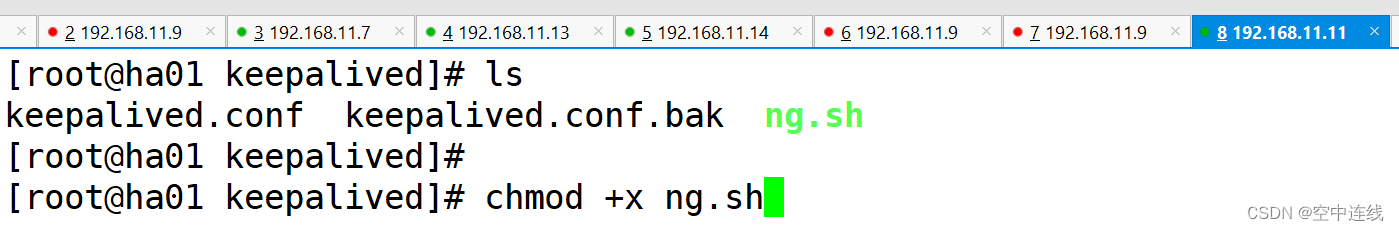

5. Add the nginx health-check script (ng.sh)

6. Make it executable:

```
chmod +x ng.sh
```

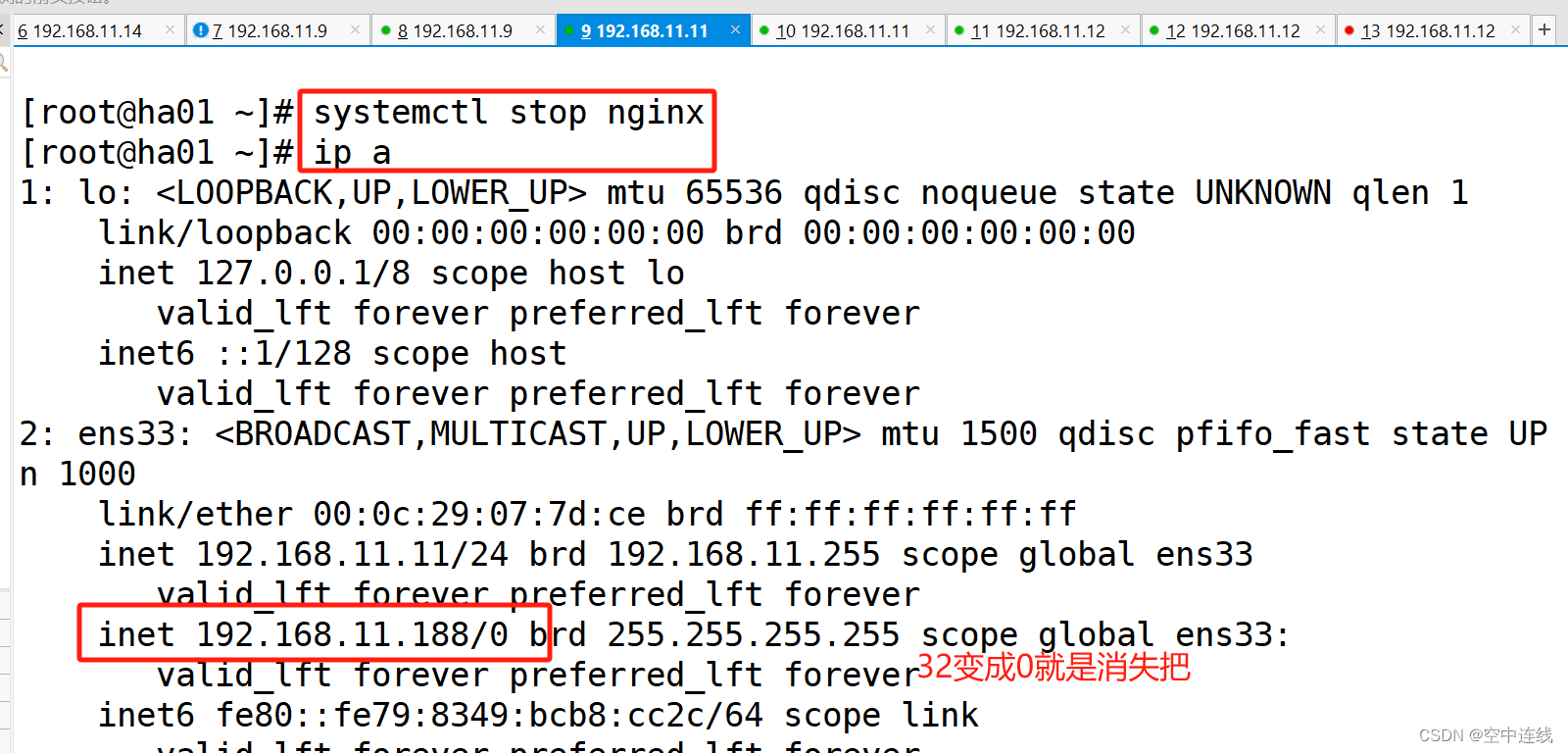
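The script body is only visible in the screenshot. A sketch of the logic such an ng.sh commonly contains, assuming the usual pattern: if nginx is down, try to restart it; if it is still down, stop keepalived so the VIP moves to the backup. The function name `check_nginx` is hypothetical; in the real ng.sh the file would end with a call to it.

```bash
# Sketch of ng.sh's check logic (assumed pattern, not the author's exact script).
check_nginx() {
    # pgrep -x matches the exact process name "nginx"
    if ! pgrep -x nginx >/dev/null; then
        systemctl start nginx      # first try to bring nginx back
        sleep 2
        if ! pgrep -x nginx >/dev/null; then
            # still down: stop keepalived so the backup takes over the VIP
            systemctl stop keepalived
            return 1
        fi
    fi
    return 0
}
```

Stopping keepalived is the simplest way to hand over the VIP; keepalived also reacts to a failing `vrrp_script` on its own, so the exit status alone is another option.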

7 主服务器关闭nginx

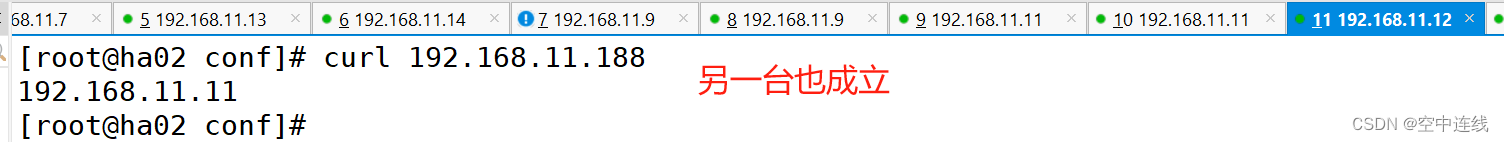

systemctl stop nginx8 去被服务器检测:

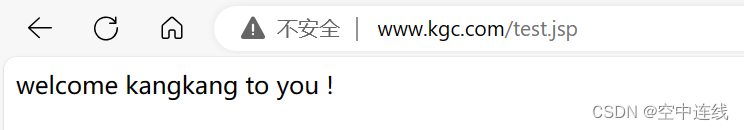

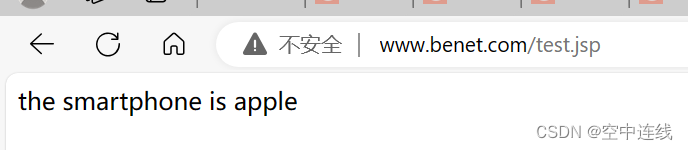

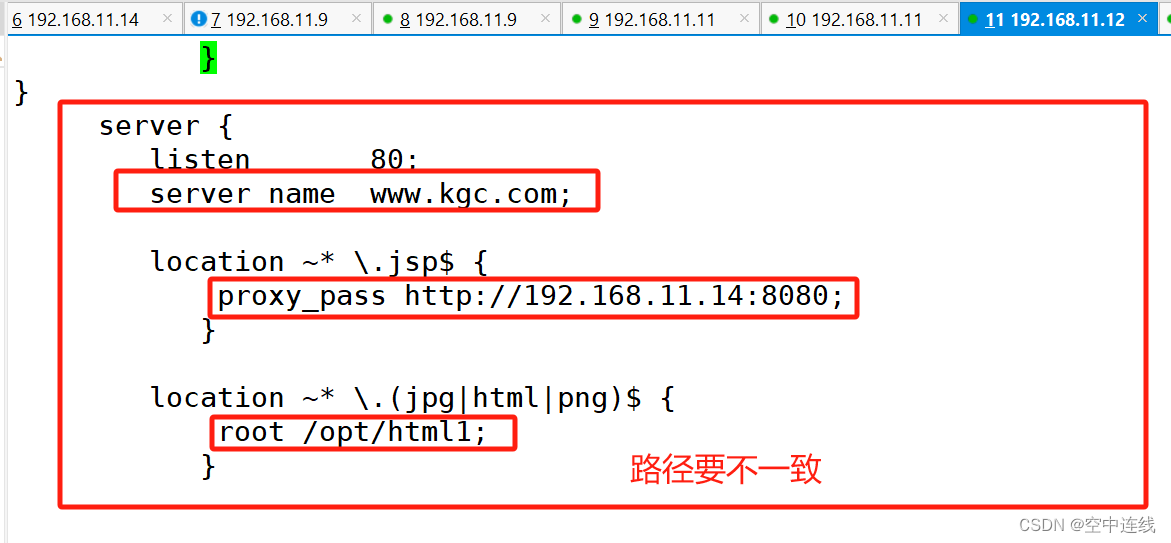

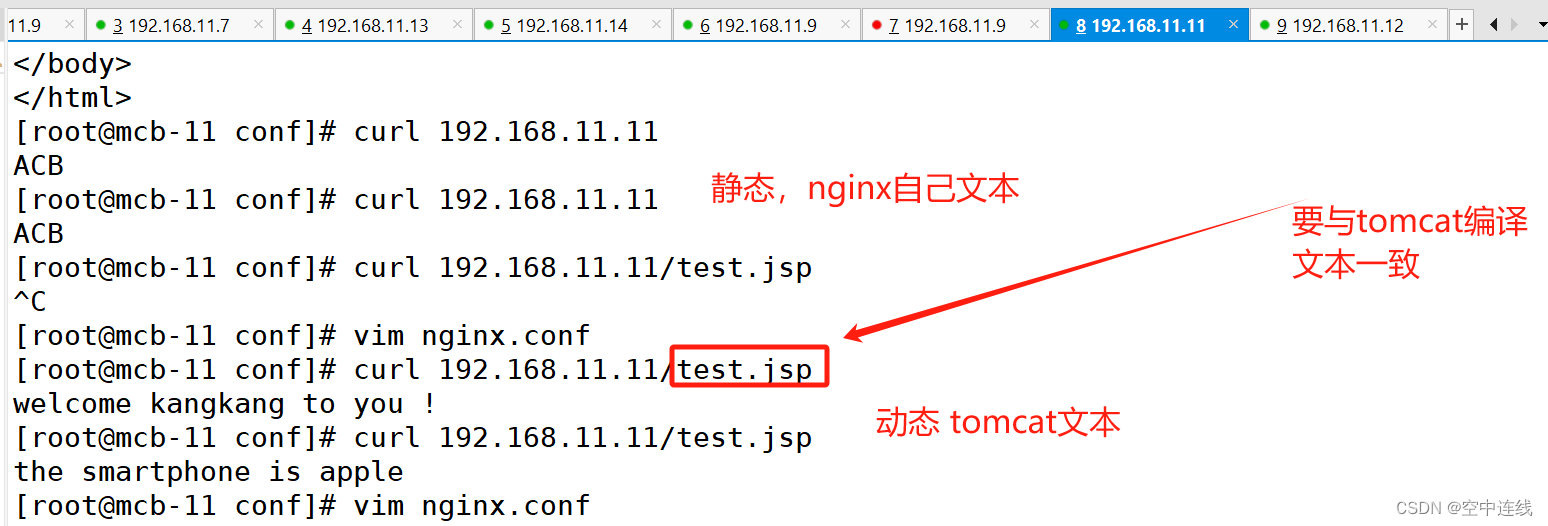

II. Lab: static/dynamic separation

This lab builds on the high-availability setup above.

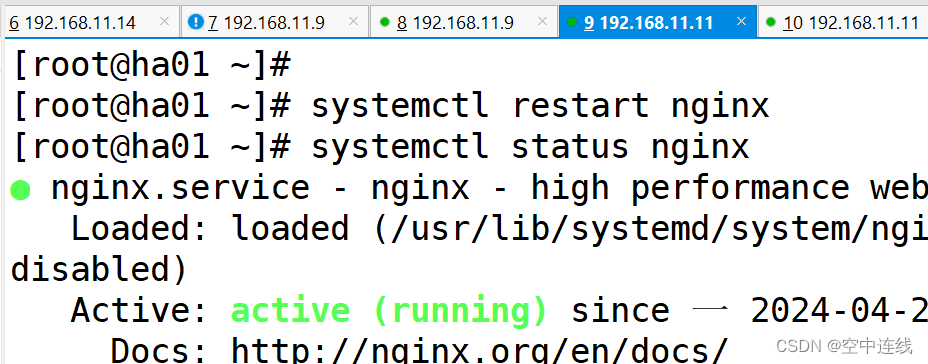

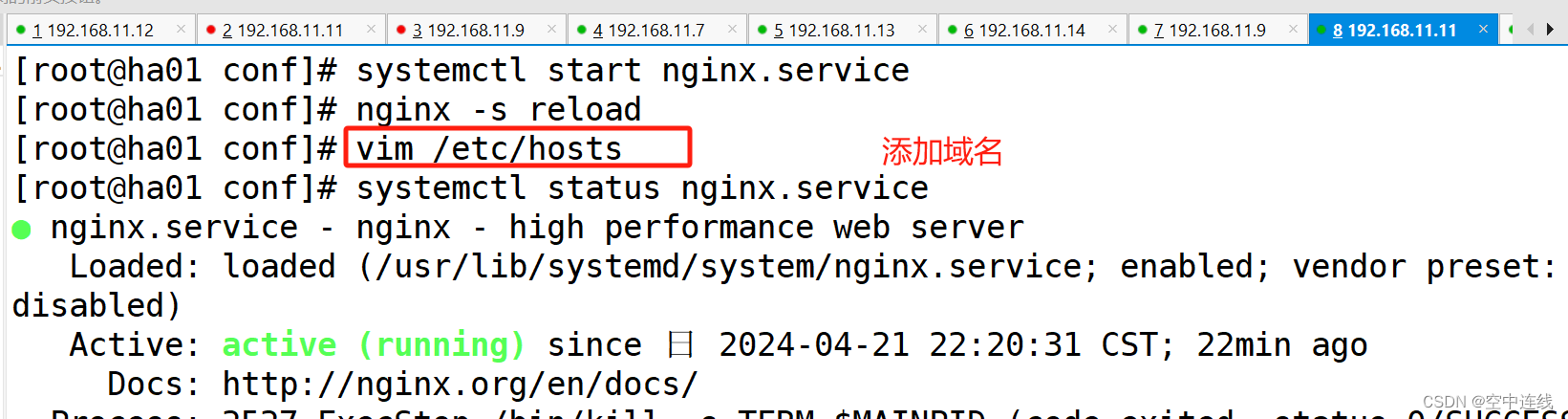

1. First start nginx again (it was stopped in the failover test):

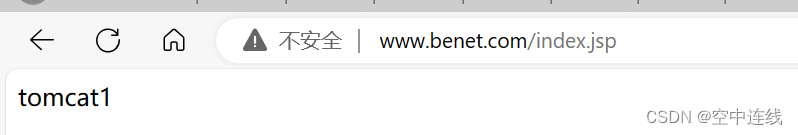

Check in the browser:

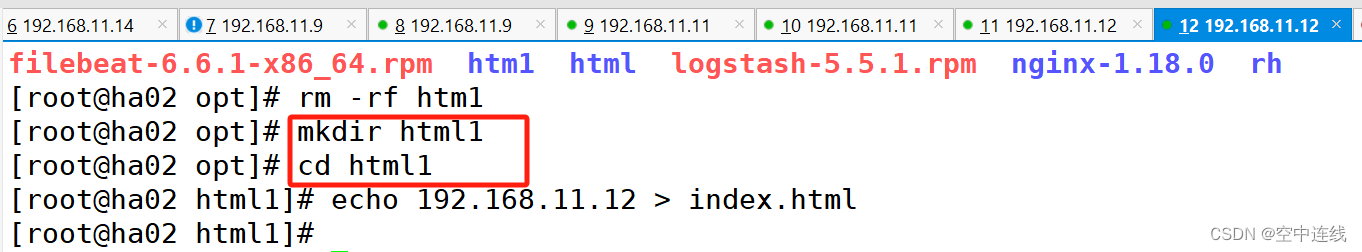

nginx on the virtual machine serves the static content.

Install Tomcat: 192.168.11.13

Install the other Tomcat server the same way.

```
[root@mcb-11-13 ~]# systemctl stop firewalld
[root@mcb-11-13 ~]# setenforce 0
[root@mcb-11-13 ~]# hostnamectl set-hostname slave01
[root@mcb-11-13 ~]# su
[root@slave01 ~]# mkdir /data
[root@slave01 ~]# cd /data
[root@slave01 data]# rz -E
rz waiting to receive.
[root@slave01 data]# rz -E
rz waiting to receive.
[root@slave01 data]# ls
apache-tomcat-9.0.16.tar.gz jdk-8u291-linux-x64.tar.gz
[root@slave01 data]# tar xf jdk-8u291-linux-x64.tar.gz -C /usr/local
[root@slave01 data]# cd /usr/local
[root@slave01 local]# ll
total 0
drwxr-xr-x. 2 root root 6 Nov 5 2016 bin
drwxr-xr-x. 2 root root 6 Nov 5 2016 etc
drwxr-xr-x. 2 root root 6 Nov 5 2016 games
drwxr-xr-x. 2 root root 6 Nov 5 2016 include
drwxr-xr-x. 8 10143 10143 273 Apr 8 2021 jdk1.8.0_291
drwxr-xr-x. 2 root root 6 Nov 5 2016 lib
drwxr-xr-x. 2 root root 6 Nov 5 2016 lib64
drwxr-xr-x. 2 root root 6 Nov 5 2016 libexec
drwxr-xr-x. 2 root root 6 Nov 5 2016 sbin
drwxr-xr-x. 5 root root 49 Mar 15 19:36 share
drwxr-xr-x. 2 root root 6 Nov 5 2016 src
[root@slave01 local]# ln -s jdk1.8.0_291/ jdk
[root@slave01 local]# ls
bin etc games include jdk jdk1.8.0_291 lib lib64 libexec sbin share src
[root@slave01 local]# . /etc/profile.d/env.sh
[root@slave01 local]# java -version
openjdk version "1.8.0_131"    # wrong JDK: env.sh is misconfigured and must be fixed
OpenJDK Runtime Environment (build 1.8.0_131-b12)
OpenJDK 64-Bit Server VM (build 25.131-b12, mixed mode)
[root@slave01 local]# vim /etc/profile.d/env.sh
[root@slave01 local]# . /etc/profile.d/env.sh
[root@slave01 local]# java -version
java version "1.8.0_291"       # this is the correct JDK version
Java(TM) SE Runtime Environment (build 1.8.0_291-b10)
Java HotSpot(TM) 64-Bit Server VM (build 25.291-b10, mixed mode)
[root@slave01 local]# ls
bin etc games include jdk jdk1.8.0_291 lib lib64 libexec sbin share src
[root@slave01 local]# cd /data
[root@slave01 data]# ls
apache-tomcat-9.0.16.tar.gz jdk-8u291-linux-x64.tar.gz
[root@slave01 data]# tar xf apache-tomcat-9.0.16.tar.gz
[root@slave01 data]# ls
apache-tomcat-9.0.16 apache-tomcat-9.0.16.tar.gz jdk-8u291-linux-x64.tar.gz
[root@slave01 data]# cp -r apache-tomcat-9.0.16 /usr/local
[root@slave01 data]# cd /usr/local
[root@slave01 local]# ls
apache-tomcat-9.0.16 etc include jdk1.8.0_291 lib64 sbin src
bin games jdk lib libexec share
[root@slave01 local]# ln -s apache-tomcat-9.0.16/ tomcat
[root@slave01 local]# ll
total 0
drwxr-xr-x. 9 root root 220 Apr 19 18:45 apache-tomcat-9.0.16
drwxr-xr-x. 2 root root 6 Nov 5 2016 bin
drwxr-xr-x. 2 root root 6 Nov 5 2016 etc
drwxr-xr-x. 2 root root 6 Nov 5 2016 games
drwxr-xr-x. 2 root root 6 Nov 5 2016 include
lrwxrwxrwx. 1 root root 13 Apr 19 18:40 jdk -> jdk1.8.0_291/
drwxr-xr-x. 8 10143 10143 273 Apr 8 2021 jdk1.8.0_291
drwxr-xr-x. 2 root root 6 Nov 5 2016 lib
drwxr-xr-x. 2 root root 6 Nov 5 2016 lib64
drwxr-xr-x. 2 root root 6 Nov 5 2016 libexec
drwxr-xr-x. 2 root root 6 Nov 5 2016 sbin
drwxr-xr-x. 5 root root 49 Mar 15 19:36 share
drwxr-xr-x. 2 root root 6 Nov 5 2016 src
lrwxrwxrwx. 1 root root 21 Apr 19 18:46 tomcat -> apache-tomcat-9.0.16/
```
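The first `java -version` reported the system OpenJDK because its `/usr/bin/java` came before the new JDK on `PATH`. The actual contents of env.sh are not shown, so here is a sketch assuming `JAVA_HOME=/usr/local/jdk` (the symlink created above). The key point is that the JDK's `bin` is prepended to `PATH`, not appended. The function name `write_java_env` is hypothetical.

```bash
# Sketch: write env.sh so the JDK under /usr/local/jdk wins over /usr/bin/java.
write_java_env() {
    local out=$1
    # quoted 'EOF': the variables are expanded when env.sh is sourced, not now
    cat > "$out" <<'EOF'
export JAVA_HOME=/usr/local/jdk
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib
export PATH=$JAVA_HOME/bin:$PATH
EOF
}
```

Usage: `write_java_env /etc/profile.d/env.sh && . /etc/profile.d/env.sh && java -version`, which should then report 1.8.0_291.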

```
[root@slave01 local]# useradd tomcat -s /sbin/nologin
[root@slave01 local]# useradd tomcat -s /sbin/nologin -R    # wrong option (meant -M), needs fixing
useradd: option requires an argument -- 'R'
[root@slave01 local]# useradd tomcat -s /sbin/nologin -M
useradd: user 'tomcat' already exists
[root@slave01 local]# userdel tomcat
[root@slave01 local]# find / -name tomcat
/etc/selinux/targeted/active/modules/100/tomcat
/var/spool/mail/tomcat
/usr/local/tomcat
/home/tomcat
[root@slave01 local]# cat /etc/passwd
root:x:0:0:root:/root:/bin/bash
... (standard system accounts omitted) ...
mcb:x:1000:1000:mcb:/home/mcb:/bin/bash
tomcat:x:1001:1001::/home/tomcat:/sbin/nologin
[root@slave01 local]# userdel tomcat
[root@slave01 local]#
[root@slave01 local]# cat /etc/passwd
root:x:0:0:root:/root:/bin/bash
... (standard system accounts omitted) ...
mcb:x:1000:1000:mcb:/home/mcb:/bin/bash
[root@slave01 local]#
[root@slave01 local]# find / -name tomcat
/etc/selinux/targeted/active/modules/100/tomcat
/usr/local/tomcat
[root@slave01 local]# useradd tomcat -s /sbin/nologin -M
[root@slave01 local]# chown tomcat:tomcat /usr/local/tomcat/ -R
```

```
[root@slave01 local]# systemctl start tomcat
Failed to start tomcat.service: Unit not found.
[root@slave01 local]# vim /usr/lib/systemd/system/tomcat
[root@slave01 local]# systemctl daemon-reload
[root@slave01 local]# systemctl start tomcat    # fails: the unit file was saved as "tomcat", without the .service suffix
Failed to start tomcat.service: Unit not found.
[root@slave01 local]# cd /usr/lib/systemd/system/
[root@slave01 system]# ls
abrt-ccpp.service plymouth-poweroff.service
abrtd.service plymouth-quit.service
... (listing trimmed) ...
nfs-lock.service tomcat
... (listing trimmed) ...
plymouth-kexec.service zram.service
[root@slave01 system]# mv tomcat tomcat.service
[root@slave01 system]# systemctl daemon-reload
[root@slave01 system]# systemctl start tomcat.service
[root@slave01 system]# systemctl status tomcat.service
● tomcat.service - Tomcat
Loaded: loaded (/usr/lib/systemd/system/tomcat.service; disabled; vendor preset: disabled)
Active: active (running) since Fri 2024-04-19 19:04:17 CST; 13s ago
Process: 4903 ExecStart=/usr/local/tomcat/bin/startup.sh (code=exited, status=0/SUCCESS)
Main PID: 4918 (catalina.sh)
CGroup: /system.slice/tomcat.service
├─4918 /bin/sh /usr/local/tomcat/bin/catalina.sh start
└─4919 /usr/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf/loggi...
Apr 19 19:04:17 slave01 systemd[1]: Starting Tomcat...
Apr 19 19:04:17 slave01 startup.sh[4903]: Using CATALINA_BASE: /usr/local/tomcat
Apr 19 19:04:17 slave01 startup.sh[4903]: Using CATALINA_HOME: /usr/local/tomcat
Apr 19 19:04:17 slave01 startup.sh[4903]: Using CATALINA_TMPDIR: /usr/local/tomcat/temp
Apr 19 19:04:17 slave01 startup.sh[4903]: Using JRE_HOME: /usr
Apr 19 19:04:17 slave01 startup.sh[4903]: Using CLASSPATH: /usr/local/tomcat/bin/b...jar
Apr 19 19:04:17 slave01 systemd[1]: Started Tomcat.
Hint: Some lines were ellipsized, use -l to show in full.
[root@slave01 system]# systemctl enable tomcat.service
Created symlink from /etc/systemd/system/multi-user.target.wants/tomcat.service to /usr/lib/systemd/system/tomcat.service.
[root@slave01 system]#
```
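The unit file's contents are not shown. The sketch below writes one under the required `.service` name. `Description=Tomcat` and `ExecStart` match the status output above, and `Type=forking` fits `startup.sh` exiting while `catalina.sh` keeps running. `ExecStop`, `User`/`Group` and the `JAVA_HOME` line are assumptions. Note that the status output shows `JRE_HOME: /usr`: systemd does not read `/etc/profile.d`, so without an explicit `JAVA_HOME` Tomcat falls back to the system Java. The function name `write_tomcat_unit` is hypothetical.

```bash
# Sketch: write the Tomcat unit file (name must end in ".service").
write_tomcat_unit() {
    local dir=${1:-/usr/lib/systemd/system}
    cat > "$dir/tomcat.service" <<'EOF'
[Unit]
Description=Tomcat
After=network.target

[Service]
Type=forking
# systemd ignores /etc/profile.d, so point Tomcat at the JDK explicitly (assumed path)
Environment=JAVA_HOME=/usr/local/jdk
ExecStart=/usr/local/tomcat/bin/startup.sh
ExecStop=/usr/local/tomcat/bin/shutdown.sh
User=tomcat
Group=tomcat

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage: `write_tomcat_unit && systemctl daemon-reload && systemctl start tomcat.service && systemctl enable tomcat.service`.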

Lab: static/dynamic separation

| Role | IP address |
| --- | --- |
| nginx | 192.168.11.11 |
| tomcat | 192.168.11.13 |
| tomcat | 192.168.11.14 |

1. Install nginx and Tomcat on the three machines as listed above (see the nginx and Tomcat notes by me, Hu and Zhao).

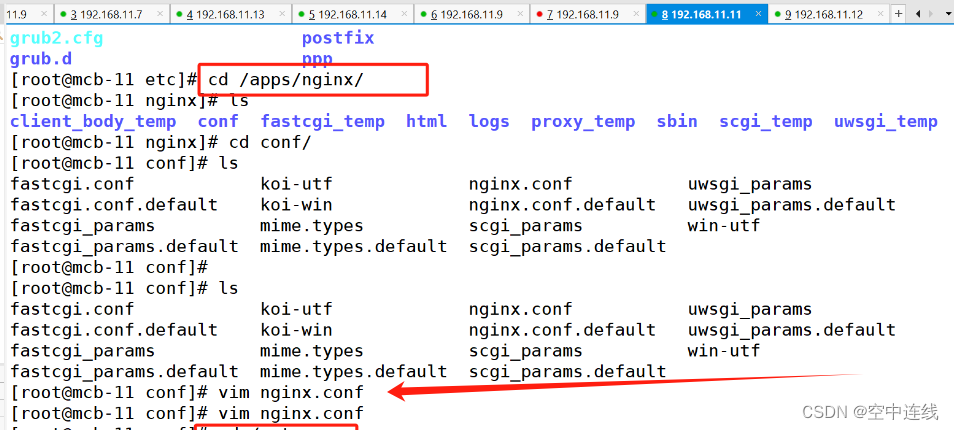

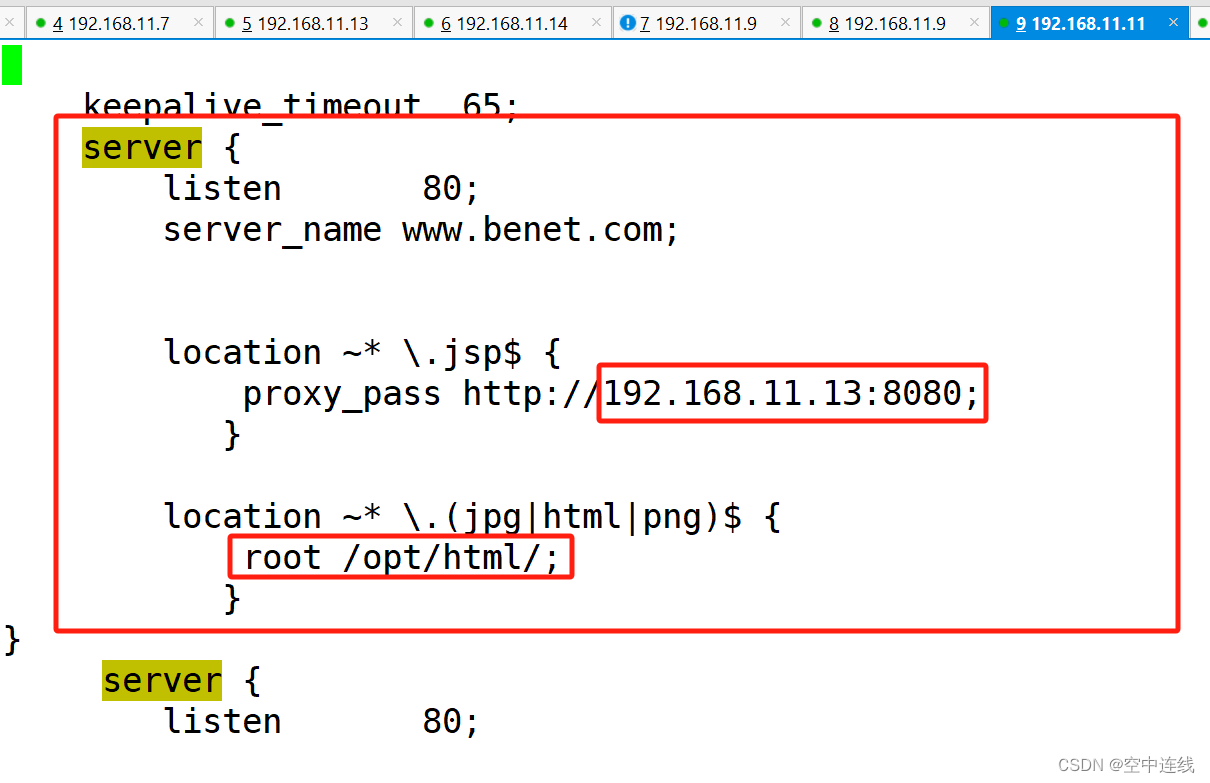

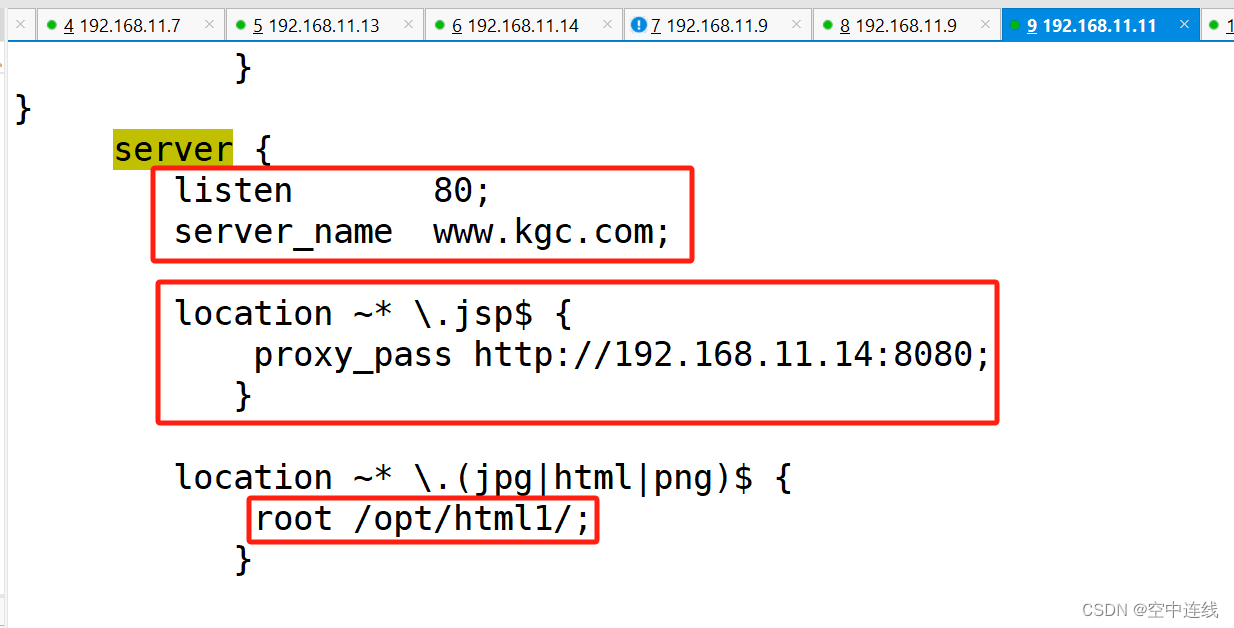

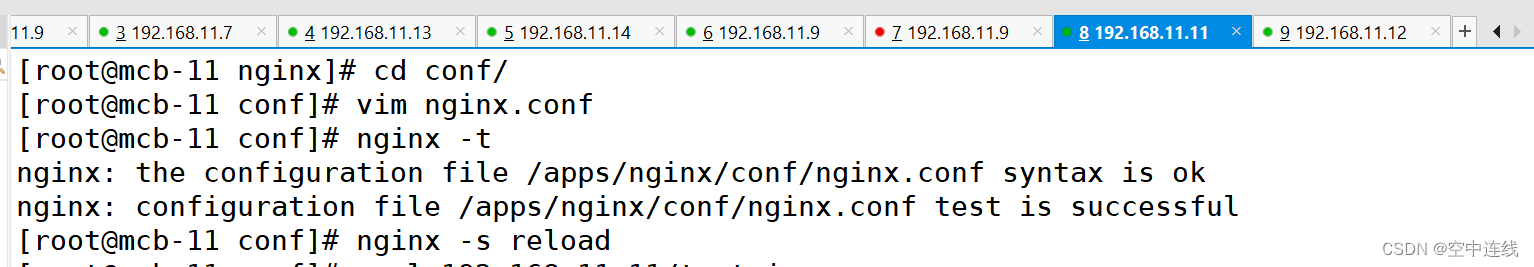

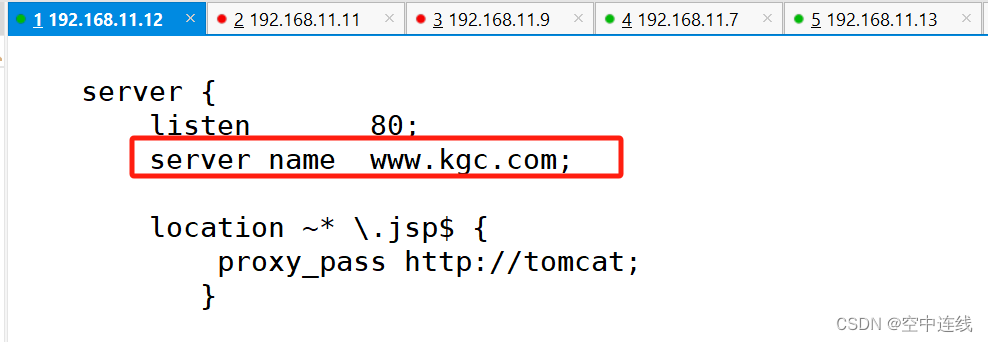

2. Edit the reverse-proxy configuration for static/dynamic separation

On the static side, that is nginx, add the configuration:

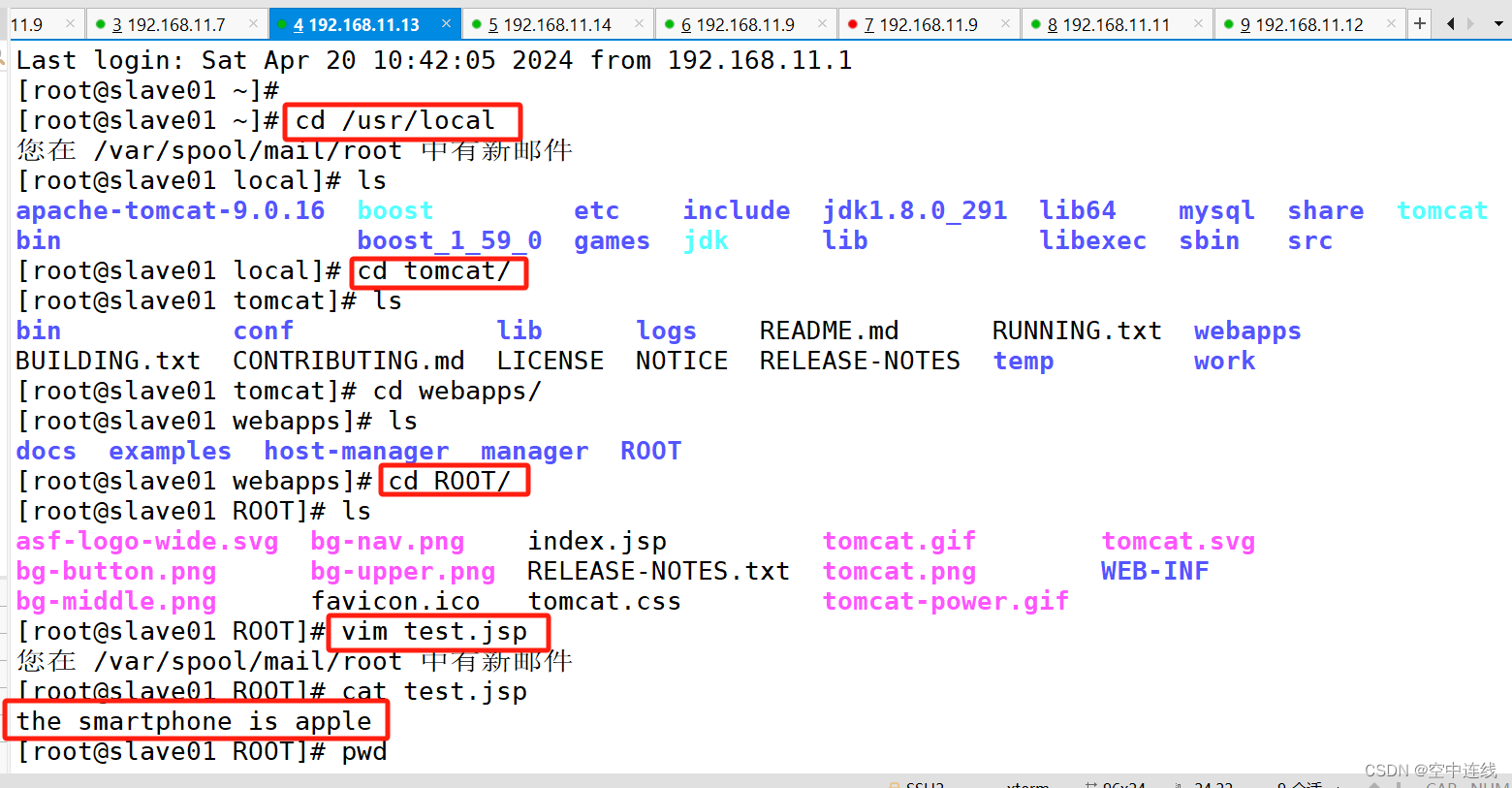
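The nginx configuration is only in the screenshot. A sketch of the usual shape, under stated assumptions: `.jsp` requests go to an upstream made of the two Tomcat nodes, and everything else is served by nginx. Port 8080 (Tomcat's default HTTP connector), the upstream name `tomcat_server`, `server_name www.kgc.com` and the `expires` value are assumptions. The function name `write_nginx_split_conf` is hypothetical.

```bash
# Sketch: write an nginx snippet (to be included inside http {}) that
# proxies JSP to Tomcat and serves static files locally.
write_nginx_split_conf() {
    local out=$1
    # quoted 'EOF' keeps nginx variables such as $host literal
    cat > "$out" <<'EOF'
upstream tomcat_server {
    server 192.168.11.13:8080;
    server 192.168.11.14:8080;
}

server {
    listen 80;
    server_name www.kgc.com;
    root html;

    # dynamic content: hand JSP pages to Tomcat
    location ~ .*\.jsp$ {
        proxy_pass http://tomcat_server;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    # static content: served directly by nginx
    location ~ .*\.(gif|jpg|jpeg|png|bmp|css|js)$ {
        expires 10d;
    }
}
EOF
}
```

After writing the file and including it inside the `http {}` block, `nginx -t` validates the syntax and `nginx -s reload` applies it.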

3. On Tomcat (192.168.11.13), create the dynamic test page
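A sketch for creating a minimal JSP test page on a Tomcat node. The webapp directory name `test`, the page text and the function name `write_test_jsp` are hypothetical. Printing the node's address makes it visible which Tomcat answered.

```bash
# Sketch: create a small dynamic test page under Tomcat's webapps directory.
write_test_jsp() {
    local webapps=$1 label=$2
    mkdir -p "$webapps/test"
    # unquoted EOF: ${label} is substituted; the JSP text has no other $ signs
    cat > "$webapps/test/index.jsp" <<EOF
<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8" %>
<html>
<head><title>JSP test page</title></head>
<body>
<% out.println("dynamic page from ${label}"); %>
</body>
</html>
EOF
}
```

Usage: `write_test_jsp /usr/local/tomcat/webapps 192.168.11.13` on one node and the same with `192.168.11.14` on the other. Requesting `/test/index.jsp` through nginx should then show which backend served it.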

4. Verify

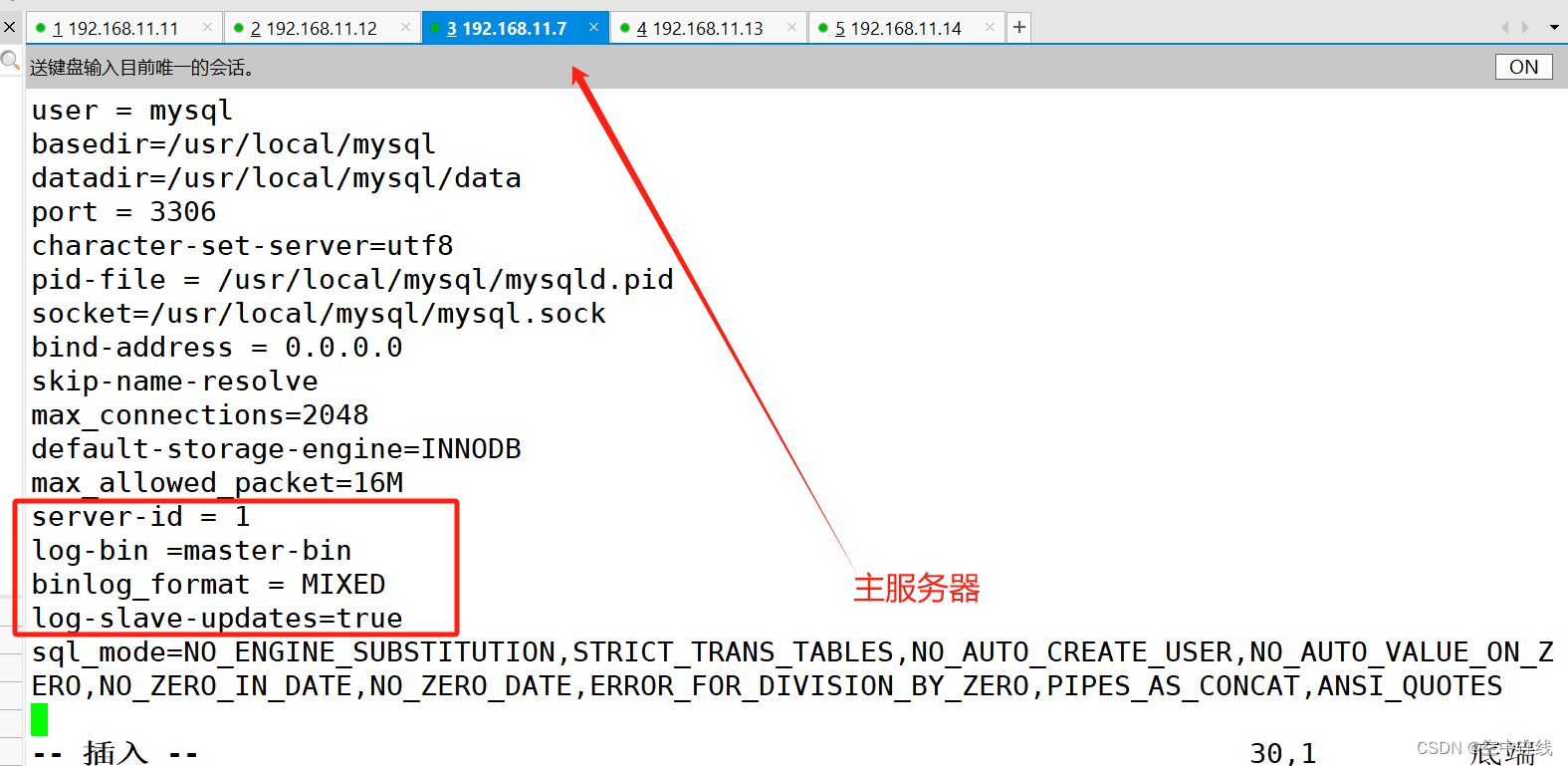

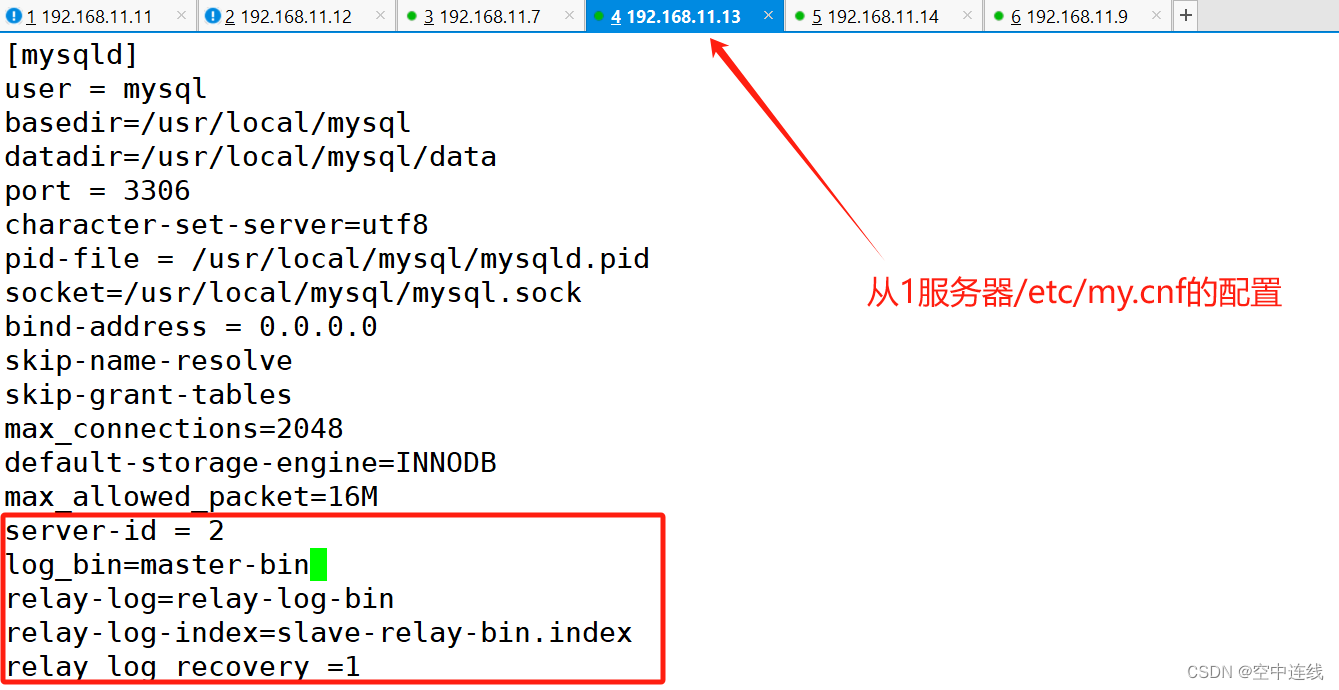

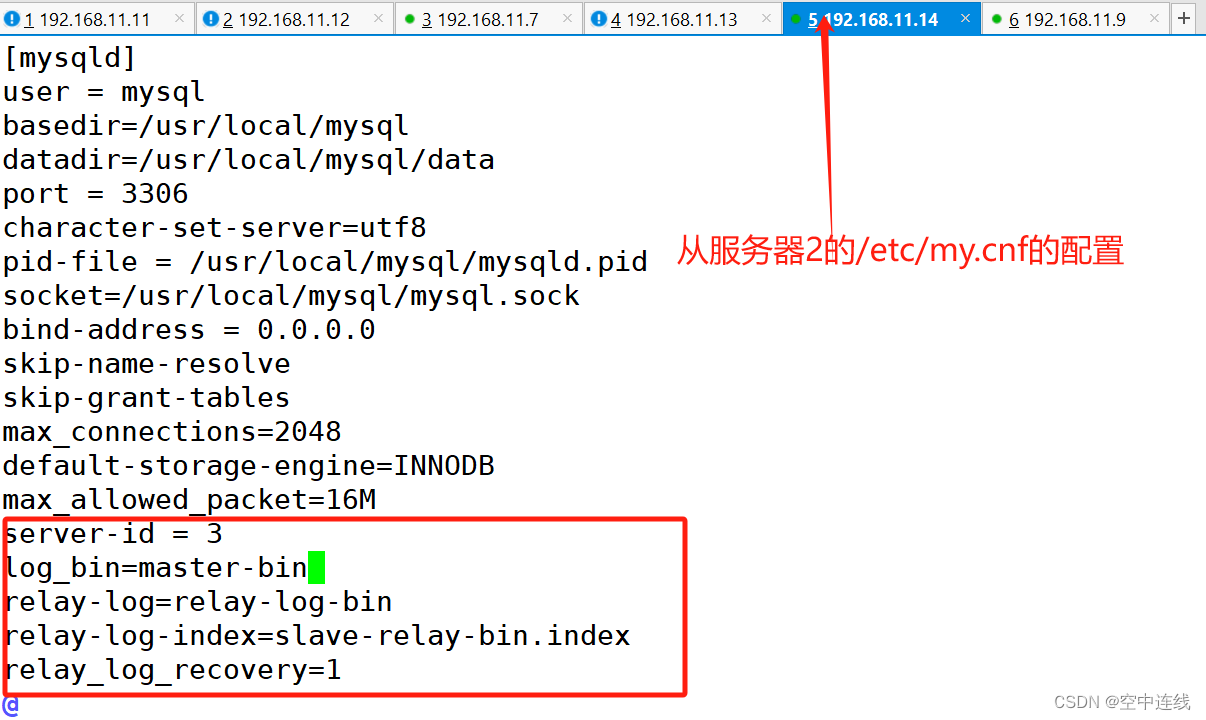

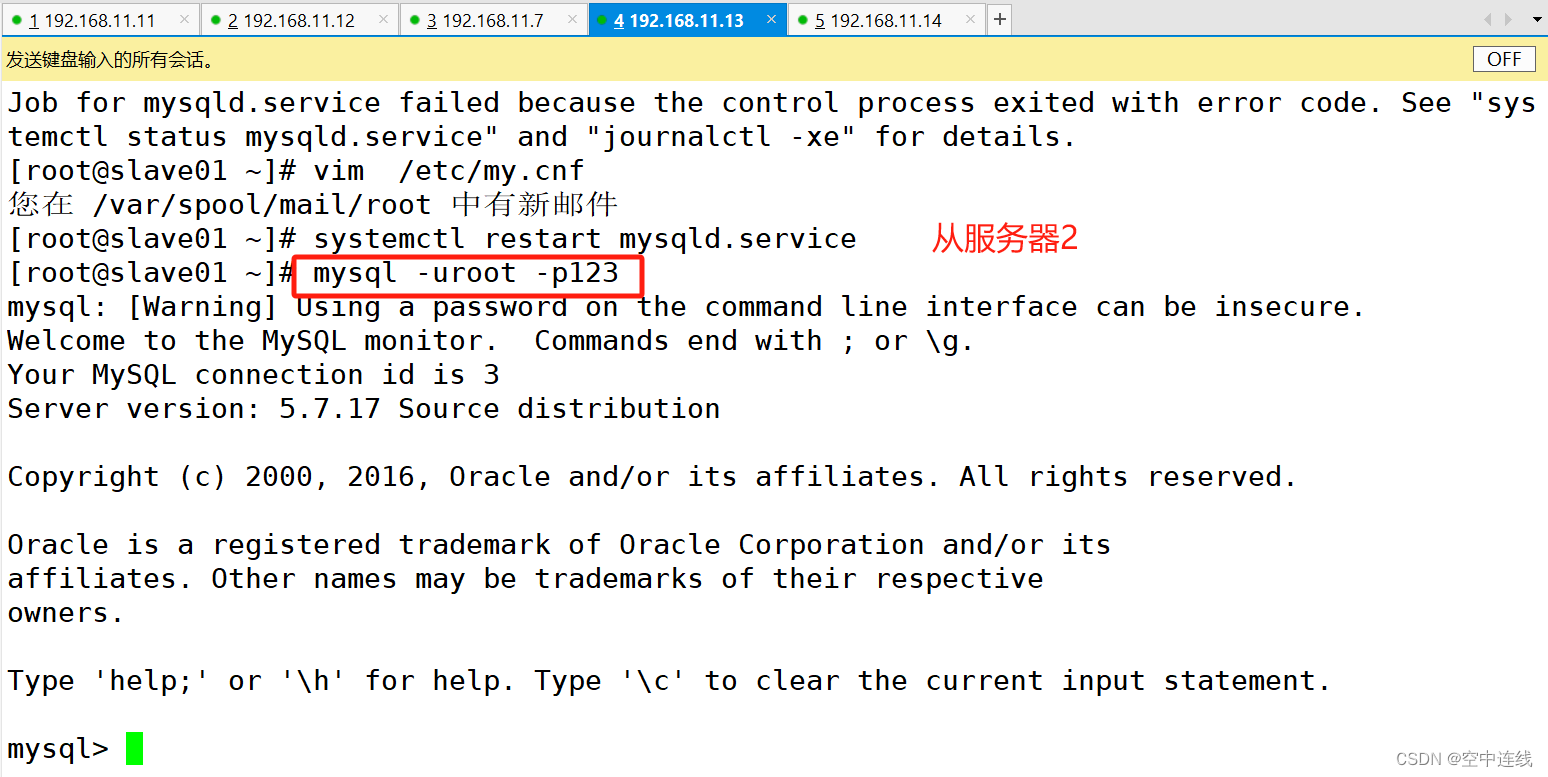

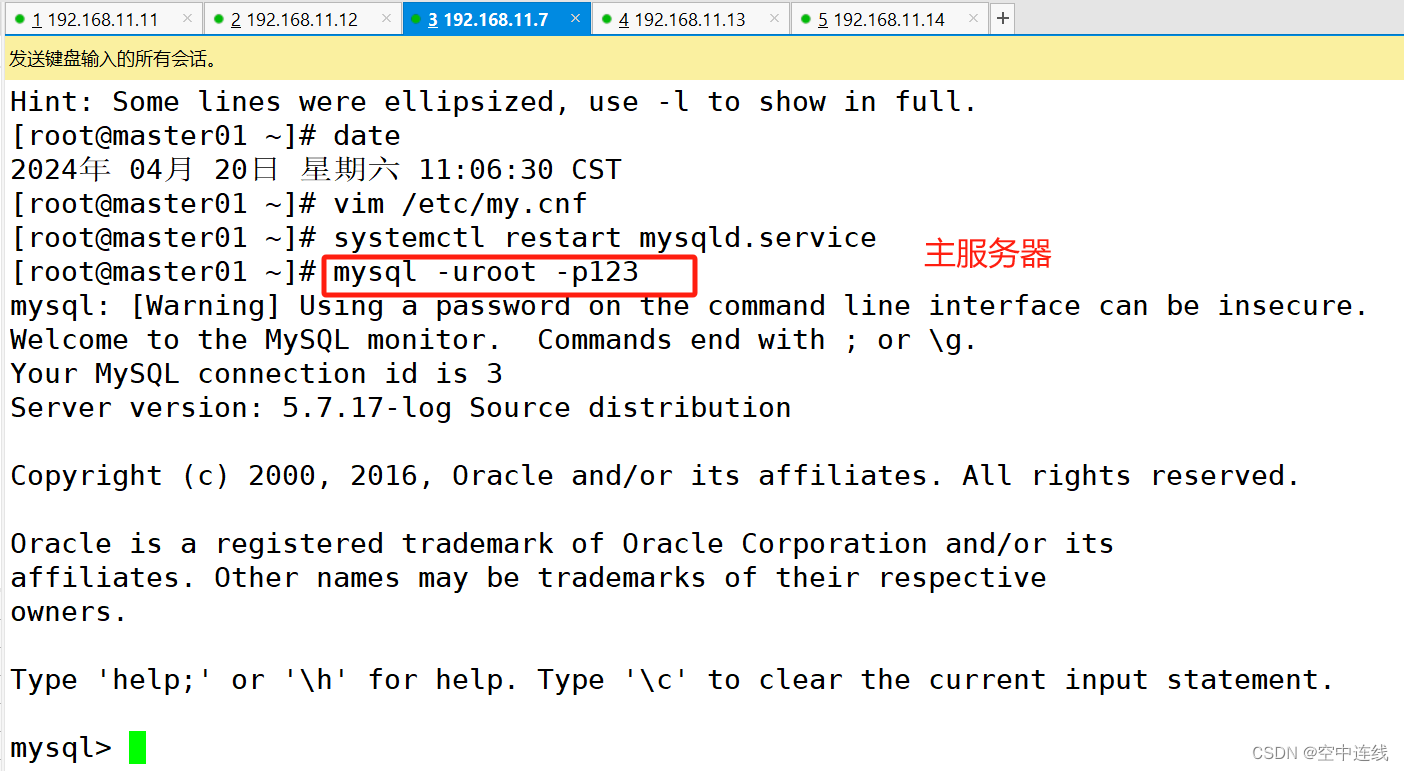

III. MySQL MHA

Compile and install MySQL:

| Role | IP address |
| --- | --- |
| master | 192.168.11.7 |
| slave01 | 192.168.11.13 |
| slave02 | 192.168.11.14 |
| manager | 192.168.11.9 |

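1. Stop the firewall and disable SELinux on all four hosts
2. Install the build environment and upload the source packages
3. Create the mysql user, extract the MySQL tarball and create the symlink
4. Compile and install, setting the install paths
5. Change the owner/group and edit the configuration file
6. Set and load the environment variables
7. Start MySQL and enable it at boot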

[root@slave01 ~]#

[root@slave01 ~]# cd /opt

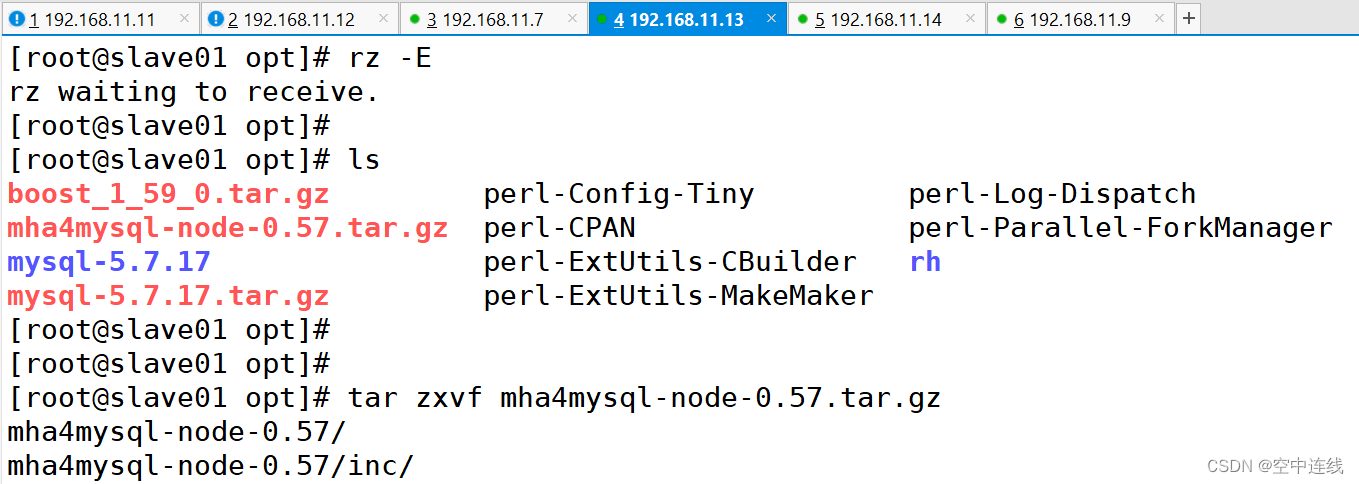

[root@slave01 opt]# rz -E

rz waiting to receive.

[root@slave01 opt]# rz -E

rz waiting to receive.

[root@slave01 opt]# ls

boost_1_59_0.tar.gz mysql-5.7.17.tar.gz rh

[root@slave01 opt]#

[root@slave01 opt]# yum -y install gcc gcc-c++ ncurses ncurses-devel bison cmake

已加载插件:fastestmirror, langpacks

yum -y install gcc gcc-c++ cmake bison bison-devel zlib-devel libcurl-devel libarchive-devel boost-devel ncurses-devel gnutls-devel libxml2-devel openssl-devel libevent-devel libaio-devel
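Because the compile step takes a long time, it can help to confirm the build tools are actually on `PATH` before starting. A sketch (the function name `missing_tools` is hypothetical) that prints each missing command and returns non-zero if any are absent:

```bash
# Sketch: print the build tools that are not installed; fail if any are missing.
missing_tools() {
    local tool missing=()
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || missing+=("$tool")
    done
    if ((${#missing[@]})); then
        printf '%s\n' "${missing[@]}"
        return 1
    fi
}
```

Usage: `missing_tools gcc g++ cmake bison make || echo "install the packages above first"`.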

Create the mysql user and extract the MySQL and Boost tarballs:

```
[root@slave01 opt]# useradd -s /sbin/nologin mysql
[root@slave01 opt]# ls
boost_1_59_0.tar.gz mysql-5.7.17.tar.gz rh
[root@slave01 opt]# tar xf mysql-5.7.17.tar.gz
[root@slave01 opt]# tar xf boost_1_59_0.tar.gz -C /usr/local
[root@slave01 opt]# cd /usr/local
[root@slave01 local]# ls
apache-tomcat-9.0.16 boost_1_59_0 games jdk lib libexec share tomcat
bin etc include jdk1.8.0_291 lib64 sbin src
[root@slave01 local]# ln -s boost_1_59_0/ boost
[root@slave01 local]# ls
apache-tomcat-9.0.16 boost etc include jdk1.8.0_291 lib64 sbin src
bin boost_1_59_0 games jdk lib libexec share tomcat
[root@slave01 local]#
```

Compile and install, setting the install paths:

```
[root@slave01 local]# cd /opt/mysql-5.7.17/
[root@slave01 mysql-5.7.17]# cmake \
> -DCMAKE_INSTALL_PREFIX=/usr/local/mysql \
> -DMYSQL_UNIX_ADDR=/usr/local/mysql/mysql.sock \
> -DSYSCONFDIR=/etc \
> -DSYSTEMD_PID_DIR=/usr/local/mysql \
> -DDEFAULT_CHARSET=utf8 \
> -DDEFAULT_COLLATION=utf8_general_ci \
> -DWITH_EXTRA_CHARSETS=all \
> -DWITH_INNOBASE_STORAGE_ENGINE=1 \
> -DWITH_ARCHIVE_STORAGE_ENGINE=1 \
> -DWITH_BLACKHOLE_STORAGE_ENGINE=1 \
> -DWITH_PERFSCHEMA_STORAGE_ENGINE=1 \
> -DMYSQL_DATADIR=/usr/local/mysql/data \
> -DWITH_BOOST=/usr/local/boost \
> -DWITH_SYSTEMD=1
[root@slave01 mysql-5.7.17]# make -j 4 && make install    # this takes a long time
```

Change the owner and group:

```
chown -R mysql:mysql /usr/local/mysql/
```

Edit the configuration file:

```
[root@slave01 mysql-5.7.17]# vim /etc/my.cnf
```

```
[client]
port = 3306
default-character-set=utf8
socket=/usr/local/mysql/mysql.sock

[mysql]
port = 3306
default-character-set=utf8
socket=/usr/local/mysql/mysql.sock
auto-rehash

[mysqld]
user = mysql
basedir=/usr/local/mysql
datadir=/usr/local/mysql/data
port = 3306
character-set-server=utf8
pid-file = /usr/local/mysql/mysqld.pid
socket=/usr/local/mysql/mysql.sock
bind-address = 0.0.0.0
skip-name-resolve
max_connections=2048
default-storage-engine=INNODB
max_allowed_packet=16M
# server-id must be unique on every node; it is changed per node in step 9
server-id = 1
sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES,NO_AUTO_CREATE_USER,NO_AUTO_VALUE_ON_ZERO,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,PIPES_AS_CONCAT,ANSI_QUOTES
```

Set the environment variables, initialize the data directory, and start MySQL:

```
[root@slave01 mysql-5.7.17]# echo 'export PATH=$PATH:/usr/local/mysql/bin' >> /etc/profile    # single quotes: $PATH is expanded at login, not when echo runs
[root@slave01 mysql-5.7.17]# source /etc/profile
[root@slave01 mysql-5.7.17]#
[root@slave01 mysql-5.7.17]# cd /usr/local/mysql/bin/
[root@slave01 bin]#
[root@slave01 bin]# ./mysqld \
> --initialize-insecure \
> --user=mysql \
> --basedir=/usr/local/mysql \
> --datadir=/usr/local/mysql/data
2024-04-19T15:27:32.812209Z 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use --explicit_defaults_for_timestamp server option (see documentation for more details).
2024-04-19T15:27:33.117578Z 0 [Warning] InnoDB: New log files created, LSN=45790
2024-04-19T15:27:33.146953Z 0 [Warning] InnoDB: Creating foreign key constraint system tables.
2024-04-19T15:27:33.221546Z 0 [Warning] No existing UUID has been found, so we assume that this is the first time that this server has been started. Generating a new UUID: 57baeda1-fe61-11ee-bf36-000c291fe803.
2024-04-19T15:27:33.223747Z 0 [Warning] Gtid table is not ready to be used. Table 'mysql.gtid_executed' cannot be opened.
2024-04-19T15:27:33.225148Z 1 [Warning] root@localhost is created with an empty password ! Please consider switching off the --initialize-insecure option.
2024-04-19T15:27:33.416709Z 1 [Warning] 'user' entry 'root@localhost' ignored in --skip-name-resolve mode.
2024-04-19T15:27:33.416742Z 1 [Warning] 'user' entry 'mysql.sys@localhost' ignored in --skip-name-resolve mode.
2024-04-19T15:27:33.416756Z 1 [Warning] 'db' entry 'sys mysql.sys@localhost' ignored in --skip-name-resolve mode.
2024-04-19T15:27:33.416766Z 1 [Warning] 'proxies_priv' entry '@ root@localhost' ignored in --skip-name-resolve mode.
2024-04-19T15:27:33.416792Z 1 [Warning] 'tables_priv' entry 'sys_config mysql.sys@localhost' ignored in --skip-name-resolve mode.
[root@slave01 bin]#
[root@slave01 bin]# cp /usr/local/mysql/usr/lib/systemd/system/mysqld.service /usr/lib/systemd/system/
[root@slave01 bin]#
[root@slave01 bin]# systemctl daemon-reload
[root@slave01 bin]# systemctl start mysqld.service
[root@slave01 bin]# systemctl status mysqld.service
● mysqld.service - MySQL Server
Loaded: loaded (/usr/lib/systemd/system/mysqld.service; disabled; vendor preset: disabled)
Active: active (running) since Sat 2024-04-20 00:09:17 CST; 8s ago
Docs: man:mysqld(8)
http://dev.mysql.com/doc/refman/en/using-systemd.html
Process: 22677 ExecStart=/usr/local/mysql/bin/mysqld --daemonize --pid-file=/usr/local/mysql/mysqld.pid $MYSQLD_OPTS (code=exited, status=0/SUCCESS)
Process: 22657 ExecStartPre=/usr/local/mysql/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)
Main PID: 22680 (mysqld)
CGroup: /system.slice/mysqld.service
└─22680 /usr/local/mysql/bin/mysqld --daemonize --pid-file=/usr/local/mysql/mysql...
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.907483Z 0 [Warning] 'db' entr...de.
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.907493Z 0 [Warning] 'proxies_...de.
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.908342Z 0 [Warning] 'tables_p...de.
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.912412Z 0 [Note] Event Schedu...nts
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.912647Z 0 [Note] Executing 'S...ck.
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.912656Z 0 [Note] Beginning of...les
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.930205Z 0 [Note] End of list ...les
Apr 20 00:09:17 slave02 mysqld[22677]: 2024-04-19T16:09:17.930343Z 0 [Note] /usr/local/m...ns.
Apr 20 00:09:17 slave02 mysqld[22677]: Version: '5.7.17' socket: '/usr/local/mysql/mysq...ion
Apr 20 00:09:17 slave02 systemd[1]: Started MySQL Server.
Hint: Some lines were ellipsized, use -l to show in full.
[root@slave02 bin]# systemctl enable mysqld.service
Created symlink from /etc/systemd/system/multi-user.target.wants/mysqld.service to /usr/lib/systemd/system/mysqld.service.
[root@slave02 bin]# netstat -natp |grep mysql
tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 22680/mysqld
[root@slave02 bin]# mysqladmin -uroot -p password "123"
Enter password:
mysqladmin: [Warning] Using a password on the command line interface can be insecure.
Warning: Since password will be sent to server in plain text, use ssl connection to ensure password safety.
[root@slave02 bin]# mysql -uroot -p123
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 4
Server version: 5.7.17 Source distribution

Copyright (c) 2000, 2016, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>
```

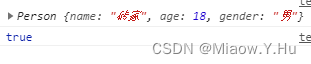

```
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| sys                |
+--------------------+
4 rows in set (0.01 sec)

mysql> exit
Bye
[root@slave02 bin]#
```


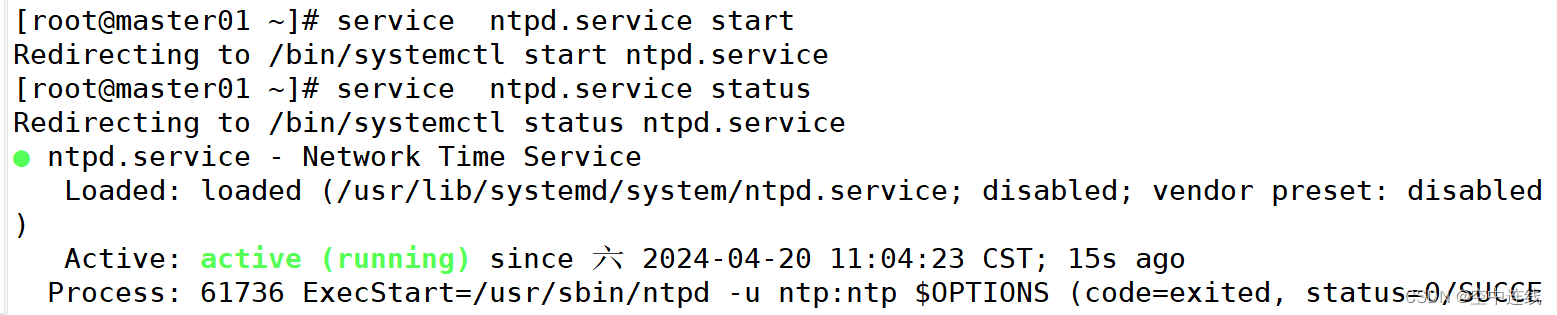

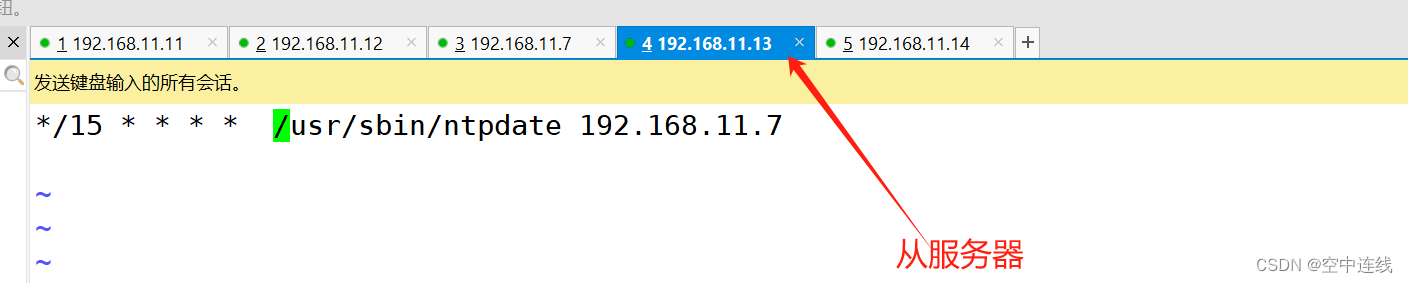

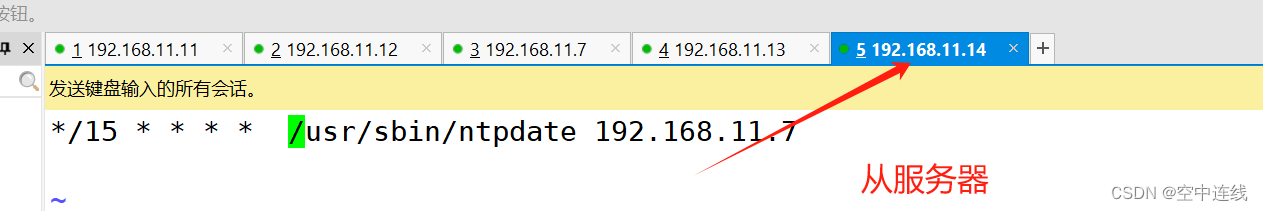

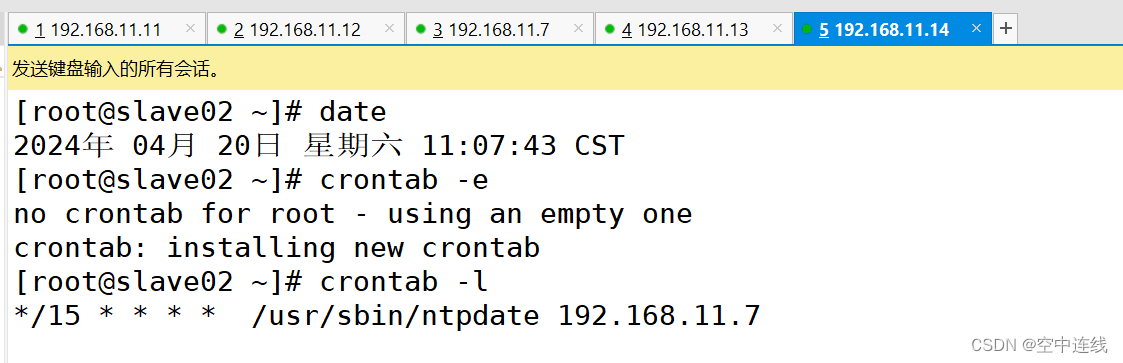

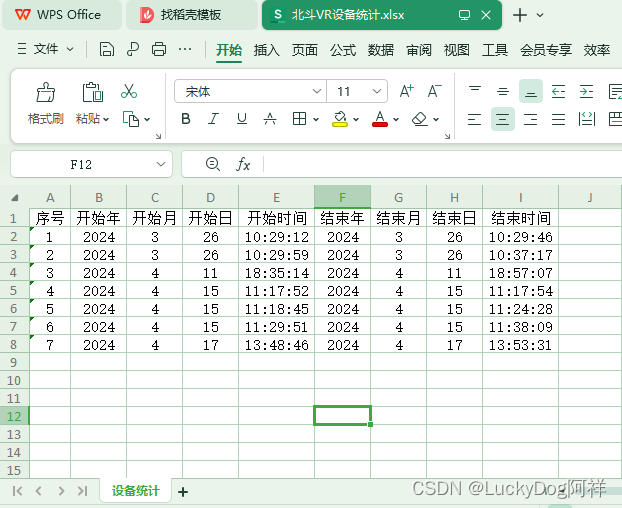

8. Set up a scheduled task

9. Edit the MySQL main configuration file /etc/my.cnf on the Master01, Slave01 and Slave02 nodes

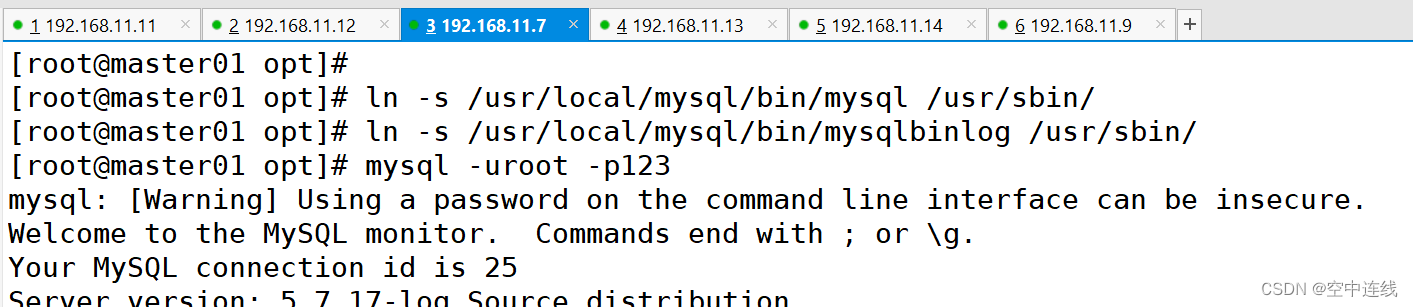

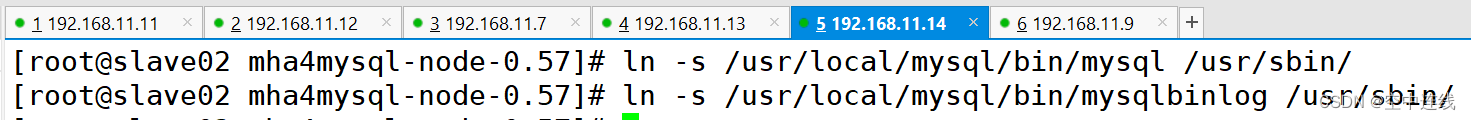
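The per-node changes are only in the screenshots. A sketch of what this step typically involves, under stated assumptions: give each node a unique `server-id` (1, 2 and 3 here are assumed numbering) and enable the binary log plus relay log. The binlog base name `master-bin` matches the `show master status` output later on. The relay-log names and the function name `apply_repl_settings` are assumptions. Appending works because `[mysqld]` is the last section in this my.cnf.

```bash
# Sketch: set a unique server-id and append replication settings to my.cnf.
# Assumes [mysqld] is the last section, so appended lines land in it.
apply_repl_settings() {
    local cnf=$1 id=$2
    # replace the existing "server-id = 1" line with this node's id (GNU sed -i)
    sed -i "s/^server-id *=.*/server-id = ${id}/" "$cnf"
    # add binlog / relay-log settings once (skip if already present)
    grep -q '^log_bin' "$cnf" || cat >> "$cnf" <<'EOF'
log_bin = master-bin
log-slave-updates = true
relay-log = relay-log-bin
relay-log-index = slave-relay-bin.index
EOF
}
```

Usage: `apply_repl_settings /etc/my.cnf 1` on the master, `2` on Slave01 and `3` on Slave02, then `systemctl restart mysqld`.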

10. Create two symlinks on each of Master01, Slave01 and Slave02:

```
ln -s /usr/local/mysql/bin/mysql /usr/sbin/
ln -s /usr/local/mysql/bin/mysqlbinlog /usr/sbin/
ls /usr/sbin/mysql*    # check the symlinks
/usr/sbin/mysql /usr/sbin/mysqlbinlog
```

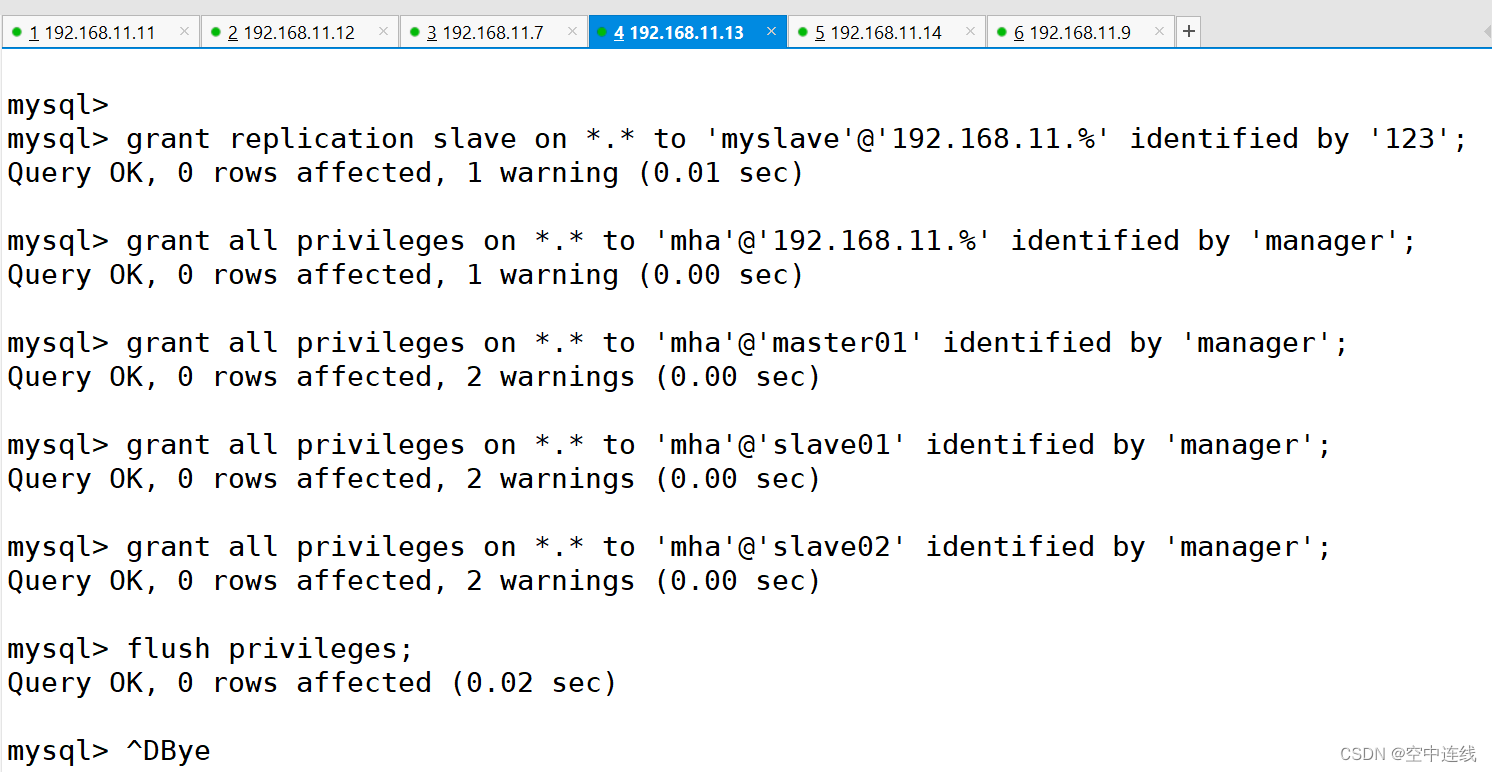

11. Grant the replication and MHA privileges on all of Master, Slave1 and Slave2:

```
-- the host pattern must match the lab subnet (192.168.11.x)
grant replication slave on *.* to 'myslave'@'192.168.11.%' identified by '123123';
grant all privileges on *.* to 'mha'@'192.168.11.%' identified by 'manager';
-- with skip-name-resolve enabled, these hostname-based accounts are not used for matching
grant all privileges on *.* to 'mha'@'master' identified by 'manager';
grant all privileges on *.* to 'mha'@'slave1' identified by 'manager';
grant all privileges on *.* to 'mha'@'slave2' identified by 'manager';
flush privileges;
```

Log in to the master and the slaves at the same time:

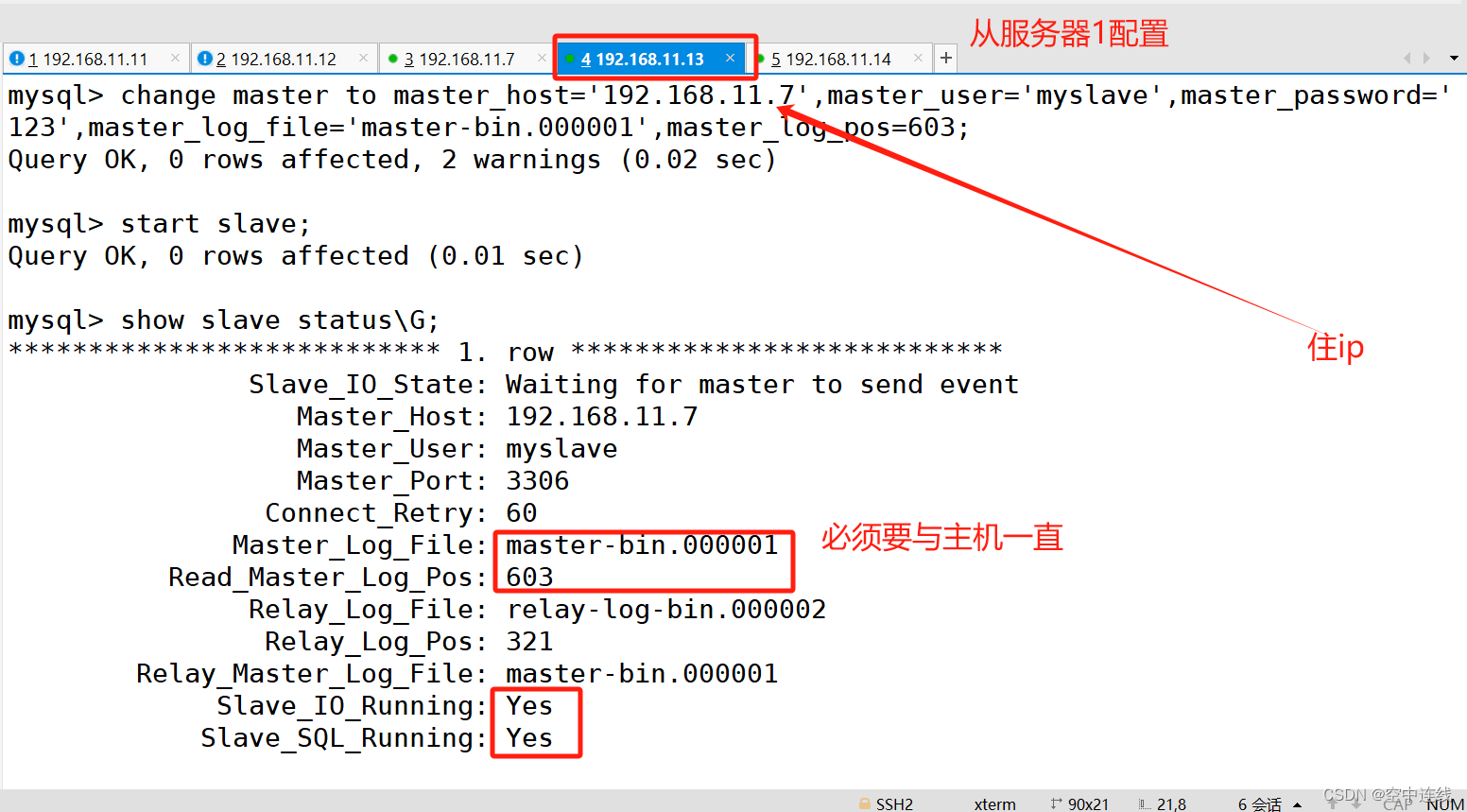

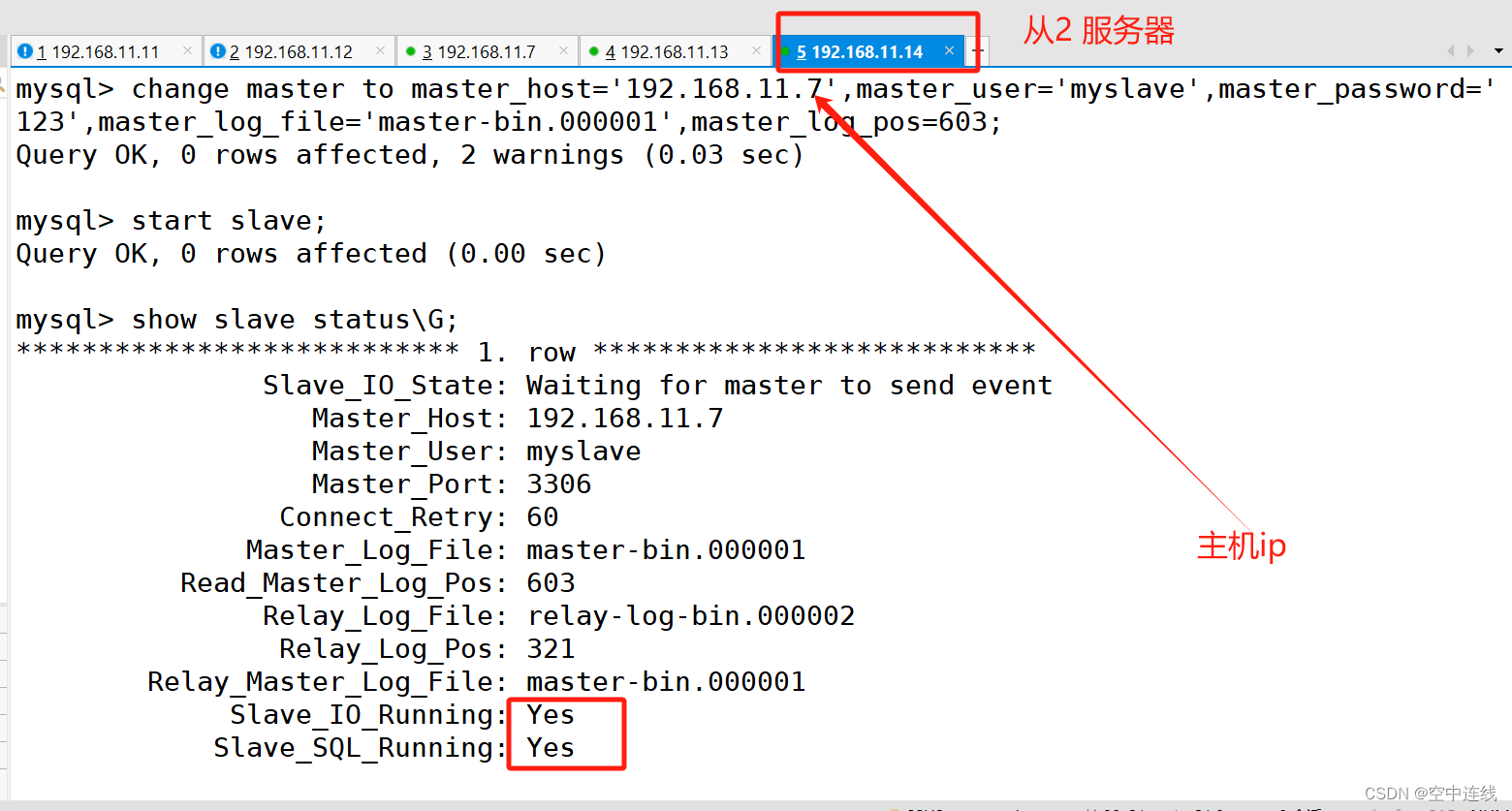

Configure replication on the slave servers (slave01 shown):

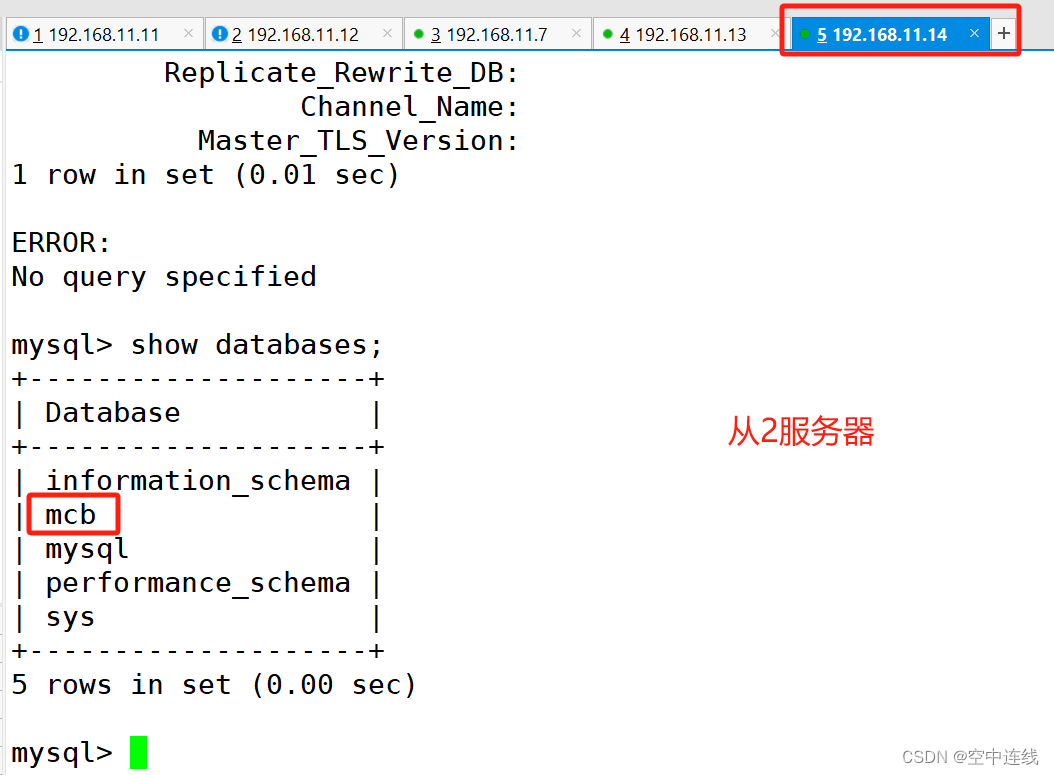

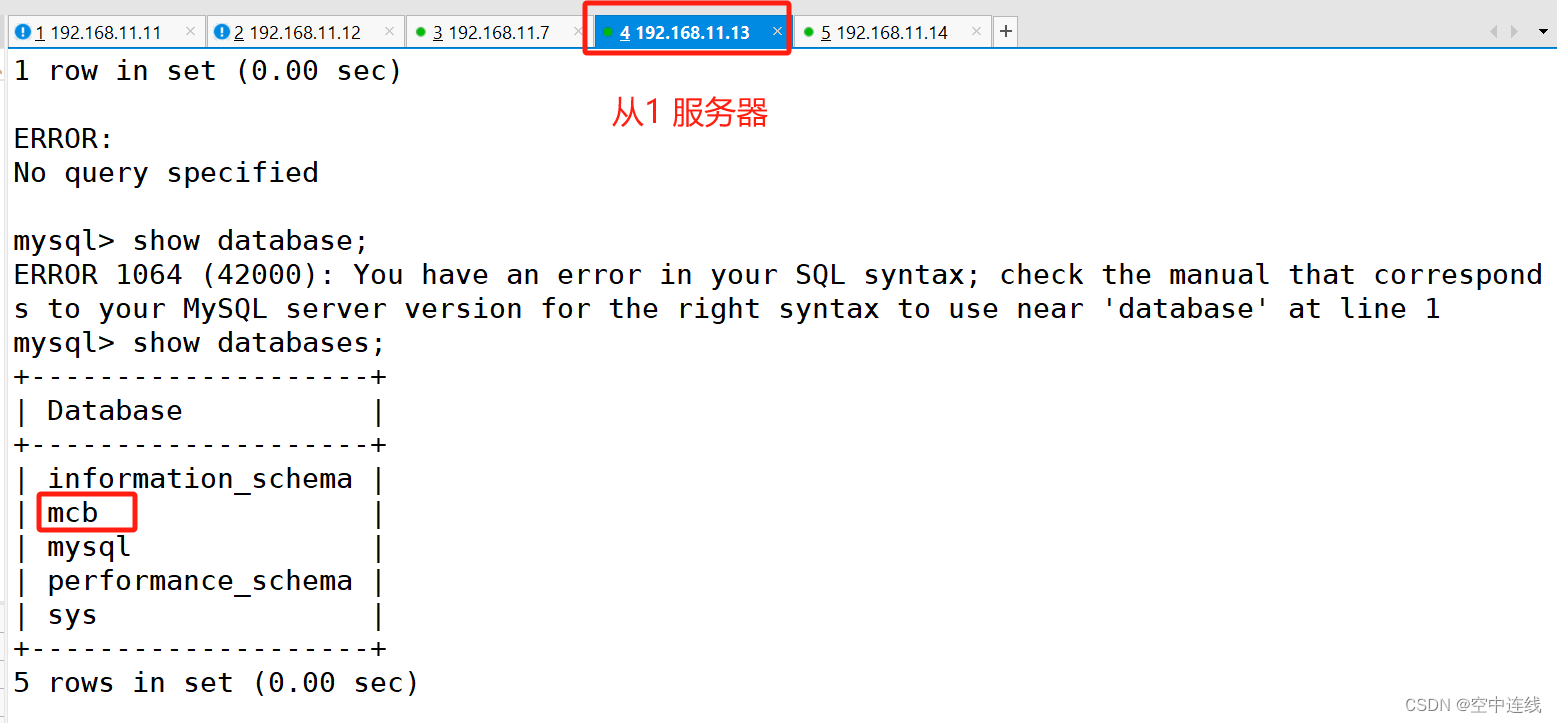
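The slave-side statement only appears in the screenshots. A sketch that builds it from the values `show master status` reports on the master (for example `master-bin.000001` and position `603`) and the `myslave` account granted in step 11. The function name `change_master_sql` is hypothetical. The strings use single quotes because `ANSI_QUOTES` is in `sql_mode`, which makes double-quoted text an identifier.

```bash
# Sketch: print CHANGE MASTER TO + START SLAVE for a slave node.
# Use the file/position your own master reports; they change as it writes.
change_master_sql() {
    local host=$1 file=$2 pos=$3
    cat <<EOF
CHANGE MASTER TO
  MASTER_HOST='${host}',
  MASTER_USER='myslave',
  MASTER_PASSWORD='123123',
  MASTER_LOG_FILE='${file}',
  MASTER_LOG_POS=${pos};
START SLAVE;
EOF
}
```

Usage on each slave: `change_master_sql 192.168.11.7 master-bin.000001 603 | mysql -uroot -p123`.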

Verify: create a database on the master:

```
mysql> flush privilieges;
ERROR 1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'privilieges' at line 1
mysql> flush privileges;
Query OK, 0 rows affected (0.01 sec)

mysql> show master status;
+-------------------+----------+--------------+------------------+-------------------+
| File              | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |
+-------------------+----------+--------------+------------------+-------------------+
| master-bin.000001 |      603 |              |                  |                   |
+-------------------+----------+--------------+------------------+-------------------+
1 row in set (0.00 sec)

mysql> create database mcb;
Query OK, 1 row affected (0.01 sec)

mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mcb                |
| mysql              |
| performance_schema |
| sys                |
+--------------------+
5 rows in set (0.01 sec)

mysql>
```

Verify on the slave servers:

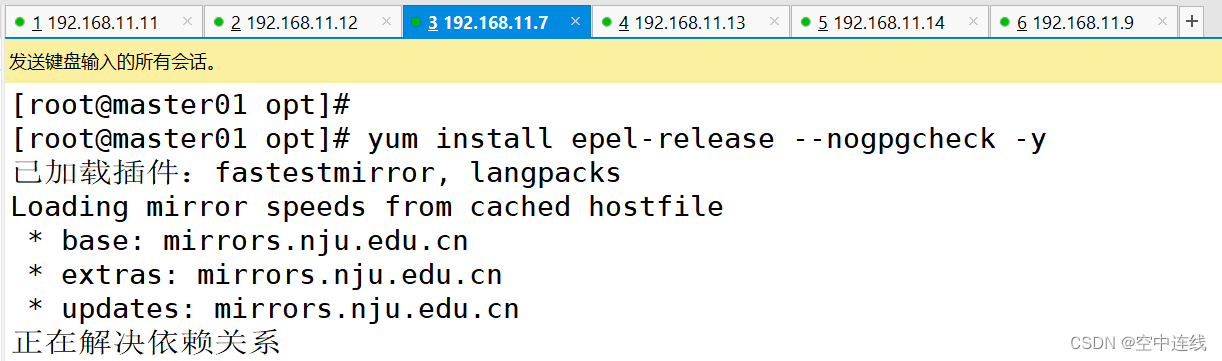

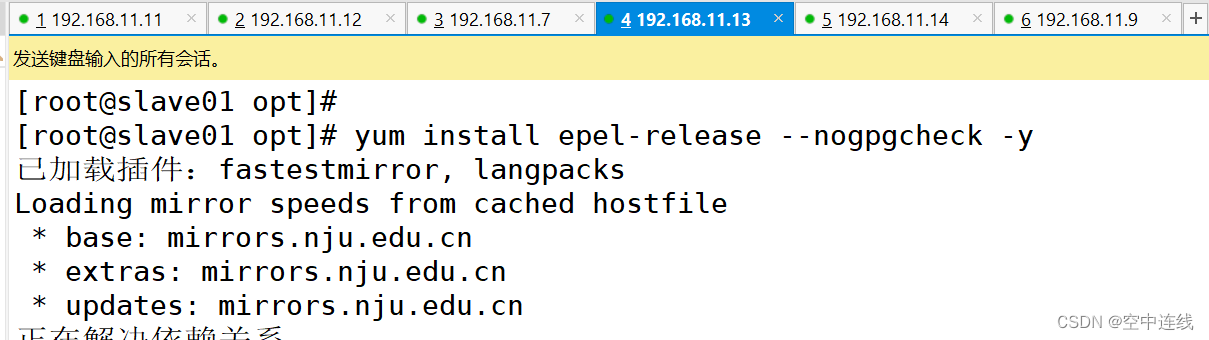

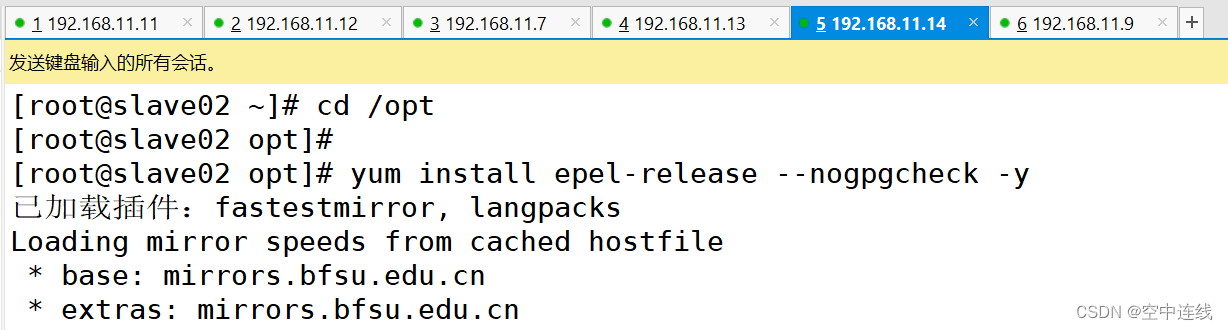

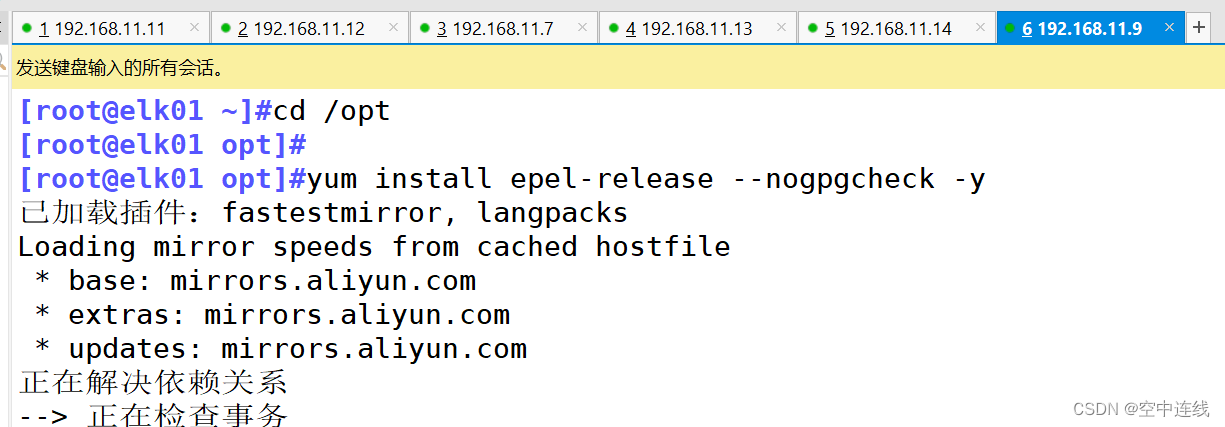

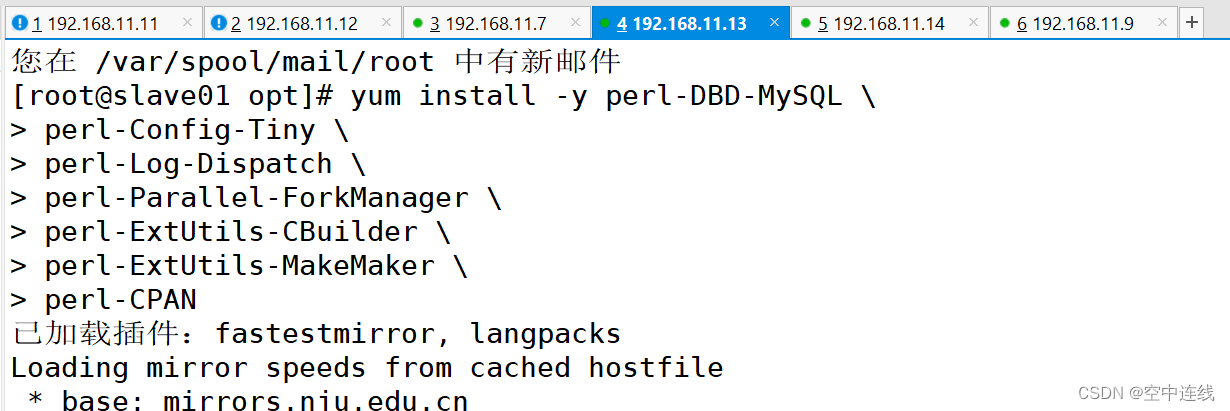

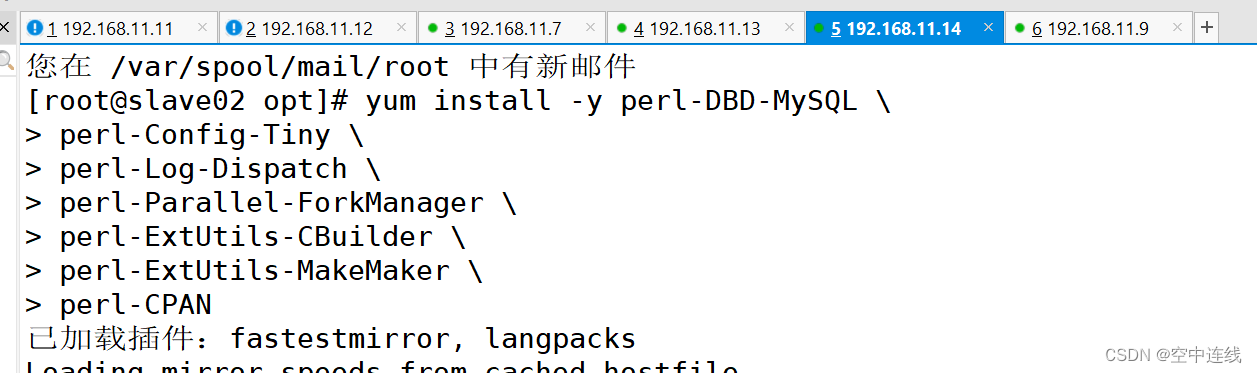

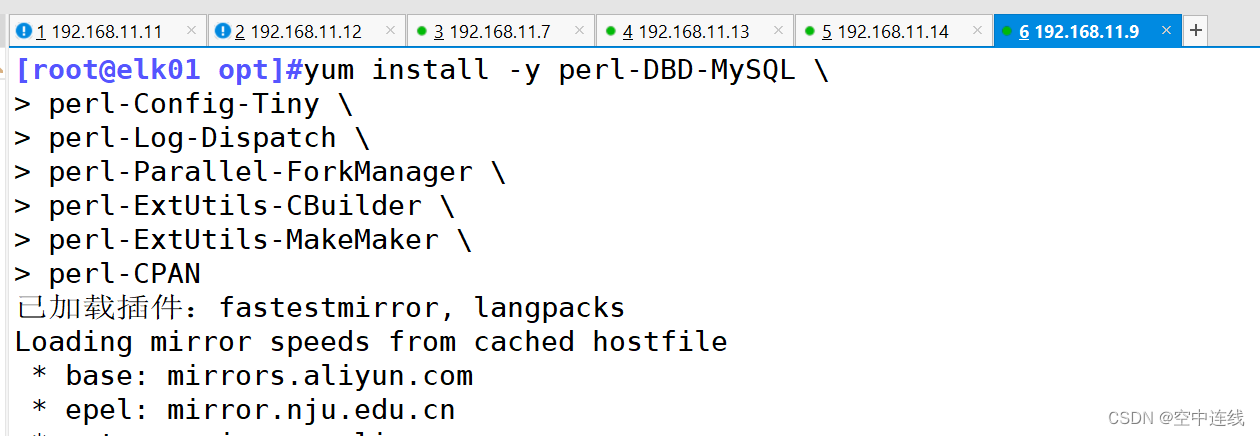

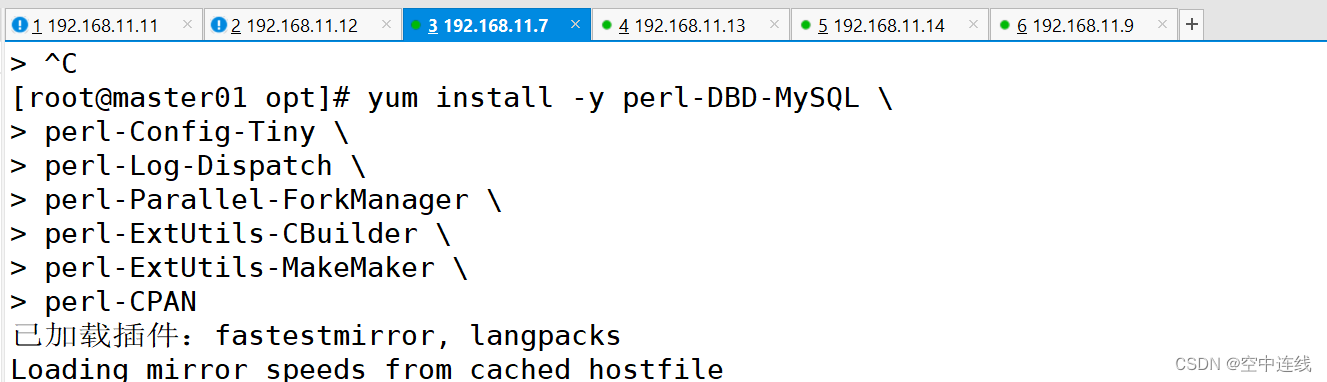
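Replication is healthy only when both slave threads report `Yes`. A sketch (the function name `slave_threads_ok` is hypothetical) that reads `SHOW SLAVE STATUS\G` output on stdin and checks exactly the `Slave_IO_Running` and `Slave_SQL_Running` fields. The `$` anchors keep `Slave_SQL_Running_State` from matching.

```bash
# Sketch: succeed only if Slave_IO_Running and Slave_SQL_Running are both "Yes".
slave_threads_ok() {
    awk -F': ' '
        $1 ~ /Slave_IO_Running$/  { io  = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }'
}
```

Usage on a slave: `mysql -uroot -p123 -e 'SHOW SLAVE STATUS\G' | slave_threads_ok && echo "replication OK"`.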

12. Configure all MHA components. Install MHA's dependencies on every server, starting with the EPEL repository:

```
yum install epel-release --nogpgcheck -y
yum install -y perl-DBD-MySQL \
  perl-Config-Tiny \
  perl-Log-Dispatch \
  perl-Parallel-ForkManager \
  perl-ExtUtils-CBuilder \
  perl-ExtUtils-MakeMaker \
  perl-CPAN
```

13. Install the environment on all four servers

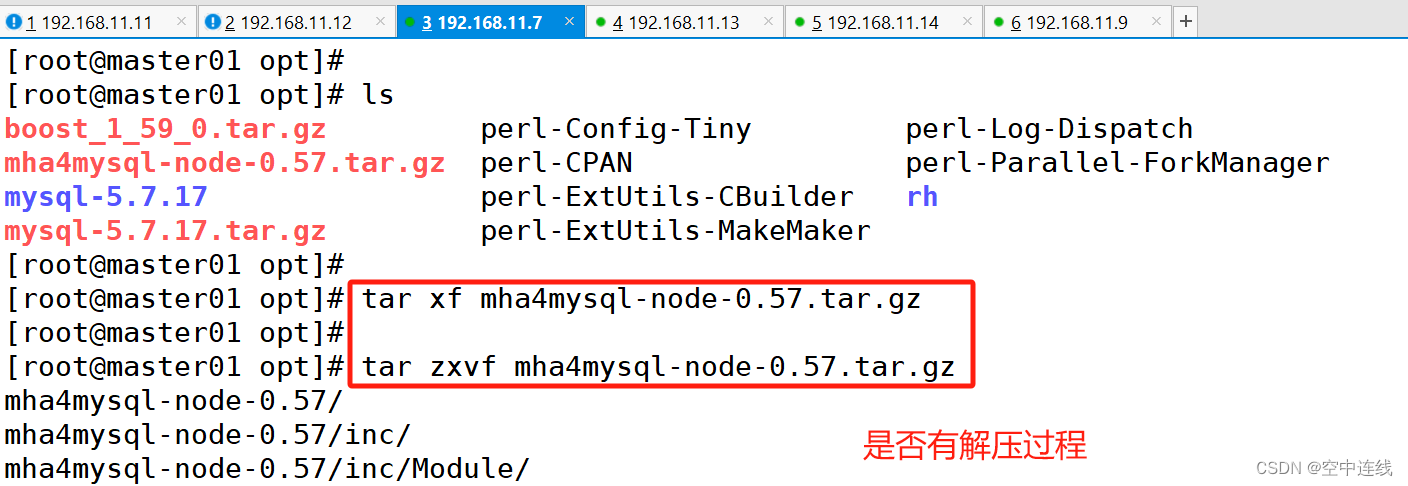

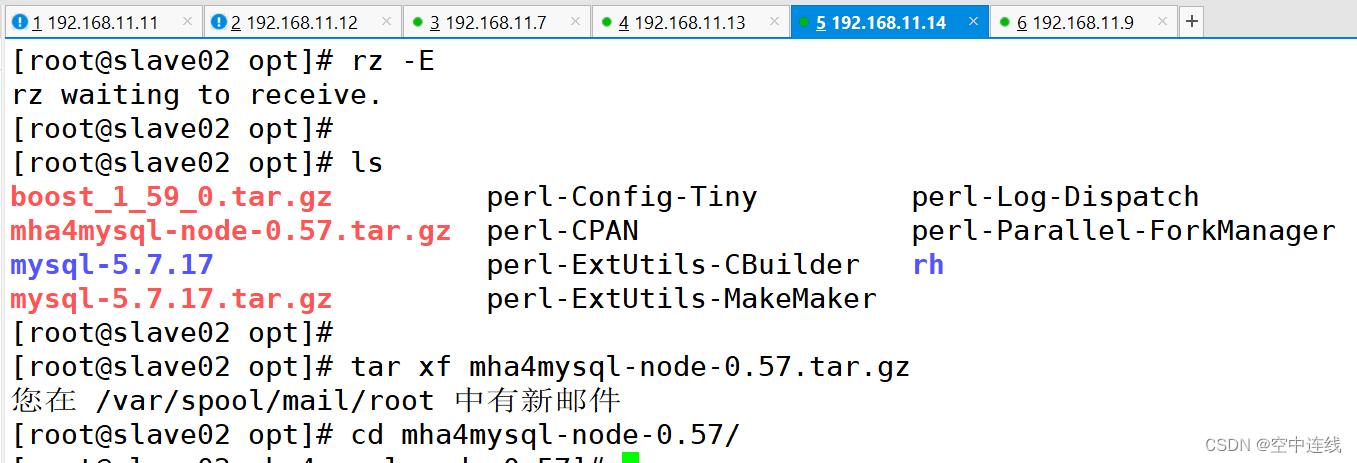

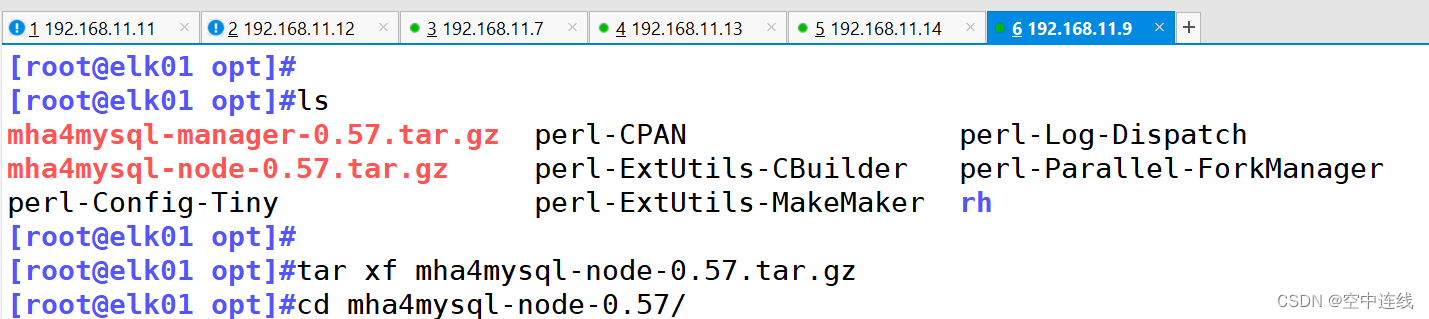

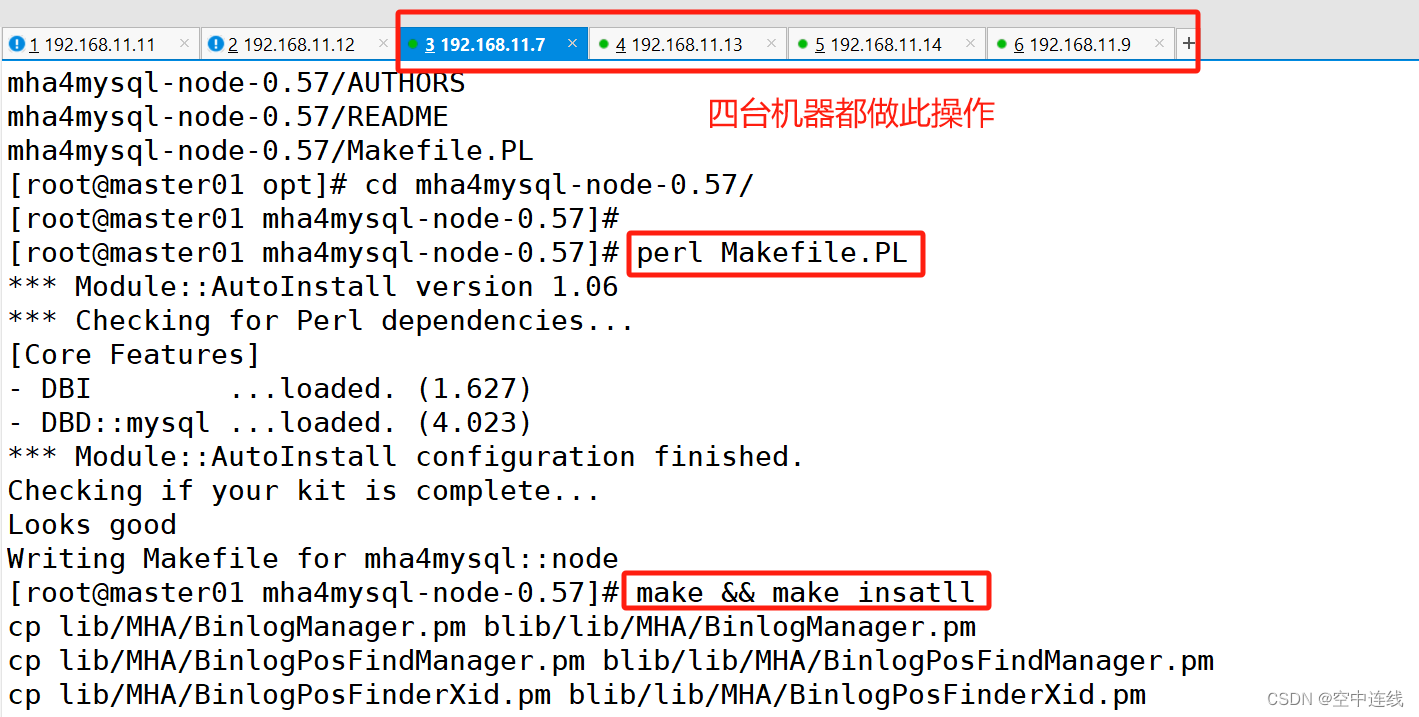

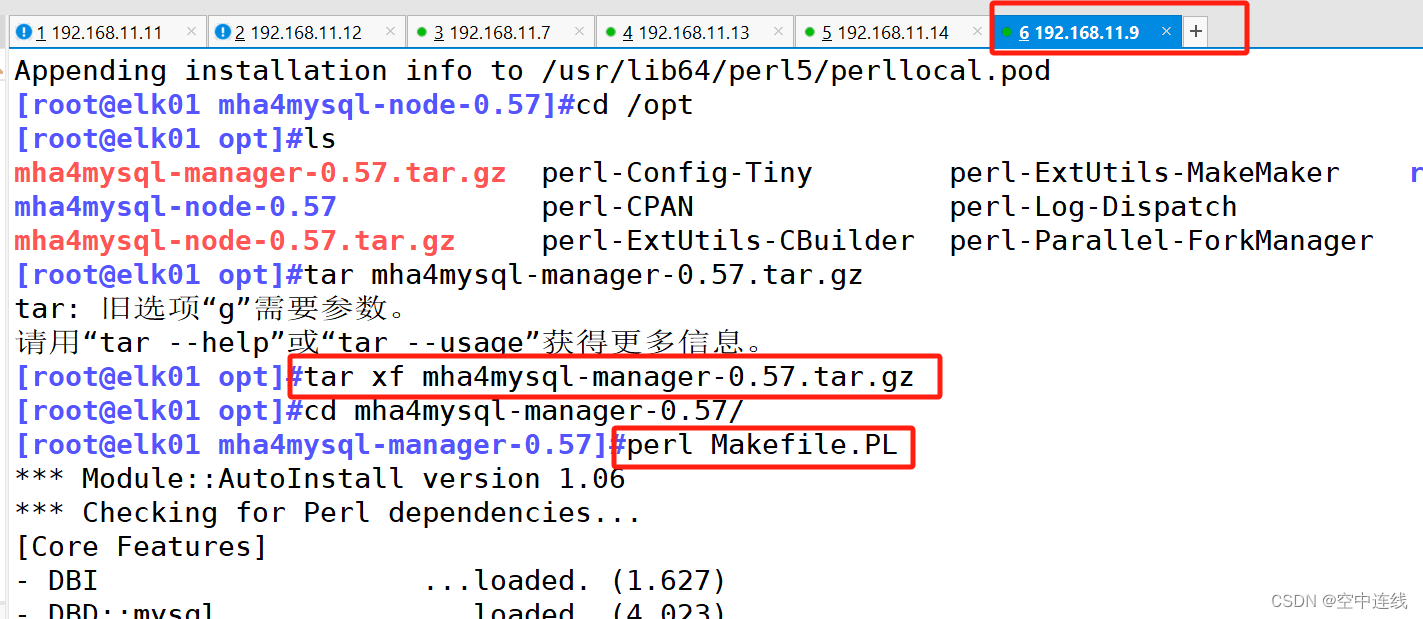

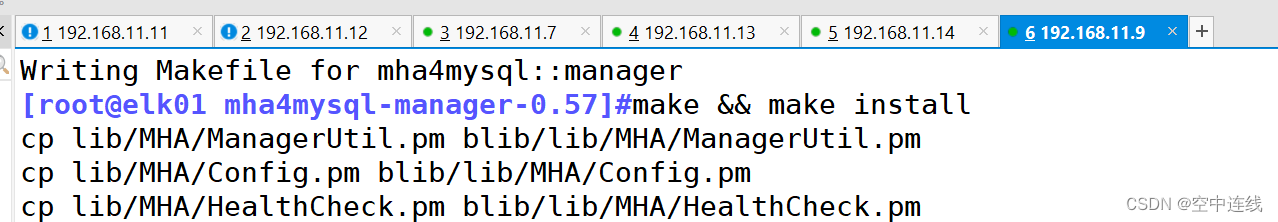

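14 Install the node component on every server first, then install the manager component on the MHA manager node last, because the manager component depends on the node component.

Install the manager component on the MHA manager node

Passwordless SSH from the manager node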

[root@elk01 mha4mysql-manager-0.57]#ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:HvUbXKX4fSndq2yADEyKmlwWJ+iJlHOVwma49qdcGKY root@elk01

The key's randomart image is:

+---[RSA 2048]----+

| = ... .|

| * O o . . o |

|+ B * + . . o |

|.= * . o . o o..o|

|o O o S . +..o+|

| E o o . + . o. o|

| . + . o . |

| o ... |

| .o |

+----[SHA256]-----+

[root@elk01 mha4mysql-manager-0.57]#ssh-copy-id 192.168.11.7

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.7 (192.168.11.7)' can't be established.

ECDSA key fingerprint is SHA256:uQfWnfl20Yj/iVllTVL3GAe3b5oPUj7IkhfWji2tF4Y.

ECDSA key fingerprint is MD5:23:93:1c:28:77:cc:64:8c:b6:fb:4a:c2:90:9c:b5:1a.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.7's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.7'"

and check to make sure that only the key(s) you wanted were added.

[root@elk01 mha4mysql-manager-0.57]#ssh-copy-id 192.168.11.13

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.13 (192.168.11.13)' can't be established.

ECDSA key fingerprint is SHA256:yxbaJImj8mJsF3SNpt1dlUq4RCnL5sn8R7NJNBhCQIs.

ECDSA key fingerprint is MD5:8b:67:9d:ff:25:ae:d2:81:f0:a0:ca:f6:af:ef:31:b1.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.13's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.13'"

and check to make sure that only the key(s) you wanted were added.

[root@elk01 mha4mysql-manager-0.57]#ssh-copy-id 192.168.11.14

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.14 (192.168.11.14)' can't be established.

ECDSA key fingerprint is SHA256:JAQ3v9JIlkv3lauqQxhRmSga7GPl5zIOv0THdDWT1TU.

ECDSA key fingerprint is MD5:d3:64:b1:26:6c:a5:f3:50:38:b2:db:ab:07:67:fe:00.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.14's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.14'"

and check to make sure that only the key(s) you wanted were added.
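The same key generation and distribution is repeated on the manager and on every MySQL node. A non-interactive sketch, assuming root logins with password authentication still enabled for the first `ssh-copy-id`; `setup_ssh_trust`, `KEY` and `RUN` are hypothetical names, and `RUN=echo` turns it into a dry run.

```bash
# Hypothetical helper: create an RSA key without a passphrase (if missing)
# and push it to every host given as an argument.
# KEY overrides the key path; RUN=echo prints the commands instead of running them.
setup_ssh_trust() {
  local key=${KEY:-$HOME/.ssh/id_rsa} host
  if [ ! -f "$key" ]; then
    # -N '' = empty passphrase, -f = key file, so ssh-keygen asks nothing
    ${RUN:-} ssh-keygen -t rsa -N '' -f "$key"
  fi
  for host in "$@"; do
    ${RUN:-} ssh-copy-id -i "$key.pub" "root@$host"
  done
}
```

On the manager this would be `setup_ssh_trust 192.168.11.7 192.168.11.13 192.168.11.14`; on each MySQL node, pass the other two MySQL nodes, as in the transcripts that follow.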

Set up passwordless SSH on the master

[root@master01 mha4mysql-node-0.57]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:vAZw2+fhzyIb49b+VAl10Vg7mUw2BY5rJGU5EuIgEwo root@master01

The key's randomart image is:

+---[RSA 2048]----+

|E +.. . ..o.oOB|

| . . o o ..oo+=.*|

| . . . . ..+..* |

| o + o o ..|

| o S o o o |

| . = o . |

| =.o . |

| oooo+ |

| .o+.o+ |

+----[SHA256]-----+

[root@master01 mha4mysql-node-0.57]# ssh-copy-id 192.168.11.13

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.13 (192.168.11.13)' can't be established.

ECDSA key fingerprint is SHA256:yxbaJImj8mJsF3SNpt1dlUq4RCnL5sn8R7NJNBhCQIs.

ECDSA key fingerprint is MD5:8b:67:9d:ff:25:ae:d2:81:f0:a0:ca:f6:af:ef:31:b1.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.13's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.13'"

and check to make sure that only the key(s) you wanted were added.

[root@master01 mha4mysql-node-0.57]# ssh-copy-id 192.168.11.14

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.14 (192.168.11.14)' can't be established.

ECDSA key fingerprint is SHA256:JAQ3v9JIlkv3lauqQxhRmSga7GPl5zIOv0THdDWT1TU.

ECDSA key fingerprint is MD5:d3:64:b1:26:6c:a5:f3:50:38:b2:db:ab:07:67:fe:00.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.14's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.14'"

and check to make sure that only the key(s) you wanted were added.

Set up passwordless SSH on slave1

[root@slave01 mha4mysql-node-0.57]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:yZwFzSDAbfdK2ybyqwU2VwfLx4EcK4bhN6I2t6mhsMw root@slave01

The key's randomart image is:

+---[RSA 2048]----+

| ..oo o=oo. |

| ..o+.o== . |

| .+.=.* + |

| . *.B.o |

| + =.S+ |

| . +.*+ o |

|. . oo.o |

|oo . o .. |

|.E. . .... |

+----[SHA256]-----+


[root@slave01 mha4mysql-node-0.57]# ssh-copy-id 192.168.11.14

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.14 (192.168.11.14)' can't be established.

ECDSA key fingerprint is SHA256:JAQ3v9JIlkv3lauqQxhRmSga7GPl5zIOv0THdDWT1TU.

ECDSA key fingerprint is MD5:d3:64:b1:26:6c:a5:f3:50:38:b2:db:ab:07:67:fe:00.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.14's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.14'"

and check to make sure that only the key(s) you wanted were added.


[root@slave01 mha4mysql-node-0.57]# ssh-copy-id 192.168.11.7

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.7 (192.168.11.7)' can't be established.

ECDSA key fingerprint is SHA256:uQfWnfl20Yj/iVllTVL3GAe3b5oPUj7IkhfWji2tF4Y.

ECDSA key fingerprint is MD5:23:93:1c:28:77:cc:64:8c:b6:fb:4a:c2:90:9c:b5:1a.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.7's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.7'"

and check to make sure that only the key(s) you wanted were added.

Set up passwordless SSH on slave2

[root@slave02 mha4mysql-node-0.57]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:fxWAkdGfr9KqphQ2b40ZUq/kys2thugv5jehXNycyMM root@slave02

The key's randomart image is:

+---[RSA 2048]----+

| o*. |

| o .. |

| . ... |

| . . o. |

| +S+o.. .. |

| .EO+* . .|

| . +.+O o. . |

| *o+=oo. o |

| +o+===ooo |

+----[SHA256]-----+


[root@slave02 mha4mysql-node-0.57]# ssh-copy-id 192.168.11.7

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.7 (192.168.11.7)' can't be established.

ECDSA key fingerprint is SHA256:uQfWnfl20Yj/iVllTVL3GAe3b5oPUj7IkhfWji2tF4Y.

ECDSA key fingerprint is MD5:23:93:1c:28:77:cc:64:8c:b6:fb:4a:c2:90:9c:b5:1a.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.7's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.7'"

and check to make sure that only the key(s) you wanted were added.

[root@slave02 mha4mysql-node-0.57]# ssh-copy-id 192.168.11.13

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.11.13 (192.168.11.13)' can't be established.

ECDSA key fingerprint is SHA256:yxbaJImj8mJsF3SNpt1dlUq4RCnL5sn8R7NJNBhCQIs.

ECDSA key fingerprint is MD5:8b:67:9d:ff:25:ae:d2:81:f0:a0:ca:f6:af:ef:31:b1.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.11.13's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.11.13'"

and check to make sure that only the key(s) you wanted were added.

15 Configure MHA on the manager node

(1) On the manager node, copy the related scripts to /usr/local/bin

[root@elk01 mha4mysql-manager-0.57]#cp -rp /opt/mha4mysql-manager-0.57/samples/scripts /usr/local/bin

[root@elk01 mha4mysql-manager-0.57]#ll /usr/local/bin/scripts/

total 32

-rwxr-xr-x 1 1001 1001 3648 May 31 2015 master_ip_failover

-rwxr-xr-x 1 1001 1001 9870 May 31 2015 master_ip_online_change

-rwxr-xr-x 1 1001 1001 11867 May 31 2015 power_manager

-rwxr-xr-x 1 1001 1001 1360 May 31 2015 send_report

[root@elk01 mha4mysql-manager-0.57]#cp /usr/local/bin/scripts/master_ip_failover /usr/local/bin/

[root@elk01 mha4mysql-manager-0.57]#ll /usr/local/bin

total 88

-r-xr-xr-x 1 root root 16381 Apr 20 13:40 apply_diff_relay_logs

-r-xr-xr-x 1 root root 4807 Apr 20 13:40 filter_mysqlbinlog

-r-xr-xr-x 1 root root 1995 Apr 20 13:52 masterha_check_repl

-r-xr-xr-x 1 root root 1779 Apr 20 13:52 masterha_check_ssh

-r-xr-xr-x 1 root root 1865 Apr 20 13:52 masterha_check_status

-r-xr-xr-x 1 root root 3201 Apr 20 13:52 masterha_conf_host

-r-xr-xr-x 1 root root 2517 Apr 20 13:52 masterha_manager

-r-xr-xr-x 1 root root 2165 Apr 20 13:52 masterha_master_monitor

-r-xr-xr-x 1 root root 2373 Apr 20 13:52 masterha_master_switch

-r-xr-xr-x 1 root root 5171 Apr 20 13:52 masterha_secondary_check

-r-xr-xr-x 1 root root 1739 Apr 20 13:52 masterha_stop

-rwxr-xr-x 1 root root 3648 Apr 20 14:13 master_ip_failover

-r-xr-xr-x 1 root root 8261 Apr 20 13:40 purge_relay_logs

-r-xr-xr-x 1 root root 7525 Apr 20 13:40 save_binary_logs

drwxr-xr-x 2 1001 1001 103 May 31 2015 scripts

16 Create the MHA configuration directory and copy the sample configuration file; app1.cnf is used here to manage the MySQL node servers

[root@elk01 mha4mysql-manager-0.57]#vim /usr/local/bin/master_ip_failover

[root@elk01 mha4mysql-manager-0.57]#mkdir /etc/masterha

[root@elk01 mha4mysql-manager-0.57]#vim /usr/local/bin/master_ip_failover

[root@elk01 mha4mysql-manager-0.57]#cp /opt/mha4mysql-manager-0.57/samples/conf/app1.cnf /etc/masterha

[root@elk01 mha4mysql-manager-0.57]#vim /etc/masterha/app1.cnf

[server default]

manager_log=/var/log/masterha/app1/manager.log

manager_workdir=/var/log/masterha/app1

master_binlog_dir=/usr/local/mysql/data

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

password=abc123

user=my

ping_interval=1

remote_workdir=/tmp

repl_password=123

repl_user=myslave

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.11.13 -s 192.168.11.14

shutdown_script=""

ssh_user=root

[server1]

hostname=192.168.11.7

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=192.168.11.13

port=3306

[server3]

hostname=192.168.11.14

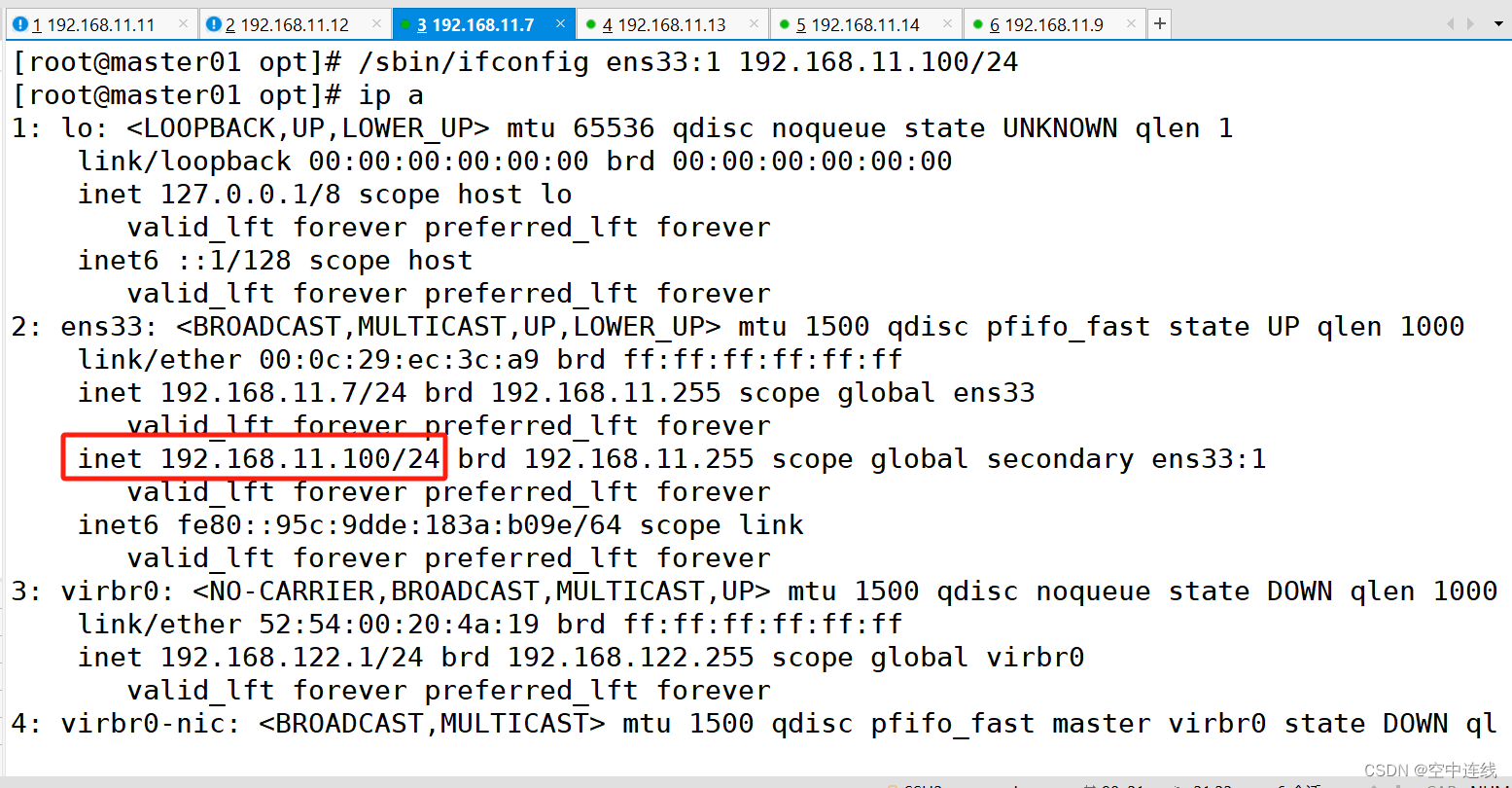

port=330615 第一次配置需要在 Master 节点上手动开启虚拟IP地址

[root@master01 opt]# /sbin/ifconfig ens33:1 192.168.11.100/24

[root@master01 opt]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

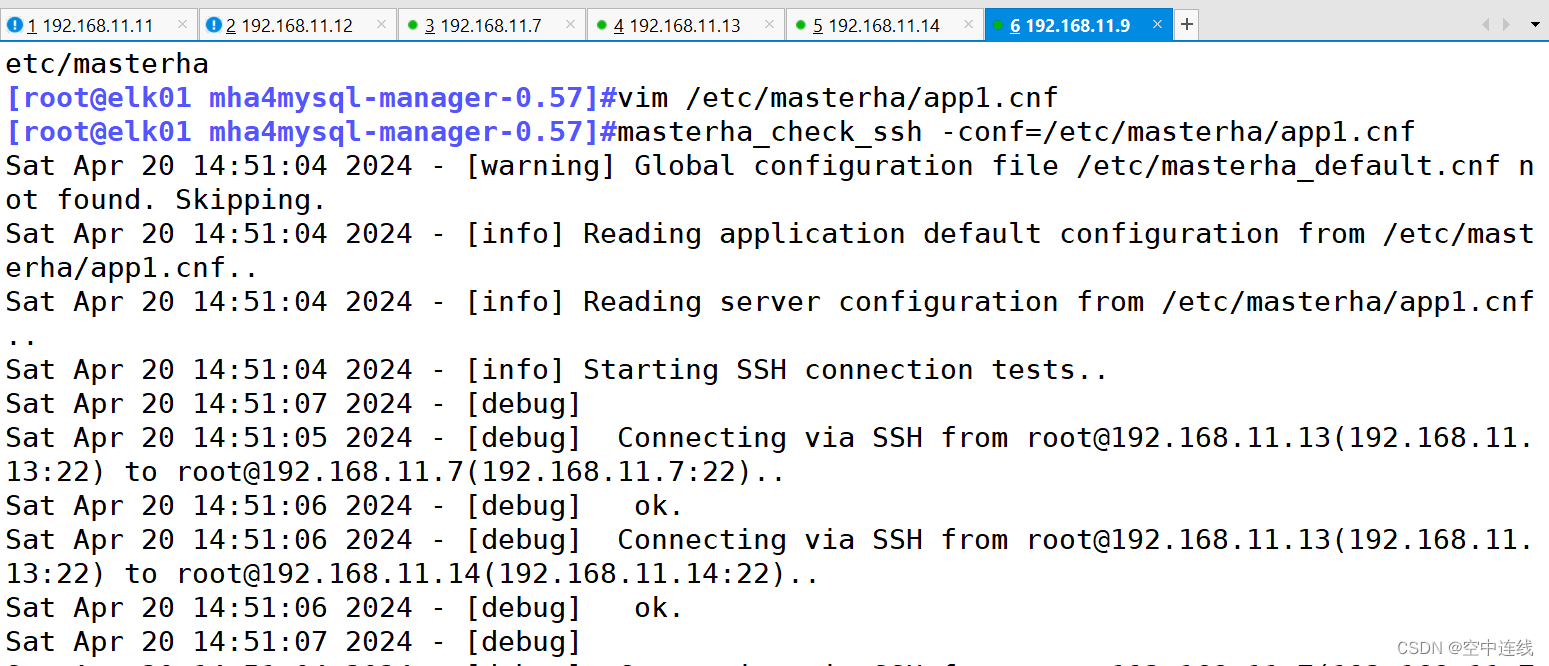

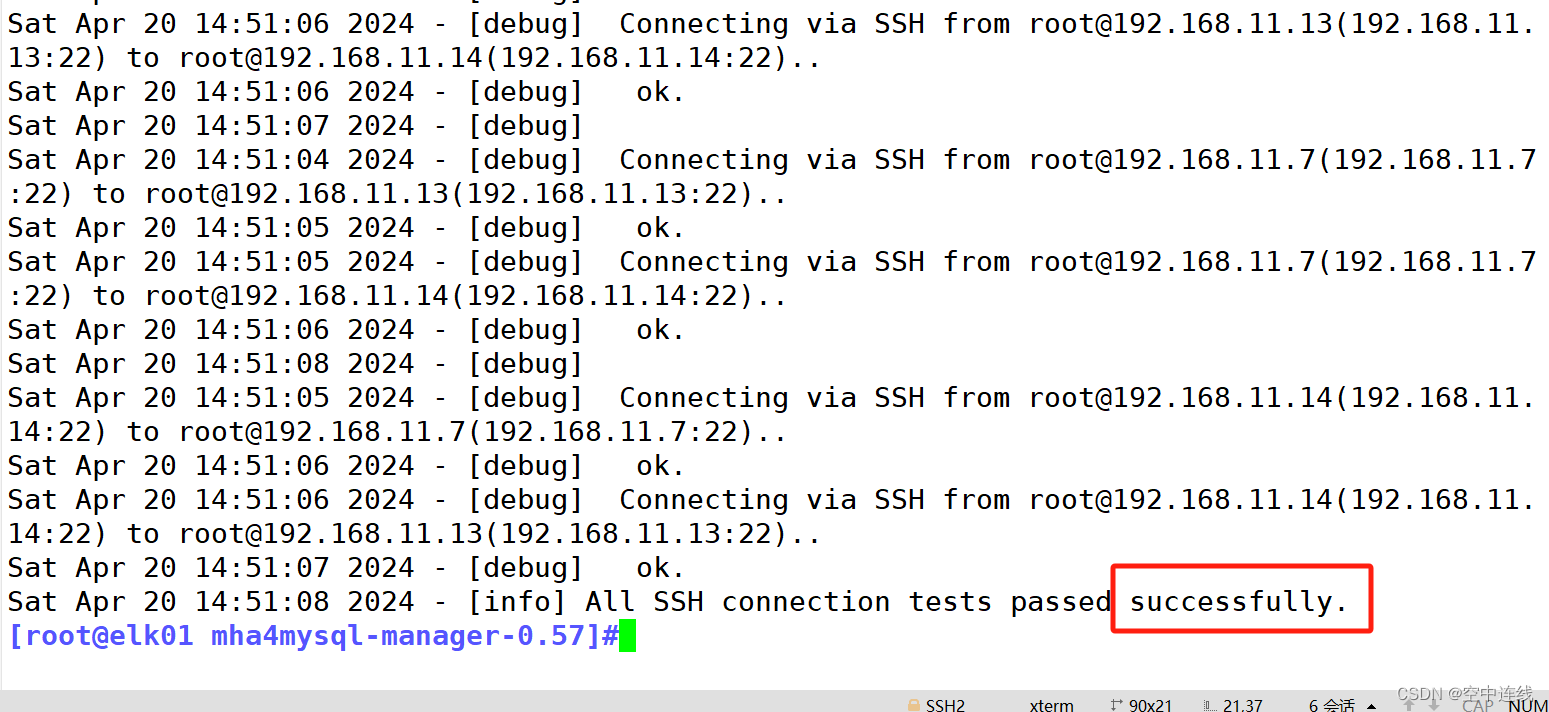

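18 Test passwordless SSH authentication from the manager node

On the manager node, test passwordless SSH authentication; if everything is fine, the output ends with "successfully", as shown below.

masterha_check_ssh --conf=/etc/masterha/app1.cnf

19 Test the MySQL master-slave connections from the manager node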

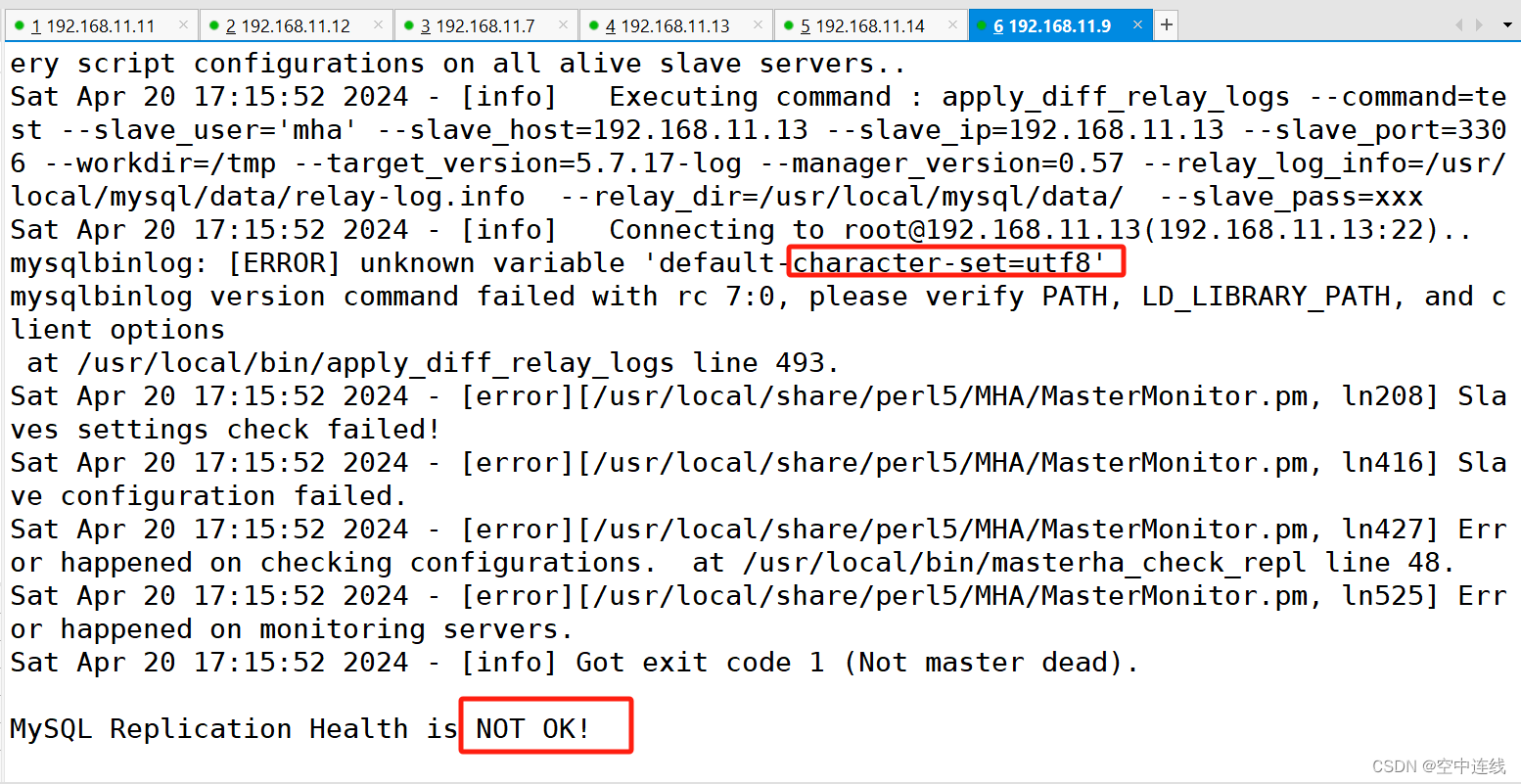

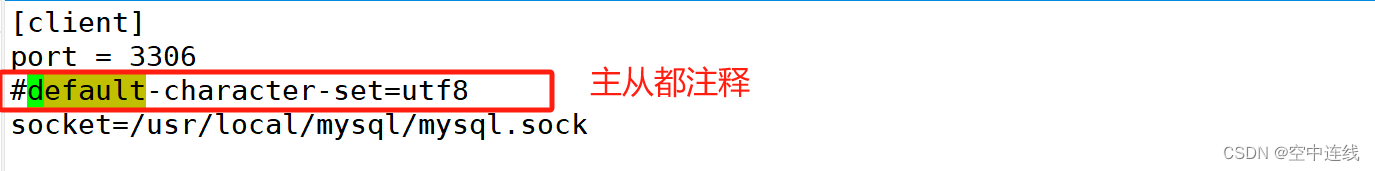

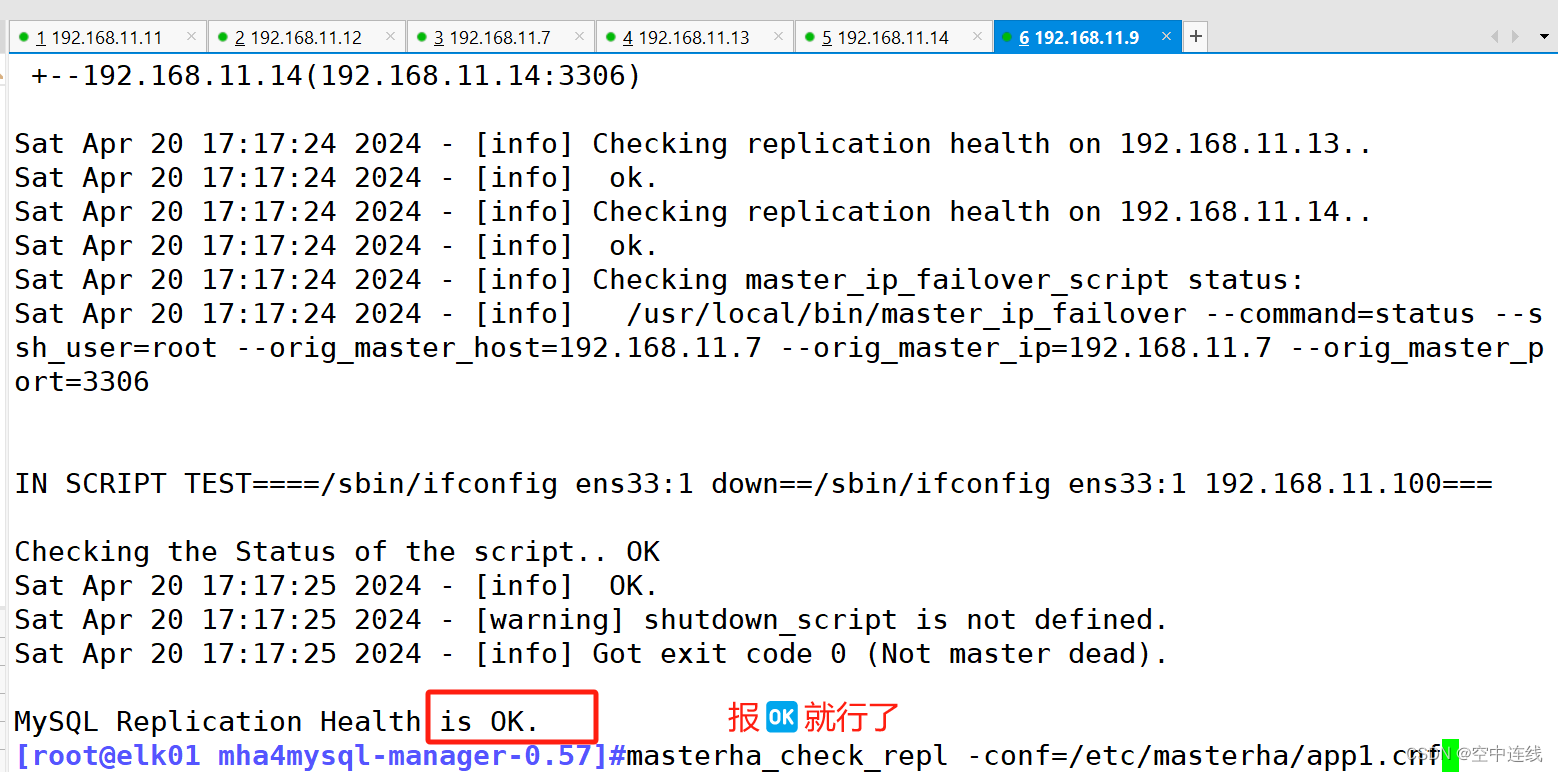

On the manager node, test the MySQL replication setup; the message "MySQL Replication Health is OK" at the end means everything is fine, as shown below.

Run:

[root@elk01 mha4mysql-manager-0.57]#masterha_check_repl --conf=/etc/masterha/app1.cnf

Start MHA on the manager node

[root@elk01 mha4mysql-manager-0.57]#nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

[1] 10192

[root@elk01 mha4mysql-manager-0.57]#ps -aux|grep manager

root 10192 0.5 0.5 297380 21752 pts/0 S 17:20 0:00 perl /usr/local/bin/masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover

root 10295 0.0 0.0 112824 984 pts/0 S+ 17:21 0:00 grep --color=auto manager

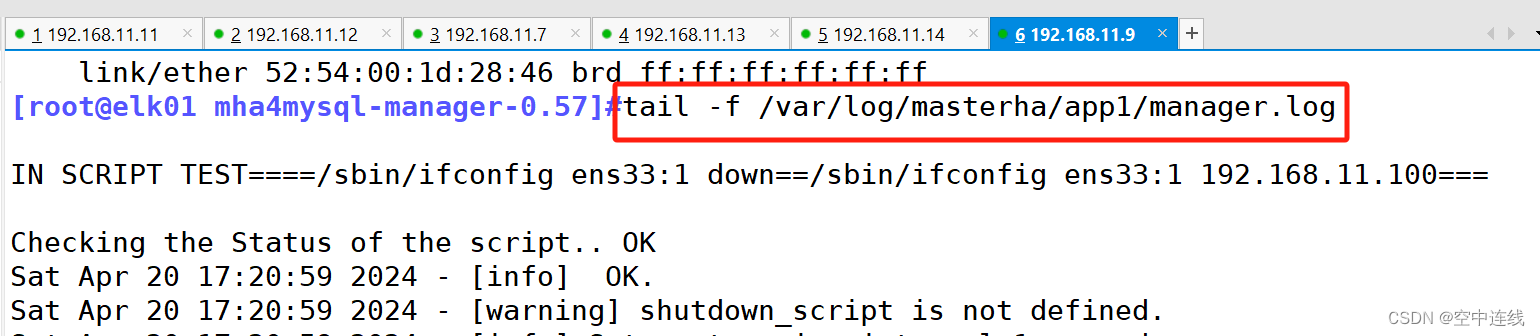

On the manager node, check the MHA status and the MHA log; both show the master's address

[root@elk01 mha4mysql-manager-0.57]#masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:10192) is running(0:PING_OK), master:192.168.11.7

[root@elk01 mha4mysql-manager-0.57]#cat /var/log/masterha/app1/manager.log | grep "current master"

Sat Apr 20 17:20:56 2024 - [info] Checking SSH publickey authentication settings on the current master..

192.168.11.7(192.168.11.7:3306) (current master)
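To use the status check from a script (for example, in monitoring), the master address can be pulled out of the `masterha_check_status` line shown above. A sketch, assuming the output format seen in this lab; `mha_current_master` is a hypothetical name. It prints nothing and fails when the manager is not running with PING_OK.

```bash
# Hypothetical helper: read `masterha_check_status` output on stdin and print
# the current master address; fail if the manager is not running with PING_OK.
mha_current_master() {
  local m
  m=$(sed -n 's/.*is running(0:PING_OK), master:\([0-9.]*\).*/\1/p')
  [ -n "$m" ] && printf '%s\n' "$m"
}
```

Usage: `masterha_check_status --conf=/etc/masterha/app1.cnf | mha_current_master || echo "MHA manager is not running"`.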

Check that the VIP 192.168.11.100 exists on the master

This VIP does not disappear when the MHA service on the manager node is stopped.

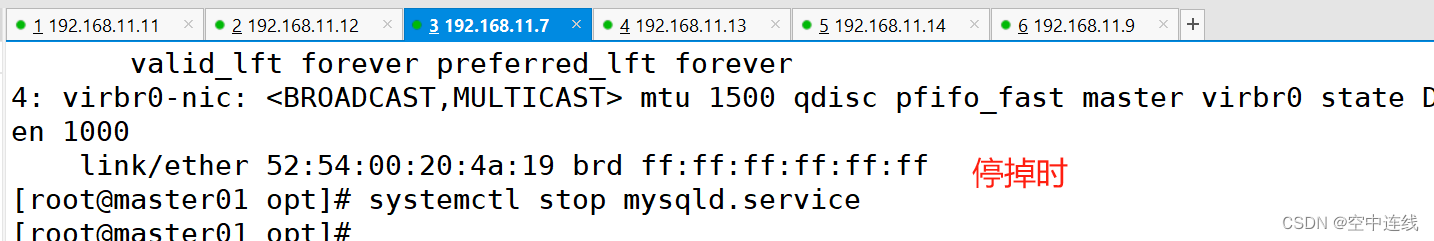

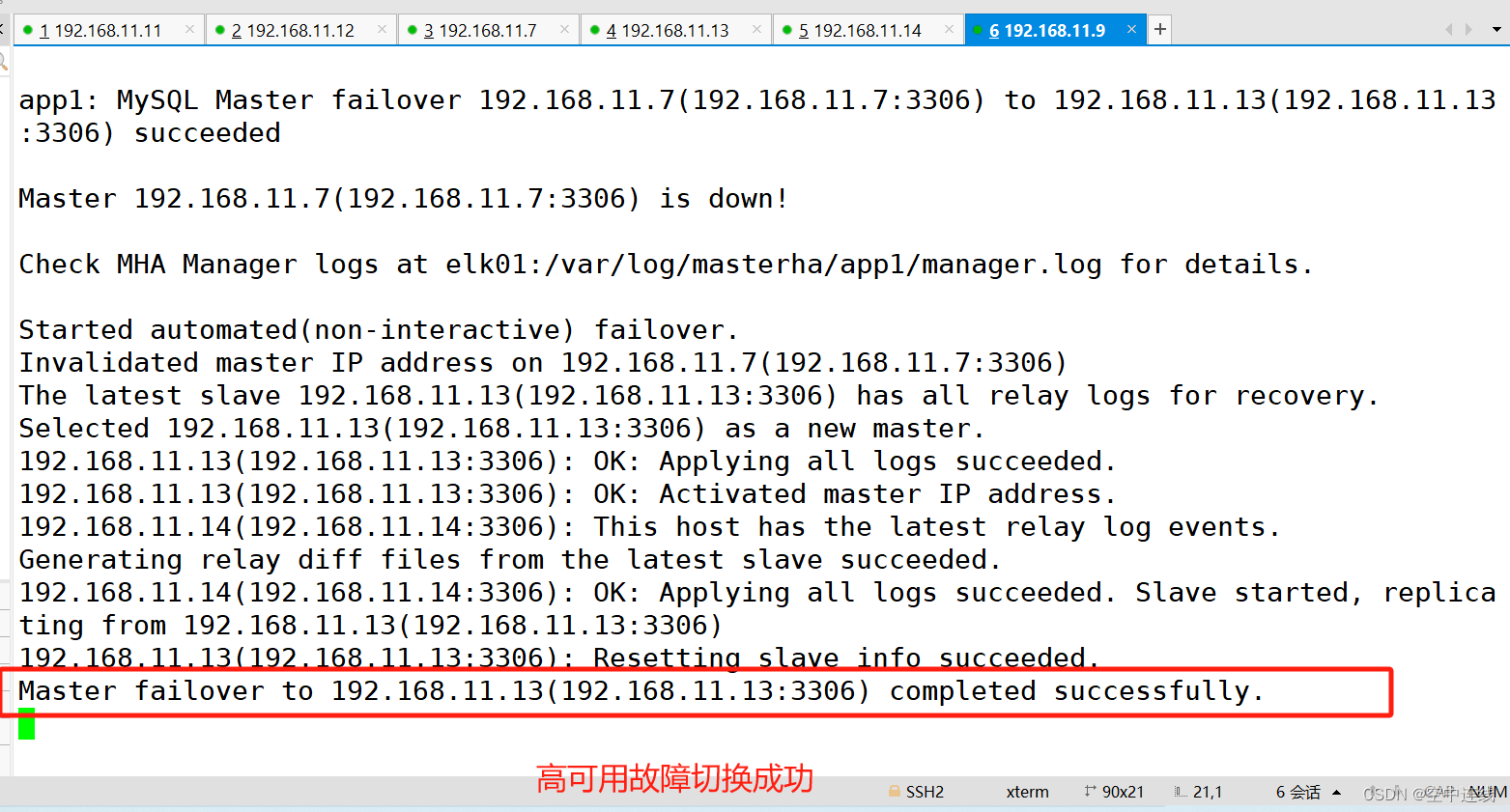

Failure simulation

On the manager node, watch the log:

tail -f /var/log/masterha/app1/manager.log

At the same time, stop the MySQL service on the master node.

Then check the log on the manager server again.

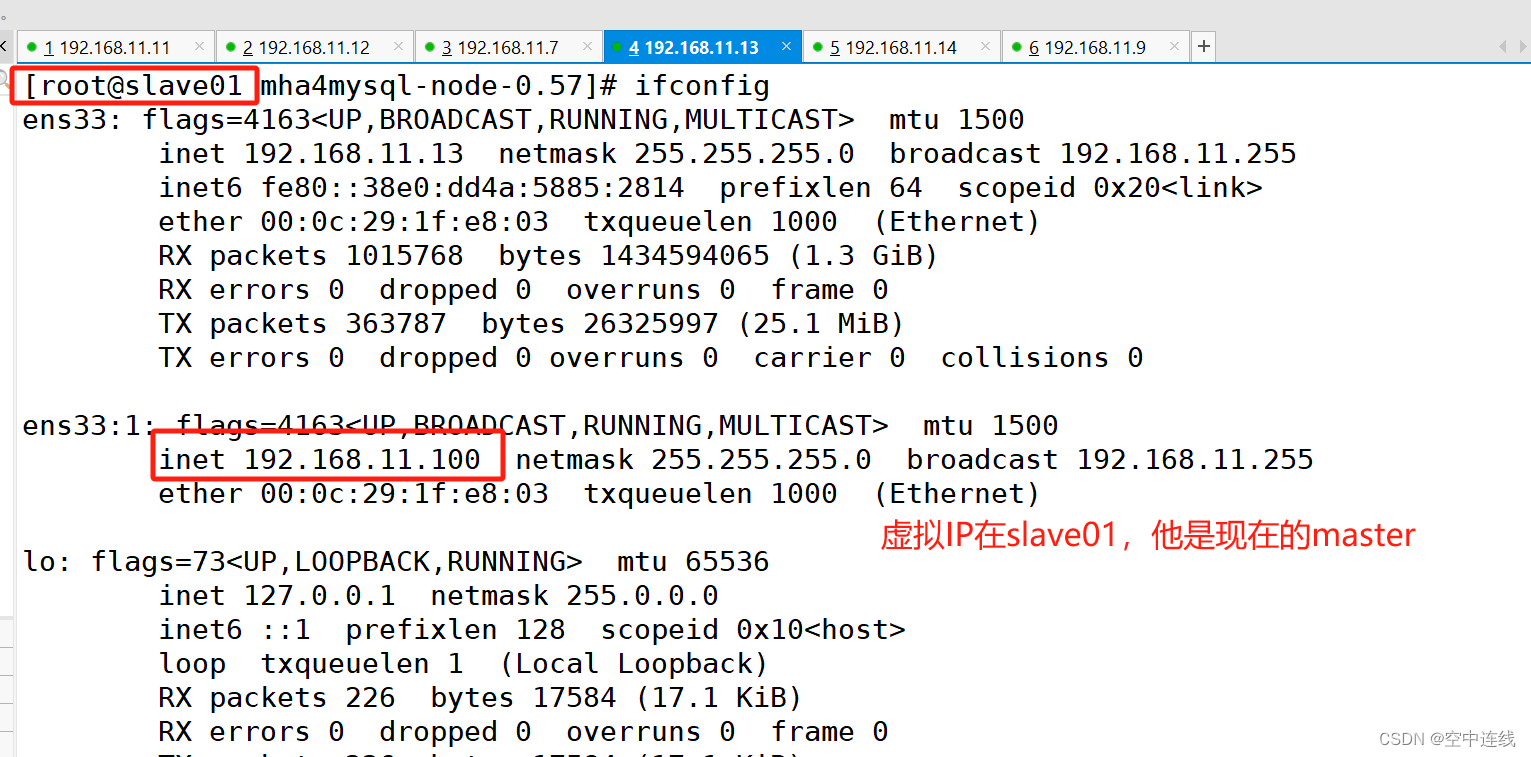

After one successful automatic failover, the MHA manager process exits, and MHA edits app1.cnf to remove the failed master's entry. Run ifconfig on slave1 to check whether it has taken over the VIP.

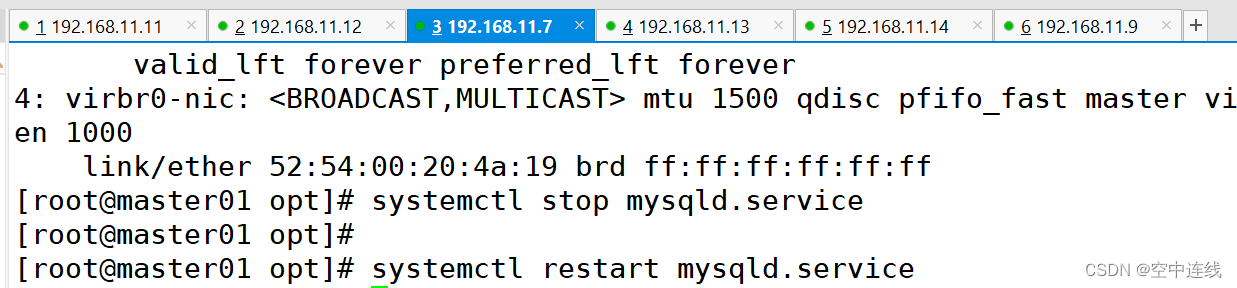
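Checking which host holds the VIP can also be done without reading the full ifconfig output. A sketch, assuming the iproute2 `ip -4 -o addr show` one-line format; `has_vip` is a hypothetical name. Matching on `inet <vip>/` keeps 192.168.11.10 from falsely matching 192.168.11.100.

```bash
# Hypothetical helper: succeed if the VIP in $1 appears in
# `ip -4 -o addr show` output read from stdin.
has_vip() {
  # Fixed-string match; the trailing "/" stops 192.168.11.10 matching 192.168.11.100.
  grep -Fq "inet $1/"
}
```

For example: `for h in 192.168.11.7 192.168.11.13; do ssh "$h" ip -4 -o addr show | has_vip 192.168.11.100 && echo "VIP is on $h"; done`.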

Failure recovery

Repair the original master (the original primary node)

Repair replication

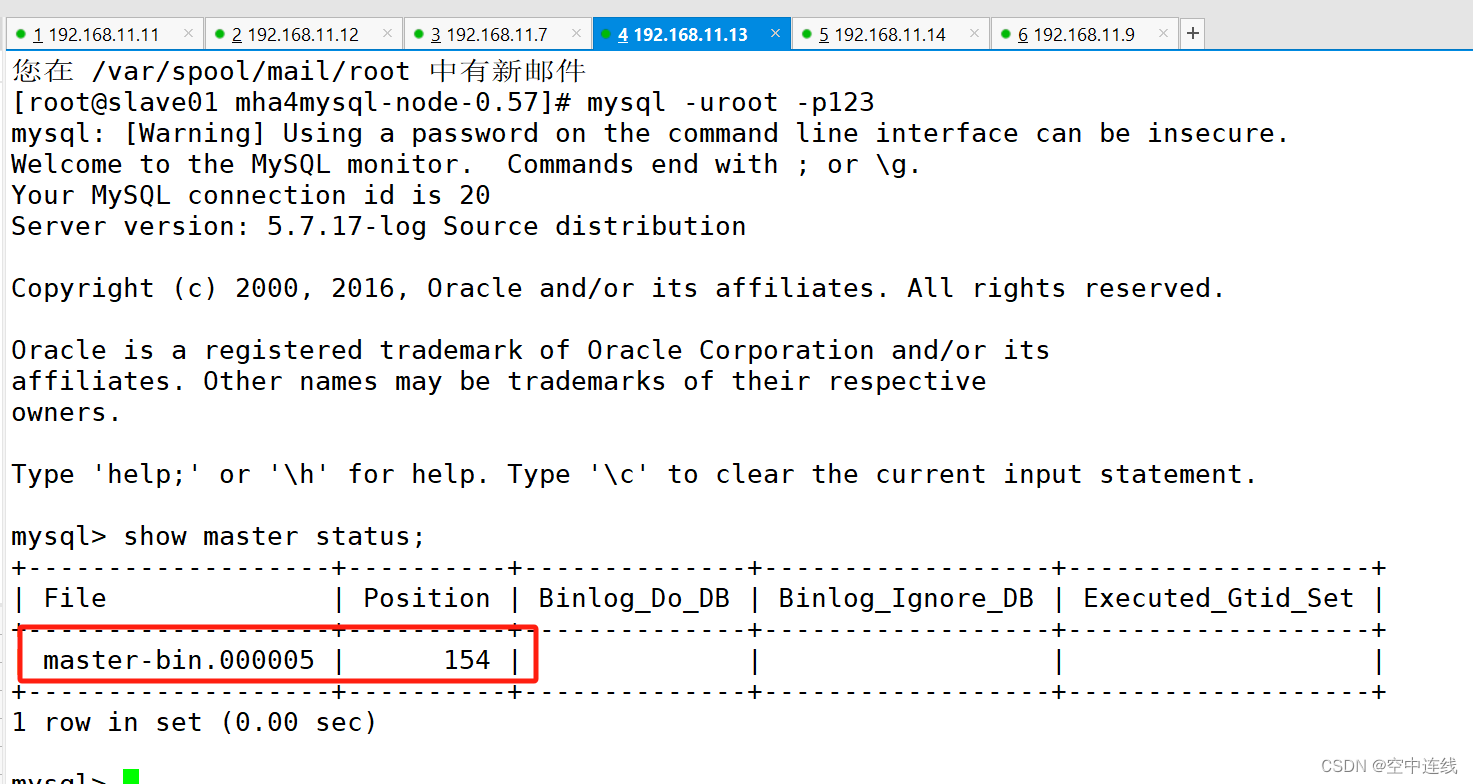

On the new master, slave1, check the binary log file and position

show master status;

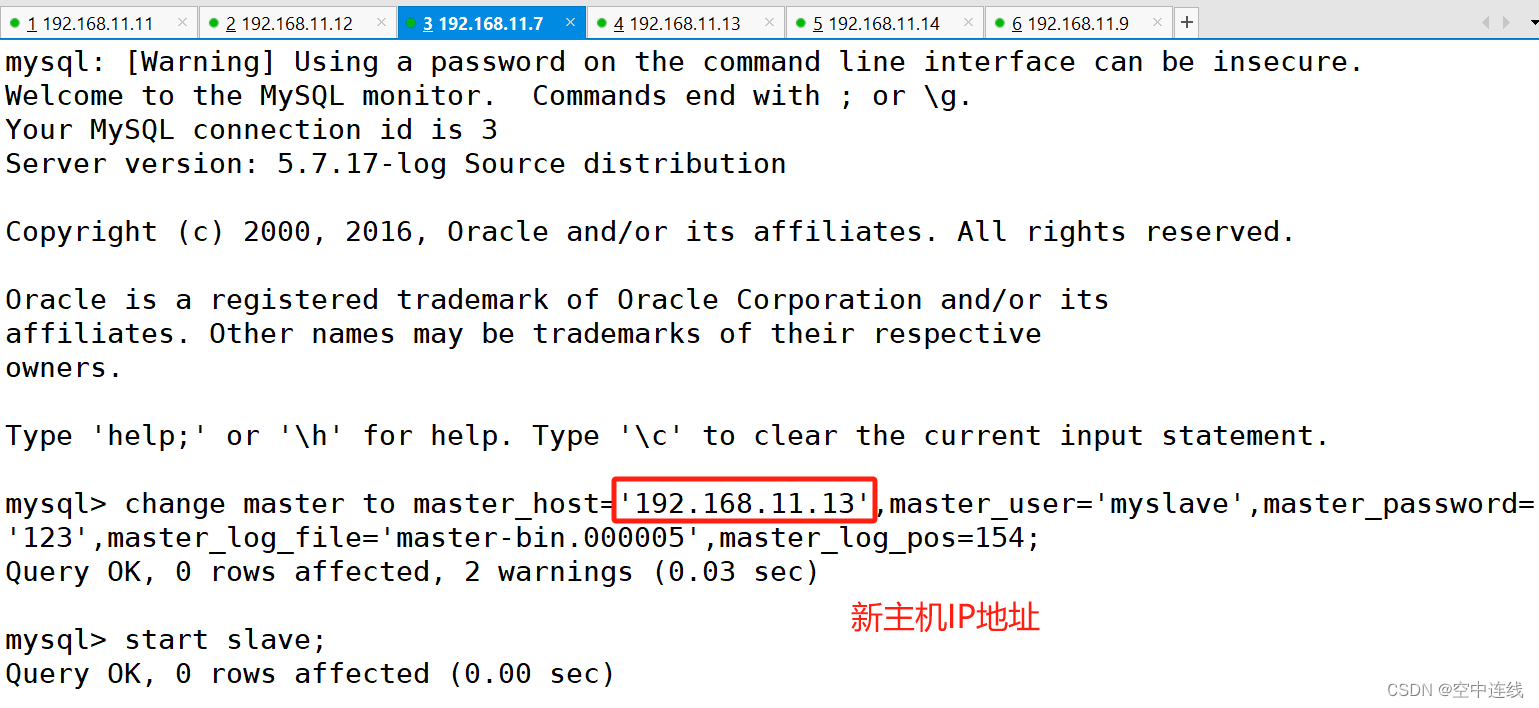

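On the original master, point replication at the current master so it syncs that server's data

change master to master_host='192.168.11.13',master_user='myslave',master_password='123',master_log_file='master-bin.000005',master_log_pos=154;

Then run start slave; and confirm with show slave status\G that Slave_IO_Running and Slave_SQL_Running are both Yes.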
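Instead of copying the file name and position by hand, the CHANGE MASTER statement can be built from the new master's `show master status` output. A sketch, assuming `mysql -N` (no header, tab-separated output) and the myslave/123 replication account used in this lab; `build_change_master` is a hypothetical name.

```bash
# Hypothetical helper: turn `mysql -N -e 'show master status'` output (stdin,
# tab-separated, no header) into the CHANGE MASTER statement for the old master.
# $1 = new master host, $2 = replication user, $3 = replication password.
build_change_master() {
  local host=$1 user=$2 pass=$3 file pos rest
  # The first two tab-separated columns are File and Position.
  IFS=$'\t' read -r file pos rest
  if [ -z "$file" ] || [ -z "$pos" ]; then
    echo "build_change_master: no master status on stdin" >&2
    return 1
  fi
  printf "change master to master_host='%s',master_user='%s',master_password='%s',master_log_file='%s',master_log_pos=%s;\n" \
    "$host" "$user" "$pass" "$file" "$pos"
}
```

Usage: `mysql -h 192.168.11.13 -uroot -p -N -e 'show master status' | build_change_master 192.168.11.13 myslave 123`, then run the printed statement followed by `start slave;` on the old master.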

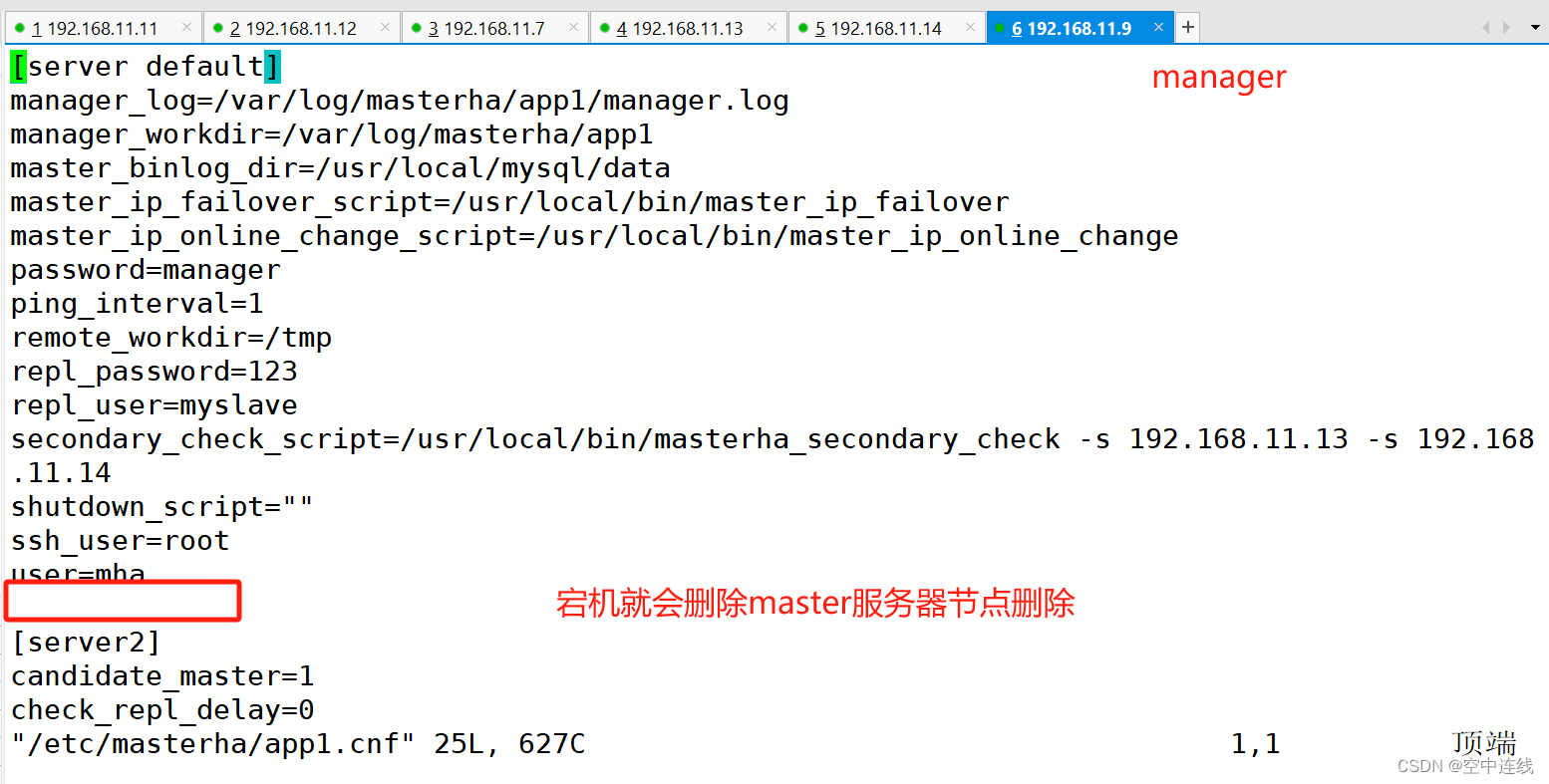

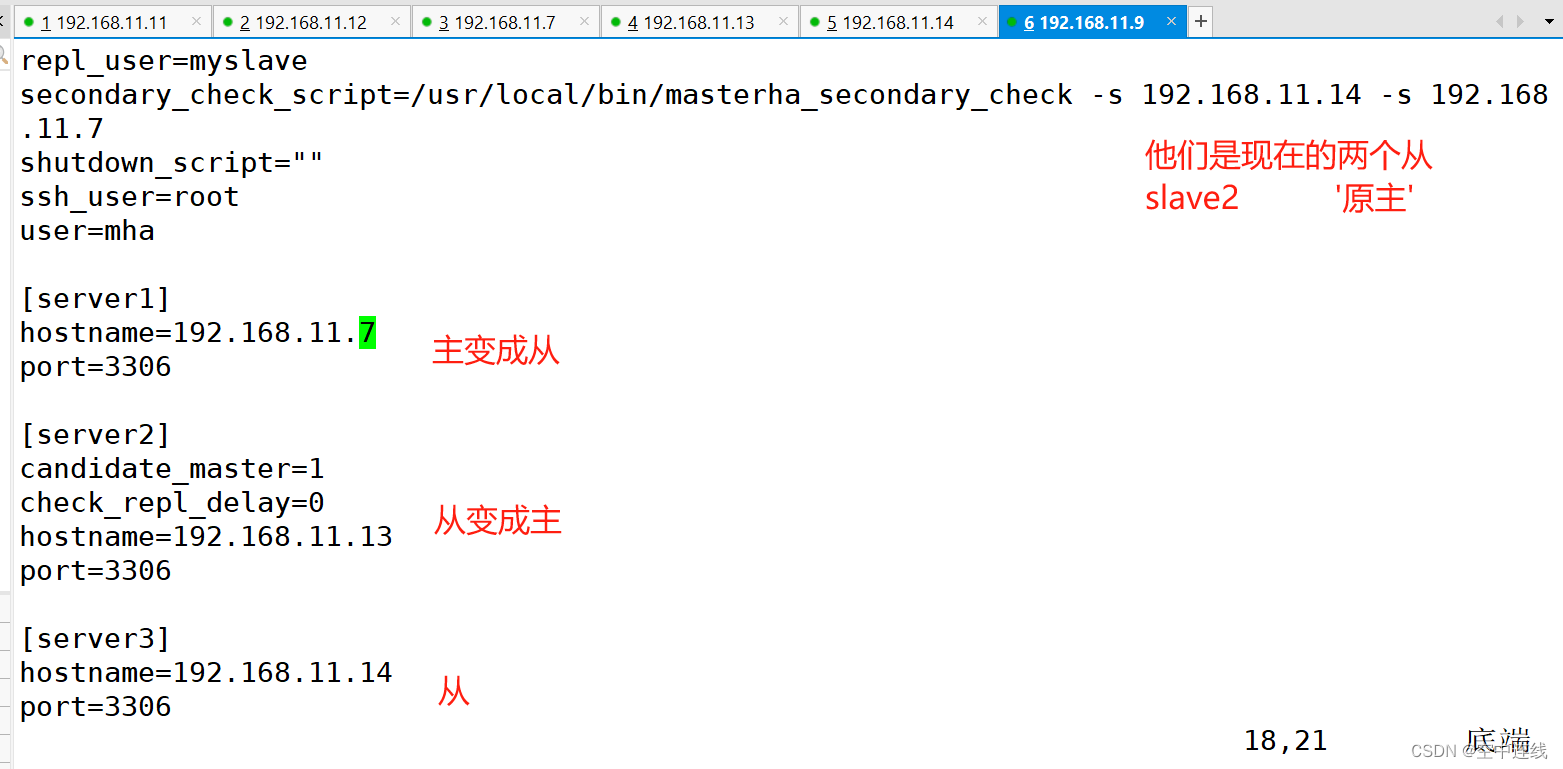

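On the manager node, edit app1.cnf

Add the entries for all three MySQL node servers back, because MHA deletes the master's entry automatically when it detects that the master has failed

Set slave01 as the new candidate master (in place of master01)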

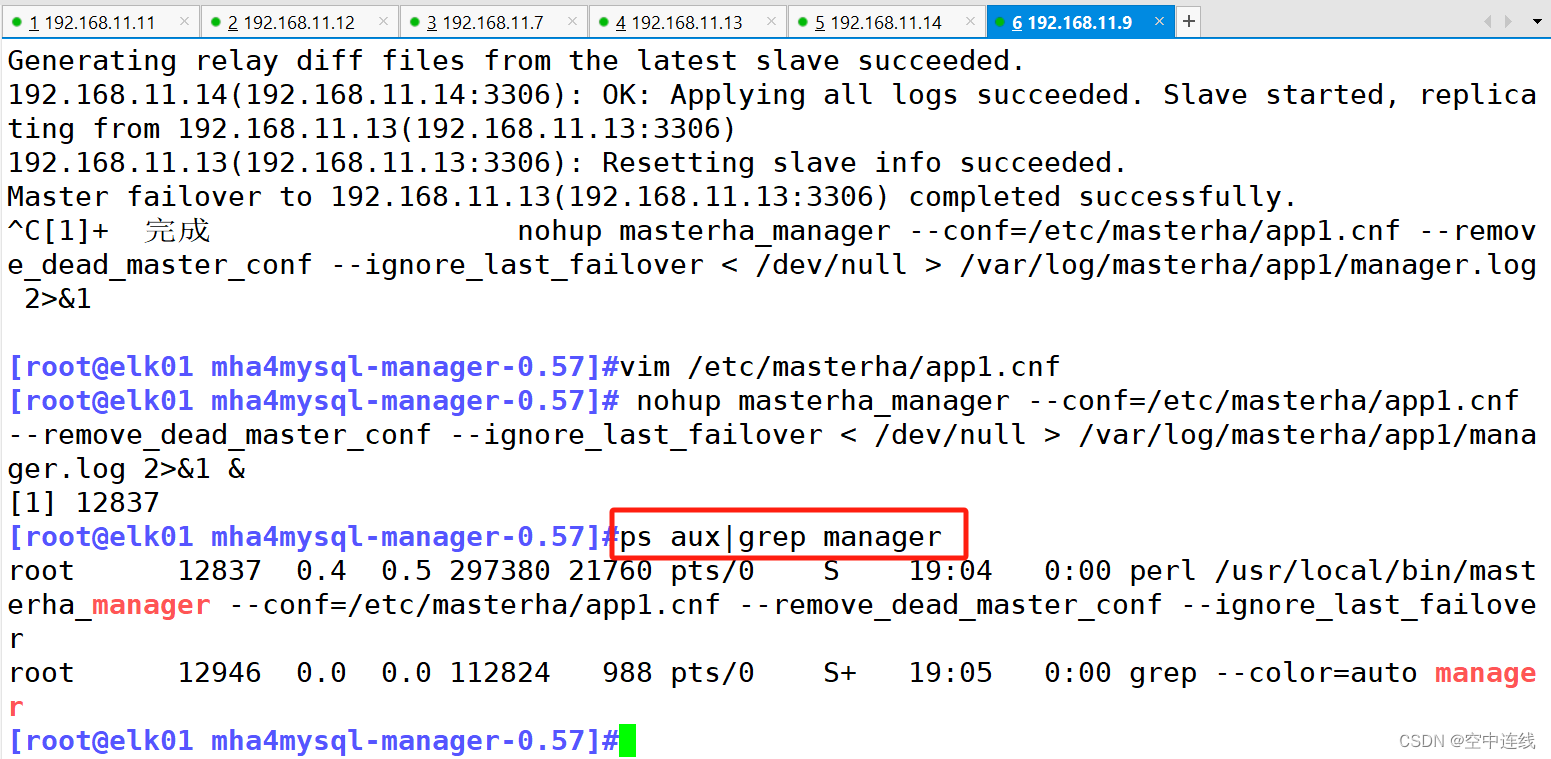
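Because MHA removes the failed master's `[serverN]` block from app1.cnf after a failover, it helps to list which servers are still configured before restarting the manager. A sketch with awk, assuming `hostname=` lines written exactly as in the app1.cnf above; `list_mha_servers` is a hypothetical name.

```bash
# Hypothetical helper: print "section hostname" for every [serverN] block
# in an MHA configuration file ([server default] is skipped).
list_mha_servers() {
  awk -F= '
    /^\[server[0-9]+\]/ { sec = substr($0, 2, length($0) - 2); next }
    /^\[/               { sec = ""; next }
    sec != "" && $1 == "hostname" { print sec, $2 }
  ' "$1"
}
```

Usage: `list_mha_servers /etc/masterha/app1.cnf` should list all three MySQL nodes before the manager is started again.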

Start MHA on the manager node

nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

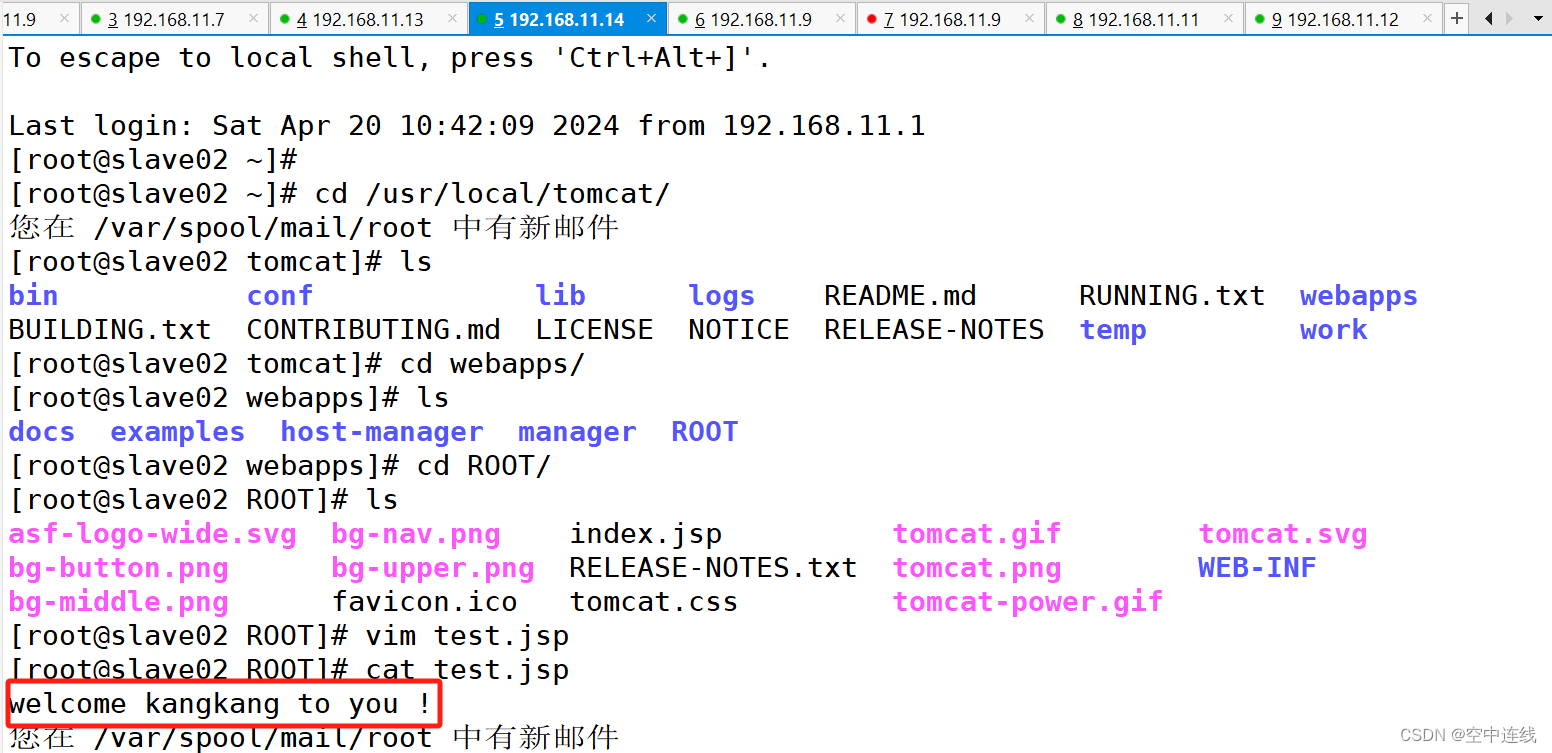

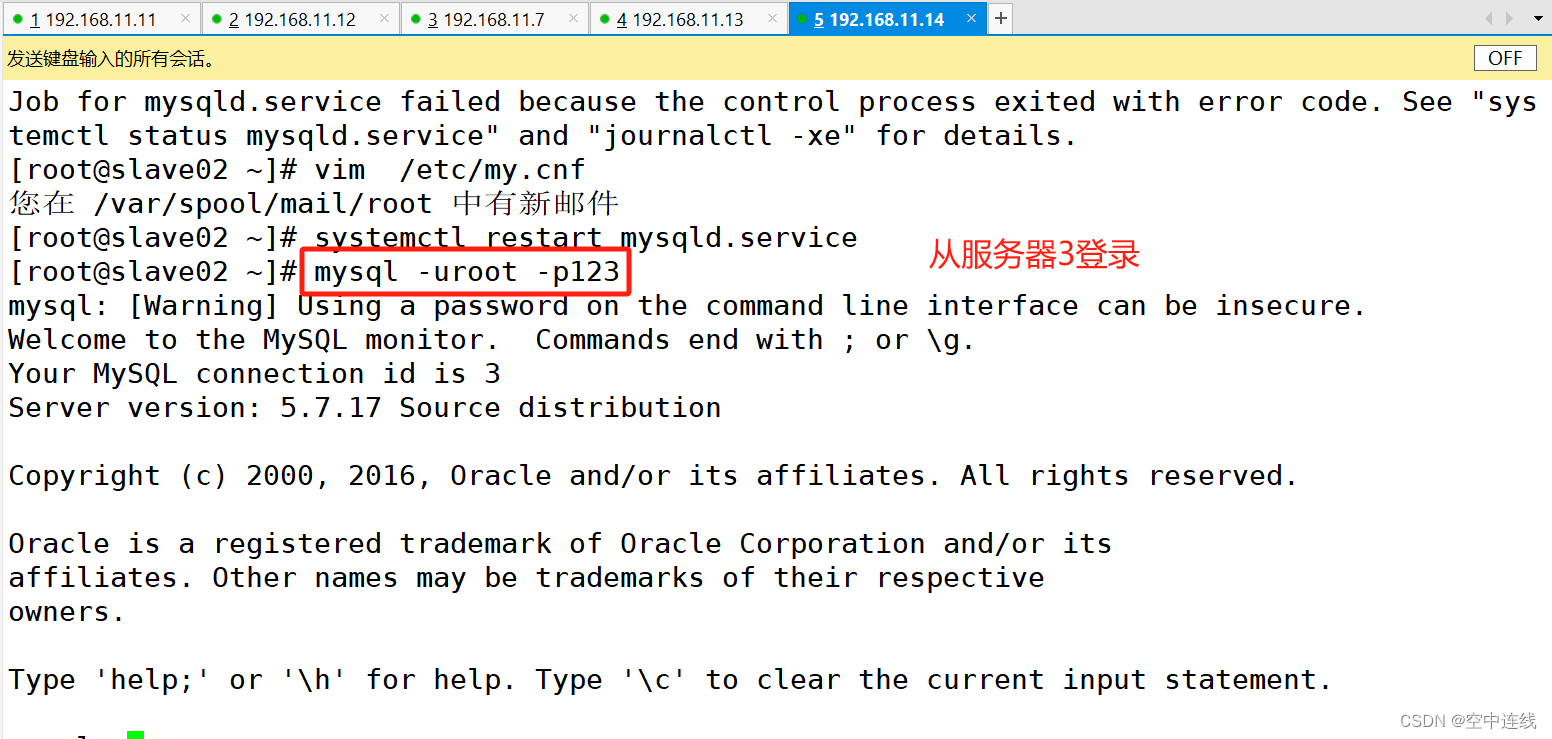

Install Tomcat: 192.168.11.14

Install the JDK from the binary tarball

[root@slave02 ~]# mkdir /data

[root@slave02 ~]# cd /data

[root@slave02 data]# rz -E

rz waiting to receive.

[root@slave02 data]# rz -E

rz waiting to receive.

[root@slave02 data]# ls

apache-tomcat-9.0.16.tar.gz jdk-8u291-linux-x64.tar.gz

[root@slave02 data]# tar xf jdk-8u291-linux-x64.tar.gz -C /usr/local

[root@slave02 data]# ls /usr/local

bin boost_1_59_0 games jdk1.8.0_291 lib64 mysql share

boost etc include lib libexec sbin src

[root@slave02 data]# cd /usr/local

[root@slave02 local]# ln -s jdk1.8.0_291/ jdk

[root@slave02 local]# ls

bin boost_1_59_0 games jdk lib libexec sbin src

boost etc include jdk1.8.0_291 lib64 mysql share

[root@slave02 local]# ll

total 4

drwxr-xr-x. 2 root root 6 Nov 5 2016 bin

lrwxrwxrwx. 1 root root 13 Apr 19 23:49 boost -> boost_1_59_0/

drwx------. 8 501 games 4096 Aug 12 2015 boost_1_59_0

drwxr-xr-x. 2 root root 6 Nov 5 2016 etc

drwxr-xr-x. 2 root root 6 Nov 5 2016 games

drwxr-xr-x. 2 root root 6 Nov 5 2016 include

lrwxrwxrwx. 1 root root 13 Apr 20 08:57 jdk -> jdk1.8.0_291/ #the symlink

drwxr-xr-x. 8 10143 10143 273 Apr 8 2021 jdk1.8.0_291

drwxr-xr-x. 2 root root 6 Nov 5 2016 lib

drwxr-xr-x. 2 root root 6 Nov 5 2016 lib64

drwxr-xr-x. 2 root root 6 Nov 5 2016 libexec

drwxr-xr-x. 12 mysql mysql 229 Apr 20 00:09 mysql

drwxr-xr-x. 2 root root 6 Nov 5 2016 sbin

drwxr-xr-x. 5 root root 49 Mar 15 19:21 share

drwxr-xr-x. 2 root root 6 Nov 5 2016 src

[root@slave02 local]# vim /etc/profile.d/env.sh

[root@slave02 local]# cat /etc/profile.d/env.sh

# java home

export JAVA_HOME=/usr/local/jdk #this path must match the jdk symlink created above, otherwise java will fail

export PATH=$JAVA_HOME/bin:$PATH

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib/:$JRE_HOME/lib/

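Load the variables into the current shell with source /etc/profile.d/env.sh (or log in again), then check the Java version:

[root@slave02 local]# java -version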

java version "1.8.0_291"

Java(TM) SE Runtime Environment (build 1.8.0_291-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.291-b10, mixed mode)
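The comment above warns that JAVA_HOME must match the real JDK path. A sketch that writes the same env.sh but refuses to when `$JAVA_HOME/bin/java` is missing; `write_java_env` is a hypothetical name, and the default output path is the /etc/profile.d/env.sh used above.

```bash
# Hypothetical helper: write the JAVA_HOME profile script shown above,
# but only if the given JDK directory really contains bin/java.
write_java_env() {
  local java_home=$1 out=${2:-/etc/profile.d/env.sh}
  if [ ! -x "$java_home/bin/java" ]; then
    echo "write_java_env: $java_home/bin/java not found" >&2
    return 1
  fi
  # Unquoted EOF: $java_home expands now; \$ keeps the rest literal for login time.
  cat > "$out" <<EOF
# java home
export JAVA_HOME=$java_home
export PATH=\$JAVA_HOME/bin:\$PATH
export JRE_HOME=\$JAVA_HOME/jre
export CLASSPATH=\$JAVA_HOME/lib/:\$JRE_HOME/lib/
EOF
}
```

Usage: `write_java_env /usr/local/jdk && source /etc/profile.d/env.sh && java -version`.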

[root@slave02 data]# ls

apache-tomcat-9.0.16.tar.gz jdk-8u291-linux-x64.tar.gz

[root@slave02 data]# cp -r apache-tomcat-9.0.16.tar.gz /usr/local

[root@slave02 data]# cd /usr/local

[root@slave02 local]# ls

apache-tomcat-9.0.16.tar.gz boost_1_59_0 include lib mysql src

bin etc jdk lib64 sbin

boost games jdk1.8.0_291 libexec share

[root@slave02 local]# tar xf apache-tomcat-9.0.16.tar.gz

[root@slave02 local]# ls

apache-tomcat-9.0.16 boost games jdk1.8.0_291 libexec share

apache-tomcat-9.0.16.tar.gz boost_1_59_0 include lib mysql src

bin etc jdk lib64 sbin

[root@slave02 local]# ln -s apache-tomcat-9.0.16/ tomcat

[root@slave02 local]# cd tomcat/

[root@slave02 tomcat]# ls

bin conf lib logs README.md RUNNING.txt webapps

BUILDING.txt CONTRIBUTING.md LICENSE NOTICE RELEASE-NOTES temp work

Create a tomcat user and give it ownership of the Tomcat directory

[root@slave02 tomcat]# useradd tomcat -s /sbin/nologin -M

[root@slave02 tomcat]# chown tomcat:tomcat /usr/local/tomcat/ -R

[root@slave02 tomcat]# cat > /usr/lib/systemd/system/tomcat.service <<EOF

[Unit]

Description=Tomcat

After=syslog.target network.target

[Service]

Type=forking

ExecStart=/usr/local/tomcat/bin/startup.sh

ExecStop=/usr/local/tomcat/bin/shutdown.sh

RestartSec=3

PrivateTmp=true

User=tomcat

Group=tomcat

[Install]

WantedBy=multi-user.target

EOF

[root@slave02 tomcat]# systemctl start tomcat

[root@slave02 tomcat]# systemctl status tomcat

● tomcat.service - Tomcat

Loaded: loaded (/usr/lib/systemd/system/tomcat.service; disabled; vendor preset: disabled)

Active: active (running) since Sat 2024-04-20 09:35:19 CST; 6s ago

Process: 34542 ExecStart=/usr/local/tomcat/bin/startup.sh (code=exited, status=0/SUCCESS)

Main PID: 34557 (catalina.sh)

CGroup: /system.slice/tomcat.service

├─34557 /bin/sh /usr/local/tomcat/bin/catalina.sh start

└─34558 /usr/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf...

Apr 20 09:35:19 slave02 systemd[1]: Starting Tomcat...

Apr 20 09:35:19 slave02 startup.sh[34542]: Using CATALINA_BASE: /usr/local/tomcat

Apr 20 09:35:19 slave02 startup.sh[34542]: Using CATALINA_HOME: /usr/local/tomcat

Apr 20 09:35:19 slave02 startup.sh[34542]: Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Apr 20 09:35:19 slave02 startup.sh[34542]: Using JRE_HOME: /usr

Apr 20 09:35:19 slave02 startup.sh[34542]: Using CLASSPATH: /usr/local/tomcat/...ar

Apr 20 09:35:19 slave02 systemd[1]: Started Tomcat.

Hint: Some lines were ellipsized, use -l to show in full.

[root@slave02 tomcat]# systemctl enable tomcat

Created symlink from /etc/systemd/system/multi-user.target.wants/tomcat.service to /usr/lib/systemd/system/tomcat.service.

[root@slave02 tomcat]# pstree -p | grep tomcat

[root@slave02 tomcat]# pstree -p | grep java

|-catalina.sh(34557)---java(34558)-+-{java}(34559)

| |-{java}(34560)

| |-{java}(34561)

| |-{java}(34562)

|

[root@slave02 tomcat]# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.1 0.1 193680 5076 ? Ss 08:05 0:07 /usr/lib/systemd/systemd

root 2 0.0 0.0 0 0 ? S 08:05 0:00 [kthreadd]

root 34510 0.0 0.0 0 0 ? S 09:32 0:00 [kworker/12:0]

tomcat 34557 0.0 0.0 113408 680 ? S 09:35 0:00 /bin/sh /usr/local/tomca

tomcat 34558 3.6 3.3 6872068 127728 ? Sl 09:35 0:07 /usr/bin/java -Djava.uti

root 34680 0.0 0.0 108052 352 ? S 09:38 0:00 sleep 60

root 34681 0.0 0.0 151212 1844 pts/2 R+ 09:38 0:00 ps aux

[root@slave02 tomcat]#
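Two details in the unit above are worth noting. `systemctl status` reported `Using JRE_HOME: /usr`, i.e. Tomcat started with /usr/bin/java rather than /usr/local/jdk, because systemd services do not read /etc/profile.d. And `RestartSec=` only has an effect when a `Restart=` policy is set. A sketch of the same unit with both addressed, assuming the /usr/local/jdk and /usr/local/tomcat symlinks created above; `write_tomcat_unit` is a hypothetical name and `Restart=on-failure` is an added assumption.

```bash
# Hypothetical helper: write tomcat.service with JAVA_HOME passed explicitly
# (systemd does not source /etc/profile.d) and a restart policy for RestartSec.
write_tomcat_unit() {
  local java_home=${1:-/usr/local/jdk} dir=${2:-/usr/lib/systemd/system}
  cat > "$dir/tomcat.service" <<EOF
[Unit]
Description=Tomcat
After=syslog.target network.target

[Service]
Type=forking
Environment=JAVA_HOME=$java_home
ExecStart=/usr/local/tomcat/bin/startup.sh
ExecStop=/usr/local/tomcat/bin/shutdown.sh
Restart=on-failure
RestartSec=3
PrivateTmp=true
User=tomcat
Group=tomcat

[Install]
WantedBy=multi-user.target
EOF
}
```

After `write_tomcat_unit && systemctl daemon-reload && systemctl restart tomcat`, the startup log should then report the JDK path instead of /usr.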

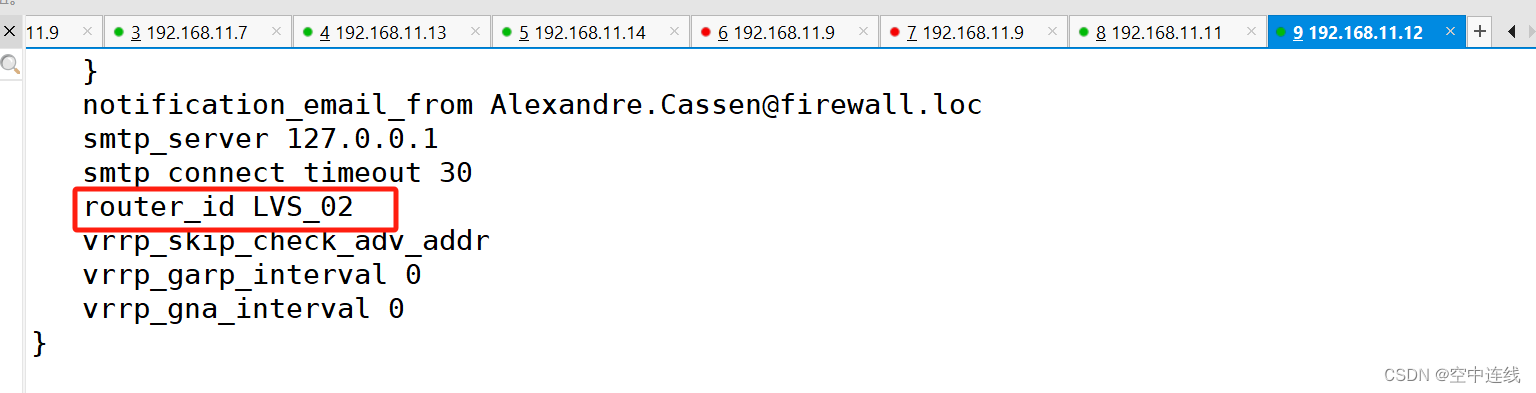

Install keepalived: 192.168.11.11

[root@mcb-11 ~]# yum install keepalived.x86_64 -y

Loaded plugins: fastestmirror, langpacks

[root@mcb-11 ~]# cd /etc/keepalived/

[root@mcb-11 keepalived]# ls

keepalived.conf

[root@mcb-11 keepalived]# cp keepalived.conf keepalived.conf.bak

[root@mcb-11 keepalived]# ls

keepalived.conf keepalived.conf.bak

[root@mcb-11 keepalived]# vim keepalived.conf

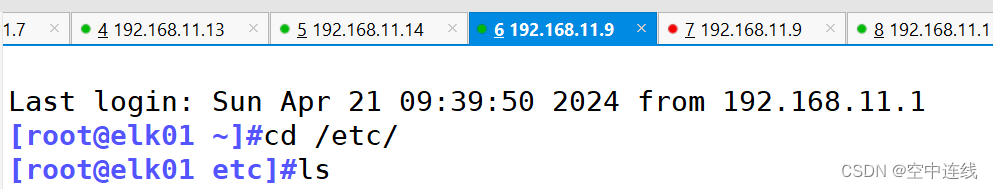
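The edited keepalived.conf is only shown as a screenshot. A sketch of a minimal configuration for this setup, generated for either role so the same function serves the master (192.168.11.11) and the backup (192.168.11.12). The interface ens33 and the VIP 192.168.11.188 come from the keepalived section earlier; the router_id, virtual_router_id 51, auth_pass, priorities and the check script path /etc/keepalived/ng.sh are assumptions. `write_keepalived_conf` is a hypothetical name.

```bash
# Hypothetical helper: write a minimal VRRP config for one node.
# $1 = MASTER or BACKUP, $2 = priority (higher wins), $3 = output file.
write_keepalived_conf() {
  local state=$1 prio=$2 out=${3:-/etc/keepalived/keepalived.conf}
  cat > "$out" <<EOF
global_defs {
    router_id nginx_$state
}

vrrp_script check_nginx {
    script "/etc/keepalived/ng.sh"
    interval 2
}

vrrp_instance VI_1 {
    state $state
    interface ens33
    virtual_router_id 51
    priority $prio
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.11.188
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

For example, `write_keepalived_conf MASTER 100` on 192.168.11.11 and `write_keepalived_conf BACKUP 90` on 192.168.11.12, then `systemctl restart keepalived` on both. virtual_router_id and auth_pass must be identical on both nodes.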

III. Set up ELK: 192.168.11.9

Add a hostname mapping

[root@elk01 ~]#echo "192.168.11.9 elk01" >> /etc/hosts

Install the Elasticsearch RPM package

[root@elk01 ~]#cd /opt

[root@elk01 opt]#ls

mha4mysql-manager-0.57 perl-Config-Tiny perl-Log-Dispatch

mha4mysql-manager-0.57.tar.gz perl-CPAN perl-Parallel-ForkManager

mha4mysql-node-0.57 perl-ExtUtils-CBuilder rh

mha4mysql-node-0.57.tar.gz perl-ExtUtils-MakeMaker

[root@elk01 opt]#rz -E

rz waiting to receive.

[root@elk01 opt]#rpm -ivh elasticsearch-5.5.0.rpm

warning: elasticsearch-5.5.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Reload systemd and start the service

[root@elk01 opt]#systemctl daemon-reload

[root@elk01 opt]#systemctl start elasticsearch.service

[root@elk01 opt]#systemctl enable elasticsearch.service

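Edit the main Elasticsearch configuration file

[root@elk01 opt]# cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

#back up the configuration file

[root@elk01 opt]# vim /etc/elasticsearch/elasticsearch.yml

##line 17: uncomment and set the cluster name

cluster.name: my-elk-cluster

##line 23: uncomment and set the node name

node.name: elk01

##line 33: uncomment and set the data directory

path.data: /data/elk_data

##line 37: uncomment and set the log directory

path.logs: /var/log/elasticsearch/

##line 43: uncomment; do not lock memory at startup (true would lock the JVM heap in RAM so it cannot be swapped out)

bootstrap.memory_lock: false

##line 55: uncomment and set the listen address; 0.0.0.0 means all addresses

network.host: 0.0.0.0

##line 59: uncomment; the default ES HTTP port is 9200

http.port: 9200

##line 68: uncomment; cluster discovery uses unicast, list the nodes to discover (only elk01 in this single-node setup)

discovery.zen.ping.unicast.hosts: ["elk01"]

Review the changes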

[root@elk01 elasticsearch]#vim /etc/elasticsearch/elasticsearch.yml

[root@elk01 elasticsearch]#grep -v "^#" /etc/elasticsearch/elasticsearch.yml

cluster.name: my-elk-cluster

node.name: elk01

path.data: /data/elk_data

bootstrap.memory_lock: false

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["elk01"]

Create the data directory and give it to the elasticsearch user

[root@elk01 elasticsearch]# mkdir -p /data/elk_data

[root@elk01 elasticsearch]#

[root@elk01 elasticsearch]#chown elasticsearch:elasticsearch /data/elk_data/

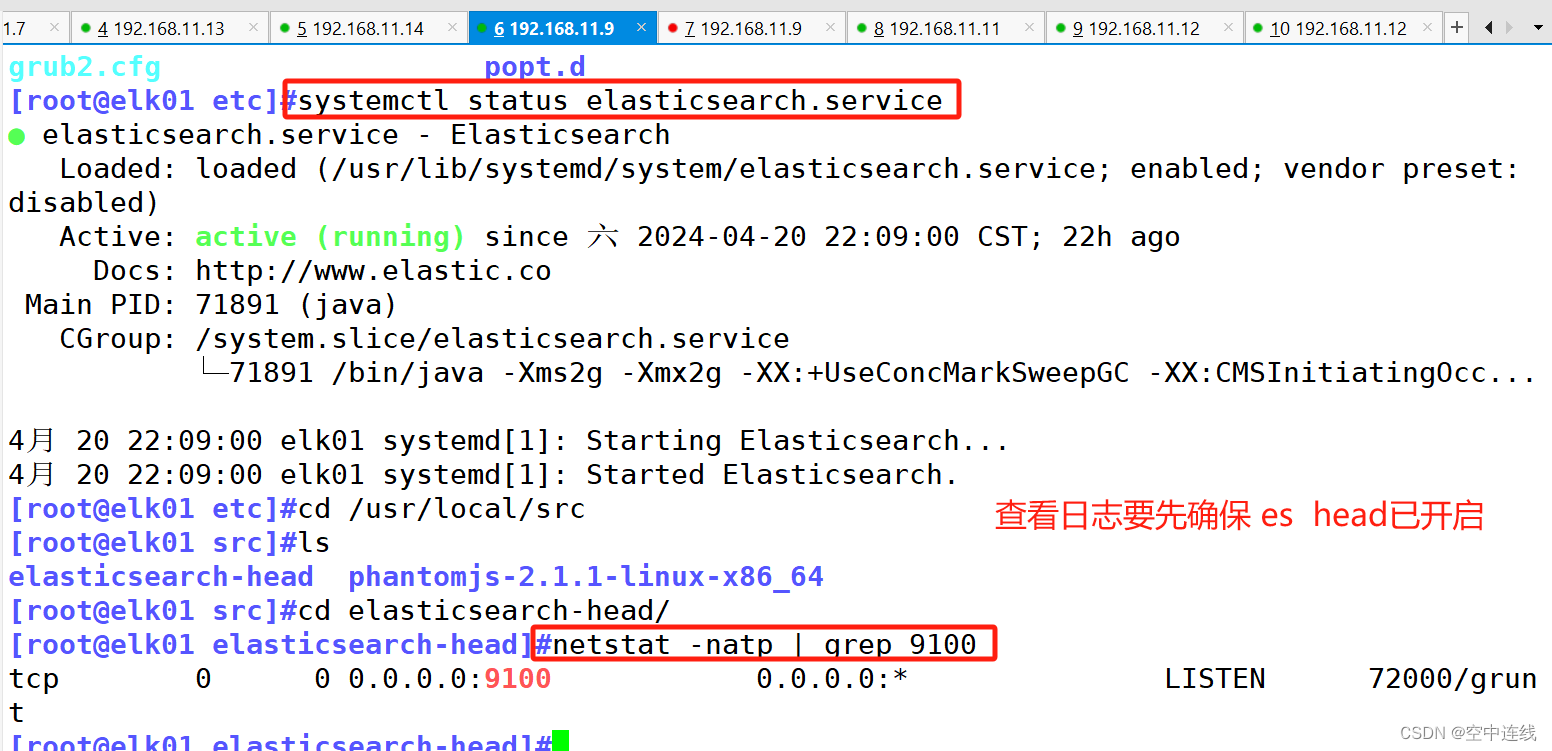

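Start Elasticsearch. Because the service was already started before the configuration was edited, systemctl start does not reload it; use systemctl restart instead. The output below, with 9200 bound only to 127.0.0.1 and ::1, shows that the new network.host setting has not taken effect yet.

[root@elk01 elasticsearch]#systemctl start elasticsearch.service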

[root@elk01 elasticsearch]#netstat -natp | grep 9200

tcp6 0 0 127.0.0.1:9200 :::* LISTEN 16953/java

tcp6 0 0 ::1:9200 :::* LISTEN 16953/java

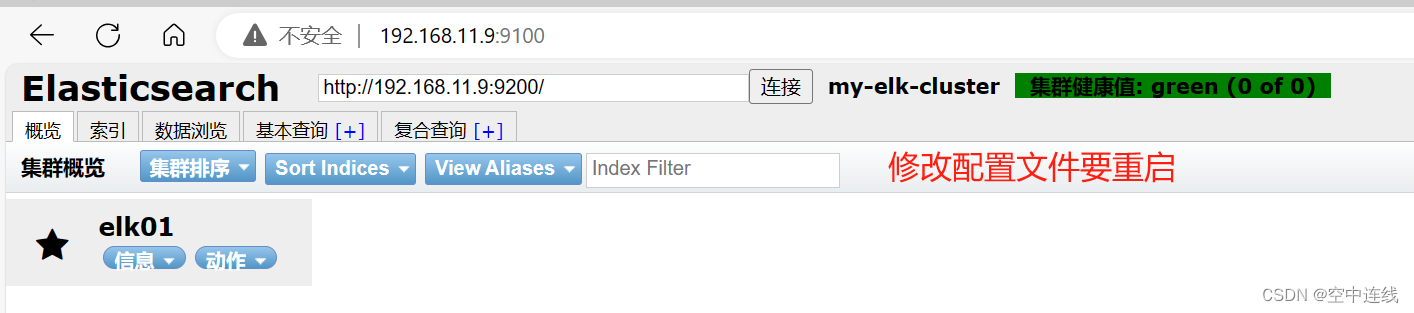
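Whether `network.host: 0.0.0.0` is actually in effect can be read from the netstat output: above, port 9200 is bound only to 127.0.0.1 and ::1. A sketch of a check that succeeds only when a port listens on a wildcard address, assuming `netstat -natp` or `ss -lnt` output on stdin; `listens_on_all` is a hypothetical name.

```bash
# Hypothetical helper: succeed if port $1 is listening on a wildcard address
# (0.0.0.0, ::, or *) in netstat/ss output read from stdin.
listens_on_all() {
  awk -v p="$1" '
    /LISTEN/ {
      for (i = 1; i <= NF; i++)
        if ($i == ("0.0.0.0:" p) || $i == (":::" p) || $i == ("*:" p) || $i == ("[::]:" p))
          found = 1
    }
    END { exit !found }
  '
}
```

Usage: `netstat -natp | listens_on_all 9200 || echo "9200 is loopback-only: check network.host and restart elasticsearch"`.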


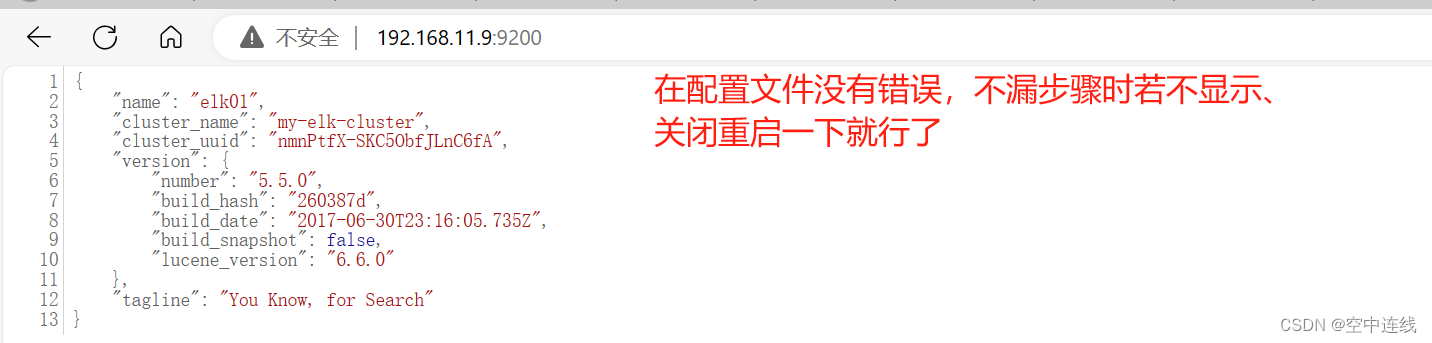

View node information

If this fails, the cause is usually the configuration file

In a browser, open http://192.168.11.9:9200
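Besides the browser, the cluster state can be read from the `_cluster/health` API. A sketch that extracts the status field (green/yellow/red) without jq, assuming the compact JSON Elasticsearch returns by default; `es_health` is a hypothetical name.

```bash
# Hypothetical helper: print the "status" value from _cluster/health JSON on stdin.
es_health() {
  sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}
```

Usage: `curl -s http://192.168.11.9:9200/_cluster/health | es_health`. On a single node, indices with replicas configured usually report yellow, because replica shards cannot be allocated on the same node as their primaries.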

Install the Elasticsearch-head plugin

[root@elk01 elasticsearch]#cd /opt

[root@elk01 opt]#ls

elasticsearch-5.5.0.rpm mha4mysql-node-0.57.tar.gz perl-ExtUtils-MakeMaker

mha4mysql-manager-0.57 perl-Config-Tiny perl-Log-Dispatch

mha4mysql-manager-0.57.tar.gz perl-CPAN perl-Parallel-ForkManager

mha4mysql-node-0.57 perl-ExtUtils-CBuilder rh

[root@elk01 opt]#yum install -y gcc gcc-c++ make

[root@elk01 opt]#rz -E

rz waiting to receive.

[root@elk01 opt]#

[root@elk01 opt]#tar xf node-v8.2.1.tar.gz

[root@elk01 opt]#cd node-v8.2.1/

[root@elk01 node-v8.2.1]#./configure

creating ./icu_config.gypi

[root@elk01 node-v8.2.1]#make -j 4 && make install #this compile takes a long time

Install phantomjs

[root@elk01 node-v8.2.1]#rz -E

rz waiting to receive.

[root@elk01 node-v8.2.1]#rz -E

rz waiting to receive.

[root@elk01 node-v8.2.1]#cd /opt

[root@elk01 opt]#ls

elasticsearch-5.5.0.rpm node-v8.2.1 perl-ExtUtils-MakeMaker

mha4mysql-manager-0.57 node-v8.2.1.tar.gz perl-Log-Dispatch

mha4mysql-manager-0.57.tar.gz perl-Config-Tiny perl-Parallel-ForkManager

mha4mysql-node-0.57 perl-CPAN rh

mha4mysql-node-0.57.tar.gz perl-ExtUtils-CBuilder


[root@elk01 ~]#cd /opt/node-v8.2.1/

[root@elk01 node-v8.2.1]#ls

android-configure configure node.gyp

AUTHORS CONTRIBUTING.md node.gypi

benchmark deps out

BSDmakefile doc phantomjs-2.1.1-linux-x86_64.tar.bz2

BUILDING.md elasticsearch-head.tar.gz README.md

CHANGELOG.md GOVERNANCE.md src

CODE_OF_CONDUCT.md icu_config.gypi test

COLLABORATOR_GUIDE.md lib tools

common.gypi LICENSE vcbuild.bat

config.gypi Makefile

config.mk node

[root@elk01 node-v8.2.1]#mv elasticsearch-head.tar.gz phantomjs-2.1.1-linux-x86_64.tar.bz2 /opt

[root@elk01 node-v8.2.1]#cd /opt


[root@elk01 opt]#ls

elasticsearch-5.5.0.rpm perl-Config-Tiny

elasticsearch-head.tar.gz perl-CPAN

mha4mysql-manager-0.57 perl-ExtUtils-CBuilder

mha4mysql-manager-0.57.tar.gz perl-ExtUtils-MakeMaker

mha4mysql-node-0.57 perl-Log-Dispatch

mha4mysql-node-0.57.tar.gz perl-Parallel-ForkManager

node-v8.2.1 phantomjs-2.1.1-linux-x86_64.tar.bz2

node-v8.2.1.tar.gz rh

[root@elk01 opt]#tar xf phantomjs-2.1.1-linux-x86_64.tar.bz2 -C /usr/local/src

[root@elk01 opt]#cd /usr/local/src/phantomjs-2.1.1-linux-x86_64/

[root@elk01 phantomjs-2.1.1-linux-x86_64]#ls

bin ChangeLog examples LICENSE.BSD README.md third-party.txt

[root@elk01 phantomjs-2.1.1-linux-x86_64]#cd bin

[root@elk01 bin]#ls

phantomjs

[root@elk01 bin]#cp phantomjs /usr/local/bin

Install the Elasticsearch-head data visualization tool

[root@elk01 bin]#cd /opt

[root@elk01 opt]#ls

elasticsearch-5.5.0.rpm perl-Config-Tiny

elasticsearch-head.tar.gz perl-CPAN

mha4mysql-manager-0.57 perl-ExtUtils-CBuilder

mha4mysql-manager-0.57.tar.gz perl-ExtUtils-MakeMaker

mha4mysql-node-0.57 perl-Log-Dispatch

mha4mysql-node-0.57.tar.gz perl-Parallel-ForkManager

node-v8.2.1 phantomjs-2.1.1-linux-x86_64.tar.bz2

node-v8.2.1.tar.gz rh

[root@elk01 opt]#tar xf elasticsearch-head.tar.gz -C /usr/local/src

[root@elk01 opt]#cd /usr/local/src

[root@elk01 src]#cd elasticsearch-head/

[root@elk01 elasticsearch-head]#npm install

npm WARN deprecated fsevents@1.2.13: The v1 package contains DANGEROUS / INSECURE binaries. Upgrade to safe fsevents v2

npm WARN optional SKIPPING OPTIONAL DEPENDENCY: fsevents@^1.0.0 (node_modules/karma/node_modules/chokidar/node_modules/fsevents):

npm WARN notsup SKIPPING OPTIONAL DEPENDENCY: Unsupported platform for fsevents@1.2.13: wanted {"os":"darwin","arch":"any"} (current: {"os":"linux","arch":"x64"})

npm WARN elasticsearch-head@0.0.0 license should be a valid SPDX license expression

up to date in 6.331s

Edit the main Elasticsearch configuration file

[root@elk01 elasticsearch-head]# vim /etc/elasticsearch/elasticsearch.yml

##append the following at the end of the file

http.cors.enabled: true ##enable cross-origin (CORS) access; the default is false

http.cors.allow-origin: "*" ##allow cross-origin requests from any origin

[root@elk01 elasticsearch-head]# systemctl restart elasticsearch.service

[root@elk01 elasticsearch-head]# netstat -antp | grep 9200
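To confirm the CORS settings took effect after the restart, send a request with an Origin header and look for the Access-Control-Allow-Origin response header. A sketch, assuming the head UI origin http://192.168.11.9:9100; `cors_enabled` is a hypothetical name.

```bash
# Hypothetical helper: succeed if HTTP response headers on stdin contain an
# Access-Control-Allow-Origin header (header names are case-insensitive).
cors_enabled() {
  grep -qi '^access-control-allow-origin:'
}
```

Usage: `curl -s -D - -o /dev/null -H 'Origin: http://192.168.11.9:9100' http://192.168.11.9:9200/ | cors_enabled && echo "CORS enabled"`.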

Start the elasticsearch-head service

[root@elk01 elasticsearch-head]#npm run start &

[2] 70435

[root@elk01 elasticsearch-head]#

> elasticsearch-head@0.0.0 start /usr/local/src/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100

Now open http://192.168.11.9:9100 in a browser

View the ES information through Elasticsearch-head

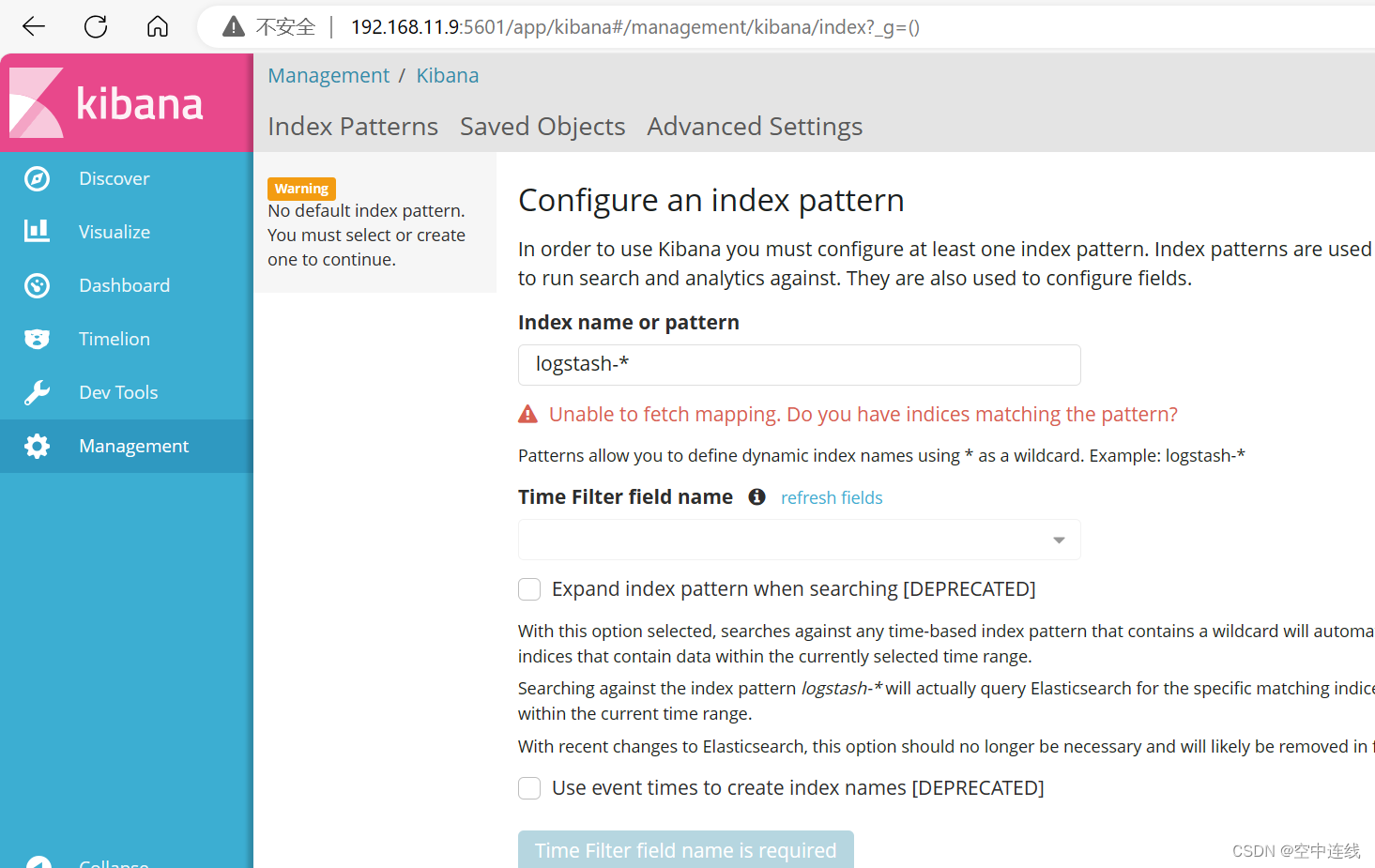

Install Kibana: 192.168.11.9

[root@elk01 opt]#rz -E

rz waiting to receive.

[root@elk01 opt]#rpm -ivh kibana-5.5.1-x86_64.rpm

warning: kibana-5.5.1-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:kibana-5.5.1-1 ################################# [100%]

[root@elk01 opt]#cp /etc/kibana/kibana.yml /etc/kibana/kibana.yml.bak

[root@elk01 opt]#vim /etc/kibana/kibana.yml
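The kibana.yml edits are not shown. A sketch of the settings a Kibana 5.5 instance on this host would typically need: `elasticsearch.url` is the 5.x name of the setting, and `server.host: "0.0.0.0"` matches the 0.0.0.0:5601 listener in the netstat output below. The exact values are assumptions and `write_kibana_settings` is a hypothetical name. Because every line of the stock kibana.yml is commented out, the sketch appends (so run it only once).

```bash
# Hypothetical helper: append the minimal Kibana 5.x settings to kibana.yml.
# $1 = Elasticsearch URL, $2 = kibana.yml path.
write_kibana_settings() {
  local es_url=$1 out=${2:-/etc/kibana/kibana.yml}
  cat >> "$out" <<EOF
server.port: 5601
server.host: "0.0.0.0"
elasticsearch.url: "$es_url"
kibana.index: ".kibana"
EOF
}
```

Usage: `write_kibana_settings http://192.168.11.9:9200` before `systemctl start kibana.service`.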

[root@elk01 opt]#systemctl start kibana.service

[root@elk01 opt]#systemctl enable kibana.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /etc/systemd/system/kibana.service.

[root@elk01 opt]#netstat -natp | grep 5601

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 72781/node

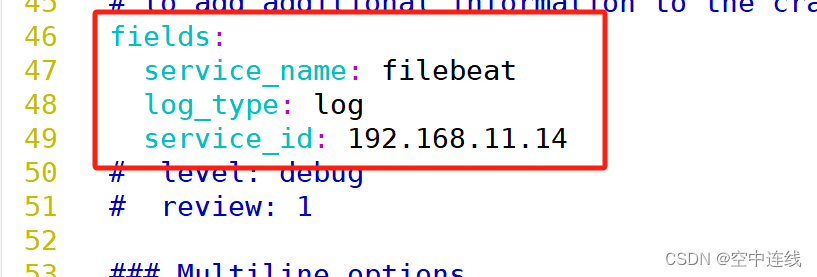

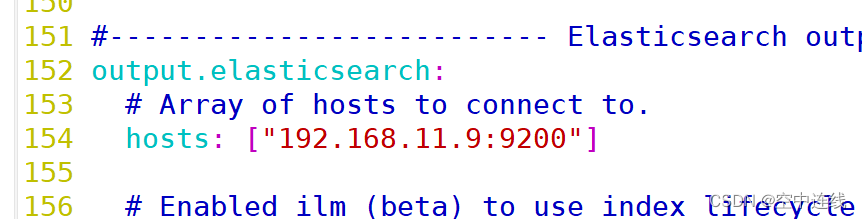

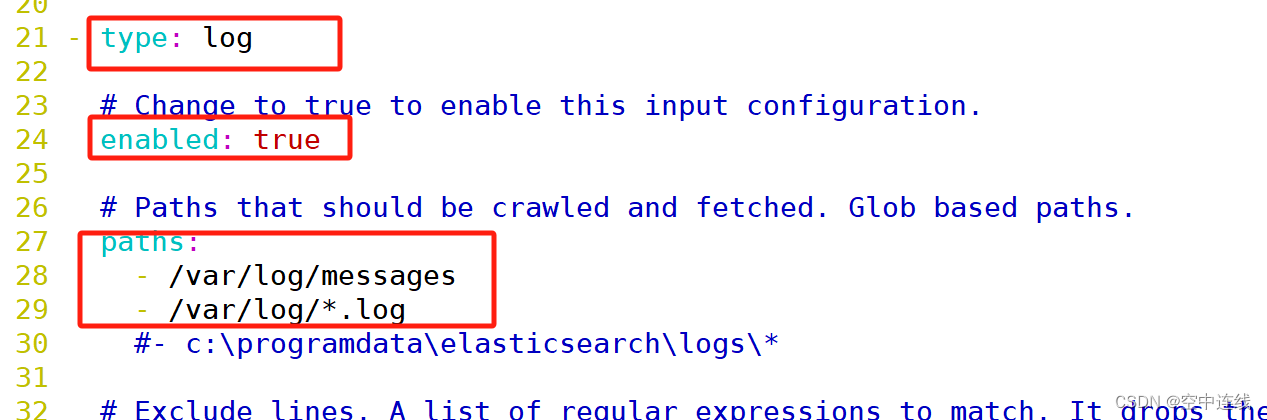

Install filebeat: 192.168.11.4

Install Logstash (the prompts below are from slave02, 192.168.11.14)

[root@slave02 filebeat]# cd /opt

[root@slave02 opt]# rz -E

rz waiting to receive.

[root@slave02 opt]# rpm -ivh logstash-5.5.1.rpm

warning: logstash-5.5.1.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:5.5.1-1 ################################# [100%]

Using provided startup.options file: /etc/logstash/startup.options

Successfully created system startup script for Logstash


[root@slave02 opt]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

[root@slave02 opt]# systemctl start logstash.service

[root@slave02 opt]# systemctl enable logstash.service

Created symlink from /etc/systemd/system/multi-user.target.wants/logstash.service to /etc/systemd/system/logstash.service.

Test with: logstash -e 'input { stdin{} } output { stdout{} }'

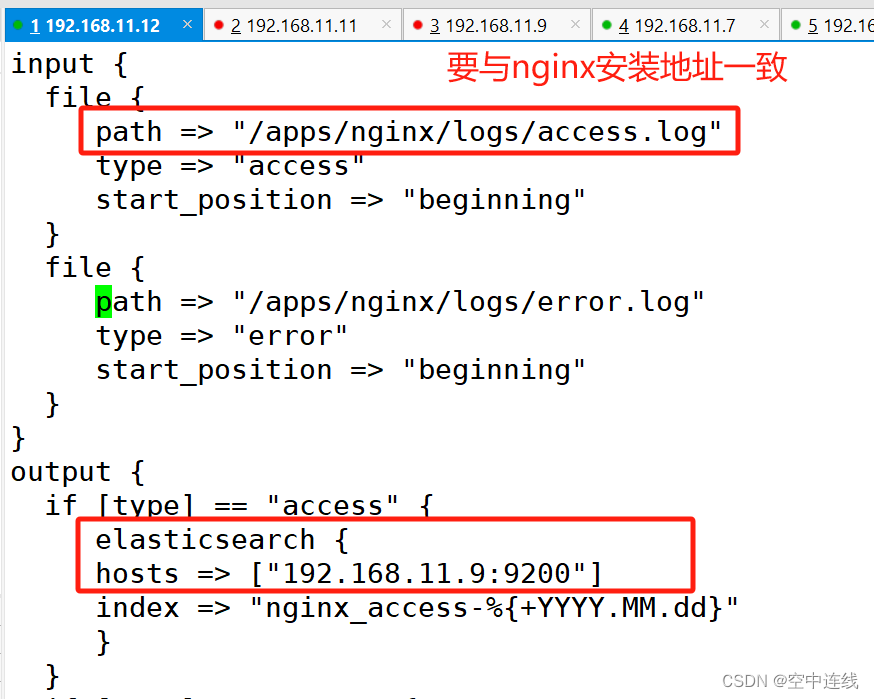

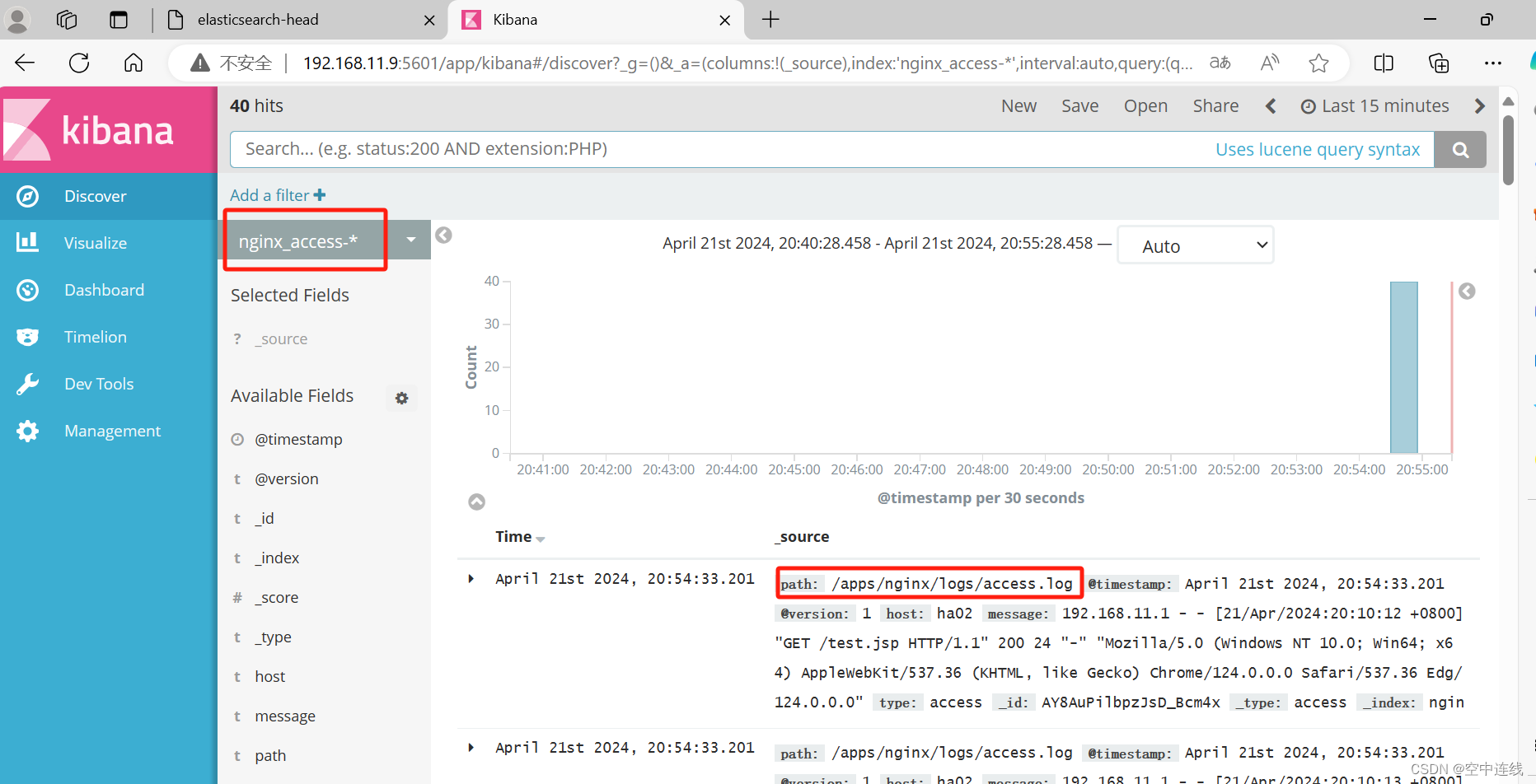

思 查看nginx_accrss日志

| 192.168.11.9 | es head kafba |

| 192.168.11.12 | logstash |

| 192.168.11.14 | logstash |

1 先开启es

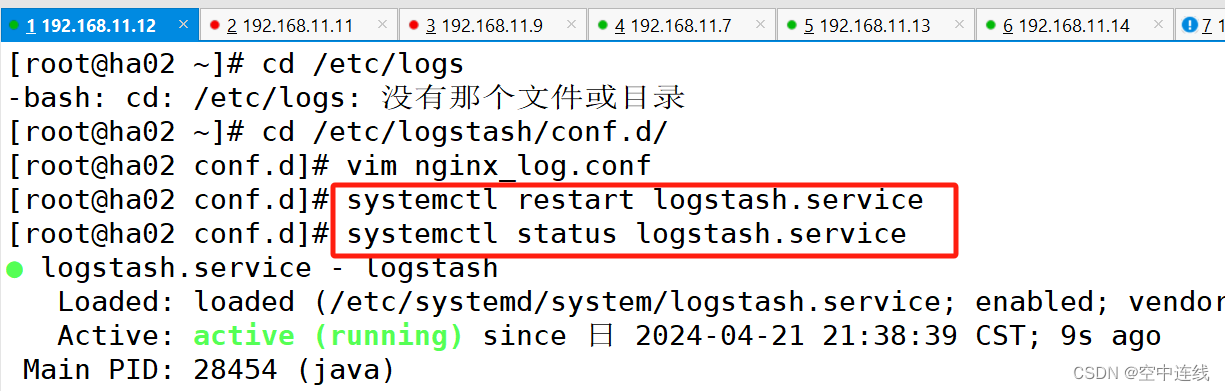

2 在nginx_access 修改配置文件

cd /etc/logstash/conf.d/

ls

vim nginx_log.conf

systemctl restart logstash

systemctl status logstash

ls

logstash -f nginx_log.conf

Contents of nginx_log.conf:

input {

file {

path => "/apps/nginx/logs/access.log"

type => "access"

start_position => "beginning"

}

file {

path => "/apps/nginx/logs/error.log"

type => "error"

start_position => "beginning"

}

}

output {

if [type] == "access" {

elasticsearch {

hosts => ["192.168.11.9:9200"]

index => "nginx_access-%{+YYYY.MM.dd}"

}

}

if [type] == "error" {

elasticsearch {

hosts => ["192.168.11.9:9200"]

index => "nginx_error-%{+YYYY.MM.dd}"

}

}

}
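Before restarting, the pipeline file can be syntax-checked without actually running it by using Logstash's `--config.test_and_exit` flag. This is a sketch and the wrapper name is hypothetical. On success, Logstash should report `Configuration OK`; otherwise it points at the offending line, for example a missing brace.

```shell
# check_logstash_conf FILE   (hypothetical helper)
# Parses the pipeline in FILE and exits without starting it.
check_logstash_conf() {
  logstash -f "$1" --config.test_and_exit
}

# Usage, from /etc/logstash/conf.d:
#   check_logstash_conf nginx_log.conf && systemctl restart logstash
```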

3 Restart the service

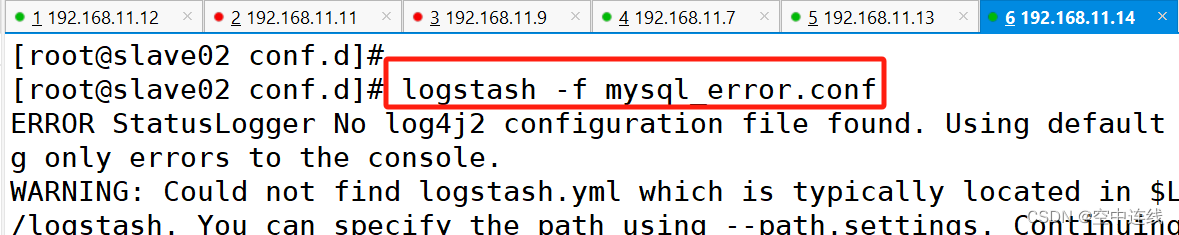

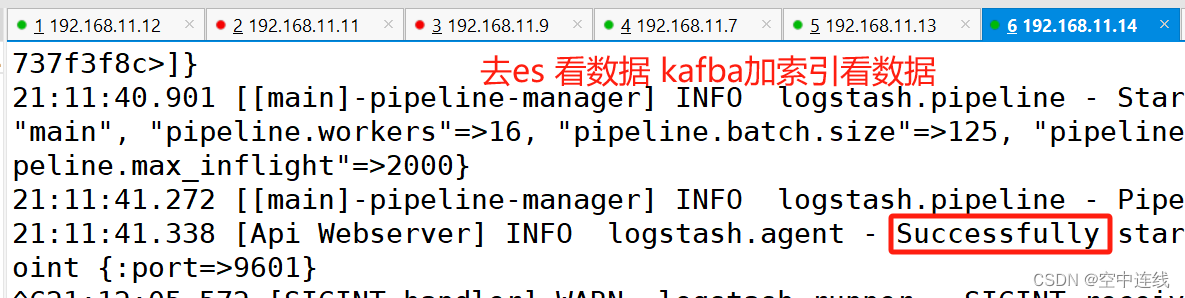
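Instead of using elasticsearch-head, you can also check from the shell whether the daily nginx_access and nginx_error indices were created, through Elasticsearch's `_cat/indices` API. This is a sketch: the helper name is hypothetical, and the address is the ES host from the table above.

```shell
# list_log_indices ES_URL PREFIX   (hypothetical helper)
# Prints the names of all indices starting with PREFIX, sorted.
# `h=index` asks _cat/indices for the index-name column only.
list_log_indices() {
  curl -s "$1/_cat/indices?h=index" | grep "^$2" | sort
}

# Usage:
#   list_log_indices http://192.168.11.9:9200 nginx_
```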

V View the mysql_error log


1 Start ES and elasticsearch-head first

[root@slave02 conf.d]# cat mysql_log.conf

input {

file {

path => "/usr/local/mysql/data/error.log"

start_position => "beginning"

sincedb_path => "/dev/null"

type => "mysql_error"

}

}

output {

elasticsearch {

hosts => ["192.168.11.9:9200"]

index => "mysql.error-%{+YYYY.MM.dd}"

}

}

[root@slave02 conf.d]# logstash -f mysql_log.conf
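The `path` in mysql_log.conf must match the error log that MySQL actually writes. A sketch that reads it from the server is shown below. The helper name is hypothetical, and `mysql` may need `-u`/`-p` options depending on how the account is set up. Also note that `sincedb_path => "/dev/null"` makes Logstash discard its read position, so the whole file is read again every time the pipeline starts.

```shell
# mysql_error_log_path   (hypothetical helper)
# Prints the value of MySQL's log_error variable.
# -N drops the column header and -B gives tab-separated output
# ("log_error<TAB>/path"), so field 2 is the path.
mysql_error_log_path() {
  mysql -N -B -e "SHOW VARIABLES LIKE 'log_error'" | awk -F'\t' '{print $2}'
}

# Usage:
#   mysql_error_log_path    # compare with the path in mysql_log.conf
```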

2 Edit the Logstash config file on the MySQL host

[root@slave02 ROOT]# cd /etc/logstash/

[root@slave02 logstash]# vim mysql_error.conf

[root@slave02 logstash]# systemctl restart mysql

[root@slave02 conf.d]# logstash -f mysql_error.conf

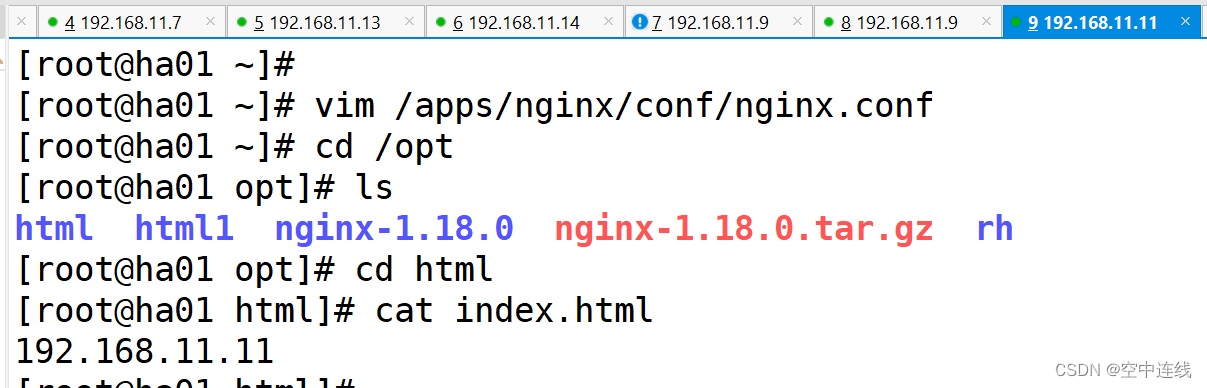

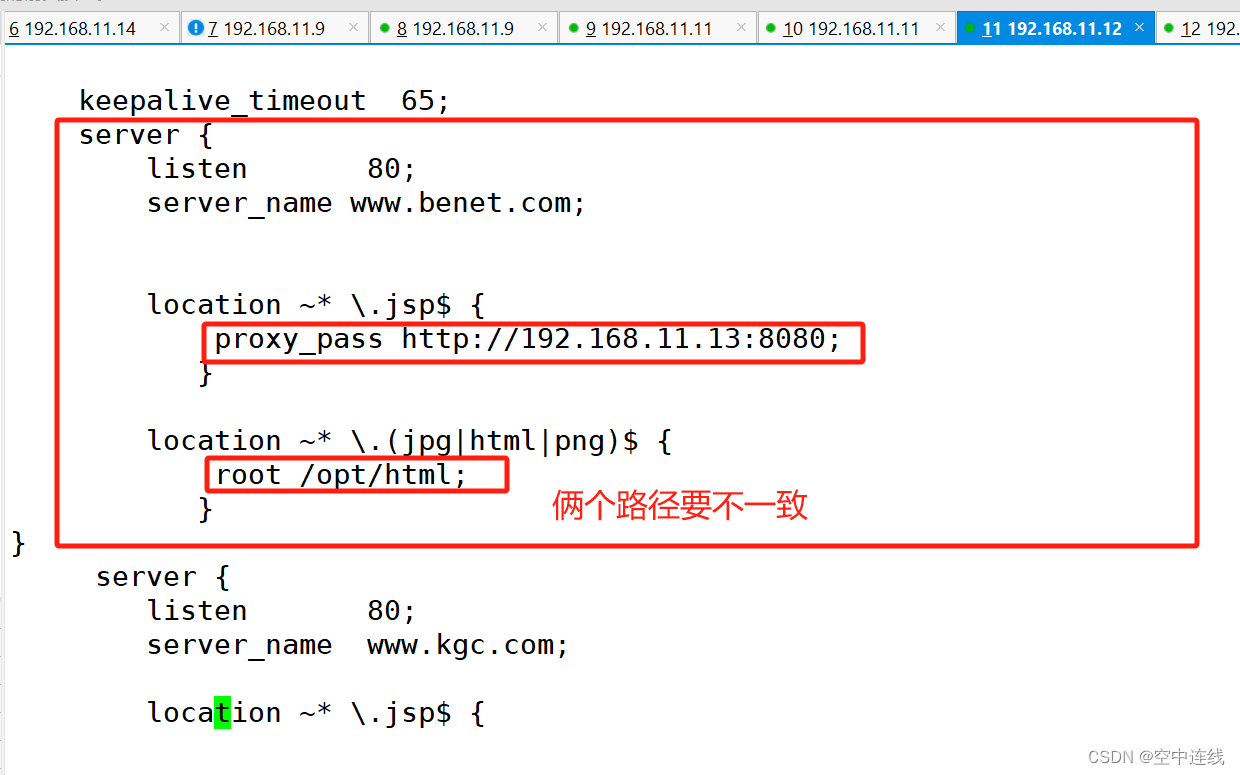

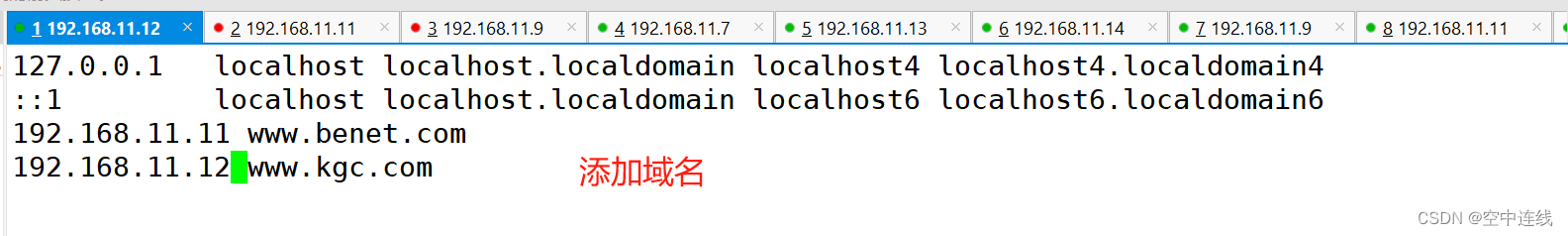

VI Two domain names serving the same page

| Host | Role |
| --- | --- |
| 192.168.11.11 | ha01, nginx |
| 192.168.11.12 | |
| Browser (client) | |

1 Configure 192.168.11.11

[root@ha01 ~]# cd /apps/nginx/

[root@ha01 nginx]# ls

client_body_temp fastcgi_temp logs sbin uwsgi_temp

conf html proxy_temp scgi_temp

[root@ha01 nginx]# cd conf/

[root@ha01 conf]# vim nginx.conf

[root@ha01 conf]# cd /opt/html/

[root@ha01 html]# ls

index.html

[root@ha01 html]# cat index.html

ACB

[root@ha01 html]# cat /etc/host

cat: /etc/host: No such file or directory

[root@ha01 html]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.11.11 www.benet.com

192.168.11.12 www.kgc.com

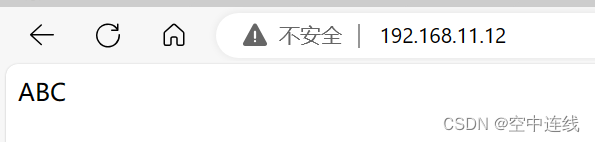
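The change made to nginx.conf on ha01 is not shown. Assuming the goal is for both names to serve the same /opt/html/index.html, a server block along the following lines would achieve that. Both `write_vhost` and the vhost.conf file name are hypothetical, and nginx.conf would need an `include vhost.conf;` line inside its `http {}` block.

```shell
# write_vhost FILE ROOT NAME...   (hypothetical helper)
# Writes one nginx server block that answers for every NAME given,
# all served from the same ROOT directory.
write_vhost() {
  file=$1 root=$2
  shift 2
  cat > "$file" <<EOF
server {
    listen 80;
    server_name $*;
    root $root;
    index index.html;
}
EOF
}

# Usage on ha01 (assumes nginx.conf includes vhost.conf inside http {}):
#   write_vhost /apps/nginx/conf/vhost.conf /opt/html www.benet.com www.kgc.com
#   /apps/nginx/sbin/nginx -t && /apps/nginx/sbin/nginx -s reload
```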

2 Configure 192.168.11.14

[root@ha02 opt]# cd html/

[root@ha02 html]# ls

[root@ha02 html]# cat /apps/nginx/conf/nginx.conf

[root@ha02 conf.d]# vim /etc/hosts

[root@ha02 opt]# cd html/

[root@ha02 html]# ls

index.html

[root@ha02 html]# cat index.html

ABC

Add these domain names to the hosts file on the physical (Windows) host.
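On Windows, the file is C:\Windows\System32\drivers\etc\hosts, and it must be edited as Administrator. For a Linux client, here is a sketch of an idempotent way to add the same entries. The helper name is hypothetical, and the IP/name pairs are the ones from /etc/hosts on ha01 above.

```shell
# add_host_entry FILE IP NAME   (hypothetical helper)
# Appends "IP NAME" to a hosts file unless NAME is already mapped on a
# non-comment line, so running it twice does not create duplicates.
add_host_entry() {
  if ! awk -v n="$3" '
         $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) f = 1 }
         END { exit !f }
       ' "$1"; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Usage (as root):
#   add_host_entry /etc/hosts 192.168.11.11 www.benet.com
#   add_host_entry /etc/hosts 192.168.11.12 www.kgc.com
```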

3 Check in the browser:

Because of caching, it may take some time before the change shows up.
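Browser and DNS caching can delay the result. The sketch below checks each name directly with curl instead. It sends the `Host` header explicitly, so nginx selects the server block by name no matter what the client's hosts file says. The helper name is hypothetical.

```shell
# fetch_as HOST IP   (hypothetical helper)
# Requests http://IP/ with "Host: HOST", bypassing name resolution and
# any browser cache.
fetch_as() {
  curl -s -H "Host: $1" "http://$2/"
}

# Usage:
#   fetch_as www.benet.com 192.168.11.11
#   fetch_as www.kgc.com 192.168.11.11
```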