PSAvatar: A Point-based Morphable Shape Model for Real-Time Head Avatar Creation with 3D Gaussian Splatting

Zhongyuan Zhao 1,2, Zhenyu Bao 1,2, Qing Li 1, Guoping Qiu 3,4, Kanglin Liu 1

1 Pengcheng Laboratory 2 Peking University 3 University of Nottingham 4 Shenzhen University

Abstract

Despite much progress, achieving real-time, high-fidelity head avatar animation is still difficult, and existing methods have to trade off speed against quality. 3DMM-based methods often fail to model non-facial structures such as eyeglasses and hairstyles, while neural implicit models suffer from deformation inflexibility and rendering inefficiency. Although 3D Gaussian has been demonstrated to possess promising capability for geometry representation and radiance field reconstruction, applying 3D Gaussian in head avatar creation remains a major challenge, since it is difficult for 3D Gaussian to model the head shape variations caused by changing poses and expressions. In this paper, we introduce PSAvatar, a novel framework for animatable head avatar creation that uses discrete geometric primitives to create a parametric morphable shape model and employs 3D Gaussian for fine detail representation and high-fidelity rendering. The parametric morphable shape model is a Point-based Morphable Shape Model (PMSM), which uses points instead of meshes for 3D representation to achieve enhanced representation flexibility. The PMSM first converts the FLAME mesh to points by sampling on the surfaces as well as off the meshes, enabling the reconstruction of not only surface-like structures but also complex geometries such as eyeglasses and hairstyles. By aligning these points with the head shape in an analysis-by-synthesis manner, the PMSM makes it possible to use 3D Gaussian for fine detail representation and appearance modeling, thus enabling the creation of high-fidelity avatars. We show that PSAvatar can reconstruct high-fidelity head avatars of a variety of subjects and that the avatars can be animated in real time (≥ 25 fps at a resolution of 512 × 512).

![[Uncaptioned image]](https://img-blog.csdnimg.cn/img_convert/7efda5f27d741b06a792c15cb885b7ea.png)

Figure 1: PSAvatar learns the shape with pose and expression variations based on a point-based morphable shape model, and employs 3D Gaussian for fine detail representation and efficient rendering. Given monocular portrait videos, PSAvatar can create head avatars that enable real-time (≥ 25 fps at 512 × 512 resolution) and high-fidelity rendering.

1 Introduction

Creating animatable head avatars has wide applications and has attracted extensive interest in academia and industry. Many methods based on explicit representations, e.g., 3D morphable models (3DMMs) [1, 21], points [41, 35] and, more recently, 3D Gaussian [17, 25, 3], and on neural implicit representations, e.g., Neural Radiance Field (NeRF) [22, 10, 42] and signed distance function (SDF) [37, 40], have been developed in recent years. Whilst these methods have achieved very impressive results, there are still many unsolved problems.

3DMM-based methods allow efficient rasterization and inherently generalize to unseen deformations, but are limited by a priori-fixed topology and surface-like geometries, making them less suitable for modeling individuals with eyeglasses or complex hairstyles [3, 25]. Whilst neural implicit representations outperform 3DMM-based methods in capturing hair strands and eyeglasses [40, 6], they are computationally extremely demanding [15]. Furthermore, neural implicit representations need the deformer network or similar techniques to bridge the gap between the canonical and deformed spaces, making it challenging to achieve high deformation accuracy.

In contrast to neural implicit representations, both point and 3D Gaussian representations can be rendered efficiently with splatting-based rasterization [41, 3, 25], and both are considerably more flexible than 3DMMs in representing complex volumetric structures, e.g., eyeglasses and hair strands. PointAvatar [41] initializes with a sparse point cloud randomly sampled on a sphere and periodically upsamples the point cloud by adding noise. The positions of the points are updated to match the target geometry via backpropagated gradients. Points are rotation-invariant and isotropically scaled, making them easy to control. In comparison, 3D Gaussians can be rotated and anisotropically scaled, making them more flexible than points for 3D representation. To achieve consistent 3D representations, 3D Gaussians rely on a carefully designed control strategy. In GaussianAvatars [25], each triangle of the mesh is initialized with a 3D Gaussian, and the positional gradient is used to move and periodically densify the Gaussian splats. A major difficulty in applying 3D Gaussian to head avatar creation is modeling the head shape variations caused by changing poses and expressions.

In this paper, we introduce PSAvatar, a novel framework for animatable head avatar creation that uses discrete geometric primitives to create a parametric morphable shape model, which makes it possible to employ 3D Gaussian for fine detail representation and high-fidelity rendering. Such a parametric morphable shape model, referred to as the Point-based Morphable Shape Model (PMSM), relies on points instead of meshes for 3D representation to achieve enhanced representation flexibility. The PMSM is built on FLAME to inherit its morphable capability. Specifically, the PMSM converts the FLAME mesh to points by uniformly sampling points on the surface of the mesh. However, FLAME is incapable of representing individuals with eyeglasses or complex hairstyles. To address this, the PMSM also samples points off the FLAME mesh to enhance the representation flexibility. The PMSM splats the points onto the screen and minimizes the difference between the rendered and ground truth images. After the invisible points are removed, the remaining points are aligned with the head shape. PSAvatar models the appearance by employing 3D Gaussian in combination with the PMSM to reconstruct the underlying radiance field and to achieve high-fidelity rendering. Our contributions are as follows:

- We present PSAvatar, a method for creating animatable head avatars that uses a point-based morphable shape model for shape modeling and employs 3D Gaussian for fine detail representation and appearance modeling.
- We have developed a Point-based Morphable Shape Model for 3D head representation that is capable of modeling facial shapes with pose and expression variations and of capturing complex volumetric structures, e.g., hair strands and glasses.
- We show that PSAvatar can reconstruct high-fidelity head avatars of a variety of subjects and that the avatars can be animated in real time (≥ 25 fps at 512 × 512 resolution).

2 Related Work

Head Avatar Creation with Implicit Models. Implicit models reconstruct the face with a neural radiance field in combination with volumetric rendering, or with implicit surface functions (e.g., signed distance functions). A popular approach to creating animatable head avatars is to condition the NeRF on low-dimensional facial model parameters such as expression, pose and camera setting [10, 34, 11]. NeRFBlendshape [12] models the dynamic NeRF as a linear combination of multiple NeRF bases that correspond one-to-one to semantic blendshape coefficients. Despite achieving impressive performance, such approaches can either fail to disentangle pose and expression or fail to generalize well to novel poses and expressions [7, 10, 16, 26, 30, 32, 33]. Another paradigm is to establish the target head model in the canonical space and synthesize the dynamics by deformation [19, 20, 38]. INSTA [42] deforms the query points from the observation space to the canonical space by using a bounding volume hierarchy (BVH) and employs InstantNGP to accelerate rendering. IMAvatar [40] represents the deformation fields via learned expression blendshapes and solves for the mapping from the observed space to the canonical space via iterative root-finding. AvatarMAV [36] defines motion-aware neural voxels and models deformations by blending a set of voxel grid motion bases according to an input 3DMM expression vector. In addition, a variety of powerful techniques such as triplane [39], Kplane [9] and deformable multi-layer meshes [6] have been utilized in head avatar creation to improve training efficiency and rendering quality.

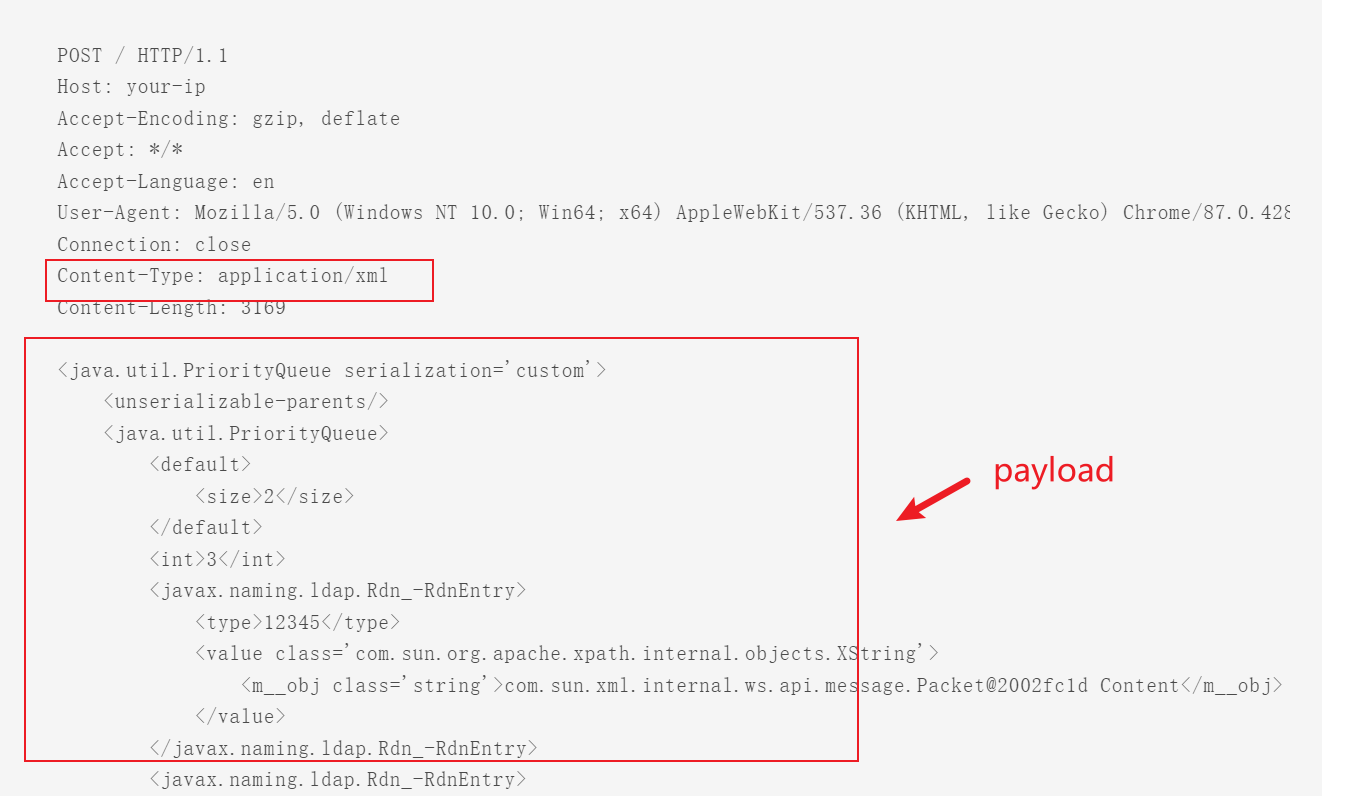

Figure 2: Overview. Given a monocular portrait video, we conduct FLAME tracking to obtain the parameters. The point-based morphable shape model (PMSM) first converts the FLAME mesh to points. It samples on the surfaces (blue points) and additionally generates samples off the meshes by offsetting the on-mesh samples along their normal directions (black points). These points are then aligned with the head shape in an analysis-by-synthesis manner. The inclusion of points both on and off the meshes enables the PMSM to reconstruct not only surface-like structures but also complex geometries that are beyond the capability of 3DMMs. Combining the PMSM with 3D Gaussian allows the reconstruction of the radiance field for efficient rendering.

Head Avatar Creation with Explicit Models. The seminal work on the 3D Morphable Model (3DMM) [1] uses principal component analysis (PCA) to model facial appearance and geometry in a low-dimensional linear subspace. 3DMM and its variants [21, 24, 2, 14, 8] have been widely applied in optimization-based and deep learning-based head avatar creation [13, 31, 4, 5]. Neural Head Avatar [15] employs neural networks to predict vertex offsets and textures, enabling extrapolation to unseen facial expressions. ROME [18] estimates a person-specific head mesh and the associated neural texture to enhance local photometric and geometric details. 3DMM-based methods produce geometrically consistent avatars that can be easily controlled; however, they are limited to craniofacial structures and can fail to represent hair and glasses. To address this, PointAvatar [41] explores point-based geometry representation with differentiable point splatting, allowing for high-quality rendering and representation of hair and eyeglasses. Although point-based representations are easy to handle, they lack flexibility. In comparison, 3D Gaussian [17] offers improved flexibility. MonoGaussianAvatar [3] replaces the point cloud in PointAvatar with Gaussian points to improve representation flexibility and rendering quality. GaussianAvatars [25] pairs each triangle of the mesh with a 3D Gaussian and introduces densification and pruning strategies to represent the geometry sufficiently. In addition, it uses binding inheritance to ensure that the 3D Gaussian translates and rotates with the triangle, enabling precise animation control via the underlying parametric model.

Both point and 3D Gaussian representations rely on a control strategy to achieve consistent 3D representation. PointAvatar [41] initializes sparse points by randomly sampling on a sphere and updates their positions to approximate the coarse shape. In addition, PointAvatar introduces a deformer network to bridge the gap between the canonical space and the deformed space. To model details, it periodically densifies points by adding noise. 3D Gaussians are considerably more flexible than points for 3D representation but are more difficult to control. In GaussianAvatars [25], each triangle of the mesh is initialized with a 3D Gaussian. For each 3D Gaussian with a large positional gradient, GaussianAvatars splits it into two smaller ones if it is large or clones it if it is small. The newly generated 3D Gaussian is bound to the same triangle as the old one, enabling binding inheritance during densification. A major difficulty in applying 3D Gaussian to head avatar creation is modeling the head shape variations caused by changing poses and expressions. Our PSAvatar uses a point-based morphable shape model to capture the head dynamics and achieves real-time head avatar animation.

3 Method

Fig. 2 shows the schematic of PSAvatar. The objective is to reconstruct an animatable head avatar with a monocular portrait video of a subject performing diverse expressions and poses. To achieve this, PSAvatar introduces a point-based morphable shape model (PMSM) for 3D representation to model pose and expression dependent shape variations (see section 3.2), and models the appearance by combining the PMSM and 3D Gaussian (see section 3.3).

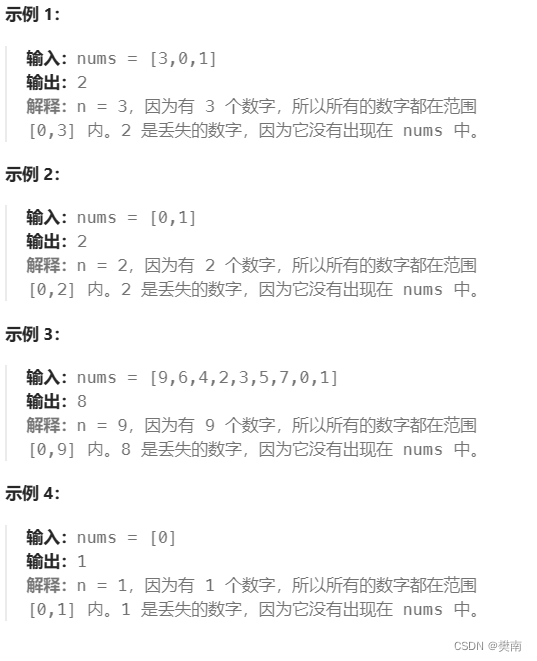

Figure 3: Shape variations for given poses and expressions. The reference images (taken from subject 2) on the left provide the pose and expression parameters, and the Point-based Morphable Shape Model (PMSM) can warp the points in a way that is consistent with the reference, i.e., when the reference person turns his head, the points follow the movement. Blue and black represent the points on and off the mesh, respectively. To better visualize the shape variation, points sampled from the eye, nose and mouth regions are colored pink, red and green, respectively.

3.1 Preliminary

Point and 3D Gaussian representations use discrete primitives for geometry representation. Points are parameterized by the radius $r$, the opacity $o$ and the color $c$. A 3D Gaussian is defined by a covariance matrix $\Sigma$ centered at a point (mean) $\mu$ [17]:

| $G(x) = e^{-\frac{1}{2}(x-\mu)^{T}\Sigma^{-1}(x-\mu)}$ | (1) |

To guarantee that $\Sigma$ is physically meaningful (i.e., positive semi-definite), the covariance matrix is constructed from a parametric ellipsoid with a scaling matrix $S$ and a rotation matrix $R$:

| $\Sigma = R S S^{T} R^{T}$ | (2) |

As in [17], the scaling and rotation matrices are represented by a scaling vector $s \in \mathbb{R}^{3}$ and a quaternion $q \in \mathbb{R}^{4}$, respectively. $s$ and $q$ can be trivially converted to their respective matrices and combined, with $q$ normalized to ensure that it is a valid unit quaternion.
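The construction in Equations (1) and (2) can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the authors' implementation: the function names and the (w, x, y, z) quaternion ordering are assumptions made here, and the Gaussian is evaluated through the identity $\Sigma^{-1} = R\,\mathrm{diag}(1/s^{2})\,R^{T}$ rather than an explicit matrix inverse.

```python
import math

def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z; ordering assumed) to a 3x3 rotation
    matrix, normalizing it first so that any non-zero q is valid."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def covariance(q, s):
    """Sigma = R S S^T R^T with S = diag(s), i.e. R diag(s^2) R^T."""
    R = quat_to_rotmat(q)
    return [[sum(R[i][k] * s[k] * s[k] * R[j][k] for k in range(3))
             for j in range(3)] for i in range(3)]

def gaussian_value(x, mu, q, s):
    """Evaluate G(x) = exp(-0.5 (x-mu)^T Sigma^{-1} (x-mu))."""
    R = quat_to_rotmat(q)
    d = [a - b for a, b in zip(x, mu)]
    # Express the offset in the Gaussian's local frame: local = R^T d.
    local = [sum(R[i][k] * d[i] for i in range(3)) for k in range(3)]
    return math.exp(-0.5 * sum((local[k] / s[k]) ** 2 for k in range(3)))

# An unnormalized identity quaternion still yields an axis-aligned Gaussian.
sigma = covariance(q=(2.0, 0.0, 0.0, 0.0), s=(0.5, 1.0, 2.0))
```

Because $\Sigma$ is assembled from a rotation and a per-axis scale, it stays symmetric and positive semi-definite for any values of $q$ and $s$ that an optimizer may produce.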

Both points and 3D Gaussian can be rendered via a differentiable splatting-based rasterizer, and the color $C$ of a pixel is computed by alpha compositing:

| $C = \sum_{i=1} w_{i} c_{i} = \sum_{i=1} \alpha_{i} \prod_{j=1}^{i-1} (1-\alpha_{j})\, c_{i}$ | (3) |

where $w_{i} = \alpha_{i} \prod_{j=1}^{i-1}(1-\alpha_{j})$ is the weight for alpha compositing, $c_{i}$ is the color of each point or 3D Gaussian and $\alpha_{i}$ is the blending weight. For points, $\alpha$ is calculated as $\alpha = o\,(1 - d^{2}/r^{2})$, where $d$ is the distance from the point center to the pixel center. For Gaussians, $\alpha$ is given by evaluating the 2D projection of the 3D Gaussian multiplied by a per-point opacity $o$.
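The compositing rule in Equation (3) can be illustrated for a single pixel as follows. This is a minimal sketch under two assumptions that the text does not spell out: the primitives are already sorted front to back, and a point contributes nothing once the pixel lies outside its radius. The function names are hypothetical.

```python
def point_alpha(opacity, dist, radius):
    """alpha = o * (1 - d^2 / r^2); clamped to zero outside the radius
    (the clamp is an assumption of this sketch)."""
    if dist >= radius:
        return 0.0
    return opacity * (1.0 - (dist * dist) / (radius * radius))

def composite(colors, alphas):
    """Front-to-back alpha compositing of depth-sorted primitives."""
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # running product of (1 - alpha_j) for primitives in front
    for color, alpha in zip(colors, alphas):
        weight = alpha * transmittance  # w_i in Equation (3)
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha
    return pixel

# A half-transparent red primitive in front of a half-transparent green one.
pixel = composite([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [0.5, 0.5])
```

The front primitive receives weight 0.5 and the one behind it only 0.25, which shows how nearer primitives occlude farther ones.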

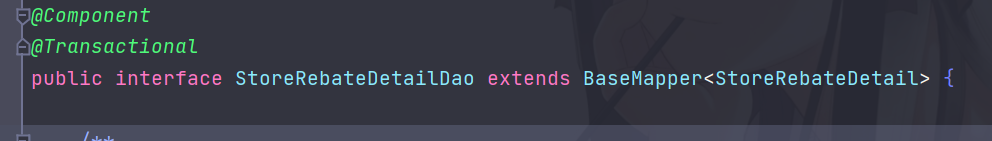

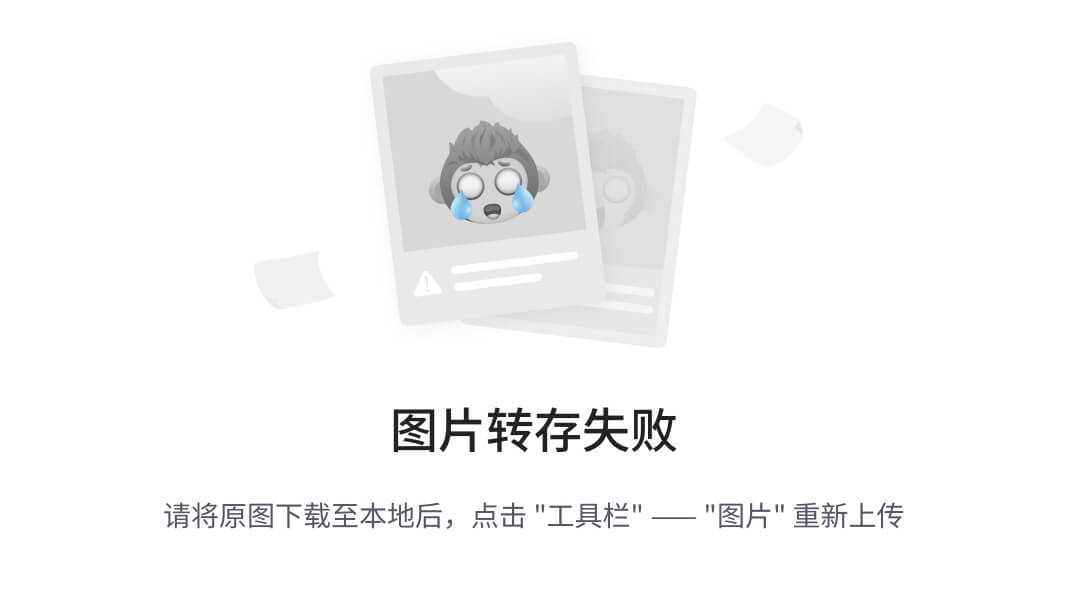

Figure 4: Visualization of each component in PSAvatar. (a) shows the learned point-based morphable shape model. (b) visualizes the 3D Gaussian, which shows improved representation flexibility over the PMSM. (c) and (d) are the rendered and ground truth images, respectively.

3.2 Point-based Morphable Shape Model

Given shape, pose and expression components, FLAME [21] can produce morphologically realistic faces in a convenient and effective way. This motivates us to build a point-based morphable shape model (PMSM) on FLAME to inherit its morphable capability. Since we focus on human facial avatars, we specifically model the pose- and expression-dependent shape variations and simplify the FLAME model to $M(\theta, \psi)$:

| $M(\theta, \psi) = W(T_{P}(\theta, \psi), J(\psi), \theta, \mathcal{W})$ | (4) |

where $\theta$ and $\psi$ denote the pose and expression parameters, respectively. $W(\cdot)$ and $J(\cdot)$ define the standard skinning function and the joint regressor, respectively. $\mathcal{W}$ represents the per-vertex skinning weights for smooth blending, and $T_{P}$ denotes the template mesh with pose and expression offsets, defined as:

| $T_{P}(\theta, \psi) = \bar{T} + B_{P}(\theta; \mathcal{P}) + B_{E}(\psi; \mathcal{E}) + \mathcal{G}(\theta, \psi)$ | (5) |

where $\bar{T}$ is the personalized template, and $B_{P}$ and $B_{E}$ model the corrective pose and expression blendshapes, respectively. $\mathcal{P}$ and $\mathcal{E}$ denote the pose and expression bases, respectively. To model the inconsistency between FLAME and the head geometry, $\mathcal{G}(\theta, \psi)$ is introduced as the per-vertex geometry correction:

| $\mathcal{G}(\theta, \psi) = B_{P}(\theta; \mathcal{P}') + B_{E}(\psi; \mathcal{E}')$ | (6) |

where $\mathcal{P}'$ and $\mathcal{E}'$ are the learned pose and expression blendshape bases, respectively.
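How the four offset terms in Equations (5) and (6) add up can be sketched as below. The sketch assumes that each blendshape function is a plain linear combination of its basis and that the pose enters through a precomputed feature vector `pose_feat`; both are simplifications made here for illustration, and all names are hypothetical.

```python
def blendshape(coeffs, basis):
    """Linear blendshape: sum_n coeffs[n] * basis[n], one 3D offset per vertex."""
    num_vertices = len(basis[0])
    offsets = [[0.0, 0.0, 0.0] for _ in range(num_vertices)]
    for coeff, shape in zip(coeffs, basis):
        for v in range(num_vertices):
            for axis in range(3):
                offsets[v][axis] += coeff * shape[v][axis]
    return offsets

def corrected_template(template, pose_feat, expr, P, E, P_learned, E_learned):
    """T_P = T_bar + B_P(.;P) + B_E(.;E) + G, with G = B_P(.;P') + B_E(.;E')."""
    terms = [
        blendshape(pose_feat, P),          # FLAME pose correctives
        blendshape(expr, E),               # FLAME expression blendshapes
        blendshape(pose_feat, P_learned),  # learned geometry correction (pose)
        blendshape(expr, E_learned),       # learned geometry correction (expression)
    ]
    return [[template[v][axis] + sum(t[v][axis] for t in terms) for axis in range(3)]
            for v in range(len(template))]

# Toy example with a single vertex and one basis vector per term.
t_p = corrected_template(
    template=[[0.0, 0.0, 0.0]],
    pose_feat=[1.0], expr=[2.0],
    P=[[[1.0, 0.0, 0.0]]], E=[[[0.0, 1.0, 0.0]]],
    P_learned=[[[0.0, 0.0, 0.5]]], E_learned=[[[0.0, 0.25, 0.0]]],
)
```

The learned bases are driven by the same pose and expression inputs as the FLAME bases, so the correction deforms consistently with the underlying model.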

Because FLAME is incapable of modeling hair strands or eyeglasses, PSAvatar introduces the point-based morphable shape model (PMSM), which uses points instead of meshes to enhance the representation flexibility. Specifically, we first convert the FLAME mesh to points by sampling points uniformly on the surface of the mesh, selecting each face with a probability proportional to its area:

| $p_{\mathrm{on}}^{k} = \sum_{i=0}^{2} \lambda_{i}^{k} v_{i}^{k}$ | (7) |

where the superscript $k$ denotes the $k$-th mesh face and the subscript $\mathrm{on}$ indicates sampling on the mesh. $\lambda_{i}^{k}$ and $v_{i}^{k}$ with $i \in \{0, 1, 2\}$ are the barycentric coordinates and the vertices of the $k$-th face, respectively. Since FLAME excels in modeling the facial dynamics, sampling is only conducted on the facial region. In addition, sampling is conducted off the FLAME meshes to capture complex structures ignored by the FLAME model. This is achieved by offsetting the sample on the mesh along its normal:

| $p_{\mathrm{off}}^{k} = p_{\mathrm{on}}^{k} + \delta^{k} \cdot n_{\mathrm{on}}^{k}$ | (8) |

where the subscript $\mathrm{off}$ indicates sampling off the mesh. $\delta^{k}$ is a random offset drawn from the uniform distribution on $[0, \delta_{\max}]$, where $\delta_{\max}$ is a hyperparameter, empirically set to 0.30 to cover as much of the entire head as possible. $n_{\mathrm{on}}^{k}$ is the normal at $p_{\mathrm{on}}^{k}$, calculated by:

| $n_{\mathrm{on}}^{k} = \sum_{i=0}^{2} \lambda_{i}^{k} n_{i}^{k}$ | (9) |

where $n_{i}^{k}$ with $i \in \{0, 1, 2\}$ are the vertex normals of the $k$-th face.
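The on-mesh and off-mesh sampling of Equations (7) to (9) can be sketched with the standard library alone. The following is an illustration rather than the authors' code: the function and key names are hypothetical, the square-root trick for drawing uniform barycentric coordinates is a common choice assumed here, and the interpolated normal is used without renormalization, exactly as Equation (9) is written.

```python
import math
import random

def triangle_area(v0, v1, v2):
    """Area of a 3D triangle from the cross product of two edges."""
    e1 = [b - a for a, b in zip(v0, v1)]
    e2 = [b - a for a, b in zip(v0, v2)]
    cx = e1[1] * e2[2] - e1[2] * e2[1]
    cy = e1[2] * e2[0] - e1[0] * e2[2]
    cz = e1[0] * e2[1] - e1[1] * e2[0]
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def sample_points(vertices, normals, faces, n, delta_max=0.30, rng=None):
    """Draw n on-mesh samples and one off-mesh sample per on-mesh sample."""
    rng = rng or random.Random(0)
    areas = [triangle_area(*(vertices[i] for i in face)) for face in faces]
    samples = []
    # Faces are chosen with probability proportional to their area.
    for k in rng.choices(range(len(faces)), weights=areas, k=n):
        r1, r2 = rng.random(), rng.random()
        root = math.sqrt(r1)
        lam = (1.0 - root, root * (1.0 - r2), root * r2)  # uniform on the triangle
        idx = faces[k]
        p_on = [sum(lam[i] * vertices[idx[i]][a] for i in range(3)) for a in range(3)]
        n_on = [sum(lam[i] * normals[idx[i]][a] for i in range(3)) for a in range(3)]
        delta = rng.uniform(0.0, delta_max)  # random offset along the normal
        p_off = [p + delta * m for p, m in zip(p_on, n_on)]
        samples.append({"face": k, "bary": lam, "offset": delta,
                        "p_on": p_on, "p_off": p_off})
    return samples

# One flat triangle in the z = 0 plane with normals pointing along +z.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
norms = [(0.0, 0.0, 1.0)] * 3
samples = sample_points(verts, norms, faces=[(0, 1, 2)], n=5)
```

Storing the face index, barycentric coordinates and offset, rather than absolute positions, is what lets the same samples be re-evaluated on a deformed mesh.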

Samples on the mesh are parameterized by the face index $k$, the barycentric coordinates, the opacity $o$ and the color $c$, while samples off the mesh carry one additional parameter, the offset $\delta^{k}$. During shape acquisition, the color $c$ is modeled by a plain RGB value for simplicity. Such a parameterization guarantees that samples across diverse poses and expressions are in one-to-one (point-to-point) correspondence, thus making the PMSM morphable.

The PMSM aligns the points with the target head in an analysis-by-synthesis manner, i.e., all the samples are splatted onto the screen via the tile rasterizer in Equation (3), and the difference between the rendered image and the input is minimized. Samples with visibility below a predefined threshold are removed. For shape acquisition, point splatting is applied instead of Gaussian splatting because a point has fewer parameters than a Gaussian and thus converges faster when modeling the shape (see section 4.3). As shown in Fig. 3 and the supplementary video sequences, the resulting shape model can represent the head geometry, including the hair, and can be morphed with given poses and expressions.

Table 1: Quantitative comparison with state-of-the-art methods. Bold and italics indicate the best and second-best results, respectively. S1 to S6 denote subject 1 (yufeng), subject 2 (marcel), subject 3 (soubhik), subject 4 (person_1), subject 5 (person_2) and subject 6 (person_3).

| Method | S1 PSNR ↑ | S1 SSIM ↑ | S1 LPIPS ↓ | S2 PSNR ↑ | S2 SSIM ↑ | S2 LPIPS ↓ | S3 PSNR ↑ | S3 SSIM ↑ | S3 LPIPS ↓ |
|---|---|---|---|---|---|---|---|---|---|
| IMAvatar [40] | 21.2437 | 0.8410 | 0.1608 | 21.0541 | 0.8409 | 0.2653 | 18.6646 | 0.7664 | 0.1822 |
| INSTA [42] | 17.7720 | 0.7888 | 0.1967 | 19.1923 | 0.8117 | 0.2261 | 16.4970 | 0.7607 | 0.2348 |
| PointAvatar [41] | *24.8368* | *0.8686* | *0.1519* | *24.1019* | *0.8525* | *0.1913* | *22.8175* | *0.8211* | *0.0996* |
| Ours | **29.3942** | **0.9212** | **0.0580** | **26.3734** | **0.8869** | **0.0930** | **27.4765** | **0.8901** | **0.0609** |

| Method | S4 PSNR ↑ | S4 SSIM ↑ | S4 LPIPS ↓ | S5 PSNR ↑ | S5 SSIM ↑ | S5 LPIPS ↓ | S6 PSNR ↑ | S6 SSIM ↑ | S6 LPIPS ↓ |
|---|---|---|---|---|---|---|---|---|---|
| IMAvatar [40] | 20.2843 | 0.8785 | 0.1359 | 23.7250 | 0.9363 | 0.0924 | 24.9572 | 0.9052 | 0.1051 |
| INSTA [42] | 19.1685 | 0.8855 | 0.1478 | 22.7280 | 0.9324 | 0.0951 | 23.5286 | 0.9070 | 0.0861 |
| PointAvatar [41] | *25.2502* | *0.9212* | *0.0778* | *26.4185* | *0.9467* | *0.0524* | *29.953* | *0.9419* | *0.0481* |
| Ours | **31.5703** | **0.9667** | **0.0341** | **32.2534** | **0.9732** | **0.0254** | **32.3608** | **0.9675** | **0.0269** |

3.3 Rendering

To represent the fine detail and model the appearance, PSAvatar employs 3D Gaussian in combination with the PMSM. Specifically, each Gaussian is parameterized by its rotation matrix $R'$, anisotropic scaling matrix $S$, color $c$ and opacity $o$. In contrast to the PMSM, the color $c$ of a Gaussian is modeled by spherical harmonics.

As shown in Equation (7), samples in the PMSM are obtained in the local coordinate system determined by each mesh face. For rendering, the Gaussians must be transformed from the local coordinate system to the global one by:

| $R = R' R^{k}$ | (10) |

where $R$ is the rotation matrix of the Gaussian in the global coordinate system, and $R^{k}$ is the local rotation matrix determined by the $k$-th face, which is likewise calculated by barycentric interpolation:

| $R^{k} = \sum_{i=0}^{2} \lambda_{i}^{k} R_{i}^{k}$ | (11) |

where $R_{i}^{k}$ with $i \in \{0, 1, 2\}$ is the rotation matrix of each vertex, which can be derived from $W(\cdot)$ in Equation (4), i.e., $R_{i}^{k}$ corresponds to the rotation part of $W(\cdot)$. The directional color is calculated from the spherical harmonics, which are rotated from the local coordinate system to the global one using the method in [23]. Finally, the tile rasterizer in Equation (3) splats the 3D Gaussians onto the screen to produce the rendering. Empirically, we have found that the performance of PSAvatar can be further improved with an enhancement network. Accordingly, a U-Net [28] based enhancement is applied to the rendered image to improve the visual quality.
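The local-to-global rotation of Equations (10) and (11) amounts to a weighted sum of three vertex rotations followed by one matrix product. The sketch below uses hypothetical function names and plain nested lists. Note that a weighted sum of rotation matrices is in general not exactly orthonormal when the vertex rotations differ; whether the original method re-orthonormalizes the result is not stated in the text, so the sketch follows Equation (11) literally.

```python
def matmul3(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def blend_rotations(lam, vertex_rots):
    """R^k = sum_i lam[i] * R_i^k (barycentric blend of vertex rotations)."""
    return [[sum(lam[i] * vertex_rots[i][r][c] for i in range(3)) for c in range(3)]
            for r in range(3)]

def global_rotation(r_local, lam, vertex_rots):
    """R = R' R^k: compose the Gaussian's local rotation with the face rotation."""
    return matmul3(r_local, blend_rotations(lam, vertex_rots))

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

# If all three vertices share one rotation, the blend reproduces it exactly.
r_global = global_rotation(identity, (0.25, 0.25, 0.5), [rot_z_90] * 3)
```

Neighbouring vertices of a skinned face usually rotate almost identically, so the blended matrix is expected to stay close to a true rotation in practice.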

3.4 Optimization and Regularization

The tile rasterizer in Equation (3) outputs a rendered image $I_{r}$, and the U-Net performs enhancement on the rendered image to produce the final output $I_{enh}$. The RGB loss constrains the output images in the pixel domain:

| $\mathcal{L}_{rgb} = \lVert I_{r} - I_{gt} \rVert + \lVert I_{enh} - I_{gt} \rVert$ | (12) |

where $I_{gt}$ is the ground truth. Analogous to prior work [41], we adopt a VGG feature loss:

| $\mathcal{L}_{vgg} = \lVert F_{vgg}(I_{r}) - F_{vgg}(I_{gt}) \rVert + \lVert F_{vgg}(I_{enh}) - F_{vgg}(I_{gt}) \rVert$ | (13) |

where $F_{vgg}$ calculates the features from the first four layers of a pre-trained VGG network [29]. To prevent the scaling vector $s$ from growing unbounded, we regularize it by:

| $\mathcal{L}_{scaling} = \lVert s \rVert$ | (14) |

Formally, the training objective for supervising PSAvatar is defined as:

| $\mathcal{L} = \mathcal{L}_{rgb} + \lambda_{1} \mathcal{L}_{vgg} + \lambda_{2} \mathcal{L}_{scaling}$ | (15) |

where $\lambda_{1}$ and $\lambda_{2}$ are both set to 0.1.
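The way the three terms of Equation (15) combine can be sketched as follows. Several details are assumptions of this sketch, since the text does not specify them: images are flat lists of floats, the image and feature norms are taken as mean absolute differences, the scaling term is the mean Euclidean norm over all Gaussians, and `features` is a stand-in callable for the VGG feature extractor. All names are hypothetical.

```python
import math

def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized flat lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def total_loss(i_r, i_enh, i_gt, scales, features, lam1=0.1, lam2=0.1):
    """L = L_rgb + lam1 * L_vgg + lam2 * L_scaling (Equation 15)."""
    # Both the raw rendering and the enhanced output are supervised.
    loss_rgb = mean_abs_diff(i_r, i_gt) + mean_abs_diff(i_enh, i_gt)
    f_gt = features(i_gt)
    loss_vgg = (mean_abs_diff(features(i_r), f_gt)
                + mean_abs_diff(features(i_enh), f_gt))
    # Penalize large scaling vectors so that Gaussians do not grow unbounded.
    loss_scaling = sum(math.sqrt(sum(c * c for c in s)) for s in scales) / len(scales)
    return loss_rgb + lam1 * loss_vgg + lam2 * loss_scaling

# Toy example with an identity "feature extractor" in place of VGG.
loss = total_loss(
    i_r=[0.0, 0.0], i_enh=[1.0, 1.0], i_gt=[1.0, 1.0],
    scales=[(3.0, 4.0, 0.0)],
    features=lambda img: img,
)
```

Supervising $I_{r}$ as well as $I_{enh}$ keeps the Gaussian rendering itself accurate instead of leaving all corrections to the enhancement network.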
