arXiv-2016

code: https://github.com/loshchil/SGDR/blob/master/SGDR_WRNs.py

Contents

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 Method

- 5 Experiments

- 5.1 Datasets and Metric

- 5.2 Single-Model Results

- 5.3 Ensemble Results

- 5.4 Experiments on a Dataset of EEG Recordings

- 5.5 Preliminary Experiments on a downsampled ImageNet Dataset

- 6 Conclusion(own) / Future work

1 Background and Motivation

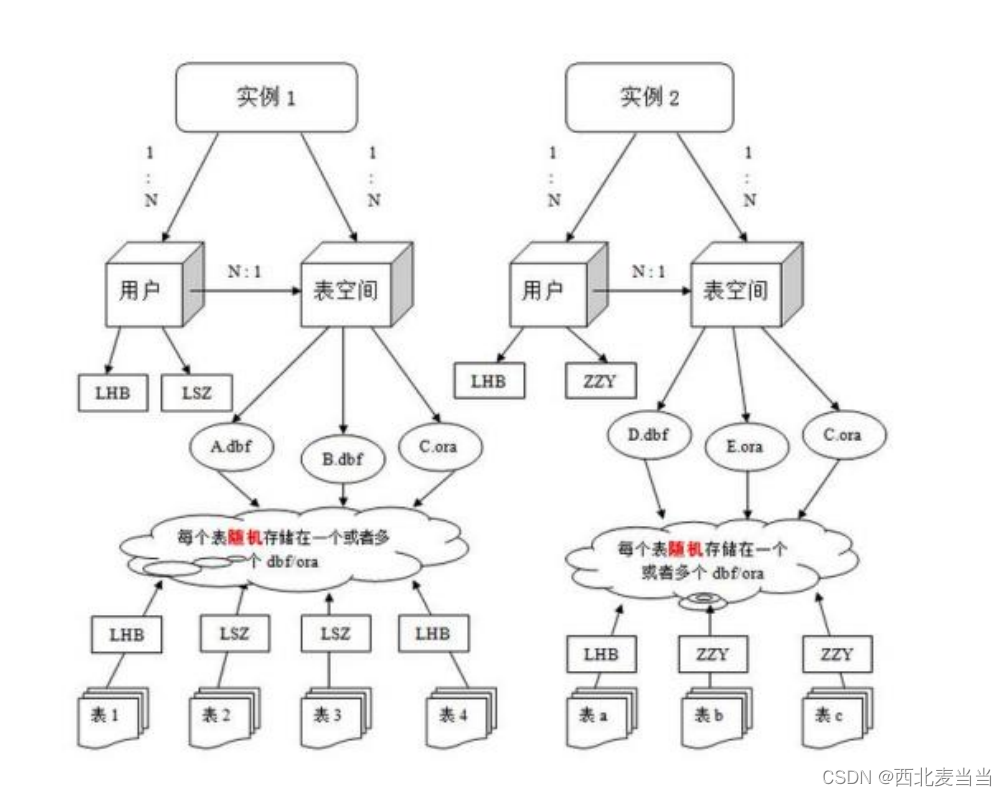

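Training a deep neural network can be viewed as minimizing a loss function over the network's parameters, typically with first-order gradient steps.

The update can also be written in a second-order form, in which the gradient step is scaled by the inverse Hessian.

However, the inverse Hessian is hard to compute (see the post "[Matrix Learning] Jacobian and Hessian Matrices").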
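For reference, the two update rules this passage appears to describe can be written as below. This is a reconstruction, assuming the formulas are the standard SGD step and its second-order (Newton-like) variant; the symbols $x_t$ (parameters at step $t$), $\eta_t$ (learning rate), $f_t$ (loss on the $t$-th mini-batch), and $\mathbf{H}_t$ (Hessian) are notation chosen here for illustration.

```latex
\begin{align}
  &\min_{x \in \mathbb{R}^n} f(x) \\
  x_{t+1} &= x_t - \eta_t \nabla f_t(x_t)
      && \text{(first-order SGD step)} \\
  x_{t+1} &= x_t - \eta_t \mathbf{H}_t^{-1} \nabla f_t(x_t)
      && \text{(second-order step, needs the inverse Hessian)}
\end{align}
```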

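Many improved optimizers try to approximate the inverse Hessian as closely as possible, yet the best results on many computer vision benchmarks are still obtained with SGD + momentum.

With strong optimization techniques available in practice, the main difficulty in training a DNN is then associated with the scheduling of the learning rate and the amount of L2 weight decay regularization employed.

In this paper, the authors approach the problem from the learning rate schedule and propose SGDR, a schedule that periodically simulates warm restarts of SGD, so that deep learning models converge faster and to better solutions.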

2 Related Work

In applied mathematics, multimodal optimization deals with optimization tasks that involve finding all or most of the multiple (at least locally optimal) solutions of a problem, as opposed to a single best solution.

- Restarts in gradient-free optimization

  Based on niching methods (see the summary section at the end of this post).

- Restarts in gradient-based optimization

  "Cyclical Learning Rates for Training Neural Networks" (WACV-2017)

  Closely related to our approach in its spirit and formulation, but does not focus on restarts.

  CLR can be seen as a soft restart, whereas SGDR is a hard restart.
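To make the "soft restart" idea concrete, here is a minimal sketch of the triangular policy from the CLR paper as I understand it. The argument names (`step_size`, `base_lr`, `max_lr`) and all numeric values are illustrative assumptions, not settings taken from this post.

```python
import math


def triangular_clr(iteration, step_size, base_lr, max_lr):
    """Triangular cyclical learning rate: linear ramp up, then linear ramp down."""
    # Index of the current cycle (each cycle lasts 2 * step_size iterations).
    cycle = math.floor(1 + iteration / (2 * step_size))
    # Distance from the peak of the current cycle, scaled to [0, 1].
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)


# Hypothetical settings: the rate climbs for 10 iterations, then falls for 10.
rates = [triangular_clr(it, step_size=10, base_lr=0.001, max_lr=0.006) for it in range(41)]
# Largest change between two consecutive iterations: always one small linear step.
largest_jump = max(abs(b - a) for a, b in zip(rates, rates[1:]))
```

Because the rate moves by the same small amount at every iteration, it never jumps; with a hard restart, by contrast, the rate goes from its minimum back to its maximum in a single step.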

3 Advantages / Contributions

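Combines SGD with the warm restart technique, i.e. applies warm restarts to SGD.

Neither component is original. The combination brings improvements on several small datasets (with limited input resolution) and generalizes reasonably well.

It converges faster than SGD with a conventional schedule, by roughly 2× to 4×.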

4 Method

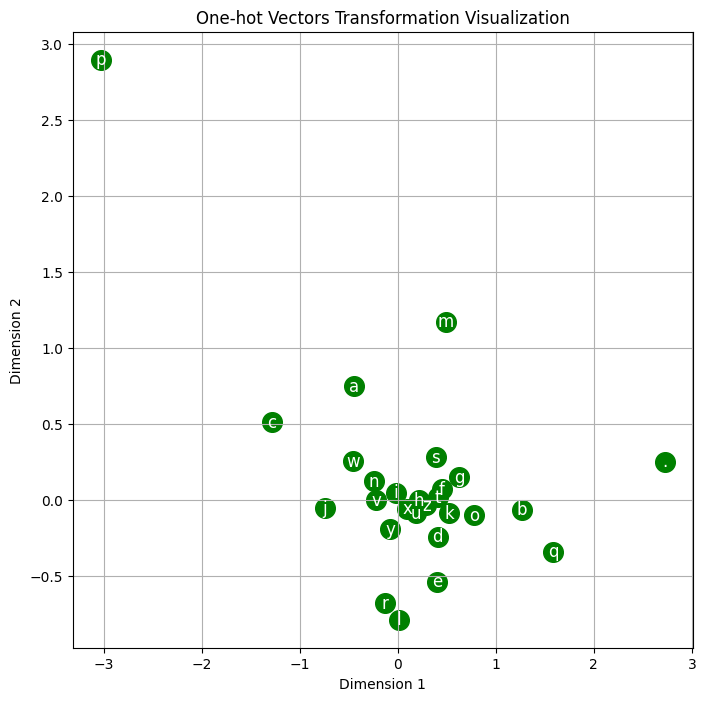

periodically simulate warm restarts of SGD

SGD with momentum

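plus warm restarts

The blue and red curves are the conventional step-wise learning rate schedules (a common learning rate schedule is to use a constant learning rate and divide it by a fixed constant at approximately regular intervals).

The remaining colors are the authors' SGDR schedule under different hyperparameter settings.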
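For comparison, the step-wise baseline can be sketched in a few lines. The base rate, milestones, and decay factor below are illustrative assumptions chosen to resemble a typical WRN setup; they are not read off the figure.

```python
def step_lr(epoch, base_lr=0.05, milestones=(60, 120, 160), factor=0.2):
    """Piecewise-constant schedule: multiply the rate by `factor` at each milestone passed."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * factor ** passed


# The rate stays flat between milestones and drops only when one is crossed.
schedule = [step_lr(e) for e in (0, 59, 60, 120, 160)]
```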

Core formula

$$\eta_t = \eta_{min}^i + \frac{1}{2}\left(\eta_{max}^i - \eta_{min}^i\right)\left(1 + \cos\left(\frac{T_{cur}}{T_i}\pi\right)\right)$$

- $\eta$ is the learning rate.

- $i$ indexes the run (the $i$-th run).

- $T_{cur}$ accounts for how many epochs have been performed since the last restart. When $T_{cur} = 0$ the learning rate is at its maximum, $\eta_{max}$; when $T_{cur} = T_i$ it is at its minimum, $\eta_{min}$.

- $T_i$ is the number of epochs (or iterations) in one cosine decay period.

At each new restart, $\eta_{min}$ or $\eta_{max}$ can be adjusted.

To make each period progressively longer, set $T_{mult} > 1$ (e.g. $T_{mult} = 2$ doubles the maximum number of epochs for every new restart. The main purpose of this doubling is to reach good test error as soon as possible).
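The definitions above translate into a few lines of Python. This is a minimal sketch rather than the authors' code: it assumes $\eta_{min}$ and $\eta_{max}$ stay fixed across restarts and that $T_{mult}$ is a positive integer. The function and argument names, and the default values, are my own.

```python
import math


def sgdr_lr(epoch, t_0=10, t_mult=1, eta_min=0.0, eta_max=0.05):
    """Learning rate at a (possibly fractional) epoch under cosine annealing with warm restarts."""
    t_i = t_0        # length of the current cycle, T_i
    t_cur = epoch    # epochs since the last restart, T_cur
    while t_cur >= t_i:   # walk past every completed cycle
        t_cur -= t_i
        t_i *= t_mult     # each new cycle is t_mult times longer
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * t_cur / t_i))


# With t_0=10 and t_mult=2 the rate jumps back to eta_max at epochs 10, 30, 70, ...
restart_values = [sgdr_lr(e, t_0=10, t_mult=2) for e in (0, 10, 30, 70)]
```

Note that in this sketch an epoch exactly equal to the cycle length already counts as the first step of the next cycle, so $\eta_{min}$ is only approached just before each restart.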

Code, from the post "Cosine Annealing Warm Restart":

```python
# Imports
from torch import optim
from torch.optim import lr_scheduler

# Define the model (generate_model and opt come from the surrounding project)
model, parameters = generate_model(opt)

# Define the optimizer; PyTorch requires zero dampening when Nesterov momentum is on
if opt.nesterov:
    dampening = 0
else:
    dampening = 0.9
optimizer = optim.SGD(parameters, lr=0.1, momentum=0.9, dampening=dampening,
                      weight_decay=1e-3, nesterov=opt.nesterov)

# Define the warm-restart learning rate schedule
scheduler = lr_scheduler.CosineAnnealingWarmRestarts(
    optimizer, T_0=10, T_mult=2, eta_min=0, last_epoch=-1)
```

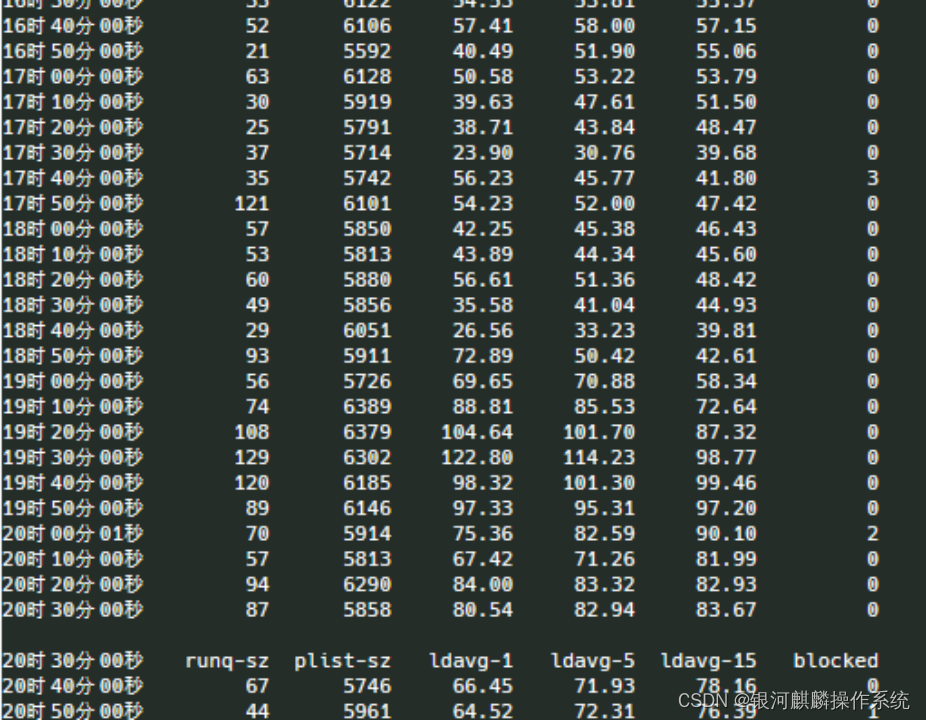

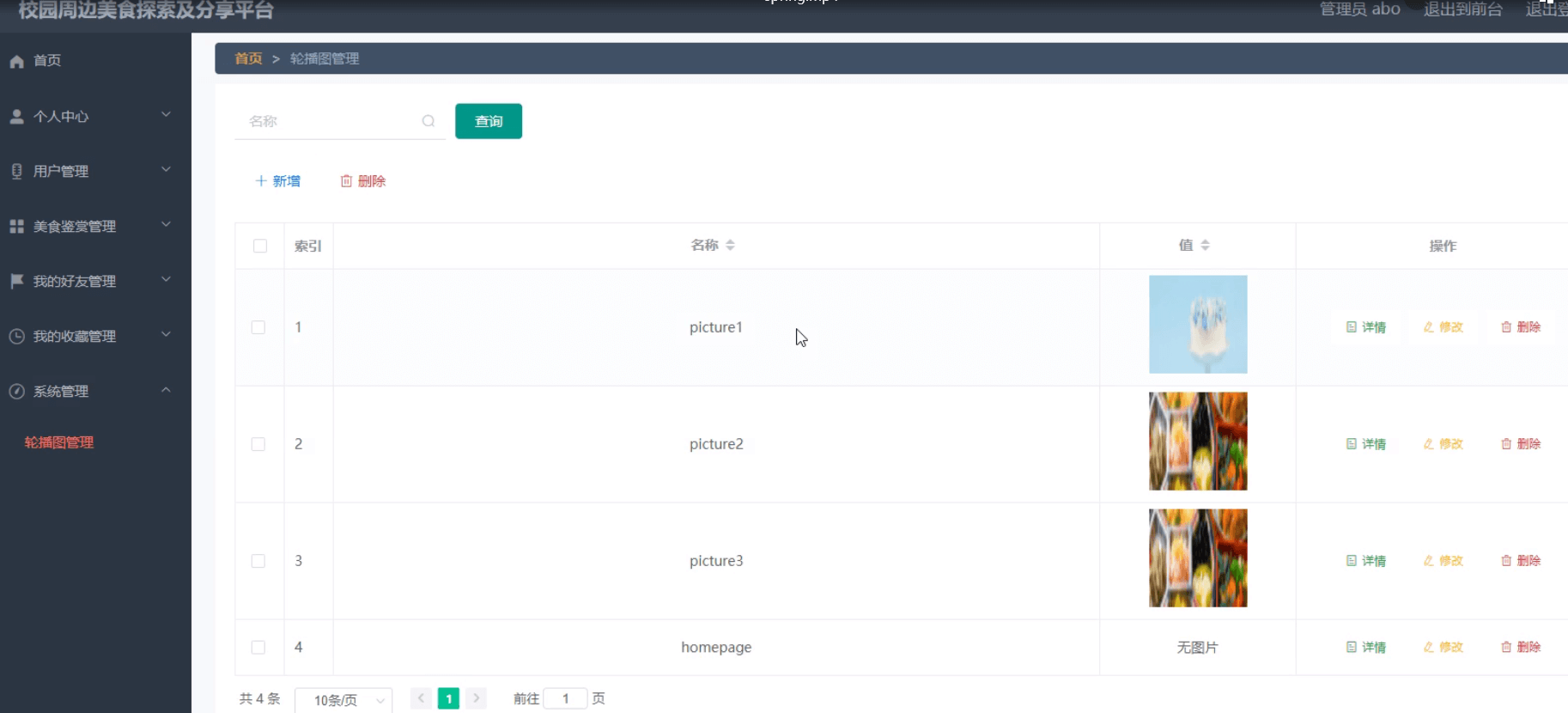

5 Experiments

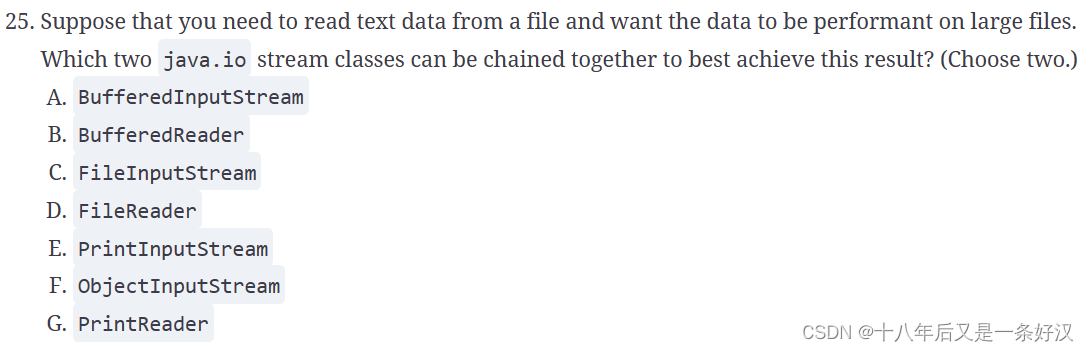

5.1 Datasets and Metric

CIFAR-10: Top-1 error

CIFAR-100: Top-1 error

A dataset of electroencephalographic (EEG) recordings

Downsampled ImageNet (32×32): Top-1 and Top-5 error
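As a reminder of what the Top-1 and Top-5 metrics measure, here is a small sketch in plain Python. The function name and the toy scores are made up for illustration.

```python
def top_k_error(scores, labels, k=1):
    """Fraction of samples whose true label is not among the k highest-scoring classes."""
    errors = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the best k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            errors += 1
    return errors / len(labels)


# Toy example: 3 samples, 3 classes.
toy_scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
toy_labels = [1, 1, 0]
top1 = top_k_error(toy_scores, toy_labels, k=1)
top2 = top_k_error(toy_scores, toy_labels, k=2)
```

Top-k error can only decrease as k grows, which is why Top-5 error is reported alongside Top-1 for the 1000-class ImageNet setting.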

5.2 Single-Model Results

WRN (Wide Residual Network) with depth d and width k

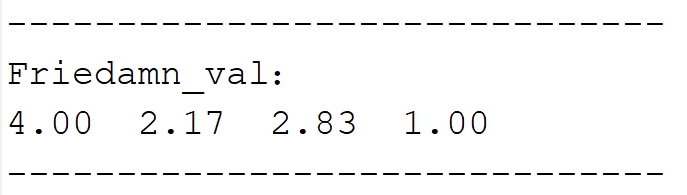

On CIFAR-10, $T_{mult} = 1$ performs strongly.

On CIFAR-100, $T_{mult} = 2$ performs strongly.

The advantage of faster convergence:

Since SGDR achieves good performance faster, it may allow us to train larger networks.

On CIFAR-10, $T_{mult} = 1$ performs strongly (the black and white curves).

On CIFAR-100, $T_{mult} = 2$ performs strongly.

Judging by final convergence alone, the white curve (cosine learning rate) performs best.

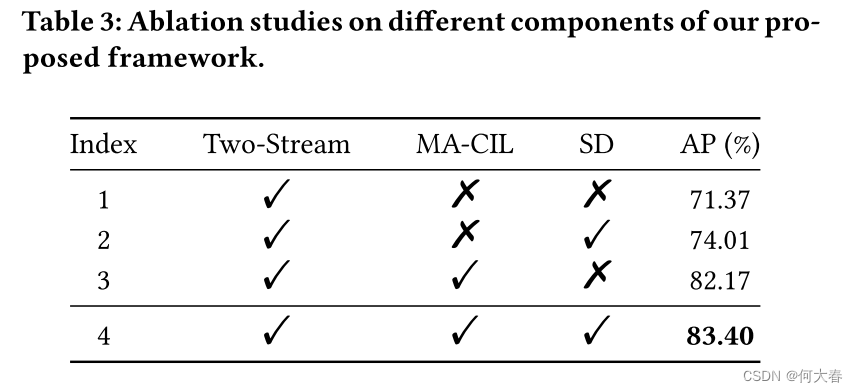

5.3 Ensemble Results

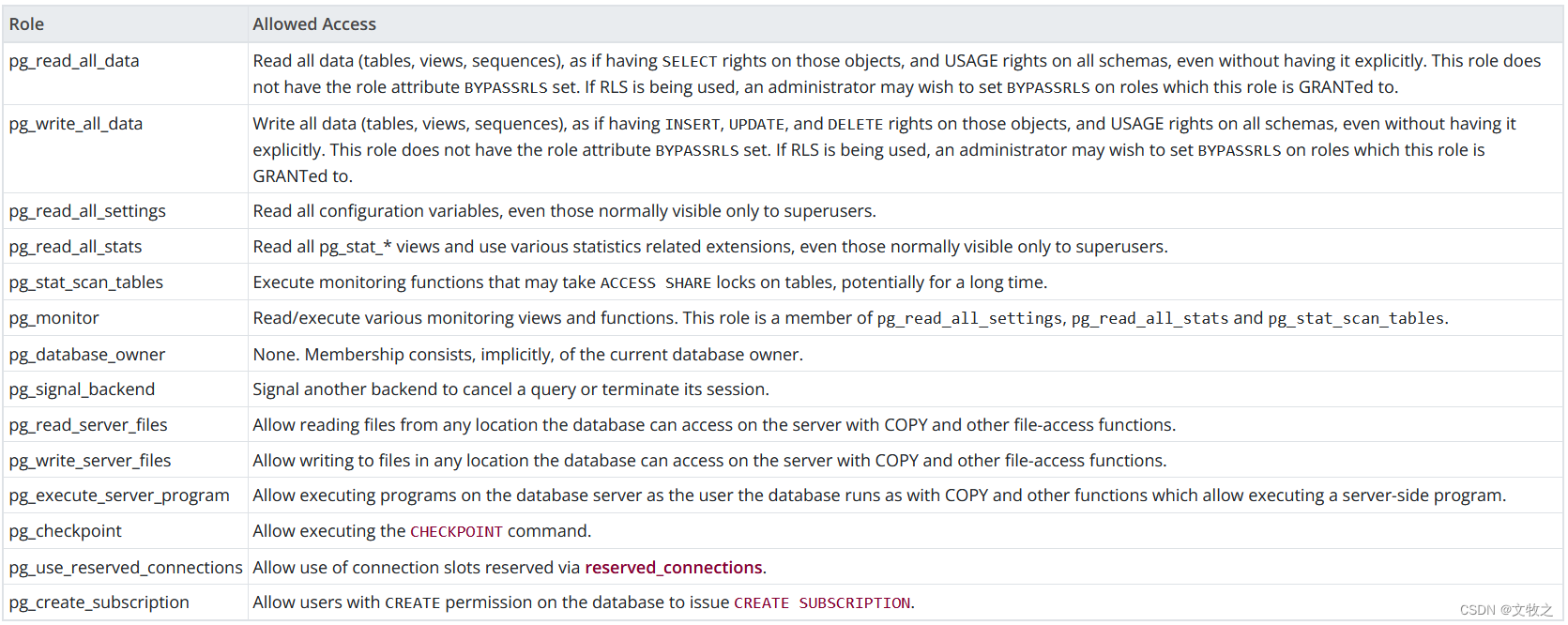

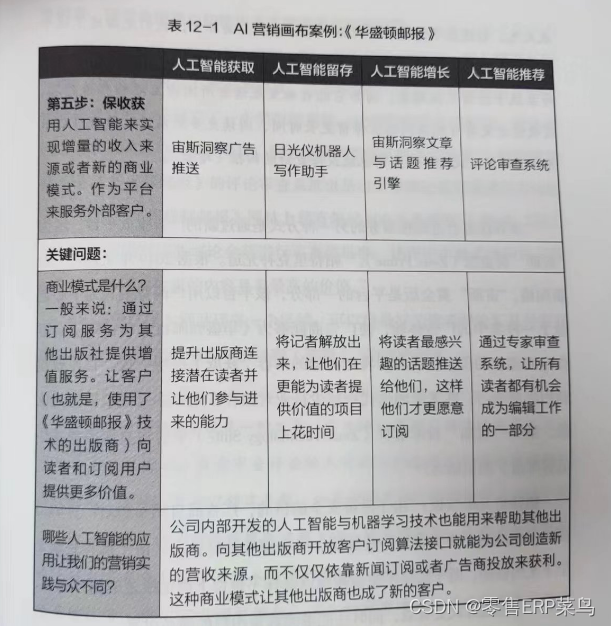

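This part replicates the method of "Snapshot Ensembles: Train 1, Get M for Free" (ICLR-2017).

Source: the post "Optimizers: Snapshot Ensembles".

I am not clear on the exact experimental details. Does N = 16, M = 3 mean 200 epochs in total with 16 restart cycles, from which 3 models are selected and averaged? Or does it mean 16 × 200 epochs were run, with M models selected and averaged from each run (200 epochs)?

M = 3: snapshots at epochs 30, 70, 150

M = 2: snapshots at epochs 70, 150

M = 1: snapshot at epoch 150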
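The snapshot epochs listed above are consistent with taking the last M cycle ends of a schedule with T_0 = 10 and T_mult = 2 over 200 epochs. The sketch below checks that arithmetic and shows one plausible way to combine snapshots, by averaging their predicted class probabilities. The helper names are hypothetical and the averaging rule is an assumption on my part, not a detail confirmed by this post.

```python
def restart_epochs(t_0, t_mult, total_epochs):
    """Epochs at which a cycle ends, i.e. where the learning rate has reached its minimum."""
    ends, t_i, epoch = [], t_0, 0
    while epoch + t_i <= total_epochs:
        epoch += t_i
        ends.append(epoch)
        t_i *= t_mult
    return ends


def average_predictions(prob_lists):
    """Average the class probabilities that several snapshots assign to one sample."""
    m = len(prob_lists)
    return [sum(p[c] for p in prob_lists) / m for c in range(len(prob_lists[0]))]


cycle_ends = restart_epochs(t_0=10, t_mult=2, total_epochs=200)
last_three = cycle_ends[-3:]   # candidate snapshots for M = 3
# Two hypothetical snapshots voting on a 2-class sample.
ensemble = average_predictions([[0.5, 0.5], [1.0, 0.0]])
```

Under these assumptions a single 200-epoch run yields four cycle ends, and the M most recent ones serve as the ensemble members.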

5.4 Experiments on a Dataset of EEG Recordings

5.5 Preliminary Experiments on a downsampled ImageNet Dataset

our downsampled ImageNet contains exactly the same images from 1000 classes as the original ImageNet but resized with box downsampling to 32 × 32 pixels.
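Box downsampling replaces each block of source pixels with its average. The sketch below illustrates the idea on a 2D grayscale image stored as nested lists. It is a simplification that only handles integer scale factors; the authors' actual preprocessing (image library, handling of non-integer ratios, color channels) is not described in this post, so none of that is reproduced here.

```python
def box_downsample(image, factor):
    """Average each non-overlapping factor x factor block of a 2D grayscale image."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("this sketch only handles sizes divisible by the factor")
    out = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            block = [image[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Toy 4x4 image reduced to 2x2.
small = box_downsample([[1, 3, 5, 7],
                        [1, 3, 5, 7],
                        [2, 2, 6, 6],
                        [2, 2, 6, 6]], factor=2)
```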

6 Conclusion(own) / Future work

-

实战中 T i T_i Ti 怎么设计比较好,是设置成最大 epoch 吗?还是多 restart 几次, T m u l T_{mul} Tmul 是不是大于 1 比等于 1 效果好?

-

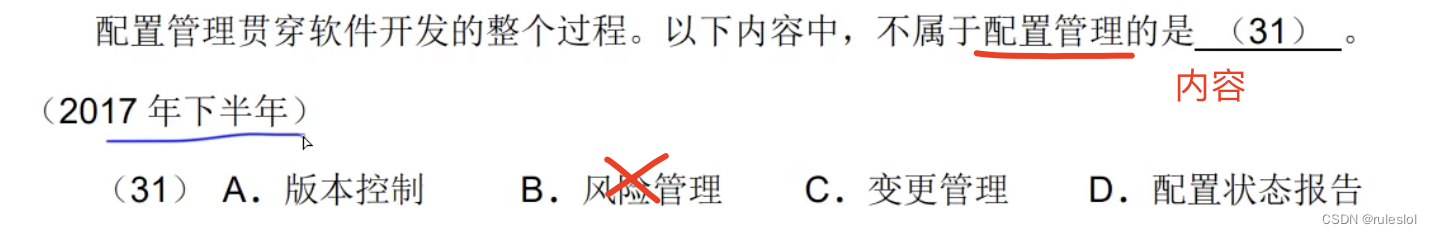

Restart techniques are common in gradient-free optimization to deal with multimodal functions

-

Stochastic subGradient Descent with restarts can achieve a linear convergence rate for a class of non-smooth and non-strongly convex optimization problems

-

Cyclic Learning rate和SGDR-学习率调整策略论文两篇

可以将 SGDR 称为hard restart,因为每次循环开始时学习率都是断崖式增加的,相反,CLR应该称为soft restart

-

理解深度学习中的学习率及多种选择策略

-

什么是ill-conditioning 对SGD有什么影响? - Martin Tan的回答 - 知乎

https://www.zhihu.com/question/56977045/answer/151137770

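- Niching

  What is a niching scheme?

  Niching methods:

  Niche: a concept borrowed from biology that refers to the living environment suited to a particular setting. In the course of evolution, organisms generally live with members of their own species and reproduce together. For example, tropical fish cannot survive in colder regions, and polar bears cannot survive in the tropics. Distilled into an optimization technique, the key operation is: when the Hamming distance between two individuals is smaller than a preset value (called the niche distance), penalize the individual with the lower fitness.

  Hamming distance: in information coding, the number of positions at which two valid codewords differ is called the code distance, also known as the Hamming distance. For example, 10101 and 00110 differ in the first, fourth, and fifth positions, so their Hamming distance is 3.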
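The Hamming distance example and the niching rule can be checked with a short sketch. `hamming_distance` follows the definition directly. `apply_niche_penalty` is a hypothetical helper: resetting the weaker individual's fitness to a fixed `penalty` value is just one simple way to penalize it, chosen here for illustration.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length codes differ."""
    if len(a) != len(b):
        raise ValueError("codes must have the same length")
    return sum(ca != cb for ca, cb in zip(a, b))


def apply_niche_penalty(individuals, fitness, niche_distance, penalty=0.0):
    """For each pair closer than niche_distance, penalize the one with lower fitness."""
    penalized = list(fitness)
    for i in range(len(individuals)):
        for j in range(i + 1, len(individuals)):
            if hamming_distance(individuals[i], individuals[j]) < niche_distance:
                loser = i if fitness[i] < fitness[j] else j
                penalized[loser] = penalty
    return penalized


distance = hamming_distance("10101", "00110")
# The first two individuals differ in one bit, so the weaker of them is penalized.
new_fitness = apply_niche_penalty(["10101", "10100", "00010"], [5.0, 3.0, 4.0], niche_distance=2)
```

Penalizing near-duplicates keeps the population spread over several niches, which is what lets niching methods track multiple optima at once.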
