WACV-2017

IEEE Winter Conference on Applications of Computer Vision

Table of Contents

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 Method

- 5 Experiments

- 5.1 Datasets and Metrics

- 5.2 CIFAR-10 and CIFAR-100

- 5.3 ImageNet

- 6 Conclusion(own) / Future work

1 Background and Motivation

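When training a neural network, the learning rate is one of the most important hyperparameters.

Conventional schedules decrease the learning rate in one way or another as training progresses. The author takes a different route and proposes the cyclical learning rate (CLR), which rises and falls periodically so that the network does not get stuck in a local optimum or at a saddle point (difficulty in minimizing the loss arises from saddle points rather than poor local minima; saddle points have small gradients that slow the learning process).

Convergence is faster, but the final result is not necessarily better than with a step learning rate.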

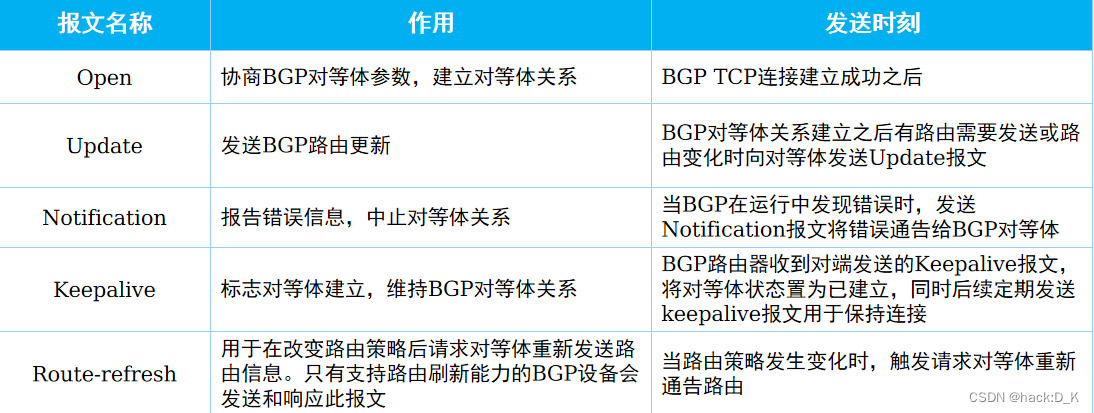

2 Related Work

- Adaptive learning rates

AdaGrad / RMSProp / AdaDelta / AdaSecant

CLR can be combined with adaptive learning rates

3 Advantages / Contributions

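Proposes CLR, a methodology for setting the learning rate that avoids the extra cost of searching to find the best values and schedule.

Shows that letting the learning rate rise and fall helps both convergence speed and final accuracy.

Validates CLR on publicly available models and datasets.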

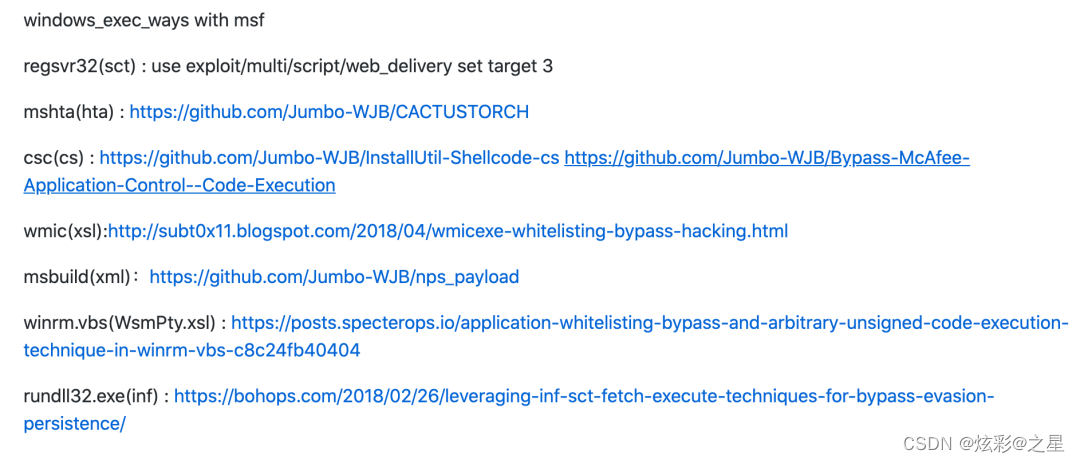

4 Method

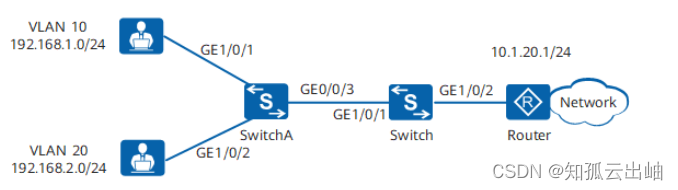

Possible shapes for the learning rate cycle

- a triangular window (linear)

- a Welch window (parabolic)

- a Hann window (sinusoidal)

The author chooses the simplest one, triangular.
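To make the three shapes concrete, here is a minimal sketch using the textbook definitions of each window, not code from the paper. The function name `window_weight` and the convention that `x` is the distance from the cycle peak (0 at the peak, 1 at the cycle boundary) are my own assumptions.

```python
import math

def window_weight(x, shape="triangular"):
    """Weight in [0, 1] applied to (max_lr - base_lr).

    x is the distance from the peak of the cycle as a fraction of
    stepsize: 0 at the peak, 1 at the start/end of the cycle.
    (Hypothetical helper, for illustration only.)
    """
    if shape == "triangular":   # linear ramp
        return 1.0 - x
    if shape == "welch":        # parabolic
        return 1.0 - x * x
    if shape == "hann":         # sinusoidal (raised cosine)
        return 0.5 * (1.0 + math.cos(math.pi * x))
    raise ValueError(f"unknown shape: {shape}")
```

All three reach 1 at the peak and 0 at the cycle boundary; they differ only in how the learning rate travels in between.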

Hyperparameters: stepsize (half the period or cycle length), base_lr, max_lr
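With these three hyperparameters, the triangular policy can be written in a few lines. This is a sketch in plain Python; the function name `triangular_lr` is my own, and `iteration` is assumed to count mini-batch updates starting from 0.

```python
import math

def triangular_lr(iteration, stepsize, base_lr, max_lr):
    """Learning rate at `iteration` under the triangular policy."""
    # Cycle index starting at 1; one full cycle spans 2 * stepsize iterations.
    cycle = math.floor(1 + iteration / (2 * stepsize))
    # x goes 1 -> 0 -> 1 within a cycle: distance from the peak, in stepsizes.
    x = abs(iteration / stepsize - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)
```

The rate starts at `base_lr`, reaches `max_lr` after `stepsize` iterations, and is back at `base_lr` after `2 * stepsize` iterations.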

(1)How can one estimate a good value for the cycle length?

The author's recommendation for stepsize:

it is good to set stepsize equal to 2-10 times the number of iterations in an epoch

That is, a half cycle of 2 to 10 epochs.
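As a quick sanity check on the arithmetic, here is a small sketch that converts the rule of thumb into an iteration count. The helper name `stepsize_from_epochs` and its parameters are hypothetical; the dataset size and batch size in the usage note are illustrative values.

```python
import math

def stepsize_from_epochs(num_train_samples, batch_size, epochs_per_half_cycle):
    """stepsize (in iterations) for a half cycle lasting the given number of epochs."""
    # One epoch = number of mini-batches needed to see the whole training set once.
    iterations_per_epoch = math.ceil(num_train_samples / batch_size)
    return epochs_per_half_cycle * iterations_per_epoch
```

For example, with 50,000 training images and a batch size of 100, one epoch is 500 iterations, so the 2-10 epoch rule gives a stepsize between 1,000 and 5,000 iterations.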

(2)How can one estimate reasonable minimum and maximum boundary values?

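The author's procedure: let the learning rate increase steadily over one short run, which can be as long as one stepsize, watch how the accuracy changes, and pick the learning rate range from that curve (Set both the stepsize and max iter to the same number of iterations).

In the figure above, base lr = 0.001 and max lr = 0.006.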

a single LR range test provides both a good LR value and a good range
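The LR range test can be sketched as a linear ramp plus a rule for reading the bounds off the accuracy curve. Both function names below are hypothetical, and the selection rule in `pick_bounds` is a crude heuristic of my own (the paper reads the bounds off a plot by eye), so treat it as an illustration only.

```python
def range_test_lr(iteration, num_iterations, min_lr, max_lr):
    """Linearly increase the learning rate from min_lr to max_lr over the run."""
    fraction = iteration / max(1, num_iterations - 1)
    return min_lr + (max_lr - min_lr) * fraction

def pick_bounds(lrs, accuracies, min_gain=0.01):
    """Pick (base_lr, max_lr) from a recorded range test.

    Assumed heuristic, not from the paper:
      base_lr = first lr where accuracy has risen by min_gain over the start
      max_lr  = lr at which accuracy peaks
    """
    start = accuracies[0]
    base_lr = next(
        (lr for lr, acc in zip(lrs, accuracies) if acc - start >= min_gain),
        lrs[0],
    )
    peak_index = max(range(len(accuracies)), key=accuracies.__getitem__)
    return base_lr, lrs[peak_index]
```

In practice one would record the validation accuracy every few iterations while training with `range_test_lr`, then inspect the curve before trusting any automatic choice.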

Building on triangular, the author also derives two more schedules

- triangular2

the same as the triangular policy except the learning rate difference is cut in half at the end of each cycle

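With triangular, every cycle has the same min and max. With triangular2, the difference max_lr - base_lr is halved after each cycle, so the peak drops from cycle to cycle while base_lr and stepsize stay the same.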
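Reading "the learning rate difference is cut in half at the end of each cycle" literally gives the sketch below. It assumes `base_lr` and `stepsize` stay fixed and only the amplitude shrinks; the function name `triangular2_lr` is my own.

```python
import math

def triangular2_lr(iteration, stepsize, base_lr, max_lr):
    """Triangular policy whose amplitude is halved after every full cycle."""
    cycle = math.floor(1 + iteration / (2 * stepsize))
    x = abs(iteration / stepsize - 2 * cycle + 1)
    # Amplitude scale: 1 in cycle 1, 1/2 in cycle 2, 1/4 in cycle 3, ...
    scale = 1.0 / (2 ** (cycle - 1))
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x) * scale
```

With base_lr = 0.001 and max_lr = 0.006, the peaks of the first three cycles are 0.006, 0.0035 and 0.00225.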

- exp_range

The min and max learning rates both decline as training proceeds, by the factor

$gamma^{iteration}$, with gamma set to 0.99994 in the paper
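A minimal sketch of exp_range, taking the description at face value and shrinking both boundaries by gamma^iteration. The function name `exp_range_lr` is my own. Note that some open-source CLR implementations instead scale only the difference max_lr - base_lr and leave base_lr fixed, so check which variant your framework uses.

```python
import math

def exp_range_lr(iteration, stepsize, base_lr, max_lr, gamma=0.99994):
    """Triangular cycle between boundaries that both decay by gamma**iteration."""
    cycle = math.floor(1 + iteration / (2 * stepsize))
    x = abs(iteration / stepsize - 2 * cycle + 1)
    decay = gamma ** iteration
    lower = base_lr * decay   # decayed minimum boundary
    upper = max_lr * decay    # decayed maximum boundary
    return lower + (upper - lower) * max(0.0, 1.0 - x)
```

Because gamma is so close to 1, the decay is barely visible within one cycle but adds up over tens of thousands of iterations.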

5 Experiments

5.1 Datasets and Metrics

- CIFAR-10: top-1 error, accuracy

- CIFAR-100: top-1 error

- ImageNet: top-1 / top-5 error

5.2 CIFAR-10 and CIFAR-100

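On CIFAR-10 the results are decent: convergence is both faster and better.

Comparing an exponential learning rate with the author's exp range

CLR is indeed ahead on CIFAR-10.

Comparison with different optimizers: adaptive learning rate methods with / without CLR

Nesterov / ADAM / RMSprop all fall short of the fixed learning rate; "fixed" here is presumably meant relative to the cyclical schedule.

The curves keep fluctuating, which makes sense since the learning rate itself changes periodically.

Next, the effect on different architectures: ResNets, Stochastic Depth, and DenseNets

CLR brings an improvement.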

5.3 ImageNet

There is still a small improvement.

(1)AlexNet

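The min and max learning rates are first found with the LR range test; stepsize is 6 epochs.

There is an improvement, but the accuracy fluctuates more overall, which is understandable (exp range policy do oscillate around the exp policy accuracies).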

(2)GoogLeNet/Inception Architecture

Again, the LR range test is used first to find the min and max learning rates.

6 Conclusion(own) / Future work

- future work

- equivalent policies work for training different architectures, such as recurrent neural networks

- theoretical analysis would provide an improved understanding of these methods

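- This is the second single-author paper I have come across; the previous one was Xception at CVPR, by the creator of Keras. It is the first time I have seen this author's affiliation, though (○´・д・)ノ

- The most inspiring part is the method for finding the min and max learning rate: the LR range test

- The other tables and figures show improvements, but in table 3 the comparison against other adaptive learning rate methods, with / without CLR, is rather weak

- I wonder how it would work combined with $T_{multi}$ from SGDR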
