关于

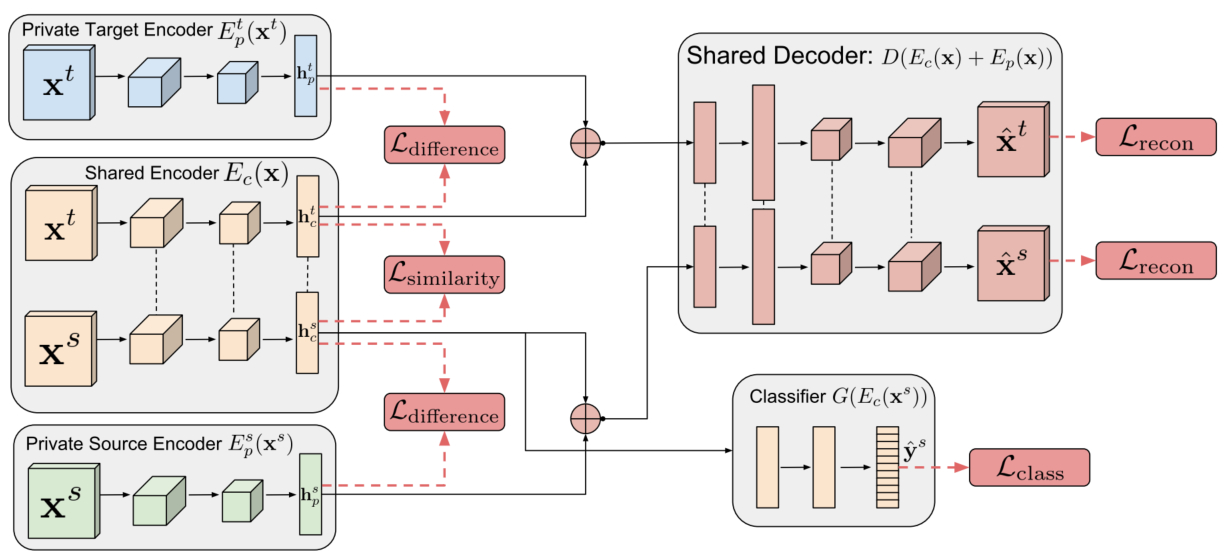

大规模数据收集和注释的成本通常使得将机器学习算法应用于新任务或数据集变得异常昂贵。规避这一成本的一种方法是在合成数据上训练模型,其中自动提供注释。尽管它们很有吸引力,但此类模型通常无法从合成图像推广到真实图像,因此需要域适应算法来操纵这些模型,然后才能成功应用。现有的方法要么侧重于将表示从一个域映射到另一个域,要么侧重于学习提取对于提取它们的域而言不变的特征。然而,通过只关注在两个域之间创建映射或共享表示,他们忽略了每个域的单独特征。域分离网络可以实现对每个域的独特之处进行特征建模,,同时进行模型域不变特征的提取。

参考文章: https://arxiv.org/abs/1608.06019

工具

方法实现

数据集定义

import torch.utils.data as data

from PIL import Image

import os

class GetLoader(data.Dataset):

def __init__(self, data_root, data_list, transform=None):

self.root = data_root

self.transform = transform

f = open(data_list, 'r')

data_list = f.readlines()

f.close()

self.n_data = len(data_list)

self.img_paths = []

self.img_labels = []

for data in data_list:

self.img_paths.append(data[:-3])

self.img_labels.append(data[-2])

def __getitem__(self, item):

img_paths, labels = self.img_paths[item], self.img_labels[item]

imgs = Image.open(os.path.join(self.root, img_paths)).convert('RGB')

if self.transform is not None:

imgs = self.transform(imgs)

labels = int(labels)

return imgs, labels

def __len__(self):

return self.n_data模型搭建

import torch.nn as nn

from functions import ReverseLayerF

class DSN(nn.Module):

def __init__(self, code_size=100, n_class=10):

super(DSN, self).__init__()

self.code_size = code_size

##########################################

# private source encoder

##########################################

self.source_encoder_conv = nn.Sequential()

self.source_encoder_conv.add_module('conv_pse1', nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5,

padding=2))

self.source_encoder_conv.add_module('ac_pse1', nn.ReLU(True))

self.source_encoder_conv.add_module('pool_pse1', nn.MaxPool2d(kernel_size=2, stride=2))

self.source_encoder_conv.add_module('conv_pse2', nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5,

padding=2))

self.source_encoder_conv.add_module('ac_pse2', nn.ReLU(True))

self.source_encoder_conv.add_module('pool_pse2', nn.MaxPool2d(kernel_size=2, stride=2))

self.source_encoder_fc = nn.Sequential()

self.source_encoder_fc.add_module('fc_pse3', nn.Linear(in_features=7 * 7 * 64, out_features=code_size))

self.source_encoder_fc.add_module('ac_pse3', nn.ReLU(True))

#########################################

# private target encoder

#########################################

self.target_encoder_conv = nn.Sequential()

self.target_encoder_conv.add_module('conv_pte1', nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5,

padding=2))

self.target_encoder_conv.add_module('ac_pte1', nn.ReLU(True))

self.target_encoder_conv.add_module('pool_pte1', nn.MaxPool2d(kernel_size=2, stride=2))

self.target_encoder_conv.add_module('conv_pte2', nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5,

padding=2))

self.target_encoder_conv.add_module('ac_pte2', nn.ReLU(True))

self.target_encoder_conv.add_module('pool_pte2', nn.MaxPool2d(kernel_size=2, stride=2))

self.target_encoder_fc = nn.Sequential()

self.target_encoder_fc.add_module('fc_pte3', nn.Linear(in_features=7 * 7 * 64, out_features=code_size))

self.target_encoder_fc.add_module('ac_pte3', nn.ReLU(True))

################################

# shared encoder (dann_mnist)

################################

self.shared_encoder_conv = nn.Sequential()

self.shared_encoder_conv.add_module('conv_se1', nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5,

padding=2))

self.shared_encoder_conv.add_module('ac_se1', nn.ReLU(True))

self.shared_encoder_conv.add_module('pool_se1', nn.MaxPool2d(kernel_size=2, stride=2))

self.shared_encoder_conv.add_module('conv_se2', nn.Conv2d(in_channels=32, out_channels=48, kernel_size=5,

padding=2))

self.shared_encoder_conv.add_module('ac_se2', nn.ReLU(True))

self.shared_encoder_conv.add_module('pool_se2', nn.MaxPool2d(kernel_size=2, stride=2))

self.shared_encoder_fc = nn.Sequential()

self.shared_encoder_fc.add_module('fc_se3', nn.Linear(in_features=7 * 7 * 48, out_features=code_size))

self.shared_encoder_fc.add_module('ac_se3', nn.ReLU(True))

# classify 10 numbers

self.shared_encoder_pred_class = nn.Sequential()

self.shared_encoder_pred_class.add_module('fc_se4', nn.Linear(in_features=code_size, out_features=100))

self.shared_encoder_pred_class.add_module('relu_se4', nn.ReLU(True))

self.shared_encoder_pred_class.add_module('fc_se5', nn.Linear(in_features=100, out_features=n_class))

self.shared_encoder_pred_domain = nn.Sequential()

self.shared_encoder_pred_domain.add_module('fc_se6', nn.Linear(in_features=100, out_features=100))

self.shared_encoder_pred_domain.add_module('relu_se6', nn.ReLU(True))

# classify two domain

self.shared_encoder_pred_domain.add_module('fc_se7', nn.Linear(in_features=100, out_features=2))

######################################

# shared decoder (small decoder)

######################################

self.shared_decoder_fc = nn.Sequential()

self.shared_decoder_fc.add_module('fc_sd1', nn.Linear(in_features=code_size, out_features=588))

self.shared_decoder_fc.add_module('relu_sd1', nn.ReLU(True))

self.shared_decoder_conv = nn.Sequential()

self.shared_decoder_conv.add_module('conv_sd2', nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5,

padding=2))

self.shared_decoder_conv.add_module('relu_sd2', nn.ReLU())

self.shared_decoder_conv.add_module('conv_sd3', nn.Conv2d(in_channels=16, out_channels=16, kernel_size=5,

padding=2))

self.shared_decoder_conv.add_module('relu_sd3', nn.ReLU())

self.shared_decoder_conv.add_module('us_sd4', nn.Upsample(scale_factor=2))

self.shared_decoder_conv.add_module('conv_sd5', nn.Conv2d(in_channels=16, out_channels=16, kernel_size=3,

padding=1))

self.shared_decoder_conv.add_module('relu_sd5', nn.ReLU(True))

self.shared_decoder_conv.add_module('conv_sd6', nn.Conv2d(in_channels=16, out_channels=3, kernel_size=3,

padding=1))

def forward(self, input_data, mode, rec_scheme, p=0.0):

result = []

if mode == 'source':

# source private encoder

private_feat = self.source_encoder_conv(input_data)

private_feat = private_feat.view(-1, 64 * 7 * 7)

private_code = self.source_encoder_fc(private_feat)

elif mode == 'target':

# target private encoder

private_feat = self.target_encoder_conv(input_data)

private_feat = private_feat.view(-1, 64 * 7 * 7)

private_code = self.target_encoder_fc(private_feat)

result.append(private_code)

# shared encoder

shared_feat = self.shared_encoder_conv(input_data)

shared_feat = shared_feat.view(-1, 48 * 7 * 7)

shared_code = self.shared_encoder_fc(shared_feat)

result.append(shared_code)

reversed_shared_code = ReverseLayerF.apply(shared_code, p)

domain_label = self.shared_encoder_pred_domain(reversed_shared_code)

result.append(domain_label)

if mode == 'source':

class_label = self.shared_encoder_pred_class(shared_code)

result.append(class_label)

# shared decoder

if rec_scheme == 'share':

union_code = shared_code

elif rec_scheme == 'all':

union_code = private_code + shared_code

elif rec_scheme == 'private':

union_code = private_code

rec_vec = self.shared_decoder_fc(union_code)

rec_vec = rec_vec.view(-1, 3, 14, 14)

rec_code = self.shared_decoder_conv(rec_vec)

result.append(rec_code)

return result

模型训练

import random

import os

import torch.backends.cudnn as cudnn

import torch.optim as optim

import torch.utils.data

import numpy as np

from torch.autograd import Variable

from torchvision import datasets

from torchvision import transforms

from model_compat import DSN

from data_loader import GetLoader

from functions import SIMSE, DiffLoss, MSE

from test import test

######################

# params #

######################

source_image_root = os.path.join('.', 'dataset', 'mnist')

target_image_root = os.path.join('.', 'dataset', 'mnist_m')

model_root = 'model'

cuda = True

cudnn.benchmark = True

lr = 1e-2

batch_size = 32

image_size = 28

n_epoch = 100

step_decay_weight = 0.95

lr_decay_step = 20000

active_domain_loss_step = 10000

weight_decay = 1e-6

alpha_weight = 0.01

beta_weight = 0.075

gamma_weight = 0.25

momentum = 0.9

manual_seed = random.randint(1, 10000)

random.seed(manual_seed)

torch.manual_seed(manual_seed)

#######################

# load data #

#######################

img_transform = transforms.Compose([

transforms.Resize(image_size),

transforms.ToTensor(),

transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5))

])

dataset_source = datasets.MNIST(

root=source_image_root,

train=True,

transform=img_transform

)

dataloader_source = torch.utils.data.DataLoader(

dataset=dataset_source,

batch_size=batch_size,

shuffle=True,

num_workers=8

)

train_list = os.path.join(target_image_root, 'mnist_m_train_labels.txt')

dataset_target = GetLoader(

data_root=os.path.join(target_image_root, 'mnist_m_train'),

data_list=train_list,

transform=img_transform

)

dataloader_target = torch.utils.data.DataLoader(

dataset=dataset_target,

batch_size=batch_size,

shuffle=True,

num_workers=8

)

#####################

# load model #

#####################

my_net = DSN()

#####################

# setup optimizer #

#####################

def exp_lr_scheduler(optimizer, step, init_lr=lr, lr_decay_step=lr_decay_step, step_decay_weight=step_decay_weight):

# Decay learning rate by a factor of step_decay_weight every lr_decay_step

current_lr = init_lr * (step_decay_weight ** (step / lr_decay_step))

if step % lr_decay_step == 0:

print 'learning rate is set to %f' % current_lr

for param_group in optimizer.param_groups:

param_group['lr'] = current_lr

return optimizer

optimizer = optim.SGD(my_net.parameters(), lr=lr, momentum=momentum, weight_decay=weight_decay)

loss_classification = torch.nn.CrossEntropyLoss()

loss_recon1 = MSE()

loss_recon2 = SIMSE()

loss_diff = DiffLoss()

loss_similarity = torch.nn.CrossEntropyLoss()

if cuda:

my_net = my_net.cuda()

loss_classification = loss_classification.cuda()

loss_recon1 = loss_recon1.cuda()

loss_recon2 = loss_recon2.cuda()

loss_diff = loss_diff.cuda()

loss_similarity = loss_similarity.cuda()

for p in my_net.parameters():

p.requires_grad = True

#############################

# training network #

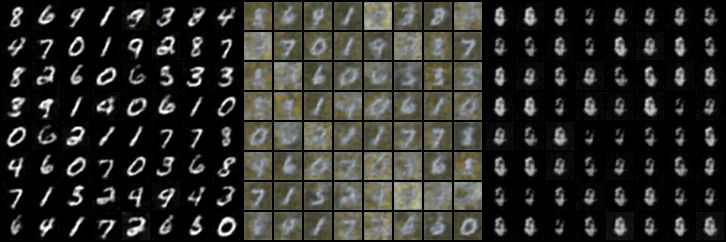

#############################MNIST数据重建/共有部分特征/私有数据特征可视化

MNIST_m数据重建/共有部分特征/私有数据特征可视化

代码获取

相关问题和项目开发,欢迎私信交流和沟通。