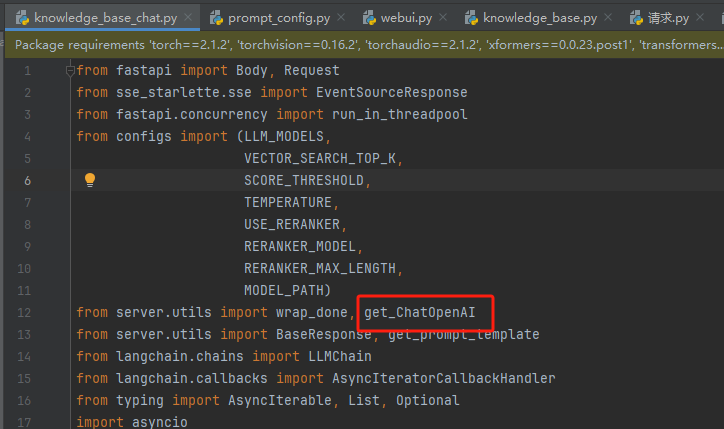

1. Locate the get_ChatOpenAI function used in knowledge_base_chat.py.

2. Hold Ctrl and click the function name to jump to the definition of get_ChatOpenAI.

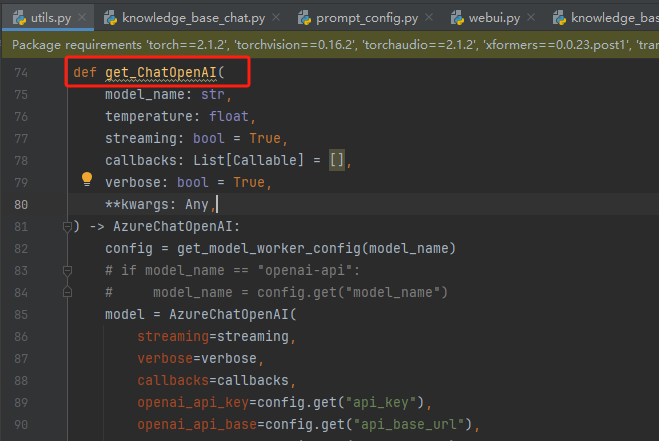

3. Comment out the original get_ChatOpenAI function and replace it with the following:

    # Assumption: the project uses the older langchain package layout.
    # List, Callable and Any are expected to be imported already in this file.
    from langchain.chat_models import AzureChatOpenAI

    def get_ChatOpenAI(
            model_name: str,
            temperature: float,
            streaming: bool = True,
            callbacks: List[Callable] = [],
            verbose: bool = True,
            **kwargs: Any,
    ) -> AzureChatOpenAI:
        # Look up the entry for this model in model_config.py
        config = get_model_worker_config(model_name)
        # if model_name == "openai-api":
        #     model_name = config.get("model_name")
        model = AzureChatOpenAI(
            streaming=streaming,
            verbose=verbose,
            callbacks=callbacks,
            openai_api_key=config.get("api_key"),
            openai_api_base=config.get("api_base_url"),
            # On Azure, "model_name" in the config holds the deployment name
            deployment_name=config.get("model_name"),
            temperature=temperature,
            # openai_proxy=config.get("openai_proxy"),
            openai_api_version="2024-02-01",
            # openai_api_type="azure",
            **kwargs
        )
        return model

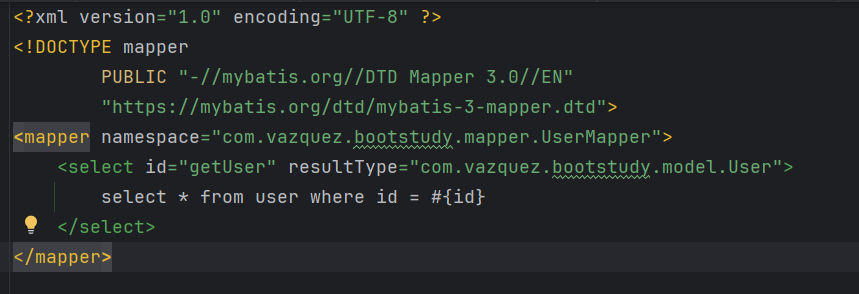

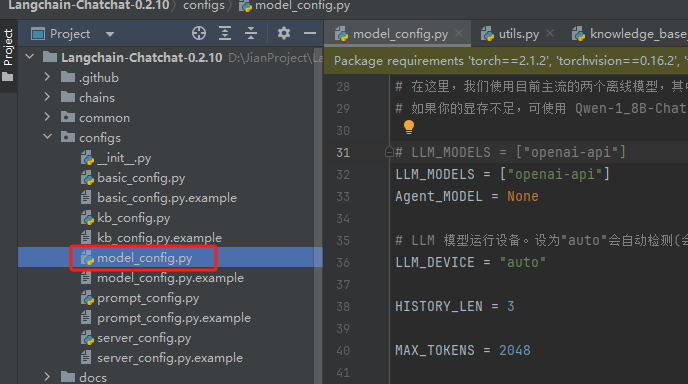
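For orientation, the three Azure-specific arguments (openai_api_base, deployment_name, openai_api_version) together identify the REST endpoint that chat requests are sent to. The sketch below assembles that URL by hand. `azure_chat_url` is a hypothetical helper written for illustration, not part of langchain or this project, and the resource and deployment names in the usage line are placeholders.

```python
def azure_chat_url(api_base: str, deployment: str, api_version: str) -> str:
    """Build the Azure OpenAI chat-completions URL (illustrative helper)."""
    base = api_base.rstrip("/")  # tolerate a trailing slash in the endpoint
    return (
        f"{base}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )


# Placeholder values; substitute your own resource and deployment names.
print(azure_chat_url("https://my-resource.openai.azure.com/", "my-gpt4", "2024-02-01"))
```

If requests come back with a 404, a deployment name that does not match the one shown in the Azure portal is a likely cause worth checking first.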

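4. Locate the model_config.py file.

5. Add the following:

(1) Create a .env file and put in it the Azure OpenAI credentials you obtained from Microsoft (the endpoint and the API key).

(2) Load the .env file by adding the following code to model_config.py (this requires the python-dotenv package):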

    from dotenv import load_dotenv

    load_dotenv()

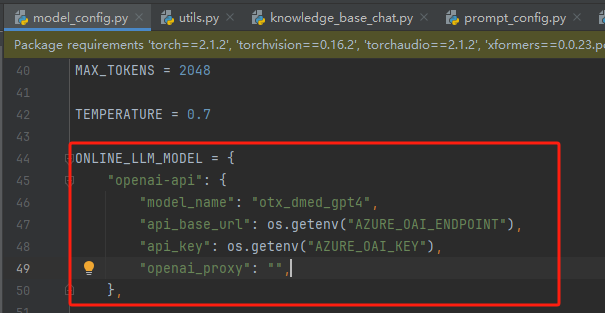

(3) Modify the openai-api entry in model_config.py as follows:

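    # os must be imported in model_config.py for os.getenv to work
    ONLINE_LLM_MODEL = {
        "openai-api": {
            "model_name": "otx_dmed_gpt4",  # Azure deployment name; replace with your own
            "api_base_url": os.getenv("AZURE_OAI_ENDPOINT"),
            "api_key": os.getenv("AZURE_OAI_KEY"),
            "openai_proxy": "",
        },
        # ... remaining entries unchanged ...
    }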
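Because os.getenv returns None when a variable is unset, a typo in the .env file only surfaces when the first request fails. Below is a minimal sketch of an up-front check, assuming the two variable names used in the config entry. `missing_env_vars` is a hypothetical helper, not part of the project.

```python
import os
from typing import Iterable, List, Mapping, Optional


def missing_env_vars(
    names: Iterable[str], env: Optional[Mapping[str, str]] = None
) -> List[str]:
    """Return the names that are unset or empty in the given environment."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


# Demonstration with an explicit mapping instead of the real environment.
example_env = {"AZURE_OAI_ENDPOINT": "https://example.invalid/", "AZURE_OAI_KEY": ""}
print(missing_env_vars(["AZURE_OAI_ENDPOINT", "AZURE_OAI_KEY"], example_env))
# prints ['AZURE_OAI_KEY']
```

In model_config.py the call would go after load_dotenv() and omit the second argument, so that the real process environment is inspected.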

6. Run python startup.py -a; the application will now call Azure OpenAI.