一.概述:

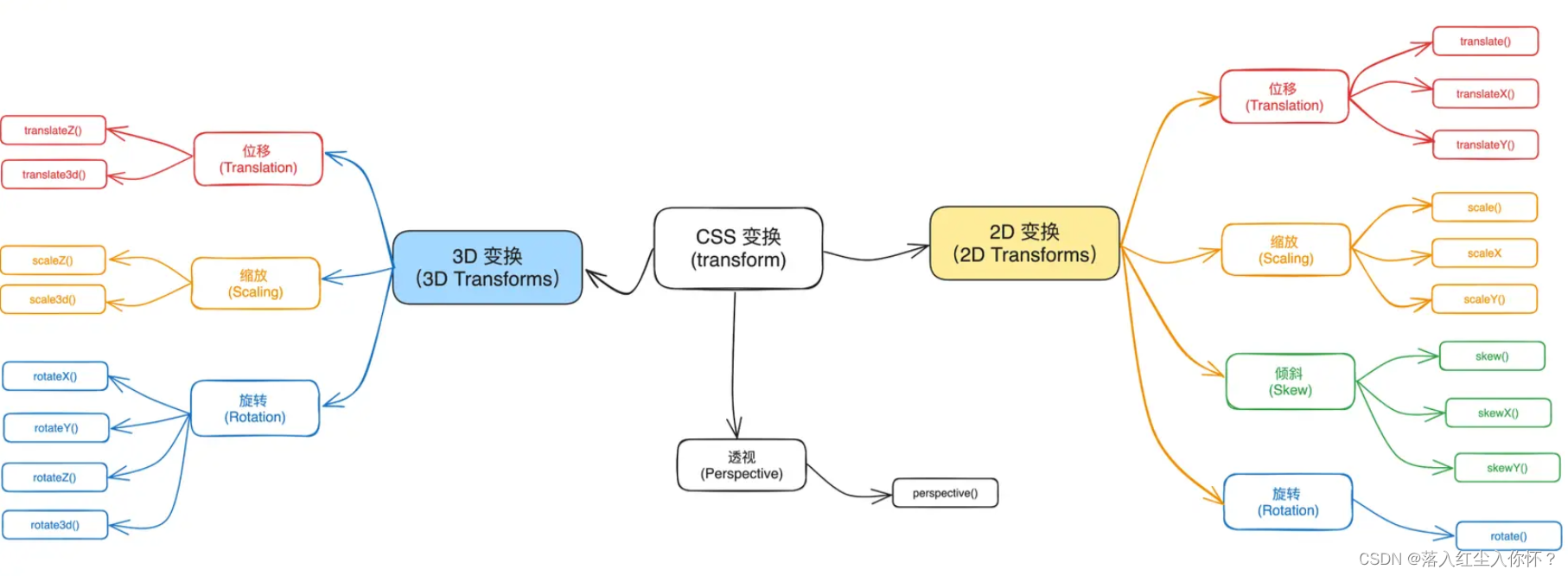

1.lio sam 是一个imu与lidar的紧耦合框架

2.前端:imu主要用来预计分,对后端里程计进行位姿推测得到先验位姿 ,对雷达进行畸变校正,特征点提取得到cloudinfo

3.后端主要有两个模块:模块一通过前端位姿初值进行scan->map的匹配 通过lm算法计算更新后端位姿,将后端odom给到因子图得到最终位姿;模块二就是回环的使用,通过多线程使用icp配准,得到回环因子,将回环因子加入因子图;

二.工程使用中基础常见的问题:

1.启动程序后地图飘的问题

检查imu的内参和外参

检查imu的角速度单位

2.将9轴imu修改为6轴imu 因为一般磁力角稳定好用的imu一般在千元左右

磁力角主要使用在重力对齐和没有关键帧时的匹配初值.可以直接在数据输入时进行角速度积分替换掉磁力角或者是将代码里的imu磁里角相关注释掉

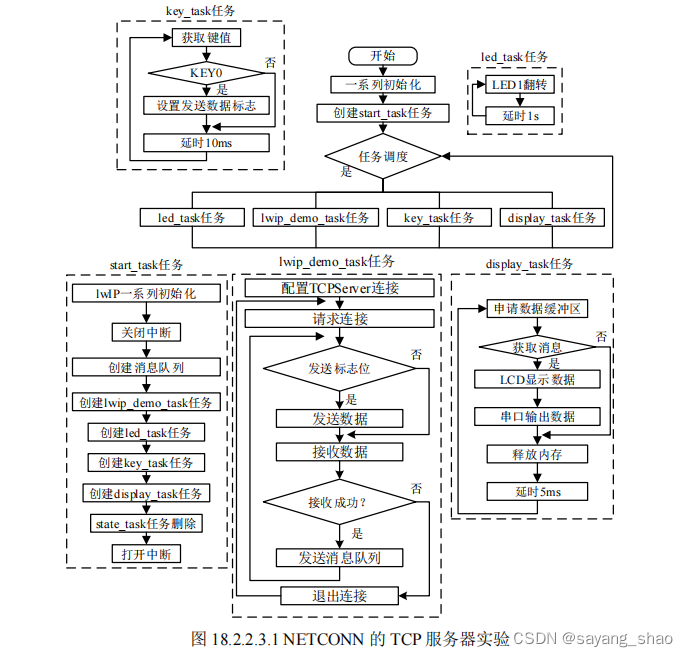

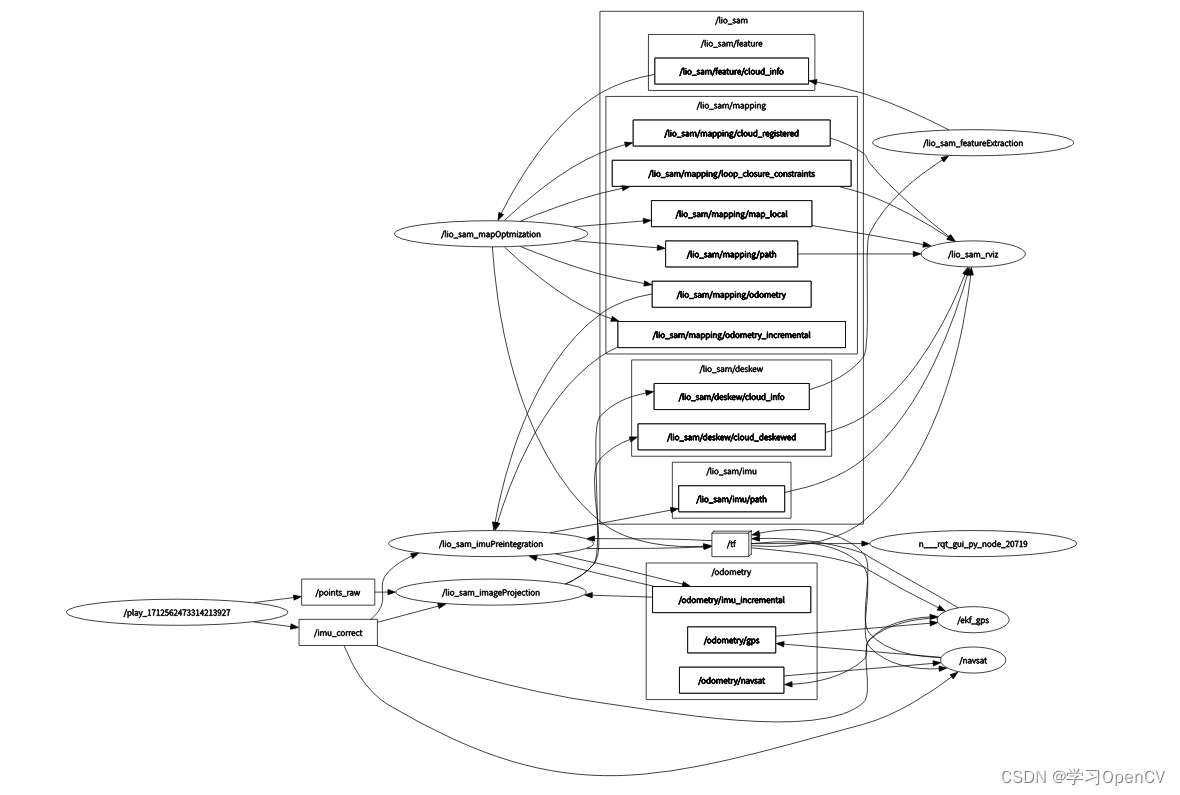

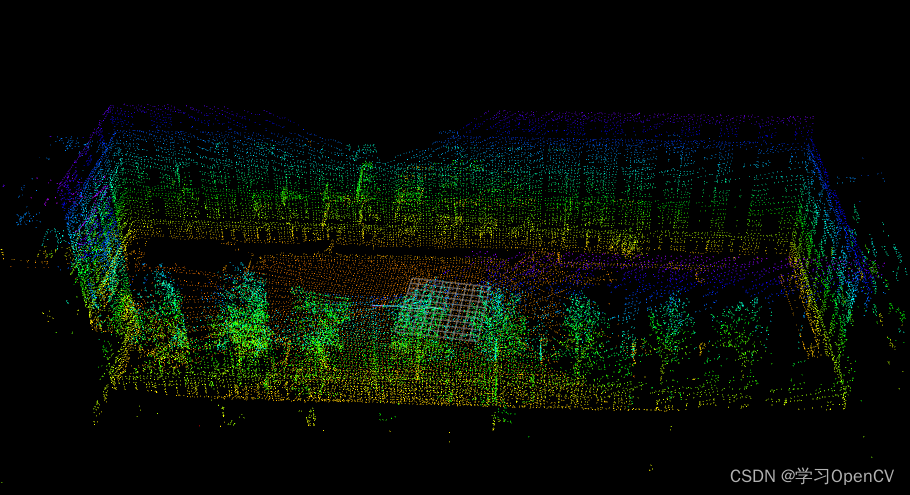

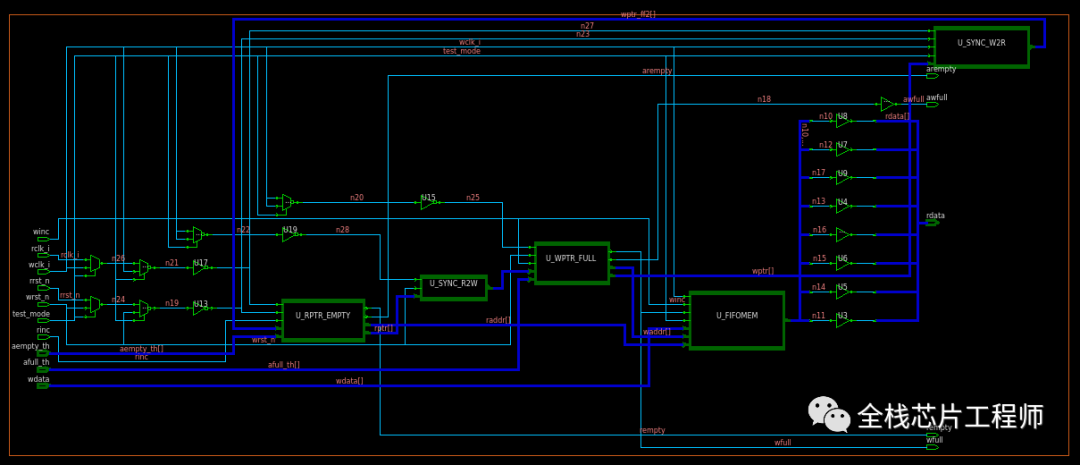

三.节点图,tf树,地图构建截图

1.节点图

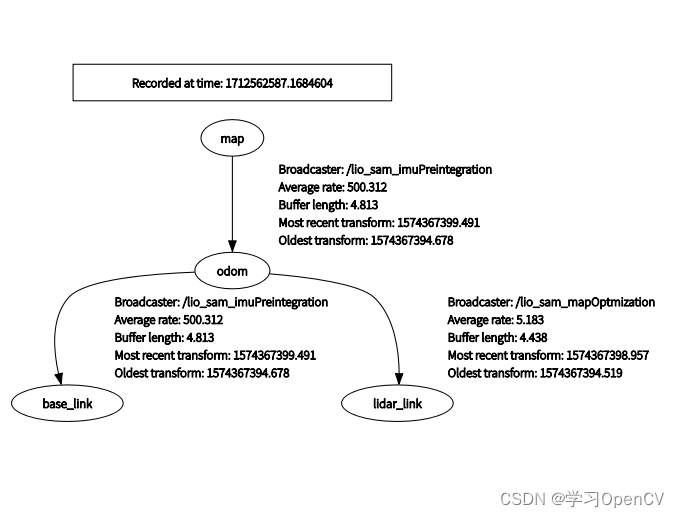

2.tf图

2.tf图

3.构建的地图

四.代码梳理

1.imu预积分,位姿态推测进程imuPreintegration.cpp

1.1两个实现类

IMUPreintegration ImuP; imu预先积分类

TransformFusion TF; tf发布类 ,位姿推测部分

1.2使用gtsam进行预积分,使用后端位姿做参考imuHandler(const sensor_msgs::Imu::ConstPtr& imu_raw)

// 将imu角速度 加速度 传入gtsam 进行imu预积分,并发布odom

void imuHandler(const sensor_msgs::Imu::ConstPtr& imu_raw)

{

std::lock_guard<std::mutex> lock(mtx);

// 首先把imu数据转换到lidar系

sensor_msgs::Imu thisImu = imuConverter(*imu_raw);

//注意这里有两个imu的队列,作用不相同,一个用来执行预积分和位姿的优化,一个用来更新最新的imu状态

imuQueOpt.push_back(thisImu);

imuQueImu.push_back(thisImu);

//如果没有发生过优化就return

if (doneFirstOpt == false)

return;

//当前帧imu的时间戳

double imuTime = ROS_TIME(&thisImu);

// 两帧imu时间差 , 第一次为1/500,之后是两次imuTime间的差

double dt = (lastImuT_imu < 0) ? (1.0 / 500.0) : (imuTime - lastImuT_imu);

// 上一帧imu的时间戳

lastImuT_imu = imuTime;

// 每来一个值 ,就加入预积分状态中

imuIntegratorImu_->integrateMeasurement(gtsam::Vector3(thisImu.linear_acceleration.x, thisImu.linear_acceleration.y, thisImu.linear_acceleration.z),

gtsam::Vector3(thisImu.angular_velocity.x, thisImu.angular_velocity.y, thisImu.angular_velocity.z), dt);

// predict odometry

// 根据这个值预测最新的状态

gtsam::NavState currentState = imuIntegratorImu_->predict(prevStateOdom, prevBiasOdom);

// publish odometry

nav_msgs::Odometry odometry;

odometry.header.stamp = thisImu.header.stamp;

// odom

odometry.header.frame_id = odometryFrame;

odometry.child_frame_id = "odom_imu";

//发布imu里程计 转到lidar系

gtsam::Pose3 imuPose = gtsam::Pose3(currentState.quaternion(), currentState.position());

gtsam::Pose3 lidarPose = imuPose.compose(imu2Lidar);

odometry.pose.pose.position.x = lidarPose.translation().x();

odometry.pose.pose.position.y = lidarPose.translation().y();

odometry.pose.pose.position.z = lidarPose.translation().z();

odometry.pose.pose.orientation.x = lidarPose.rotation().toQuaternion().x();

odometry.pose.pose.orientation.y = lidarPose.rotation().toQuaternion().y();

odometry.pose.pose.orientation.z = lidarPose.rotation().toQuaternion().z();

odometry.pose.pose.orientation.w = lidarPose.rotation().toQuaternion().w();

odometry.twist.twist.linear.x = currentState.velocity().x();

odometry.twist.twist.linear.y = currentState.velocity().y();

odometry.twist.twist.linear.z = currentState.velocity().z();

odometry.twist.twist.angular.x = thisImu.angular_velocity.x + prevBiasOdom.gyroscope().x();

odometry.twist.twist.angular.y = thisImu.angular_velocity.y + prevBiasOdom.gyroscope().y();

odometry.twist.twist.angular.z = thisImu.angular_velocity.z + prevBiasOdom.gyroscope().z();

pubImuOdometry.publish(odometry);

}

1.3预积分初始化 ,提供imu预积分所用的参考值odometryHandler(const nav_msgs::Odometry::ConstPtr& odomMsg)

void odometryHandler(const nav_msgs::Odometry::ConstPtr& odomMsg)

{

std::lock_guard<std::mutex> lock(mtx);

// 当前帧激光里程计的时间戳

double currentCorrectionTime = ROS_TIME(odomMsg);

// make sure we have imu data to integrate

// 确保imu队列中有数据

if (imuQueOpt.empty())

return;

//当前帧激光位姿,来自scan-to-map匹配、因子图优化后的位姿

float p_x = odomMsg->pose.pose.position.x;

float p_y = odomMsg->pose.pose.position.y;

float p_z = odomMsg->pose.pose.position.z;

float r_x = odomMsg->pose.pose.orientation.x;

float r_y = odomMsg->pose.pose.orientation.y;

float r_z = odomMsg->pose.pose.orientation.z;

float r_w = odomMsg->pose.pose.orientation.w;

// 该位姿是否出现退化

bool degenerate = (int)odomMsg->pose.covariance[0] == 1 ? true : false;

// 把位姿转成gtsam的格式

gtsam::Pose3 lidarPose = gtsam::Pose3(gtsam::Rot3::Quaternion(r_w, r_x, r_y, r_z), gtsam::Point3(p_x, p_y, p_z));

// 0 系统初始化 第一帧

if (systemInitialized == false)

{

// 重置ISAM2优化器

resetOptimization();

// pop old IMU message

// 将这个里程计消息之前的imu数据全部扔掉

while (!imuQueOpt.empty())

{

if (ROS_TIME(&imuQueOpt.front()) < currentCorrectionTime - delta_t)

{

lastImuT_opt = ROS_TIME(&imuQueOpt.front());

imuQueOpt.pop_front();

}

else

break;

}

// 将雷达的位姿转移到imu坐标系下

prevPose_ = lidarPose.compose(lidar2Imu);

// 设置其初始位姿和置信度

gtsam::PriorFactor<gtsam::Pose3> priorPose(X(0), prevPose_, priorPoseNoise);

// 约束加入到因子中

graphFactors.add(priorPose);

// initial velocity

// 初始化速度 这里就直接赋0了

prevVel_ = gtsam::Vector3(0, 0, 0);

// 将速度的约束也加入到因子图中

gtsam::PriorFactor<gtsam::Vector3> priorVel(V(0), prevVel_, priorVelNoise);

graphFactors.add(priorVel);

// initial bias

// 初始化零偏

prevBias_ = gtsam::imuBias::ConstantBias();

gtsam::PriorFactor<gtsam::imuBias::ConstantBias> priorBias(B(0), prevBias_, priorBiasNoise);

// 零偏加入到因子图中

graphFactors.add(priorBias);

// add values

// 以上把约束加入完毕 下面开始添加状态量

// 将各个状态量赋成初始值

graphValues.insert(X(0), prevPose_);

graphValues.insert(V(0), prevVel_);

graphValues.insert(B(0), prevBias_);

// optimize once

// 约束和状态量更新进isam优化器

optimizer.update(graphFactors, graphValues);

graphFactors.resize(0);

graphValues.clear();

imuIntegratorImu_->resetIntegrationAndSetBias(prevBias_);

imuIntegratorOpt_->resetIntegrationAndSetBias(prevBias_);

key = 1;

systemInitialized = true;

return;

}

// 每隔100帧激光里程计,重置ISAM2优化器,保证优化效率

// 当isam优化器中加入了较多的约束后,为了避免运算时间变长,就直接清空

if (key == 100)

{

// 取出最新时刻位姿 速度 零偏的协方差矩阵

gtsam::noiseModel::Gaussian::shared_ptr updatedPoseNoise = gtsam::noiseModel::Gaussian::Covariance(optimizer.marginalCovariance(X(key-1)));

gtsam::noiseModel::Gaussian::shared_ptr updatedVelNoise = gtsam::noiseModel::Gaussian::Covariance(optimizer.marginalCovariance(V(key-1)));

gtsam::noiseModel::Gaussian::shared_ptr updatedBiasNoise = gtsam::noiseModel::Gaussian::Covariance(optimizer.marginalCovariance(B(key-1)));

// reset graph

// 复位整个优化问题

resetOptimization();

// add pose

// 将最新的位姿 速度 零偏 以及对应的协方差矩阵加入到因子图中

gtsam::PriorFactor<gtsam::Pose3> priorPose(X(0), prevPose_, updatedPoseNoise);

graphFactors.add(priorPose);

// add velocity

gtsam::PriorFactor<gtsam::Vector3> priorVel(V(0), prevVel_, updatedVelNoise);

graphFactors.add(priorVel);

// add bias

gtsam::PriorFactor<gtsam::imuBias::ConstantBias> priorBias(B(0), prevBias_, updatedBiasNoise);

graphFactors.add(priorBias);

// add values

graphValues.insert(X(0), prevPose_);

graphValues.insert(V(0), prevVel_);

graphValues.insert(B(0), prevBias_);

// optimize once

optimizer.update(graphFactors, graphValues);

graphFactors.resize(0);

graphValues.clear();

key = 1;

}

// 将两帧之间的imu做积分

while (!imuQueOpt.empty())

{

// 提取前一帧与当前帧之间的imu数据,计算预积分

sensor_msgs::Imu *thisImu = &imuQueOpt.front();

double imuTime = ROS_TIME(thisImu);

// 时间小于当前lidar位姿的都取出来

//currentCorrectionTime是当前回调函数收到的激光里程计数据的时间

if (imuTime < currentCorrectionTime - delta_t)

{

// 调用预积分接口 将imu数据送进去处理

double dt = (lastImuT_opt < 0) ? (1.0 / 500.0) : (imuTime - lastImuT_opt);

// imu预积分数据输入:加速度、角速度、dt

// 加入的是这个用来因子图优化的预积分器imuIntegratorOpt_,注意加入了上一步算出的dt

//作者要求的9轴imu数据中欧拉角在本程序文件中没有任何用到,全在地图优化里用到的

imuIntegratorOpt_->integrateMeasurement(

gtsam::Vector3(thisImu->linear_acceleration.x, thisImu->linear_acceleration.y, thisImu->linear_acceleration.z),

gtsam::Vector3(thisImu->angular_velocity.x, thisImu->angular_velocity.y, thisImu->angular_velocity.z), dt);

//在推出一次数据前保存上一个数据的时间戳

lastImuT_opt = imuTime;

// 从队列中删除已经处理的imu数据

imuQueOpt.pop_front();

}

else

break;

}

// add imu factor to graph

// 两帧间imu预积分完成了之后 就将其转化为预积分约束

const gtsam::PreintegratedImuMeasurements& preint_imu = dynamic_cast<const gtsam::PreintegratedImuMeasurements&>(*imuIntegratorOpt_);

// 预积分约束对相邻两帧之间位姿 速度 零偏形成约束

gtsam::ImuFactor imu_factor(X(key - 1), V(key - 1), X(key), V(key), B(key - 1), preint_imu);

// 加入因子图中

graphFactors.add(imu_factor);

// add imu bias between factor

// 零偏的约束, 两帧间零偏不会相差太大 因此使用常量约束

graphFactors.add(gtsam::BetweenFactor<gtsam::imuBias::ConstantBias>(B(key - 1), B(key), gtsam::imuBias::ConstantBias(),

gtsam::noiseModel::Diagonal::Sigmas(sqrt(imuIntegratorOpt_->deltaTij()) * noiseModelBetweenBias)));

// add pose factor

gtsam::Pose3 curPose = lidarPose.compose(lidar2Imu);

// lidar 位姿补偿到imu坐标系下,同时根据是否退化选择不同的置信度,作为这一帧的先验估计

gtsam::PriorFactor<gtsam::Pose3> pose_factor(X(key), curPose, degenerate ? correctionNoise2 : correctionNoise);

// 加入到因子图中

graphFactors.add(pose_factor);

// insert predicted values

// 根据上一时刻的状态 结合与积分结果,对当前状态进行预测

gtsam::NavState propState_ = imuIntegratorOpt_->predict(prevState_, prevBias_);

// 预测量作为初始值加入到因子图中去

graphValues.insert(X(key), propState_.pose());

graphValues.insert(V(key), propState_.v());

graphValues.insert(B(key), prevBias_);

// optimize

// 执行优化

optimizer.update(graphFactors, graphValues);

optimizer.update();

graphFactors.resize(0);

graphValues.clear();

// Overwrite the beginning of the preintegration for the next step.

gtsam::Values result = optimizer.calculateEstimate();

// 获取优化后的当前状态作为当前帧的最佳估计

prevPose_ = result.at<gtsam::Pose3>(X(key));

prevVel_ = result.at<gtsam::Vector3>(V(key));

prevState_ = gtsam::NavState(prevPose_, prevVel_);

prevBias_ = result.at<gtsam::imuBias::ConstantBias>(B(key));

// Reset the optimization preintegration object.

// 当前约束任务已经完成 预积分约束复位 同时需要设置一下零偏 作为下一次积分的先决条件

imuIntegratorOpt_->resetIntegrationAndSetBias(prevBias_);

// check optimization

// 一个简单的失败检测

if (failureDetection(prevVel_, prevBias_))

{

resetParams();

return;

}

// 2. after optiization, re-propagate imu odometry preintegration

// 优化之后根据最新的imu状态进行传输

prevStateOdom = prevState_;

prevBiasOdom = prevBias_;

// first pop imu message older than current correction data

double lastImuQT = -1;

// 首先把lidar帧之前的imu状态全部弹出去

while (!imuQueImu.empty() && ROS_TIME(&imuQueImu.front()) < currentCorrectionTime - delta_t)

{

lastImuQT = ROS_TIME(&imuQueImu.front());

imuQueImu.pop_front();

}

// repropogate

// 如果有新于雷达状态时刻的imu

if (!imuQueImu.empty())

{ // reset bias use the newly optimized bias

// 这个预积分变量复位

imuIntegratorImu_->resetIntegrationAndSetBias(prevBiasOdom);

// integrate imu message from the beginning of this optimization

// 然后把剩下的imu状态重新积分

for (int i = 0; i < (int)imuQueImu.size(); ++i)

{

sensor_msgs::Imu *thisImu = &imuQueImu[i];

double imuTime = ROS_TIME(thisImu);

double dt = (lastImuQT < 0) ? (1.0 / 500.0) :(imuTime - lastImuQT);

imuIntegratorImu_->integrateMeasurement(gtsam::Vector3(thisImu->linear_acceleration.x, thisImu->linear_acceleration.y, thisImu->linear_acceleration.z),

gtsam::Vector3(thisImu->angular_velocity.x, thisImu->angular_velocity.y, thisImu->angular_velocity.z), dt);

lastImuQT = imuTime;

}

}

++key;

doneFirstOpt = true;

}

1.4 tf类下的lidarOdometryHandler(const nav_msgs::Odometry::ConstPtr& odomMsg)

获取后端位姿为位姿推测提供初值

void lidarOdometryHandler(const nav_msgs::Odometry::ConstPtr& odomMsg)

{

std::lock_guard<std::mutex> lock(mtx);

lidarOdomAffine = odom2affine(*odomMsg);

lidarOdomTime = odomMsg->header.stamp.toSec();

}

1.5位姿推测部分 tf发布

void imuOdometryHandler(const nav_msgs::Odometry::ConstPtr& odomMsg)

{

// static tf

static tf::TransformBroadcaster tfMap2Odom;

static tf::Transform map_to_odom = tf::Transform(tf::createQuaternionFromRPY(0, 0, 0), tf::Vector3(0, 0, 0));

// 发送静态tf odom系和map系将它们重合

tfMap2Odom.sendTransform(tf::StampedTransform(map_to_odom, odomMsg->header.stamp, mapFrame, odometryFrame));

std::lock_guard<std::mutex> lock(mtx);

// imu得到的里程计结果送入这个队列中

imuOdomQueue.push_back(*odomMsg);

// get latest odometry (at current IMU stamp)

// 如果没有收到lidar位姿就return

if (lidarOdomTime == -1)

return;

// 弹出时间戳小于最新lidar位姿时刻之前的imu里程计数据

while (!imuOdomQueue.empty())

{

if (imuOdomQueue.front().header.stamp.toSec() <= lidarOdomTime)

imuOdomQueue.pop_front();

else

break;

}

// 计算最新队列里imu里程计记的增量

Eigen::Affine3f imuOdomAffineFront = odom2affine(imuOdomQueue.front());

Eigen::Affine3f imuOdomAffineBack = odom2affine(imuOdomQueue.back());

Eigen::Affine3f imuOdomAffineIncre = imuOdomAffineFront.inverse() * imuOdomAffineBack;

// 增量补偿到lidar的位姿上去,就得到了最新的预测的位姿

Eigen::Affine3f imuOdomAffineLast = lidarOdomAffine * imuOdomAffineIncre;

float x, y, z, roll, pitch, yaw;

// 分解成平移+欧拉角的形式

pcl::getTranslationAndEulerAngles(imuOdomAffineLast, x, y, z, roll, pitch, yaw);

// publish latest odometry

// 发送全局一致位姿的最新位姿

nav_msgs::Odometry laserOdometry = imuOdomQueue.back();

laserOdometry.pose.pose.position.x = x;

laserOdometry.pose.pose.position.y = y;

laserOdometry.pose.pose.position.z = z;

laserOdometry.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(roll, pitch, yaw);

pubImuOdometry.publish(laserOdometry);

// publish tf

// 更新tf

static tf::TransformBroadcaster tfOdom2BaseLink;

tf::Transform tCur;

tf::poseMsgToTF(laserOdometry.pose.pose, tCur);

if(lidarFrame != baselinkFrame)

tCur = tCur * lidar2Baselink;

// 更新odom到baselink的tf

tf::StampedTransform odom_2_baselink = tf::StampedTransform(tCur, odomMsg->header.stamp, odometryFrame, baselinkFrame);

tfOdom2BaseLink.sendTransform(odom_2_baselink);

// publish IMU path

// 发送imu里程计的轨迹

static nav_msgs::Path imuPath;

static double last_path_time = -1;

double imuTime = imuOdomQueue.back().header.stamp.toSec();

// 控制一下更新频率 不超过10hz

if (imuTime - last_path_time > 0.1)

{

last_path_time = imuTime;

geometry_msgs::PoseStamped pose_stamped;

pose_stamped.header.stamp = imuOdomQueue.back().header.stamp;

pose_stamped.header.frame_id = odometryFrame;

pose_stamped.pose = laserOdometry.pose.pose;

// 将最新的位姿送入到轨迹中

imuPath.poses.push_back(pose_stamped);

// 把lidar时间戳之前的轨迹全部擦除

while(!imuPath.poses.empty() && imuPath.poses.front().header.stamp.toSec() < lidarOdomTime - 1.0)

imuPath.poses.erase(imuPath.poses.begin());

// 发布轨迹 这个轨迹实际上是可视化imu预积分节点输出的预测值

if (pubImuPath.getNumSubscribers() != 0)

{

imuPath.header.stamp = imuOdomQueue.back().header.stamp;

imuPath.header.frame_id = odometryFrame;

pubImuPath.publish(imuPath);

}

}

}

2.imageProjection.cpp 点云畸变去除节点

2.1imu数据的接收

void imuHandler(const sensor_msgs::Imu::ConstPtr &imuMsg)

{

// 将imu数据转换到lidar坐标系

sensor_msgs::Imu thisImu = imuConverter(*imuMsg);

// 加一个线程锁 将imu数据保存进队列

std::lock_guard<std::mutex> lock1(imuLock);

imuQueue.push_back(thisImu);

// debug IMU data

cout << std::setprecision(6);

cout << "IMU acc: " << endl;

cout << "x: " << thisImu.linear_acceleration.x << ", y: " << thisImu.linear_acceleration.y << ", z: " << thisImu.linear_acceleration.z << endl;

cout << "IMU gyro: " << endl;

cout << "x: " << thisImu.angular_velocity.x << ", y: " << thisImu.angular_velocity.y << ", z: " << thisImu.angular_velocity.z << endl;

double imuRoll, imuPitch, imuYaw;

tf::Quaternion orientation;

tf::quaternionMsgToTF(thisImu.orientation, orientation);

tf::Matrix3x3(orientation).getRPY(imuRoll, imuPitch, imuYaw);

cout << "IMU roll pitch yaw: " << endl;

cout << "roll: " << imuRoll * 180 / M_PI << ", pitch: " << imuPitch * 180 / M_PI << ", yaw: " << imuYaw * 180 / M_PI << endl

<< endl;

}

2.2前端里成计的接收

void odometryHandler(const nav_msgs::Odometry::ConstPtr &odometryMsg)

{

std::lock_guard<std::mutex> lock2(odoLock);

odomQueue.push_back(*odometryMsg);

}

2.3原始点云的接收

// 原始点云处理

void cloudHandler(const sensor_msgs::PointCloud2ConstPtr &laserCloudMsg)

{

// 检查点云数据格式

if (!cachePointCloud(laserCloudMsg))

return;

if (!deskewInfo())

return;

projectPointCloud();

// 提取有效点

cloudExtraction();

// 发布有效点

publishClouds();

resetParameters();

}

2.4点云数据格式检测

bool cachePointCloud(const sensor_msgs::PointCloud2ConstPtr &laserCloudMsg)

{

// 点云数据保存进队列

cloudQueue.push_back(*laserCloudMsg);

// 确保队列里大于2帧点云数据

if (cloudQueue.size() <= 2)

return false;

// 缓存了足够多的点云之后 ,将首个点云转存

currentCloudMsg = std::move(cloudQueue.front());

// 将首个点云从队列中扔掉

cloudQueue.pop_front();

if (sensor == SensorType::VELODYNE || sensor == SensorType::LIVOX)

{

// 转换点云数据格式

pcl::moveFromROSMsg(currentCloudMsg, *laserCloudIn);

}

else if (sensor == SensorType::OUSTER)

{

pcl::moveFromROSMsg(currentCloudMsg, *tmpOusterCloudIn);

laserCloudIn->points.resize(tmpOusterCloudIn->size());

laserCloudIn->is_dense = tmpOusterCloudIn->is_dense;

for (size_t i = 0; i < tmpOusterCloudIn->size(); i++)

{

auto &src = tmpOusterCloudIn->points[i];

auto &dst = laserCloudIn->points[i];

dst.x = src.x;

dst.y = src.y;

dst.z = src.z;

dst.intensity = src.intensity;

dst.ring = src.ring;

dst.time = src.t * 1e-9f;

}

}

else

{

ROS_ERROR_STREAM("传感器数据类型参数设置错误: " << int(sensor));

ros::shutdown();

}

// 时间戳

cloudHeader = currentCloudMsg.header;

timeScanCur = cloudHeader.stamp.toSec();

timeScanEnd = timeScanCur + laserCloudIn->points.back().time;

// is_dense是点云是否是有序排序的标志

if (laserCloudIn->is_dense == false)

{

ROS_ERROR("c点云为设置成有序点云!");

ros::shutdown();

}

// 查看驱动里是否把每个点属于哪一根扫描scan这个信息

static int ringFlag = 0;

if (ringFlag == 0)

{

ringFlag = -1;

for (int i = 0; i < (int)currentCloudMsg.fields.size(); ++i)

{

if (currentCloudMsg.fields[i].name == "ring")

{

ringFlag = 1;

break;

}

}

// 如果没有这个信息就需要像 loam或者lego loam那样手动计算scan id 现在velodyne的驱动都会携带这些信息的

if (ringFlag == -1)

{

ROS_ERROR("b点云没有线束信息!");

ros::shutdown();

}

}

// 同样检查是否有时间戳信息

if (deskewFlag == 0)

{

deskewFlag = -1;

for (auto &field : currentCloudMsg.fields)

{

if (field.name == "time" || field.name == "t")

{

deskewFlag = 1;

break;

}

}

if (deskewFlag == -1)

ROS_WARN("a点云没有时间戳信息!");

}

return true;

}

2.5获取运动补偿信息函数包括lidar帧附近的imu数据和前端里程计数据

// 获取运动补偿所需的信息

bool deskewInfo()

{

std::lock_guard<std::mutex> lock1(imuLock);

std::lock_guard<std::mutex> lock2(odoLock);

// 确保imu的数据覆盖这一帧点云

// imu 时间戳在点云起始前和终止后都存在

if (imuQueue.empty() || imuQueue.front().header.stamp.toSec() > timeScanCur || imuQueue.back().header.stamp.toSec() < timeScanEnd)

{

ROS_DEBUG("imu数据未覆盖scan ...");

return false;

}

// 准备imu补偿的信息

imuDeskewInfo();

// 准备odom补偿的信息

odomDeskewInfo();

return true;

}

2.6imu运动补偿信息

// imu角速度积分出角度

void imuDeskewInfo()

{

cloudInfo.imuAvailable = false;

while (!imuQueue.empty())

{

// 用scan 前0.01时刻的数据

if (imuQueue.front().header.stamp.toSec() < timeScanCur - 0.01) // 扔掉过早的imu

imuQueue.pop_front();

else

break;

}

if (imuQueue.empty())

return;

imuPointerCur = 0;

for (int i = 0; i < (int)imuQueue.size(); ++i)

{

sensor_msgs::Imu thisImuMsg = imuQueue[i];

double currentImuTime = thisImuMsg.header.stamp.toSec();

// 把imu的姿态转成欧拉角

if (currentImuTime <= timeScanCur)

imuRPY2rosRPY(&thisImuMsg, &cloudInfo.imuRollInit, &cloudInfo.imuPitchInit, &cloudInfo.imuYawInit);

// 这一帧scan遍历完了就break

if (currentImuTime > timeScanEnd + 0.01)

break;

if (imuPointerCur == 0)

{ // 起始帧

imuRotX[0] = 0;

imuRotY[0] = 0;

imuRotZ[0] = 0;

imuTime[0] = currentImuTime;

++imuPointerCur;

continue;

}

double angular_x, angular_y, angular_z;

// 取出当前帧的角速度

imuAngular2rosAngular(&thisImuMsg, &angular_x, &angular_y, &angular_z);

// imu时间差从用的第一帧到用的最后一帧 连续两帧imu数据时间差

double timeDiff = currentImuTime - imuTime[imuPointerCur - 1];

// 计算每一时刻的姿态角 方便后续查找对应每个点时间的值 姿态角积分

// 当前帧角度=上一帧角度+角速度*连续两帧时间差

imuRotX[imuPointerCur] = imuRotX[imuPointerCur - 1] + angular_x * timeDiff;

imuRotY[imuPointerCur] = imuRotY[imuPointerCur - 1] + angular_y * timeDiff;

imuRotZ[imuPointerCur] = imuRotZ[imuPointerCur - 1] + angular_z * timeDiff;

imuTime[imuPointerCur] = currentImuTime;

// imu 在当前帧scan的使用帧数

++imuPointerCur;

}

--imuPointerCur;

// 与当前scan匹配的第二帧imu数据以后的做运动补偿

if (imuPointerCur <= 0)

return;

// 可以使用imu数据进行运动补偿

cloudInfo.imuAvailable = true;

}

2.7odom运动补偿信息

void odomDeskewInfo()

{

cloudInfo.odomAvailable = false;

while (!odomQueue.empty())

{

// 扔掉过早的数据

if (odomQueue.front().header.stamp.toSec() < timeScanCur - 0.01)

odomQueue.pop_front();

else

break;

}

if (odomQueue.empty())

return;

// odom数据队列第一个时间要在scan前

if (odomQueue.front().header.stamp.toSec() > timeScanCur)

return;

nav_msgs::Odometry startOdomMsg;

// 找到对应的邻近点云时间的odom数据

for (int i = 0; i < (int)odomQueue.size(); ++i)

{ startOdomMsg = odomQueue[i];

if (ROS_TIME(&startOdomMsg) < timeScanCur)

continue;

else

break;

}

// 将ros消息格式中的姿态转成tf的格式

tf::Quaternion orientation;

tf::quaternionMsgToTF(startOdomMsg.pose.pose.orientation, orientation);

// 前端odom欧拉角

double roll, pitch, yaw;

tf::Matrix3x3(orientation).getRPY(roll, pitch, yaw);

// 记录点云起始时刻的对应odom姿态

cloudInfo.initialGuessX = startOdomMsg.pose.pose.position.x;

cloudInfo.initialGuessY = startOdomMsg.pose.pose.position.y;

cloudInfo.initialGuessZ = startOdomMsg.pose.pose.position.z;

cloudInfo.initialGuessRoll = roll;

cloudInfo.initialGuessPitch = pitch;

cloudInfo.initialGuessYaw = yaw;

cloudInfo.odomAvailable = true; // odom提供了这一帧点云的初始位姿

odomDeskewFlag = false;

// 这里发现没有覆盖到最后的点云 那就不能用odom数据做运动补偿

if (odomQueue.back().header.stamp.toSec() < timeScanEnd)

return;

nav_msgs::Odometry endOdomMsg;

// 找到点云最晚时间对应的odom数据

for (int i = 0; i < (int)odomQueue.size(); ++i)

{

endOdomMsg = odomQueue[i];

if (ROS_TIME(&endOdomMsg) < timeScanEnd)

continue;

else

break;

}

// 这个代表odom退化了 就置信度不高了

if (int(round(startOdomMsg.pose.covariance[0])) != int(round(endOdomMsg.pose.covariance[0])))

return;

// 起始位姿和结束位姿都转成Affine3f这个数据结构

Eigen::Affine3f transBegin = pcl::getTransformation(startOdomMsg.pose.pose.position.x, startOdomMsg.pose.pose.position.y, startOdomMsg.pose.pose.position.z, roll, pitch, yaw);

tf::quaternionMsgToTF(endOdomMsg.pose.pose.orientation, orientation);

tf::Matrix3x3(orientation).getRPY(roll, pitch, yaw);

Eigen::Affine3f transEnd = pcl::getTransformation(endOdomMsg.pose.pose.position.x, endOdomMsg.pose.pose.position.y, endOdomMsg.pose.pose.position.z, roll, pitch, yaw);

// 计算起始位姿和结束位姿之间的delta pose

Eigen::Affine3f transBt = transBegin.inverse() * transEnd;

// 将这个增量转成xyz和欧拉角的形式

float rollIncre, pitchIncre, yawIncre;

pcl::getTranslationAndEulerAngles(transBt, odomIncreX, odomIncreY, odomIncreZ, rollIncre, pitchIncre, yawIncre);

odomDeskewFlag = true; // 表示可以用odom来做运动补偿

}

2.8将点云信息行数和索引记录在mat数据格式上,并进行畸变处理

void projectPointCloud()

{

int cloudSize = laserCloudIn->points.size();

for (int i = 0; i < cloudSize; ++i)

{

PointType thisPoint;

// 取出对应的每个点

thisPoint.x = laserCloudIn->points[i].x;

thisPoint.y = laserCloudIn->points[i].y;

thisPoint.z = laserCloudIn->points[i].z;

thisPoint.intensity = laserCloudIn->points[i].intensity;

// 计算这个点距离到lidar中心的距离

float range = pointDistance(thisPoint);

// 距离太小或太大都认为是异常点

if (range < lidarMinRange || range > lidarMaxRange)

continue;

// 取出对应的在第几条scan上

int rowIdn = laserCloudIn->points[i].ring;

// scan id必须合理

if (rowIdn < 0 || rowIdn >= N_SCAN)

continue;

// 如果需要降采样 就根据scan id适当跳过

if (rowIdn % downsampleRate != 0)

continue;

int columnIdn = -1;

if (sensor == SensorType::VELODYNE || sensor == SensorType::OUSTER)

{

// 计算水平角

float horizonAngle = atan2(thisPoint.x, thisPoint.y) * 180 / M_PI;

// 水平分辨率 0.2

static float ang_res_x = 360.0 / float(Horizon_SCAN);

// 计算水平线束id 转换到x负方向e为起始 顺时针为正方向 范围[0,H]

columnIdn = -round((horizonAngle - 90.0) / ang_res_x) + Horizon_SCAN / 2;

if (columnIdn >= Horizon_SCAN)

columnIdn -= Horizon_SCAN;

}

else if (sensor == SensorType::LIVOX)

{

columnIdn = columnIdnCountVec[rowIdn];

columnIdnCountVec[rowIdn] += 1;

}

// 对水平id进行检查

if (columnIdn < 0 || columnIdn >= Horizon_SCAN)

continue;

// 如果这个位值已经有填充了就跳过

if (rangeMat.at<float>(rowIdn, columnIdn) != FLT_MAX)

continue;

// 对点做运动补偿

thisPoint = deskewPoint(&thisPoint, laserCloudIn->points[i].time);

// 对这个点的距离数据保存进入到这个range矩阵中

rangeMat.at<float>(rowIdn, columnIdn) = range;

// 算出这个点的索引

int index = columnIdn + rowIdn * Horizon_SCAN;

// 保存这个点的坐标

fullCloud->points[index] = thisPoint;

}

}

2.9提取出可以用来计算曲率的点

// 提取出有效点的信息

void cloudExtraction()

{

int count = 0;

// 遍历每一根scan

for (int i = 0; i < N_SCAN; ++i)

{

// 这个scan可以计算曲率的起始点 计算曲率需要左右各5个点

cloudInfo.startRingIndex[i] = count - 1 + 5;

// 水平索引

for (int j = 0; j < Horizon_SCAN; ++j)

{

if (rangeMat.at<float>(i, j) != FLT_MAX)

{

// 这是一个有用的点

// mark the points' column index for marking occlusion later

// 这个点对应着哪一根垂直线

cloudInfo.pointColInd[count] = j;

// save range info

// 每个点到雷达中心的距离距离信息

cloudInfo.pointRange[count] = rangeMat.at<float>(i, j);

// 他的3d坐标信息

// save extracted cloud

extractedCloud->push_back(fullCloud->points[j + i * Horizon_SCAN]);

// size of extracted cloud

// count只在有效点才会累加

++count;

}

}

// 这个scan可以计算曲率的终点

cloudInfo.endRingIndex[i] = count - 1 - 5;

}

}

2.10将cloudinfo数据结构中的信息发布出来,发布给特征点提取部分使用

void publishClouds()

{

cloudInfo.header = cloudHeader;

// 发布提取出来有效的点

cloudInfo.cloud_deskewed = publishCloud(pubExtractedCloud, extractedCloud, cloudHeader.stamp, lidarFrame);

// 发布这个进程计算出来的一些中间细节信息

pubLaserCloudInfo.publish(cloudInfo);

}

2.11 参数复位,准备下一帧的点云处理

void resetParameters()

{

laserCloudIn->clear();

extractedCloud->clear();

// reset range matrix for range image projection

rangeMat = cv::Mat(N_SCAN, Horizon_SCAN, CV_32F, cv::Scalar::all(FLT_MAX));

imuPointerCur = 0;

firstPointFlag = true;

odomDeskewFlag = false;

for (int i = 0; i < queueLength; ++i)

{

imuTime[i] = 0;

imuRotX[i] = 0;

imuRotY[i] = 0;

imuRotZ[i] = 0;

}

columnIdnCountVec.assign(N_SCAN, 0);

}

3.featureExtraction.cpp特征点提取节点,发布角点特征面点特征,并和前端里程计imu磁力角等打包cloudinfo给后端

3.1去畸变后点云回调函数

void laserCloudInfoHandler(const lio_sam::cloud_infoConstPtr &msgIn)

{

cloudInfo = *msgIn; // 订阅的去畸变点云

cloudHeader = msgIn->header; // new cloud header

// 转成ros格式

pcl::fromROSMsg(msgIn->cloud_deskewed, *extractedCloud); // new cloud for extraction

// 平滑度 曲率计算 为了区分出边缘点和面点

calculateSmoothness();

// 标记属于遮挡、平行两种情况的点,不做特征提取

markOccludedPoints();

// 点云角点、平面点特征提取

// 1、遍历扫描线,每根扫描线扫描一周的点云划分为6段,针对每段提取20个角点、不限数量的平面点,加入角点集合、平面点集合

// 2、认为非角点的点都是平面点,加入平面点云集合,最后降采样

extractFeatures();

// 发布角点、面点点云,发布带特征点云数据的当前激光帧点云信息

publishFeatureCloud();

}

3.2曲率计算左5个点右5个点距离差值和的平方

void calculateSmoothness()

{

int cloudSize = extractedCloud->points.size();

// 通过前5个点后5个点计算曲率

for (int i = 5; i < cloudSize - 5; i++)

{

float diffRange = cloudInfo.pointRange[i - 5] + cloudInfo.pointRange[i - 4] + cloudInfo.pointRange[i - 3] + cloudInfo.pointRange[i - 2] + cloudInfo.pointRange[i - 1] - cloudInfo.pointRange[i] * 10 + cloudInfo.pointRange[i + 1] + cloudInfo.pointRange[i + 2] + cloudInfo.pointRange[i + 3] + cloudInfo.pointRange[i + 4] + cloudInfo.pointRange[i + 5];

// 距离差值平方作为曲率

cloudCurvature[i] = diffRange * diffRange; // diffX * diffX + diffY * diffY + diffZ * diffZ;

// 0表示还未进行特征提取处理,1表示遮挡、平行,或者已经进行特征提取的点

cloudNeighborPicked[i] = 0;

// 1表示角点,-1表示平面点

cloudLabel[i] = 0;

// 每个点的曲率

cloudSmoothness[i].value = cloudCurvature[i];

// 每个点的索引

cloudSmoothness[i].ind = i;

}

}

3.3通过相邻点深度差值的突变判断是遮挡点,这一类的遮挡点进行去除,根据左右两个点深度值差异大判断是瑕点

void markOccludedPoints()

{

int cloudSize = extractedCloud->points.size();

// 标记遮挡点和平行光束点

for (int i = 5; i < cloudSize - 6; ++i)

{

// occluded points

// 两个点的深度 雷达到障碍物的距离

float depth1 = cloudInfo.pointRange[i];

float depth2 = cloudInfo.pointRange[i + 1];

// 水平方向的序号差

// 两个激光点之间的一维索引差值,如果在一条扫描线上,那么值为1;

// 如果两个点之间有一些无效点被剔除了,可能会比1大,但不会特别大

// 如果恰好前一个点在扫描一周的结束时刻,下一个点是另一条扫描线的起始时刻,那么值会很大

int columnDiff = std::abs(int(cloudInfo.pointColInd[i + 1] - cloudInfo.pointColInd[i]));

// 两个点的水平序号小于10

if (columnDiff < 10)

{

// 两个点在同一扫描线上,且距离相差大于0.3,认为存在遮挡关系

// (也就是这两个点不在同一平面上,如果在同一平面上,距离相差不会太大)

// 远处的点会被遮挡,标记一下该点以及相邻的5个点,后面不再进行特征提取

if (depth1 - depth2 > 0.3)

{

cloudNeighborPicked[i - 5] = 1;

cloudNeighborPicked[i - 4] = 1;

cloudNeighborPicked[i - 3] = 1;

cloudNeighborPicked[i - 2] = 1;

cloudNeighborPicked[i - 1] = 1;

cloudNeighborPicked[i] = 1;

}

else if (depth2 - depth1 > 0.3)

{

cloudNeighborPicked[i + 1] = 1;

cloudNeighborPicked[i + 2] = 1;

cloudNeighborPicked[i + 3] = 1;

cloudNeighborPicked[i + 4] = 1;

cloudNeighborPicked[i + 5] = 1;

cloudNeighborPicked[i + 6] = 1;

}

}

// 用前后相邻点判断当前点所在平面是否与激光束方向平行

// diff1和diff2是当前点距离前后两个点的距离

float diff1 = std::abs(float(cloudInfo.pointRange[i - 1] - cloudInfo.pointRange[i]));

float diff2 = std::abs(float(cloudInfo.pointRange[i + 1] - cloudInfo.pointRange[i]));

// 如果当前点距离左右邻点都过远,则视其为瑕点,因为入射角可能太小导致误差较大

// 选择距离变化较大的点,并将他们标记为1

if (diff1 > 0.02 * cloudInfo.pointRange[i] && diff2 > 0.02 * cloudInfo.pointRange[i])

cloudNeighborPicked[i] = 1;

}

}

3.4从每条线上6等分,每等分取20个角落点,其它认为是面点

void extractFeatures()

{

// 角点/边缘点 平面点 点云清空下面准备存进来对应点云了

cornerCloud->clear();

surfaceCloud->clear();

pcl::PointCloud<PointType>::Ptr surfaceCloudScan(new pcl::PointCloud<PointType>());

pcl::PointCloud<PointType>::Ptr surfaceCloudScanDS(new pcl::PointCloud<PointType>());

for (int i = 0; i < N_SCAN; i++)

{

surfaceCloudScan->clear();

for (int j = 0; j < 6; j++)

{

int sp = (cloudInfo.startRingIndex[i] * (6 - j) + cloudInfo.endRingIndex[i] * j) / 6;

int ep = (cloudInfo.startRingIndex[i] * (5 - j) + cloudInfo.endRingIndex[i] * (j + 1)) / 6 - 1;

if (sp >= ep)

continue;

// 按照曲率从小到大排序

std::sort(cloudSmoothness.begin() + sp, cloudSmoothness.begin() + ep, by_value());

// 边缘点选取不在地面上

int largestPickedNum = 0;

// 按照曲率从大到小遍历

for (int k = ep; k >= sp; k--)

{

int ind = cloudSmoothness[k].ind;

// 当前激光点还未被处理,且曲率大于阈值,则认为是角点

if (cloudNeighborPicked[ind] == 0 && cloudCurvature[ind] > edgeThreshold)

{

// 每段只取20个角点,如果单条扫描线扫描一周是1800个点,则划分6段,每段300个点,从中提取20个角点

largestPickedNum++;

if (largestPickedNum <= 20)

{

// 标记为角点,加入角点点云

cloudLabel[ind] = 1;

cornerCloud->push_back(extractedCloud->points[ind]);

}

else

{

break;

}

// 标记已被处理

cloudNeighborPicked[ind] = 1;

// 同一条扫描线上后5个点标记一下,不再处理,避免特征聚集

for (int l = 1; l <= 5; l++)

{

int columnDiff = std::abs(int(cloudInfo.pointColInd[ind + l] - cloudInfo.pointColInd[ind + l - 1]));

if (columnDiff > 10)

break;

cloudNeighborPicked[ind + l] = 1;

}

// 同一条扫描线上前5个点标记一下,不再处理,避免特征聚集

for (int l = -1; l >= -5; l--)

{

int columnDiff = std::abs(int(cloudInfo.pointColInd[ind + l] - cloudInfo.pointColInd[ind + l + 1]));

// 大于10,说明距离远,则不作标记

if (columnDiff > 10)

break;

cloudNeighborPicked[ind + l] = 1;

}

}

}

// 按照曲率从小到大遍历

for (int k = sp; k <= ep; k++)

{

// 激光点的索引

int ind = cloudSmoothness[k].ind;

// 当前激光点还未被处理,且曲率小于阈值,则认为是平面点

if (cloudNeighborPicked[ind] == 0 && cloudCurvature[ind] < surfThreshold)

{

// 标记为平面点

cloudLabel[ind] = -1;

// 标记已被处理

cloudNeighborPicked[ind] = 1;

// 同一条扫描线上后5个点标记一下,不再处理,避免特征聚集

for (int l = 1; l <= 5; l++)

{

int columnDiff = std::abs(int(cloudInfo.pointColInd[ind + l] - cloudInfo.pointColInd[ind + l - 1]));

if (columnDiff > 10)

break;

cloudNeighborPicked[ind + l] = 1;

}

// 同一条扫描线上前5个点标记一下,不再处理,避免特征聚集

for (int l = -1; l >= -5; l--)

{

int columnDiff = std::abs(int(cloudInfo.pointColInd[ind + l] - cloudInfo.pointColInd[ind + l + 1]));

if (columnDiff > 10)

break;

cloudNeighborPicked[ind + l] = 1;

}

}

}

// 平面点和未被处理的点(<=0),都认为是平面点,加入平面点云集合

for (int k = sp; k <= ep; k++)

{

if (cloudLabel[k] <= 0)

{

surfaceCloudScan->push_back(extractedCloud->points[k]);

}

}

}

// 平面点云降采样

surfaceCloudScanDS->clear();

downSizeFilter.setInputCloud(surfaceCloudScan);

downSizeFilter.filter(*surfaceCloudScanDS);

// 加入平面点云集合

*surfaceCloud += *surfaceCloudScanDS;

}

}

3.5发布角点和面点

void publishFeatureCloud()

{

// free cloud info memory

freeCloudInfoMemory();

// save newly extracted features

// // 发布角点、面点点云,用于rviz展示

cloudInfo.cloud_corner = publishCloud(pubCornerPoints, cornerCloud, cloudHeader.stamp, lidarFrame);

cloudInfo.cloud_surface = publishCloud(pubSurfacePoints, surfaceCloud, cloudHeader.stamp, lidarFrame);

// 发布当前激光帧点云信息,加入了角点、面点点云数据,发布给mapOptimization

pubLaserCloudInfo.publish(cloudInfo);

}

4.后端点云匹配因子图优化和回环检测mapOptmization.cpp

4.1订阅特征处理后的点云

void laserCloudInfoHandler(const lio_sam::cloud_infoConstPtr &msgIn)

{

// 提取订阅时间戳

timeLaserInfoStamp = msgIn->header.stamp;

timeLaserInfoCur = msgIn->header.stamp.toSec();

// 角点和面点 liosam 点云格式转化为pcl点云格式

cloudInfo = *msgIn;

pcl::fromROSMsg(msgIn->cloud_corner, *laserCloudCornerLast);

pcl::fromROSMsg(msgIn->cloud_surface, *laserCloudSurfLast);

std::lock_guard<std::mutex> lock(mtx);

// 控制后端频率 两帧处理一帧

static double timeLastProcessing = -1;

// 距离上一次使用帧 两帧大于0.15s 雷达本身是0.1s一次的

if (timeLaserInfoCur - timeLastProcessing >= mappingProcessInterval)

{

timeLastProcessing = timeLaserInfoCur;

// 更新当前匹配结果的初始位姿

// 初始位姿 如果没有关键帧就记录 imu的 使用了x y 角度 其它方向是0

// 有关键帧就使用预计分节点提供的位姿

updateInitialGuess();

// 提取当前帧相关的关键帧并且构建点云局部地图

extractSurroundingKeyFrames();

// 对当前帧进行下采样

downsampleCurrentScan();

// 对点云配准进行优化问题构建求解

scan2MapOptimization();

// 根据配准结果判断是否是关键帧

saveKeyFramesAndFactor();

// 调整全局轨迹

correctPoses();

// 将lidar里程计信息发布出去

publishOdometry();

// 发送可视化点云信息

publishFrames();

}

}

4.2匹配初值的获取

// 基于优化方式的点云匹配 初始值是非常重要的 一个好的初始值会帮助优化问题快速收敛且避免局部最优解的情况

void updateInitialGuess()

{

// transformTobeMapped是上一帧优化后的最优位姿

incrementalOdometryAffineFront = trans2Affine3f(transformTobeMapped);

static Eigen::Affine3f lastImuTransformation;

// 没有关键帧,也就是系统刚刚初始化完成

if (cloudKeyPoses3D->points.empty())

{

// 初始的位姿就由磁力计提供

transformTobeMapped[0] = cloudInfo.imuRollInit;

transformTobeMapped[1] = cloudInfo.imuPitchInit;

transformTobeMapped[2] = cloudInfo.imuYawInit;

// 无论vio还是lio 系统的不可观都是4个自由度 平移+yaw角 这里虽然有磁力计将yaw对齐 但是也可以考虑不使用yaw

// 如果不使用gps 那么就不使用yaw角

if (!useImuHeadingInitialization)

transformTobeMapped[2] = 0;

// 保存磁力计得到的位姿 平移置0

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

// use imu pre-integration estimation for pose guess

static bool lastImuPreTransAvailable = false;

static Eigen::Affine3f lastImuPreTransformation;

// 如果有预积分节点提供的里程计

if (cloudInfo.odomAvailable == true)

{

// 将提供的初值转成eigen数据结构保存下来

Eigen::Affine3f transBack = pcl::getTransformation(cloudInfo.initialGuessX, cloudInfo.initialGuessY, cloudInfo.initialGuessZ,

cloudInfo.initialGuessRoll, cloudInfo.initialGuessPitch, cloudInfo.initialGuessYaw);

// 这个标志位表示是否收到过第一帧预积分里程计信息

if (lastImuPreTransAvailable == false)

{

// 将当前里程计结果记录下来

lastImuPreTransformation = transBack;

// 收到第一个里程计数据以后 这个标志位就是true

lastImuPreTransAvailable = true;

}

else

{

Eigen::Affine3f transIncre = lastImuPreTransformation.inverse() * transBack;

Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);

Eigen::Affine3f transFinal = transTobe * transIncre;

pcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5],

transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

// 同理把当前帧的值保存下来

lastImuPreTransformation = transBack;

// 虽然有里程计信息 仍然需要把imu磁力计得到的旋转记录下来

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

}

// 如果没有里程计信息,就是用imu的旋转信息来更新,因为单纯使用imu无法得到靠谱的平移信息,因此平移直接置0

if (cloudInfo.imuAvailable == true)

{

// 初值计算方式和上面相同 只不过注意平移置0

Eigen::Affine3f transBack = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit);

Eigen::Affine3f transIncre = lastImuTransformation.inverse() * transBack;

Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);

Eigen::Affine3f transFinal = transTobe * transIncre;

pcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5],

transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;

return;

}

}

4.3使用角点面点构建关键帧,构建局部点云地图

void extractSurroundingKeyFrames()

{

// 如果当前帧没有关键帧 就return了

if (cloudKeyPoses3D->points.empty() == true)

return;

// if (loopClosureEnableFlag == true)

// {

// extractForLoopClosure();

// } else {

// extractNearby();

// }

extractNearby();

}

4.3.1

void extractNearby()

{

pcl::PointCloud<PointType>::Ptr surroundingKeyPoses(new pcl::PointCloud<PointType>());

pcl::PointCloud<PointType>::Ptr surroundingKeyPosesDS(new pcl::PointCloud<PointType>());

// 保存kdtree提取出来的元素索引

std::vector<int> pointSearchInd;

// 保存距离查询位值的距离的数组

std::vector<float> pointSearchSqDis;

//所有位姿集合

kdtreeSurroundingKeyPoses->setInputCloud(cloudKeyPoses3D); // create kd-tree

// 根据最后一个kf的位值,提取一定距离内的关键帧 默认50米以内

kdtreeSurroundingKeyPoses->radiusSearch(cloudKeyPoses3D->back(), (double)surroundingKeyframeSearchRadius, pointSearchInd, pointSearchSqDis);

// 根据查询的结果把这些点的位值存进一个点云结构中

for (int i = 0; i < (int)pointSearchInd.size(); ++i)

{

int id = pointSearchInd[i];

// 把符合搜索距离的关键帧存储到集合里

surroundingKeyPoses->push_back(cloudKeyPoses3D->points[id]);

}

// 避免关键帧过多 因此做一个下采样

downSizeFilterSurroundingKeyPoses.setInputCloud(surroundingKeyPoses);

downSizeFilterSurroundingKeyPoses.filter(*surroundingKeyPosesDS);

// 确定每个下采样后的点的索引,就使用一个最近邻搜索,其索引赋值给这个点的intensity数据位

for (auto &pt : surroundingKeyPosesDS->points)

{

kdtreeSurroundingKeyPoses->nearestKSearch(pt, 1, pointSearchInd, pointSearchSqDis);

// 最近关键帧的索引 赋值给当前关键帧的标志位

pt.intensity = cloudKeyPoses3D->points[pointSearchInd[0]].intensity;

}

// 关键帧数量

int numPoses = cloudKeyPoses3D->size();

// 刚刚是提取了一些空间上比较近的关键帧 然后再提取一些时间上比较近的关键帧

for (int i = numPoses - 1; i >= 0; --i)

{

// 最近10s的关键帧也保留下来

if (timeLaserInfoCur - cloudKeyPoses6D->points[i].time < 10.0)

surroundingKeyPosesDS->push_back(cloudKeyPoses3D->points[i]);

else

break;

}

// 根据筛选出来的关键帧进行局部地图构建

extractCloud(surroundingKeyPosesDS);

}

4.4对当前帧进行下采样

void downsampleCurrentScan()

{

// Downsample cloud from current scan

// 当前帧的角点和面点进行下采样 也就是为了减少计算量

laserCloudCornerLastDS->clear();

downSizeFilterCorner.setInputCloud(laserCloudCornerLast);

downSizeFilterCorner.filter(*laserCloudCornerLastDS);

laserCloudCornerLastDSNum = laserCloudCornerLastDS->size();

laserCloudSurfLastDS->clear();

downSizeFilterSurf.setInputCloud(laserCloudSurfLast);

downSizeFilterSurf.filter(*laserCloudSurfLastDS);

laserCloudSurfLastDSNum = laserCloudSurfLastDS->size();

}

4.5scan-map 通过lm算法进行残差优化更新后端位姿态

void scan2MapOptimization()

{

// 根据现有地图与最新点云数据进行配准从而更新机器人精确位姿与融合建图,

// 它分为角点优化、平面点优化、配准与更新等部分。

// 优化的过程与里程计的计算类似,是通过计算点到直线或平面的距离,构建优化公式再用LM法求解。

if (cloudKeyPoses3D->points.empty())

return;

if (laserCloudCornerLastDSNum > edgeFeatureMinValidNum && laserCloudSurfLastDSNum > surfFeatureMinValidNum)

{

// 构建kdtree

kdtreeCornerFromMap->setInputCloud(laserCloudCornerFromMapDS);

kdtreeSurfFromMap->setInputCloud(laserCloudSurfFromMapDS);

// 迭代30次

for (int iterCount = 0; iterCount < 30; iterCount++)

{

laserCloudOri->clear();

coeffSel->clear();

// 边缘点匹配优化

cornerOptimization();

// 平面点匹配优化

surfOptimization();

// 组合优化多项式系数

combineOptimizationCoeffs();

if (LMOptimization(iterCount) == true)

break;

}

// 使用了9轴imu的orientation与做transformTobeMapped插值,并且roll和pitch收到常量阈值约束(权重)

transformUpdate();

}

else

{

ROS_WARN("Not enough features! Only %d edge and %d planar features available.", laserCloudCornerLastDSNum, laserCloudSurfLastDSNum);

}

}

4.5.1点线残差构建

// 当前帧角点寻找局部map匹配点

// 1.更新当前帧位姿 将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小 于1m,且5个点构成直线

// (用距离中心点的协方差矩阵 特征值进行判断,则认为匹配上了)

// 2.计算当前帧角点到直线的距离 垂线的单位向量 存储为角点参数

void cornerOptimization()

{

// 实现transformTobeMapped的矩阵形式转换 下面调用的函数就一行就不展开了 工具类函数

// 把结果存入transPointAssociateToMap中

updatePointAssociateToMap();

#pragma omp parallel for num_threads(numberOfCores)

for (int i = 0; i < laserCloudCornerLastDSNum; i++)

{

PointType pointOri, pointSel, coeff;

std::vector<int> pointSearchInd;

std::vector<float> pointSearchSqDis;

pointOri = laserCloudCornerLastDS->points[i];

// 第i帧的点转换到第一帧坐标系下

// 这里就调用了第一步中updatePointAssociateToMap中实现的transPointAssociateToMap

// 然后利用这个变量,把pointOri的点转换到pointSel下,pointSel作为输出

pointAssociateToMap(&pointOri, &pointSel);

// kdtree的最邻近搜索

kdtreeCornerFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);

cv::Mat matA1(3, 3, CV_32F, cv::Scalar::all(0));

cv::Mat matD1(1, 3, CV_32F, cv::Scalar::all(0));

cv::Mat matV1(3, 3, CV_32F, cv::Scalar::all(0));

if (pointSearchSqDis[4] < 1.0)

{

// 先求5个样本的平均值

float cx = 0, cy = 0, cz = 0;

for (int j = 0; j < 5; j++)

{

cx += laserCloudCornerFromMapDS->points[pointSearchInd[j]].x;

cy += laserCloudCornerFromMapDS->points[pointSearchInd[j]].y;

cz += laserCloudCornerFromMapDS->points[pointSearchInd[j]].z;

}

cx /= 5;

cy /= 5;

cz /= 5;

// 下面求矩阵matA1=[ax,ay,az]^t*[ax,ay,az]

// 更准确地说应该是在求协方差matA1

float a11 = 0, a12 = 0, a13 = 0, a22 = 0, a23 = 0, a33 = 0;

for (int j = 0; j < 5; j++)

{

float ax = laserCloudCornerFromMapDS->points[pointSearchInd[j]].x - cx;

float ay = laserCloudCornerFromMapDS->points[pointSearchInd[j]].y - cy;

float az = laserCloudCornerFromMapDS->points[pointSearchInd[j]].z - cz;

a11 += ax * ax;

a12 += ax * ay;

a13 += ax * az;

a22 += ay * ay;

a23 += ay * az;

a33 += az * az;

}

a11 /= 5;

a12 /= 5;

a13 /= 5;

a22 /= 5;

a23 /= 5;

a33 /= 5;

matA1.at<float>(0, 0) = a11;

matA1.at<float>(0, 1) = a12;

matA1.at<float>(0, 2) = a13;

matA1.at<float>(1, 0) = a12;

matA1.at<float>(1, 1) = a22;

matA1.at<float>(1, 2) = a23;

matA1.at<float>(2, 0) = a13;

matA1.at<float>(2, 1) = a23;

matA1.at<float>(2, 2) = a33;

// 求正交阵的特征值和特征向量

// 特征值:matD1,特征向量:matV1中 对应于LOAM论文里雷达建图 特征值与特征向量那块

cv::eigen(matA1, matD1, matV1);

// 边缘:与较大特征值对应的特征向量代表边缘线的方向 (一大两小 大方向)

// 以下这一大块是计算点到边缘的距离 最后通过系数s来判断是否距离很近

// 如果距离很近 就认为这个点在边缘上 需要放到laserOri中

// 如果最大特征值大于次大特征值的3倍 认为构成了线 角点是合格的

if (matD1.at<float>(0, 0) > 3 * matD1.at<float>(0, 1))

{

// 当前帧角点坐标 map系下

float x0 = pointSel.x;

float y0 = pointSel.y;

float z0 = pointSel.z;

// 局部map对应的中心角点 沿着特征向量直线方向 前后各取一个点

float x1 = cx + 0.1 * matV1.at<float>(0, 0);

float y1 = cy + 0.1 * matV1.at<float>(0, 1);

float z1 = cz + 0.1 * matV1.at<float>(0, 2);

float x2 = cx - 0.1 * matV1.at<float>(0, 0);

float y2 = cy - 0.1 * matV1.at<float>(0, 1);

float z2 = cz - 0.1 * matV1.at<float>(0, 2);

// 这边是在求[(x0-x1),(y0-y1),(z0-z1)]与[(x0-x2),(y0-y2),(z0-z2)]叉乘得到的向量的模长

// 这个模长是由0.2*V1[0]和点[x0,y0,z0]构成的平行四边形的面积

// 因为[(x0-x1),(y0-y1),(z0-z1)]x[(x0-x2),(y0-y2),(z0-z2)]=[XXX,YYY,ZZZ],

// [XXX,YYY,ZZZ]=[(y0-y1)(z0-z2)-(y0-y2)(z0-z1),-(x0-x1)(z0-z2)+(x0-x2)(z0-z1),(x0-x1)(y0-y2)-(x0-x2)(y0-y1)]

// area_012,也就是三个点组成的三角形面积*2,叉积的模|axb|=a*b*sin(theta)

float a012 = sqrt(((x0 - x1) * (y0 - y2) - (x0 - x2) * (y0 - y1)) * ((x0 - x1) * (y0 - y2) - (x0 - x2) * (y0 - y1)) + ((x0 - x1) * (z0 - z2) - (x0 - x2) * (z0 - z1)) * ((x0 - x1) * (z0 - z2) - (x0 - x2) * (z0 - z1)) + ((y0 - y1) * (z0 - z2) - (y0 - y2) * (z0 - z1)) * ((y0 - y1) * (z0 - z2) - (y0 - y2) * (z0 - z1)));

// line_12,底边边长

float l12 = sqrt((x1 - x2) * (x1 - x2) + (y1 - y2) * (y1 - y2) + (z1 - z2) * (z1 - z2));

// 两次叉积,得到点到直线的垂线段单位向量,x分量,下面同理

// 求叉乘结果[la',lb',lc']=[(x1-x2),(y1-y2),(z1-z2)]x[XXX,YYY,ZZZ]

// [la,lb,lc]=[la',lb',lc']/a012/l12

// 得到底边上的高的方向向量[la,lb,lc]

// LLL=[la,lb,lc]是V1[0]这条高上的单位法向量。||LLL||=1;

// 如不理解则看图:

// A

// B C

// 这里ABxAC,代表垂直于ABC面的法向量,其模长为平行四边形面积

// 因此BCx(ABxAC),代表了BC和(ABC平面的法向量)的叉乘,那么其实这个向量就是A到BC的垂线的方向向量

// 那么(ABxAC)/|ABxAC|,代表着ABC平面的单位法向量

// BCxABC平面单位法向量,即为一个长度为|BC|的(A到BC垂线的方向向量),因此再除以|BC|,得到A到BC垂线的单位方向向量

float la = ((y1 - y2) * ((x0 - x1) * (y0 - y2) - (x0 - x2) * (y0 - y1)) + (z1 - z2) * ((x0 - x1) * (z0 - z2) - (x0 - x2) * (z0 - z1))) / a012 / l12;

float lb = -((x1 - x2) * ((x0 - x1) * (y0 - y2) - (x0 - x2) * (y0 - y1)) - (z1 - z2) * ((y0 - y1) * (z0 - z2) - (y0 - y2) * (z0 - z1))) / a012 / l12;

float lc = -((x1 - x2) * ((x0 - x1) * (z0 - z2) - (x0 - x2) * (z0 - z1)) + (y1 - y2) * ((y0 - y1) * (z0 - z2) - (y0 - y2) * (z0 - z1))) / a012 / l12;

// 三角形的高,也就是点到直线距离

// 计算点pointSel到直线的距离

// 这里需要特别说明的是ld2代表的是点pointSel到过点[cx,cy,cz]的方向向量直线的距离

float ld2 = a012 / l12;

// 下面涉及到一个鲁棒核函数,作者简单地设计了这个核函数。

// 距离越大,s越小,是个距离惩罚因子(权重)

float s = 1 - 0.9 * fabs(ld2);

// coeff代表系数的意思

// coff用于保存距离的方向向量

coeff.x = s * la;

coeff.y = s * lb;

coeff.z = s * lc;

// intensity本质上构成了一个核函数,ld2越接近于1,增长越慢

// intensity=(1-0.9*ld2)*ld2=ld2-0.9*ld2*ld2

coeff.intensity = s * ld2;

// 程序末尾根据s的值来判断是否将点云点放入点云集合laserCloudOri以及coeffSel中。

// 所以就应该认为这个点是边缘点

// s>0.1 也就是要求点到直线的距离ld2要小于1m

// s越大说明ld2越小(离边缘线越近),这样就说明点pointOri在直线上

if (s > 0.1)

{

laserCloudOriCornerVec[i] = pointOri;

coeffSelCornerVec[i] = coeff;

laserCloudOriCornerFlag[i] = true;

}

}

}

}

}

4.5.2点面残差构建

void surfOptimization()

{

updatePointAssociateToMap();

// 遍历当前帧平面点集合

#pragma omp parallel for num_threads(numberOfCores)

for (int i = 0; i < laserCloudSurfLastDSNum; i++)

{

PointType pointOri, pointSel, coeff;

std::vector<int> pointSearchInd;

std::vector<float> pointSearchSqDis;

// 寻找5个紧邻点 计算其特征值和特征向量

// 平面点 坐标还是lidar系

pointOri = laserCloudSurfLastDS->points[i];

// 根据当前帧位姿 变换到map坐标系下

pointAssociateToMap(&pointOri, &pointSel);

// 在局部平面点map中查找当前平面点相邻的5个平面点

kdtreeSurfFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);

Eigen::Matrix<float, 5, 3> matA0;

Eigen::Matrix<float, 5, 1> matB0;

Eigen::Vector3f matX0;

// 5*3存储5个紧邻点

matA0.setZero();

matB0.fill(-1);

matX0.setZero();

// 只考虑附近1m内

if (pointSearchSqDis[4] < 1.0)

{

for (int j = 0; j < 5; j++)

{

matA0(j, 0) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].x;

matA0(j, 1) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].y;

matA0(j, 2) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].z;

}

// 求maxA0中点构成的平面法向量

// matB0是-1,这个函数用来求解AX=B的X,

// 也就是AX+BY+CZ+1=0

matX0 = matA0.colPivHouseholderQr().solve(matB0);

// 假设平面方程为ax+by+cz+1=0,这里就是求方程的系数abc,d=1

float pa = matX0(0, 0);

float pb = matX0(1, 0);

float pc = matX0(2, 0);

float pd = 1;

// 单位法向量

// 对[pa,pb,pc,pd]进行单位化

float ps = sqrt(pa * pa + pb * pb + pc * pc);

pa /= ps;

pb /= ps;

pc /= ps;

pd /= ps;

// 检查平面是否合格,如果5个点中有点到平面的距离超过0.2m,那么认为这些点太分散了,不构成平面

bool planeValid = true;

for (int j = 0; j < 5; j++)

{

if (fabs(pa * laserCloudSurfFromMapDS->points[pointSearchInd[j]].x +

pb * laserCloudSurfFromMapDS->points[pointSearchInd[j]].y +

pc * laserCloudSurfFromMapDS->points[pointSearchInd[j]].z + pd) > 0.2)

{

planeValid = false;

break;

}

}

if (planeValid)

{

// 当前激光帧点到平面距离

// 点(x0,y0,z0)到了平面Ax+By+Cz+D=0的距离为:d=|Ax0+By0+Cz0+D|/√(A^2+B^2+C^2)

// 但是会发现下面的分母开了两次方,不知道为什么,分母多开一次方会更小,这因此求出的距离会更大

float pd2 = pa * pointSel.x + pb * pointSel.y + pc * pointSel.z + pd;

// 距离越大,s越小,是个距离惩罚因子(权重)

// 后面部分相除求的是[pa,pb,pc,pd]与pointSel的夹角余弦值(两个sqrt,其实并不是余弦值)

// 这个夹角余弦值越小越好,越小证明所求的[pa,pb,pc,pd]与平面越垂直

float s = 1 - 0.9 * fabs(pd2) / sqrt(sqrt(pointOri.x * pointOri.x + pointOri.y * pointOri.y + pointOri.z * pointOri.z));

// 点到平面垂线单位法向量(其实等价于平面法向量)

coeff.x = s * pa;

coeff.y = s * pb;

coeff.z = s * pc;

coeff.intensity = s * pd2;

if (s > 0.1)

{

// 当前激光帧平面点,加入匹配集合中.

// 如果s>0.1,代表fabs(pd2) / sqrt(sqrt(pointSel.x * pointSel.x+ pointSel.y * pointSel.y + pointSel.z * pointSel.z))这一项<1,即"伪距离"<1

laserCloudOriSurfVec[i] = pointOri;

coeffSelSurfVec[i] = coeff;

laserCloudOriSurfFlag[i] = true;

}

}

}

}

}

4.5.3提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合,lm算法求解时使用

void combineOptimizationCoeffs()

{

// combine corner coeffs

// 遍历当前帧角点集合,提取出与局部map匹配上了的角点

for (int i = 0; i < laserCloudCornerLastDSNum; ++i)

{

if (laserCloudOriCornerFlag[i] == true)

{

laserCloudOri->push_back(laserCloudOriCornerVec[i]);

coeffSel->push_back(coeffSelCornerVec[i]);

}

}

// combine surf coeffs

// 遍历当前帧平面点集合,提取出与局部map匹配上了的平面点

for (int i = 0; i < laserCloudSurfLastDSNum; ++i)

{

if (laserCloudOriSurfFlag[i] == true)

{

laserCloudOri->push_back(laserCloudOriSurfVec[i]);

coeffSel->push_back(coeffSelSurfVec[i]);

}

}

// reset flag for next iteration

// 清空标记

std::fill(laserCloudOriCornerFlag.begin(), laserCloudOriCornerFlag.end(), false);

std::fill(laserCloudOriSurfFlag.begin(), laserCloudOriSurfFlag.end(), false);

}

4.5.4通过lm算法进行最优解计算

bool LMOptimization(int iterCount)

{

// 由于LOAM里雷达的特殊坐标系 所以这里也转了一次

// This optimization is from the original loam_velodyne by Ji Zhang, need to cope with coordinate transformation

// lidar <- camera --- camera <- lidar

// x = z --- x = y

// y = x --- y = z

// z = y --- z = x

// roll = yaw --- roll = pitch

// pitch = roll --- pitch = yaw

// yaw = pitch --- yaw = roll

// lidar -> camera

float srx = sin(transformTobeMapped[1]);

float crx = cos(transformTobeMapped[1]);

float sry = sin(transformTobeMapped[2]);

float cry = cos(transformTobeMapped[2]);

float srz = sin(transformTobeMapped[0]);

float crz = cos(transformTobeMapped[0]);

int laserCloudSelNum = laserCloudOri->size();

// 当前帧匹配特征点数太少

if (laserCloudSelNum < 50)

{

return false;

}

cv::Mat matA(laserCloudSelNum, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matAt(6, laserCloudSelNum, CV_32F, cv::Scalar::all(0));

cv::Mat matAtA(6, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matB(laserCloudSelNum, 1, CV_32F, cv::Scalar::all(0));

cv::Mat matAtB(6, 1, CV_32F, cv::Scalar::all(0));

cv::Mat matX(6, 1, CV_32F, cv::Scalar::all(0));

PointType pointOri, coeff;

// 遍历匹配特征点,构建Jacobian矩阵

for (int i = 0; i < laserCloudSelNum; i++)

{

// lidar -> camera

pointOri.x = laserCloudOri->points[i].y;

pointOri.y = laserCloudOri->points[i].z;

pointOri.z = laserCloudOri->points[i].x;

// lidar -> camera

coeff.x = coeffSel->points[i].y;

coeff.y = coeffSel->points[i].z;

coeff.z = coeffSel->points[i].x;

coeff.intensity = coeffSel->points[i].intensity;

// in camera

// https://wykxwyc.github.io/2019/08/01/The-Math-Formula-in-LeGO-LOAM/

// 求雅克比矩阵中的元素,距离d对roll角度的偏导量即d(d)/d(roll)

// 各种cos sin的是旋转矩阵对roll求导,pointOri.x是点的坐标,coeff.x等是距离到局部点的偏导,也就是法向量(建议看链接)

// 注意:链接当中的R0-5公式中,ex和ey是反的

// 另一个链接https://blog.csdn.net/weixin_37835423/article/details/111587379#commentBox当中写的更好

float arx = (crx * sry * srz * pointOri.x + crx * crz * sry * pointOri.y - srx * sry * pointOri.z) * coeff.x + (-srx * srz * pointOri.x - crz * srx * pointOri.y - crx * pointOri.z) * coeff.y + (crx * cry * srz * pointOri.x + crx * cry * crz * pointOri.y - cry * srx * pointOri.z) * coeff.z;

// 同上,求解的是对pitch的偏导量

float ary = ((cry * srx * srz - crz * sry) * pointOri.x + (sry * srz + cry * crz * srx) * pointOri.y + crx * cry * pointOri.z) * coeff.x + ((-cry * crz - srx * sry * srz) * pointOri.x + (cry * srz - crz * srx * sry) * pointOri.y - crx * sry * pointOri.z) * coeff.z;

float arz = ((crz * srx * sry - cry * srz) * pointOri.x + (-cry * crz - srx * sry * srz) * pointOri.y) * coeff.x + (crx * crz * pointOri.x - crx * srz * pointOri.y) * coeff.y + ((sry * srz + cry * crz * srx) * pointOri.x + (crz * sry - cry * srx * srz) * pointOri.y) * coeff.z;

// camera -> lidar

// matA就是误差对旋转和平移变量的雅克比矩阵

matA.at<float>(i, 0) = arz;

matA.at<float>(i, 1) = arx;

matA.at<float>(i, 2) = ary;

// 对平移求误差就是法向量,见链接

matA.at<float>(i, 3) = coeff.z;

matA.at<float>(i, 4) = coeff.x;

matA.at<float>(i, 5) = coeff.y;

// 残差项

matB.at<float>(i, 0) = -coeff.intensity;

}

// 将矩阵由matA转置生成matAt

// 先进行计算,以便于后边调用 cv::solve求解

cv::transpose(matA, matAt);

matAtA = matAt * matA;

matAtB = matAt * matB;

// 利用高斯牛顿法进行求解,

// 高斯牛顿法的原型是J^(T)*J * delta(x) = -J*f(x)

// J是雅克比矩阵,这里是A,f(x)是优化目标,这里是-B(符号在给B赋值时候就放进去了)

// 通过QR分解的方式,求解matAtA*matX=matAtB,得到解matX

cv::solve(matAtA, matAtB, matX, cv::DECOMP_QR);

// iterCount==0 说明是第一次迭代,需要初始化

if (iterCount == 0)

{

// 对近似的Hessian矩阵求特征值和特征向量,

cv::Mat matE(1, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matV(6, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matV2(6, 6, CV_32F, cv::Scalar::all(0));

// 对近似的Hessian矩阵求特征值和特征向量,

// matE特征值,matV是特征向量

// 退化方向只与原始的约束方向 A有关,与原始约束的位置 b 无关

// 算这个的目的是要判断退化,即约束中较小的偏移会导致解所在的局部区域发生较大的变化

cv::eigen(matAtA, matE, matV);

matV.copyTo(matV2);

isDegenerate = false;

// 初次优化时,特征值门限设置为100,小于这个值认为是退化了

// 系统退化与否和系统是否存在解没有必然联系,即使系统出现退化,系统也是可能存在解的,

// 因此需要将系统的解进行调整,一个策略就是将解进行投影,

// 对于退化方向,使用优化的状态估计值,对于非退化方向,依然使用方程的解。

// 另一个策略就是直接抛弃解在退化方向的分量。

// 对于退化方向,我们不考虑,直接丢弃,只考虑非退化方向解的增量。

float eignThre[6] = {100, 100, 100, 100, 100, 100};

for (int i = 5; i >= 0; i--)

{

if (matE.at<float>(0, i) < eignThre[i])

{

for (int j = 0; j < 6; j++)

{

matV2.at<float>(i, j) = 0;

}

isDegenerate = true;

}

else

{

break;

}

}

// 以下这步可以参考链接:

// https://blog.csdn.net/i_robots/article/details/108724606

// 以及https://zhuanlan.zhihu.com/p/258159552

matP = matV.inv() * matV2;

}

if (isDegenerate)

{

cv::Mat matX2(6, 1, CV_32F, cv::Scalar::all(0));

matX.copyTo(matX2);

matX = matP * matX2;

}

// 更新当前位姿 x = x + delta_x

transformTobeMapped[0] += matX.at<float>(0, 0);

transformTobeMapped[1] += matX.at<float>(1, 0);

transformTobeMapped[2] += matX.at<float>(2, 0);

transformTobeMapped[3] += matX.at<float>(3, 0);

transformTobeMapped[4] += matX.at<float>(4, 0);

transformTobeMapped[5] += matX.at<float>(5, 0);

float deltaR = sqrt(

pow(pcl::rad2deg(matX.at<float>(0, 0)), 2) +

pow(pcl::rad2deg(matX.at<float>(1, 0)), 2) +

pow(pcl::rad2deg(matX.at<float>(2, 0)), 2));

float deltaT = sqrt(

pow(matX.at<float>(3, 0) * 100, 2) +

pow(matX.at<float>(4, 0) * 100, 2) +

pow(matX.at<float>(5, 0) * 100, 2));

// 旋转或者平移量足够小就停止这次迭代过程

if (deltaR < 0.05 && deltaT < 0.05)

{

return true; // converged

}

return false; // keep optimizing

}

4.5.5 重力对齐 ,使用imu磁力角加权

void transformUpdate()

{

if (cloudInfo.imuAvailable == true)

{

// 俯仰角小于1.4

if (std::abs(cloudInfo.imuPitchInit) < 1.4)

{

double imuWeight = imuRPYWeight;

tf::Quaternion imuQuaternion;

tf::Quaternion transformQuaternion;

double rollMid, pitchMid, yawMid;

// slerp roll

// roll角求加权均值,用scan-to-map优化得到的位姿与imu原始RPY数据,进行加权平均

transformQuaternion.setRPY(transformTobeMapped[0], 0, 0);

imuQuaternion.setRPY(cloudInfo.imuRollInit, 0, 0);

tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

transformTobeMapped[0] = rollMid;

// slerp pitch

// pitch角求加权均值,用scan-to-map优化得到的位姿与imu原始RPY数据,进行加权平均

transformQuaternion.setRPY(0, transformTobeMapped[1], 0);

imuQuaternion.setRPY(0, cloudInfo.imuPitchInit, 0);

tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

transformTobeMapped[1] = pitchMid;

}

}

// 更新当前帧位姿的roll, pitch, z坐标;因为是小车,roll、pitch是相对稳定的,

// 不会有很大变动,一定程度上可以信赖imu的数据,z是进行高度约束

transformTobeMapped[0] = constraintTransformation(transformTobeMapped[0], rotation_tollerance);

transformTobeMapped[1] = constraintTransformation(transformTobeMapped[1], rotation_tollerance);

transformTobeMapped[5] = constraintTransformation(transformTobeMapped[5], z_tollerance);

// 当前帧位姿

incrementalOdometryAffineBack = trans2Affine3f(transformTobeMapped);

}

4.6判断是否是关键帧,进行因子图优化,更新后端位姿

void saveKeyFramesAndFactor()

{

// 计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧

if (saveFrame() == false)

return;

// odom factor

addOdomFactor();

// gps factor

addGPSFactor();

// loop factor

addLoopFactor();

// cout << "****************************************************" << endl;

// gtSAMgraph.print("GTSAM Graph:\n");

// update iSAM

// 执行优化

isam->update(gtSAMgraph, initialEstimate);

isam->update();

if (aLoopIsClosed == true)

{

isam->update();

isam->update();

isam->update();

isam->update();

isam->update();

}

// update之后要清空一下保存的因子图,注:历史数据不会清掉,ISAM保存起来了

gtSAMgraph.resize(0);

initialEstimate.clear();

// save key poses

PointType thisPose3D;

PointTypePose thisPose6D;

Pose3 latestEstimate;

// 优化结果

isamCurrentEstimate = isam->calculateEstimate();

// 当前帧位姿结果

latestEstimate = isamCurrentEstimate.at<Pose3>(isamCurrentEstimate.size() - 1);

// cout << "****************************************************" << endl;

// isamCurrentEstimate.print("Current estimate: ");

// cloudKeyPoses3D加入当前帧位姿

thisPose3D.x = latestEstimate.translation().x();

thisPose3D.y = latestEstimate.translation().y();

thisPose3D.z = latestEstimate.translation().z();

// 索引

thisPose3D.intensity = cloudKeyPoses3D->size(); // this can be used as index

cloudKeyPoses3D->push_back(thisPose3D);

// cloudKeyPoses6D加入当前帧位姿

thisPose6D.x = thisPose3D.x;

thisPose6D.y = thisPose3D.y;

thisPose6D.z = thisPose3D.z;

thisPose6D.intensity = thisPose3D.intensity; // this can be used as index

thisPose6D.roll = latestEstimate.rotation().roll();

thisPose6D.pitch = latestEstimate.rotation().pitch();

thisPose6D.yaw = latestEstimate.rotation().yaw();

thisPose6D.time = timeLaserInfoCur;

cloudKeyPoses6D->push_back(thisPose6D);

// cout << "****************************************************" << endl;

// cout << "Pose covariance:" << endl;

// cout << isam->marginalCovariance(isamCurrentEstimate.size()-1) << endl << endl;

// 位姿协方差

poseCovariance = isam->marginalCovariance(isamCurrentEstimate.size() - 1);

// save updated transform

// transformTobeMapped更新当前帧位姿

transformTobeMapped[0] = latestEstimate.rotation().roll();

transformTobeMapped[1] = latestEstimate.rotation().pitch();

transformTobeMapped[2] = latestEstimate.rotation().yaw();

transformTobeMapped[3] = latestEstimate.translation().x();

transformTobeMapped[4] = latestEstimate.translation().y();

transformTobeMapped[5] = latestEstimate.translation().z();

// save all the received edge and surf points

// 当前帧激光角点、平面点,降采样集合

pcl::PointCloud<PointType>::Ptr thisCornerKeyFrame(new pcl::PointCloud<PointType>());

pcl::PointCloud<PointType>::Ptr thisSurfKeyFrame(new pcl::PointCloud<PointType>());

pcl::copyPointCloud(*laserCloudCornerLastDS, *thisCornerKeyFrame);

pcl::copyPointCloud(*laserCloudSurfLastDS, *thisSurfKeyFrame);

// save key frame cloud

// 保存特征点降采样集合

cornerCloudKeyFrames.push_back(thisCornerKeyFrame);

surfCloudKeyFrames.push_back(thisSurfKeyFrame);

// save path for visualization

// 更新里程计轨迹

updatePath(thisPose6D);

}

4.7根据因子图优化结果更新所有关键帧率的位姿

void correctPoses()

{

if (cloudKeyPoses3D->points.empty())

return;

if (aLoopIsClosed == true)

{

// clear map cache

// 清空局部map

laserCloudMapContainer.clear();

// clear path

// 清空里程计轨迹

globalPath.poses.clear();

// update key poses

// 更新因子图中所有变量节点的位姿,也就是所有历史关键帧的位姿

int numPoses = isamCurrentEstimate.size();

for (int i = 0; i < numPoses; ++i)

{

cloudKeyPoses3D->points[i].x = isamCurrentEstimate.at<Pose3>(i).translation().x();

cloudKeyPoses3D->points[i].y = isamCurrentEstimate.at<Pose3>(i).translation().y();

cloudKeyPoses3D->points[i].z = isamCurrentEstimate.at<Pose3>(i).translation().z();

cloudKeyPoses6D->points[i].x = cloudKeyPoses3D->points[i].x;

cloudKeyPoses6D->points[i].y = cloudKeyPoses3D->points[i].y;

cloudKeyPoses6D->points[i].z = cloudKeyPoses3D->points[i].z;

cloudKeyPoses6D->points[i].roll = isamCurrentEstimate.at<Pose3>(i).rotation().roll();

cloudKeyPoses6D->points[i].pitch = isamCurrentEstimate.at<Pose3>(i).rotation().pitch();

cloudKeyPoses6D->points[i].yaw = isamCurrentEstimate.at<Pose3>(i).rotation().yaw();

updatePath(cloudKeyPoses6D->points[i]);

}

aLoopIsClosed = false;

}

}

4.8多线程icp提供回环因子,给到因子图优化部分

void performLoopClosure()

{

if (cloudKeyPoses3D->points.empty() == true)

return;

mtx.lock();

*copy_cloudKeyPoses3D = *cloudKeyPoses3D;

*copy_cloudKeyPoses6D = *cloudKeyPoses6D;

mtx.unlock();

// find keys

// 当前关键帧索引,候选闭环匹配帧索引

int loopKeyCur;

int loopKeyPre;

if (detectLoopClosureExternal(&loopKeyCur, &loopKeyPre) == false)

// 在历史关键帧中查找与当前关键帧距离最近的关键帧集合,选择时间相隔较远的一帧作为候选闭环帧

if (detectLoopClosureDistance(&loopKeyCur, &loopKeyPre) == false)

return;

// extract cloud

// 提取

pcl::PointCloud<PointType>::Ptr cureKeyframeCloud(new pcl::PointCloud<PointType>());

pcl::PointCloud<PointType>::Ptr prevKeyframeCloud(new pcl::PointCloud<PointType>());

{

// 提取当前关键帧特征点集合,降采样;

// loopFindNearKeyframes形参分别为点云集合,当前帧的索引,搜索半径

loopFindNearKeyframes(cureKeyframeCloud, loopKeyCur, 0);

// 提取闭环匹配关键帧前后相邻若干帧的关键帧特征点集合,降采样

loopFindNearKeyframes(prevKeyframeCloud, loopKeyPre, historyKeyframeSearchNum);

// 如果特征点较少,返回

if (cureKeyframeCloud->size() < 300 || prevKeyframeCloud->size() < 1000)

return;

// 发布闭环匹配关键帧局部map

if (pubHistoryKeyFrames.getNumSubscribers() != 0)

publishCloud(pubHistoryKeyFrames, prevKeyframeCloud, timeLaserInfoStamp, odometryFrame);

}

// ICP Settings

// ICP参数设置

static pcl::IterativeClosestPoint<PointType, PointType> icp;

icp.setMaxCorrespondenceDistance(historyKeyframeSearchRadius * 2);

icp.setMaximumIterations(100);

icp.setTransformationEpsilon(1e-6);

icp.setEuclideanFitnessEpsilon(1e-6);

icp.setRANSACIterations(0);

// Align clouds

// scan-to-map,调用icp匹配

icp.setInputSource(cureKeyframeCloud);

icp.setInputTarget(prevKeyframeCloud);

pcl::PointCloud<PointType>::Ptr unused_result(new pcl::PointCloud<PointType>());

icp.align(*unused_result);

// 未收敛,或者匹配不够好

if (icp.hasConverged() == false || icp.getFitnessScore() > historyKeyframeFitnessScore)

return;

// publish corrected cloud

// 发布当前关键帧经过闭环优化后的位姿变换之后的特征点云

if (pubIcpKeyFrames.getNumSubscribers() != 0)

{

pcl::PointCloud<PointType>::Ptr closed_cloud(new pcl::PointCloud<PointType>());

pcl::transformPointCloud(*cureKeyframeCloud, *closed_cloud, icp.getFinalTransformation());

publishCloud(pubIcpKeyFrames, closed_cloud, timeLaserInfoStamp, odometryFrame);

}

// Get pose transformation

// 闭环优化得到的当前关键帧与闭环关键帧之间的位姿变换

float x, y, z, roll, pitch, yaw;

Eigen::Affine3f correctionLidarFrame;

correctionLidarFrame = icp.getFinalTransformation();

// transform from world origin to wrong pose

// 闭环优化前当前帧位姿

Eigen::Affine3f tWrong = pclPointToAffine3f(copy_cloudKeyPoses6D->points[loopKeyCur]);

// transform from world origin to corrected pose

// 闭环优化后当前帧位姿

Eigen::Affine3f tCorrect = correctionLidarFrame * tWrong; // pre-multiplying -> successive rotation about a fixed frame

pcl::getTranslationAndEulerAngles(tCorrect, x, y, z, roll, pitch, yaw);

gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(roll, pitch, yaw), Point3(x, y, z));

// 闭环匹配帧的位姿

gtsam::Pose3 poseTo = pclPointTogtsamPose3(copy_cloudKeyPoses6D->points[loopKeyPre]);

gtsam::Vector Vector6(6);

float noiseScore = icp.getFitnessScore();

Vector6 << noiseScore, noiseScore, noiseScore, noiseScore, noiseScore, noiseScore;

noiseModel::Diagonal::shared_ptr constraintNoise = noiseModel::Diagonal::Variances(Vector6);

// Add pose constraint

// 添加闭环因子需要的数据

// 这些内容会在函数addLoopFactor中用到

mtx.lock();

loopIndexQueue.push_back(make_pair(loopKeyCur, loopKeyPre));

loopPoseQueue.push_back(poseFrom.between(poseTo));

loopNoiseQueue.push_back(constraintNoise);

mtx.unlock();

// add loop constriant

loopIndexContainer[loopKeyCur] = loopKeyPre;

}

4.9发布后端里程计

void publishOdometry()

{

// Publish odometry for ROS (global)

// 发布激光里程计,odom等价map

nav_msgs::Odometry laserOdometryROS;

laserOdometryROS.header.stamp = timeLaserInfoStamp;

laserOdometryROS.header.frame_id = odometryFrame;

laserOdometryROS.child_frame_id = "odom_mapping";

laserOdometryROS.pose.pose.position.x = transformTobeMapped[3];

laserOdometryROS.pose.pose.position.y = transformTobeMapped[4];

laserOdometryROS.pose.pose.position.z = transformTobeMapped[5];

laserOdometryROS.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);

pubLaserOdometryGlobal.publish(laserOdometryROS);

// Publish TF

// 发布TF,odom->lidar

static tf::TransformBroadcaster br;

tf::Transform t_odom_to_lidar = tf::Transform(tf::createQuaternionFromRPY(transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]),

tf::Vector3(transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5]));

tf::StampedTransform trans_odom_to_lidar = tf::StampedTransform(t_odom_to_lidar, timeLaserInfoStamp, odometryFrame, "lidar_link");

br.sendTransform(trans_odom_to_lidar);

// Publish odometry for ROS (incremental)

static bool lastIncreOdomPubFlag = false;

static nav_msgs::Odometry laserOdomIncremental; // incremental odometry msg

static Eigen::Affine3f increOdomAffine; // incremental odometry in affine

// 第一次数据直接用全局里程计初始化

if (lastIncreOdomPubFlag == false)

{

lastIncreOdomPubFlag = true;

laserOdomIncremental = laserOdometryROS;

increOdomAffine = trans2Affine3f(transformTobeMapped);

}

else

{

// 当前帧与前一帧之间的位姿变换

Eigen::Affine3f affineIncre = incrementalOdometryAffineFront.inverse() * incrementalOdometryAffineBack;

increOdomAffine = increOdomAffine * affineIncre;

float x, y, z, roll, pitch, yaw;

pcl::getTranslationAndEulerAngles(increOdomAffine, x, y, z, roll, pitch, yaw);

if (cloudInfo.imuAvailable == true)

{

if (std::abs(cloudInfo.imuPitchInit) < 1.4)

{

double imuWeight = 0.1;

tf::Quaternion imuQuaternion;

tf::Quaternion transformQuaternion;

double rollMid, pitchMid, yawMid;

// slerp roll

// roll姿态角加权平均

transformQuaternion.setRPY(roll, 0, 0);

imuQuaternion.setRPY(cloudInfo.imuRollInit, 0, 0);

tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

roll = rollMid;

// slerp pitch

// pitch姿态角加权平均

transformQuaternion.setRPY(0, pitch, 0);

imuQuaternion.setRPY(0, cloudInfo.imuPitchInit, 0);

tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);

pitch = pitchMid;

}

}

laserOdomIncremental.header.stamp = timeLaserInfoStamp;

laserOdomIncremental.header.frame_id = odometryFrame;

laserOdomIncremental.child_frame_id = "odom_mapping";

laserOdomIncremental.pose.pose.position.x = x;

laserOdomIncremental.pose.pose.position.y = y;

laserOdomIncremental.pose.pose.position.z = z;

laserOdomIncremental.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(roll, pitch, yaw);

if (isDegenerate)

laserOdomIncremental.pose.covariance[0] = 1;

else

laserOdomIncremental.pose.covariance[0] = 0;

}

pubLaserOdometryIncremental.publish(laserOdomIncremental);

}

![[StartingPoint][Tier2]Archetype](https://img-blog.csdnimg.cn/img_convert/4db13216eba6070eb871d3b62c8b450b.jpeg)