预训练任务 - Mask Language Model

- jieba预分词

- 长度小于4的词直接mask(mask_ids就是input_ids)

if rands > self.mask_rate and len(word) < 4:

word = word_list[i]

word_encode = tokenizer.encode(word, add_special_tokens=False)

for token in word_encode:

mask_ids.append(token)

labels.append(self.ignore_labels)

record.append(i)

- 剩余的词按照ngrams随机抽取mask,mask_rate为0.8

else:

n = np.random.choice(self.ngrams, p=self.pvals)

for index in range(n):

ind = index + i

if ind in record or ind >= len(word_list):

continue

record.append(ind)

word = word_list[ind]

word_encode = tokenizer.encode(word, add_special_tokens=False)

for token in word_encode:

mask_ids.append(self.token_process(token))

labels.append(token)

- Mask机制:80%mask,10%不变,10%随机替换

def token_process(self, token_id):

rand = np.random.random()

if rand <= 0.8:

return self.tokenizer.mask_token_id

elif rand <= 0.9:

return token_id

else:

return np.random.randint(1, self.vocab_length)

- 结果

# print('sentence:',sample['text'])

# print('input_ids:',mask_ids)

# print('decode inputids:',self.tokenizer.decode(mask_ids))

# print('labels',labels)

# print('decode labels:',self.tokenizer.decode(labels))

# print('*'*20)

return {

'input_ids': torch.tensor(input_ids), # mask_ids

'labels': torch.tensor(batch_labels), # labels

'attention_mask': torch.tensor(attention_mask),

'token_type_ids': torch.tensor(token_type_ids)

}

预训练任务 - Sentence Order Predict

- 把输入文本分割成两个部分A和B

def get_a_and_b_segments(sample, np_rng):

"""Divide sample into a and b segments."""

# Number of sentences in the sample.

n_sentences = len(sample)

# Make sure we always have two sentences.

assert n_sentences > 1, 'make sure each sample has at least two sentences.'

# First part:

# `a_end` is how many sentences go into the `A`.

a_end = 1

if n_sentences >= 3:

# Note that randin in numpy is exclusive.

a_end = np_rng.randint(1, n_sentences)

tokens_a = []

for j in range(a_end):

tokens_a.extend(sample[j])

# Second part:

tokens_b = []

for j in range(a_end, n_sentences):

tokens_b.extend(sample[j])

# Random next:

is_next_random = False

if np_rng.random() < 0.5:

is_next_random = True

tokens_a, tokens_b = tokens_b, tokens_a

return tokens_a, tokens_b, is_next_random

- max_seq_length - 3因为还需要拼上[CLS] ,[SEP] ,[SEP]

- 每一个句子内按照字进行Mask

# Masking.

max_predictions_per_seq = self.masked_lm_prob * len(tokens)

(tokens, masked_positions, masked_labels, _, _) = create_masked_lm_predictions(

tokens, self.vocab_id_list, self.vocab_id_to_token_dict, self.masked_lm_prob,

self.tokenizer.cls_token_id, self.tokenizer.sep_token_id, self.tokenizer.mask_token_id,

max_predictions_per_seq, self.np_rng,

masking_style='bert')

- 注意需要考虑Bert的WordPiece的分词格式,兼容以前##的形式,如果后面的词是##开头的,那么直接把后面的拼到前面当作一个词

- 如何抽取词进行Mask,需要采样,几何分布

if not geometric_dist:

n = np_rng.choice(ngrams[:len(cand_index_set)],

p=pvals[:len(cand_index_set)] /

pvals[:len(cand_index_set)].sum(keepdims=True))

else:

# Sampling "n" from the geometric distribution and clipping it to

# the max_ngrams. Using p=0.2 default from the SpanBERT paper

# https://arxiv.org/pdf/1907.10529.pdf (Sec 3.1)

n = min(np_rng.geometric(0.2), max_ngrams)

- Span Mask

# 合并[MASK] 因为这里用的是Bert的mask函数,Bert是按字mask的,

# 这里把连续的mask合并成一个MASK从而达到span mask的效果

span_mask_souce = []

for t in source:

# 如果是连续的多个mask,则跳过

if len(span_mask_souce) > 0 \

and t is self.tokenizer.mask_token_id \

and span_mask_souce[-1] is self.tokenizer.mask_token_id:

continue

span_mask_souce.append(t)

source = torch.LongTensor(span_mask_souce)

预训练任务 - BART自回归掩码

- 注意BART的任务,自回归掩码

prev_output_tokens = torch.zeros_like(target)

# match the preprocessing in fairseq

prev_output_tokens[0] = self.tokenizer.sep_token_id

prev_output_tokens[1:] = target[:-1]

source_ = torch.full((self.max_seq_length,),

self.tokenizer.pad_token_id, dtype=torch.long)

source_[:source.shape[0]] = source

target_ = torch.full((self.max_seq_length,), -100, dtype=torch.long)

target_[:target.shape[0]] = target

prev_output_tokens_ = torch.full(

(self.max_seq_length,), self.tokenizer.pad_token_id, dtype=torch.long)

prev_output_tokens_[:prev_output_tokens.shape[0]] = prev_output_tokens

attention_mask = torch.full((self.max_seq_length,), 0, dtype=torch.long)

attention_mask[:source.shape[0]] = 1

model_inputs.append({

"input_ids": source_,

"labels": target_,

"decoder_input_ids": prev_output_tokens_,

"attention_mask": attention_mask,

})

- 除了算loss,还可以算acc

def comput_metrix(self, logits, labels):

label_idx = labels != -100

labels = labels[label_idx]

logits = logits[label_idx].view(-1, logits.size(-1))

y_pred = torch.argmax(logits, dim=-1)

y_pred = y_pred.view(size=(-1,))

y_true = labels.view(size=(-1,)).float()

corr = torch.eq(y_pred, y_true)

acc = torch.sum(corr.float())/labels.shape[0]

return acc

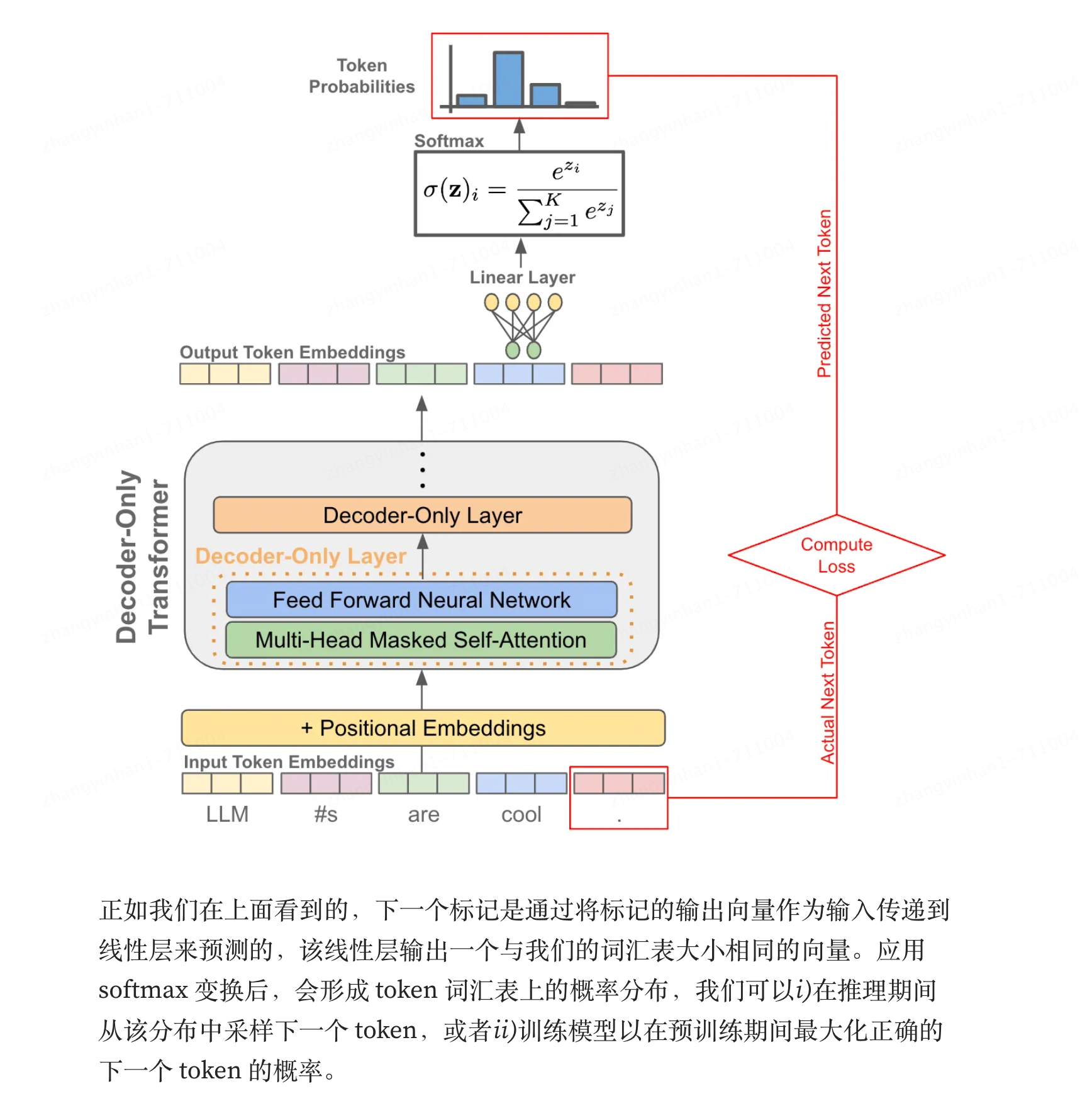

预训练任务 - Next Token Predict

- https://github.com/Morizeyao/GPT2-Chinese/blob/old_gpt_2_chinese_before_2021_4_22/train.py

- https://medium.com/@akash.kesrwani99/understanding-next-token-prediction-concept-to-code-1st-part-7054dabda347

outputs = model.forward(input_ids=batch_inputs, labels=batch_inputs)

# forward

transformer_outputs = self.transformer(

input_ids,

...

)

hidden_states = transformer_outputs[0]

lm_logits = self.lm_head(hidden_states)

loss = None

if labels is not None:

labels = labels.to(lm_logits.device)

shift_logits = lm_logits[..., :-1, :].contiguous()

shift_labels = labels[..., 1:].contiguous()

loss_fct = CrossEntropyLoss()

loss = loss_fct(

shift_logits.view(-1, shift_logits.size(-1)), shift_labels.view(-1)

)

loss, logits = outputs[:2]

shift_logits = lm_logits[..., :-1, :].contiguous() # sequence[:n-1]

shift_labels = labels[..., 1:].contiguous() # sequence[1:n]

自己写的代码:

model.train()

losses = []

for idx, data in enumerate(tqdm(loader, desc = f"Epoch {epoch}"), 0):

y = data["target_ids"].cuda()

y_ids = y[:, :-1].contiguous()

lm_labels = y[:, 1:].clone().detach()

lm_labels[y[:, 1:] == tokenizer.pad_token_id] = -100 # lm pad mask

ids = data["source_ids"].cuda()

mask = data["source_mask"].cuda()

outputs = model(

input_ids=ids, # 【0,n】

attention_mask=mask,

decoder_input_ids=y_ids, # 【0,n-1】

labels=lm_labels, # 【1,n】

)

loss = outputs[0].sum()

举个例子:

input_sequence = 【0,1,2,3,4,5】

Decoder的【:n-1】和【1:n】两个序列

shift_logits =【0,1,2,3,4】

shift_labels = 【1,2,3,4,5】

这两个sequence计算ce loss,效果就是0预测1,1预测2,…

- 也就是next token predict

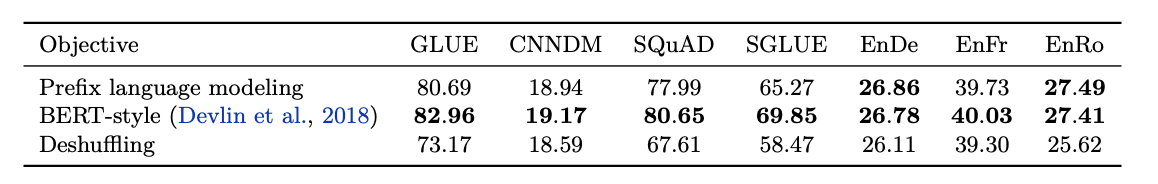

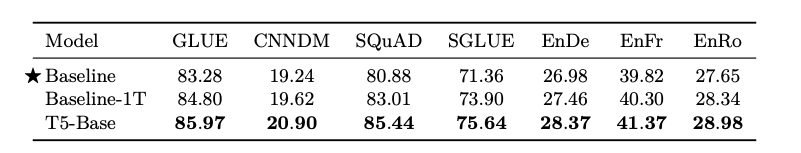

T5的实验结论

预训练对比

迁移学习的核心思想就是两阶段:Pre-Train,Fine-tuning

Preliminary work that applied the transfer learning paradigm of pre-training and fine-tuning all of the model’s parameters to NLP problems used a causal language modeling objective for pre-training

- 预训练阶段:之前主要是因果语言建模(自回归),但是Bert的MLM做Pre-Train性能更佳

MLM的方式又有讲究:

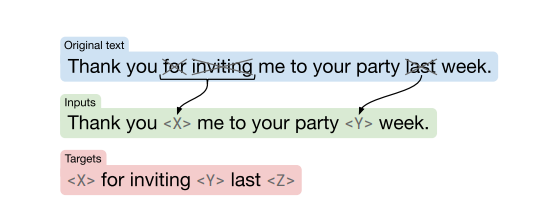

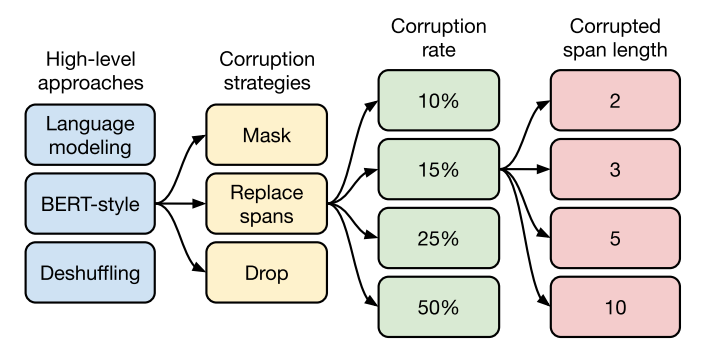

T5 Denoise的方式:

-

Token的增删替换打乱

-

Mask的比例

-

Mask合并成Span,Span的长度(spanbert中的几何分布)

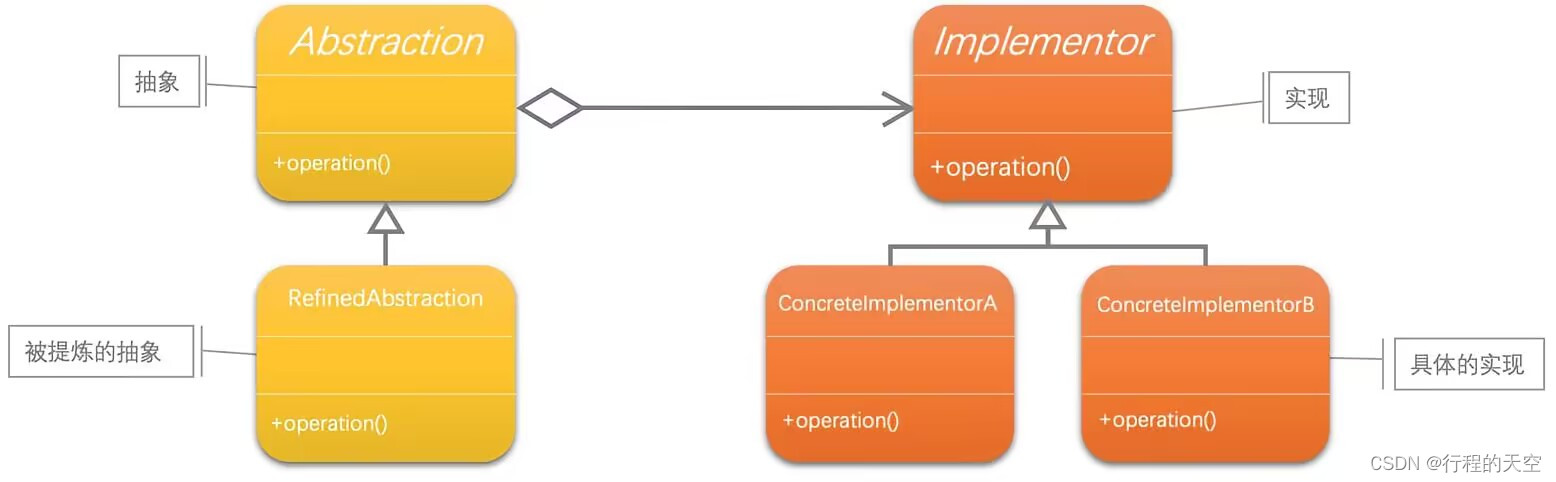

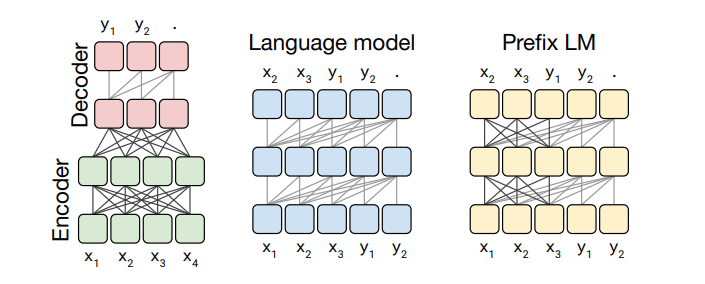

三大模型架构对应三大注意力机制:

总结一下实验策略:

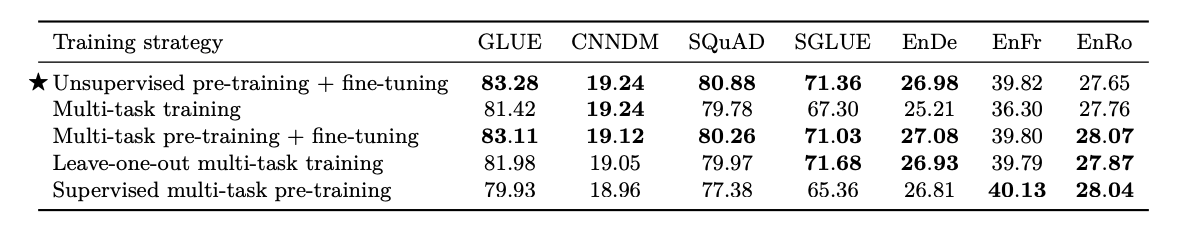

训练策略

微调策略

- adapter layers:在FFN层之后 加入dense-ReLU-dense blocks

- Gradual unfreezing:只微调最后一层

多任务学习

-

在预训练时运用多任务学习,对下游任务没有增益,采样策略没有大的变化

-

多任务训练集配比:N个任务,第M个任务训练集大小为K(最大值限制)

计算公式如下:

r m = m i n ( e m , K ) ∑ m i n ( e n , K ) r_m = \frac{min(e_m,K)}{\sum{min(e_n,K)}} rm=∑min(en,K)min(em,K) -

温度系数配比: T = 1,不变,T越大越接近等比例

r m = ( m i n ( e m , K ) ∑ m i n ( e n , K ) ) 1 T r_m =(\frac{min(e_m,K)}{\sum{min(e_n,K)}})^{\frac{1}{T}} rm=(∑min(en,K)min(em,K))T1 -

等比例采样

- 在微调时运用多任务学习

- 预训练时,一般都是无监督学习,那么CV里面会在预训练时使用有监督数据,这会有增益嘛?

结论:

- 多任务预训练后,再FT:和无监督预训练再FT差不多

- 不经过预训练直接微调:效果有点差

- 监督学习的多任务预训练:除了翻译任务,其他都非常差

Batch size 和训练step

Beam search

对于长序列的输出非常关键,对性能有提升

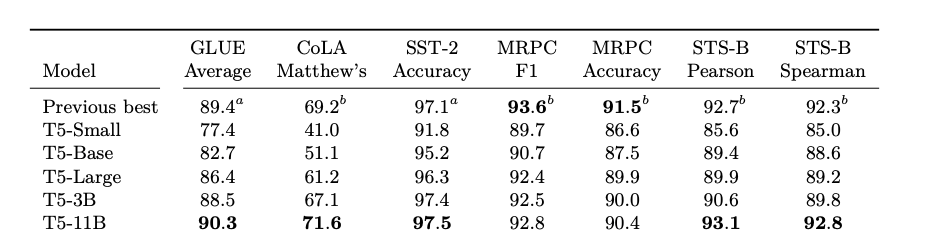

模型参数大小

- Scaling Law成立,涌现现象也存在

- 关于数据的sclaing law,加大训练语料有增益,但也不完全取决于sclaing law

最后总结

- 对于短序列生成,建议无监督的目标函数

- 在领域内无监督的数据上预训练,会对下游任务产生增益,重复的数据会损失性能

- 训练生成式模型,需要大量且多样的数据集

- 小模型在大量数据上训练,要优于大模型在少量数据上训练

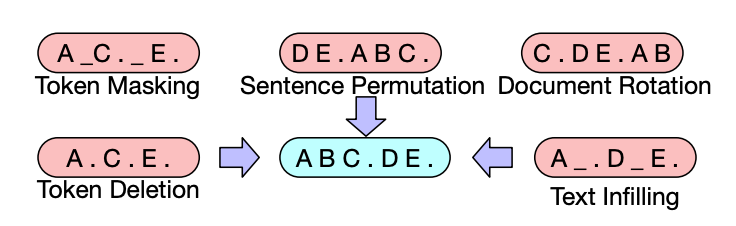

BART的预训练任务

- 5种denoise的策略

BART做机器翻译任务

-

we replace BART’s encoder embedding layer with a new randomly initialized encoder.

The model is trained end-to-end, which trains the new encoder to map foreign words into an input that BART can de-noise to English. -

The new encoder can use a separate vocabulary from the original BART model.

两阶段训练:

-

In the first step, we freeze most of BART parameters and only update the randomly initialized source encoder, the BART positional embeddings, and the self-attention input projection matrix of BART’s encoder first layer

-

In the second step, we train all model parameters for a small number of iterations.