文章目录

- 前言

- 一、相关介绍

- 1. 端口介绍

- 二、部署规划

- 1. 准备centos

- 2. 配置集群免密登录

- 3. 部署规划

- 三、ckman依赖部署

- 1. prometheus搭建

- 1.1 下载并解压

- 1.2 配置启停服务

- 1.3 promethues配置(可选,不影响ckman核心功能)

- 1.4 prometheus启停命令

- 1.4.1 启动prometheus

- 1.4.2 停止prometheus

- 1.4.3 重启prometheus

- 1.4.4 查看prometheus状态

- 2. node_exporter搭建

- 2.1 下载并解压

- 2.2 配置启停服务

- 2.3 node监控配置(可选,不影响ckman核心功能)

- 2.4 node_exporter启停命令

- 2.4.1 启动node_exporter

- 2.4.2 停止node_exporter

- 2.4.3 重启node_exporter

- 2.4.4 查看node_exporter状态

- 3. zookeeper搭建

- 3.1 单机搭建(忽略)

- 3.2 集群搭建

- 3.2.1 hadoop101节点操作

- 3.2.2 hadoop102节点操作

- 3.2.3 hadoop103节点操作

- 3.3 启动zookeeper

- 3.3.1 单机版启动

- 3.3.2 集群版启动

- 3.3.2.1 hadoop101节点操作

- 3.3.2.2 hadoop102节点操作

- 3.3.2.3 hadoop103节点操作

- 3.4 zookeeper命令

- 3.4.1 启动zookeeper

- 3.4.2 停止zookeeper

- 3.4.3 重启zookeeper

- 3.4.4 查看zookeeper状态

- 3.4.5 进入zookeeper客户端

- 3.5 zookeeper监控配置(可选,不影响ckman核心功能)

- 4. mysql搭建

- 5. 安装jdk

- 四、ckman部署

- 1. 下载和安装

- 1.1 rpm包方式

- 1.2 tar包方式(忽略)

- 1.2.1 下载和解压

- 1.2.2 启动

- 2. 启动与停止

- 2.1 启动

- 2.2 重启

- 2.3 停止

- 2.4 查看状态

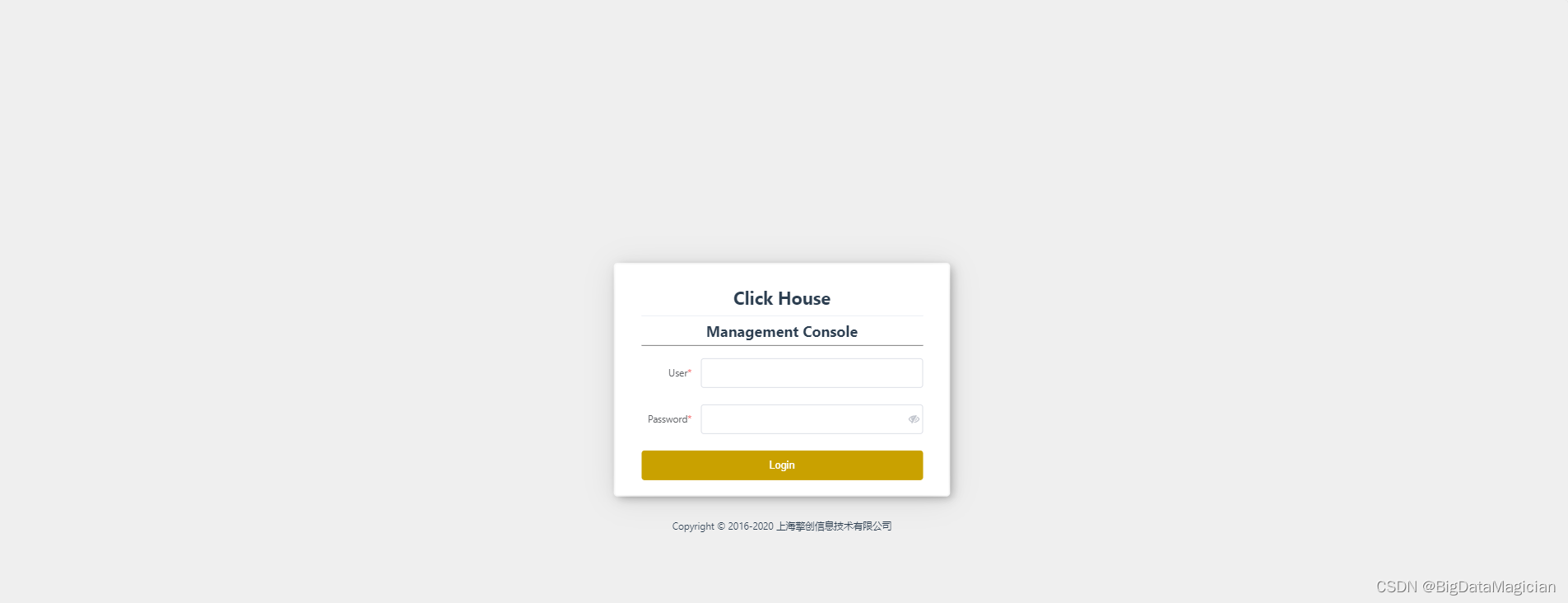

- 3. 浏览器访问

- 五、ClickHouse集群部署

- 1. 下载rpm包

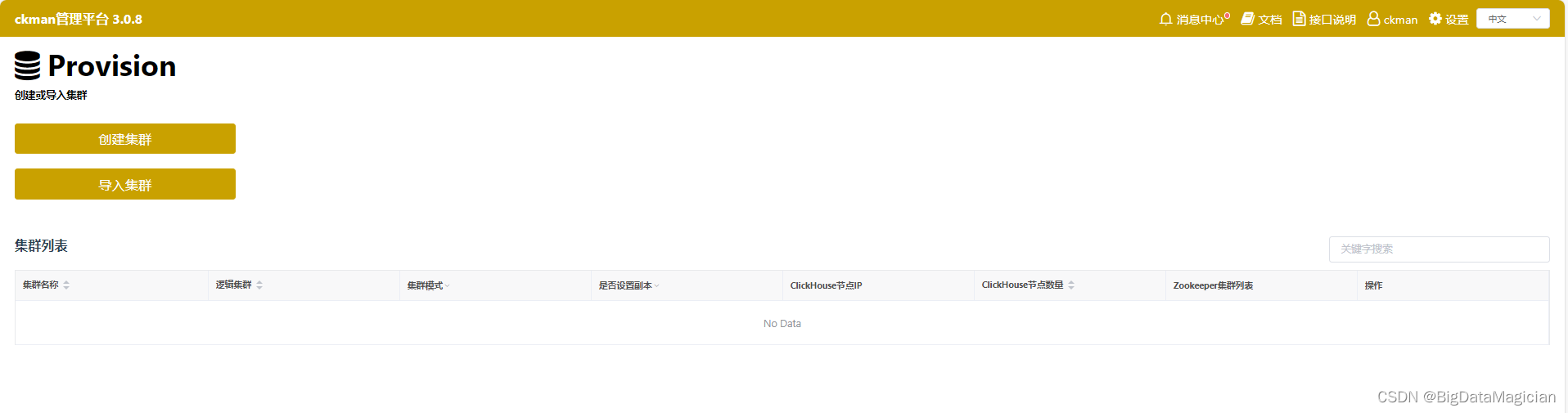

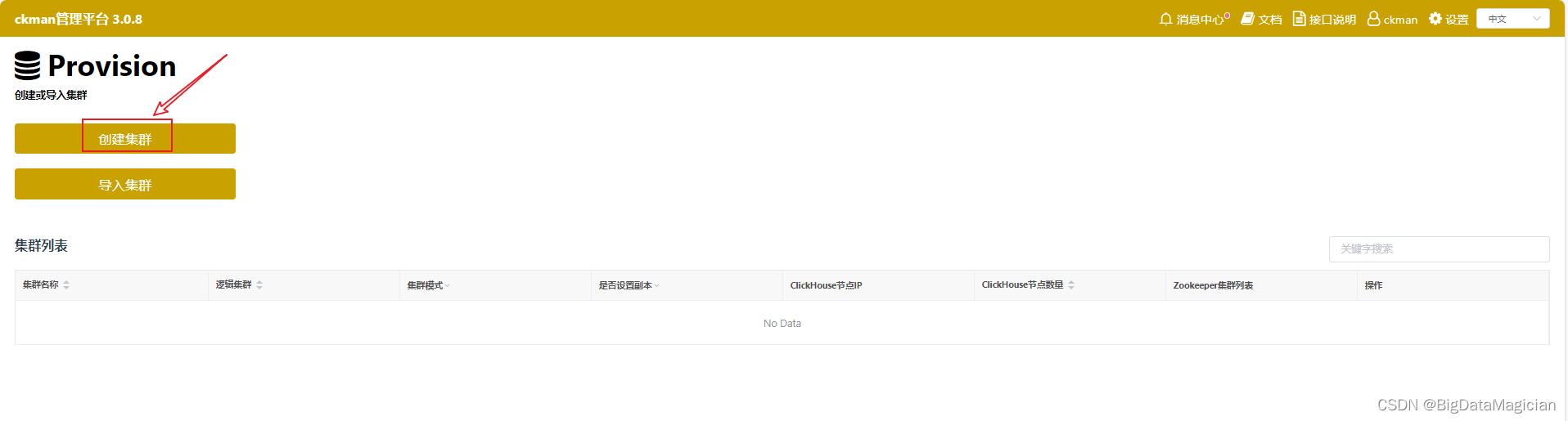

- 2. 使用ckman部署clickhouse集群

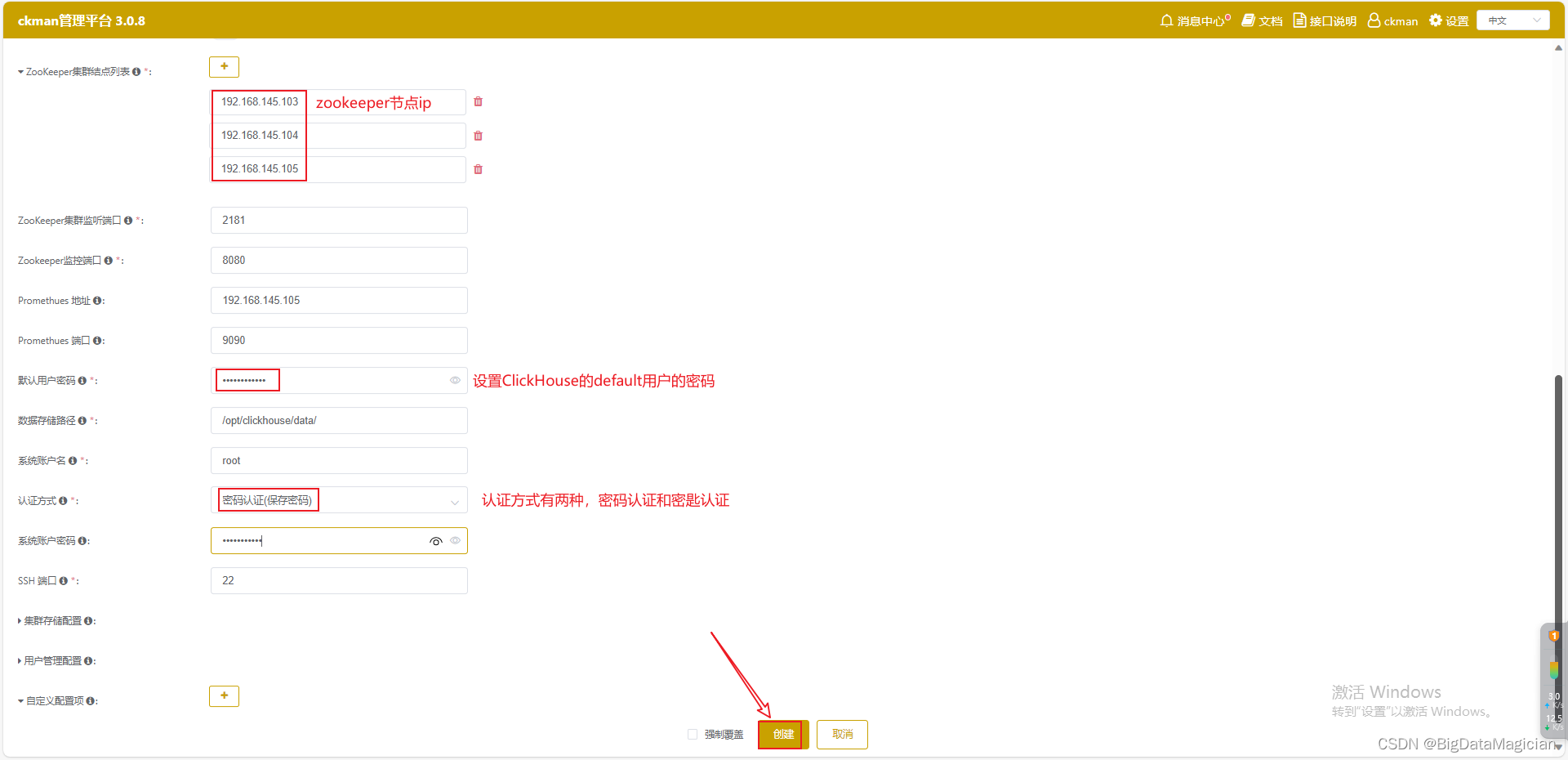

- 2.1 创建集群

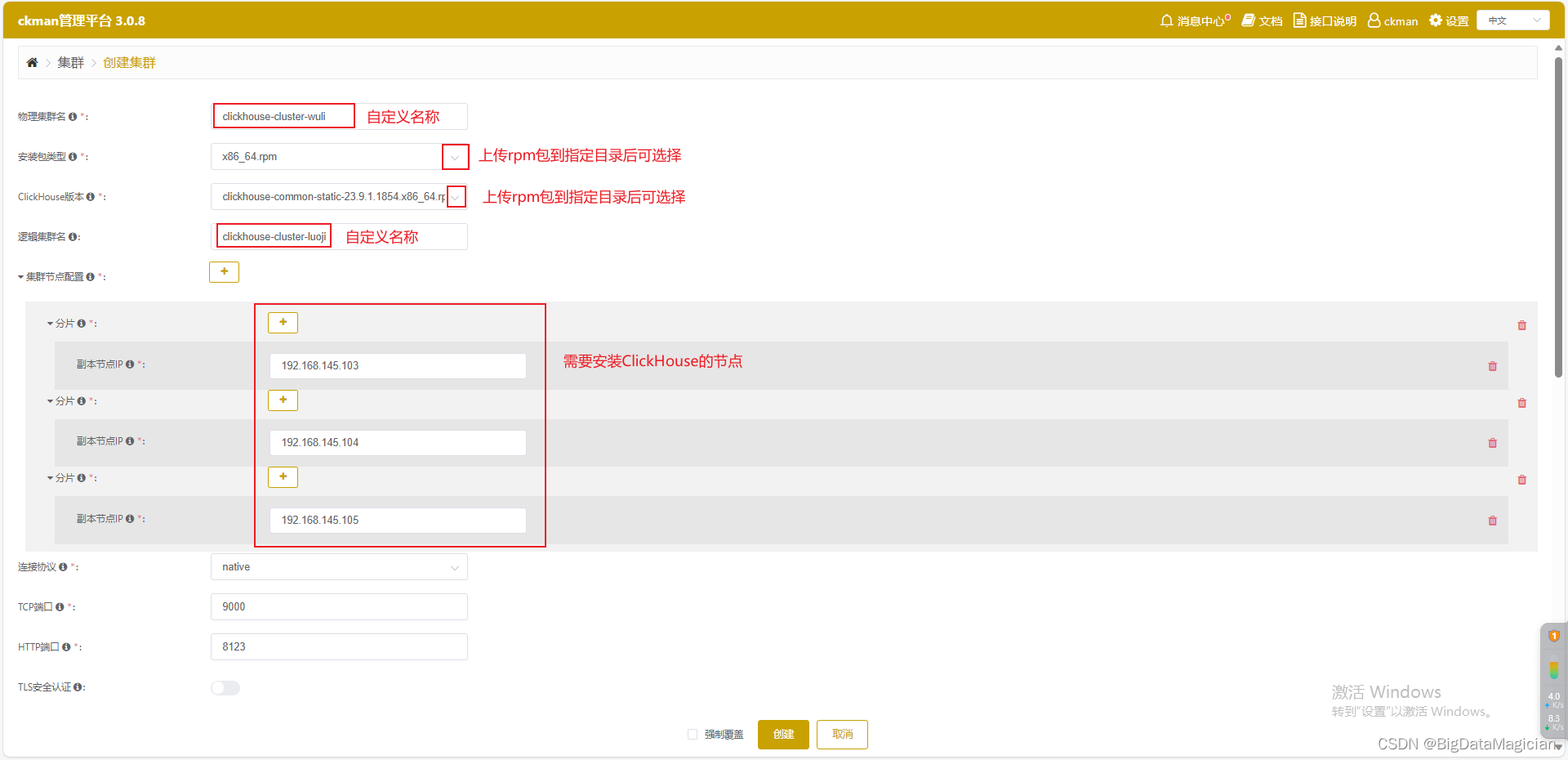

- 2.2 填写集群配置信息

- 2.3 clickhouse监控配置(可选,不影响ckman核心功能)

- 总结

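Preface

This article walks through deploying ckman and building a ClickHouse cluster: preparing the environment, installing dependencies, configuring passwordless SSH login across the cluster, and deploying ckman and ClickHouse. It also covers the Prometheus and node_exporter monitoring tools, ZooKeeper, and how to install MySQL and the JDK. Finally, it details how to create a cluster in ckman and configure monitoring.

I. Background

1. Ports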

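| Port | Description |
|---|---|
| 8808 | ckman management platform port |
| 2181 | ZooKeeper client port (default); clients connect to the ZooKeeper cluster over TCP. This guide configures 12181 |
| 2888 | ZooKeeper quorum port (default); followers connect to the leader here for data synchronization and replication. This guide configures 12888 |
| 3888 | ZooKeeper leader-election port (default); servers talk to each other here during leader election. This guide configures 13888 |
| 8080 | ZooKeeper AdminServer HTTP port (default), which exposes commands such as mntr; supported since 3.5.0. This guide configures 18080 |
| 9000 | ClickHouse native TCP port for client connections |
| 8123 | ClickHouse HTTP interface port; queries can be run and results fetched over HTTP |
| 9009 | ClickHouse interserver port for low-level data access: data exchange, replication, and inter-server communication |
| 9363 | ClickHouse default Prometheus metrics port |
| 9090 | Prometheus HTTP port for the web UI and metric queries |
| 9100 | node_exporter default metrics port (also ClickHouse's default gRPC port when gRPC is enabled) |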

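II. Deployment Planning

1. Prepare CentOS hosts

Prepare three CentOS hosts. Mine are as follows.

| IP address | Hostname |
|---|---|
| 192.168.145.103 | hadoop101 |
| 192.168.145.104 | hadoop102 |
| 192.168.145.105 | hadoop103 |

Edit /etc/hosts to map each hostname to its IP address.

Run the following on hadoop101, hadoop102, and hadoop103.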

echo >> /etc/hosts

echo '192.168.145.103 hadoop101' >> /etc/hosts

echo '192.168.145.104 hadoop102' >> /etc/hosts

echo '192.168.145.105 hadoop103' >> /etc/hosts
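The echo commands above append a new line every time they run, so repeating the step leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent variant follows; `add_host_entry` is a hypothetical helper name introduced here for illustration, not part of any tool used in this guide.

```shell
# Append "IP hostname" to a hosts-style file unless the hostname is already mapped.
# add_host_entry is a hypothetical helper, not part of any tool in this guide.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # -w matches whole words only, so "hadoop10" would not match "hadoop101";
  # -F treats the name as a fixed string rather than a regular expression
  if ! grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Example (run as root on every node):
#   add_host_entry /etc/hosts 192.168.145.103 hadoop101
#   add_host_entry /etc/hosts 192.168.145.104 hadoop102
#   add_host_entry /etc/hosts 192.168.145.105 hadoop103
```

Running the example twice leaves /etc/hosts unchanged the second time.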

2. Configure passwordless SSH login across the cluster

Run on hadoop101.

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

ssh-copy-id 192.168.145.104

ssh-copy-id 192.168.145.105

Run on hadoop102.

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

ssh-copy-id 192.168.145.103

ssh-copy-id 192.168.145.105

Run on hadoop103.

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

ssh-copy-id 192.168.145.103

ssh-copy-id 192.168.145.104
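The three per-node command lists differ only in which peers receive the key. As a sketch, the peer list can be derived from one shared node list so the same script works on every host; `NODES` and `peer_nodes` are names assumed here for illustration.

```shell
# All cluster nodes from the planning table.
NODES="192.168.145.103 192.168.145.104 192.168.145.105"

# Print every node in NODES except the one passed as $1 (this node's own IP).
peer_nodes() {
  self="$1"
  for node in $NODES; do
    if [ "$node" != "$self" ]; then
      echo "$node"
    fi
  done
}

# Example for hadoop101 (ssh-copy-id prompts for each peer's password):
#   for peer in $(peer_nodes 192.168.145.103); do ssh-copy-id "$peer"; done
```

Afterwards, `ssh <peer-ip> hostname` should print the peer's hostname without asking for a password.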

3. Deployment plan

| Component | 192.168.145.103 | 192.168.145.104 | 192.168.145.105 |
|---|---|---|---|
| clickhouse | clickhouse-common-static-23.9.1.1854, clickhouse-client-23.9.1.1854, clickhouse-server-23.9.1.1854 | clickhouse-common-static-23.9.1.1854, clickhouse-client-23.9.1.1854, clickhouse-server-23.9.1.1854 | clickhouse-common-static-23.9.1.1854, clickhouse-client-23.9.1.1854, clickhouse-server-23.9.1.1854 |
| ckman | ckman-3.0.8 | | |
| zookeeper | zookeeper-3.8.1 | zookeeper-3.8.1 | zookeeper-3.8.1 |
| prometheus (optional) | prometheus-2.51.0 | | |
| node_exporter (optional) | node_exporter-1.5.0 | node_exporter-1.5.0 | node_exporter-1.5.0 |
| nacos (optional) | | | |

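III. Deploying ckman Dependencies

- prometheus (optional)
- node_exporter (optional)
- nacos (>1.4) (optional)
- zookeeper (>3.6.0; recommended)
- mysql (required when the persistence policy is set to mysql)
- jdk1.8+

1. Set up Prometheus

1.1 Download and extract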

mkdir -p /opt/module

wget https://github.com/prometheus/prometheus/releases/download/v2.51.0/prometheus-2.51.0.linux-amd64.tar.gz -P /tmp

tar -zxvf /tmp/prometheus-2.51.0.linux-amd64.tar.gz -C /opt/module

1.2 Configure the systemd service

Create the file /usr/lib/systemd/system/prometheus.service.

vim /usr/lib/systemd/system/prometheus.service

Add the following content.

[Unit]

Description=Prometheus

Documentation=https://prometheus.io/

After=network.target

[Service]

Type=simple

ExecStart=/opt/module/prometheus-2.51.0.linux-amd64/prometheus --config.file=/opt/module/prometheus-2.51.0.linux-amd64/prometheus.yml --web.listen-address=0.0.0.0:9090

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target
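As an alternative to editing the file in vim, the unit above can be written from a script. The sketch below assumes a hypothetical helper named `write_prometheus_unit` that takes the unit path and the Prometheus install directory; the unit content itself is the one shown above.

```shell
# write_prometheus_unit UNIT_PATH PROM_HOME
# Hypothetical helper: writes the unit shown above to UNIT_PATH, pointing at PROM_HOME.
write_prometheus_unit() {
  unit_path="$1"; prom_home="$2"
  # Unquoted heredoc: ${prom_home} is expanded, \$MAINPID stays literal for systemd
  cat > "$unit_path" <<EOF
[Unit]
Description=Prometheus
Documentation=https://prometheus.io/
After=network.target

[Service]
Type=simple
ExecStart=${prom_home}/prometheus --config.file=${prom_home}/prometheus.yml --web.listen-address=0.0.0.0:9090
ExecReload=/bin/kill -HUP \$MAINPID
KillMode=process
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Example (as root):
#   write_prometheus_unit /usr/lib/systemd/system/prometheus.service \
#     /opt/module/prometheus-2.51.0.linux-amd64
#   systemctl daemon-reload        # make systemd pick up the new unit file
#   systemctl enable prometheus    # optional: start Prometheus on every boot
```

Whichever way the file is created, systemd only sees a new or changed unit after `systemctl daemon-reload`.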

1.3 Prometheus configuration (optional; does not affect ckman's core functionality)

Edit /opt/module/prometheus-2.51.0.linux-amd64/prometheus.yml and set the scrape_configs section as follows.

scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]

  - job_name: 'node_exporter'
    scrape_interval: 10s
    static_configs:
      - targets: ['192.168.145.103:9100', '192.168.145.104:9100', '192.168.145.105:9100']

  - job_name: 'clickhouse'
    scrape_interval: 10s
    static_configs:
      - targets: ['192.168.145.103:9363', '192.168.145.104:9363', '192.168.145.105:9363']

  - job_name: 'zookeeper'
    scrape_interval: 10s
    static_configs:
      - targets: ['192.168.145.103:7070', '192.168.145.104:7070', '192.168.145.105:7070']
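The target lists in the scrape configuration repeat the same three node IPs with a different port per job (9100, 9363, 7070). A small sketch for generating such a list from one node list is shown below; `NODES` and `targets_for_port` are hypothetical names used only for illustration.

```shell
# All cluster nodes from the planning table.
NODES="192.168.145.103 192.168.145.104 192.168.145.105"

# Print a YAML flow list of 'ip:port' targets covering every node.
targets_for_port() {
  port="$1"
  list=""
  for node in $NODES; do
    # ${list:+, } expands to ", " only when list is already non-empty
    list="${list}${list:+, }'${node}:${port}'"
  done
  printf '[%s]\n' "$list"
}

# Example:
#   targets_for_port 9100
```

After editing prometheus.yml, the promtool binary shipped in the Prometheus tarball can validate it with `promtool check config prometheus.yml` before the service is restarted.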

1.4 Prometheus start/stop commands

1.4.1 Start Prometheus

systemctl start prometheus

1.4.2 Stop Prometheus

systemctl stop prometheus

1.4.3 Restart Prometheus

systemctl restart prometheus

1.4.4 Check Prometheus status

systemctl status prometheus

2. Set up node_exporter

2.1 Download and extract

mkdir -p /opt/module/

wget https://github.com/prometheus/node_exporter/releases/download/v1.5.0/node_exporter-1.5.0.linux-amd64.tar.gz -P /tmp

tar -zxvf /tmp/node_exporter-1.5.0.linux-amd64.tar.gz -C /opt/module/

2.2 Configure the systemd service

Create the file /usr/lib/systemd/system/node_exporter.service.

vim /usr/lib/systemd/system/node_exporter.service

Add the following content.

[Unit]

Description=node_exporter

Documentation=https://github.com/prometheus/node_exporter

After=network.target

[Service]

Type=simple

ExecStart=/opt/module/node_exporter-1.5.0.linux-amd64/node_exporter

Restart=on-failure

[Install]

WantedBy=multi-user.target

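2.3 Node monitoring configuration (optional; does not affect ckman's core functionality)

node_exporter collects system-level metrics from the machines that host ClickHouse nodes, so it must be installed on every machine running a ClickHouse node. It listens on port 9100 by default.

2.4 node_exporter start/stop commands

2.4.1 Start node_exporter

systemctl start node_exporter

2.4.2 Stop node_exporter

systemctl stop node_exporter

2.4.3 Restart node_exporter

systemctl restart node_exporter

2.4.4 Check node_exporter status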

systemctl status node_exporter

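3. Set up ZooKeeper

3.1 Standalone setup (can be skipped)

If you only want to try things out and do not want to build a ZooKeeper cluster, you can set up a standalone instance instead.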

wget --no-check-certificate https://archive.apache.org/dist/zookeeper/zookeeper-3.8.1/apache-zookeeper-3.8.1-bin.tar.gz -P /tmp

mkdir -p /opt/soft/zookeeper

tar -zxvf /tmp/apache-zookeeper-3.8.1-bin.tar.gz -C /opt/soft/zookeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

cp ./conf/zoo_sample.cfg ./conf/zoo.cfg

sed -i "s|^dataDir=.*|dataDir=./tmp/zookeeper|" ./conf/zoo.cfg

sed -i "s|^clientPort=.*|clientPort=12181|" ./conf/zoo.cfg

3.2 Cluster setup

3.2.1 On hadoop101

mkdir -p /opt/soft/zookeeper

wget --no-check-certificate https://archive.apache.org/dist/zookeeper/zookeeper-3.8.1/apache-zookeeper-3.8.1-bin.tar.gz -P /tmp

tar -zxvf /tmp/apache-zookeeper-3.8.1-bin.tar.gz -C /opt/soft/zookeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

cp ./conf/zoo_sample.cfg ./conf/zoo.cfg

sed -i "s|^dataDir=.*|dataDir=./tmp/zookeeper|" ./conf/zoo.cfg

sed -i "s|^clientPort=.*|clientPort=12181|" ./conf/zoo.cfg

echo >> ./conf/zoo.cfg

echo "server.1=hadoop101:12888:13888" >> ./conf/zoo.cfg

echo "server.2=hadoop102:12888:13888" >> ./conf/zoo.cfg

echo "server.3=hadoop103:12888:13888" >> ./conf/zoo.cfg

echo >> ./conf/zoo.cfg

echo 'metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider' >> ./conf/zoo.cfg

echo 'metricsProvider.httpPort=7070' >> ./conf/zoo.cfg

echo 'admin.enableServer=true' >> ./conf/zoo.cfg

echo 'admin.serverPort=18080' >> ./conf/zoo.cfg

mkdir -p ./tmp/zookeeper

echo 1 > ./tmp/zookeeper/myid

./bin/zkServer.sh restart

3.2.2 On hadoop102

mkdir -p /opt/soft/zookeeper

wget --no-check-certificate https://archive.apache.org/dist/zookeeper/zookeeper-3.8.1/apache-zookeeper-3.8.1-bin.tar.gz -P /tmp

tar -zxvf /tmp/apache-zookeeper-3.8.1-bin.tar.gz -C /opt/soft/zookeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

cp ./conf/zoo_sample.cfg ./conf/zoo.cfg

sed -i "s|^dataDir=.*|dataDir=./tmp/zookeeper|" ./conf/zoo.cfg

sed -i "s|^clientPort=.*|clientPort=12181|" ./conf/zoo.cfg

echo >> ./conf/zoo.cfg

echo "server.1=hadoop101:12888:13888" >> ./conf/zoo.cfg

echo "server.2=hadoop102:12888:13888" >> ./conf/zoo.cfg

echo "server.3=hadoop103:12888:13888" >> ./conf/zoo.cfg

echo >> ./conf/zoo.cfg

echo 'metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider' >> ./conf/zoo.cfg

echo 'metricsProvider.httpPort=7070' >> ./conf/zoo.cfg

echo 'admin.enableServer=true' >> ./conf/zoo.cfg

echo 'admin.serverPort=18080' >> ./conf/zoo.cfg

mkdir -p ./tmp/zookeeper

echo 2 > ./tmp/zookeeper/myid

./bin/zkServer.sh restart

3.2.3 On hadoop103

mkdir -p /opt/soft/zookeeper

wget --no-check-certificate https://archive.apache.org/dist/zookeeper/zookeeper-3.8.1/apache-zookeeper-3.8.1-bin.tar.gz -P /tmp

tar -zxvf /tmp/apache-zookeeper-3.8.1-bin.tar.gz -C /opt/soft/zookeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

cp ./conf/zoo_sample.cfg ./conf/zoo.cfg

sed -i "s|^dataDir=.*|dataDir=./tmp/zookeeper|" ./conf/zoo.cfg

sed -i "s|^clientPort=.*|clientPort=12181|" ./conf/zoo.cfg

echo >> ./conf/zoo.cfg

echo "server.1=hadoop101:12888:13888" >> ./conf/zoo.cfg

echo "server.2=hadoop102:12888:13888" >> ./conf/zoo.cfg

echo "server.3=hadoop103:12888:13888" >> ./conf/zoo.cfg

echo >> ./conf/zoo.cfg

echo 'metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider' >> ./conf/zoo.cfg

echo 'metricsProvider.httpPort=7070' >> ./conf/zoo.cfg

echo 'admin.enableServer=true' >> ./conf/zoo.cfg

echo 'admin.serverPort=18080' >> ./conf/zoo.cfg

mkdir -p ./tmp/zookeeper

echo 3 > ./tmp/zookeeper/myid

./bin/zkServer.sh restart
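The three node sections above are identical except for the number written to myid, which must match the N of that host's server.N line. As a sketch, both can be derived from a single host list; `ZK_HOSTS`, `zk_myid_for`, and `zk_server_lines` are hypothetical names, and the example assumes each machine's `hostname` output is exactly hadoop101, hadoop102, or hadoop103.

```shell
# Quorum members in server.N order; a host's position in this list is its myid.
ZK_HOSTS="hadoop101 hadoop102 hadoop103"

# Print the myid (1-based position in ZK_HOSTS) for the given hostname.
# Returns non-zero if the hostname is not a quorum member.
zk_myid_for() {
  wanted="$1"
  id=0
  for host in $ZK_HOSTS; do
    id=$((id + 1))
    if [ "$host" = "$wanted" ]; then
      echo "$id"
      return 0
    fi
  done
  return 1
}

# Print the server.N lines for zoo.cfg, using the 12888/13888 ports from this guide.
zk_server_lines() {
  id=0
  for host in $ZK_HOSTS; do
    id=$((id + 1))
    echo "server.${id}=${host}:12888:13888"
  done
}

# Example, run from the ZooKeeper install directory on any node:
#   zk_server_lines >> ./conf/zoo.cfg
#   mkdir -p ./tmp/zookeeper
#   zk_myid_for "$(hostname)" > ./tmp/zookeeper/myid
```

Because the ID comes from the host list, the same script can be copied to all three nodes without per-node edits.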

3.3 Start ZooKeeper

3.3.1 Standalone start

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh start

Check whether it started successfully.

./bin/zkServer.sh status

3.3.2 Cluster start

3.3.2.1 On hadoop101

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh start

3.3.2.2 On hadoop102

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh start

3.3.2.3 On hadoop103

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh start

3.4 ZooKeeper commands

3.4.1 Start ZooKeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh start

3.4.2 Stop ZooKeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh stop

3.4.3 Restart ZooKeeper

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh restart

3.4.4 Check ZooKeeper status

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkServer.sh status

3.4.5 Open the ZooKeeper client

cd /opt/soft/zookeeper/apache-zookeeper-3.8.1-bin

./bin/zkCli.sh -server localhost:12181

3.5 zookeeper监控配置(可选,不影响ckman核心功能)

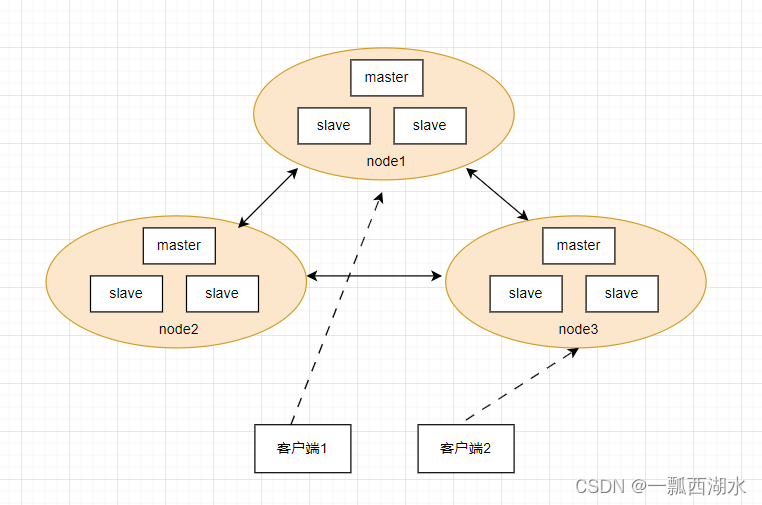

zookeeper集群是clickhouse实现分布式集群的重要组件。由于clickhouse数据量极大,避免给zookeeper带来太大的压力,最好给clickhouse单独部署一套集群,不要和其他业务公用。

修改zoo.cfg配置文件,添加如下配置。

metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider

metricsProvider.httpPort=7000 #暴露给promethues的监控端口

admin.enableServer=true

admin.serverPort=8080 #暴露给四字命令如mntr等的监控端口,3.5.0以上版本支持

配置之后访问http://localhost:8080/commands/mntr,检查是否能正常访问。

4. mysql搭建

- mysql5.7.44自动化安装教程

- mysql5.7.37自动化安装教程

5. 安装jdk

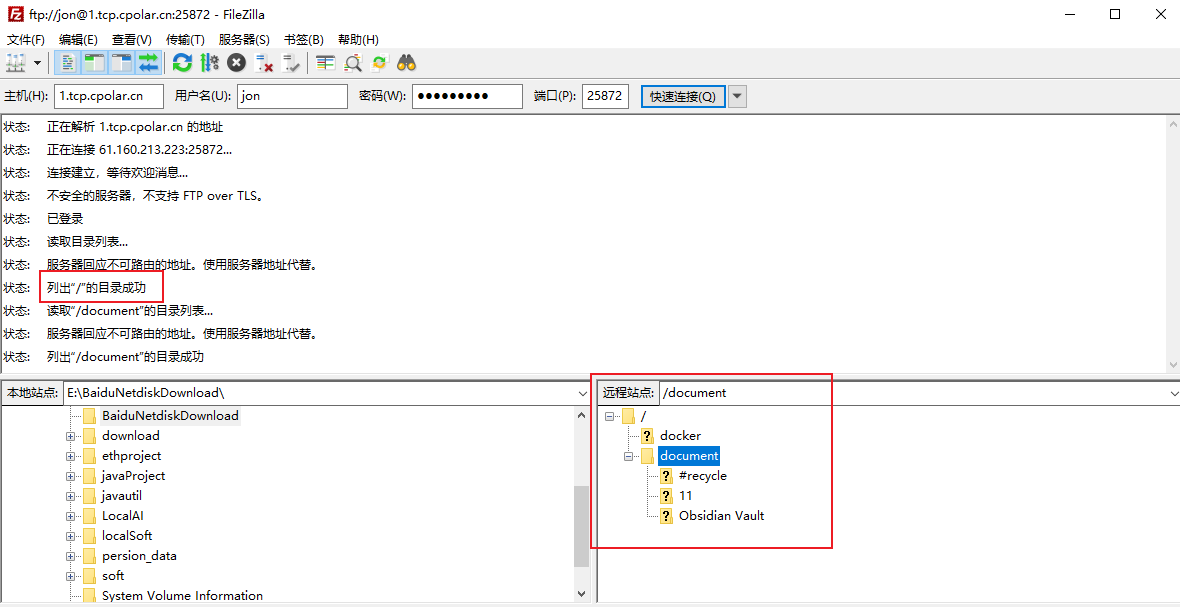

hadoop101、hadoop102、hadoop103节点都要执行。

下载地址:https://www.oracle.com/java/technologies/downloads/#java8

下载后上传到/tmp目录下。

然后执行下面命令,用于创建目录、解压,并设置系统级环境变量。

mkdir -p /opt/module

tar -zxvf /tmp/jdk-8u391-linux-x64.tar.gz -C /opt/module/

echo >> /etc/profile

echo '#JAVA_HOME' >> /etc/profile

echo "export JAVA_HOME=/opt/module/jdk1.8.0_391" >> /etc/profile

echo 'export PATH=$PATH:$JAVA_HOME/bin' >> /etc/profile

source /etc/profile
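The dependency list asks for jdk1.8+, and Java version strings come in two schemes (1.8.0_391 for Java 8, 17.0.2 for newer releases). The sketch below extracts the major version from either form so the requirement can be checked after installation; `java_major` is a hypothetical helper name.

```shell
# Print the Java major version for a version string such as "1.8.0_391" or "17.0.2".
java_major() {
  version="$1"
  case "$version" in
    1.*) version="${version#1.}" ;;   # legacy scheme: 1.8.0_391 -> 8.0_391
  esac
  # Drop everything from the first ".", "_" or "-" onward, leaving the major number
  echo "${version%%[._-]*}"
}

# Example (java -version prints to stderr, hence 2>&1):
#   v=$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')
#   [ "$(java_major "$v")" -ge 8 ] && echo "JDK version OK: $v"
```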

四、ckman部署

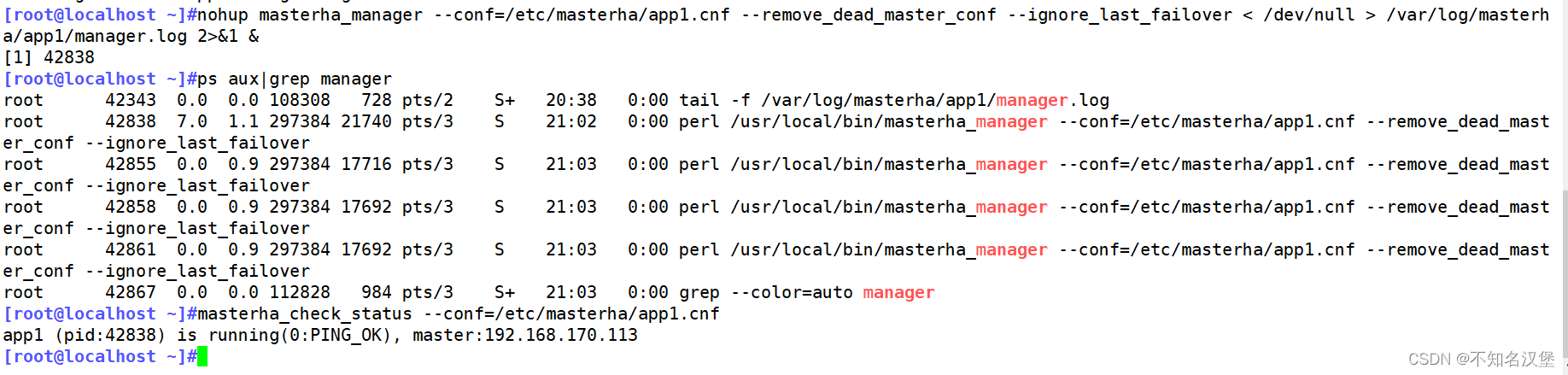

点击可查看ckman官方说明文档。

1. 下载和安装

1.1 rpm包方式

wget https://github.com/housepower/ckman/releases/download/v3.0.8/ckman-3.0.8.x86_64.rpm -P /tmp

rpm -ivh /tmp/ckman-3.0.8.x86_64.rpm

# yum -y install /tmp/ckman-3.0.8.x86_64.rpm

1.2 Tarball (can be skipped)

1.2.1 Download and extract

wget https://github.com/housepower/ckman/releases/download/v3.0.8/ckman-3.0.8-240321.Linux.amd64.tar.gz -P /tmp

mkdir -p /opt/software

tar -zxvf /tmp/ckman-3.0.8-240321.Linux.amd64.tar.gz -C /opt/software

1.2.2 Start

cd /opt/software/ckman/bin

./start

2. Starting and stopping

2.1 Start

systemctl start ckman

2.2 Restart

systemctl restart ckman

2.3 Stop

systemctl stop ckman

2.4 Check status

systemctl status ckman

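3. Browser access

Open http://localhost:8808 in a browser to reach the ckman UI.

The default ckman login is user ckman with password Ckman123456!.

After logging in, use the language selector at the top right to choose Chinese; click Docs to read ckman's configuration reference and introduction.

V. Deploying the ClickHouse Cluster

1. Download the RPM packages

On the host where ckman is installed, create the /etc/ckman/package/clickhouse directory and place the ClickHouse RPM packages in it.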

mkdir -p /etc/ckman/package/clickhouse

chmod a+w /etc/ckman/package/clickhouse

wget https://packages.clickhouse.com/rpm/stable/clickhouse-client-23.9.1.1854.x86_64.rpm -P /etc/ckman/package/clickhouse

wget https://packages.clickhouse.com/rpm/stable/clickhouse-common-static-23.9.1.1854.x86_64.rpm -P /etc/ckman/package/clickhouse

wget https://packages.clickhouse.com/rpm/stable/clickhouse-server-23.9.1.1854.x86_64.rpm -P /etc/ckman/package/clickhouse

wget https://packages.clickhouse.com/rpm/stable/clickhouse-common-static-dbg-23.9.1.1854.x86_64.rpm -P /etc/ckman/package/clickhouse
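The four wget commands above share the same repository URL and version and differ only in the package name. A sketch that builds the URLs from those parts makes it easier to switch versions later; `CK_VERSION`, `CK_REPO`, and `ck_rpm_urls` are names introduced here for illustration.

```shell
# Version and repository taken from the wget commands above.
CK_VERSION="23.9.1.1854"
CK_REPO="https://packages.clickhouse.com/rpm/stable"

# Print one download URL per ClickHouse package for CK_VERSION.
ck_rpm_urls() {
  for pkg in clickhouse-client clickhouse-common-static \
             clickhouse-server clickhouse-common-static-dbg; do
    echo "${CK_REPO}/${pkg}-${CK_VERSION}.x86_64.rpm"
  done
}

# Example:
#   ck_rpm_urls | while read -r url; do
#     wget "$url" -P /etc/ckman/package/clickhouse
#   done
```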

Create the data storage path on each of hadoop101, hadoop102, and hadoop103.

mkdir -p /opt/clickhouse/data/

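2. Deploy the ClickHouse cluster with ckman

2.1 Create a cluster

Open the ckman UI and click Create Cluster.

2.2 Fill in the cluster configuration

There are two authentication methods: password and public key. For password authentication, enter the password of the root user (or a user with sudo privileges) used for the installation. For public-key authentication, copy the private key file id_rsa (the counterpart of the public key distributed in the passwordless-login step) to /etc/ckman/conf/ and make it readable.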

cp /root/.ssh/id_rsa /etc/ckman/conf/

chmod a+r /etc/ckman/conf/id_rsa

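After filling in the cluster configuration, wait for the installation to finish.

2.3 ClickHouse monitoring configuration (optional; does not affect ckman's core functionality)

A ClickHouse cluster deployed by ckman listens on port 9363 by default to expose metrics to Prometheus, so no extra configuration is needed.

If the cluster was imported, make sure /etc/clickhouse-server/config.xml contains the following configuration: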

<prometheus>

<endpoint>/metrics</endpoint>

<port>9363</port>

<metrics>true</metrics>

<events>true</events>

<asynchronous_metrics>true</asynchronous_metrics>

<status_info>true</status_info>

</prometheus>
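For an imported cluster, a quick text check can tell whether config.xml declares the metrics port inside a prometheus section. The sketch below is a plain sed/grep scan, not an XML parser, so it also matches a section that is commented out; `has_prometheus_port` is a hypothetical helper name.

```shell
# Return 0 if CONFIG has a <prometheus> ... </prometheus> block containing <port>PORT</port>.
# Text-based check only: it does not parse XML and does not skip XML comments.
has_prometheus_port() {
  config="$1"; port="$2"
  # Print only the lines between the opening and closing prometheus tags,
  # then look for the port element among them
  sed -n '/<prometheus>/,/<\/prometheus>/p' "$config" | grep -q "<port>${port}</port>"
}

# Example:
#   if has_prometheus_port /etc/clickhouse-server/config.xml 9363; then
#     echo "prometheus endpoint configured"
#   fi
```

If the check passes but Prometheus still shows the target as down, open the file and confirm the section is not wrapped in an XML comment.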

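Summary

With ckman you can manage ClickHouse clusters conveniently, operating from the browser and monitoring the state of each node. This article covered RPM-based installation, the information to fill in when creating a ClickHouse cluster in ckman, and how to add monitoring for ZooKeeper, Prometheus, and other components when needed. These steps should help you quickly build a reliable, efficient, and easy-to-manage ClickHouse analytics platform.

I hope this tutorial helps. If you have any questions, feel free to leave a comment. Thanks for reading!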