Introduction

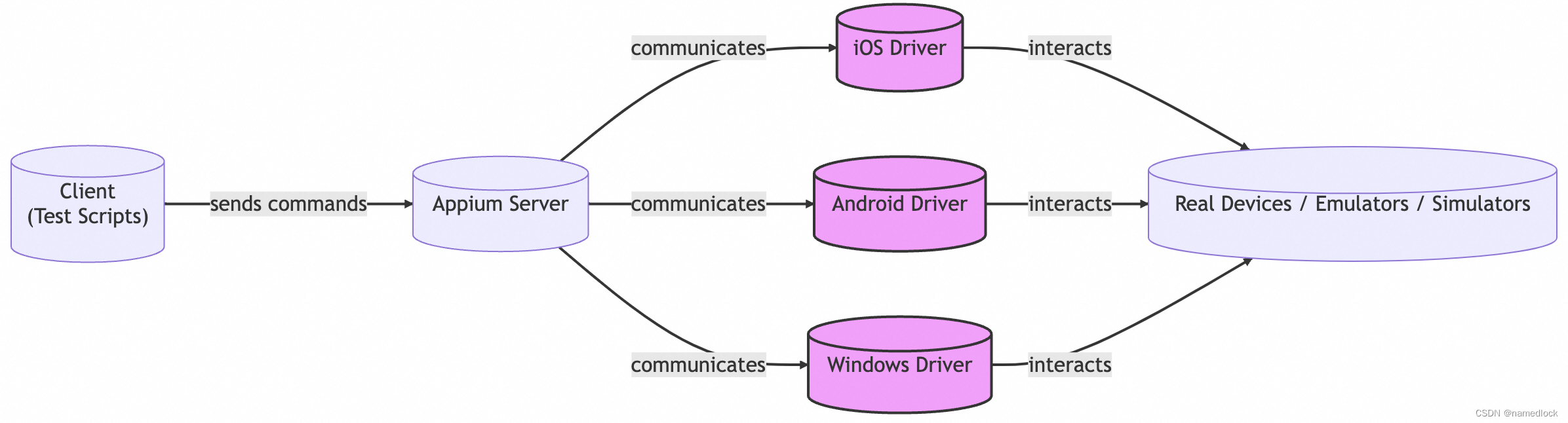

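WebRTC (Web Real-Time Communications) is a real-time communication technology that lets web applications and sites establish peer-to-peer connections between browsers without an intermediary, and use them to transmit video streams, audio streams, or arbitrary data. The standards that make up WebRTC make it possible to build peer-to-peer data sharing and teleconferencing without requiring users to install any plugins or third-party software.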

See the MDN WebRTC API guide for an introduction to WebRTC.

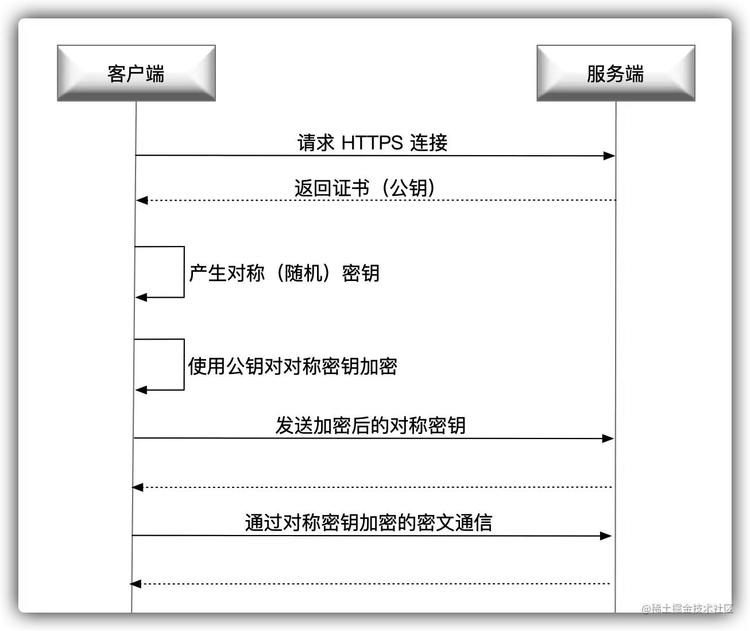

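WebSocket is used as the signaling service while the WebRTC connection is being established. It relays two kinds of data between the peers: SDP and ICE candidates. SDP messages come in two types, Offer and Answer.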
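As a rough illustration of what such a signaling service does, the sketch below relays each JSON message to the user named in its `toUser` field. It is not the demo's Java server: `createRelay`, `join`, `leave`, and `route` are hypothetical names, and replying with a `call_back` message when the target is offline is an assumption based on how the pages below treat a `call_back` whose `msg` is neither "1" nor "0".

```javascript
// Minimal in-memory signaling relay (a sketch, not the demo's server).
// Each connected user is represented by a `send(rawJson)` function.
function createRelay() {
  const sessions = new Map(); // username -> send function

  return {
    // Register a user when their WebSocket connects.
    join(user, send) {
      sessions.set(user, send);
    },

    // Forget a user when their WebSocket closes.
    leave(user) {
      sessions.delete(user);
    },

    // Forward one raw JSON message to its `toUser`.
    // Returns true if the message was delivered, false otherwise.
    route(raw) {
      const message = JSON.parse(raw);
      const target = sessions.get(message.toUser);

      if (!target) {
        // Assumed behavior: tell the sender that the target is unavailable.
        const sender = sessions.get(message.fromUser);
        if (sender) {
          sender(JSON.stringify({
            type: 'call_back',
            fromUser: message.toUser,
            toUser: message.fromUser,
            msg: message.toUser + ' is offline',
          }));
        }
        return false;
      }

      // The relay never inspects the SDP or ICE payload; it only forwards it.
      target(raw);
      return true;
    },
  };
}
```

The relay stays payload-agnostic on purpose: `offer`, `answer`, and `_ice` messages all travel the same way, and only the two browsers interpret them.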

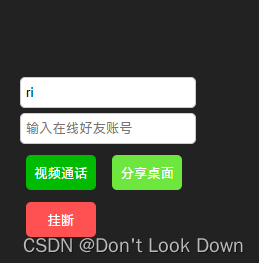

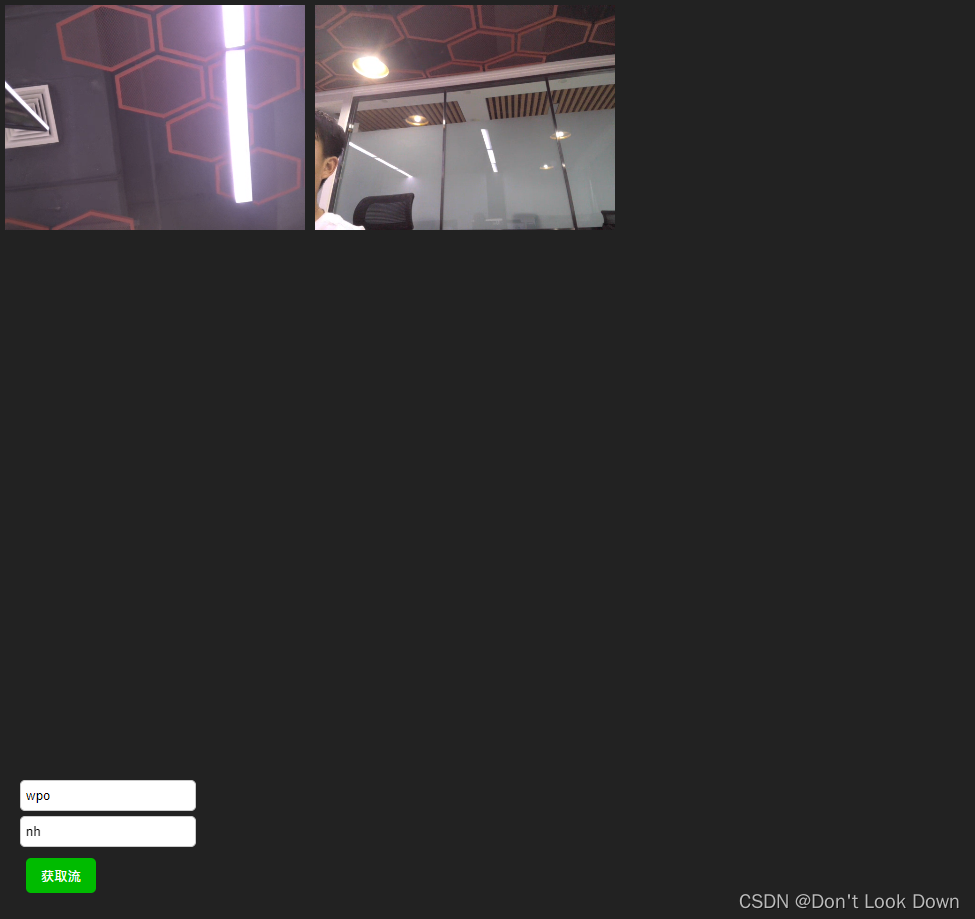

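The demo implements one-to-one video calls, desktop sharing, a many-to-many monitoring page, and saving the recorded video locally after hanging up.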

Results:

LAN test, one-to-one video, 40-80 ms latency:

Many-to-many monitoring page:

Known issues

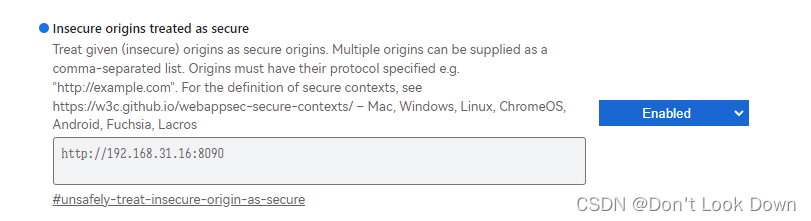

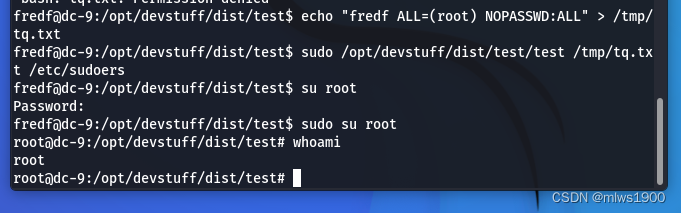

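1. Over plain HTTP, the browser blocks access to the camera and microphone for security reasons.

Either relax the policy in chrome://flags/ (for example with the `unsafely-treat-insecure-origin-as-secure` flag) or set up a local HTTPS environment.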
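A page can detect this situation before it tries to open the camera. The following is a minimal sketch: `checkMediaSupport` is a hypothetical helper, not part of the demo, and it takes the `window` object as a parameter so that it can also be exercised outside a browser.

```javascript
// Report whether camera/microphone capture can be attempted at all.
// `env` is expected to look like `window` (isSecureContext, navigator).
function checkMediaSupport(env) {
  // Browsers expose media capture only in secure contexts (HTTPS or localhost).
  if (!env.isSecureContext) {
    return { ok: false, reason: 'insecure-context' };
  }

  const devices = env.navigator && env.navigator.mediaDevices;
  if (!devices || typeof devices.getUserMedia !== 'function') {
    return { ok: false, reason: 'no-media-devices' };
  }

  return { ok: true, reason: null };
}

// Browser usage sketch:
//   const support = checkMediaSupport(window);
//   if (!support.ok) alert('Camera unavailable: ' + support.reason);
```

Checking up front gives the user a clear message instead of a `TypeError` from `navigator.mediaDevices` being undefined.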

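2. With the firewall enabled, the WebRTC connection fails.

In Windows Firewall, go to Advanced settings > Inbound Rules > New Rule > Port and choose UDP.

Allow UDP ports 32355-65535.

For quick testing, you can also simply turn the firewall off.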

3. Recorded WebM videos have no duration metadata, so players show no timeline. The fix-webm-duration JavaScript library solves this.
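The library listed later in this post accepts either a callback or, when the third argument is an options object, returns a Promise. The sketch below wraps the Promise form; `fixRecordingDuration` is a hypothetical helper, and the fixer is passed in as a parameter so that the function does not depend on the global `ysFixWebmDuration`.

```javascript
// Compute the recording length and hand the blob to a duration fixer.
// `fixer` is expected to behave like ysFixWebmDuration(blob, durationMs, options).
function fixRecordingDuration(fixer, blob, startTime, stopTime) {
  // Duration in milliseconds; clamp to zero in case the clock moved backwards.
  const durationMs = Math.max(0, stopTime - startTime);

  // Passing an options object instead of a callback makes the library
  // return a Promise; `logger: false` silences its console output.
  return fixer(blob, durationMs, { logger: false });
}

// Browser usage sketch (inside an async onstop handler):
//   const fixed = await fixRecordingDuration(ysFixWebmDuration, blob, startTime, Date.now());
```

Measuring the duration with wall-clock timestamps is an approximation: it includes any delay between starting the recorder and the first captured frame.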

The demo code follows.

One-to-one call page

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<title>WebRTC + WebSocket</title>

<meta name="viewport" content="width=device-width,initial-scale=1.0,user-scalable=no">

<style>

html,

body {

margin: 0;

padding: 0;

}

#main {

position: absolute;

width: 100%;

height: 100%;

}

#localVideo {

position: absolute;

background: #757474;

top: 10px;

right: 10px;

width: 200px;

/* height: 150px; */

z-index: 2;

}

#remoteVideo {

position: absolute;

top: 0px;

left: 0px;

width: 100%;

height: 100%;

background: #222;

}

#buttons {

z-index: 3;

bottom: 20px;

left: 20px;

position: absolute;

}

input {

border: 1px solid #ccc;

padding: 7px 0px;

border-radius: 5px;

padding-left: 5px;

margin-bottom: 5px;

}

input:focus {

border-color: #66afe9;

outline: 0;

-webkit-box-shadow: inset 0 1px 1px rgba(0, 0, 0, .075), 0 0 8px rgba(102, 175, 233, .6);

box-shadow: inset 0 1px 1px rgba(0, 0, 0, .075), 0 0 8px rgba(102, 175, 233, .6)

}

#call {

width: 70px;

height: 35px;

background-color: #00BB00;

border: none;

color: white;

border-radius: 5px;

}

#hangup {

width: 70px;

height: 35px;

background-color: #FF5151;

border: none;

color: white;

border-radius: 5px;

}

button {

width: 70px;

height: 35px;

margin: 6px;

background-color: #6de73d;

border: none;

color: white;

border-radius: 5px;

}

</style>

</head>

<body>

<div id="main">

<video id="remoteVideo" playsinline autoplay></video>

<video id="localVideo" playsinline autoplay muted></video>

<div id="buttons">

<input id="myid" readonly /><br />

<input id="toUser" placeholder="Enter an online friend's ID" /><br />

<button id="call" onclick="call(true)">Video call</button>

<button id="deskcall" onclick="call(false)">Share desktop</button><br />

<button id="hangup">Hang up</button>

</div>

</div>

</body>

<!--<script src="/js/adapter-2021.js" type="text/javascript"></script>-->

<script src="./fix-webm-duration.js" type="text/javascript"></script>

<script type="text/javascript">

function generateRandomLetters(length) {

let result = '';

const characters = 'abcdefghijklmnopqrstuvwxyz'; // alphabet

for (let i = 0; i < length; i++) {

const randomIndex = Math.floor(Math.random() * characters.length);

const randomLetter = characters[randomIndex];

result += randomLetter;

}

return result;

}

let username = generateRandomLetters(2);

document.getElementById('myid').value = username;

let localVideo = document.getElementById('localVideo');

let remoteVideo = document.getElementById('remoteVideo');

let websocket = null;

let peer = {};

let candidate = null;

let stream = null;

/* WebSocket */

function WebSocketInit() {

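// Check whether the current browser supports WebSocket

if ('WebSocket' in window) {

websocket = new WebSocket("ws://192.168.31.14:8181/webrtc/" + username);

} else {

alert("This browser does not support WebSocket!");

// Stop here: websocket is still null, so the handlers below cannot be attached.

return;

}

// Called when a connection error occurs

websocket.onerror = function (e) {

alert("WebSocket connection error!");

};

// Called when the connection is closed

websocket.onclose = function () {

console.error("WebSocket connection closed");

};

// Called when the connection is established

websocket.onopen = function () {

console.log("WebSocket connection established");

};

// Called when a message is received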

websocket.onmessage = async function (event) {

let { type, fromUser, toUser, msg, sdp, iceCandidate } = JSON.parse(event.data.replace(/\n/g, "\\n").replace(/\r/g, "\\r"));

console.log(type, fromUser, toUser);

if (type === 'hangup') {

console.log(msg);

document.getElementById('hangup').click();

return;

}

if (type === 'call_start') {

document.getElementById('hangup').click();

let msg = "0"

if (confirm(fromUser + " is calling. Accept the video call?") == true) {

document.getElementById('toUser').value = fromUser;

WebRTCInit(fromUser);

msg = "1"

document.getElementById('toUser').style.visibility = 'hidden';

document.getElementById('myid').style.visibility = 'hidden';

}

websocket.send(JSON.stringify({

type: "call_back",

toUser: fromUser,

fromUser: username,

msg: msg

}));

return;

}

if (type === 'call_back') {

if (msg === "1") {

console.log(document.getElementById('toUser').value + " accepted the video call");

await getVideo(callType);

console.log(peer, fromUser)

stream.getTracks().forEach(track => {

peer[fromUser].addTrack(track, stream);

});

let offer = await peer[fromUser].createOffer();

await peer[fromUser].setLocalDescription(offer);

let newOffer = offer.toJSON();

newOffer["fromUser"] = username;

newOffer["toUser"] = document.getElementById('toUser').value;

websocket.send(JSON.stringify(newOffer));

} else if (msg === "0") {

alert(document.getElementById('toUser').value + " declined the video call");

document.getElementById('hangup').click();

} else {

alert(msg);

document.getElementById('hangup').click();

}

return;

}

if (type === 'offer') {

await getVideo(callType);

console.log(peer, fromUser, stream)

stream.getTracks().forEach(track => {

peer[fromUser].addTrack(track, stream);

});

await peer[fromUser].setRemoteDescription(new RTCSessionDescription({ type, sdp }));

let answer = await peer[fromUser].createAnswer();

let newAnswer = answer.toJSON();

newAnswer["fromUser"] = username;

newAnswer["toUser"] = fromUser;

websocket.send(JSON.stringify(newAnswer));

await peer[fromUser].setLocalDescription(answer);

return;

}

if (type === 'answer') {

await peer[fromUser].setRemoteDescription(new RTCSessionDescription({ type, sdp }));

return;

}

if (type === '_ice') {

await peer[fromUser].addIceCandidate(iceCandidate);

return;

}

if (type === 'getStream') {

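WebRTCInit(fromUser);

// stream is null until a call or capture has started, so make sure it exists before adding tracks

await getVideo(callType);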

stream.getTracks().forEach(track => {

peer[fromUser].addTrack(track, stream);

});

let offer = await peer[fromUser].createOffer();

await peer[fromUser].setLocalDescription(offer);

let newOffer = offer.toJSON();

newOffer["fromUser"] = username;

newOffer["toUser"] = fromUser;

websocket.send(JSON.stringify(newOffer));

}

}

}

async function getVideo(callType) {

if (stream == null) {

if (callType) {

// Create the local video stream; the offer is sent once it is ready

stream = await navigator.mediaDevices.getUserMedia({

// video: {

// // width: 2560, height: 1440,

// // width: 1920, height: 1080,

// width: 1280, height: 720,

// // width: 640, height: 480,

// // Force the rear camera

// // facingMode: { exact: "environment" },

// // Front camera, if available

// facingMode: "user",

// // Use a low frame rate when bandwidth is limited

// frameRate: { ideal: 15, max: 25 }

// },

video: true,

audio: true

})

} else {

stream = await navigator.mediaDevices.getDisplayMedia({ video: true })

}

startRecorder(stream);

localVideo.srcObject = stream;

}

}

/* WebRTC */

function WebRTCInit(userId) {

if (peer[userId]) {

peer[userId].close();

}

const p = new RTCPeerConnection();

//ice

p.onicecandidate = function (e) {

if (e.candidate) {

websocket.send(JSON.stringify({

type: '_ice',

toUser: userId,

fromUser: username,

iceCandidate: e.candidate

}));

}

};

//track

p.ontrack = function (e) {

if (e && e.streams) {

remoteVideo.srcObject = e.streams[0];

}

};

peer[userId] = p;

console.log(peer)

}

//

let callType = true;

function call(ct) {

let toUser = document.getElementById('toUser').value;

if (!toUser) {

alert("Enter a friend's ID before starting a video call!");

return;

}

callType = ct;

document.getElementById('toUser').style.visibility = 'hidden';

document.getElementById('myid').style.visibility = 'hidden';

if (!peer[toUser]) {

WebRTCInit(toUser);

}

websocket.send(JSON.stringify({

type: "call_start",

fromUser: username,

toUser: toUser,

}));

}

/* Button events */

function ButtonFunInit() {

// Hang up

document.getElementById('hangup').onclick = function (e) {

document.getElementById('toUser').style.visibility = 'unset';

document.getElementById('myid').style.visibility = 'unset';

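if (stream) {

stream.getTracks().forEach(track => {

track.stop();

});

stream = null;

}

// Stop recording if a recorder is still active

if (mediaRecorder && mediaRecorder.state !== 'inactive') {

mediaRecorder.stop();

}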

if (remoteVideo.srcObject) {

// Notify the other side when hanging up

websocket.send(JSON.stringify({

type: "hangup",

fromUser: username,

toUser: document.getElementById('toUser').value,

}));

}

Object.values(peer).forEach(peer => peer.close())

peer = {};

localVideo.srcObject = null;

remoteVideo.srcObject = null;

}

}

WebSocketInit();

ButtonFunInit();

// Record video

let mediaRecorder;

let recordedBlobs = [];

let startTime = 0;

function startRecorder(stream) {

mediaRecorder = new MediaRecorder(stream);

// Fired when recording starts

mediaRecorder.onstart = () => {

recordedBlobs = [];

};

// Fired while recording, whenever data becomes available

mediaRecorder.ondataavailable = event => {

if (event.data && event.data.size > 0) {

recordedBlobs.push(event.data);

}

};

// Fired when recording stops

mediaRecorder.onstop = () => {

if (recordedBlobs.length === 0) {

return;

}

console.log(recordedBlobs[0].type);

const blob = new Blob(recordedBlobs, { type: 'video/webm' });

ysFixWebmDuration(blob, Date.now() - startTime, function (fixedBlob) {

const url = window.URL.createObjectURL(fixedBlob);

const a = document.createElement('a');

const filename = 'recorded-video.webm';

a.style.display = 'none';

a.href = url;

a.download = filename;

document.body.appendChild(a);

a.click();

window.URL.revokeObjectURL(url);

});

};

mediaRecorder.start();

startTime = Date.now();

}

</script>

</html>

fix-webm-duration.js

(function (name, definition) {

if (typeof define === "function" && define.amd) {

// RequireJS / AMD

define(definition);

} else if (typeof module !== "undefined" && module.exports) {

// CommonJS / Node.js

module.exports = definition();

} else {

// Direct include

window.ysFixWebmDuration = definition();

}

})("fix-webm-duration", function () {

/*

* This is the list of possible WEBM file sections by their IDs.

* Possible types: Container, Binary, Uint, Int, String, Float, Date

*/

var sections = {

0xa45dfa3: { name: "EBML", type: "Container" },

0x286: { name: "EBMLVersion", type: "Uint" },

0x2f7: { name: "EBMLReadVersion", type: "Uint" },

0x2f2: { name: "EBMLMaxIDLength", type: "Uint" },

0x2f3: { name: "EBMLMaxSizeLength", type: "Uint" },

0x282: { name: "DocType", type: "String" },

0x287: { name: "DocTypeVersion", type: "Uint" },

0x285: { name: "DocTypeReadVersion", type: "Uint" },

0x6c: { name: "Void", type: "Binary" },

0x3f: { name: "CRC-32", type: "Binary" },

0xb538667: { name: "SignatureSlot", type: "Container" },

0x3e8a: { name: "SignatureAlgo", type: "Uint" },

0x3e9a: { name: "SignatureHash", type: "Uint" },

0x3ea5: { name: "SignaturePublicKey", type: "Binary" },

0x3eb5: { name: "Signature", type: "Binary" },

0x3e5b: { name: "SignatureElements", type: "Container" },

0x3e7b: { name: "SignatureElementList", type: "Container" },

0x2532: { name: "SignedElement", type: "Binary" },

0x8538067: { name: "Segment", type: "Container" },

0x14d9b74: { name: "SeekHead", type: "Container" },

0xdbb: { name: "Seek", type: "Container" },

0x13ab: { name: "SeekID", type: "Binary" },

0x13ac: { name: "SeekPosition", type: "Uint" },

0x549a966: { name: "Info", type: "Container" },

0x33a4: { name: "SegmentUID", type: "Binary" },

0x3384: { name: "SegmentFilename", type: "String" },

0x1cb923: { name: "PrevUID", type: "Binary" },

0x1c83ab: { name: "PrevFilename", type: "String" },

0x1eb923: { name: "NextUID", type: "Binary" },

0x1e83bb: { name: "NextFilename", type: "String" },

0x444: { name: "SegmentFamily", type: "Binary" },

0x2924: { name: "ChapterTranslate", type: "Container" },

0x29fc: { name: "ChapterTranslateEditionUID", type: "Uint" },

0x29bf: { name: "ChapterTranslateCodec", type: "Uint" },

0x29a5: { name: "ChapterTranslateID", type: "Binary" },

0xad7b1: { name: "TimecodeScale", type: "Uint" },

0x489: { name: "Duration", type: "Float" },

0x461: { name: "DateUTC", type: "Date" },

0x3ba9: { name: "Title", type: "String" },

0xd80: { name: "MuxingApp", type: "String" },

0x1741: { name: "WritingApp", type: "String" },

// 0xf43b675: { name: 'Cluster', type: 'Container' },

0x67: { name: "Timecode", type: "Uint" },

0x1854: { name: "SilentTracks", type: "Container" },

0x18d7: { name: "SilentTrackNumber", type: "Uint" },

0x27: { name: "Position", type: "Uint" },

0x2b: { name: "PrevSize", type: "Uint" },

0x23: { name: "SimpleBlock", type: "Binary" },

0x20: { name: "BlockGroup", type: "Container" },

0x21: { name: "Block", type: "Binary" },

0x22: { name: "BlockVirtual", type: "Binary" },

0x35a1: { name: "BlockAdditions", type: "Container" },

0x26: { name: "BlockMore", type: "Container" },

0x6e: { name: "BlockAddID", type: "Uint" },

0x25: { name: "BlockAdditional", type: "Binary" },

0x1b: { name: "BlockDuration", type: "Uint" },

0x7a: { name: "ReferencePriority", type: "Uint" },

0x7b: { name: "ReferenceBlock", type: "Int" },

0x7d: { name: "ReferenceVirtual", type: "Int" },

0x24: { name: "CodecState", type: "Binary" },

0x35a2: { name: "DiscardPadding", type: "Int" },

0xe: { name: "Slices", type: "Container" },

0x68: { name: "TimeSlice", type: "Container" },

0x4c: { name: "LaceNumber", type: "Uint" },

0x4d: { name: "FrameNumber", type: "Uint" },

0x4b: { name: "BlockAdditionID", type: "Uint" },

0x4e: { name: "Delay", type: "Uint" },

0x4f: { name: "SliceDuration", type: "Uint" },

0x48: { name: "ReferenceFrame", type: "Container" },

0x49: { name: "ReferenceOffset", type: "Uint" },

0x4a: { name: "ReferenceTimeCode", type: "Uint" },

0x2f: { name: "EncryptedBlock", type: "Binary" },

0x654ae6b: { name: "Tracks", type: "Container" },

0x2e: { name: "TrackEntry", type: "Container" },

0x57: { name: "TrackNumber", type: "Uint" },

0x33c5: { name: "TrackUID", type: "Uint" },

0x3: { name: "TrackType", type: "Uint" },

0x39: { name: "FlagEnabled", type: "Uint" },

0x8: { name: "FlagDefault", type: "Uint" },

0x15aa: { name: "FlagForced", type: "Uint" },

0x1c: { name: "FlagLacing", type: "Uint" },

0x2de7: { name: "MinCache", type: "Uint" },

0x2df8: { name: "MaxCache", type: "Uint" },

0x3e383: { name: "DefaultDuration", type: "Uint" },

0x34e7a: { name: "DefaultDecodedFieldDuration", type: "Uint" },

0x3314f: { name: "TrackTimecodeScale", type: "Float" },

0x137f: { name: "TrackOffset", type: "Int" },

0x15ee: { name: "MaxBlockAdditionID", type: "Uint" },

0x136e: { name: "Name", type: "String" },

0x2b59c: { name: "Language", type: "String" },

0x6: { name: "CodecID", type: "String" },

0x23a2: { name: "CodecPrivate", type: "Binary" },

0x58688: { name: "CodecName", type: "String" },

0x3446: { name: "AttachmentLink", type: "Uint" },

0x1a9697: { name: "CodecSettings", type: "String" },

0x1b4040: { name: "CodecInfoURL", type: "String" },

0x6b240: { name: "CodecDownloadURL", type: "String" },

0x2a: { name: "CodecDecodeAll", type: "Uint" },

0x2fab: { name: "TrackOverlay", type: "Uint" },

0x16aa: { name: "CodecDelay", type: "Uint" },

0x16bb: { name: "SeekPreRoll", type: "Uint" },

0x2624: { name: "TrackTranslate", type: "Container" },

0x26fc: { name: "TrackTranslateEditionUID", type: "Uint" },

0x26bf: { name: "TrackTranslateCodec", type: "Uint" },

0x26a5: { name: "TrackTranslateTrackID", type: "Binary" },

0x60: { name: "Video", type: "Container" },

0x1a: { name: "FlagInterlaced", type: "Uint" },

0x13b8: { name: "StereoMode", type: "Uint" },

0x13c0: { name: "AlphaMode", type: "Uint" },

0x13b9: { name: "OldStereoMode", type: "Uint" },

0x30: { name: "PixelWidth", type: "Uint" },

0x3a: { name: "PixelHeight", type: "Uint" },

0x14aa: { name: "PixelCropBottom", type: "Uint" },

0x14bb: { name: "PixelCropTop", type: "Uint" },

0x14cc: { name: "PixelCropLeft", type: "Uint" },

0x14dd: { name: "PixelCropRight", type: "Uint" },

0x14b0: { name: "DisplayWidth", type: "Uint" },

0x14ba: { name: "DisplayHeight", type: "Uint" },

0x14b2: { name: "DisplayUnit", type: "Uint" },

0x14b3: { name: "AspectRatioType", type: "Uint" },

0xeb524: { name: "ColourSpace", type: "Binary" },

0xfb523: { name: "GammaValue", type: "Float" },

0x383e3: { name: "FrameRate", type: "Float" },

0x61: { name: "Audio", type: "Container" },

0x35: { name: "SamplingFrequency", type: "Float" },

0x38b5: { name: "OutputSamplingFrequency", type: "Float" },

0x1f: { name: "Channels", type: "Uint" },

0x3d7b: { name: "ChannelPositions", type: "Binary" },

0x2264: { name: "BitDepth", type: "Uint" },

0x62: { name: "TrackOperation", type: "Container" },

0x63: { name: "TrackCombinePlanes", type: "Container" },

0x64: { name: "TrackPlane", type: "Container" },

0x65: { name: "TrackPlaneUID", type: "Uint" },

0x66: { name: "TrackPlaneType", type: "Uint" },

0x69: { name: "TrackJoinBlocks", type: "Container" },

0x6d: { name: "TrackJoinUID", type: "Uint" },

0x40: { name: "TrickTrackUID", type: "Uint" },

0x41: { name: "TrickTrackSegmentUID", type: "Binary" },

0x46: { name: "TrickTrackFlag", type: "Uint" },

0x47: { name: "TrickMasterTrackUID", type: "Uint" },

0x44: { name: "TrickMasterTrackSegmentUID", type: "Binary" },

0x2d80: { name: "ContentEncodings", type: "Container" },

0x2240: { name: "ContentEncoding", type: "Container" },

0x1031: { name: "ContentEncodingOrder", type: "Uint" },

0x1032: { name: "ContentEncodingScope", type: "Uint" },

0x1033: { name: "ContentEncodingType", type: "Uint" },

0x1034: { name: "ContentCompression", type: "Container" },

0x254: { name: "ContentCompAlgo", type: "Uint" },

0x255: { name: "ContentCompSettings", type: "Binary" },

0x1035: { name: "ContentEncryption", type: "Container" },

0x7e1: { name: "ContentEncAlgo", type: "Uint" },

0x7e2: { name: "ContentEncKeyID", type: "Binary" },

0x7e3: { name: "ContentSignature", type: "Binary" },

0x7e4: { name: "ContentSigKeyID", type: "Binary" },

0x7e5: { name: "ContentSigAlgo", type: "Uint" },

0x7e6: { name: "ContentSigHashAlgo", type: "Uint" },

0xc53bb6b: { name: "Cues", type: "Container" },

0x3b: { name: "CuePoint", type: "Container" },

0x33: { name: "CueTime", type: "Uint" },

0x37: { name: "CueTrackPositions", type: "Container" },

0x77: { name: "CueTrack", type: "Uint" },

0x71: { name: "CueClusterPosition", type: "Uint" },

0x70: { name: "CueRelativePosition", type: "Uint" },

0x32: { name: "CueDuration", type: "Uint" },

0x1378: { name: "CueBlockNumber", type: "Uint" },

0x6a: { name: "CueCodecState", type: "Uint" },

0x5b: { name: "CueReference", type: "Container" },

0x16: { name: "CueRefTime", type: "Uint" },

0x17: { name: "CueRefCluster", type: "Uint" },

0x135f: { name: "CueRefNumber", type: "Uint" },

0x6b: { name: "CueRefCodecState", type: "Uint" },

0x941a469: { name: "Attachments", type: "Container" },

0x21a7: { name: "AttachedFile", type: "Container" },

0x67e: { name: "FileDescription", type: "String" },

0x66e: { name: "FileName", type: "String" },

0x660: { name: "FileMimeType", type: "String" },

0x65c: { name: "FileData", type: "Binary" },

0x6ae: { name: "FileUID", type: "Uint" },

0x675: { name: "FileReferral", type: "Binary" },

0x661: { name: "FileUsedStartTime", type: "Uint" },

0x662: { name: "FileUsedEndTime", type: "Uint" },

0x43a770: { name: "Chapters", type: "Container" },

0x5b9: { name: "EditionEntry", type: "Container" },

0x5bc: { name: "EditionUID", type: "Uint" },

0x5bd: { name: "EditionFlagHidden", type: "Uint" },

0x5db: { name: "EditionFlagDefault", type: "Uint" },

0x5dd: { name: "EditionFlagOrdered", type: "Uint" },

0x36: { name: "ChapterAtom", type: "Container" },

0x33c4: { name: "ChapterUID", type: "Uint" },

0x1654: { name: "ChapterStringUID", type: "String" },

0x11: { name: "ChapterTimeStart", type: "Uint" },

0x12: { name: "ChapterTimeEnd", type: "Uint" },

0x18: { name: "ChapterFlagHidden", type: "Uint" },

0x598: { name: "ChapterFlagEnabled", type: "Uint" },

0x2e67: { name: "ChapterSegmentUID", type: "Binary" },

0x2ebc: { name: "ChapterSegmentEditionUID", type: "Uint" },

0x23c3: { name: "ChapterPhysicalEquiv", type: "Uint" },

0xf: { name: "ChapterTrack", type: "Container" },

0x9: { name: "ChapterTrackNumber", type: "Uint" },

0x0: { name: "ChapterDisplay", type: "Container" },

0x5: { name: "ChapString", type: "String" },

0x37c: { name: "ChapLanguage", type: "String" },

0x37e: { name: "ChapCountry", type: "String" },

0x2944: { name: "ChapProcess", type: "Container" },

0x2955: { name: "ChapProcessCodecID", type: "Uint" },

0x50d: { name: "ChapProcessPrivate", type: "Binary" },

0x2911: { name: "ChapProcessCommand", type: "Container" },

0x2922: { name: "ChapProcessTime", type: "Uint" },

0x2933: { name: "ChapProcessData", type: "Binary" },

0x254c367: { name: "Tags", type: "Container" },

0x3373: { name: "Tag", type: "Container" },

0x23c0: { name: "Targets", type: "Container" },

0x28ca: { name: "TargetTypeValue", type: "Uint" },

0x23ca: { name: "TargetType", type: "String" },

0x23c5: { name: "TagTrackUID", type: "Uint" },

0x23c9: { name: "TagEditionUID", type: "Uint" },

0x23c4: { name: "TagChapterUID", type: "Uint" },

0x23c6: { name: "TagAttachmentUID", type: "Uint" },

0x27c8: { name: "SimpleTag", type: "Container" },

0x5a3: { name: "TagName", type: "String" },

0x47a: { name: "TagLanguage", type: "String" },

0x484: { name: "TagDefault", type: "Uint" },

0x487: { name: "TagString", type: "String" },

0x485: { name: "TagBinary", type: "Binary" },

};

function doInherit(newClass, baseClass) {

newClass.prototype = Object.create(baseClass.prototype);

newClass.prototype.constructor = newClass;

}

function WebmBase(name, type) {

this.name = name || "Unknown";

this.type = type || "Unknown";

}

WebmBase.prototype.updateBySource = function () {};

WebmBase.prototype.setSource = function (source) {

this.source = source;

this.updateBySource();

};

WebmBase.prototype.updateByData = function () {};

WebmBase.prototype.setData = function (data) {

this.data = data;

this.updateByData();

};

function WebmUint(name, type) {

WebmBase.call(this, name, type || "Uint");

}

doInherit(WebmUint, WebmBase);

function padHex(hex) {

return hex.length % 2 === 1 ? "0" + hex : hex;

}

WebmUint.prototype.updateBySource = function () {

// use hex representation of a number instead of number value

this.data = "";

for (var i = 0; i < this.source.length; i++) {

var hex = this.source[i].toString(16);

this.data += padHex(hex);

}

};

WebmUint.prototype.updateByData = function () {

var length = this.data.length / 2;

this.source = new Uint8Array(length);

for (var i = 0; i < length; i++) {

var hex = this.data.substr(i * 2, 2);

this.source[i] = parseInt(hex, 16);

}

};

WebmUint.prototype.getValue = function () {

return parseInt(this.data, 16);

};

WebmUint.prototype.setValue = function (value) {

this.setData(padHex(value.toString(16)));

};

function WebmFloat(name, type) {

WebmBase.call(this, name, type || "Float");

}

doInherit(WebmFloat, WebmBase);

WebmFloat.prototype.getFloatArrayType = function () {

return this.source && this.source.length === 4

? Float32Array

: Float64Array;

};

WebmFloat.prototype.updateBySource = function () {

var byteArray = this.source.reverse();

var floatArrayType = this.getFloatArrayType();

var floatArray = new floatArrayType(byteArray.buffer);

this.data = floatArray[0];

};

WebmFloat.prototype.updateByData = function () {

var floatArrayType = this.getFloatArrayType();

var floatArray = new floatArrayType([this.data]);

var byteArray = new Uint8Array(floatArray.buffer);

this.source = byteArray.reverse();

};

WebmFloat.prototype.getValue = function () {

return this.data;

};

WebmFloat.prototype.setValue = function (value) {

this.setData(value);

};

function WebmContainer(name, type) {

WebmBase.call(this, name, type || "Container");

}

doInherit(WebmContainer, WebmBase);

WebmContainer.prototype.readByte = function () {

return this.source[this.offset++];

};

WebmContainer.prototype.readUint = function () {

var firstByte = this.readByte();

var bytes = 8 - firstByte.toString(2).length;

var value = firstByte - (1 << (7 - bytes));

for (var i = 0; i < bytes; i++) {

// don't use bit operators to support x86

value *= 256;

value += this.readByte();

}

return value;

};

WebmContainer.prototype.updateBySource = function () {

this.data = [];

for (this.offset = 0; this.offset < this.source.length; this.offset = end) {

var id = this.readUint();

var len = this.readUint();

var end = Math.min(this.offset + len, this.source.length);

var data = this.source.slice(this.offset, end);

var info = sections[id] || { name: "Unknown", type: "Unknown" };

var ctr = WebmBase;

switch (info.type) {

case "Container":

ctr = WebmContainer;

break;

case "Uint":

ctr = WebmUint;

break;

case "Float":

ctr = WebmFloat;

break;

}

var section = new ctr(info.name, info.type);

section.setSource(data);

this.data.push({

id: id,

idHex: id.toString(16),

data: section,

});

}

};

WebmContainer.prototype.writeUint = function (x, draft) {

for (

var bytes = 1, flag = 0x80;

x >= flag && bytes < 8;

bytes++, flag *= 0x80

) {}

if (!draft) {

var value = flag + x;

for (var i = bytes - 1; i >= 0; i--) {

// don't use bit operators to support x86

var c = value % 256;

this.source[this.offset + i] = c;

value = (value - c) / 256;

}

}

this.offset += bytes;

};

WebmContainer.prototype.writeSections = function (draft) {

this.offset = 0;

for (var i = 0; i < this.data.length; i++) {

var section = this.data[i],

content = section.data.source,

contentLength = content.length;

this.writeUint(section.id, draft);

this.writeUint(contentLength, draft);

if (!draft) {

this.source.set(content, this.offset);

}

this.offset += contentLength;

}

return this.offset;

};

WebmContainer.prototype.updateByData = function () {

// run without accessing this.source to determine total length - need to know it to create Uint8Array

var length = this.writeSections("draft");

this.source = new Uint8Array(length);

// now really write data

this.writeSections();

};

WebmContainer.prototype.getSectionById = function (id) {

for (var i = 0; i < this.data.length; i++) {

var section = this.data[i];

if (section.id === id) {

return section.data;

}

}

return null;

};

function WebmFile(source) {

WebmContainer.call(this, "File", "File");

this.setSource(source);

}

doInherit(WebmFile, WebmContainer);

WebmFile.prototype.fixDuration = function (duration, options) {

var logger = options && options.logger;

if (logger === undefined) {

logger = function (message) {

console.log(message);

};

} else if (!logger) {

logger = function () {};

}

var segmentSection = this.getSectionById(0x8538067);

if (!segmentSection) {

logger("[fix-webm-duration] Segment section is missing");

return false;

}

var infoSection = segmentSection.getSectionById(0x549a966);

if (!infoSection) {

logger("[fix-webm-duration] Info section is missing");

return false;

}

var timeScaleSection = infoSection.getSectionById(0xad7b1);

if (!timeScaleSection) {

logger("[fix-webm-duration] TimecodeScale section is missing");

return false;

}

var durationSection = infoSection.getSectionById(0x489);

if (durationSection) {

if (durationSection.getValue() <= 0) {

logger(

"[fix-webm-duration] Duration section is present, but the value is empty"

);

durationSection.setValue(duration);

} else {

logger("[fix-webm-duration] Duration section is present");

return false;

}

} else {

logger("[fix-webm-duration] Duration section is missing");

// append Duration section

durationSection = new WebmFloat("Duration", "Float");

durationSection.setValue(duration);

infoSection.data.push({

id: 0x489,

data: durationSection,

});

}

// set default time scale to 1 millisecond (1000000 nanoseconds)

timeScaleSection.setValue(1000000);

infoSection.updateByData();

segmentSection.updateByData();

this.updateByData();

return true;

};

WebmFile.prototype.toBlob = function (mimeType) {

return new Blob([this.source.buffer], { type: mimeType || "video/webm" });

};

function fixWebmDuration(blob, duration, callback, options) {

// The callback may be omitted - then the third argument is options

if (typeof callback === "object") {

options = callback;

callback = undefined;

}

if (!callback) {

return new Promise(function (resolve) {

fixWebmDuration(blob, duration, resolve, options);

});

}

try {

var reader = new FileReader();

reader.onloadend = function () {

try {

var file = new WebmFile(new Uint8Array(reader.result));

if (file.fixDuration(duration, options)) {

blob = file.toBlob(blob.type);

}

} catch (ex) {

// ignore

}

callback(blob);

};

reader.readAsArrayBuffer(blob);

} catch (ex) {

callback(blob);

}

}

// Support AMD import default

fixWebmDuration.default = fixWebmDuration;

return fixWebmDuration;

});
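The `readUint` and `writeUint` methods above handle EBML variable-length integers: the position of the first set bit in the leading byte gives the total length, and the remaining bits carry the value. The sketch below restates that scheme as two standalone functions. `encodeVint` and `decodeVint` are hypothetical names, and the sketch assumes values small enough to be exact JavaScript numbers.

```javascript
// Encode a non-negative integer as an EBML variable-length integer.
// Mirrors writeUint: a marker bit is added in front of the value bits.
function encodeVint(value) {
  let length = 1;
  let marker = 0x80; // marker bit for a 1-byte encoding
  while (value >= marker && length < 8) {
    length++;
    marker *= 0x80; // each extra byte adds 7 usable bits
  }

  let encoded = marker + value;
  const bytes = new Array(length);
  for (let i = length - 1; i >= 0; i--) {
    // Arithmetic instead of bit operators, so values above 32 bits survive.
    const low = encoded % 256;
    bytes[i] = low;
    encoded = (encoded - low) / 256;
  }
  return bytes;
}

// Decode an EBML variable-length integer from the start of a byte array.
// Mirrors readUint: the marker bit is stripped from the first byte.
function decodeVint(bytes) {
  const first = bytes[0];
  const extra = 8 - first.toString(2).length; // number of bytes after the first
  let value = first - (1 << (7 - extra));
  for (let i = 1; i <= extra; i++) {
    value = value * 256 + bytes[i];
  }
  return value;
}
```

This is why the `sections` table stores IDs without their marker bit: the Duration element appears in a file as the bytes `0x44 0x89`, which decode to the key `0x489`.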

Many-to-many monitoring page

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<title>WebRTC + WebSocket</title>

<meta name="viewport" content="width=device-width,initial-scale=1.0,user-scalable=no">

<style>

html,

body {

margin: 0;

padding: 0;

background: #222;

}

#main {

position: absolute;

width: 100%;

height: 100%;

}

video {

margin: 5px;

width: 300px;

}

#buttons {

z-index: 3;

bottom: 20px;

left: 20px;

position: absolute;

}

input {

border: 1px solid #ccc;

padding: 7px 0px;

border-radius: 5px;

padding-left: 5px;

margin-bottom: 5px;

}

input:focus {

border-color: #66afe9;

outline: 0;

-webkit-box-shadow: inset 0 1px 1px rgba(0, 0, 0, .075), 0 0 8px rgba(102, 175, 233, .6);

box-shadow: inset 0 1px 1px rgba(0, 0, 0, .075), 0 0 8px rgba(102, 175, 233, .6)

}

#call {

width: 70px;

height: 35px;

background-color: #00BB00;

border: none;

color: white;

border-radius: 5px;

}

#hangup {

width: 70px;

height: 35px;

background-color: #FF5151;

border: none;

color: white;

border-radius: 5px;

}

button {

width: 70px;

height: 35px;

margin: 6px;

background-color: #6de73d;

border: none;

color: white;

border-radius: 5px;

}

</style>

</head>

<body>

<div id="main">

<div id="buttons">

<input id="myid" readonly /><br />

<input id="toUser" placeholder="User 1" /><br />

<button id="call" onclick="getStream()">Get stream</button>

</div>

</div>

</body>

<!-- Optional include (may be omitted) -->

<script type="text/javascript" th:inline="javascript">

function generateRandomLetters(length) {

let result = '';

const characters = 'abcdefghijklmnopqrstuvwxyz'; // alphabet

for (let i = 0; i < length; i++) {

const randomIndex = Math.floor(Math.random() * characters.length);

const randomLetter = characters[randomIndex];

result += randomLetter;

}

return result;

}

let username = generateRandomLetters(3);

document.getElementById('myid').value = username;

let websocket = null;

let peer = {};

let candidate = null;

/* WebSocket */

function WebSocketInit() {

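// Check whether the current browser supports WebSocket

if ('WebSocket' in window) {

websocket = new WebSocket("ws://192.168.31.14:8181/webrtc/" + username);

} else {

alert("This browser does not support WebSocket!");

// Stop here: websocket is still null, so the handlers below cannot be attached.

return;

}

// Called when a connection error occurs

websocket.onerror = function (e) {

alert("WebSocket connection error!");

};

// Called when the connection is closed

websocket.onclose = function () {

console.error("WebSocket connection closed");

};

// Called when the connection is established

websocket.onopen = function () {

console.log("WebSocket connection established");

};

// Called when a message is received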

websocket.onmessage = async function (event) {

let { type, fromUser, toUser, msg, sdp, iceCandidate } = JSON.parse(event.data.replace(/\n/g, "\\n").replace(/\r/g, "\\r"));

console.log(type, fromUser, toUser);

if (type === 'call_back') {

if (msg === "1") {

} else if (msg === "0") {

} else {

peer[fromUser].close();

document.getElementById(fromUser).remove()

alert(msg);

}

return;

}

if (type === 'offer') {

// let stream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true })

// localVideo.srcObject = stream;

// stream.getTracks().forEach(track => {

// peer[fromUser].addTrack(track, stream);

// });

console.log("Admin received", fromUser, peer)

await peer[fromUser].setRemoteDescription(new RTCSessionDescription({ type, sdp }));

let answer = await peer[fromUser].createAnswer();

newAnswer = answer.toJSON();

newAnswer["fromUser"] = username;

newAnswer["toUser"] = fromUser;

websocket.send(JSON.stringify(newAnswer));

await peer[fromUser].setLocalDescription(newAnswer);

return;

}

if (type === 'answer') {

await peer[fromUser].setRemoteDescription(new RTCSessionDescription({ type, sdp }));

return;

}

if (type === '_ice') {

await peer[fromUser].addIceCandidate(iceCandidate);

return;

}

}

}

WebSocketInit();

/* WebRTC */

function WebRTCInit(userId) {

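if (peer[userId]) {

peer[userId].close();

// Remove the video element left over from the previous connection

const oldVideo = document.getElementById(userId);

if (oldVideo) oldVideo.remove();

}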

const p = new RTCPeerConnection();

//ice

p.onicecandidate = function (e) {

if (e.candidate) {

websocket.send(JSON.stringify({

type: '_ice',

toUser: userId,

fromUser: username,

iceCandidate: e.candidate

}));

}

};

// Create the video element

const videoElement = document.createElement('video');

videoElement.id = userId;

videoElement.setAttribute('playsinline', '');

videoElement.setAttribute('autoplay', '');

videoElement.setAttribute('controls', '');

// Append the video element to the DOM

document.body.appendChild(videoElement);

//track

p.ontrack = function (e) {

if (e && e.streams) {

console.log(e);

videoElement.srcObject = e.streams[0];

}

};

peer[userId] = p;

}

async function getStream() {

let toUser = document.getElementById('toUser').value;

WebRTCInit(toUser);

websocket.send(JSON.stringify({

type: 'getStream',

toUser: toUser,

fromUser: username

}));

}

</script>

</html>

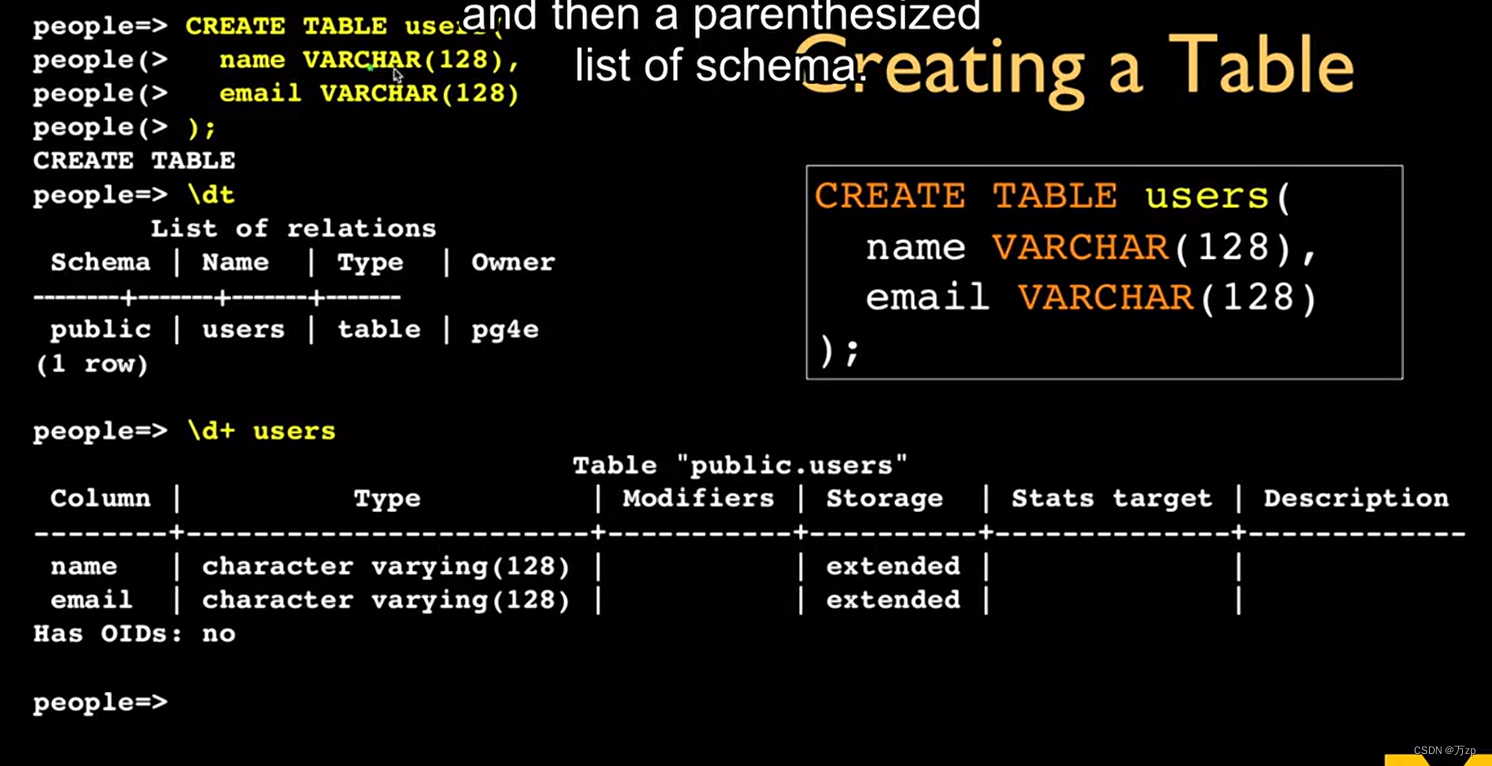

WebSocket Java code

import com.fasterxml.jackson.databind.DeserializationFeature;

import com.fasterxml.jackson.databind.ObjectMapper;

import jakarta.websocket.*;

import jakarta.websocket.server.PathParam;

import jakarta.websocket.server.ServerEndpoint;

import lombok.extern.slf4j.Slf4j;

import org.springframework.stereotype.Component;

import java.text.SimpleDateFormat;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

/**

* WebRTC + WebSocket

*/

@Slf4j

@Component

@ServerEndpoint(value = "/webrtc/{username}")

public class WebRtcWSServer {

/**

* Connected sessions, keyed by username

*/

private static final Map<String, Session> sessionMap = new ConcurrentHashMap<>();

/**

* Called when a connection is established.

* The endpoint path only declares {username}, so that is the only path parameter bound here.

*/

@OnOpen

public void onOpen(Session session, @PathParam("username") String username) {

sessionMap.put(username, session);

}

/**

* Called when a connection is closed

*/

@OnClose

public void onClose(Session session) {

for (Map.Entry<String, Session> entry : sessionMap.entrySet()) {

if (entry.getValue() == session) {

sessionMap.remove(entry.getKey());

break;

}

}

}

/**

* Called when an error occurs

*/

@OnError

public void onError(Session session, Throwable error) {

error.printStackTrace();

}

/**

* Called when the server receives a message from a client

*/

@OnMessage

public void onMessage(String message, Session session) {

try{

//jackson

ObjectMapper mapper = new ObjectMapper();

mapper.setDateFormat(new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"));

mapper.configure(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES, false);

// Convert the JSON string to a HashMap

HashMap hashMap = mapper.readValue(message, HashMap.class);

// Message type

String type = (String) hashMap.get("type");

//to user

String toUser = (String) hashMap.get("toUser");

Session toUserSession = sessionMap.get(toUser);

String fromUser = (String) hashMap.get("fromUser");

//msg

String msg = (String) hashMap.get("msg");

//sdp

String sdp = (String) hashMap.get("sdp");

//ice

Map iceCandidate = (Map) hashMap.get("iceCandidate");

HashMap<String, Object> map = new HashMap<>();

map.put("type",type);

// The called user is offline: report back to the sender

if(toUserSession == null){

toUserSession = session;

map.put("type","call_back");

map.put("fromUser",toUser);

map.put("msg","Sorry, the user you are calling is offline!");

send(toUserSession,mapper.writeValueAsString(map));

return;

}

map.put("fromUser",fromUser);

map.put("toUser",toUser);

// The other party hung up

if ("hangup".equals(type)) {

map.put("fromUser",fromUser);

map.put("msg","The other party hung up!");

}

// Video call request

if ("call_start".equals(type)) {

map.put("fromUser",fromUser);

map.put("msg","1");

}

// Response to a video call request

if ("call_back".equals(type)) {

map.put("msg",msg);

}

//offer

if ("offer".equals(type)) {

map.put("fromUser",fromUser);

map.put("toUser",toUser);

map.put("sdp",sdp);

}

//answer

if ("answer".equals(type)) {

map.put("fromUser",fromUser);

map.put("toUser",toUser);

map.put("sdp",sdp);

}

//ice

if ("_ice".equals(type)) {

map.put("iceCandidate",iceCandidate);

}

// getStream

if ("getStream".equals(type)) {

map.put("fromUser",fromUser);

map.put("toUser",toUser);

}

send(toUserSession,mapper.writeValueAsString(map));

}catch(Exception e){

e.printStackTrace();

}

}

/**

* Helper that sends a message to the front end

*/

private void send(Session session, String message) {

try {

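System.out.println(message);

// The basic remote endpoint does not support concurrent sends on one session

synchronized (session) {

session.getBasicRemote().sendText(message);

}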

} catch (Exception e) {

e.printStackTrace();

}

}

}
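
The relay rule implemented by the endpoint above can be summarised in a few lines. The following is only a sketch, written in JavaScript so it can be run outside the Spring application; `routeSignal`, `onlineUsers` and `deliverTo` are hypothetical names that do not appear in the original code, and the function returns the routing decision instead of writing to a real session.

```javascript
// Hypothetical helper mirroring the relay rule of the Java endpoint:
// forward the message to `toUser` when that user is online, otherwise
// bounce a `call_back` notice to the sender.
function routeSignal(onlineUsers, message) {
  const { type, fromUser, toUser } = message;

  // Target offline: the sender receives a call_back "from" the missing user
  if (!onlineUsers.has(toUser)) {
    return {
      deliverTo: fromUser,
      payload: {
        type: 'call_back',
        fromUser: toUser,
        msg: 'Sorry, the user you are calling is offline!',
      },
    };
  }

  // Target online: copy only the fields that belong to this message type
  const payload = { type, fromUser, toUser };
  if (type === 'offer' || type === 'answer') payload.sdp = message.sdp;
  if (type === '_ice') payload.iceCandidate = message.iceCandidate;
  if (type === 'call_back') payload.msg = message.msg;
  if (type === 'call_start') payload.msg = '1';
  if (type === 'hangup') payload.msg = 'The other party hung up!';
  return { deliverTo: toUser, payload };
}

// Usage: "abc" is online, "nobody" is not
const online = new Set(['abc']);
console.log(routeSignal(online, { type: 'offer', fromUser: 'xyz', toUser: 'abc', sdp: 'v=0' }));
console.log(routeSignal(online, { type: 'getStream', fromUser: 'abc', toUser: 'nobody' }));
```

The server never inspects the SDP or the ICE candidate; it only decides who receives each message, which is why a plain WebSocket relay is enough for signaling.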

Spring Boot dependencies and configuration

// 1.pom

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-websocket</artifactId>

</dependency>

// 2. Enable the feature

@EnableWebSocket

public class CocoBootApplication

3.config

package com.coco.boot.config;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.web.socket.server.standard.ServerEndpointExporter;

@Configuration

public class WebSocketConfig {

@Bean

public ServerEndpointExporter serverEndpointExporter() {

return new ServerEndpointExporter();

}

}

Wrap-up

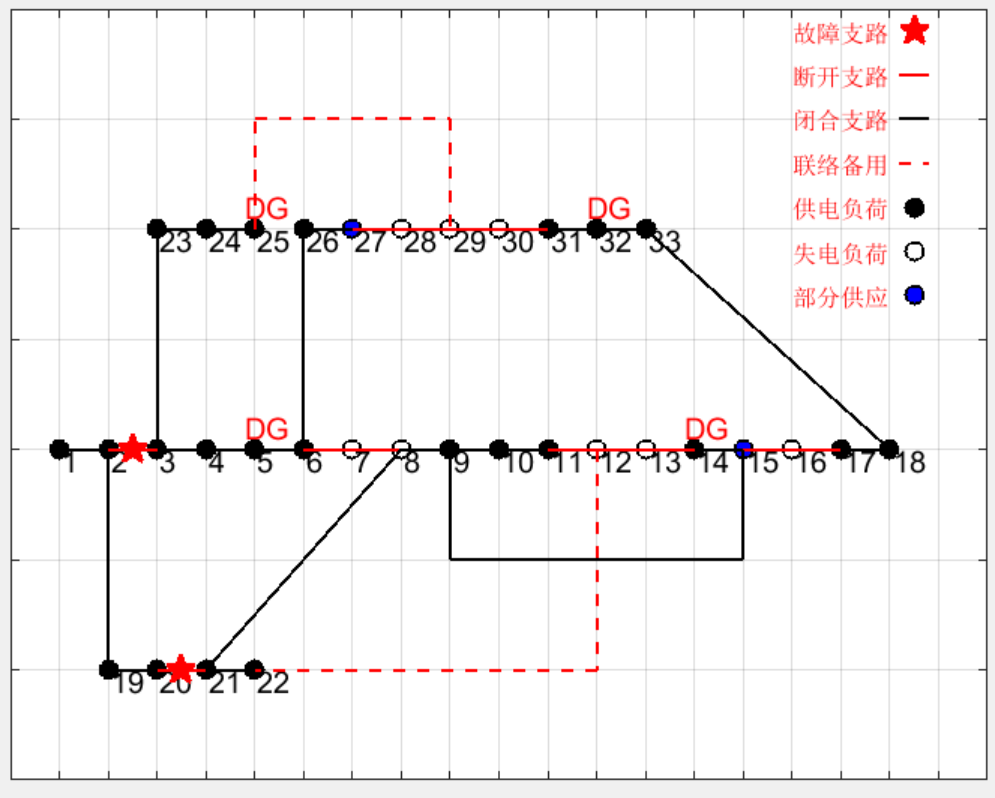

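Multi-party communication is implemented by creating multiple RTCPeerConnection objects.

Each participant must connect to every other participant, so each participant maintains one RTCPeerConnection object per remote participant.

As the number of participants grows, the complexity of the peer-to-peer connections and the required network bandwidth grow with it.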
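
To make that growth concrete, here is a small sketch of the arithmetic for a full mesh. The function names `meshStats` and `peersToConnect` are made up for illustration; the only assumption is that every participant connects directly to every other participant, as described above.

```javascript
// Hypothetical helper: connection counts for a full mesh of n participants.
function meshStats(participants) {
  if (!Number.isInteger(participants) || participants < 1) {
    throw new RangeError('participants must be a positive integer');
  }
  return {
    // Each participant holds one RTCPeerConnection per remote participant
    connectionsPerPeer: participants - 1,
    // Every pair of participants shares exactly one connection
    totalConnections: (participants * (participants - 1)) / 2,
  };
}

// Hypothetical helper: the remote users a given participant must connect to.
function peersToConnect(self, allUsers) {
  return allUsers.filter((user) => user !== self);
}

console.log(meshStats(4));
console.log(peersToConnect('abc', ['abc', 'def', 'ghi']));
```

With 4 participants each browser keeps 3 connections and the mesh has 6 in total; with 10 participants that becomes 9 per browser and 45 in total, and each browser uploads its stream 9 times.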

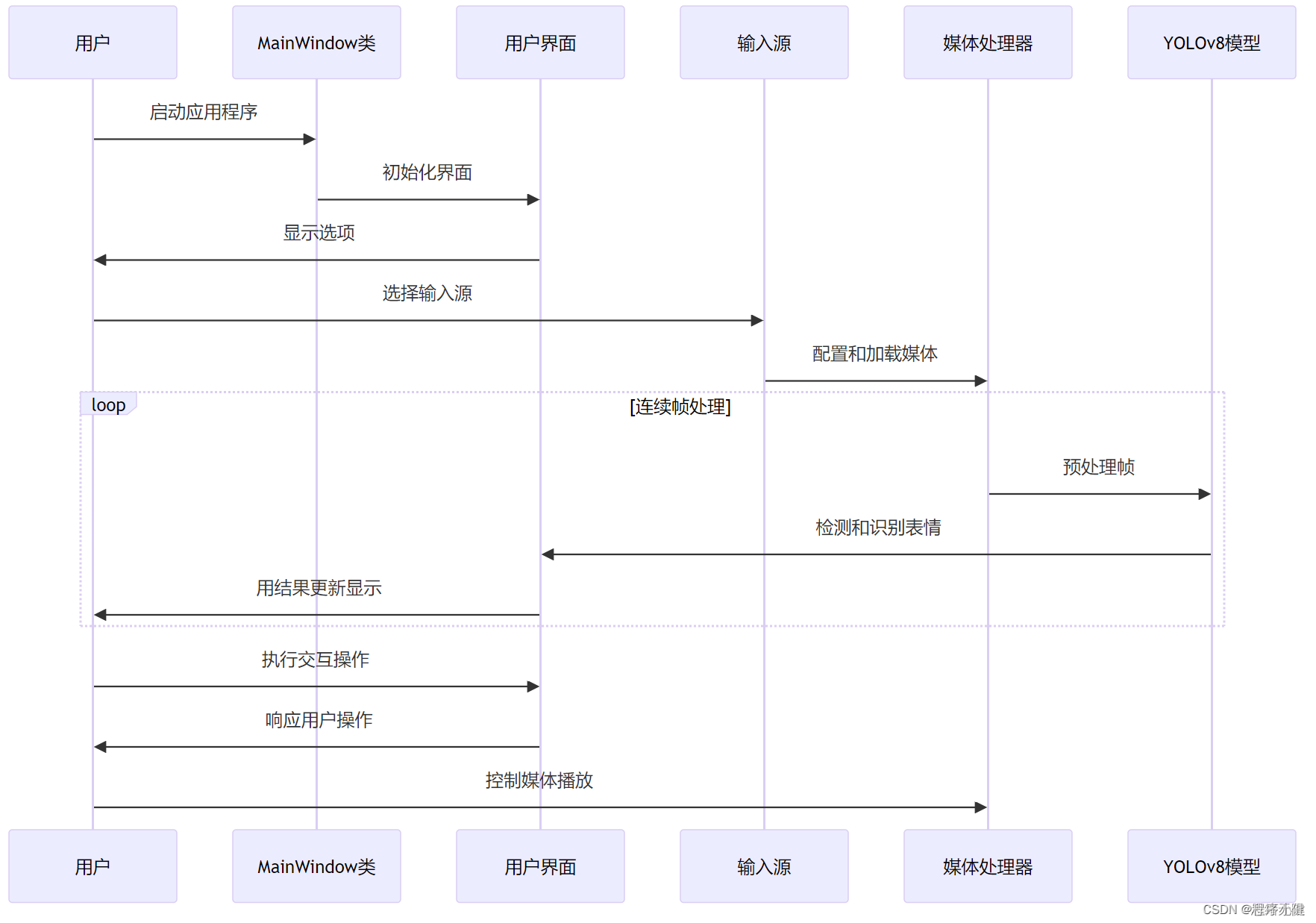

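When a WebRTC connection is established, the steps run in the following order:

1. Create the local PeerConnection object: use the RTCPeerConnection constructor to create the local PeerConnection object, which manages the WebRTC connection.

2. Add the local media stream: call getUserMedia to obtain the local audio/video stream and add its tracks to the PeerConnection object, so that local audio and video can be sent to the remote peer.

3. Create and set the local SDP: call createOffer to create the local Session Description Protocol (SDP) description, which describes the local peer's media settings and network information. Then call setLocalDescription to set it as the local description of the PeerConnection object.

4. Send the local SDP: send the local SDP to the remote peer through the signaling server or another communication channel.

5. Receive the remote SDP: receive the remote peer's SDP through the signaling server or another communication channel.

6. Set the remote SDP: call setRemoteDescription on the PeerConnection object with the received SDP to set it as the remote description.

7. Gather and send local ICE candidates: listen for the onicecandidate event of the PeerConnection object and, each time a candidate is generated, send it to the remote peer through the signaling server or another communication channel.

8. Receive and add remote ICE candidates: when an ICE candidate arrives from the remote peer, call addIceCandidate to add it to the local PeerConnection object.

9. Connection established: once the local and remote SDP have been set and the ICE candidates exchanged, the connection is established and audio and video can flow between the local and remote peers.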
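
The caller's side of this sequence can be sketched as two small functions. This is an illustration under assumptions, not the code used by the pages above: `startCall`, `handleSignal` and `sendSignal` are hypothetical names, and the peer connection and media stream are passed in as arguments so the flow can be followed without a browser. The `'_ice'` message type follows the demo code.

```javascript
// Hypothetical caller-side flow. `pc` is expected to behave like an
// RTCPeerConnection and `stream` like a MediaStream; `sendSignal` is any
// function that delivers a message to the remote peer (for example over
// the WebSocket signaling channel).
async function startCall(pc, stream, sendSignal) {
  // Step 7: forward each locally gathered ICE candidate to the remote peer
  pc.onicecandidate = (event) => {
    if (event.candidate) {
      sendSignal({ type: '_ice', iceCandidate: event.candidate });
    }
  };

  // Step 2: attach the local audio/video tracks
  stream.getTracks().forEach((track) => pc.addTrack(track, stream));

  // Step 3: create the offer and set it as the local description
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);

  // Step 4: send the local SDP through the signaling channel
  sendSignal({ type: offer.type, sdp: offer.sdp });
}

// Steps 5, 6 and 8: apply what the remote peer sends back
async function handleSignal(pc, message) {
  if (message.type === 'answer') {
    await pc.setRemoteDescription({ type: 'answer', sdp: message.sdp });
  } else if (message.type === '_ice') {
    await pc.addIceCandidate(message.iceCandidate);
  }
}
```

In a browser, `pc` would come from `new RTCPeerConnection()` (step 1) and `stream` from `navigator.mediaDevices.getUserMedia({ video: true, audio: true })`. The callee mirrors the flow with `setRemoteDescription`, `createAnswer` and `setLocalDescription`.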

Main references:

WebRTC + WebSocket video calling

Firewall configuration issues for WebRTC traversal servers

WebRTC audio and video recording

WebRTC multi-party video chat
