Query analysis

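"Search" powers many use cases, including the "retrieval" part of retrieval-augmented generation (RAG). The simplest approach is to pass the user question directly to a retriever. To improve performance, you can also "optimize" the query in some way using "query analysis". Traditionally this was done with rule-based techniques, but with the rise of LLMs it has become increasingly popular and feasible to use an LLM for it. Specifically, this involves passing the raw question (or a list of messages) to an LLM and getting back one or more optimized queries, which typically contain a string and, optionally, other structured information.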
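As a point of reference for the rule-based approach mentioned above, here is a minimal sketch that turns a raw question into a search string plus one optional structured field. The `OptimizedQuery` class, the `analyze` function, and the regular expressions are illustrative assumptions, not part of any library; an LLM-based analyzer would replace `analyze` while producing the same kind of object.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class OptimizedQuery:
    """Hypothetical container: a search string plus optional structured info."""

    text: str
    publish_year: Optional[int] = None


def analyze(question: str) -> OptimizedQuery:
    """Rule-based analysis: pull a four-digit year out of the question."""
    match = re.search(r"\b(?:19|20)\d{2}\b", question)
    if match is None:
        # No year mentioned: search on the question as-is.
        return OptimizedQuery(text=question.strip())
    year_text = match.group(0)
    # Remove the year and an optional leading "published in" from the search text.
    pattern = r"\s*(?:published\s+)?(?:in\s+)?" + year_text + r"\b"
    text = re.sub(pattern, "", question).strip()
    return OptimizedQuery(text=text, publish_year=int(year_text))


print(analyze("videos on RAG published in 2023"))
# OptimizedQuery(text='videos on RAG', publish_year=2023)
```

Hand-written rules like these are brittle (they only understand one phrasing of a date), which is the gap an LLM-based analyzer is meant to close.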

Problems Solved

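Query analysis helps optimize the search query sent to the retriever. It can be useful when:

- The retriever supports searches and filters against specific fields of the data, and the user input could be referring to any of these fields

- The user input contains multiple distinct questions

- Multiple queries are needed to retrieve the relevant information

- Search quality is sensitive to phrasing

- There are multiple retrievers that could be searched, and the user input could be referring to any of them

Note that different problems call for different solutions. To decide which query analysis technique to use, you need to understand exactly what is wrong with your current retrieval system. The best way to find out is to look at the failure data points of your current application and identify common themes. Only once you know what the problems are can you begin to solve them.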

Example

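This section walks through a basic end-to-end example of query analysis. It covers creating a simple search engine, showing a failure mode that occurs when the raw user question is passed to that search, and then showing how query analysis can help address the issue.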

Setup

Install dependencies

%pip install -qU langchain langchain-community langchain-openai youtube-transcript-api pytube chromadb

Load Documents

Use the YoutubeLoader to load transcripts of a few LangChain videos:

from langchain_community.document_loaders import YoutubeLoader

urls = [
    "https://www.youtube.com/watch?v=HAn9vnJy6S4",
    "https://www.youtube.com/watch?v=dA1cHGACXCo",
]

docs = []
for url in urls:
    docs.extend(YoutubeLoader.from_youtube_url(url, add_video_info=True).load())

import datetime

# Add some additional metadata: what year the video was published
for doc in docs:
    doc.metadata["publish_year"] = int(
        datetime.datetime.strptime(
            doc.metadata["publish_date"], "%Y-%m-%d %H:%M:%S"
        ).strftime("%Y")
    )

Here are the titles of the videos we loaded:

[doc.metadata["title"] for doc in docs]

['OpenGPTs',

'Building a web RAG chatbot: using LangChain, Exa (prev. Metaphor), LangSmith, and Hosted Langserve']

Here is the metadata associated with each video. We can see that each document also has a title, view count, publication date, and length:

docs[0].metadata

{'source': 'HAn9vnJy6S4',

'title': 'OpenGPTs',

'description': 'Unknown',

'view_count': 7952,

'thumbnail_url': 'https://i.ytimg.com/vi/HAn9vnJy6S4/hq720.jpg',

'publish_date': '2024-01-31 00:00:00',

'length': 1530,

'author': 'LangChain',

'publish_year': 2024}

Here is a sample of a document's contents:

docs[1].page_content[:500]

"hey folks I'm Eric from Lang chain and today we're going to be building a search enabled chatbot with EXA which launched today um let's get started uh to begin let's go through the main pieces of software that we're going to be using um to start we're going to be using Lang chain um Lang chain is a framework for developing llm powered applications um it's the company I work at and it's the framework we'll be using we'll be using the python library but we also offer JavaScript library um for folk"

Indexing documents

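Whenever we perform retrieval we need to create an index of documents that we can query. We'll use a vector store to index our documents, and we'll chunk them first to make our retrievals more concise and precise: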

from langchain_community.vectorstores import Chroma

from langchain_openai import OpenAIEmbeddings

from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=2000)

chunked_docs = text_splitter.split_documents(docs)

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

vectorstore = Chroma.from_documents(
    chunked_docs,
    embeddings,
)

Retrieval without query analysis

We can run a similarity search directly on the user question to find chunks relevant to it:

search_results = vectorstore.similarity_search("how do I build a RAG agent")

print(search_results[0].metadata["title"])

print(search_results[0].page_content[:500])

OpenGPTs

hardcoded that it will always do a retrieval step here the assistant decides whether to do a retrieval step or not sometimes this is good sometimes this is bad sometimes it you don't need to do a retrieval step when I said hi it didn't need to call it tool um but other times you know the the llm might mess up and not realize that it needs to do a retrieval step and so the rag bot will always do a retrieval step so it's more focused there because this is also a simpler architecture so it's always

What if we want to search for results from a specific time period?

search_results = vectorstore.similarity_search("videos on RAG published in 2023")

print(search_results[0].metadata["title"])

print(search_results[0].metadata["publish_date"])

print(search_results[0].page_content[:500])

OpenGPTs

2024-01-31 00:00:00

hardcoded that it will always do a retrieval step here the assistant decides whether to do a retrieval step or not sometimes this is good sometimes this is bad sometimes it you don't need to do a retrieval step when I said hi it didn't need to call it tool um but other times you know the the llm might mess up and not realize that it needs to do a retrieval step and so the rag bot will always do a retrieval step so it's more focused there because this is also a simpler architecture so it's always

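Our first result is from 2024 (even though we asked for videos from 2023) and is not very relevant to the input. Since we are only searching against document contents, there is no way to filter the results on any document attribute.

This is just one failure mode that can arise. Let's now look at how a basic form of query analysis can fix it!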

Query analysis

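We can use query analysis to improve retrieval results. This involves defining a query schema that contains some date filters and using a function-calling model to convert a user question into a structured query.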

Query schema

In this case the schema exposes an explicit, optional attribute for the publication year so that results can be filtered on it.

from typing import Optional

from langchain_core.pydantic_v1 import BaseModel, Field

class Search(BaseModel):
    """Search over a database of tutorial videos about a software library."""

    query: str = Field(
        ...,
        description="Similarity search query applied to video transcripts.",
    )
    publish_year: Optional[int] = Field(None, description="Year video was published")

Query generation

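To convert user questions into structured queries we'll use OpenAI's tool-calling API. Specifically, we'll use the ChatModel.with_structured_output() method, which handles passing the schema to the model and parsing the output.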

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI

system = """You are an expert at converting user questions into database queries. \
You have access to a database of tutorial videos about a software library for building LLM-powered applications. \
Given a question, return a list of database queries optimized to retrieve the most relevant results.
If there are acronyms or words you are not familiar with, do not try to rephrase them."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

structured_llm = llm.with_structured_output(Search)

query_analyzer = {"question": RunnablePassthrough()} | prompt | structured_llm

query_analyzer.invoke("how do I build a RAG agent")

Search(query='build RAG agent', publish_year=None)

query_analyzer.invoke("videos on RAG published in 2023")

Search(query='RAG', publish_year=2023)

Retrieval with query analysis

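Our query analysis looks pretty good; now let's try using the generated queries to actually perform retrieval.

Note: in this example we specified tool_choice = "Search". This forces the LLM to call one, and only one, tool, so there is always exactly one optimized query to look up. This is not always the case, however.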

from typing import List

from langchain_core.documents import Document

def retrieval(search: Search) -> List[Document]:
    if search.publish_year is not None:
        # This is syntax specific to Chroma,
        # the vector database we are using.
        _filter = {"publish_year": {"$eq": search.publish_year}}
    else:
        _filter = None
    return vectorstore.similarity_search(search.query, filter=_filter)

retrieval_chain = query_analyzer | retrieval

We can now run this chain on the input that was problematic before and see that it only yields results from that year!

results = retrieval_chain.invoke("RAG tutorial published in 2023")

[(doc.metadata["title"], doc.metadata["publish_date"]) for doc in results]

[('Getting Started with Multi-Modal LLMs', '2023-12-20 00:00:00')]
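The retrieval function above filters on one exact year with `$eq`. If the schema carried a range instead, the same idea extends to Chroma's `$gte`, `$lte`, and `$and` metadata operators. The sketch below only builds the filter dictionary; `build_year_filter` and its `min_year` / `max_year` parameters are hypothetical names that do not appear in the `Search` schema above.

```python
from typing import Any, Dict, List, Optional


def build_year_filter(
    min_year: Optional[int] = None, max_year: Optional[int] = None
) -> Optional[Dict[str, Any]]:
    """Build a Chroma-style metadata filter for an inclusive year range."""
    conditions: List[Dict[str, Any]] = []
    if min_year is not None:
        conditions.append({"publish_year": {"$gte": min_year}})
    if max_year is not None:
        conditions.append({"publish_year": {"$lte": max_year}})
    if not conditions:
        return None  # no bounds given: do not filter at all
    if len(conditions) == 1:
        return conditions[0]  # "$and" needs at least two clauses
    return {"$and": conditions}


print(build_year_filter(2023, 2024))
# {'$and': [{'publish_year': {'$gte': 2023}}, {'publish_year': {'$lte': 2024}}]}
```

The returned dictionary would be passed as the `filter` argument of `vectorstore.similarity_search`, in the same place as `_filter` in the retrieval function.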

Techniques

Query decomposition

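If the user input contains multiple distinct questions, the input can be decomposed into separate queries that are each executed independently.

When a user asks a question, there is no guarantee that the relevant results can be returned by a single query. Sometimes answering a question requires splitting it into distinct sub-questions, retrieving results for each sub-question, and then answering using the cumulative context.

For example, suppose a user asks "How is Web Voyager different from reflection agents", and we have one document that explains Web Voyager and one that explains reflection agents but no document that compares the two. We would then likely get better results by retrieving for both "What is Web Voyager" and "What are reflection agents" and combining the retrieved documents than by retrieving on the user question directly.

This process of splitting an input into multiple distinct sub-queries is query decomposition, sometimes also called sub-query generation.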
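To make the "retrieve per sub-question, then combine" step concrete, here is a minimal sketch that runs each sub-query independently and merges the results without duplicates. The toy corpus, the word-overlap `toy_search` function, and `retrieve_for_sub_queries` are illustrative stand-ins for a real vector store and are not part of the original example.

```python
from typing import Callable, Dict, List

Doc = Dict[str, str]

# Toy corpus standing in for the vector store (contents are made up).
CORPUS: List[Doc] = [
    {"id": "a", "text": "Web Voyager is a browsing agent"},
    {"id": "b", "text": "Reflection agents critique their own output"},
    {"id": "c", "text": "LangServe deploys chains as REST APIs"},
]


def toy_search(query: str, k: int = 1) -> List[Doc]:
    """Rank documents by the number of lowercase words shared with the query."""
    words = set(query.lower().split())
    ranked = sorted(
        CORPUS,
        key=lambda d: len(words & set(d["text"].lower().split())),
        reverse=True,
    )
    return ranked[:k]


def retrieve_for_sub_queries(
    sub_queries: List[str], search: Callable[[str], List[Doc]]
) -> List[Doc]:
    """Run every sub-query on its own and merge results, dropping duplicates."""
    seen = set()
    merged: List[Doc] = []
    for sub_query in sub_queries:
        for doc in search(sub_query):
            if doc["id"] not in seen:
                seen.add(doc["id"])
                merged.append(doc)
    return merged


docs = retrieve_for_sub_queries(
    ["What is Web Voyager", "What are reflection agents"], toy_search
)
print([d["id"] for d in docs])  # ['a', 'b']
```

With this toy ranking, searching on the full comparison question returns only document `a`, whereas the two sub-queries together surface both `a` and `b`.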

Query generation

To convert user questions into a list of sub-questions we'll use OpenAI's function-calling API, which can return multiple function calls per turn:

import datetime

from typing import Literal, Optional, Tuple

from langchain_core.pydantic_v1 import BaseModel, Field

class SubQuery(BaseModel):
    """Search over a database of tutorial videos about a software library."""

    sub_query: str = Field(
        ...,
        description="A very specific query against the database.",
    )

from langchain.output_parsers import PydanticToolsParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

system = """You are an expert at converting user questions into database queries. \
You have access to a database of tutorial videos about a software library for building LLM-powered applications. \
Perform query decomposition. Given a user question, break it down into distinct sub questions that \
you need to answer in order to answer the original question.
If there are acronyms or words you are not familiar with, do not try to rephrase them."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

llm_with_tools = llm.bind_tools([SubQuery])

parser = PydanticToolsParser(tools=[SubQuery])

query_analyzer = prompt | llm_with_tools | parser

query_analyzer.invoke({"question": "how to do rag"})

[SubQuery(sub_query='How to do rag')]

query_analyzer.invoke(
    {
        "question": "how to use multi-modal models in a chain and turn chain into a rest api"
    }
)

[SubQuery(sub_query='How to use multi-modal models in a chain?'),

SubQuery(sub_query='How to turn a chain into a REST API?')]

query_analyzer.invoke(
    {
        "question": "what's the difference between web voyager and reflection agents? do they use langgraph?"
    }
)

[SubQuery(sub_query='What is Web Voyager and how does it differ from Reflection Agents?'),

SubQuery(sub_query='Do Web Voyager and Reflection Agents use Langgraph?')]

Adding examples and tuning the prompt

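We might want it to decompose the last question further, separating the queries about Web Voyager and reflection agents. If we aren't sure up front which kinds of queries will work best with our index, we can also deliberately include some redundancy, so that both sub-queries and higher-level queries are returned.

To tune the query generation results, we can add some examples of input questions and gold-standard output queries to the prompt. We can also try to improve the system message.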

examples = []

question = "What's chat langchain, is it a langchain template?"
queries = [
    SubQuery(sub_query="What is chat langchain"),
    SubQuery(sub_query="What is a langchain template"),
]
examples.append({"input": question, "tool_calls": queries})

question = "How would I use LangGraph to build an automaton"
queries = [
    SubQuery(sub_query="How to build automaton with LangGraph"),
]
examples.append({"input": question, "tool_calls": queries})

question = "How to build multi-agent system and stream intermediate steps from it"
queries = [
    SubQuery(sub_query="How to build multi-agent system"),
    SubQuery(sub_query="How to stream intermediate steps"),
    SubQuery(sub_query="How to stream intermediate steps from multi-agent system"),
]
examples.append({"input": question, "tool_calls": queries})

question = "What's the difference between LangChain agents and LangGraph?"
queries = [
    SubQuery(sub_query="What's the difference between LangChain agents and LangGraph?"),
    SubQuery(sub_query="What are LangChain agents"),
    SubQuery(sub_query="What is LangGraph"),
]
examples.append({"input": question, "tool_calls": queries})

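Now we need to update the prompt template and chain so that the examples are included in each prompt. Since we're working with OpenAI function calling, a bit of extra structuring is needed to send example inputs and outputs to the model. We'll create a tool_example_to_messages helper function to handle this: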

import uuid

from typing import Dict, List

from langchain_core.messages import (
    AIMessage,
    BaseMessage,
    HumanMessage,
    SystemMessage,
    ToolMessage,
)


def tool_example_to_messages(example: Dict) -> List[BaseMessage]:
    # Each example becomes: a human message, an AI message carrying the
    # tool calls, and one tool message per call acknowledging it.
    messages: List[BaseMessage] = [HumanMessage(content=example["input"])]
    openai_tool_calls = []
    for tool_call in example["tool_calls"]:
        openai_tool_calls.append(
            {
                "id": str(uuid.uuid4()),
                "type": "function",
                "function": {
                    "name": tool_call.__class__.__name__,
                    "arguments": tool_call.json(),
                },
            }
        )
    messages.append(
        AIMessage(content="", additional_kwargs={"tool_calls": openai_tool_calls})
    )
    tool_outputs = example.get("tool_outputs") or [
        "This is an example of a correct usage of this tool. Make sure to continue using the tool this way."
    ] * len(openai_tool_calls)
    for output, tool_call in zip(tool_outputs, openai_tool_calls):
        messages.append(ToolMessage(content=output, tool_call_id=tool_call["id"]))
    return messages

example_msgs = [msg for ex in examples for msg in tool_example_to_messages(ex)]

from langchain_core.prompts import MessagesPlaceholder

system = """You are an expert at converting user questions into database queries. \
You have access to a database of tutorial videos about a software library for building LLM-powered applications. \
Perform query decomposition. Given a user question, break it down into the most specific sub questions you can \
which will help you answer the original question. Each sub question should be about a single concept/fact/idea.
If there are acronyms or words you are not familiar with, do not try to rephrase them."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        MessagesPlaceholder("examples", optional=True),
        ("human", "{question}"),
    ]
)

query_analyzer_with_examples = (
    prompt.partial(examples=example_msgs) | llm_with_tools | parser
)

query_analyzer_with_examples.invoke(
    {
        "question": "what's the difference between web voyager and reflection agents? do they use langgraph?"
    }
)

[SubQuery(sub_query="What's the difference between web voyager and reflection agents"),

SubQuery(sub_query='Do web voyager and reflection agents use LangGraph'),

SubQuery(sub_query='What is web voyager'),

SubQuery(sub_query='What are reflection agents')]

Query expansion

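If the index is sensitive to query phrasing, we can generate multiple paraphrased versions of the user question to increase the chances of retrieving a relevant result.

Information retrieval systems can be sensitive to phrasing and specific keywords. To mitigate this, a classic retrieval technique is to generate multiple paraphrased versions of a query and return results for all of them. LLMs are a great tool for generating these alternate versions of a query.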
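Returning results for every paraphrase raises the question of how to combine the per-paraphrase rankings. One common option is reciprocal rank fusion (RRF), sketched below; the text above does not prescribe a merging strategy, so this is only one possible choice. The function name, the document IDs, and the constant `k=60` (a value often used with RRF) are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1, so documents found by many paraphrases rise.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# One ranked result list per paraphrased query (IDs are made up).
results_per_paraphrase = [
    ["a", "b", "c"],
    ["b", "a"],
    ["b", "d"],
]
print(reciprocal_rank_fusion(results_per_paraphrase))  # ['b', 'a', 'd', 'c']
```

Document `b` ranks first because all three paraphrases retrieved it, even though it was not the top hit for the first one.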

Query generation

To make sure we get multiple paraphrasings we'll use OpenAI's function-calling API.

from langchain_core.pydantic_v1 import BaseModel, Field

class ParaphrasedQuery(BaseModel):
    """You have performed query expansion to generate a paraphrasing of a question."""

    paraphrased_query: str = Field(
        ...,
        description="A unique paraphrasing of the original question.",
    )

from langchain.output_parsers import PydanticToolsParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

system = """You are an expert at converting user questions into database queries. \
You have access to a database of tutorial videos about a software library for building LLM-powered applications. \
Perform query expansion. If there are multiple common ways of phrasing a user question \
or common synonyms for key words in the question, make sure to return multiple versions \
of the query with the different phrasings.
If there are acronyms or words you are not familiar with, do not try to rephrase them.
Return at least 3 versions of the question."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

llm_with_tools = llm.bind_tools([ParaphrasedQuery])

query_analyzer = prompt | llm_with_tools | PydanticToolsParser(tools=[ParaphrasedQuery])

Let's see what queries our analyzer generates for the question we searched earlier:

query_analyzer.invoke(
    {
        "question": "how to use multi-modal models in a chain and turn chain into a rest api"
    }
)

[ParaphrasedQuery(paraphrased_query='How to utilize multi-modal models in a sequence and convert the sequence into a REST API'),

ParaphrasedQuery(paraphrased_query='Steps for using multi-modal models sequentially and transforming the sequence into a RESTful API'),

ParaphrasedQuery(paraphrased_query='Guide on employing multi-modal models in a chain and converting the chain into a RESTful API')]

query_analyzer.invoke({"question": "stream events from llm agent"})

[ParaphrasedQuery(paraphrased_query='How to stream events from LLM agent?')]

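Hypothetical document embedding (HyDE)

If we're working with a similarity-search-based index, such as a vector store, then searching on raw questions may not work well, because their embeddings may not be very similar to those of the relevant documents. Instead, it can help to have the model generate a hypothetical relevant document and then use that to perform the similarity search.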
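The intuition can be illustrated with a toy example in which a bag-of-words vector stands in for a real embedding. Everything here is an assumption made for illustration: the `embed` and `cosine` helpers, the three strings, and the fact that the hypothetical document is written by hand rather than generated by a model.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


stored_doc = "langserve wraps a chain and serves it as a rest api"
question = "how to turn chain into a rest api"
# Hand-written stand-in for what a model might generate as a hypothetical answer.
hypothetical_doc = (
    "to turn a chain into a rest api use langserve "
    "which serves the chain as a rest api"
)

question_sim = cosine(embed(question), embed(stored_doc))
hyde_sim = cosine(embed(hypothetical_doc), embed(stored_doc))
print(hyde_sim > question_sim)  # True
```

The hypothetical answer shares more vocabulary with the stored document than the short question does, which is the effect HyDE relies on when real embeddings are used.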

Hypothetical document generation

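Ultimately, generating a relevant hypothetical document reduces to trying to answer the user question. Since we're designing a Q&A bot for LangChain YouTube videos, we'll provide some basic context about LangChain and prompt the model to use a more pedantic style so that we get more realistic hypothetical documents: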

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

system = """You are an expert about a set of software for building LLM-powered applications called LangChain, LangGraph, LangServe, and LangSmith.
LangChain is a Python framework that provides a large set of integrations that can easily be composed to build LLM applications.
LangGraph is a Python package built on top of LangChain that makes it easy to build stateful, multi-actor LLM applications.
LangServe is a Python package built on top of LangChain that makes it easy to deploy a LangChain application as a REST API.
LangSmith is a platform that makes it easy to trace and test LLM applications.
Answer the user question as best you can. Answer as though you were writing a tutorial that addressed the user question."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

qa_no_context = prompt | llm | StrOutputParser()

answer = qa_no_context.invoke(
    {
        "question": "how to use multi-modal models in a chain and turn chain into a rest api"
    }
)

print(answer)

To use multi-modal models in a chain and turn the chain into a REST API, you can leverage the capabilities of LangChain, LangGraph, and LangServe. Here's a step-by-step guide on how to achieve this:

1. **Set up LangChain**: Start by installing LangChain using pip:

pip install langchain

2. **Build a Multi-Modal Model with LangGraph**:

- Define your multi-modal model using LangGraph. LangGraph allows you to create stateful, multi-actor LLM applications easily.

- You can define different actors in your model, each responsible for a specific task or modality.

- Ensure that your model is designed to handle multiple modalities effectively.

3. **Integrate LangServe**:

- Install LangServe using pip:

pip install langserve

- Use LangServe to deploy your LangChain application as a REST API.

- LangServe provides tools to expose your multi-modal model as an API, allowing users to interact with it over HTTP.

4. **Expose Multi-Modal Model as a REST API**:

- Define endpoints in your LangServe configuration to expose the functionalities of your multi-modal model.

- You can specify input and output formats for each endpoint to handle multi-modal data effectively.

- LangServe will handle the communication between your model and the outside world through the REST API.

5. **Test and Deploy**:

- Use LangSmith to trace and test your multi-modal model before deploying it as a REST API.

- LangSmith provides tools for debugging, testing, and monitoring your LLM applications.

- Once you are satisfied with the performance of your model, deploy it as a REST API using LangServe.

By following these steps, you can effectively use multi-modal models in a chain and turn the chain into a REST API using LangChain, LangGraph, LangServe, and LangSmith. This approach allows you to build and deploy complex LLM applications with ease.

Returning the hypothetical document and original question

To increase our recall, we may want to retrieve documents based on both the hypothetical document and the original question. We can easily return both like so:

```python
from langchain_core.runnables import RunnablePassthrough

# Keep the original question and add the generated document under a new key.
hyde_chain = RunnablePassthrough.assign(hypothetical_document=qa_no_context)

hyde_chain.invoke(
    {
        "question": "how to use multi-modal models in a chain and turn chain into a rest api"
    }
)
```

{'question': 'how to use multi-modal models in a chain and turn chain into a rest api',

'hypothetical_document': "To use multi-modal models in a chain and turn the chain into a REST API, you can leverage the capabilities of LangChain, LangGraph, and LangServe. Here's a step-by-step guide on how to achieve this:\n\n1. Set up LangChain: Start by setting up LangChain, the Python framework that provides integrations for building LLM applications. You can install LangChain using pip:\n\nbash\npip install langchain\n\n\n2. Build a LangGraph application: LangGraph is a Python package built on top of LangChain that makes it easy to build stateful, multi-actor LLM applications. You can create a LangGraph application that incorporates multi-modal models by defining the actors and their interactions within the graph.\n\n3. Integrate multi-modal models: Within your LangGraph application, you can integrate multi-modal models by incorporating different types of input data (text, images, audio, etc.) and processing them using the appropriate LLM models. You can use pre-trained models or train your own models based on your specific requirements.\n\n4. Deploy as a REST API using LangServe: LangServe is a Python package built on top of LangChain that simplifies deploying a LangChain application as a REST API. You can easily turn your LangGraph application into a REST API by using LangServe.\n\n5. Define API endpoints: Define the API endpoints that will expose the functionality of your multi-modal LangGraph application. You can specify the input data format expected by the API, the processing logic using the multi-modal models, and the output format of the API responses.\n\n6. Start the LangServe server: Once you have defined your API endpoints, you can start the LangServe server to make your multi-modal LangGraph application accessible as a REST API. You can specify the host, port, and other configurations when starting the server.\n\nBy following these steps, you can effectively use multi-modal models in a chain (LangGraph) and turn the chain into a REST API using LangServe. This approach allows you to build and deploy sophisticated LLM-powered applications that can handle diverse types of input data and provide intelligent responses via a RESTful interface."}

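Using function-calling to get structured output

If we were combining this technique with other query analysis techniques, we would likely use function calling to get structured query objects. We can use function calling for HyDE like so: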

```python
from langchain_core.output_parsers.openai_tools import PydanticToolsParser
from langchain_core.pydantic_v1 import BaseModel, Field


class Query(BaseModel):
    answer: str = Field(
        ...,
        description="Answer the user question as best you can. Answer as though you were writing a tutorial that addressed the user question.",
    )


system = """You are an expert about a set of software for building LLM-powered applications called LangChain, LangGraph, LangServe, and LangSmith.
LangChain is a Python framework that provides a large set of integrations that can easily be composed to build LLM applications.
LangGraph is a Python package built on top of LangChain that makes it easy to build stateful, multi-actor LLM applications.
LangServe is a Python package built on top of LangChain that makes it easy to deploy a LangChain application as a REST API.
LangSmith is a platform that makes it easy to trace and test LLM applications."""

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "{question}"),
    ]
)

llm_with_tools = llm.bind_tools([Query])

hyde_chain = prompt | llm_with_tools | PydanticToolsParser(tools=[Query])

hyde_chain.invoke(
    {
        "question": "how to use multi-modal models in a chain and turn chain into a rest api"
    }
)
```

[Query(answer='To use multi-modal models in a chain, you can leverage LangGraph, a Python package built on top of LangChain that makes it easy to build stateful, multi-actor LLM applications. LangGraph allows you to create complex interactions between different actors in your application, enabling the use of multi-modal models effectively. Additionally, you can use LangServe, another Python package built on top of LangChain, to deploy your LangChain application as a REST API. LangServe simplifies the process of exposing your LLM-powered application as a web service, making it accessible for external interactions through HTTP requests.')]

Query routing

- 查询路由

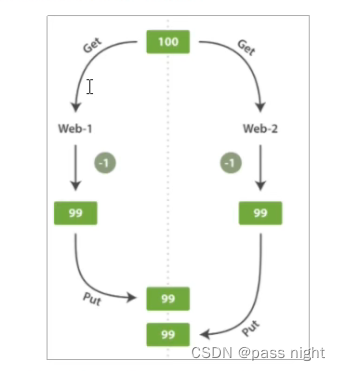

如果我们有多个索引,并且只有一个子集对任何给定的用户输入有用,我们可以将输入路由为仅从相关索引中检索结果。

例如,假设我们有一个用于所有 LangChain python 文档的向量存储索引,以及一个用于所有 LangChain js 文档的向量存储索引。给定一个有关 LangChain 使用的问题,我们想要推断问题所指的是哪种语言并查询相应的文档。查询路由是对应该对哪个索引或索引子集执行查询进行分类的过程。
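As a rough illustration of the classification step, the sketch below routes a question to one or both indexes using keyword rules. The index names, the keyword sets, and the `route` function are hypothetical; in practice the classification would more likely be done by an LLM returning a structured choice, with this rule-based version serving only as a stand-in.

```python
import re
from typing import Dict, List, Set

# Hypothetical index names mapped to keywords that hint at each language.
INDEX_KEYWORDS: Dict[str, Set[str]] = {
    "python_docs": {"python", "pip", "pydantic"},
    "js_docs": {"javascript", "js", "typescript", "npm"},
}


def route(question: str) -> List[str]:
    """Return the indexes a question should be run against.

    Falls back to every index when no language hint is found, so an
    ambiguous question still retrieves something.
    """
    tokens = set(re.findall(r"[a-z]+", question.lower()))
    matches = [name for name, keywords in INDEX_KEYWORDS.items() if tokens & keywords]
    return matches or list(INDEX_KEYWORDS)


print(route("How do I stream tokens in JavaScript?"))  # ['js_docs']
print(route("what is a retriever"))  # ['python_docs', 'js_docs']
```

Returning a list rather than a single name keeps the "subset of indexes" case open: the caller can query each returned index and merge the results.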

Step back prompting

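Sometimes search quality and model generations can be tripped up by the specifics of a question. One way to handle this is to first generate a more abstract "step back" question and then query based on both the original and the step-back question.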
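A minimal sketch of the "query on both" part follows. The step-back question would normally come from an LLM; here `fake_generator` is a hand-written stub, and `step_back_queries` is a hypothetical helper name, so the example only shows how the two queries are assembled.

```python
from typing import Callable, List


def step_back_queries(
    question: str, generate_step_back: Callable[[str], str]
) -> List[str]:
    """Return the original question plus its more abstract step-back version."""
    step_back = generate_step_back(question).strip()
    queries = [question]
    # Skip the step-back question if it is empty or merely repeats the original.
    if step_back and step_back.lower() != question.lower():
        queries.append(step_back)
    return queries


def fake_generator(question: str) -> str:
    """Stub standing in for an LLM call; returns a fixed, hand-written abstraction."""
    return "How do agents work in LangGraph?"


queries = step_back_queries(
    "Why does my LangGraph agent loop forever on tool errors?", fake_generator
)
print(queries)
```

Each query in the returned list would then be sent to the retriever, and the results combined before answering.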

Query structuring

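If our documents have multiple searchable or filterable attributes, we can infer from any raw user question which specific attributes should be searched or filtered on. For example, when a user input specifies something about video publication date, that should become a filter on the publish_date attribute of each document.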