arfs (Python): an all-in-one package for feature selection

1. Introduction

arfs documentation (Introduction): https://arfs.readthedocs.io/en/latest/Introduction.html

Here is the author's own introduction:

All relevant feature selection means trying to find all features carrying information usable for prediction, rather than finding a possibly compact subset of features on which some particular model has a minimal error. This might include redundant predictors. All relevant feature selection is model agnostic in the sense that it doesn’t optimize a scoring function for a specific model but rather tries to select all the predictors which are related to the response. This package implements 3 different methods (Leshy is an evolution of Boruta, BoostAGroota is an evolution of BoostARoota and GrootCV is a new one). They are sklearn compatible. See hereunder for details about those methods. You can use any sklearn compatible estimator with Leshy and BoostAGroota but I recommend lightGBM. It’s fast, accurate and has SHAP values builtin.

It also provides a module for performing preprocessing and perform basic feature selection (autobinning, remove columns with too many missing values, zero variance, high-cardinality, highly correlated, etc.).

Moreover, as an alternative to the all relevant problem, the ARFS package provides a MRmr feature selection which, theoretically, returns a subset of the predictors selected by an arfs method. ARFS also provides a LASSO feature selection which works especially well for (G)LMs and GAMs. You can combine Lasso with the TreeDiscretizer for introducing non-linearities into linear models and perform feature selection. Please note that one limitation of the lasso is that it treats the levels of a categorical predictor individually. However, this issue can be addressed by utilizing the TreeDiscretizer, which automatically bins numerical variables and groups the levels of categorical variables.
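Boruta, which Leshy extends, decides whether a feature is relevant by comparing it with "shadow" features: randomly shuffled copies of the predictors that cannot carry information about the target. The sketch below illustrates only that core idea. It assumes |Pearson correlation| with the target as a stand-in importance (the real selectors use a fitted model's native, SHAP or permutation importance) and a simple majority vote instead of the statistical test Boruta applies to the hit counts; `shadow_feature_selection` is a hypothetical name, not part of arfs.

```python
import numpy as np


def shadow_feature_selection(X, y, n_iter=20, seed=0):
    """Boruta-style sketch: a feature scores a 'hit' when its importance beats
    the best importance among shuffled (shadow) copies of all features."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    hits = np.zeros(n_features, dtype=int)

    def abs_corr(M):
        # Stand-in importance (assumption): |Pearson correlation| of each column with y
        return np.abs([np.corrcoef(M[:, j], y)[0, 1] for j in range(M.shape[1])])

    imp_real = abs_corr(X)  # deterministic here, so computed once
    for _ in range(n_iter):
        # Shadow features: each column shuffled independently, destroying any link to y
        shadows = np.column_stack([rng.permutation(X[:, j]) for j in range(n_features)])
        hits += imp_real > abs_corr(shadows).max()
    # Simple majority rule (assumption) instead of Boruta's test on the hit counts
    return np.flatnonzero(hits > n_iter / 2)


# Toy data: y depends on columns 0 and 1; columns 2 and 3 are pure noise
rng = np.random.default_rng(42)
X = rng.normal(size=(500, 4))
y = 2 * X[:, 0] + X[:, 1] + rng.normal(scale=0.5, size=500)
selected = shadow_feature_selection(X, y)
print(selected)  # columns 0 and 1 are expected to be among the selected
```

Because every shadow is a permutation of a real column, it has the same distribution as that column but no relationship with `y`, so the best shadow importance acts as a data-driven noise floor. This is also why all-relevant selection can keep redundant predictors: a near-duplicate of a relevant feature beats the shadows just as well.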

2. Features of the library

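- After getting to know the package a little, the first phrase that comes to mind is "all-in-one" for feature selection.

- First, the package includes currently popular variable selection methods such as the Boruta algorithm, and importance can be computed in several ways: native (model built-in) importance, SHAP importance and permutation importance. Users who are used to screening variables with Boruta therefore get more options;

- Second, the author also provides algorithms that differ from Boruta: BoostAGroota (an evolution of BoostARoota) and GrootCV. The author seems to favor GrootCV, which is fast because it uses LightGBM and more accurate because it uses SHAP importance.

- Finally, the package also includes more traditional methods such as Lasso regression and correlation-based selection.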
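The Lasso performs variable selection because its L1 penalty drives some coefficients exactly to zero. A minimal cyclic coordinate-descent sketch, assuming standardized predictors and a centered target (so no intercept), minimizing the same objective as scikit-learn's `Lasso`; the function names are hypothetical and this is not the arfs implementation:

```python
import numpy as np


def soft_threshold(z, t):
    # Proximal step of the L1 penalty: shrink z towards 0, exactly 0 when |z| <= t
    return np.sign(z) * max(abs(z) - t, 0.0)


def lasso_cd(X, y, alpha, n_iter=200):
    """Lasso by cyclic coordinate descent on
    (1 / (2n)) * ||y - X w||^2 + alpha * ||w||_1.
    Assumes standardized columns and a centered y (no intercept)."""
    n, p = X.shape
    w = np.zeros(p)
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual: remove every feature's contribution except feature j's
            r_j = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ r_j / n
            w[j] = soft_threshold(rho, alpha) / (X[:, j] @ X[:, j] / n)
    return w


rng = np.random.default_rng(1)
X = rng.normal(size=(500, 5))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize each column
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=500)
y = y - y.mean()
w = lasso_cd(X, y, alpha=0.2)
print(np.round(w, 2))  # columns 2-4 are expected to be exactly 0
```

The selected variables are simply those with non-zero coefficients. Note that each dummy column of a one-hot encoded categorical predictor gets its own coefficient here, which is the limitation the author mentions for categorical levels.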

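Running GrootCV, the author's preferred method, looks like this in a Jupyter notebook (`X` and `y` are assumed to be a pandas DataFrame of predictors and a binary target prepared earlier; the plot highlights predictors whose names contain `random` or `genuine`):

```python
%%time
import matplotlib.pyplot as plt
import arfs.feature_selection.allrelevant as arfsgroot
from arfs.benchmark import highlight_tick

# GrootCV fits LightGBM models internally (hence the LightGBM objective), with cross-validation
feat_selector = arfsgroot.GrootCV(
    objective="binary", cutoff=1, n_folds=5, n_iter=5, silent=True, fastshap=False
)
feat_selector.fit(X, y, sample_weight=None)

print(f"The selected features: {feat_selector.get_feature_names_out()}")
print(f"The agnostic ranking: {feat_selector.ranking_}")
print(f"The naive ranking: {feat_selector.ranking_absolutes_}")

fig = feat_selector.plot_importance(n_feat_per_inch=5)

# highlight the synthetic random variables and, in green, the genuine ones
fig = highlight_tick(figure=fig, str_match="random")
fig = highlight_tick(figure=fig, str_match="genuine", color="green")
plt.show()
```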

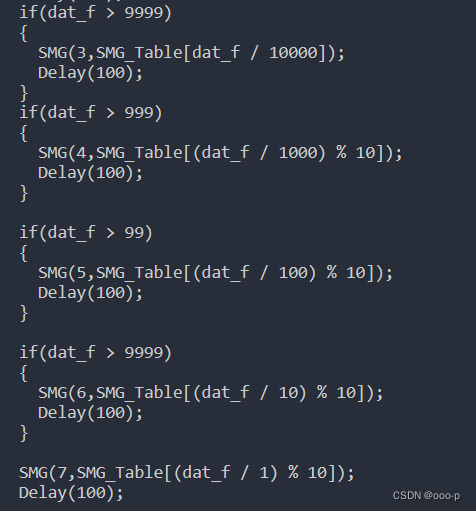
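Permutation importance, one of the importance options listed above, measures how much a fitted model's error grows when a single column is randomly shuffled. A minimal sketch, assuming mean squared error as the loss and a hypothetical already-fitted model (`predict`) that ignores column 2; it shows why a feature the model does not use gets exactly zero importance:

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when one column is shuffled (n_repeats shuffles per column).
    `predict` can be any fitted model's prediction function."""
    rng = np.random.default_rng(seed)
    base_mse = np.mean((y - predict(X)) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffle column j only, breaking its link with y
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            importances[j] += np.mean((y - predict(X_perm)) ** 2) - base_mse
    return importances / n_repeats


rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
y = 2 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.3, size=1000)


def predict(A):
    # Hypothetical fitted model that never looks at column 2
    return 2 * A[:, 0] + 0.5 * A[:, 1]


imp = permutation_importance(predict, X, y)
print(np.round(imp, 2))  # expected: column 0 largest, column 2 exactly 0
```

Unlike impurity-based (native) tree importance, this measure is computed from predictions alone, so it works with any estimator and is not inflated for high-cardinality predictors the model does not actually rely on.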

- Another feature is the concise code and good compatibility with scikit-learn, so the selectors can be used inside a Pipeline;

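```python
from lightgbm import LGBMClassifier
from sklearn.base import clone
from sklearn.pipeline import Pipeline
import arfs.feature_selection.allrelevant as arfsgroot
from arfs.feature_selection import (
    MissingValueThreshold,
    UniqueValuesThreshold,
    CollinearityThreshold,
)

# Assumed base estimator: LightGBM, as the author recommends (any sklearn estimator works)
model = LGBMClassifier(random_state=42, verbose=-1)
model = clone(model)  # unfitted copy, so earlier fits do not leak into the selector

# Leshy (an evolution of Boruta)
# feat_selector = arfsgroot.Leshy(model, n_estimators=50, verbose=1, max_iter=10, random_state=42, importance="shap")

# BoostAGroota
feat_selector = arfsgroot.BoostAGroota(
    estimator=model, cutoff=1, iters=10, max_rounds=10, delta=0.1, importance="shap"
)

# GrootCV
# feat_selector = arfsgroot.GrootCV(objective="binary", cutoff=1, n_folds=5, n_iter=5, silent=True)

arfs_fs_pipeline = Pipeline(
    [
        ("missing", MissingValueThreshold(threshold=0.05)),  # missing-value filter
        ("unique", UniqueValuesThreshold(threshold=1)),  # constant-column filter
        ("collinearity", CollinearityThreshold(threshold=0.85)),  # collinearity filter
        ("arfs", feat_selector),  # all-relevant selection on the remaining columns
    ]
)

X_trans = arfs_fs_pipeline.fit(X=X, y=y).transform(X=X)
```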

3. Overview of the library structure

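The library requires Python 3.9 or later. A GPU is not strictly necessary: LightGBM, the estimator the author recommends, runs on the CPU by default. Combining Boruta with SHAP values seems to be a trend: two other Python packages, BorutaShap and eBoruta, do the same, whereas I have not yet come across a similar package in R.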
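SHAP importance, which these packages pair with Boruta-style selection, is built on Shapley values: each feature's weighted average marginal contribution over all coalitions of the other features, with SHAP importance commonly summarized as the mean absolute Shapley value over samples. The brute-force sketch below computes exact Shapley values for a single prediction of a toy function. It assumes that features outside a coalition are replaced by one baseline value (one of several possible conventions), and it is exponential in the number of features, which is why tree-specific algorithms such as TreeSHAP exist. `exact_shapley` is a hypothetical helper name.

```python
from itertools import combinations
from math import factorial


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take their
    baseline value (assumed convention)."""
    p = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(p)]
        return f(z)

    phi = []
    for i in range(p):
        others = [j for j in range(p) if j != i]
        total = 0.0
        for size in range(p):
            # Shapley weight |S|! (p - |S| - 1)! / p! for coalitions S of this size
            weight = factorial(size) * factorial(p - size - 1) / factorial(p)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def f(z):
    # Toy model: a main effect (z0) and an interaction (z1 * z2)
    return 3 * z[0] + 2 * z[1] * z[2]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = exact_shapley(f, x, baseline)
print(phi)  # ≈ [3.0, 6.0, 6.0]
```

The interaction term contributes 12 to the prediction and is split equally between features 1 and 2, and the values add up to `f(x) - f(baseline)` (15 here), the additivity property that makes Shapley values attractive as importances.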

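The library has the following modules:

- Boruta-like variable selection methods, known as all-relevant feature selection;

- Lasso-regression-based variable selection;

- Variable selection based on the correlation between variables (e.g. Spearman correlation);

- A preprocessing module, e.g. for handling missing values, encoding variables and selecting columns.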
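The basic filters above (missing values, constant columns, Spearman-based collinearity) can be chained as in the pipeline example. The sketch below is a hypothetical NumPy re-implementation of that idea, not the arfs code: the function names are illustrative, the threshold semantics are assumptions, and the collinearity step greedily keeps the earlier column of each highly correlated pair.

```python
import numpy as np


def missing_filter(X, names, threshold=0.05):
    # Keep columns whose fraction of NaNs is at most `threshold`
    keep = [j for j in range(X.shape[1]) if np.isnan(X[:, j]).mean() <= threshold]
    return X[:, keep], [names[j] for j in keep]


def unique_filter(X, names, threshold=1):
    # Keep columns with more than `threshold` distinct non-missing values (drops constants)
    keep = [j for j in range(X.shape[1])
            if np.unique(X[~np.isnan(X[:, j]), j]).size > threshold]
    return X[:, keep], [names[j] for j in keep]


def collinearity_filter(X, names, threshold=0.85):
    # Spearman's rho = Pearson correlation of the column ranks
    # (double argsort gives ranks; ties are not averaged, NaNs assumed already removed)
    ranks = np.argsort(np.argsort(X, axis=0), axis=0)
    rho = np.abs(np.corrcoef(ranks, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        # Greedy: column order decides which member of a correlated pair survives
        if all(rho[j, k] <= threshold for k in keep):
            keep.append(j)
    return X[:, keep], [names[j] for j in keep]


rng = np.random.default_rng(0)
n = 200
a = rng.normal(size=n)
sparse = rng.normal(size=n)
sparse[::2] = np.nan  # 50% missing
X = np.column_stack([a, np.exp(a), np.ones(n), sparse, rng.normal(size=n)])
names = ["a", "exp_a", "const", "sparse", "z"]

for step in (missing_filter, unique_filter, collinearity_filter):
    X, names = step(X, names)
print(names)  # ['a', 'z']
```

Spearman is used because `exp(a)` is a monotone but non-linear transform of `a`: its rank correlation with `a` is exactly 1, whereas the population Pearson correlation of a standard normal variable and its exponential is only about 0.76, so a Pearson-based filter at 0.85 would typically keep both columns.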