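Table of Contents

- Standard convolution
- Depthwise separable convolution
  - Depthwise convolution
  - Pointwise convolution
- Comparison
- Code (PyTorch implementation)
- Results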

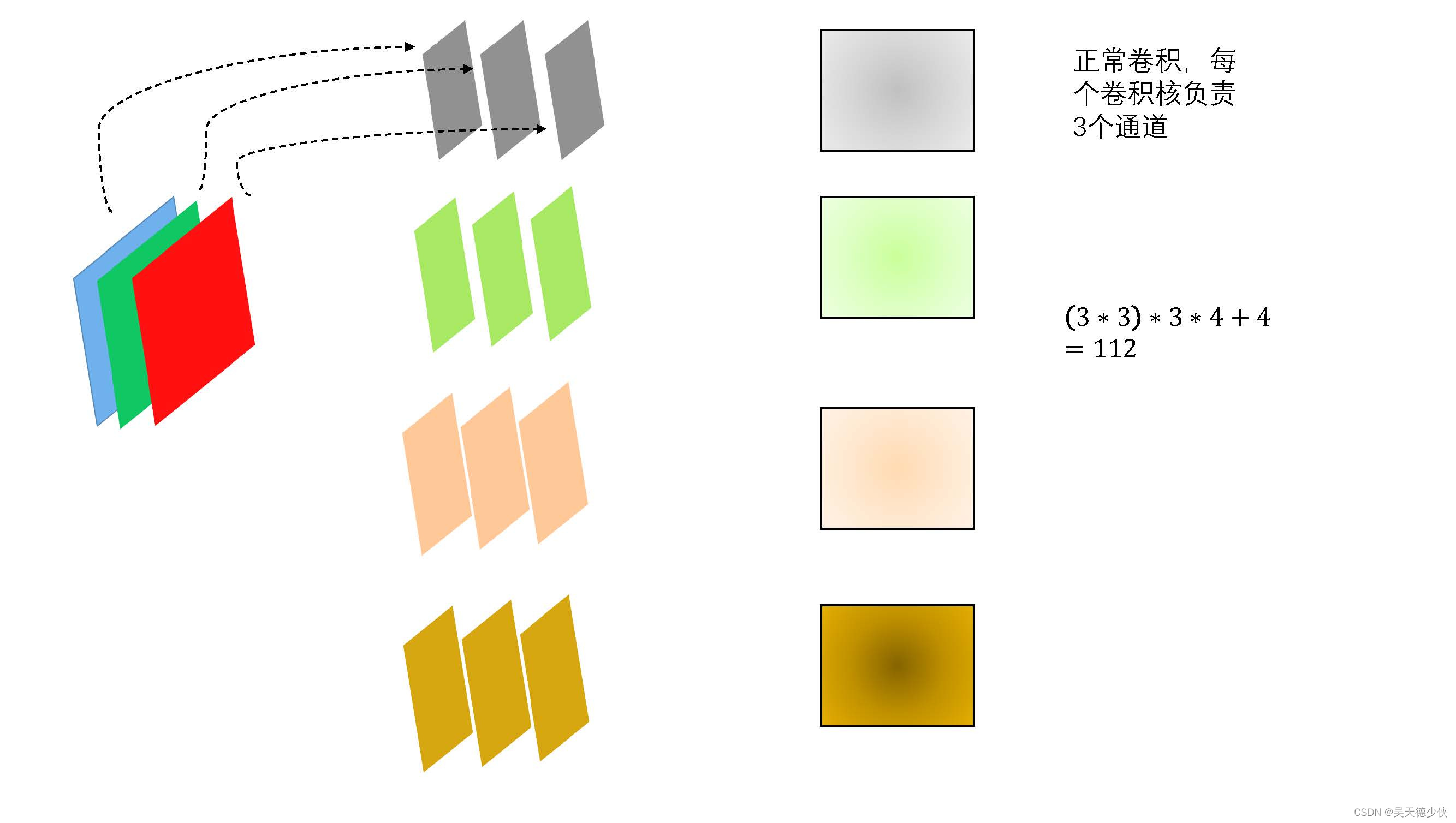

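Standard convolution

This is the ordinary convolution we use most of the time. The running example has 3 input channels, 4 output channels, and a 3×3 kernel, the same settings as the code later in this post.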

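Its parameter count is 112:

$$(\text{kernel size}) \times C_{in} \times C_{out} + \text{bias} = (3 \times 3) \times 3 \times 4 + 4 = 112$$

where $C_{in}$ and $C_{out}$ are the numbers of input and output channels, and there is one bias per output channel.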

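Depthwise separable convolution

A depthwise separable convolution is a depthwise convolution followed by a pointwise convolution:

$$\text{depthwise separable convolution} = \text{depthwise convolution} + \text{pointwise convolution}$$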

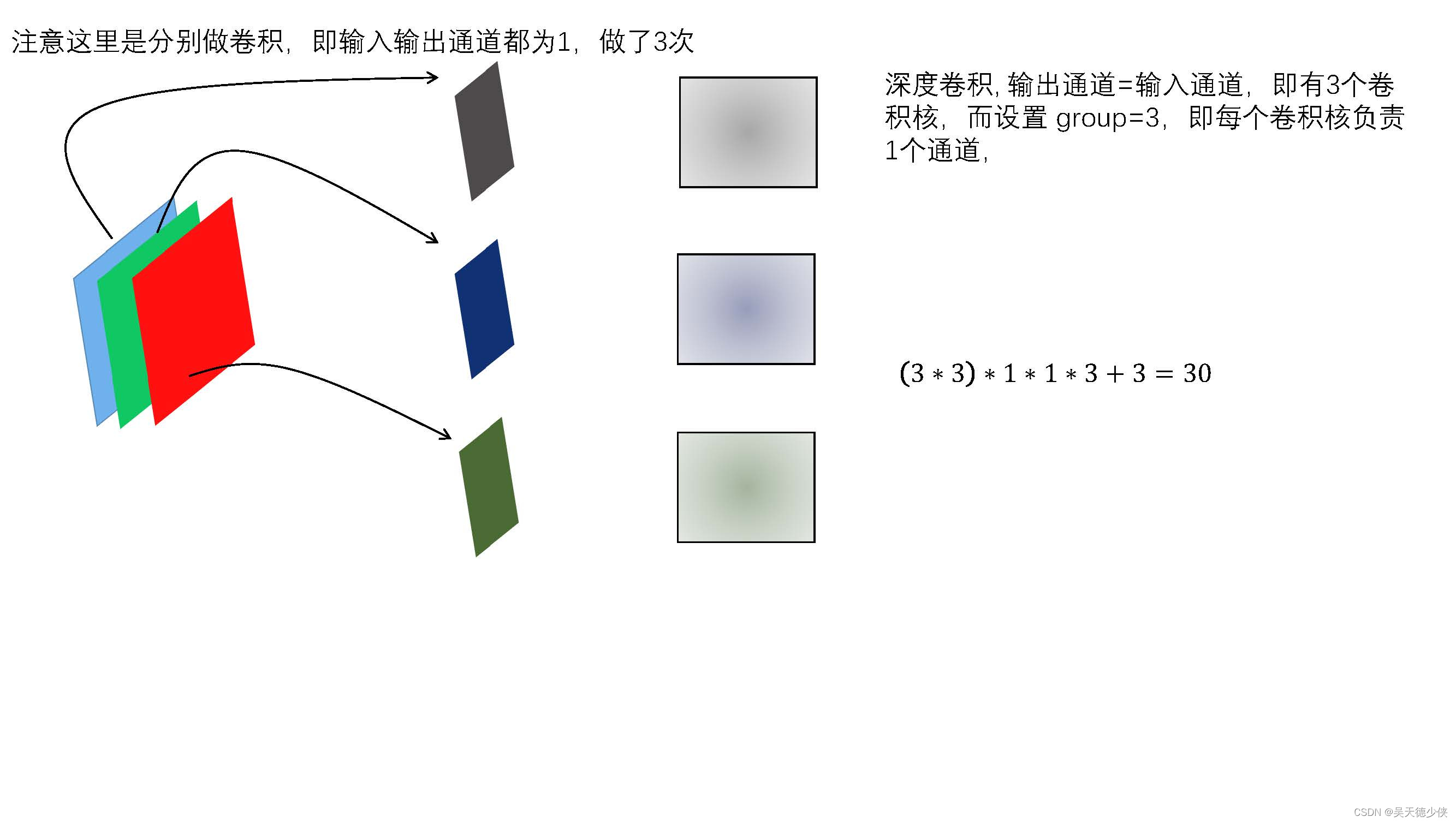

We keep the same example. Because there are 3 input channels, we set `groups=3`.
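`groups` decides which input channels each filter can see. The helper below is a small sketch; the name `group_connections` is hypothetical, not a PyTorch API. It follows the grouping rule `nn.Conv2d` uses: `in_channels` and `out_channels` must both be divisible by `groups`, the channels are split into that many equal, contiguous groups, and output group i only reads input group i.

```python
def group_connections(in_channels, out_channels, groups):
    """For each output channel, list the input channels it reads.

    Hypothetical helper mirroring nn.Conv2d's grouping rule: channels are split
    into `groups` contiguous groups and output group i only sees input group i.
    """
    if in_channels % groups or out_channels % groups:
        raise ValueError("in_channels and out_channels must be divisible by groups")
    in_per_group = in_channels // groups
    out_per_group = out_channels // groups
    conns = []
    for o in range(out_channels):
        g = o // out_per_group          # group this output channel belongs to
        start = g * in_per_group        # first input channel of that group
        conns.append(list(range(start, start + in_per_group)))
    return conns


print(group_connections(3, 3, groups=3))  # [[0], [1], [2]]  (depthwise)
print(group_connections(3, 4, groups=1))  # [[0, 1, 2], [0, 1, 2], [0, 1, 2], [0, 1, 2]]  (standard)
```

With `groups=3` every filter touches exactly one input channel, which is what makes the convolution "depthwise"; with `groups=1` every filter touches all of them.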

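Depthwise convolution

Its parameter count is 30. With `groups=3`, each of the 3 input channels is convolved with its own 3×3 filter, so the layer has 3 output channels and each filter sees only $C_{in}/g = 1$ input channel:

$$(\text{kernel size}) \times \frac{C_{in}}{g} \times C_{out} + \text{bias} = (3 \times 3) \times \frac{3}{3} \times 3 + 3 = 30$$

where $g$ is the number of groups.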
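The formula can be checked with a few lines of arithmetic. This is a sketch; `conv2d_param_count` is a hypothetical helper that assumes the weight shape `nn.Conv2d` uses, `(C_out, C_in // groups, k, k)`, plus one bias per output channel. Setting `groups=1` recovers the standard-convolution formula.

```python
def conv2d_param_count(k, c_in, c_out, groups=1, bias=True):
    """Parameters of a k x k Conv2d: weights of shape (c_out, c_in // groups, k, k)
    plus one bias per output channel (hypothetical helper, not a PyTorch API)."""
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must both be divisible by groups")
    weights = (k * k) * (c_in // groups) * c_out
    return weights + (c_out if bias else 0)


print(conv2d_param_count(3, 3, 3, groups=3))  # 30  (depthwise)
print(conv2d_param_count(3, 3, 4, groups=1))  # 112 (standard: same formula with g = 1)
```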

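Pointwise convolution

This is a standard convolution with a 1×1 kernel. The number of kernels equals the number of output channels, i.e. 4.

Its parameter count is 16:

$$(\text{kernel size}) \times C_{in} \times C_{out} + \text{bias} = (1 \times 1) \times 3 \times 4 + 4 = 16$$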
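With both steps defined, a minimal NumPy sketch can make the composition concrete. It is not the PyTorch code in this post: the function names are hypothetical, inputs are a single unbatched `(C, H, W)` array, and it assumes stride 1 with zero "same" padding (like `padding=1` for a 3×3 kernel). It runs a 3×3 depthwise convolution followed by a 1×1 pointwise convolution, then checks that the result equals a standard convolution whose kernel is factored as `W[o, c] = P[o, c] * D[c]`.

```python
import numpy as np


def depthwise_conv(x, d, bd):
    """'Same' depthwise conv (cross-correlation, as in PyTorch).
    x: (C, H, W); d: (C, k, k), one k x k filter per channel; bd: (C,)."""
    c, k, _ = d.shape
    p = k // 2
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding on H and W
    y = np.zeros((c, h, w))
    for i in range(k):
        for j in range(k):
            # each channel is filtered only by its own kernel d[c]
            y += d[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return y + bd[:, None, None]


def pointwise_conv(x, pw, bp):
    """1x1 conv: a per-pixel matrix multiply that mixes channels.
    x: (C_in, H, W); pw: (C_out, C_in); bp: (C_out,)."""
    return np.einsum("oc,chw->ohw", pw, x) + bp[:, None, None]


def standard_conv(x, wt, b):
    """'Same' standard conv. wt: (C_out, C_in, k, k); b: (C_out,)."""
    c_out, _, k, _ = wt.shape
    p = k // 2
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    y = np.zeros((c_out, h, w))
    for i in range(k):
        for j in range(k):
            # every output channel mixes all input channels at this kernel offset
            y += np.einsum("oc,chw->ohw", wt[:, :, i, j], xp[:, i:i + h, j:j + w])
    return y + b[:, None, None]


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))  # 3 input channels, as in the example
d = rng.standard_normal((3, 3, 3))  # depthwise: three 3x3 filters
bd = rng.standard_normal(3)
pw = rng.standard_normal((4, 3))    # pointwise: 4 output channels
bp = rng.standard_normal(4)

separable = pointwise_conv(depthwise_conv(x, d, bd), pw, bp)

# The same output from a standard conv with a factored kernel and folded bias
wt = pw[:, :, None, None] * d[None, :, :, :]  # shape (4, 3, 3, 3)
b = pw @ bd + bp
standard = standard_conv(x, wt, b)

print(separable.shape)                   # (4, 8, 8)
print(np.allclose(separable, standard))  # True
```

The factored kernel shows where the savings come from: the separable layer can only express standard kernels of this restricted form, which it stores with 27 + 12 weights instead of 108.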

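Comparison

Standard convolution parameters: 112

Depthwise separable convolution parameters: 30 + 16 = 46

Even for this small convolution, the parameter count drops by more than half (from 112 to 46, about 59% fewer). The multiply-adds per output position drop similarly, from 3×3×3×4 = 108 to 3×3×3 + 3×4 = 39 (ignoring bias), which is why depthwise separable convolutions are usually faster. Actual speed still depends on the hardware and the library implementation, and the Results section shows that the separable model needs more activation memory.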
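The multiply-add figures above can be reproduced with plain arithmetic. This sketch assumes stride 1 and "same" padding (so the output is H×W, here 224×224), ignores bias, and uses a hypothetical helper name. Ignoring bias, the separable-to-standard ratio simplifies to $1/C_{out} + 1/k^2$, here $1/4 + 1/9 = 13/36$.

```python
def separable_vs_standard(k, c_in, c_out, h, w):
    """Weights and multiply-adds (bias ignored) for a stride-1, 'same'-padded
    k x k conv with an h x w output (hypothetical helper)."""
    std_weights = k * k * c_in * c_out
    dw_weights = k * k * c_in   # one k x k filter per input channel
    pw_weights = c_in * c_out   # 1 x 1 conv mixing channels
    sep_weights = dw_weights + pw_weights
    # every weight is used once per output position
    return std_weights, sep_weights, std_weights * h * w, sep_weights * h * w


std_w, sep_w, std_macs, sep_macs = separable_vs_standard(3, 3, 4, 224, 224)
print(std_w, sep_w)             # 108 39
print(std_macs, sep_macs)       # 5419008 1956864
print(round(sep_w / std_w, 3))  # 0.361, i.e. 1/4 + 1/9
```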

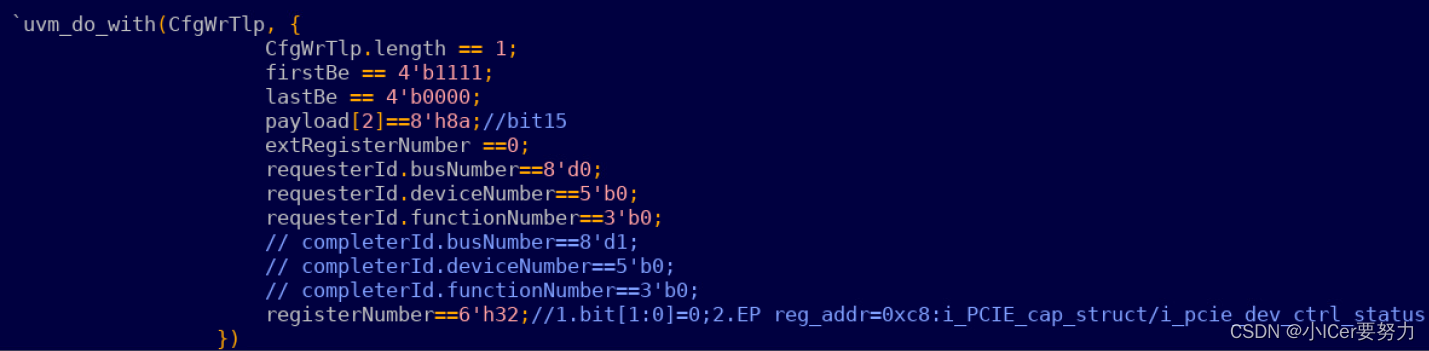

Code (PyTorch implementation)

```python
import torch
from torch import nn
import torchsummary


class Model_1(nn.Module):
    """Single 3x3 convolution; with groups=1 this is a standard convolution."""

    def __init__(self, in_channels, out_channels, groups):
        super(Model_1, self).__init__()
        self.conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                              groups=groups, kernel_size=3, padding=1)

    def forward(self, x):
        y = self.conv(x)
        return y


class Model_2(nn.Module):
    """Depthwise separable convolution: 3x3 depthwise, then 1x1 pointwise."""

    def __init__(self, in_channels, out_channels):
        super(Model_2, self).__init__()
        # Depthwise: groups=in_channels gives each input channel its own 3x3 filter
        self.conv_1 = nn.Conv2d(in_channels=in_channels, out_channels=in_channels,
                                groups=in_channels, kernel_size=3, padding=1)
        # Pointwise: a standard 1x1 convolution mixes channels into out_channels
        self.conv_2 = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                kernel_size=1)

    def forward(self, x):
        x = self.conv_1(x)
        y = self.conv_2(x)
        return y


if __name__ == '__main__':
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Standard convolution: 3 -> 4 channels
    model = Model_1(3, 4, 1).to(device)
    torchsummary.summary(model, input_size=(3, 224, 224))

    # Depthwise separable convolution: 3 -> 4 channels
    model = Model_2(3, 4).to(device)
    torchsummary.summary(model, input_size=(3, 224, 224))
```

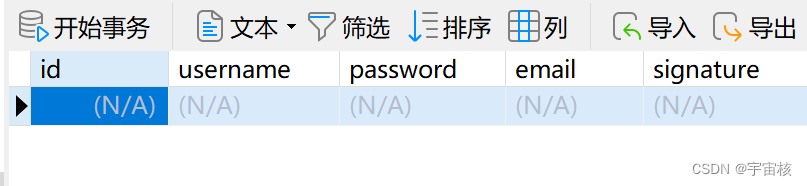

Results

```
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1          [-1, 4, 224, 224]             112
================================================================
Total params: 112
Trainable params: 112
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.57
Forward/backward pass size (MB): 1.53
Params size (MB): 0.00
Estimated Total Size (MB): 2.11
----------------------------------------------------------------
```

```
----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1          [-1, 3, 224, 224]              30
            Conv2d-2          [-1, 4, 224, 224]              16
================================================================
Total params: 46
Trainable params: 46
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.57
Forward/backward pass size (MB): 2.68
Params size (MB): 0.00
Estimated Total Size (MB): 3.25
----------------------------------------------------------------
```
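The separable model has fewer parameters but a larger "Forward/backward pass size" (2.68 MB vs 1.53 MB), because it also stores the 3×224×224 output of the depthwise layer. The sketch below reproduces both figures, assuming (from my reading of torchsummary's source) that the estimate is the total number of output elements across all layers at batch size 1, times 4 bytes for float32, times 2 for gradients. The helper name is hypothetical.

```python
import math

BYTES_PER_FLOAT32 = 4
MB = 1024 ** 2


def forward_backward_mb(layer_output_shapes):
    """Assumed torchsummary estimate: all layer output elements (batch of 1),
    x4 bytes per float32, x2 to account for gradients (hypothetical helper)."""
    total_elements = sum(math.prod(shape) for shape in layer_output_shapes)
    return 2 * total_elements * BYTES_PER_FLOAT32 / MB


standard = forward_backward_mb([(4, 224, 224)])
# The separable model also keeps the 3 x 224 x 224 depthwise output
separable = forward_backward_mb([(3, 224, 224), (4, 224, 224)])
print(f"{standard:.2f} {separable:.2f}")  # 1.53 2.68
```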