Docker Networking

Preparation: remove all containers and images

docker rm -f $(docker ps -aq)

docker rmi -f $(docker images -aq)
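When there is nothing left to delete, the two commands above fail with an error saying the command requires at least one argument, because `$(...)` expands to nothing. Below is a minimal guarded sketch, assuming bash or another POSIX shell. `cleanup_all` is a hypothetical helper name, not a Docker command.

```bash
# cleanup_all: hypothetical helper that force-removes every container,
# then every image, skipping a step when there is nothing to remove.
cleanup_all() {
  local containers images
  containers=$(docker ps -aq)
  if [ -n "$containers" ]; then
    # Unquoted on purpose: each container ID becomes a separate argument.
    docker rm -f $containers
  fi
  # An image with several tags is listed once per tag, so de-duplicate IDs.
  images=$(docker images -aq | sort -u)
  if [ -n "$images" ]; then
    docker rmi -f $images
  fi
}
```

Run `cleanup_all` only on a throwaway machine, because it deletes every container and image on the host.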

The docker0 network

Check the host's network interfaces:

ip addr

[root@iZbp15293q8kgzhur7n6kvZ /]# ip addr

# Local loopback interface

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

# Primary network interface (eth0)

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:2a:98:75 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.176/24 brd 192.168.0.255 scope global dynamic eth0

valid_lft 315187556sec preferred_lft 315187556sec

inet6 fe80::216:3eff:fe2a:9875/64 scope link

valid_lft forever preferred_lft forever

# docker0, the bridge created by Docker

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:c0:01:1f:34 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c0ff:fe01:1f34/64 scope link

valid_lft forever preferred_lft forever

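A large project can have many microservices, with one service per container. How do these containers reach each other? Addressing them by IP works, but a container's IP can change when it is restarted or recreated. IP is therefore not a reliable address, and the better approach is to address containers by name.

Docker assigns an IP to every container it starts. docker0 is Docker's default network: any container created without an explicit network is attached to docker0. For real projects, we should create custom networks.

Start a container and check its IP: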

[root@iZbp15293q8kgzhur7n6kvZ /]# docker run -itd --name web01 centos

Unable to find image 'centos:latest' locally

latest: Pulling from library/centos

a1d0c7532777: Already exists

Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Status: Downloaded newer image for centos:latest

8708f9efa52cb166be8c9904edd95c2d37d51475c1daf78213fcb8d55c402d53

# The container's IP comes from the docker0 subnet (172.17.0.0/16)

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

116: eth0@if117: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# The host can ping this container

[root@iZbp15293q8kgzhur7n6kvZ /]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.097 ms

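Compare the host's interfaces with the container's.

After the container starts, it has an interface 116: eth0@if117. The host gains a matching interface 117: veth9f88f58@if116, which appears in the host listing below. The two form a pair: the @ifN suffix is the interface index of the other end.

Start another container, web02, for comparison.

web02 interfaces: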

[root@iZbp15293q8kgzhur7n6kvZ /]# docker run -itd --name web02 centos

de6c34801c3db4a14f49a81d7532c0fb1461e989e2d7a19eaf1b587685b01ead

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web02 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

118: eth0@if119: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Host interfaces:

[root@iZbp15293q8kgzhur7n6kvZ /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:2a:98:75 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.176/24 brd 192.168.0.255 scope global dynamic eth0

valid_lft 315186479sec preferred_lft 315186479sec

inet6 fe80::216:3eff:fe2a:9875/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:01:1f:34 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c0ff:fe01:1f34/64 scope link

valid_lft forever preferred_lft forever

117: veth9f88f58@if116: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 7a:cb:75:a2:a4:38 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::78cb:75ff:fea2:a438/64 scope link

valid_lft forever preferred_lft forever

119: veth5e85c8c@if118: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 62:fd:a5:83:48:4b brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::60fd:a5ff:fe83:484b/64 scope link

valid_lft forever preferred_lft forever

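Summary:

web01: container 116: eth0@if117 <-> host 117: veth9f88f58@if116

web02: container 118: eth0@if119 <-> host 119: veth5e85c8c@if118

- By default, every container that is started gets one pair of virtual interfaces.

- A veth pair is a pair of virtual network interfaces that always come in pairs. Each end attaches to a network protocol stack (here, one in the container and one on the host), and the two ends are connected to each other.

- The pair acts as a bridge between the inside and the outside of the container.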
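The pairing can be checked without comparing indexes by eye. Inside the container, `/sys/class/net/eth0/iflink` holds the interface index of the veth peer, and the host interface with that index is the matching end. Below is a sketch, assuming the image provides `cat` and the host has iproute2. `host_veth_of` is a hypothetical helper.

```bash
# host_veth_of CONTAINER: print the host-side veth paired with the
# container's eth0 (hypothetical helper, not a Docker command).
host_veth_of() {
  local peer
  # iflink of a veth end is the ifindex of its peer (117 for web01 above).
  peer=$(docker exec "$1" cat /sys/class/net/eth0/iflink | tr -d '\r\n ')
  # "ip -o link" prints one interface per line, e.g.
  # "117: veth9f88f58@if116: <...> mtu 1500 ...". Match the index, drop "@ifN".
  ip -o link | awk -F': ' -v idx="$peer" '$1 == idx { sub(/@.*/, "", $2); print $2 }'
}
```

In the run above, `host_veth_of web01` should print veth9f88f58.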

Next, test whether the two containers can reach each other by IP:

# web01 ping web02

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web01 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.113 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.054 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.050 ms

^C

--- 172.17.0.3 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.050/0.072/0.113/0.029 ms

# web02 ping web01

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.078 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.065 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.079 ms

^C

--- 172.17.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.065/0.074/0.079/0.006 ms

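The two containers can ping each other by IP.

Summary:

Docker uses Linux bridging and creates a virtual bridge (docker0) on the host. When a container starts, Docker assigns it an IP address from the bridge's subnet, called the Container-IP. The bridge also serves as every container's default gateway. Because all containers on the same host are attached to the same bridge, they can communicate directly using their Container-IPs.

Docker container networking builds on Linux virtual networking. For each container it creates one virtual interface on the host and one inside the container, and connects them. Such a pair of interfaces is called a veth pair.

Network interfaces in Docker are virtual by default. Virtual interfaces forward traffic efficiently because Linux copies data between them inside the kernel, without passing it through an external network device. To the host and the container, a virtual interface behaves like an ordinary Ethernet NIC, except that no physical hardware is involved.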
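You can check from inside a container that docker0 is its default gateway. Below is a sketch, assuming the image ships iproute2's `ip` (the centos image used here does, since `ip addr` worked above). `default_gateway_of` is a hypothetical helper.

```bash
# default_gateway_of CONTAINER: print the default gateway as seen from
# inside the container (hypothetical helper).
default_gateway_of() {
  # "ip route" prints a line like "default via 172.17.0.1 dev eth0";
  # field 3 of that line is the gateway address.
  docker exec "$1" ip route | awk '$1 == "default" { print $3; exit }'
}
```

For web01 this should print 172.17.0.1. That is the same address reported by `docker network inspect bridge --format '{{range .IPAM.Config}}{{.Gateway}}{{end}}'`.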

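The --link option

We have seen that containers can ping each other by IP. However, if a container is deleted and replaced by a new one, the new container may get a different IP.

It is therefore better to address containers by name. Test whether the two containers can ping each other by name: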

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web01 ping web02

ping: web02: Name or service not known

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web02 ping web01

ping: web01: Name or service not known

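Neither container can reach the other by name.

To fix this, use the --link option when starting a container to name another container that it should be able to reach by name: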

[root@iZbp15293q8kgzhur7n6kvZ /]# docker run -itd --name web03 --link web02 centos

c50590fbd3880afbaeddf1d5f67274170328d4b40d63f109c84d2754f5ecca39

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web03 ping web02

PING web02 (172.17.0.3) 56(84) bytes of data.

64 bytes from web02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.103 ms

64 bytes from web02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.055 ms

64 bytes from web02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.054 ms

^C

--- web02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.054/0.070/0.103/0.024 ms

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web02 ping web03

ping: web03: Name or service not known

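web03 can ping web02, but web02 cannot ping web03: --link works in one direction only.

Why does it work?

The principle is the same as ordinary hostname mapping.

When we visit www.baidu.com, the system first looks in the local hosts file for a mapping and queries DNS only if none is found. Here web02 plays the role of www.baidu.com: --link adds an entry for web02 to web03's hosts file.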

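We can confirm this by printing web03's hosts file, which should contain an entry for web02:

docker exec -it web03 cat /etc/hosts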
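The lookup itself is simple: the resolver scans the hosts file for a line listing the name and uses that line's IP. Below is a simplified sketch of that scan. `hosts_lookup` is a hypothetical helper, and a real resolver also follows `/etc/nsswitch.conf` and falls back to DNS.

```bash
# hosts_lookup NAME FILE: print the IP on the first non-comment line of a
# hosts-format FILE that lists NAME as a hostname or alias (hypothetical).
# Field 1 of each line is the IP address; the remaining fields are names.
hosts_lookup() {
  awk -v name="$1" '
    /^[ \t]*#/ { next }
    {
      for (i = 2; i <= NF; i++)
        if ($i == name) { print $1; exit }
    }
  ' "$2"
}
```

For example, `docker exec web03 cat /etc/hosts > /tmp/web03_hosts` followed by `hosts_lookup web02 /tmp/web03_hosts` should find the entry that --link wrote. web02's hosts file has no web03 line, which matches the one-way behavior seen above.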

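--link is cumbersome and rarely used today; Docker's documentation describes it as a legacy feature. We generally use custom networks instead.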

Custom networks

Docker network commands

docker network COMMAND

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

List all networks

docker network ls

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4b2c8b4a7052 bridge bridge local

9377180a376d host host local

bc9bbface74e none null local

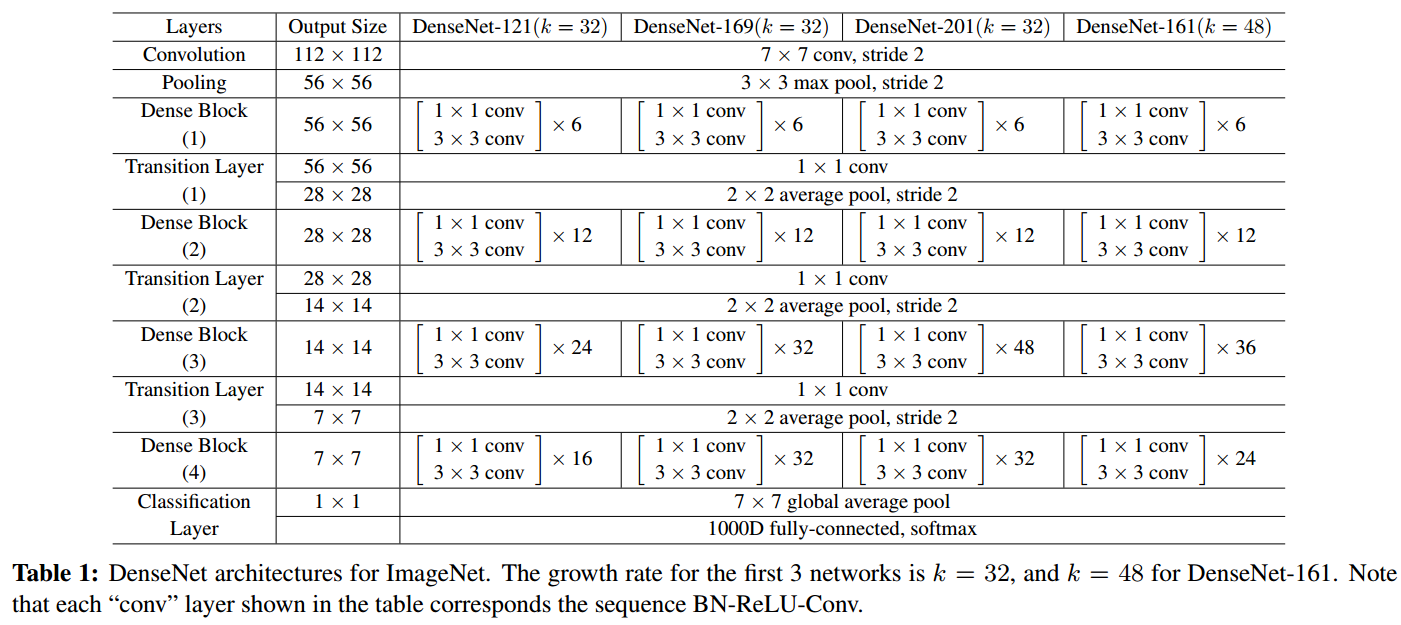

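Network modes

| Network mode | Option | Description |
|---|---|---|
| bridge | --net=bridge | Default. Creates a new network stack for the container on the docker0 bridge |
| none | --net=none | No networking is configured; you can enter the container later and configure it yourself |
| container | --net=container:name/id | The container shares another container's network namespace. A Kubernetes pod is several containers sharing one network namespace. |
| host | --net=host | The container shares the host's network namespace |
| user-defined | --net=network-name | You define a network with the docker network commands and specify it when creating a container |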

Inspect the docker0 network, which Docker names bridge:

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "4b2c8b4a70520b4b20808abcade35c2c4758bb404d54c57667f6b22c8f2bdd2f",

"Created": "2024-03-19T16:00:30.274778926+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

# IPAM config: a /16 subnet has 2^16 = 65536 addresses, 65534 of them assignable (one goes to the docker0 gateway)

"Subnet": "172.17.0.0/16",

# Gateway address, i.e. docker0

"Gateway": "172.17.0.1"

}

]

},

......

# Containers on this network; Name is the container name

"Containers": {

"8708f9efa52cb166be8c9904edd95c2d37d51475c1daf78213fcb8d55c402d53": {

"Name": "web01",

"EndpointID": "87460cc4bf26e390bcfd0b48308b1439155244d6127d1f52d10cf244ac7b0efa",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"c50590fbd3880afbaeddf1d5f67274170328d4b40d63f109c84d2754f5ecca39": {

"Name": "web03",

"EndpointID": "385f598cf212878438c827bb0bc07131e32227cc0485d373f238a4f518016628",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

},

"de6c34801c3db4a14f49a81d7532c0fb1461e989e2d7a19eaf1b587685b01ead": {

"Name": "web02",

"EndpointID": "f73e8417c9d5b32f5ccc69de44730af018f471f9b85a6ea9ece74b61b6e99b73",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

......

}

]
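The address count in the IPAM comment follows from the prefix length. A /n IPv4 subnet has 2^(32-n) addresses, minus the network and broadcast addresses. Below is a small arithmetic sketch. `usable_hosts` is a hypothetical helper and is only meaningful for prefixes up to /30.

```bash
# usable_hosts CIDR: number of assignable host addresses in an IPv4 subnet,
# i.e. 2^(32 - prefix) minus the network and broadcast addresses.
usable_hosts() {
  local prefix
  prefix=${1#*/}   # "172.17.0.0/16" -> "16"
  echo $(( (1 << (32 - $prefix)) - 2 ))
}
```

`usable_hosts 172.17.0.0/16` prints 65534. The gateway 172.17.0.1 takes one of those addresses, which leaves 65533 for containers.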

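Specifying the network when creating a container (--net)

docker run -itd --name web01 --net bridge centos

- bridge is the default

- Container names do not resolve

- --link makes a name resolve, but the link breaks once the linked container is removed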

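Example: defining your own network

Preparation: remove all containers

Command to create a network:

docker network create NETWORK_NAME

Options:

- -d: same as --driver; the network driver (default bridge)

- --subnet: the subnet of the network, in CIDR notation

- --gateway: the gateway of the subnet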

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network create \

> -d bridge \

> --subnet 192.169.0.0/16 \

> --gateway 192.169.0.1 \

> mynet

12f2b8c1ebf8b17230ab8ad427f43607a68f434c71d66d6473d3e2748f252cb7

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

4b2c8b4a7052 bridge bridge local

9377180a376d host host local

12f2b8c1ebf8 mynet bridge local

bc9bbface74e none null local
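`docker network create` fails if a network with the same name already exists, which makes setup scripts awkward to re-run. Below is a sketch of a wrapper that is safe to re-run. `ensure_network` is a hypothetical helper. Note that 192.169.0.0/16 lies outside the RFC 1918 private ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16), so it can shadow real public addresses. The usage example therefore assumes a private subnet instead.

```bash
# ensure_network NAME SUBNET GATEWAY: create a bridge network only if no
# network with that name exists yet (hypothetical helper).
ensure_network() {
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  else
    docker network create -d bridge --subnet "$2" --gateway "$3" "$1"
  fi
}
```

Usage: `ensure_network mynet 172.20.0.0/16 172.20.0.1`. Running it a second time only prints the "already exists" message.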

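Networks can be used to isolate projects from each other, and cluster environments can also be configured with custom networks.

Create two containers on the custom network: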

[root@iZbp15293q8kgzhur7n6kvZ /]# docker run -itd --name web01 --net mynet centos

64eae3b402fca58e8e3d1442d2d094b09d165f74c89ec8562886bbae9d8857cc

[root@iZbp15293q8kgzhur7n6kvZ /]# docker run -itd --name web02 --net mynet centos

f444f053dee9c75edd6cc091dd62cee52b4b8637d39837e6f902e6dfa9b38be5

After they are created, check the host's interfaces:

[root@iZbp15293q8kgzhur7n6kvZ /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:2a:98:75 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.176/24 brd 192.168.0.255 scope global dynamic eth0

valid_lft 315182855sec preferred_lft 315182855sec

inet6 fe80::216:3eff:fe2a:9875/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:c0:01:1f:34 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:c0ff:fe01:1f34/64 scope link

valid_lft forever preferred_lft forever

122: br-12f2b8c1ebf8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:31:db:89:21 brd ff:ff:ff:ff:ff:ff

inet 192.169.0.1/16 brd 192.169.255.255 scope global br-12f2b8c1ebf8

valid_lft forever preferred_lft forever

inet6 fe80::42:31ff:fedb:8921/64 scope link

valid_lft forever preferred_lft forever

124: veth18c6e58@if123: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-12f2b8c1ebf8 state UP group default

link/ether 16:aa:84:ed:1b:99 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::14aa:84ff:feed:1b99/64 scope link

valid_lft forever preferred_lft forever

126: vethb0f6cb2@if125: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-12f2b8c1ebf8 state UP group default

link/ether 92:7a:5a:50:6a:94 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::907a:5aff:fe50:6a94/64 scope link

valid_lft forever preferred_lft forever

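This differs clearly from the docker0 setup. There is a new bridge, br-12f2b8c1ebf8, with the gateway address 192.169.0.1/16. The two new veth interfaces are attached to it (master br-12f2b8c1ebf8) instead of to docker0.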
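The bridge device name matches the network ID printed by `docker network create`. By default Docker names the device `br-` plus the first 12 characters of the ID, as the output above shows (br-12f2b8c1ebf8 for mynet). Below is a sketch that assumes this default naming; the `com.docker.network.bridge.name` driver option can override it. `bridge_name_for` is a hypothetical helper.

```bash
# bridge_name_for NETWORK_ID: default Linux bridge name Docker uses for a
# user-defined bridge network ("br-" + first 12 characters of the ID).
bridge_name_for() {
  printf 'br-%.12s\n' "$1"
}
```

Usage: `ip link show master "$(bridge_name_for "$(docker network inspect -f '{{.Id}}' mynet)")"` should list the veth interfaces attached to mynet's bridge.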

Test whether the two containers can ping each other by name:

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web01 ping web02

PING web02 (192.169.0.3) 56(84) bytes of data.

64 bytes from web02.mynet (192.169.0.3): icmp_seq=1 ttl=64 time=0.071 ms

64 bytes from web02.mynet (192.169.0.3): icmp_seq=2 ttl=64 time=0.061 ms

64 bytes from web02.mynet (192.169.0.3): icmp_seq=3 ttl=64 time=0.070 ms

^C

--- web02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.061/0.067/0.071/0.008 ms

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web02 ping web01

PING web01 (192.169.0.2) 56(84) bytes of data.

64 bytes from web01.mynet (192.169.0.2): icmp_seq=1 ttl=64 time=0.067 ms

64 bytes from web01.mynet (192.169.0.2): icmp_seq=2 ttl=64 time=0.063 ms

64 bytes from web01.mynet (192.169.0.2): icmp_seq=3 ttl=64 time=0.067 ms

^C

--- web01 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.063/0.065/0.067/0.009 ms

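This time the two containers can ping each other by name.

How it works:

When you create a custom network and attach containers to it, Docker registers each container under its name. Container names are unique, and Docker's embedded DNS server maps each name to the corresponding container IP address.

When one container wants to reach another, it can use the target's container name as the hostname. The embedded DNS server then resolves that name to the container's IP address.

This makes container-to-container communication simpler. Even if a container's IP address changes, its name stays the same, so other containers can keep using it.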
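The embedded DNS server is visible from inside a container on a user-defined network. Its /etc/resolv.conf typically points at 127.0.0.11, which you can check with `docker exec web01 cat /etc/resolv.conf`. Below is a sketch that resolves a name the same way the container does. It assumes a glibc-based image such as centos, whose `getent` supports the `ahostsv4` database. `resolve_in` is a hypothetical helper.

```bash
# resolve_in CONTAINER NAME: resolve NAME from inside CONTAINER using the
# container's own resolver (hypothetical helper; glibc getent assumed).
resolve_in() {
  # ahostsv4 prints one line per socket type; field 1 is the IPv4 address.
  docker exec "$1" getent ahostsv4 "$2" | awk '{ print $1; exit }'
}
```

In the run above, `resolve_in web01 web02` should print 192.169.0.3.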

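Communication between containers on different networks

Containers on different networks cannot ping each other, whether by IP or by name. How can they communicate?

We can connect a container to the other network:

docker network connect NETWORK_NAME CONTAINER_NAME

Create a container on the docker0 network:

# Without --net, a container joins docker0 by default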

[root@iZbp15293q8kgzhur7n6kvZ /]# docker run -itd --name akuya centos

f45124e9a4c2f466ec160159f05eb44de0f4e090c284fe7553d8cb8c4a5ea5ef

# It cannot reach web01

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it akuya ping web01

ping: web01: Name or service not known

Next, connect the akuya container to the custom mynet network:

# Connect the network

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network connect mynet akuya

# Test whether they can ping each other

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it akuya ping web01

PING web01 (192.169.0.2) 56(84) bytes of data.

64 bytes from web01.mynet (192.169.0.2): icmp_seq=1 ttl=64 time=0.074 ms

64 bytes from web01.mynet (192.169.0.2): icmp_seq=2 ttl=64 time=0.069 ms

64 bytes from web01.mynet (192.169.0.2): icmp_seq=3 ttl=64 time=0.066 ms

^C

--- web01 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.066/0.069/0.074/0.010 ms

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it akuya ping web02

PING web02 (192.169.0.3) 56(84) bytes of data.

64 bytes from web02.mynet (192.169.0.3): icmp_seq=1 ttl=64 time=0.079 ms

64 bytes from web02.mynet (192.169.0.3): icmp_seq=2 ttl=64 time=0.056 ms

64 bytes from web02.mynet (192.169.0.3): icmp_seq=3 ttl=64 time=0.063 ms

^C

--- web02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.056/0.066/0.079/0.009 ms

[root@iZbp15293q8kgzhur7n6kvZ /]# docker exec -it web01 ping akuya

PING akuya (192.169.0.4) 56(84) bytes of data.

64 bytes from akuya.mynet (192.169.0.4): icmp_seq=1 ttl=64 time=0.060 ms

64 bytes from akuya.mynet (192.169.0.4): icmp_seq=2 ttl=64 time=0.057 ms

64 bytes from akuya.mynet (192.169.0.4): icmp_seq=3 ttl=64 time=0.102 ms

^C

--- akuya ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.057/0.073/0.102/0.020 ms

They can all ping each other now.

Inspect the docker0 network and the custom mynet network.

docker0 network:

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network inspect bridge

[

{

......

"Containers": {

"f45124e9a4c2f466ec160159f05eb44de0f4e090c284fe7553d8cb8c4a5ea5ef": {

"Name": "akuya",

"EndpointID": "b3b277ce1dba8c8a6e7cd7f74361c26c9261c2f2c7d3777d68fe89443dc39150",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

......

}

]

It contains the akuya container, with IP address 172.17.0.2.

mynet network:

[root@iZbp15293q8kgzhur7n6kvZ /]# docker network inspect mynet

[

{

......

"Containers": {

"64eae3b402fca58e8e3d1442d2d094b09d165f74c89ec8562886bbae9d8857cc": {

"Name": "web01",

"EndpointID": "aa68171e585ec3846874531d5201019b2e412554bdffa389876f3fc1f5078ca4",

"MacAddress": "02:42:c0:a9:00:02",

"IPv4Address": "192.169.0.2/16",

"IPv6Address": ""

},

"f444f053dee9c75edd6cc091dd62cee52b4b8637d39837e6f902e6dfa9b38be5": {

"Name": "web02",

"EndpointID": "d69c67cda750727d844b3412d95f0441fbdd7f005ade3cb4d34394d8d1254734",

"MacAddress": "02:42:c0:a9:00:03",

"IPv4Address": "192.169.0.3/16",

"IPv6Address": ""

},

"f45124e9a4c2f466ec160159f05eb44de0f4e090c284fe7553d8cb8c4a5ea5ef": {

"Name": "akuya",

"EndpointID": "4b8e56d6d8ec037e88b250bf6be76ae494c645584bbf6332ddd5c82a9ea5fc10",

"MacAddress": "02:42:c0:a9:00:04",

"IPv4Address": "192.169.0.4/16",

"IPv6Address": ""

}

},

......

}

]

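It also contains the akuya container, with IP address 192.169.0.4.

Conclusion: after being connected, akuya has two IPs, one on each network. A container can have multiple IPs.

To reach services on another network, connect the container to that network with docker network connect [OPTIONS] NETWORK CONTAINER.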
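You can list a container's address on each network by passing a Go template to `docker inspect --format`. Below is a sketch. `container_ips` and `ip_on` are hypothetical helpers.

```bash
# container_ips CONTAINER: one "network ip" line per attached network
# (hypothetical helper built on docker inspect's Go templates).
container_ips() {
  docker inspect --format '{{range $net, $cfg := .NetworkSettings.Networks}}{{$net}} {{$cfg.IPAddress}}{{"\n"}}{{end}}' "$1"
}

# ip_on CONTAINER NETWORK: just the container's address on one network.
ip_on() {
  container_ips "$1" | awk -v net="$2" '$1 == net { print $2 }'
}
```

For akuya, `container_ips akuya` should print two lines: bridge 172.17.0.2 and mynet 192.169.0.4. `docker network disconnect mynet akuya` detaches it from mynet again.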