- Transformers Explained Visually — Not Just How, but Why They Work So Well

- How does the input sequence reach the Attention module?

- Each input row is a word from the sequence

- Each word goes through a series of learnable transformations

- Attention Score: Dot Product between Query and Key words

- Attention Score: Dot Product between Query-Key and Value words

- What is the role of the Query, Key, and Value words?

- Dot Product tells us the similarity between words

- How does the Transformer learn the relevance between words?

- Summarizing: What makes the Transformer tick?

- Encoder Self-Attention in the Transformer

- Decoder Self-Attention in the Transformer

- Encoder-Decoder Attention in the Transformer

Original article: https://towardsdatascience.com/transformers-explained-visually-not-just-how-but-why-they-work-so-well-d840bd61a9d3

Transformers Explained Visually — Not Just How, but Why They Work So Well

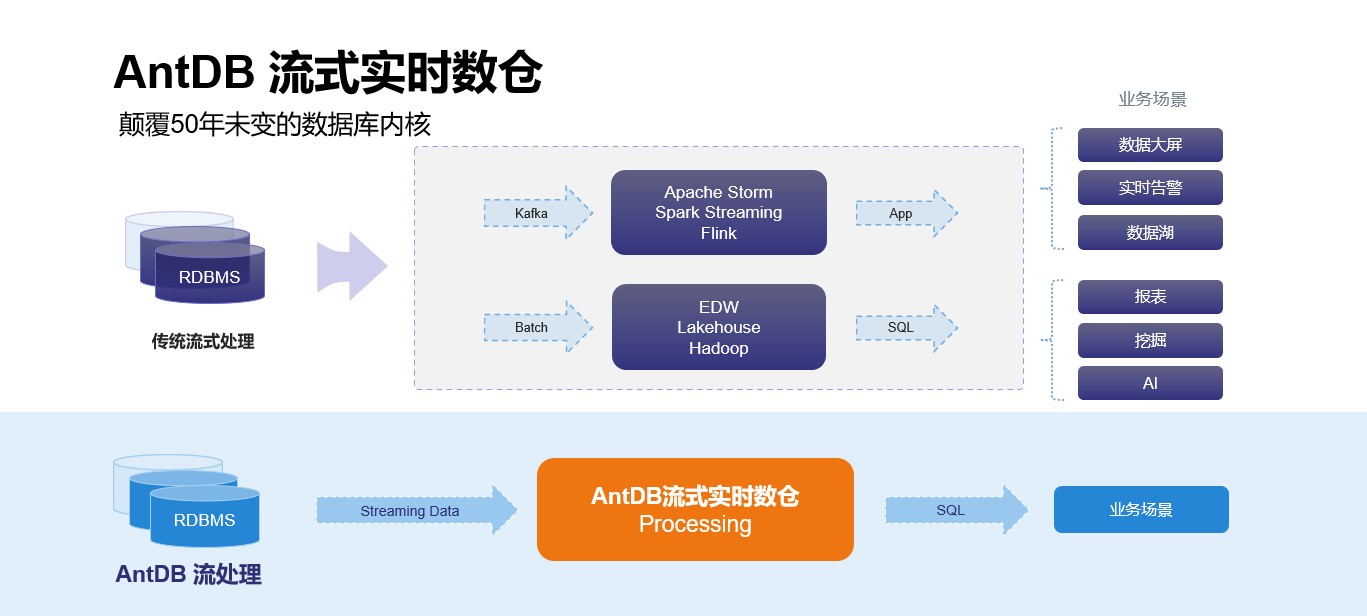

Transformers have taken the world of NLP by storm in the last few years. Now they are being used with success in applications beyond NLP as well.

The Transformer gets its power from the Attention module, which captures the relationship between each word in a sequence and every other word.

But the all-important question is: how exactly does it do that?

In this article, we will attempt to answer that question, and understand why it performs the calculations that it does.

I have a few more articles in my series on Transformers. In those articles, we learned about the Transformer architecture and walked through its operation during training and inference, step by step. We also explored under the hood and understood in detail exactly how it works.

Our goal is to understand not just how something works but why it works that way.

- Overview of functionality (How Transformers are used, and why they are better than RNNs. Components of the architecture, and behavior during Training and Inference)

- How it works (Internal operation end-to-end. How data flows and what computations are performed, including matrix representations)

- Multi-head Attention (Inner workings of the Attention module throughout the Transformer)

And if you’re interested in NLP applications in general, I have some other articles you might like.

- Beam Search (Algorithm commonly used by Speech-to-Text and NLP applications to enhance predictions)

- Bleu Score (Bleu Score and Word Error Rate are two essential metrics for NLP models)

To understand what makes the Transformer tick, we must focus on Attention. Let’s start with the input that goes into it, and then look at how it processes that input.

How does the input sequence reach the Attention module?

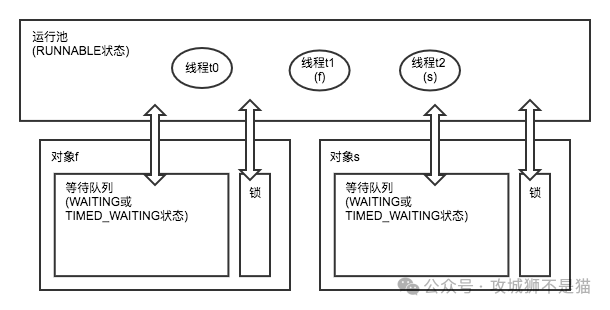

The Attention module is present in every Encoder in the Encoder stack, as well as every Decoder in the Decoder stack. We’ll zoom in on the Encoder attention first.

Attention in the Encoder (Image by Author)

As an example, let’s say that we’re working on an English-to-Spanish translation problem, where one sample source sequence is “The ball is blue”. The target sequence is “La bola es azul”.

The source sequence is first passed through the Embedding and Position Encoding layer, which generates an embedding vector for each word in the sequence. These embeddings are passed to the Encoder, where they first reach the Attention module.
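
The embedding step can be sketched in plain Python. This is a minimal sketch, assuming the fixed sinusoidal position encoding from the original Transformer paper (some models learn position embeddings instead); the four-word vocabulary, `d_model = 8`, and the helper names `positional_encoding` and `embed` are hypothetical, and the embedding table is random rather than learned.

```python
import math
import random

# Hypothetical toy setup: a 4-word vocabulary and a small model dimension.
vocab = {"The": 0, "ball": 1, "is": 2, "blue": 3}
d_model = 8
rng = random.Random(0)

# Embedding table: one row per vocabulary word (random here; learned in a real model).
embedding_table = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in vocab]


def positional_encoding(pos, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd dimensions."""
    pe = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
        angle = pos / (10000 ** ((i - i % 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe


def embed(sentence):
    """Return one row per word: word embedding plus position encoding."""
    rows = []
    for pos, word in enumerate(sentence.split()):
        emb = embedding_table[vocab[word]]
        pe = positional_encoding(pos, d_model)
        rows.append([e + p for e, p in zip(emb, pe)])
    return rows


X = embed("The ball is blue")
print(len(X), len(X[0]))  # -> 4 8 (one row per word, d_model columns)
```

Because the position encoding is added to the embedding rather than concatenated, every row keeps the same size, and row i still corresponds to word i.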

Within Attention, the embedded sequence is passed through three Linear layers, which produce three separate matrices known as the Query, Key, and Value. These three matrices are used to compute the Attention Score.

The important thing to keep in mind is that each ‘row’ of these matrices corresponds to one word in the source sequence.
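
To make the row-per-word point concrete, here is a minimal plain-Python sketch. The weight matrices are random stand-ins for the learned Linear layers (bias terms omitted), and the sizes `d_model = 8`, `d_k = 4` and the helper names are hypothetical.

```python
import random

rng = random.Random(42)
d_model, d_k = 8, 4
words = "The ball is blue".split()


def matmul(A, B):
    """Plain matrix product: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def random_weights(n_in, n_out):
    """Random (n_in x n_out) matrix standing in for a trained Linear layer."""
    return [[rng.gauss(0.0, 0.1) for _ in range(n_out)] for _ in range(n_in)]


# Hypothetical embedded sequence: one row of size d_model per word.
X = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in words]

# Three separate Linear layers produce three views of the same input.
W_q, W_k, W_v = (random_weights(d_model, d_k) for _ in range(3))
Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)

# Row i of Q, K and V depends only on row i of X, i.e. on word i.
for word, q_row in zip(words, Q):
    print(word, [round(x, 2) for x in q_row])
```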

The flow of the source sequence (Image by Author)

Each input row is a word from the sequence

To understand what is going on inside Attention, we will start with the individual words in the source sequence and follow their path as they make their way through the Transformer. In particular, we want to focus on what goes on inside the Attention module.

That will help us clearly see how each word in the source and target sequences interacts with other words in the source and target sequences.

So as we go through this explanation, concentrate on the operations performed on each word and on how each vector maps back to the original input word. We can set aside other details, such as matrix shapes, the specifics of the arithmetic, and multiple attention heads, when they are not directly relevant to where each word is going.

So to simplify the explanation and the visualization, let’s ignore the embedding dimension and track just the rows for each word.

The flow of each word in the source sequence (Image by Author)

Each word goes through a series of learnable transformations

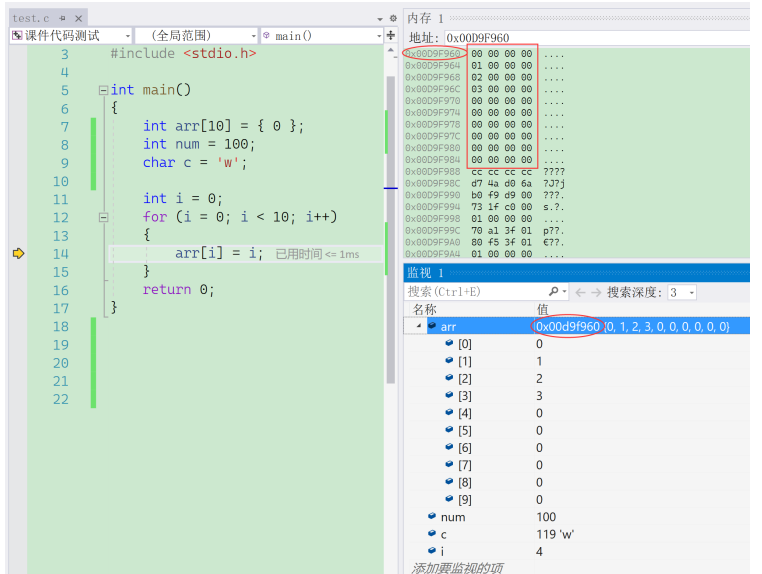

Each such row has been generated from its corresponding source word by a series of transformations: embedding, position encoding, and a Linear layer.

The embedding and Linear transformations are trainable operations (position encoding may be either a fixed function, such as the sinusoidal encoding of the original Transformer, or a learned embedding, depending on the model). This means that the weights used in those operations are not pre-decided but are learned by the model in such a way that they produce the desired output predictions.

Linear and Embedding weights are learned (Image by Author)

The key question is, how does the Transformer figure out what set of weights will give it the best results? Keep this point in the back of your mind as we will come back to it a little later.

Attention Score: Dot Product between Query and Key words

Attention performs several steps, but here, we will focus only on the Linear layer and the Attention Score.

Multi-head attention (Image by Author)

Attention Score calculation (Image by Author)

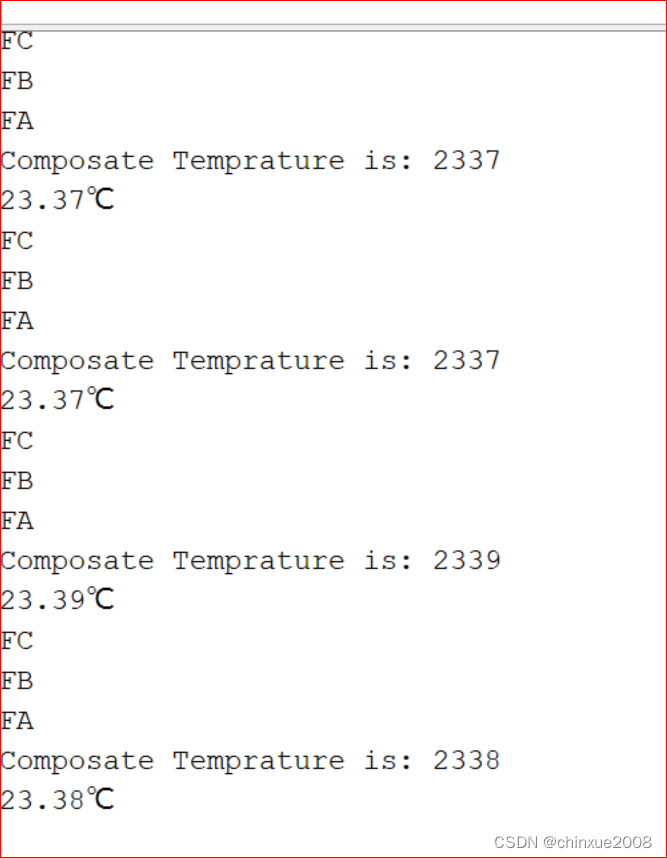

As we can see from the formula, the first step within Attention is a matrix multiply (i.e. a dot product between each pair of rows) between the Query (Q) matrix and the transpose of the Key (K) matrix. Watch what happens to each word.
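
The formula itself appears only in the figure. For reference, the scaled dot-product attention defined in the original Transformer paper is written below; d_k is the dimension of the Key vectors, and q_i, k_j, v_j (our own labels) denote row i of Q and row j of K and V. The second line spells out one cell of the ‘factor’ matrix and one output row.

$$
\text{Attention}(Q, K, V) = \text{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right)V
$$

$$
(QK^\top)_{ij} = q_i \cdot k_j, \qquad z_i = \sum_j w_{ij}\, v_j, \qquad w_{ij} = \text{softmax}_j\!\left(\frac{q_i \cdot k_j}{\sqrt{d_k}}\right)
$$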

We produce an intermediate matrix (let’s call it a ‘factor’ matrix) where each cell is the dot product between two words: one Query word and one Key word.

Dot Product between Query and Key matrices (Image by Author)

For instance, the fourth row holds the dot products between the fourth Query word and every Key word, one Key word per column.
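
A small worked example in plain Python, using hypothetical 2-dimensional Query and Key rows chosen by hand so the numbers are easy to follow (real rows would come from the Linear layers and have many more dimensions):

```python
words = ["The", "ball", "is", "blue"]
# Hypothetical Query and Key rows, one per word.
Q = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]


def dot(u, v):
    """Multiply paired numbers and sum the products."""
    return sum(a * b for a, b in zip(u, v))


# factor[i][j] = Query word i dotted with Key word j, i.e. (Q @ K^T)[i][j].
factor = [[dot(q, k) for k in K] for q in Q]

# Row 4 ("blue"): the fourth Query word against every Key word, one per column.
print(dict(zip(words, factor[3])))
# -> {'The': 1.0, 'ball': 1.0, 'is': 2.0, 'blue': 0.0}
```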

Dot Product between Query and Key matrices (Image by Author)

Attention Score: Dot Product between Query-Key and Value words

The next step is a matrix multiply between this intermediate ‘factor’ matrix and the Value (V) matrix, which produces the attention score output by the Attention module. Here we can see that the fourth row is the result of the fourth Query word interacting with every Key and Value word.

Dot Product between Query-Key and Value matrices (Image by Author)

This produces the Attention Score vector (Z) that is output by the Attention Module.

The way to think about the output score is that, for each word, it is the encoded value of every word from the “Value” matrix, weighted by the “factor” matrix. The factor matrix holds the dot products of that word’s Query vector with the Key vectors of all words.
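
In code, the weighting looks like this. A minimal plain-Python sketch: the ‘factor’ weights and Value rows are hypothetical numbers (the factor rows are already normalized here, as the softmax would do), and it checks that the matrix product gives the same row as an explicit weighted sum of the Value words.

```python
words = ["The", "ball", "is", "blue"]

# Hypothetical factor weights: rows are Query words, columns are Key words.
factor = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.6, 0.1, 0.2],
    [0.2, 0.2, 0.4, 0.2],
    [0.1, 0.5, 0.1, 0.3],
]
# Hypothetical Value rows, one per word.
V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]


def matmul(A, B):
    """Plain matrix product: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


Z = matmul(factor, V)

# The row for "blue", rebuilt explicitly as a weighted sum of the Value words.
z_blue = [0.0, 0.0]
for weight, v_row in zip(factor[3], V):
    z_blue = [z + weight * v for z, v in zip(z_blue, v_row)]

print([round(x, 2) for x in Z[3]], [round(x, 2) for x in z_blue])  # -> [0.8, 0.6] [0.8, 0.6]
```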

Attention Score is a weighted sum of the Value words (Image by Author)

What is the role of the Query, Key, and Value words?

The Query word can be interpreted as the word for which we are calculating Attention. The Key and Value word is the word to which we are paying attention, that is, the word whose relevance to the Query word is being measured.

Attention Score for the word “blue” pays attention to every other word (Image by Author)

For example, for the sentence “The ball is blue”, the row for the word “blue” will contain the attention scores for “blue” with every word in the sentence, including itself. Here, “blue” is the Query word, and the words it is compared against are the “Key/Value”.

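There are other operations being performed, such as a division and a softmax, but we can ignore them in this article. The division scales every score by the same constant, and the softmax normalizes each row so that its weights sum to 1. They change the numeric values in the matrices but don’t affect the position of each word row in the matrix. Nor do they introduce any new interactions between words: each cell still relates one Query word to one Key word.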
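
A short plain-Python sketch of those two steps, assuming the division by sqrt(d_k) and the row-wise softmax of the original Transformer paper; the raw scores and `d_k = 4` are hypothetical. It shows that each row sums to 1 and that the ranking of the Key words within each row is unchanged.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def ranking(row):
    """Column indices ordered from smallest to largest value."""
    return sorted(range(len(row)), key=row.__getitem__)


d_k = 4
# Hypothetical raw Query-Key scores: rows are Query words, columns are Key words.
scores = [
    [2.0, 0.5, 0.1, 1.0],
    [0.3, 3.0, 0.2, 0.4],
]

# Divide every score by sqrt(d_k), then apply softmax to each row separately.
weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]

for row_scores, row_weights in zip(scores, weights):
    # Each row sums to 1, and the order of the Key words within the row is unchanged.
    print(round(sum(row_weights), 6), ranking(row_scores) == ranking(row_weights))
```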

Dot Product tells us the similarity between words

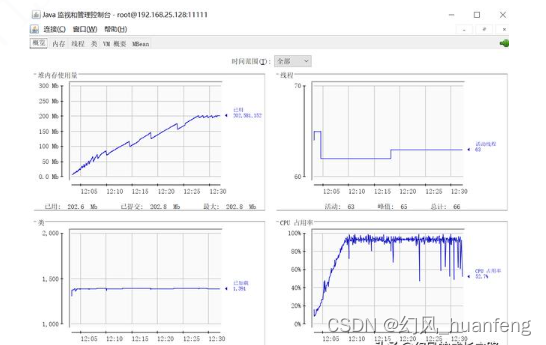

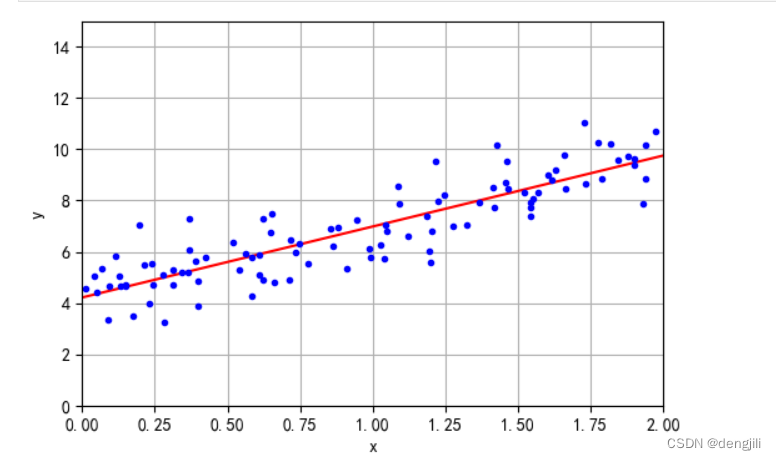

So we have seen that the Attention Score captures some interaction between a particular word and every other word in the sentence, by taking dot products and then using them to weight and add up the Value words. But how does the matrix multiply help the Transformer determine the relevance between two words?

To understand this, remember that the Query, Key, and Value rows are actually vectors with an Embedding dimension. Let’s zoom in on how the matrix multiplication between those vectors is calculated.

Each cell is a dot product between two word vectors (Image by Author)

When we do a dot product between two vectors, we multiply pairs of numbers and then sum them up.

- If the two paired numbers (e.g. ‘a’ and ‘d’ above) are both positive or both negative, then the product will be positive, and it will increase the final sum.

- If one number is positive and the other negative, then the product will be negative, and it will reduce the final sum.

- If the product is positive, the larger the magnitudes of the two numbers, the more they contribute to the final sum.

This means that if the signs of the corresponding numbers in the two vectors are aligned, the final sum will be larger.
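
The three rules above can be checked with a few hand-picked vectors (all values hypothetical):

```python
def dot(u, v):
    """Multiply paired numbers and sum the products."""
    return sum(a * b for a, b in zip(u, v))


base = [2.0, -1.0, 3.0]
aligned = [1.0, -2.0, 1.5]    # same sign as base in every position
mixed = [1.0, 2.0, -1.5]      # signs disagree in two positions
opposite = [-1.0, 2.0, -1.5]  # signs disagree in every position
scaled = [2.0, -4.0, 3.0]     # aligned, with larger magnitudes

print(dot(base, aligned), dot(base, mixed), dot(base, opposite), dot(base, scaled))
# -> 8.5 -4.5 -8.5 17.0
```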

How does the Transformer learn the relevance between words?

This notion of the Dot Product applies to the attention score as well. If the vectors for two words are more aligned, the attention score will be higher.

So what behavior do we want from the Transformer?

We want the attention score to be high for two words that are relevant to each other in the sentence. And we want the score to be low for two words that are unrelated to one another.

For example, for the sentence, “The black cat drank the milk”, the word “milk” is very relevant to “drank”, perhaps slightly less relevant to “cat”, and irrelevant to “black”. We want “milk” and “drank” to produce a high attention score, for “milk” and “cat” to produce a slightly lower score, and for “milk” and “black”, to produce a negligible score.

This is the output we want the model to learn to produce.

For this to happen, the word vectors for “milk” and “drank” must be aligned. The vectors for “milk” and “cat” should diverge somewhat, and those for “milk” and “black” should be quite different.
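
Here is a toy illustration of that target behavior, with hand-picked 3-dimensional vectors that are hypothetical (they are not learned, and real vectors have many more dimensions). The Query vector for “milk” is scored against the Key vectors of the other words, and the scores are then passed through a softmax:

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical vectors, chosen by hand purely for illustration.
query_milk = [1.0, 0.8, 0.0]
keys = {
    "drank": [0.9, 0.9, 0.1],   # closely aligned with "milk"
    "cat": [0.6, 0.2, 0.5],     # partly aligned
    "black": [-0.1, 0.0, 1.0],  # nearly orthogonal
}

scores = {word: dot(query_milk, k) for word, k in keys.items()}
weights = dict(zip(keys, softmax(list(scores.values()))))
for word in keys:
    print(f"{word:>6}: score={scores[word]:.2f} weight={weights[word]:.2f}")
```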

Let’s go back to the point we had kept at the back of our minds — how does the Transformer figure out what set of weights will give it the best results?

The word vectors are generated based on the word embeddings and the weights of the Linear layers. Therefore the Transformer can learn those embeddings, Linear weights, and so on to produce the word vectors as required above.

In other words, it will learn those embeddings and weights in such a way that if two words in a sentence are relevant to each other, their word vectors will be aligned and hence produce a higher attention score. For words that are not relevant to each other, the word vectors will not be aligned and will produce a lower attention score.
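
A toy sketch of that learning process, under heavy simplifying assumptions: the embeddings are fixed hypothetical vectors, the Query projection is the identity, only a hypothetical Key weight matrix `W_k` is updated, and instead of the real training loss (backpropagated from the model’s output predictions) it directly nudges `W_k` by gradient ascent to raise the “milk”/“drank” score and lower the “milk”/“black” score.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def vecmat(x, W):
    """Row vector x (length n) times matrix W (n x m)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


# Hypothetical fixed embeddings; W_Q is taken to be the identity, so q = embedding.
emb = {"milk": [1.0, 0.0, 1.0], "drank": [0.0, 1.0, 1.0], "black": [1.0, 1.0, 0.0]}
q_milk = emb["milk"]
# Learnable Key weights with an arbitrary starting point.
W_k = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, -1.0]]


def score(word):
    """Attention score between the Query for "milk" and the Key for `word`."""
    return dot(q_milk, vecmat(emb[word], W_k))


print("before:", score("drank"), score("black"))  # -> before: 0.0 1.0

# Objective: score("drank") - score("black"). Its gradient with respect to
# W_k[i][j] is (drank - black)[i] * q_milk[j].
diff = [d - b for d, b in zip(emb["drank"], emb["black"])]
lr = 0.1
for _ in range(20):
    for i in range(3):
        for j in range(3):
            W_k[i][j] += lr * diff[i] * q_milk[j]

print("after:", round(score("drank"), 2), round(score("black"), 2))  # -> after: 4.0 -3.0
```

After training, the Key vector for “drank” has moved toward the Query vector for “milk” and the one for “black” has moved away, so their scores swap order.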

Therefore the embeddings for “milk” and “drank” will be closely aligned and produce a high attention score. They will diverge somewhat for “milk” and “cat”, producing a slightly lower score, and will be quite different for “milk” and “black”, producing a low score.

This then is the principle behind the Attention module.

Summarizing: What makes the Transformer tick?

The dot product between the Query and Key computes the relevance between each pair of words. This relevance is then used as a “factor” to compute a weighted sum of all the Value words. That weighted sum is output as the Attention Score.

The Transformer learns its embeddings, Linear weights, and so on in such a way that words that are relevant to one another have more closely aligned vectors.

This is one reason for introducing the three Linear layers and making three versions of the input sequence, for the Query, Key, and Value. That gives the Attention module more parameters that it can learn, in order to tune how the word vectors are created.
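
Putting the pieces together, here is a compact plain-Python sketch of one attention head. It follows the scaled dot-product formula of the original Transformer paper; the sizes, the random weights standing in for the three learned Linear layers, and the function names are hypothetical, and bias terms, multiple heads, and masking are left out.

```python
import math
import random


def matmul(A, B):
    """Plain matrix product: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with the softmax applied to each row."""
    d_k = len(K[0])
    factor = matmul(Q, transpose(K))  # relevance of every Query/Key word pair
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in factor]
    return matmul(weights, V)  # weighted sum of the Value words


def self_attention(X, W_q, W_k, W_v):
    """Self-attention: Query, Key and Value all come from the same sequence X."""
    return attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))


rng = random.Random(0)


def random_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


d_model, d_k = 6, 4
X = random_matrix(4, d_model)  # stands in for the embedded "The ball is blue"
W_q, W_k, W_v = (random_matrix(d_model, d_k) for _ in range(3))
Z = self_attention(X, W_q, W_k, W_v)
print(len(Z), len(Z[0]))  # -> 4 4 (one output row per input word)
```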

Encoder Self-Attention in the Transformer

Attention is used in the Transformer in three places:

- Self-attention in the Encoder: the source sequence pays attention to itself

- Self-attention in the Decoder: the target sequence pays attention to itself

- Encoder-Decoder attention in the Decoder: the target sequence pays attention to the source sequence

Attention in the Transformer (Image by Author)

In the Encoder Self Attention, we compute the relevance of each word in the source sentence to each other word in the source sentence. This happens in all the Encoders in the stack.

Decoder Self-Attention in the Transformer

Most of what we’ve just seen in the Encoder Self Attention applies to Attention in the Decoder as well, with a few small but significant differences.

Attention in the Decoder (Image by Author)

In the Decoder Self Attention, we compute the relevance of each word in the target sentence to each other word in the target sentence.

Decoder Self Attention (Image by Author)

Encoder-Decoder Attention in the Transformer

In the Encoder-Decoder Attention, the Query is obtained from the target sentence and the Key/Value from the source sentence. Thus it computes the relevance of each word in the target sentence to each word in the source sentence.
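
A plain-Python sketch of that arrangement. It assumes, as in the original Transformer, that the source rows are the Encoder stack’s output and the target rows come from the Decoder’s preceding sublayer. All sizes and weights are hypothetical, and the target has 3 rows (an arbitrary choice) so that the two sequence lengths are easy to tell apart. The factor matrix has one row per target word and one column per source word.

```python
import math
import random


def matmul(A, B):
    """Plain matrix product: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(1)


def random_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


d_model, d_k = 6, 4
source = random_matrix(4, d_model)  # 4 source words: "The ball is blue"
target = random_matrix(3, d_model)  # 3 target words (hypothetical length)
W_q, W_k, W_v = (random_matrix(d_model, d_k) for _ in range(3))

Q = matmul(target, W_q)  # Query from the target sentence
K = matmul(source, W_k)  # Key from the source sentence
V = matmul(source, W_v)  # Value from the source sentence

factor = matmul(Q, transpose(K))  # relevance of each target word to each source word
weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in factor]
Z = matmul(weights, V)  # one output row per target word

print(len(factor), len(factor[0]), len(Z))  # -> 3 4 3
```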

Encoder-Decoder Attention (Image by Author)
