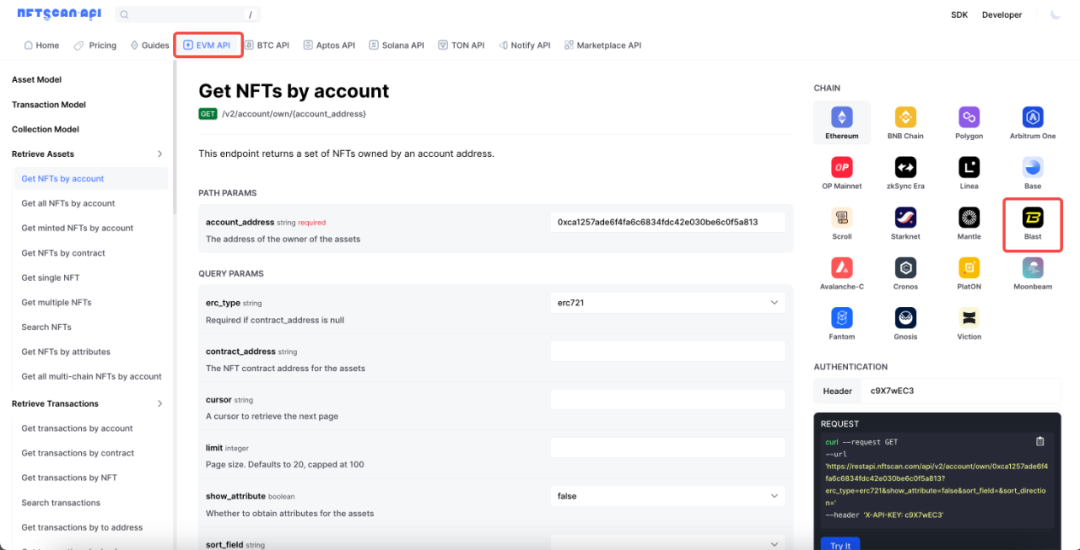

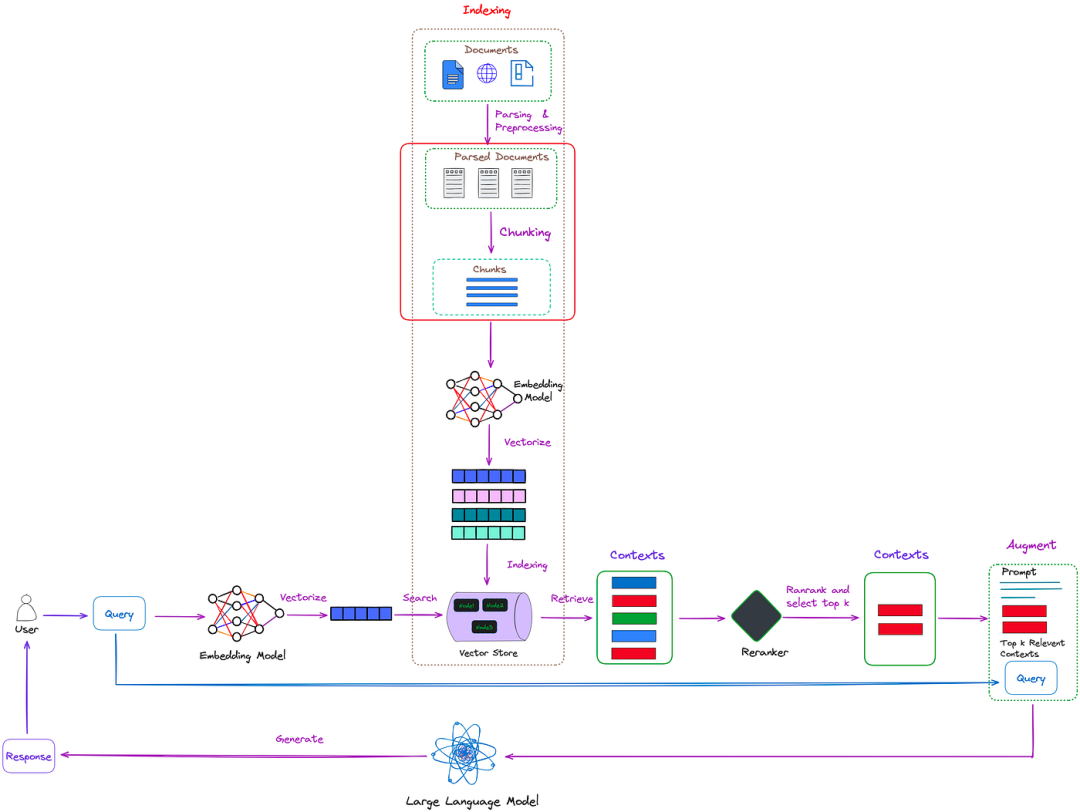

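After parsing documents as described in "LLM RAG in Practice (29) | Exploring PDF Parsing for RAG", we obtain structured or semi-structured data. The main task now is to break that data into smaller chunks so that detailed features can be extracted, and then to embed those features to represent their semantics. The position of this step in the RAG pipeline is shown in Figure 1: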

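The most common chunking approach is rule-based, using techniques such as a fixed chunk size or an overlap between adjacent chunks. For multi-level documents, we can use the RecursiveCharacterTextSplitter[1] provided by Langchain to define multi-level separators.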
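To make the rule-based idea concrete, here is a minimal sketch of fixed-size chunking with overlap. It is not Langchain's implementation; the function name `chunk_fixed` and its parameters are hypothetical and chosen only for illustration, and it counts characters rather than tokens.

```python
def chunk_fixed(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size characters, each sharing
    `overlap` characters with the previous window."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# Illustrative input only: the alphabet makes the overlap easy to see.
for chunk in chunk_fixed("abcdefghijklmnopqrstuvwxyz", chunk_size=10, overlap=3):
    print(chunk)
```

Because the boundaries depend only on character counts, a window can end in the middle of a sentence, which is exactly the weakness that semantic chunking tries to address.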

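In practice, however, because of the rigid predefined rules (chunk size or overlap size), rule-based chunking easily leads to problems such as incomplete retrieved context or overly large chunks that contain noise.

For chunking, therefore, the most elegant approach is clearly semantic chunking, which aims to make each chunk contain as much semantically independent information as possible.

This article explores semantic chunking methods and explains their principles and applications. We cover three types of methods: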

- Embedding-based

- Model-based

- LLM-based

I. Embedding-Based Methods

Both LlamaIndex and Langchain provide an embedding-based semantic chunker. The two frameworks implement essentially the same idea, so we use LlamaIndex as the example.

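Note that accessing the semantic chunker in LlamaIndex requires a recent version. The version I had installed previously, 0.9.45, did not include this algorithm, so I created a new conda environment and installed the newer version 0.10.12:

    pip install llama-index-core
    pip install llama-index-readers-file
    pip install llama-index-embeddings-openai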

It is worth mentioning that LlamaIndex 0.10.12 can be installed modularly, so only a few key components are installed here. The installed versions are as follows:

    (llamaindex_010) Florian:~ Florian$ pip list | grep llama
    llama-index-core                  0.10.12
    llama-index-embeddings-openai     0.1.6
    llama-index-readers-file          0.1.5
    llamaindex-py-client              0.1.13

The test code is as follows:

    from llama_index.core.node_parser import (
        SentenceSplitter,
        SemanticSplitterNodeParser,
    )
    from llama_index.embeddings.openai import OpenAIEmbedding
    from llama_index.core import SimpleDirectoryReader
    import os

    os.environ["OPENAI_API_KEY"] = "YOUR_OPEN_AI_KEY"

    # load documents
    dir_path = "YOUR_DIR_PATH"
    documents = SimpleDirectoryReader(dir_path).load_data()

    embed_model = OpenAIEmbedding()
    splitter = SemanticSplitterNodeParser(
        buffer_size=1, breakpoint_percentile_threshold=95, embed_model=embed_model
    )

    # split the documents into semantic chunks and print each one
    nodes = splitter.get_nodes_from_documents(documents)
    for node in nodes:
        print('-' * 100)
        print(node.get_content())

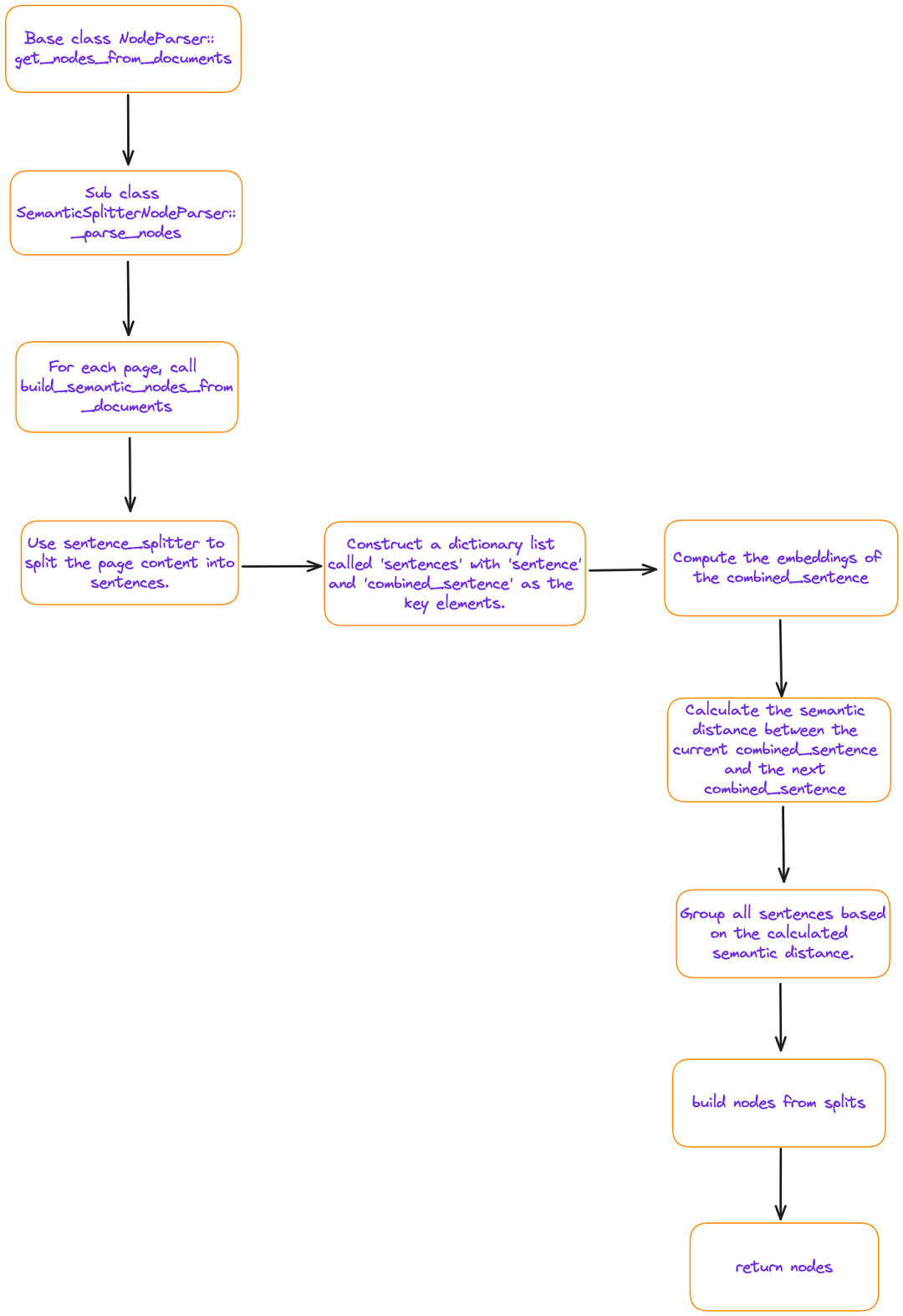

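The main flow of the splitter.get_nodes_from_documents function is shown in Figure 2:

The "sentences" mentioned in Figure 2 is a Python list in which each element is a dictionary containing four (key, value) pairs. The keys have the following meanings:

- sentence: the current sentence;

- index: the position of the current sentence;

- combined_sentence: a sliding window covering the three sentences [index - self.buffer_size, index, index + self.buffer_size] (by default, self.buffer_size = 1). It is the unit used to compute semantic relatedness between sentences. Combining the preceding and following sentences reduces noise and better captures the relationship between consecutive sentences;

- combined_sentence_embedding: the embedding of combined_sentence.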

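The analysis above makes it clear that embedding-based semantic chunking essentially computes similarity over a sliding window (combined_sentence). Adjacent sentences whose similarity meets the threshold are grouped into the same chunk.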
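The flow described above can be sketched in plain Python. This is a simplified illustration of the idea, not the LlamaIndex source: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, and `semantic_chunks`, `cosine_distance`, and `percentile` are names invented for this example. The split rule assumed here is that a gap becomes a breakpoint when the distance between neighbouring windows exceeds the chosen percentile of all distances.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine_distance(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 - dot / norm if norm else 1.0


def percentile(values: list[float], q: float) -> float:
    """Linear-interpolation percentile, q in [0, 100]."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def semantic_chunks(sentences, buffer_size=1, threshold_pct=95, embed=toy_embed):
    if len(sentences) < 2:
        return [" ".join(sentences)] if sentences else []
    # 1. Sliding window: each sentence joined with its neighbours.
    combined = [
        " ".join(sentences[max(0, i - buffer_size): i + buffer_size + 1])
        for i in range(len(sentences))
    ]
    # 2. Embed every window, then measure the distance between neighbours.
    vectors = [embed(c) for c in combined]
    distances = [cosine_distance(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    # 3. Distances above the chosen percentile become breakpoints.
    cutoff = percentile(distances, threshold_pct)
    chunks, start = [], 0
    for i, d in enumerate(distances):
        if d > cutoff:
            chunks.append(" ".join(sentences[start: i + 1]))
            start = i + 1
    chunks.append(" ".join(sentences[start:]))
    return chunks


sentences = ["cats purr", "cats sleep", "stocks fell", "stocks rose"]
print(semantic_chunks(sentences, buffer_size=0, threshold_pct=50))
```

Because the threshold is a percentile of the document's own distances, the number of breakpoints scales with document length rather than with an absolute similarity value, which helps explain why a high percentile such as 95 tends to produce coarse chunks.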

Below we use a directory containing the BERT paper[2] as the directory path. Here are some of the results:

    (llamaindex_010) Florian:~ Florian$ python /Users/Florian/Documents/june_pdf_loader/test_semantic_chunk.py
    ......
    ----------------------------------------------------------------------------------------------------
    We argue that current techniques restrict the
    power of the pre-trained representations, espe-
    cially for the fine-tuning approaches. The ma-
    jor limitation is that standard language models are
    unidirectional, and this limits the choice of archi-
    tectures that can be used during pre-training. For
    example, in OpenAI GPT, the authors use a left-to-
    right architecture, where every token can only at-
    tend to previous tokens in the self-attention layers
    of the Transformer (Vaswani et al., 2017). Such re-
    strictions are sub-optimal for sentence-level tasks,
    and could be very harmful when applying fine-
    tuning based approaches to token-level tasks such
    as question answering, where it is crucial to incor-
    porate context from both directions.
    In this paper, we improve the fine-tuning based
    approaches by proposing BERT: Bidirectional
    Encoder Representations from Transformers.
    BERT alleviates the previously mentioned unidi-
    rectionality constraint by using a “masked lan-
    guage model” (MLM) pre-training objective, in-
    spired by the Cloze task (Taylor, 1953). The
    masked language model randomly masks some of
    the tokens from the input, and the objective is to
    predict the original vocabulary id of the masked
    arXiv:1810.04805v2 [cs.CL] 24 May 2019
    ----------------------------------------------------------------------------------------------------
    word based only on its context. Unlike left-to-
    right language model pre-training, the MLM ob-
    jective enables the representation to fuse the left
    and the right context, which allows us to pre-
    train a deep bidirectional Transformer. In addi-
    tion to the masked language model, we also use
    a “next sentence prediction” task that jointly pre-
    trains text-pair representations. The contributions
    of our paper are as follows:
    • We demonstrate the importance of bidirectional
    pre-training for language representations. Un-
    like Radford et al. (2018), which uses unidirec-
    tional language models for pre-training, BERT
    uses masked language models to enable pre-
    trained deep bidirectional representations. This
    is also in contrast to Peters et al.
    ----------------------------------------------------------------------------------------------------
    ......

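Embedding-based methods: summary

- The test results show that the chunk granularity is relatively coarse.

- Figure 2 also shows that this method operates page by page and does not directly address chunks that span multiple pages.

- In general, the performance of embedding-based methods depends heavily on the embedding model. The actual effectiveness needs further evaluation.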

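II. Model-Based Methods

2.1 Naive BERT

Recall BERT's pre-training process, which includes a binary classification task, next sentence prediction (NSP), that teaches the model the relationship between two sentences. Two sentences are fed into BERT together, and the model predicts whether the second sentence follows the first.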

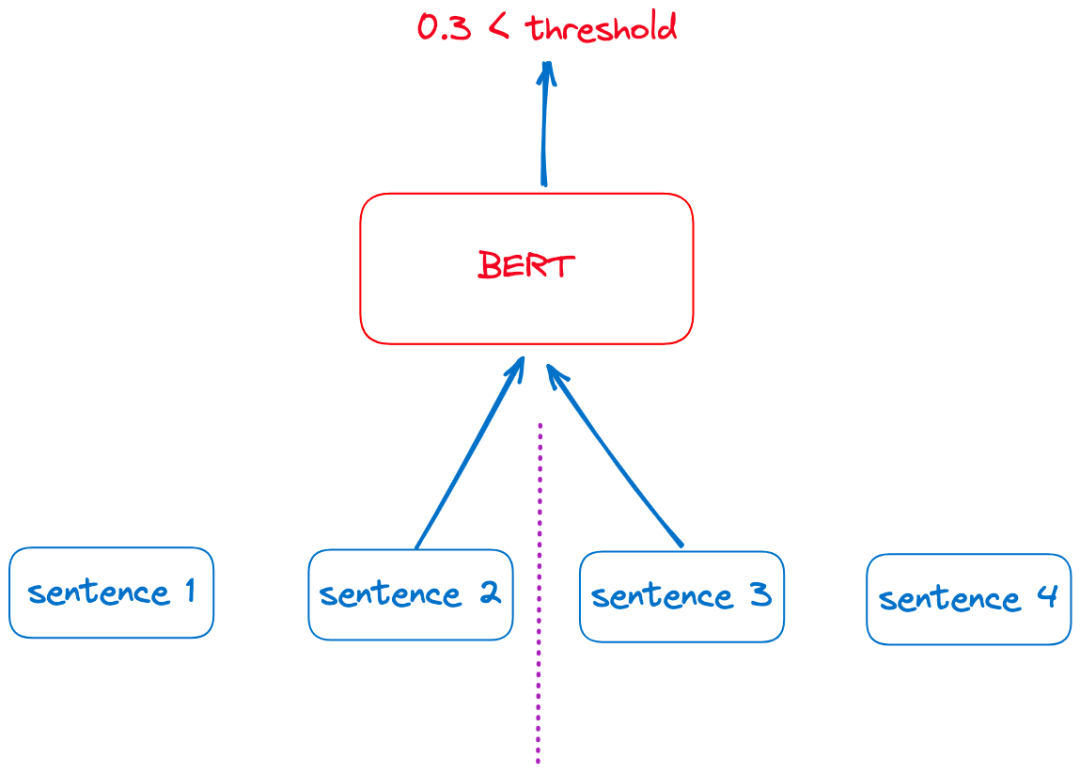

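We can apply this principle to design a simple chunking method. Split the document into sentences, then use a sliding window to feed each pair of adjacent sentences into the BERT model for NSP judgment, as shown in Figure 3:

If the predicted score falls below a preset threshold, the semantic relationship between the two sentences is weak, and that position can serve as a split point, as shown between sentence 2 and sentence 3 in Figure 3.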
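The thresholding step can be sketched as follows. The scorer is passed in as a function so that the splitting logic stays independent of any particular model; in a real setup it could wrap an NSP head such as Hugging Face's `BertForNextSentencePrediction`. The names `split_by_nsp` and `toy_nsp_score` are hypothetical, and the toy scorer below is plain word overlap used only to make the example runnable, not a BERT model.

```python
from typing import Callable, List


def split_by_nsp(
    sentences: List[str],
    nsp_score: Callable[[str, str], float],
    threshold: float = 0.5,
) -> List[List[str]]:
    """Group sentences into chunks, cutting wherever the score that
    sentence i+1 follows sentence i drops below `threshold`."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, nxt in zip(sentences, sentences[1:]):
        if nsp_score(prev, nxt) < threshold:
            chunks.append([nxt])      # weak link: start a new chunk
        else:
            chunks[-1].append(nxt)    # strong link: extend the current chunk
    return chunks


def toy_nsp_score(a: str, b: str) -> float:
    """Hypothetical stand-in scorer: Jaccard word overlap, NOT a BERT model."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


sentences = ["the cat sat", "the cat slept", "markets fell today"]
for chunk in split_by_nsp(sentences, toy_nsp_score, threshold=0.3):
    print(chunk)
```

Note that the scorer is called once per adjacent pair, so with a real BERT model the cost is one forward pass per sentence boundary.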

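The advantage of this method is that it works out of the box, with no training or fine-tuning required.

However, when deciding on a split point this method considers only the preceding and following sentences and ignores information from other segments. Its prediction efficiency is also relatively low.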

2.2 Cross Segment Attention

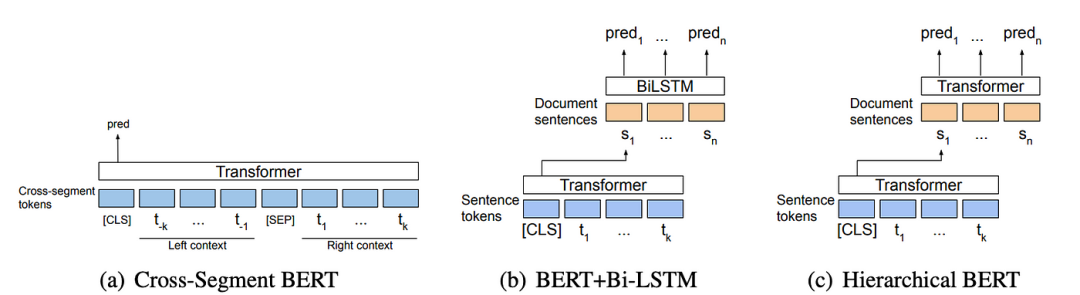

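The paper "Text Segmentation by Cross Segment Attention"[3] proposes three cross-segment attention models, as shown in Figure 4:

Figure 4(a) shows the cross-segment BERT model, which frames text segmentation as a sentence-by-sentence classification task. The context around a potential break (k tokens on each side) is fed into the model. The hidden state corresponding to [CLS] is passed to a softmax classifier, which decides whether to split at the potential break.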
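As a rough illustration of how the classifier input for each potential break might be assembled, the sketch below takes the k tokens on each side of every sentence gap. Several things here are assumptions rather than details confirmed by the paper's code: tokens are split on whitespace instead of WordPiece, the `[CLS] left [SEP] right [SEP]` layout follows the usual BERT sentence-pair convention, and the function name `cross_segment_inputs` is hypothetical.

```python
from typing import List


def cross_segment_inputs(sentences: List[str], k: int = 8) -> List[str]:
    """For every gap between two sentences, build one classifier input from
    the k tokens before the gap and the k tokens after it."""
    tokenized = [s.split() for s in sentences]  # whitespace tokens, not WordPiece
    inputs = []
    for gap in range(1, len(tokenized)):
        left = [tok for sent in tokenized[:gap] for tok in sent][-k:]
        right = [tok for sent in tokenized[gap:] for tok in sent][:k]
        inputs.append("[CLS] " + " ".join(left) + " [SEP] " + " ".join(right) + " [SEP]")
    return inputs


for text in cross_segment_inputs(["a b c", "d e", "f g h"], k=2):
    print(text)
```

Unlike the naive NSP approach, the context window here is measured in tokens and can reach across sentence boundaries on either side of the candidate break.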

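The paper also proposes two other models. Both use a BERT model to obtain a vector representation of each sentence. The vectors of several consecutive sentences are then fed into a Bi-LSTM (Figure 4(b)) or another BERT (Figure 4(c)) to predict whether each sentence is a segmentation boundary.

At the time, these three models achieved state-of-the-art results, as shown in Figure 5:

So far, however, I have found only training code for this paper[4]; no inference model appears to be available.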

2.3 SeqModel

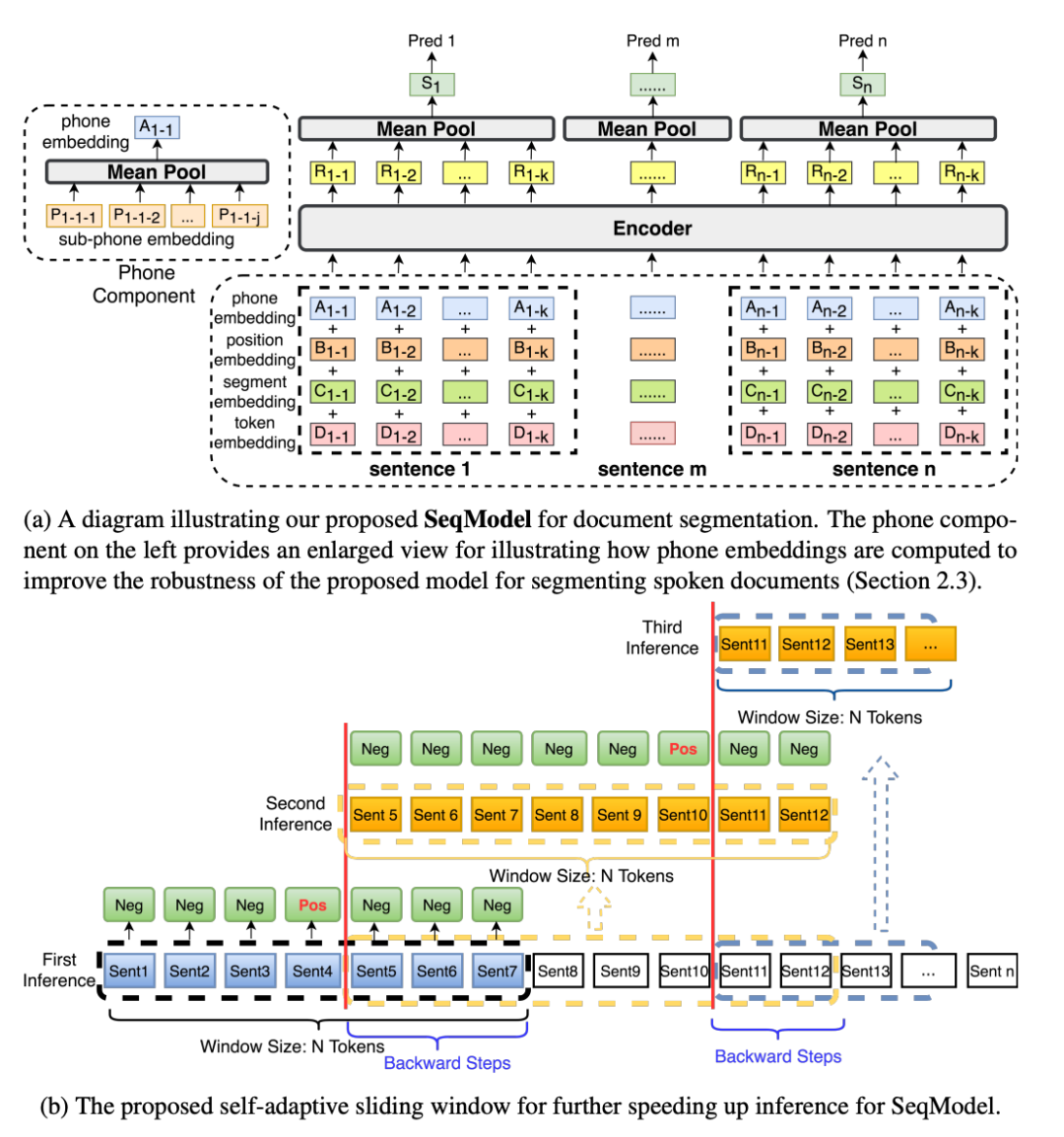

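The cross-segment models vectorize each sentence independently, without taking any broader context into account. A further enhancement, SeqModel, is proposed in the paper "Sequence Model with Self-Adaptive Sliding Window for Efficient Spoken Document Segmentation"[5].

SeqModel[6] uses BERT to encode multiple sentences simultaneously, modeling dependencies over a longer context before computing sentence vectors. It then predicts whether a segment boundary occurs after each sentence. In addition, the model uses a self-adaptive sliding window to speed up inference without sacrificing accuracy. A schematic of SeqModel is shown in Figure 6.

SeqModel can be used through the ModelScope[7] framework. The code is as follows: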

    from modelscope.outputs import OutputKeys
    from modelscope.pipelines import pipeline
    from modelscope.utils.constant import Tasks

    p = pipeline(
        task=Tasks.document_segmentation,
        model='damo/nlp_bert_document-segmentation_english-base'
    )

    print('-' * 100)

    result = p(documents='We demonstrate the importance of bidirectional pre-training for language representations. Unlike Radford et al. (2018), which uses unidirectional language models for pre-training, BERT uses masked language models to enable pretrained deep bidirectional representations. This is also in contrast to Peters et al. (2018a), which uses a shallow concatenation of independently trained left-to-right and right-to-left LMs. • We show that pre-trained representations reduce the need for many heavily-engineered taskspecific architectures. BERT is the first finetuning based representation model that achieves state-of-the-art performance on a large suite of sentence-level and token-level tasks, outperforming many task-specific architectures. Today is a good day')

    print(result[OutputKeys.TEXT])

The test data has one extra sentence appended at the end, "Today is a good day", but the result does not separate "Today is a good day" from the rest.

    (modelscope) Florian:~ Florian$ python /Users/Florian/Documents/june_pdf_loader/test_seqmodel.py
    2024-02-24 17:09:36,288 - modelscope - INFO - PyTorch version 2.2.1 Found.
    2024-02-24 17:09:36,288 - modelscope - INFO - Loading ast index from /Users/Florian/.cache/modelscope/ast_indexer
    ......
    ----------------------------------------------------------------------------------------------------
    ......
    We demonstrate the importance of bidirectional pre-training for language representations.Unlike Radford et al.(2018), which uses unidirectional language models for pre-training, BERT uses masked language models to enable pretrained deep bidirectional representations.This is also in contrast to Peters et al.(2018a), which uses a shallow concatenation of independently trained left-to-right and right-to-left LMs.• We show that pre-trained representations reduce the need for many heavily-engineered taskspecific architectures.BERT is the first finetuning based representation model that achieves state-of-the-art performance on a large suite of sentence-level and token-level tasks, outperforming many task-specific architectures.Today is a good day

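III. LLM-Based Methods

The paper "Dense X Retrieval: What Retrieval Granularity Should We Use?"[8] introduces a new retrieval unit called the proposition. A proposition is defined as an atomic expression within the text: each proposition encapsulates a distinct fact and is presented in a concise, self-contained natural-language form.

So how do we obtain these propositions? In the paper, this is done by constructing a prompt and interacting with an LLM.

Both LlamaIndex and Langchain implement the relevant algorithm; the demonstration below uses LlamaIndex.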

LlamaIndex's implementation generates propositions using the prompt provided in the paper:

    PROPOSITIONS_PROMPT = PromptTemplate(
        """Decompose the "Content" into clear and simple propositions, ensuring they are interpretable out of
    context.
    1. Split compound sentence into simple sentences. Maintain the original phrasing from the input
    whenever possible.
    2. For any named entity that is accompanied by additional descriptive information, separate this
    information into its own distinct proposition.
    3. Decontextualize the proposition by adding necessary modifier to nouns or entire sentences
    and replacing pronouns (e.g., "it", "he", "she", "they", "this", "that") with the full name of the
    entities they refer to.
    4. Present the results as a list of strings, formatted in JSON.

    Input: Title: ¯Eostre. Section: Theories and interpretations, Connection to Easter Hares. Content:
    The earliest evidence for the Easter Hare (Osterhase) was recorded in south-west Germany in
    1678 by the professor of medicine Georg Franck von Franckenau, but it remained unknown in
    other parts of Germany until the 18th century. Scholar Richard Sermon writes that "hares were
    frequently seen in gardens in spring, and thus may have served as a convenient explanation for the
    origin of the colored eggs hidden there for children. Alternatively, there is a European tradition
    that hares laid eggs, since a hare’s scratch or form and a lapwing’s nest look very similar, and
    both occur on grassland and are first seen in the spring. In the nineteenth century the influence
    of Easter cards, toys, and books was to make the Easter Hare/Rabbit popular throughout Europe.
    German immigrants then exported the custom to Britain and America where it evolved into the
    Easter Bunny."
    Output: [ "The earliest evidence for the Easter Hare was recorded in south-west Germany in
    1678 by Georg Franck von Franckenau.", "Georg Franck von Franckenau was a professor of
    medicine.", "The evidence for the Easter Hare remained unknown in other parts of Germany until
    the 18th century.", "Richard Sermon was a scholar.", "Richard Sermon writes a hypothesis about
    the possible explanation for the connection between hares and the tradition during Easter", "Hares
    were frequently seen in gardens in spring.", "Hares may have served as a convenient explanation
    for the origin of the colored eggs hidden in gardens for children.", "There is a European tradition
    that hares laid eggs.", "A hare’s scratch or form and a lapwing’s nest look very similar.", "Both
    hares and lapwing’s nests occur on grassland and are first seen in the spring.", "In the nineteenth
    century the influence of Easter cards, toys, and books was to make the Easter Hare/Rabbit popular
    throughout Europe.", "German immigrants exported the custom of the Easter Hare/Rabbit to
    Britain and America.", "The custom of the Easter Hare/Rabbit evolved into the Easter Bunny in
    Britain and America." ]

    Input: {node_text}
    Output:"""
    )

In the embedding-based section above, we installed the key components of LlamaIndex 0.10.12. To use DenseXRetrievalPack, we also need to run pip install llama-index-llms-openai. After installation, the LlamaIndex-related components are as follows:

    (llamaindex_010) Florian:~ Florian$ pip list | grep llama
    llama-index-core                  0.10.12
    llama-index-embeddings-openai     0.1.6
    llama-index-llms-openai           0.1.6
    llama-index-readers-file          0.1.5
    llamaindex-py-client              0.1.13

The test code is as follows:

    from llama_index.core.readers import SimpleDirectoryReader
    from llama_index.core.llama_pack import download_llama_pack
    import os

    os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_KEY"

    # Download and install dependencies
    DenseXRetrievalPack = download_llama_pack(
        "DenseXRetrievalPack", "./dense_pack"
    )

    # If you have already downloaded DenseXRetrievalPack, you can import it directly.
    # from llama_index.packs.dense_x_retrieval import DenseXRetrievalPack

    # Load documents
    dir_path = "YOUR_DIR_PATH"
    documents = SimpleDirectoryReader(dir_path).load_data()

    # Use LLM to extract propositions from every document/node
    dense_pack = DenseXRetrievalPack(documents)

    response = dense_pack.run("YOUR_QUERY")

The test code above shows basic usage of the DenseXRetrievalPack class. Next, let's analyze its source code:

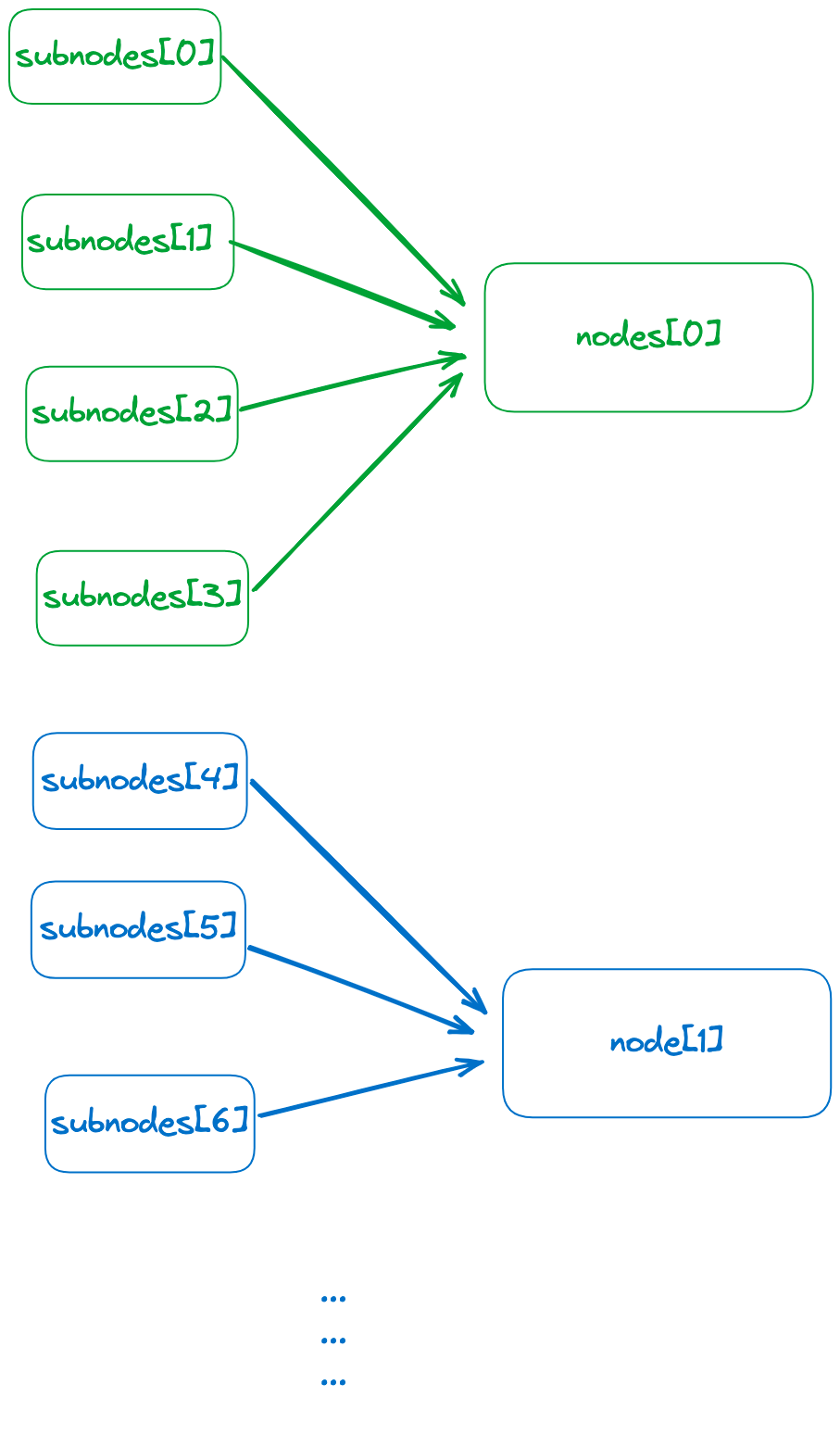

    class DenseXRetrievalPack(BaseLlamaPack):
        def __init__(
            self,
            documents: List[Document],
            proposition_llm: Optional[LLM] = None,
            query_llm: Optional[LLM] = None,
            embed_model: Optional[BaseEmbedding] = None,
            text_splitter: TextSplitter = SentenceSplitter(),
            similarity_top_k: int = 4,
        ) -> None:
            """Init params."""
            self._proposition_llm = proposition_llm or OpenAI(
                model="gpt-3.5-turbo",
                temperature=0.1,
                max_tokens=750,
            )

            embed_model = embed_model or OpenAIEmbedding(embed_batch_size=128)

            nodes = text_splitter.get_nodes_from_documents(documents)
            sub_nodes = self._gen_propositions(nodes)

            all_nodes = nodes + sub_nodes
            all_nodes_dict = {n.node_id: n for n in all_nodes}

            service_context = ServiceContext.from_defaults(
                llm=query_llm or OpenAI(),
                embed_model=embed_model,
                num_output=self._proposition_llm.metadata.num_output,
            )

            self.vector_index = VectorStoreIndex(
                all_nodes, service_context=service_context, show_progress=True
            )

            self.retriever = RecursiveRetriever(
                "vector",
                retriever_dict={
                    "vector": self.vector_index.as_retriever(
                        similarity_top_k=similarity_top_k
                    )
                },
                node_dict=all_nodes_dict,
            )

            self.query_engine = RetrieverQueryEngine.from_args(
                self.retriever, service_context=service_context
            )

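As the code shows, text_splitter first divides the documents into the original nodes, and self._gen_propositions is then called to obtain the corresponding sub_nodes. Next, a VectorStoreIndex is built from nodes + sub_nodes, and this index is queried through a RecursiveRetriever. The recursive retriever can match on the small chunks while passing the associated larger chunks to the generation stage.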
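The small-to-big idea can be illustrated without any framework. The sketch below is not how RecursiveRetriever is implemented: the `Proposition` class and `retrieve_small_to_big` function are hypothetical names, and word overlap stands in for vector similarity. It only shows the principle that matching happens on the small propositions while the parent chunks are what get returned.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Proposition:
    text: str
    parent_id: str  # id of the original chunk this proposition came from


def retrieve_small_to_big(
    query: str,
    propositions: List[Proposition],
    parents: Dict[str, str],
    top_k: int = 2,
) -> List[str]:
    """Rank the small propositions, then return their deduplicated parents."""
    query_words = set(query.lower().split())

    def overlap(p: Proposition) -> int:
        # Toy relevance score: number of shared words (stand-in for similarity).
        return len(query_words & set(p.text.lower().split()))

    ranked = sorted(propositions, key=overlap, reverse=True)[:top_k]
    seen, result = set(), []
    for p in ranked:
        if p.parent_id not in seen:
            seen.add(p.parent_id)
            result.append(parents[p.parent_id])
    return result


parents = {"n1": "Full text of the chunk about BERT.", "n2": "Full text of the chunk about GPT."}
props = [
    Proposition("BERT uses masked language models", "n1"),
    Proposition("BERT is bidirectional", "n1"),
    Proposition("GPT is left-to-right", "n2"),
]
print(retrieve_small_to_big("what does BERT use", props, parents))
```

Several propositions often point to the same parent, so deduplicating by parent id keeps the context passed to generation from repeating the same chunk.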

The test document is still the BERT paper. Debugging shows that sub_nodes[].text is not the original text; it has been rewritten:

    > /Users/Florian/anaconda3/envs/llamaindex_010/lib/python3.11/site-packages/llama_index/packs/dense_x_retrieval/base.py(91)__init__()
         90
    ---> 91         all_nodes = nodes + sub_nodes
         92         all_nodes_dict = {n.node_id: n for n in all_nodes}

    ipdb> sub_nodes[20]
    IndexNode(id_='ecf310c7-76c8-487a-99f3-f78b273e00d9', embedding=None, metadata={}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text='Our paper demonstrates the importance of bidirectional pre-training for language representations.', start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n', index_id='8deca706-fe97-412c-a13f-950a19a594d1', obj=None)
    ipdb> sub_nodes[21]
    IndexNode(id_='4911332e-8e30-47d8-a5bc-ed7cbaa8e042', embedding=None, metadata={}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text='Radford et al. (2018) uses unidirectional language models for pre-training.', start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n', index_id='8deca706-fe97-412c-a13f-950a19a594d1', obj=None)
    ipdb> sub_nodes[22]
    IndexNode(id_='83aa82f8-384a-4b06-92c8-d6277c4162bf', embedding=None, metadata={}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text='BERT uses masked language models to enable pre-trained deep bidirectional representations.', start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n', index_id='8deca706-fe97-412c-a13f-950a19a594d1', obj=None)
    ipdb> sub_nodes[23]
    IndexNode(id_='2ac635c2-ccb0-4e62-88c7-bcbaef3ef38a', embedding=None, metadata={}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text='Peters et al. (2018a) uses a shallow concatenation of independently trained left-to-right and right-to-left LMs.', start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n', index_id='8deca706-fe97-412c-a13f-950a19a594d1', obj=None)
    ipdb> sub_nodes[24]
    IndexNode(id_='e37b17cf-30dd-4114-a3c5-9921b8cf0a77', embedding=None, metadata={}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text='Pre-trained representations reduce the need for many heavily-engineered task-specific architectures.', start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n', index_id='8deca706-fe97-412c-a13f-950a19a594d1', obj=None)

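The relationship between sub_nodes and nodes is shown in Figure 7: it is a small-to-big index structure.

This small-to-big index structure is built by self._gen_propositions. The code is as follows: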

    async def _aget_proposition(self, node: TextNode) -> List[TextNode]:
        """Get proposition."""
        inital_output = await self._proposition_llm.apredict(
            PROPOSITIONS_PROMPT, node_text=node.text
        )
        outputs = inital_output.split("\n")

        all_propositions = []

        for output in outputs:
            if not output.strip():
                continue
            if not output.strip().endswith("]"):
                if not output.strip().endswith('"') and not output.strip().endswith(","):
                    output = output + '"'
                output = output + " ]"
            if not output.strip().startswith("["):
                if not output.strip().startswith('"'):
                    output = '"' + output
                output = "[ " + output

            try:
                propositions = json.loads(output)
            except Exception:
                # fallback to yaml
                try:
                    propositions = yaml.safe_load(output)
                except Exception:
                    # fallback to next output
                    continue

            if not isinstance(propositions, list):
                continue

            all_propositions.extend(propositions)

        assert isinstance(all_propositions, list)
        nodes = [TextNode(text=prop) for prop in all_propositions if prop]

        return [IndexNode.from_text_node(n, node.node_id) for n in nodes]

    def _gen_propositions(self, nodes: List[TextNode]) -> List[TextNode]:
        """Get propositions."""
        sub_nodes = asyncio.run(
            run_jobs(
                [self._aget_proposition(node) for node in nodes],
                show_progress=True,
                workers=8,
            )
        )

        # Flatten list
        return [node for sub_node in sub_nodes for node in sub_node]

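For each original node, self._aget_proposition is called asynchronously to obtain the LLM's response, inital_output, via PROPOSITIONS_PROMPT. Propositions are then parsed from inital_output and turned into TextNode objects. Finally, these TextNode objects are linked to the original node, i.e. [IndexNode.from_text_node(n, node.node_id) for n in nodes].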
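The parsing step is the least obvious part, because the LLM's response may be cut off by the token limit or spread over several lines. The following standalone sketch mirrors the repair logic in simplified form so it can be tried without an LLM. It is an approximation, not the pack's code: the function name `parse_propositions` is hypothetical, each line is stripped before patching, and the YAML fallback is omitted.

```python
import json
from typing import List


def parse_propositions(raw: str) -> List[str]:
    """Parse LLM output line by line, patching lines that are not valid JSON lists."""
    propositions: List[str] = []
    for line in raw.split("\n"):
        line = line.strip()
        if not line:
            continue
        if not line.endswith("]"):
            if not line.endswith('"') and not line.endswith(","):
                line += '"'          # close a string that was cut off
            line += " ]"             # close the list
        if not line.startswith("["):
            if not line.startswith('"'):
                line = '"' + line    # open a string that lost its quote
            line = "[ " + line       # open the list
        try:
            parsed = json.loads(line)
        except json.JSONDecodeError:
            continue                 # give up on this line, try the next one
        if isinstance(parsed, list):
            propositions.extend(p for p in parsed if p)
    return propositions


print(parse_propositions('[ "BERT is bidirectional.", "GPT is left-to-right." ]'))
print(parse_propositions('[ "BERT is bidirectional.", "GPT is left-to-ri'))
```

In this simplified version, a line that ends with a trailing comma still fails JSON parsing and is dropped; the original code would hand such a line to its YAML fallback instead.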

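It is worth mentioning that the original paper uses LLM-generated propositions as training data to further fine-tune a text generation model. That text generation model[9] is now publicly accessible, and interested readers can try it out.

LLM-based methods: summary

In general, this chunking method, which uses an LLM to construct propositions, achieves finer-grained chunks. Together with the original nodes it forms a small-to-big index structure, offering a new idea for semantic chunking. However, the method depends on an LLM, which is relatively expensive.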

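IV. Conclusion

This article explored the principles and implementations of three types of semantic chunking methods and offered some commentary on each.

In general, semantic chunking is a more elegant approach and a key to optimizing RAG.

References: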

[1] https://github.com/langchain-ai/langchain/blob/v0.1.9/libs/langchain/langchain/text_splitter.py#L851C1-L851C6

[2] https://arxiv.org/pdf/1810.04805.pdf

[3] https://arxiv.org/abs/2004.14535

[4] https://github.com/aakash222/text-segmentation-NLP/

[5] https://arxiv.org/pdf/2107.09278.pdf

[6] https://github.com/alibaba-damo-academy/SpokenNLP

[7] https://github.com/modelscope/modelscope/

[8] https://arxiv.org/pdf/2312.06648.pdf

[9] https://github.com/chentong0/factoid-wiki
