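There is plenty of superficial ONNX Runtime material online, but no blog that covers ONNX Runtime systematically, so this series translates the official documentation and adds further explanation. Official ONNX Runtime documentation: https://onnxruntime.ai/docs/

If you are not yet familiar with the ONNX format, see my other series, which translates and explains the ONNX 1.16 documentation:

ONNX 1.16 study notes series: https://blog.csdn.net/qq_33345365/category_12581965.html

If you find this useful, please like, bookmark and follow. The series is currently published only on CSDN, is split into several chapters, and is still being updated.

Started: 2024/3/5; last edited: 2024/3/5

All material is based on the latest official documentation at the time of writing.

All posts in this series are collected at the following link: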

https://blog.csdn.net/qq_33345365/category_12589378.html

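Introduction

Reference: https://onnxruntime.ai/docs/get-started/with-python.html

Part 1 of this tutorial introduces the basic concepts of ONNX Runtime (ORT).

Part 2 (this post) is a quick-start guide: installing the packages, serializing models to ONNX, and running inference with ORT.

Contents:

- Install ONNX Runtime
- Install ONNX for model export
- Quickstart examples for PyTorch, TensorFlow, and SciKit Learn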

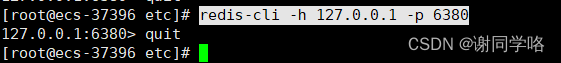

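Install ONNX Runtime

There are two Python packages for ONNX Runtime, and only one of them should be installed in any given environment at a time. The GPU package covers most of the CPU functionality.

```shell
pip install onnxruntime-gpu
```

Use the CPU package if you are running on Arm CPUs and/or macOS.

```shell
pip install onnxruntime
```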

Install ONNX for model export

```shell
## ONNX is built into PyTorch
pip install torch
## TensorFlow
pip install tf2onnx
## sklearn
pip install skl2onnx
```

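Quickstart examples for PyTorch, TensorFlow, and SciKit Learn

1. PyTorch CV

This section shows how to export a PyTorch CV (computer vision) model to ONNX format and then run inference with ORT.

The model code comes from the PyTorch Fundamentals learning path on Microsoft Learn; if you run into version problems, refer to that learning path for the current code.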

```python
import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision.transforms import ToTensor

# Download FashionMNIST into ./data
training_data = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor()
)

test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor()
)

train_dataloader = DataLoader(training_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28 * 28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
            nn.ReLU()
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits


model = NeuralNetwork()

learning_rate = 1e-3
batch_size = 64
epochs = 10

# Initialize the loss function
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)


def train_loop(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)
    for batch, (X, y) in enumerate(dataloader):
        # Compute prediction and loss
        pred = model(X)
        loss = loss_fn(pred, y)

        # Backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if batch % 100 == 0:
            loss, current = loss.item(), batch * len(X)
            print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")


def test_loop(dataloader, model, loss_fn):
    size = len(dataloader.dataset)
    num_batches = len(dataloader)
    test_loss, correct = 0, 0

    with torch.no_grad():
        for X, y in dataloader:
            pred = model(X)
            test_loss += loss_fn(pred, y).item()
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()

    # loss_fn returns a per-batch mean, so average it over batches; accuracy is per sample
    test_loss /= num_batches
    correct /= size
    print(f"Test Error: \n Accuracy: {(100 * correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")


for t in range(epochs):
    print(f"Epoch {t + 1}\n-------------------------------")
    train_loop(train_dataloader, model, loss_fn, optimizer)
    test_loop(test_dataloader, model, loss_fn)
print("Done!")

torch.save(model.state_dict(), "data/model.pth")
print("Saved PyTorch Model State to data/model.pth")
```

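The model has now been saved to data/model.pth. Next we:

- create a new Python file, define the same model class, and load the weights from data/model.pth;
- convert the model to ONNX format with `torch.onnx.export`;
- create an inference session with `onnxruntime.InferenceSession` and run it.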

```python
import torch
import onnxruntime
from torch import nn
import torch.onnx
# import onnx
from torchvision import datasets
from torchvision.transforms import ToTensor


class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28 * 28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
            nn.ReLU()
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits


model = NeuralNetwork()
# Load the weights written by the training script
model.load_state_dict(torch.load('data/model.pth'))
model.eval()

# Dummy input with the shape of one FashionMNIST sample: (1, 28, 28)
input_image = torch.zeros((1, 28, 28))
onnx_model = 'data/model.onnx'
torch.onnx.export(model, input_image, onnx_model)

test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor()
)

classes = [
    "T-shirt/top",
    "Trouser",
    "Pullover",
    "Dress",
    "Coat",
    "Sandal",
    "Shirt",
    "Sneaker",
    "Bag",
    "Ankle boot",
]

x, y = test_data[0][0], test_data[0][1]

# Optional: validate the exported file (requires `pip install onnx`)
# onnx_proto = onnx.load("data/model.onnx")
# onnx.checker.check_model(onnx_proto)

# Set providers explicitly; GPU builds of ORT require it
session = onnxruntime.InferenceSession(onnx_model, providers=["CPUExecutionProvider"])
input_name = session.get_inputs()[0].name
output_name = session.get_outputs()[0].name

result = session.run([output_name], {input_name: x.numpy()})
predicted, actual = classes[result[0][0].argmax(0)], classes[y]
print(f'Predicted: "{predicted}", Actual: "{actual}"')
```
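`result[0][0].argmax(0)` works because `session.run` returns a list with one NumPy array per requested output, here of shape `(1, 10)`: the first `[0]` selects the first output and the second `[0]` the first sample. A small NumPy sketch of the same post-processing, adding a softmax to turn the scores into a confidence value; `decode` is a hypothetical helper and `fake_result` is made-up data standing in for a real `session.run` result:

```python
import numpy as np

classes = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
           "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]


def softmax(logits: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def decode(result):
    """result: list returned by session.run([output_name], ...), i.e. [array of shape (1, 10)]."""
    logits = result[0][0]          # first requested output, first (only) sample -> shape (10,)
    probs = softmax(logits)
    idx = int(probs.argmax())      # same index as argmax on the raw scores
    return classes[idx], float(probs[idx])


# Stand-in for a real session.run() result (made-up scores, illustration only)
fake_result = [np.array([[0, 0, 0, 0, 0, 0, 0, 0, 0, 5]], dtype=np.float32)]
label, confidence = decode(fake_result)
print(label, round(confidence, 3))
```

Softmax does not change which class wins, so the predicted label matches the original code; it only adds a normalized score that is easier to threshold.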

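2. PyTorch NLP

In this example we export a PyTorch NLP model to ONNX format and then run inference with ORT. The code that builds and trains the AG News model comes from this PyTorch tutorial and can be used as-is:

Version requirements: PyTorch 2.2.1, torchtext 0.17

Model code: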

```python
import torch
from torchtext.datasets import AG_NEWS

train_iter = iter(AG_NEWS(split="train"))

from torchtext.data.utils import get_tokenizer
from torchtext.vocab import build_vocab_from_iterator

tokenizer = get_tokenizer("basic_english")
train_iter = AG_NEWS(split="train")


def yield_tokens(data_iter):
    for _, text in data_iter:
        yield tokenizer(text)


vocab = build_vocab_from_iterator(yield_tokens(train_iter), specials=["<unk>"])
vocab.set_default_index(vocab["<unk>"])

text_pipeline = lambda x: vocab(tokenizer(x))
label_pipeline = lambda x: int(x) - 1

from torch.utils.data import DataLoader

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def collate_batch(batch):
    label_list, text_list, offsets = [], [], [0]
    for _label, _text in batch:
        label_list.append(label_pipeline(_label))
        processed_text = torch.tensor(text_pipeline(_text), dtype=torch.int64)
        text_list.append(processed_text)
        offsets.append(processed_text.size(0))
    label_list = torch.tensor(label_list, dtype=torch.int64)
    offsets = torch.tensor(offsets[:-1]).cumsum(dim=0)
    text_list = torch.cat(text_list)
    return label_list.to(device), text_list.to(device), offsets.to(device)


train_iter = AG_NEWS(split="train")
dataloader = DataLoader(
    train_iter, batch_size=8, shuffle=False, collate_fn=collate_batch
)

from torch import nn


class TextClassificationModel(nn.Module):
    def __init__(self, vocab_size, embed_dim, num_class):
        super(TextClassificationModel, self).__init__()
        self.embedding = nn.EmbeddingBag(vocab_size, embed_dim, sparse=False)
        self.fc = nn.Linear(embed_dim, num_class)
        self.init_weights()

    def init_weights(self):
        initrange = 0.5
        self.embedding.weight.data.uniform_(-initrange, initrange)
        self.fc.weight.data.uniform_(-initrange, initrange)
        self.fc.bias.data.zero_()

    def forward(self, text, offsets):
        embedded = self.embedding(text, offsets)
        return self.fc(embedded)


train_iter = AG_NEWS(split="train")
num_class = len(set([label for (label, text) in train_iter]))
vocab_size = len(vocab)
emsize = 64
model = TextClassificationModel(vocab_size, emsize, num_class).to(device)

import time


def train(dataloader):
    model.train()
    total_acc, total_count = 0, 0
    log_interval = 500
    start_time = time.time()

    for idx, (label, text, offsets) in enumerate(dataloader):
        optimizer.zero_grad()
        predicted_label = model(text, offsets)
        loss = criterion(predicted_label, label)
        loss.backward()
        torch.nn.utils.clip_grad_norm_(model.parameters(), 0.1)
        optimizer.step()
        total_acc += (predicted_label.argmax(1) == label).sum().item()
        total_count += label.size(0)
        if idx % log_interval == 0 and idx > 0:
            elapsed = time.time() - start_time
            print(
                "| epoch {:3d} | {:5d}/{:5d} batches "
                "| accuracy {:8.3f}".format(
                    epoch, idx, len(dataloader), total_acc / total_count
                )
            )
            total_acc, total_count = 0, 0
            start_time = time.time()


def evaluate(dataloader):
    model.eval()
    total_acc, total_count = 0, 0

    with torch.no_grad():
        for idx, (label, text, offsets) in enumerate(dataloader):
            predicted_label = model(text, offsets)
            loss = criterion(predicted_label, label)
            total_acc += (predicted_label.argmax(1) == label).sum().item()
            total_count += label.size(0)
    return total_acc / total_count


from torch.utils.data.dataset import random_split
from torchtext.data.functional import to_map_style_dataset

# Hyperparameters
EPOCHS = 10  # epoch
LR = 5  # learning rate
BATCH_SIZE = 64  # batch size for training

criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=LR)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, 1.0, gamma=0.1)
total_accu = None
train_iter, test_iter = AG_NEWS()
train_dataset = to_map_style_dataset(train_iter)
test_dataset = to_map_style_dataset(test_iter)
num_train = int(len(train_dataset) * 0.95)
split_train_, split_valid_ = random_split(
    train_dataset, [num_train, len(train_dataset) - num_train]
)

train_dataloader = DataLoader(
    split_train_, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch
)
valid_dataloader = DataLoader(
    split_valid_, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch
)
test_dataloader = DataLoader(
    test_dataset, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch
)

for epoch in range(1, EPOCHS + 1):
    epoch_start_time = time.time()
    train(train_dataloader)
    accu_val = evaluate(valid_dataloader)
    if total_accu is not None and total_accu > accu_val:
        scheduler.step()
    else:
        total_accu = accu_val
    print("-" * 59)
    print(
        "| end of epoch {:3d} | time: {:5.2f}s | "
        "valid accuracy {:8.3f} ".format(
            epoch, time.time() - epoch_start_time, accu_val
        )
    )
    print("-" * 59)

print("Checking the results of test dataset.")
accu_test = evaluate(test_dataloader)
print("test accuracy {:8.3f}".format(accu_test))

ag_news_label = {1: "World", 2: "Sports", 3: "Business", 4: "Sci/Tec"}


def predict(text, text_pipeline):
    with torch.no_grad():
        text = torch.tensor(text_pipeline(text))
        output = model(text, torch.tensor([0]))
        return output.argmax(1).item() + 1


ex_text_str = "MEMPHIS, Tenn. – Four days ago, Jon Rahm was \
enduring the season’s worst weather conditions on Sunday at The \
Open on his way to a closing 75 at Royal Portrush, which \
considering the wind and the rain was a respectable showing. \
Thursday’s first round at the WGC-FedEx St. Jude Invitational \
was another story. With temperatures in the mid-80s and hardly any \
wind, the Spaniard was 13 strokes better in a flawless round. \
Thanks to his best putting performance on the PGA Tour, Rahm \
finished with an 8-under 62 for a three-stroke lead, which \
was even more impressive considering he’d never played the \
front nine at TPC Southwind."

model = model.to("cpu")

print("This is a %s news" % ag_news_label[predict(ex_text_str, text_pipeline)])
```

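At the end of the code above, you can append the following code to export the model and run it with ORT:

```python
import numpy as np
import onnxruntime as ort

text = "Text from the news article"
text = torch.tensor(text_pipeline(text))
offsets = torch.tensor([0])  # a single text, so it starts at position 0

model.eval()  # export in inference mode (the model is already on the CPU)

# Export the model
torch.onnx.export(model,                       # the model defined above
                  (text, offsets),             # model inputs (a tuple for multiple inputs)
                  "data/ag_news_model.onnx",   # where to save the model
                  export_params=True,          # store the trained weights inside the model file
                  opset_version=10,            # ONNX opset version to export to
                  do_constant_folding=True,    # apply constant folding as an optimization
                  input_names=['input', 'offsets'],  # names of the model inputs
                  output_names=['output'],     # names of the model outputs
                  dynamic_axes={'input': {0: 'batch_size'},    # variable-length axes (axis 0 of 'input' is the token count)
                                'output': {0: 'batch_size'}})

ort_sess = ort.InferenceSession('data/ag_news_model.onnx', providers=['CPUExecutionProvider'])
outputs = ort_sess.run(None, {'input': text.numpy(),
                              'offsets': offsets.numpy()})

# Print the result (AG News labels are 1-based, hence the + 1)
result = outputs[0].argmax(axis=1) + 1
print("This is a %s news" % ag_news_label[int(result[0])])
```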
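The exported model needs two inputs because `nn.EmbeddingBag` (default `mode="mean"`) takes the token ids of all texts concatenated into one 1-D tensor, plus `offsets` marking where each text starts. With a single text the offsets are simply `[0]`, which is what the ORT call above feeds in. A NumPy sketch of both halves, assuming mean mode, no `padding_idx` and no per-sample weights; `make_offsets` mirrors what `collate_batch` does, and both helper names are hypothetical:

```python
import numpy as np


def make_offsets(token_lists):
    """Mirror of collate_batch: concatenate token ids and record where each text starts."""
    lengths = [len(t) for t in token_lists]
    text = np.concatenate([np.asarray(t, dtype=np.int64) for t in token_lists])
    # Start of text i = sum of the lengths of texts 0..i-1
    offsets = np.cumsum([0] + lengths[:-1]).astype(np.int64)
    return text, offsets


def embedding_bag_mean(weight, text, offsets):
    """Mean-mode EmbeddingBag: average the embeddings in each [offsets[i], offsets[i+1]) slice."""
    ends = list(offsets[1:]) + [len(text)]
    return np.stack([weight[text[s:e]].mean(axis=0) for s, e in zip(offsets, ends)])


# Toy vocabulary of 5 tokens with 2-dim embeddings (made-up values)
weight = np.arange(10, dtype=np.float32).reshape(5, 2)
text, offsets = make_offsets([[1, 2, 3], [4]])
print(text, offsets)  # [1 2 3 4] [0 3]
print(embedding_bag_mean(weight, text, offsets))
```

Each row of the result is one text's averaged embedding, which `self.fc` then maps to the four AG News classes.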

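3. TensorFlow CV

In this example we export a TensorFlow CV model to ONNX format and then run inference with ORT. The model comes from this GitHub Notebook for Keras resnet50.

Get the test image ade20k.jpg, either by saving it directly or with the following command:

```shell
wget -q https://raw.githubusercontent.com/onnx/tensorflow-onnx/main/tests/ade20k.jpg
```

Install the Python packages:

```shell
pip install tensorflow tf2onnx onnxruntime
```

Using the pretrained ResNet50 that ships with Keras, the code to convert it and run it with ORT is: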

```python
import os

import numpy as np
import tensorflow as tf
from tensorflow.keras.applications.resnet50 import ResNet50
from tensorflow.keras.preprocessing import image
from tensorflow.keras.applications.resnet50 import preprocess_input, decode_predictions
import tf2onnx
import onnxruntime as rt

# Load and preprocess the test image: a 1x224x224x3 (NHWC) float32 batch
img_path = 'ade20k.jpg'
img = image.load_img(img_path, target_size=(224, 224))
x = image.img_to_array(img)
x = np.expand_dims(x, axis=0)
x = preprocess_input(x)

# Pretrained Keras ResNet50 as the reference
model = ResNet50(weights='imagenet')
print(model.name)
preds = model.predict(x)
print('Keras Predicted:', decode_predictions(preds, top=3)[0])

# Optional copy in TensorFlow's own format
# (on TF >= 2.16 / Keras 3, model.save() requires a .keras or .h5 extension)
os.makedirs("data", exist_ok=True)
model.save(os.path.join("data/", model.name))
print("saved")

# Convert to ONNX with a dynamic batch dimension
spec = (tf.TensorSpec((None, 224, 224, 3), tf.float32, name="input"),)
output_path = "data/resnet_ooo.onnx"
model_proto, _ = tf2onnx.convert.from_keras(model, input_signature=spec, opset=13, output_path=output_path)
output_names = [n.name for n in model_proto.graph.output]

# Run the converted model with ORT
providers = ['CPUExecutionProvider']
m = rt.InferenceSession(output_path, providers=providers)
onnx_pred = m.run(output_names, {"input": x})
print('ONNX Predicted:', decode_predictions(onnx_pred[0], top=3)[0])

# make sure ONNX and keras have the same results
np.testing.assert_allclose(preds, onnx_pred[0], rtol=1e-5)
```

Output:

```text
resnet50
1/1 [==============================] - 1s 1s/step
Keras Predicted: [('n04285008', 'sports_car', 0.34477925), ('n02974003', 'car_wheel', 0.2876423), ('n03100240', 'convertible', 0.10070901)]
WARNING:tensorflow:Compiled the loaded model, but the compiled metrics have yet to be built. `model.compile_metrics` will be empty until you train or evaluate the model.
saved
2024-03-05 15:39:04.665299: I tensorflow/core/grappler/devices.cc:75] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0 (Note: TensorFlow was not compiled with CUDA or ROCm support)
2024-03-05 15:39:04.665528: I tensorflow/core/grappler/clusters/single_machine.cc:357] Starting new session
2024-03-05 15:39:06.550557: I tensorflow/core/grappler/devices.cc:75] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0 (Note: TensorFlow was not compiled with CUDA or ROCm support)
2024-03-05 15:39:06.550828: I tensorflow/core/grappler/clusters/single_machine.cc:357] Starting new session
enter this
ONNX Predicted: [('n04285008', 'sports_car', 0.34477764), ('n02974003', 'car_wheel', 0.2876437), ('n03100240', 'convertible', 0.100708835)]
```
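`preprocess_input` is the only TensorFlow call still needed at inference time. To serve the ONNX model with ORT alone, it can be reproduced in NumPy. This is a sketch assuming Keras' ResNet50 "caffe" preprocessing (RGB to BGR, subtract the per-channel ImageNet means, no scaling) and an image already resized to 224x224 by some other library; `preprocess_caffe` is a hypothetical name, and it is worth comparing its output with `preprocess_input` on your own TensorFlow version before relying on it:

```python
import numpy as np

# ImageNet channel means used by Keras' "caffe" preprocessing, in BGR order
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def preprocess_caffe(rgb_image: np.ndarray) -> np.ndarray:
    """rgb_image: HxWx3 array of 0..255 RGB values (already resized to 224x224).
    Returns a 1xHxWx3 float32 batch in BGR order with the means subtracted (NHWC)."""
    x = rgb_image.astype(np.float32)[..., ::-1]  # RGB -> BGR
    x = x - IMAGENET_MEAN_BGR                     # zero-center each channel, no scaling
    return np.expand_dims(x, axis=0)              # add the batch dimension


# Toy 224x224 image filled with a single RGB colour (made-up input)
img = np.zeros((224, 224, 3), dtype=np.uint8)
img[...] = (10, 20, 30)
batch = preprocess_caffe(img)
print(batch.shape, batch.dtype, batch[0, 0, 0])
```

The resulting `batch` has the `(None, 224, 224, 3)` layout declared in `spec` above, so it could be fed to the ORT session as `{"input": batch}`.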

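4. SciKit Learn CV

In this example we export a SciKit Learn model to ONNX format and then run inference with ORT, using the well-known iris dataset. This time the code is self-contained rather than taken from an external tutorial (finally, no more tutorials to follow…).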

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression

iris = load_iris()
X, y = iris.data, iris.target
X_train, X_test, y_train, y_test = train_test_split(X, y)

clr = LogisticRegression()
clr.fit(X_train, y_train)
print(clr)
```

Output:

```text
LogisticRegression()
```

Convert or export the model to ONNX format:

```python
from skl2onnx import convert_sklearn
from skl2onnx.common.data_types import FloatTensorType

# One float input with a dynamic batch dimension (None) and 4 features
initial_type = [('float_input', FloatTensorType([None, 4]))]
onx = convert_sklearn(clr, initial_types=initial_type)
with open("logreg_iris.onnx", "wb") as f:
    f.write(onx.SerializeToString())
```

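Load and run the model with ONNX Runtime. We use ONNX Runtime to compute the predictions of this machine learning model:

```python
import numpy
import onnxruntime as rt

sess = rt.InferenceSession("logreg_iris.onnx", providers=["CPUExecutionProvider"])
input_name = sess.get_inputs()[0].name
# The model expects float32 input, matching FloatTensorType above
pred_onx = sess.run(None, {input_name: X_test.astype(numpy.float32)})[0]
print(pred_onx)
```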

Output:

```text
[0 1 0 0 1 2 2 0 0 2 1 0 2 2 1 1 2 2 2 0 2 2 1 2 1 1 1 0 2 1 1 1 1 0 1 0 0
 1]
```

You can modify the code to get a specific output by putting its name in the output list:

```python
import numpy
import onnxruntime as rt

sess = rt.InferenceSession("logreg_iris.onnx", providers=["CPUExecutionProvider"])
input_name = sess.get_inputs()[0].name
label_name = sess.get_outputs()[0].name
pred_onx = sess.run(
    [label_name], {input_name: X_test.astype(numpy.float32)})[0]
print(pred_onx)
```

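Output (it differs from the previous output because `train_test_split` shuffles the data randomly on each run):

```text
[2 2 1 2 1 2 0 2 0 1 1 0 1 2 2 2 1 0 1 1 2 2 1 0 0 2 2 0 2 0 2 0 0 2 1 1 1
 1]
```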
