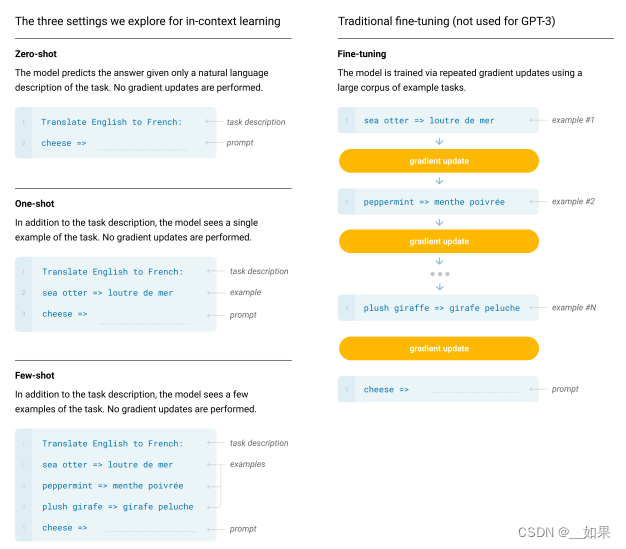

不像GPT2一样追求zero-shot,而换成了few-shot

Abstract

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples. By contrast, humans can generally perform a new language task from only a few examples or from simple instructions – something which current NLP systems still largely struggle to do. Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art finetuning approaches. Specifically, we train GPT-3, an autoregressive language model with 175 billion parameters, 10x more than any previous non-sparse language model, and test its performance in the few-shot setting. For all tasks, GPT-3 is applied without any gradient updates or fine-tuning, with tasks and few-shot demonstrations specified purely via text interaction with the model. GPT-3 achieves strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, as well as several tasks that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, or performing 3-digit arithmetic. At the same time, we also identify some datasets where GPT-3’s few-shot learning still struggles, as well as some datasets where GPT-3 faces methodological issues related to training on large web corpora. Finally, we find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans. We discuss broader societal impacts of this finding and of GPT-3 in general.

翻译:

最近的工作表明,通过对大量文本语料库进行预训练,然后在特定任务上进行微调,可以在许多NLP任务和基准测试上实现重大增益。尽管在架构上通常是任务不可知的,但这种方法仍然需要成千上万个示例的任务特定微调数据集。相比之下,人类通常可以仅从几个示例或简单指令就完成新的语言任务 - 这是目前的NLP系统仍然在很大程度上难以做到的。在这里,我们展示了扩大语言模型可以大大提高任务不可知的few-shot性能,有时甚至可以达到与以前的最新微调方法相媲美的竞争力。具体来说,我们训练了GPT-3,一个有1750亿参数的自回归语言模型,比任何以前的非稀疏语言模型多10倍,并测试其在少样本设置中的性能。对于所有任务,GPT-3都是没有任何梯度更新或微调的应用,任务和少样本演示完全是通过与模型的文本交互来指定的。GPT-3在许多NLP数据集上取得了强大的性能,包括翻译、问答和完形填空任务,以及在需要即时推理或领域适应的任务上,例如拼词、在句子中使用新词或进行3位数的算术。同时,我们还确定了一些数据集,在这些数据集上GPT-3的少样本学习仍然存在困难,以及一些由于在大规模网络语料库上训练而面临的方法论问题。最后,我们发现GPT-3可以生成新闻文章的样本,这些样本使人类评估者难以将其与人类撰写的文章区分开来。我们讨论了这一发现和GPT-3一般的更广泛的社会影响。

总结:

更大更强;虽然是few-shot,但是不在子任务的样本上做微调,而是用prompt做few-shot

Introduction

Recent years have featured a trend towards pre-trained language representations in NLP systems, applied in increasingly flexible and task-agnostic ways for downstream transfer. First, single-layer representations were learned using word vectors [MCCD13, PSM14] and fed to task-specific architectures, then RNNs with multiple layers of representations and contextual state were used to form stronger representations [DL15, MBXS17, PNZtY18] (though still applied to task-specific architectures), and more recently pre-trained recurrent or transformer language models [VSP+17] have been directly fine-tuned, entirely removing the need for task-specific architectures [RNSS18, DCLT18, HR18].

翻译:

近年来,NLP系统中的趋势是使用预训练的语言表示,并以越来越灵活和与任务无关的方式应用于下游任务。首先,使用词向量[MCCD13, PSM14]学习单层表示,并输入到特定任务的架构中,然后使用具有多层表示和上下文状态的RNN来形成更强的表示[DL15, MBXS17, PNZtY18](尽管仍然应用于特定任务的架构),最近,直接对预训练的循环或转换器语言模型[VSP+17]进行微调,完全消除了对特定任务架构的需求[RNSS18, DCLT18, HR18]。

总结:

大家都喜欢用预训练模型微调

This last paradigm has led to substantial progress on many challenging NLP tasks such as reading comprehension, question answering, textual entailment, and many others, and has continued to advance based on new architectures and algorithms [RSR+19, LOG+19, YDY+19, LCG+19]. However, a major limitation to this approach is that while the architecture is task-agnostic, there is still a need for task-specific datasets and task-specific fine-tuning: to achieve strong performance on a desired task typically requires fine-tuning on a dataset of thousands to hundreds of thousands of examples specific to that task. Removing this limitation would be desirable, for several reasons.

First, from a practical perspective, the need for a large dataset of labeled examples for every new task limits the applicability of language models. There exists a very wide range of possible useful language tasks, encompassing anything from correcting grammar, to generating examples of an abstract concept, to critiquing a short story. For many of these tasks it is difficult to collect a large supervised training dataset, especially when the process must be repeated for every new task.

Second, the potential to exploit spurious correlations in training data fundamentally grows with the expressiveness of the model and the narrowness of the training distribution. This can create problems for the pre-training plus fine-tuning paradigm, where models are designed to be large to absorb information during pre-training, but are then fine-tuned on very narrow task distributions. For instance [HLW+20] observe that larger models do not necessarily generalize better out-of-distribution. There is evidence that suggests that the generalization achieved under this paradigm can be poor because the model is overly specific to the training distribution and does not generalize well outside it [YdC+19, MPL19]. Thus, the performance of fine-tuned models on specific benchmarks, even when it is nominally at human-level, may exaggerate actual performance on the underlying task [GSL+18, NK19].

Third, humans do not require large supervised datasets to learn most language tasks – a brief directive in natural language (e.g. “please tell me if this sentence describes something happy or something sad”) or at most a tiny number of demonstrations (e.g. “here are two examples of people acting brave; please give a third example of bravery”) is often sufficient to enable a human to perform a new task to at least a reasonable degree of competence. Aside from pointing to a conceptual limitation in our current NLP techniques, this adaptability has practical advantages – it allows humans to seamlessly mix together or switch between many tasks and skills, for example performing addition during a lengthy dialogue. To be broadly useful, we would someday like our NLP systems to have this same fluidity and generality.

翻译:

这种最新范式在许多具有挑战性的NLP任务上取得了实质性进展,如阅读理解、问答、文本蕴含以及许多其他任务,并基于新的架构和算法继续推进[RSR+19, LOG+19, YDY+19, LCG+19]。然而,这种方法的一个主要局限性在于,尽管架构是任务无关的,但仍然需要特定于任务的数据集和任务特定的微调:要在期望的任务上实现强大的性能,通常需要在该任务的具体数据集上进行微调,这些数据集包含数千到数十万个示例。消除这一局限性是可取的,原因有几个。

首先,从实际的角度来看,对于每个新任务都需要大量标记示例数据集的需求限制了语言模型的应用范围。存在非常广泛的可能有用的语言任务,包括从纠正语法到生成一个抽象概念的示例,再到评论短篇小说等任何事物。对于许多这样的任务,收集大型监督训练数据集是很困难的,特别是当这个过程必须针对每个新任务重复进行时。

其次,利用训练数据中的虚假相关性的潜在可能性随着模型的表达能力和训练分布的狭窄程度而根本增长。这对于预训练加微调范式可能造成问题,在这种范式中,模型被设计得很大,以便在预训练期间吸收信息,但随后在非常狭窄的任务分布上进行微调。例如,[HLW+20]观察到,更大的模型并不一定能更好地在分布外泛化。有证据表明,在这种范式下实现的泛化可能很差,因为模型过于特定于训练分布,并且不能很好地在分布之外泛化[YdC+19, MPL19]。因此,即使在名义上达到人类水平的特定基准测试上,微调后的模型的性能也可能夸大在实际任务上的实际性能[GSL+18, NK19]。

第三,人类在学习大多数语言任务时并不需要大量的监督数据集——通常,一句自然语言的简短指示(例如“请告诉我这个句子是描述快乐的事情还是悲伤的事情”)或者最多极少数量的演示(例如“这里有两个勇敢行为的例子;请给出第三个勇敢的例子”)就足以使人类至少能够以合理的程度执行新任务。除了指出我们当前NLP技术的一个概念性限制之外,这种适应性还具有实际优势——它允许人类无缝地混合或切换许多任务和技能,例如在冗长的对话中执行加法。为了具有广泛的实用性,我们希望有朝一日我们的NLP系统能够具备同样的流动性和通用性。

总结:

问题一:子任务太多,一个个找对应训练数据集不现实

问题二:微调任务中的数据可能在训练时就看过一些了

问题三:与人相比泛化能力不够

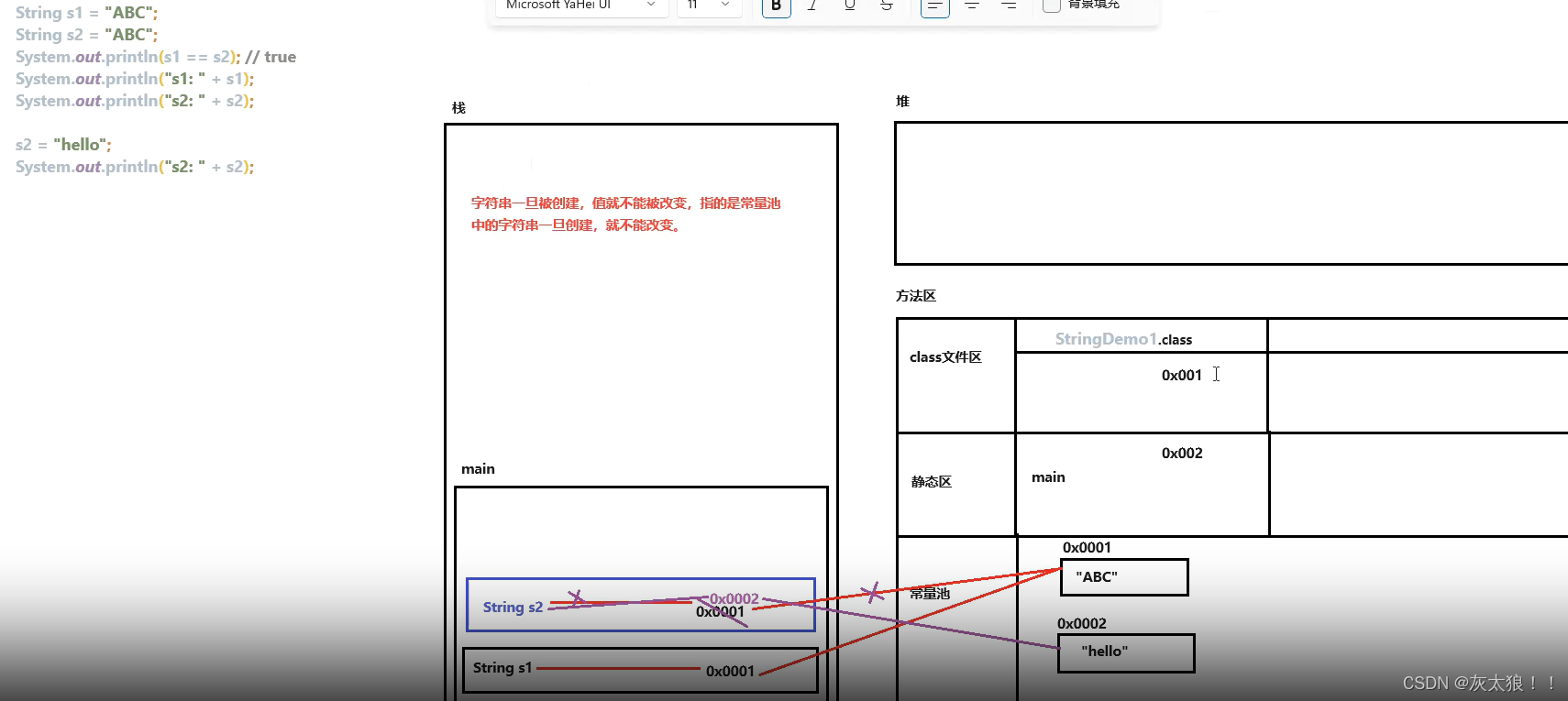

One potential route towards addressing these issues is meta-learning1 – which in the context of language models means the model develops a broad set of skills and pattern recognition abilities at training time, and then uses those abilities at inference time to rapidly adapt to or recognize the desired task (illustrated in Figure 1.1). Recent work [RWC+19] attempts to do this via what we call “in-context learning”, using the text input of a pretrained language model as a form of task specification: the model is conditioned on a natural language instruction and/or a few demonstrations of the task and is then expected to complete further instances of the task simply by predicting what comes next.

翻译:

解决这些问题的一条潜在途径是元学习(meta-learning)——在语言模型的背景下,这意味着模型在训练时发展出一套广泛的技能和模式识别能力,然后在推理时使用这些能力快速适应或识别所需的任务(如图1.1所示)。最近的工作[RWC+19]试图通过我们所说的“上下文学习”(in-context learning)来实现这一点,使用预训练语言模型的文本输入作为一种任务规范:模型被自然语言指令和/或任务的几个示例条件化,然后预期模型仅通过预测接下来会发生什么来完成任务的更多实例。

总结:

meta-learning:训练一个又大又强的模型

in-context learning:不利用子任务的少样本更新权重

每个sequence对应不同任务的数据,模型在大量不同任务的数据上训练,多多少少有在做一个元学习的过程,而且每个sequence是上下文的学习

While it has shown some initial promise, this approach still achieves results far inferior to fine-tuning – for example [RWC+19] achieves only 4% on Natural Questions, and even its 55 F1 CoQa result is now more than 35 points behind the state of the art. Meta-learning clearly requires substantial improvement in order to be viable as a practical method of solving language tasks.

翻译:

尽管这种方法已经显示出一些初步的潜力,但它的结果仍然远远不如微调——例如,[RWC+19]在Natural Questions上的准确率仅为4%,即使是它的55 F1 CoQa结果,现在也落后于最新技术35分以上。元学习显然需要实质性的改进,才能成为一种实用的解决语言任务的方法。

Another recent trend in language modeling may offer a way forward. In recent years the capacity of transformer language models has increased substantially, from 100 million parameters [RNSS18], to 300 million parameters [DCLT18], to 1.5 billion parameters [RWC+19], to 8 billion parameters [SPP+19], 11 billion parameters [RSR+19], and finally 17 billion parameters [Tur20]. Each increase has brought improvements in text synthesis and/or downstream NLP tasks, and there is evidence suggesting that log loss, which correlates well with many downstream tasks, follows a smooth trend of improvement with scale [KMH+20]. Since in-context learning involves absorbing many skills and tasks within the parameters of the model, it is plausible that in-context learning abilities might show similarly strong gains with scale.

翻译:

语言建模领域的另一个近期趋势可能提供了一种前进的方式。近年来,transformer语言模型的能力已经有了实质性的提升,从10亿参数[RNSS18],到30亿参数[DCLT18],再到150亿参数[RWC+19],再到800亿参数[SPP+19],1100亿参数[RSR+19],最后到1700亿参数[Tur20]。每次能力的提升都带来了文本合成和/或下游NLP任务的改进,并且有证据表明,与许多下游任务相关联的对数损失随着规模的增长呈现出平滑的改进趋势[KMH+20]。由于上下文学习涉及到在模型的参数内吸收许多技能和任务,因此可以推测上下文学习能力可能会随着规模的扩大而显示出类似的强劲增长。

总结:

模型越搞越大

In this paper, we test this hypothesis by training a 175 billion parameter autoregressive language model, which we call GPT-3, and measuring its in-context learning abilities. Specifically, we evaluate GPT-3 on over two dozen NLP datasets, as well as several novel tasks designed to test rapid adaptation to tasks unlikely to be directly contained in the training set. For each task, we evaluate GPT-3 under 3 conditions: (a) “few-shot learning”, or in-context learning where we allow as many demonstrations as will fit into the model’s context window (typically 10 to 100), (b) “one-shot learning”, where we allow only one demonstration, and (c) “zero-shot” learning, where no demonstrations are allowed and only an instruction in natural language is given to the model. GPT-3 could also in principle be evaluated in the traditional fine-tuning setting, but we leave this to future work.

翻译:

在本文中,我们通过训练一个具有1750亿参数的自回归语言模型——我们称之为GPT-3,并测量其上下文学习能力来测试这一假设。具体来说,我们在超过二十个NLP数据集上评估GPT-3,以及几个设计用于测试快速适应训练集中不可能直接包含的任务的新任务。对于每个任务,我们在以下三种条件下评估GPT-3:(a)“少样本学习”,或者在我们允许尽可能多的演示适应模型上下文窗口的上下文学习(通常为10到100个),(b)“单样本学习”,我们只允许一个演示,以及(c)“零样本”学习,不允许任何演示,只给模型一个自然语言的指令。从理论上讲,GPT-3也可以在传统的微调设置下进行评估,但我们将其留作未来工作。

总结:

三种评估:few-shot、one-shot、zero-shot

虚线是所有子任务,实线是子任务效果的平均值

At the same time, we also find some tasks on which few-shot performance struggles, even at the scale of GPT-3. This includes natural language inference tasks like the ANLI dataset, and some reading comprehension datasets like RACE or QuAC. By presenting a broad characterization of GPT-3’s strengths and weaknesses, including these limitations, we hope to stimulate study of few-shot learning in language models and draw attention to where progress is most needed.

We also undertake a systematic study of “data contamination” – a growing problem when training high capacity models on datasets such as Common Crawl, which can potentially include content from test datasets simply because such content often exists on the web. In this paper we develop systematic tools to measure data contamination and quantify its distorting effects. Although we find that data contamination has a minimal effect on GPT-3’s performance on most datasets, we do identify a few datasets where it could be inflating results, and we either do not report results on these datasets or we note them with an asterisk, depending on the severity.

翻译:

同时,我们还发现即使在GPT-3的规模下,few-shot性能在一些任务上也存在困难。这包括像ANLI数据集这样的自然语言推理任务,以及像RACE或QuAC这样的某些阅读理解数据集。通过展示GPT-3的优点和缺点的广泛特征,包括这些局限性,我们希望激发对语言模型中少样本学习的研究,并引起人们对最需要进步的地方的关注。

我们还进行了一项关于“数据污染”的系统性研究——这是一个在诸如Common Crawl这样的数据集上训练高容量模型时日益严重的问题,因为这些数据集可能包含来自测试数据集的内容,仅仅因为这些内容通常存在于网络上。在本文中,我们开发了系统性的工具来测量数据污染并量化其扭曲效应。尽管我们发现数据污染对GPT-3在大多数数据集上的性能影响最小,但我们确实识别了几个可能因数据污染而夸大结果的数据集,我们或者不报告这些数据集的结果,或者根据严重程度在结果上标注星号。

Approach

zero-shot:提供一个prompt

one-shot:提供一个prompt后插一个样本进来当作示例,希望通过注意力机制捕获有用信息帮助预测

few-shot:提供一个prompt后插一些样本进来当作示例

Model and Architectures

We use the same model and architecture as GPT-2 [RWC+19], including the modified initialization, pre-normalization, and reversible tokenization described therein, with the exception that we use alternating dense and locally banded sparse attention patterns in the layers of the transformer, similar to the Sparse Transformer [CGRS19]. To study the dependence of ML performance on model size, we train 8 different sizes of model, ranging over three orders of magnitude from 125 million parameters to 175 billion parameters, with the last being the model we call GPT-3. Previous work [KMH+20] suggests that with enough training data, scaling of validation loss should be approximately a smooth power law as a function of size; training models of many different sizes allows us to test this hypothesis both for validation loss and for downstream language tasks.

Table 2.1 shows the sizes and architectures of our 8 models. Here nparams is the total number of trainable parameters, nlayers is the total number of layers, dmodel is the number of units in each bottleneck layer (we always have the feedforward layer four times the size of the bottleneck layer, dff = 4 ∗ dmodel), and dhead is the dimension of each attention head. All models use a context window of nctx = 2048 tokens. We partition the model across GPUs along both the depth and width dimension in order to minimize data-transfer between nodes. The precise architectural parameters for each model are chosen based on computational efficiency and load-balancing in the layout of models across GPU’s. Previous work [KMH+20] suggests that validation loss is not strongly sensitive to these parameters within a reasonably broad range.

翻译:

我们使用了与GPT-2 [RWC+19]相同的模型和架构,包括其中描述的修改后的初始化、预规范化和可逆的标记化,唯一的例外是我们在transformer的层中使用交替的密集和局部带状稀疏注意力模式,类似于Sparse Transformer [CGRS19]。为了研究机器学习性能与模型大小之间的依赖关系,我们训练了8种不同大小的模型,参数范围从1.25亿到1750亿,最后一个是我们称之为GPT-3的模型。之前的工作[KMH+20]表明,只要有足够的训练数据,验证损失的规模大致上应该是一个关于大小的平滑的幂律函数;训练多种不同大小的模型允许我们同时测试这个假设对于验证损失和下游语言任务是否成立。

表2.1展示了我们8个模型的大小和架构。在这里,nparams是可训练参数的总数,nlayers是层的总数,dmodel是每个瓶颈层的单位数(我们的前馈层始终是瓶颈层大小的四倍,dff = 4 * dmodel),dhead是每个注意力头的维度。所有模型都使用nctx = 2048个令牌的上下文窗口。我们沿着模型的深度和宽度维度在GPU之间进行划分,以最小化节点之间的数据传输。每个模型的确切架构参数是基于计算效率和模型在GPU上的布局进行负载平衡而选择的。之前的工作[KMH+20]表明,验证损失在这些参数的合理范围内对这些参数不太敏感。

总结:

GPT2与GPT3:把Sparse Transformer拿过来了

GPT2与GPT:模型初始值改变、把Normalization放到前面、可以反转的词元

gpt3的模型是扁扁的, 因为计算量与宽度成平方关系

大模型的batch要大一些,方便分布式计算;小模型的batch要小一些,因为小的batch_size意味着batch中包含的噪声偏多

按道理来说,batch_size和lr应该是呈线性关系,但是在这里确实相反的,作者给出的解释:

As found in [KMH+20, MKAT18], larger models can typically use a larger batch size, but require a smaller learning rate. We measure the gradient noise scale during training and use it to guide our choice of batch size [MKAT18]. Table 2.1 shows the parameter settings we used. To train the larger models without running out of memory, we use a mixture of model parallelism within each matrix multiply and model parallelism across the layers of the network. All models were trained on V100 GPU’s on part of a high-bandwidth cluster provided by Microsoft. Details of the training process and hyperparameter settings are described in Appendix B.

翻译:

正如在[KMH+20, MKAT18]中所发现的,较大的模型通常可以使用较大的批量大小,但需要较小的学习率。我们在训练过程中测量梯度噪声尺度,并使用它来指导我们的批量大小选择[MKAT18]。表2.1展示了我们使用的参数设置。为了在不过度消耗内存的情况下训练较大的模型,我们在每个矩阵乘法内部以及网络的层之间使用了模型并行性。所有模型都是在微软提供的一部分高带宽集群上的V100 GPU上训练的。训练过程和超参数设置的细节在附录B中描述。

Training Dataset

Datasets for language models have rapidly expanded, culminating in the Common Crawl dataset2 [RSR+19] constituting nearly a trillion words. This size of dataset is sufficient to train our largest models without ever updating on the same sequence twice. However, we have found that unfiltered or lightly filtered versions of Common Crawl tend to have lower quality than more curated datasets. Therefore, we took 3 steps to improve the average quality of our datasets: (1) we downloaded and filtered a version of CommonCrawl based on similarity to a range of high-quality reference corpora, (2) we performed fuzzy deduplication at the document level, within and across datasets, to prevent redundancy and preserve the integrity of our held-out validation set as an accurate measure of overfitting, and (3) we also added known high-quality reference corpora to the training mix to augment CommonCrawl and increase its diversity.

翻译:

语言模型的数据库迅速扩展,最终形成了包含近一万亿个单词的Common Crawl数据库2 [RSR+19]。这个数据库的大小足以训练我们最大的模型,而无需重复更新相同的序列。然而,我们发现未经过滤或轻度过滤的Common Crawl版本的质量往往低于经过更多策划的数据库。因此,我们采取了三个步骤来提高我们数据库的平均质量:(1) 我们下载并过滤了一个与一系列高质量参考语料库相似的Common Crawl版本,(2) 我们在文档级别上进行了模糊去重,包括数据库内部和跨数据库,以防止重复并保持我们预留的验证集的完整性,作为准确衡量过拟合的指标,(3) 我们还向训练混合中添加了已知的高质量参考语料库,以增强Common Crawl并增加其多样性。

总结:

GPT2的时候没用Common Crawl就是因为这个数据集有点脏,但是为了练个更大的GPT3不得不拿来用

把Common Crawl的数据集下下来,把它的样本全当作负例,把之前GPT2的网页扒下来的数据集作为正例,做个逻辑回归二分类,如果Common Crawl中有被判为正例的样本,则拿出来用

用LSH算法(局部敏感哈希),如果文章相似度很高就去掉

加了一些已知的高质量语料库:

Evaluation

For few-shot learning, we evaluate each example in the evaluation set by randomly drawing K examples from that task’s training set as conditioning, delimited by 1 or 2 newlines depending on the task. For LAMBADA and Storycloze there is no supervised training set available so we draw conditioning examples from the development set and evaluate on the test set. For Winograd (the original, not SuperGLUE version) there is only one dataset, so we draw conditioning examples directly from it.

K can be any value from 0 to the maximum amount allowed by the model’s context window, which is nctx = 2048 for all models and typically fits 10 to 100 examples. Larger values of K are usually but not always better, so when a separate development and test set are available, we experiment with a few values of K on the development set and then run the best value on the test set. For some tasks (see Appendix G) we also use a natural language prompt in addition to (or for K = 0, instead of) demonstrations.

翻译:

对于小样本学习,我们通过从该任务的训练集中随机抽取K个例子作为条件,用1个或2个新行来分隔,来评估评估集中的每个例子。对于LAMBADA和Storycloze,没有可用的监督训练集,所以我们从开发集中抽取条件例子,并在测试集上进行评估。对于Winograd(原始版本,非SuperGLUE版本),只有一个数据集,所以我们直接从中抽取条件例子。

K的值可以从0到模型上下文窗口允许的最大值,对于所有模型来说,这个值是nctx = 2048,通常可以适应10到100个例子。较大的K值通常(但不总是)更好,所以当有单独的开发集和测试集可用时,我们在开发集上尝试几个K值,然后在测试集上运行最佳值。对于一些任务(见附录G),我们还在演示之外(或对于K = 0,代替演示)使用自然语言提示。

On tasks that involve choosing one correct completion from several options (multiple choice), we provide K examples of context plus correct completion, followed by one example of context only, and compare the LM likelihood of each completion. For most tasks we compare the per-token likelihood (to normalize for length), however on a small number of datasets (ARC, OpenBookQA, and RACE) we gain additional benefit as measured on the development set by normalizing by the unconditional probability of each completion, by computing P (completionjcontext) P (completionjanswer context) , where answer context is the string "Answer: " or "A: " and is used to prompt that the completion should be an answer but is otherwise generic.

On tasks that involve binary classification, we give the options more semantically meaningful names (e.g. “True” or “False” rather than 0 or 1) and then treat the task like multiple choice; we also sometimes frame the task similar to what is done by [RSR+19] (see Appendix G) for details.

On tasks with free-form completion, we use beam search with the same parameters as [RSR+19]: a beam width of 4 and a length penalty of α = 0:6. We score the model using F1 similarity score, BLEU, or exact match, depending on what is standard for the dataset at hand.

Final results are reported on the test set when publicly available, for each model size and learning setting (zero-, one-, and few-shot). When the test set is private, our model is often too large to fit on the test server, so we report results on the development set. We do submit to the test server on a small number of datasets (SuperGLUE, TriviaQA, PiQa) where we were able to make submission work, and we submit only the 200B few-shot results, and report development set results for everything else.

翻译:

对于涉及从几个选项中选择一个正确完成的任务(多项选择),我们提供K个上下文加正确完成的例子,然后提供一个只有上下文的例子,并比较每个完成的LM可能性。对于大多数任务,我们比较每令牌的可能性(以长度为标准进行归一化),然而,在少数数据集(ARC、OpenBookQA和RACE)上,我们在开发集上通过计算P(completion|context) / P(completion|answer context)来获得额外的收益,其中answer context是字符串"Answer: "或"A: ",用于提示完成应该是答案,但在其他方面是通用的。

对于涉及二元分类的任务,我们给选项赋予更有语义意义的名称(例如,使用“True”或“False”而不是0或1),然后像处理多项选择任务一样处理这个任务;我们有时也会像[RSR+19]所做的那样构建任务(详见附录G)。

对于自由形式的完成任务,我们使用与[RSR+19]相同的参数进行束搜索:束宽为4,长度惩罚α=0.6。我们根据手头数据集的常规做法,使用F1相似度评分、BLEU或精确匹配来评分模型。

最终结果在测试集上报告,当测试集公开可用时,针对每个模型大小和学习设置(零样本、单样本和小样本)。当测试集是私有的时候,我们的模型通常太大而无法适应测试服务器,所以我们报告开发集的结果。我们在少数数据集(SuperGLUE、TriviaQA、PiQa)上提交到测试服务器,因为我们能够使提交工作,我们只提交200B小样本的结果,并报告其他所有内容的开发集结果。

Results

随着计算量指数级增加,损失线性下降

Limitations

First, despite the strong quantitative and qualitative improvements of GPT-3, particularly compared to its direct predecessor GPT-2, it still has notable weaknesses in text synthesis and several NLP tasks. On text synthesis, although the overall quality is high, GPT-3 samples still sometimes repeat themselves semantically at the document level, start to lose coherence over sufficiently long passages, contradict themselves, and occasionally contain non-sequitur sentences or paragraphs. We will release a collection of 500 uncurated unconditional samples to help provide a better sense of GPT-3’s limitations and strengths at text synthesis. Within the domain of discrete language tasks, we have noticed informally that GPT-3 seems to have special difficulty with “common sense physics”, despite doing well on some datasets (such as PIQA [BZB+19]) that test this domain. Specifically GPT-3 has difficulty with questions of the type “If I put cheese into the fridge, will it melt?”. Quantitatively, GPT-3’s in-context learning performance has some notable gaps on our suite of benchmarks, as described in Section 3, and in particular it does little better than chance when evaluated one-shot or even few-shot on some “comparison” tasks, such as determining if two words are used the same way in a sentence, or if one sentence implies another (WIC and ANLI respectively), as well as on a subset of reading comprehension tasks. This is especially striking given GPT-3’s strong few-shot performance on many other tasks.

翻译:

首先,尽管GPT-3在定量上和定性上相比其直接前身GPT-2有了显著的提高,但它仍在文本合成和一些NLP任务中存在明显的弱点。在文本合成方面,尽管整体质量很高,但GPT-3的样本在文档级别上有时仍然在语义上重复,在足够长的段落中开始失去连贯性,相互矛盾,并且偶尔包含不合逻辑的句子或段落。我们将发布500个未策划的无条件样本集合,以帮助更好地了解GPT-3在文本合成方面的局限性和优势。在离散语言任务领域,我们非正式地注意到,GPT-3似乎在“常识物理”方面有特殊的困难,尽管在一些数据集(如PIQA [BZB+19])上表现良好,这些数据集测试了这个领域。具体来说,GPT-3在类型为“如果我把奶酪放进冰箱,它会融化吗?”的问题上存在困难。在定量方面,GPT-3的上下文学习性能在我们的基准测试套件中有一些显著的差距,如第3节所述,特别是在一些“比较”任务上,例如确定两个词在句子中是否以相同的方式使用,或者一个句子是否隐含另一个句子(分别指WIC和ANLI),以及在阅读理解任务的一个子集上,其表现甚至不如随机猜测。这尤其引人注目,因为GPT-3在许多其他任务上的少样本学习表现非常强。

总结:

文本生成较弱,生成文章过长会重复内容

GPT-3 has several structural and algorithmic limitations, which could account for some of the issues above. We focused on exploring in-context learning behavior in autoregressive language models because it is straightforward to both sample and compute likelihoods with this model class. As a result our experiments do not include any bidirectional architectures or other training objectives such as denoising. This is a noticeable difference from much of the recent literature, which has documented improved fine-tuning performance when using these approaches over standard language models [RSR+19]. Thus our design decision comes at the cost of potentially worse performance on tasks which empirically benefit from bidirectionality. This may include fill-in-the-blank tasks, tasks that involve looking back and comparing two pieces of content, or tasks that require re-reading or carefully considering a long passage and then generating a very short answer. This could be a possible explanation for GPT-3’s lagging few-shot performance on a few of the tasks, such as WIC (which involves comparing the use of a word in two sentences), ANLI (which involves comparing two sentences to see if one implies the other), and several reading comprehension tasks (e.g. QuAC and RACE). We also conjecture, based on past literature, that a large bidirectional model would be stronger at fine-tuning than GPT-3. Making a bidirectional model at the scale of GPT-3, and/or trying to make bidirectional models work with few- or zero-shot learning, is a promising direction for future research, and could help achieve the “best of both worlds”.

翻译:

GPT-3存在一些结构和算法上的局限性,这些局限性可能解释了上述一些问题。我们专注于探索自动回归语言模型中的上下文学习行为,因为这类模型在采样和计算可能性方面比较直接。因此,我们的实验不包括任何双向架构或其他训练目标,如去噪。这与最近的大部分文献形成了显著的区别,后者记录了在使用这些方法对标准语言模型进行微调时性能的提高[RSR+19]。因此,我们的设计决策的成本可能是在任务上的表现可能更差,这些任务从经验上受益于双向性。这可能包括填空任务、需要回顾并比较两块内容、或者需要重新阅读或仔细考虑长篇内容然后生成非常短答案的任务。这可能是GPT-3在几个任务上少样本学习表现不佳的原因,例如WIC(涉及比较两个句子中单词的使用)、ANLI(涉及比较两个句子,看看一个句子是否隐含另一个句子)和一些阅读理解任务(例如QuAC和RACE)。我们还根据过去的文献推测,一个大规模的双向模型在微调方面会比GPT-3更强大。在GPT-3规模的模型上建立一个双向模型,以及/或者尝试使双向模型与少样本或零样本学习一起工作,是未来研究的有希望的方向,并且可能有助于实现“两者兼得”。

总结:

GPT用的解码器往前看,有些双向任务上表现不佳

A more fundamental limitation of the general approach described in this paper – scaling up any LM-like model, whether autoregressive or bidirectional – is that it may eventually run into (or could already be running into) the limits of the pretraining objective. Our current objective weights every token equally and lacks a notion of what is most important to predict and what is less important. [RRS20] demonstrate benefits of customizing prediction to entities of interest. Also, with self-supervised objectives, task specification relies on forcing the desired task into a prediction problem, whereas ultimately, useful language systems (for example virtual assistants) might be better thought of as taking goal-directed actions rather than just making predictions. Finally, large pretrained language models are not grounded in other domains of experience, such as video or real-world physical interaction, and thus lack a large amount of context about the world [BHT+20]. For all these reasons, scaling pure self-supervised prediction is likely to hit limits, and augmentation with a different approach is likely to be necessary. Promising future directions in this vein might include learning the objective function from humans [ZSW+19a], fine-tuning with reinforcement learning, or adding additional modalities such as images to provide grounding and a better model of the world [CLY+19].

翻译:

本文所述的一般方法的更深层次局限性在于,无论是对自回归模型还是双向模型进行扩展,最终都可能遇到(或者可能已经遇到)预训练目标的限制。我们目前的预训练目标权重每个令牌相等,且缺乏对最需要预测和对不太重要的内容的区分。 [RRS20] 展示了根据感兴趣的实体定制预测的好处。此外,在使用自监督目标时,任务指定依赖于将所需任务强制转化为预测问题,而最终,有用的语言系统(例如虚拟助手)可能更好地被认为是在采取目标导向的行动,而不仅仅是做出预测。最后,大型预训练语言模型在其他经验领域(如视频或真实世界物理交互)缺乏经验,因此缺乏关于世界的许多上下文[BHT+20]。出于所有这些原因,单纯扩大自监督预测可能会遇到限制,因此可能需要采用不同的方法进行增强。在这一方向上,有前途的未来研究方向可能包括从人类学习目标函数[ZSW+19a],使用强化学习进行微调,或者添加其他模态(如图像)以提供语义支持和更好地模拟世界[CLY+19]。

总结:

模型对预测的每个词都视作同等重要,导致花大量时间训练了一些没用意义的虚词;没见过除文本外的数据

Another limitation broadly shared by language models is poor sample efficiency during pre-training. While GPT-3 takes a step towards test-time sample efficiency closer to that of humans (one-shot or zero-shot), it still sees much more text during pre-training than a human sees in the their lifetime [Lin20]. Improving pre-training sample efficiency is an important direction for future work, and might come from grounding in the physical world to provide additional information, or from algorithmic improvements.

翻译:

语言模型普遍存在的另一个局限性是在预训练期间样本效率低下。虽然GPT-3在测试时接近人类的样本效率(一对一或零样本),但它仍然在预训练期间看到比人类一生中看到的文本多得多的内容[Lin20]。提高预训练的样本效率是未来工作的一个重要方向,这可能来自于在物理世界中寻找语义,以提供额外的信息,或者来自于算法上的改进。

总结:

样本有效性不够,需要海量文本数据才能练出来

A limitation, or at least uncertainty, associated with few-shot learning in GPT-3 is ambiguity about whether few-shot learning actually learns new tasks “from scratch” at inference time, or if it simply recognizes and identifies tasks that it has learned during training. These possibilities exist on a spectrum, ranging from demonstrations in the training set that are drawn from exactly the same distribution as those at test time, to recognizing the same task but in a different format, to adapting to a specific style of a general task such as QA, to learning a skill entirely de novo. Where GPT-3 is on this spectrum may also vary from task to task. Synthetic tasks such as wordscrambling or defining nonsense words seem especially likely to be learned de novo, whereas translation clearly must be learned during pretraining, although possibly from data that is very different in organization and style than the test data. Ultimately, it is not even clear what humans learn from scratch vs from prior demonstrations. Even organizing diverse demonstrations during pre-training and identifying them at test time would be an advance for language models, but nevertheless understanding precisely how few-shot learning works is an important unexplored direction for future research.

翻译:

GPT-3中的few-shot学习存在一个局限性或不确定性,那就是在推理时few-shot学习是否真的从头开始学习新任务,或者它只是识别和识别在训练期间已经学习过的任务。这些可能性存在于一个光谱上,范围从训练集中的演示与测试时的分布完全相同,到识别相同的任务但以不同的格式呈现,到适应特定风格的通用任务(如问答),到完全新地学习一项技能。GPT-3在这个光谱上的位置也可能因任务而异。合成任务,如字母乱序或定义无意义的词,似乎特别有可能从头开始学习,而翻译显然必须在预训练期间学习,尽管可能从与测试数据在组织和风格上非常不同的数据中学习。最终,甚至不清楚人类是从头开始学习还是从之前的演示中学习。即使在预训练期间组织多样化的演示并在测试时识别它们,对于语言模型来说也将是一个进步,但无论如何,精确理解少样本学习的工作原理是未来研究的重要未探索方向。

总结:

不确定是真正学会知识,还是学会了知识的某种特征从而去训练数据中找相似

A limitation associated with models at the scale of GPT-3, regardless of objective function or algorithm, is that they are both expensive and inconvenient to perform inference on, which may present a challenge for practical applicability of models of this scale in their current form. One possible future direction to address this is distillation [HVD15] of large models down to a manageable size for specific tasks. Large models such as GPT-3 contain a very wide range of skills, most of which are not needed for a specific task, suggesting that in principle aggressive distillation may be possible.Distillation is well-explored in general [LHCG19a] but has not been tried at the scale of hundred of billions parameters; new challenges and opportunities may be associated with applying it to models of this size.

翻译:

与GPT-3这样规模的模型相关的一个局限性是,无论目标函数或算法如何,它们在推理时都是既昂贵又不便的,这可能对这种规模模型的实际应用性提出了挑战。一个可能的未来方向是大型模型的蒸馏[HVD15],将其缩小到特定任务的可用大小。像GPT-3这样的大型模型包含非常广泛的能力,其中大多数在特定任务中并不需要,这表明原则上可能可以进行激进的蒸馏。蒸馏在一般情况下的探索已经很充分[LHCG19a],但尚未在数百亿参数的规模上尝试;将这种方法应用于这种大小的模型可能会带来新的挑战和机会。

总结:

贵

Finally, GPT-3 shares some limitations common to most deep learning systems – its decisions are not easily interpretable, it is not necessarily well-calibrated in its predictions on novel inputs as observed by the much higher variance in performance than humans on standard benchmarks, and it retains the biases of the data it has been trained on. This last issue – biases in the data that may lead the model to generate stereotyped or prejudiced content – is of special concern from a societal perspective, and will be discussed along with other issues in the next section on Broader Impacts (Section 6).

翻译:

最后,GPT-3与大多数深度学习系统共享一些局限性——其决策不易解释,其在新型输入上的预测不一定校准良好,正如在标准基准测试中性能的变异远高于人类所观察到的那样,并且它保留了其训练数据中的偏见。最后一个问题——数据中的偏见可能会导致模型生成刻板或带有偏见的内容——从社会角度来看尤其令人关注,将在下一节关于更广泛影响的讨论中(第6节)与其他问题一起讨论。

总结:

无法解释输入到输出流程

Broader Impacts

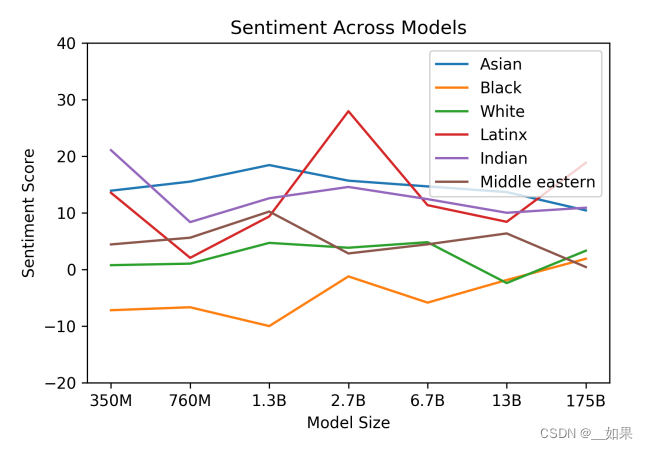

生成垃圾邮件、钓鱼邮件、造谣的新闻稿、性别偏见、种族偏见、宗教偏见、能耗

Conclusion

We presented a 175 billion parameter language model which shows strong performance on many NLP tasks and benchmarks in the zero-shot, one-shot, and few-shot settings, in some cases nearly matching the performance of state-of-the-art fine-tuned systems, as well as generating high-quality samples and strong qualitative performance at tasks defined on-the-fly. We documented roughly predictable trends of scaling in performance without using fine-tuning.We also discussed the social impacts of this class of model. Despite many limitations and weaknesses, these results suggest that very large language models may be an important ingredient in the development of adaptable, general language systems.

翻译:

我们展示了一个拥有1750亿参数的语言模型,该模型在零样本、单样本和少样本设置中的许多NLP任务和基准测试中表现强劲,在某些情况下,其性能几乎与经过微调的最先进系统相当,并且能够生成高质量的样本以及在即时定义的任务中表现出强大的定性性能。我们记录了在不使用微调的情况下,性能可预测的扩展趋势。我们还讨论了这类模型的社会影响。尽管存在许多局限性和弱点,但这些结果表明,非常大的语言模型可能是开发适应性强、通用语言系统的关键组成部分。