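1. What is a DaemonSet

Consider the following e-commerce scenario:

1. A user browses product information in an online shop, which requires the user to be logged in. To place an order, the request is submitted to the order service, and once the order completes, the stock count in the warehouse service has to be updated.

2. If each of these steps is a backend microservice running as a Pod, then when something fails we have to dig through logs: first the logs of the product service Pod, then, if they show no error, the logs of the user service Pod, and if there is still nothing, the logs of the remaining services.

3. Checking logs Pod by Pod like this is tedious and slows down troubleshooting.

4. At this point one might think: just install a log collector on every node. That works, but Kubernetes offers a better way to do exactly that: the DaemonSet, which runs one copy of a Pod on every eligible node.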

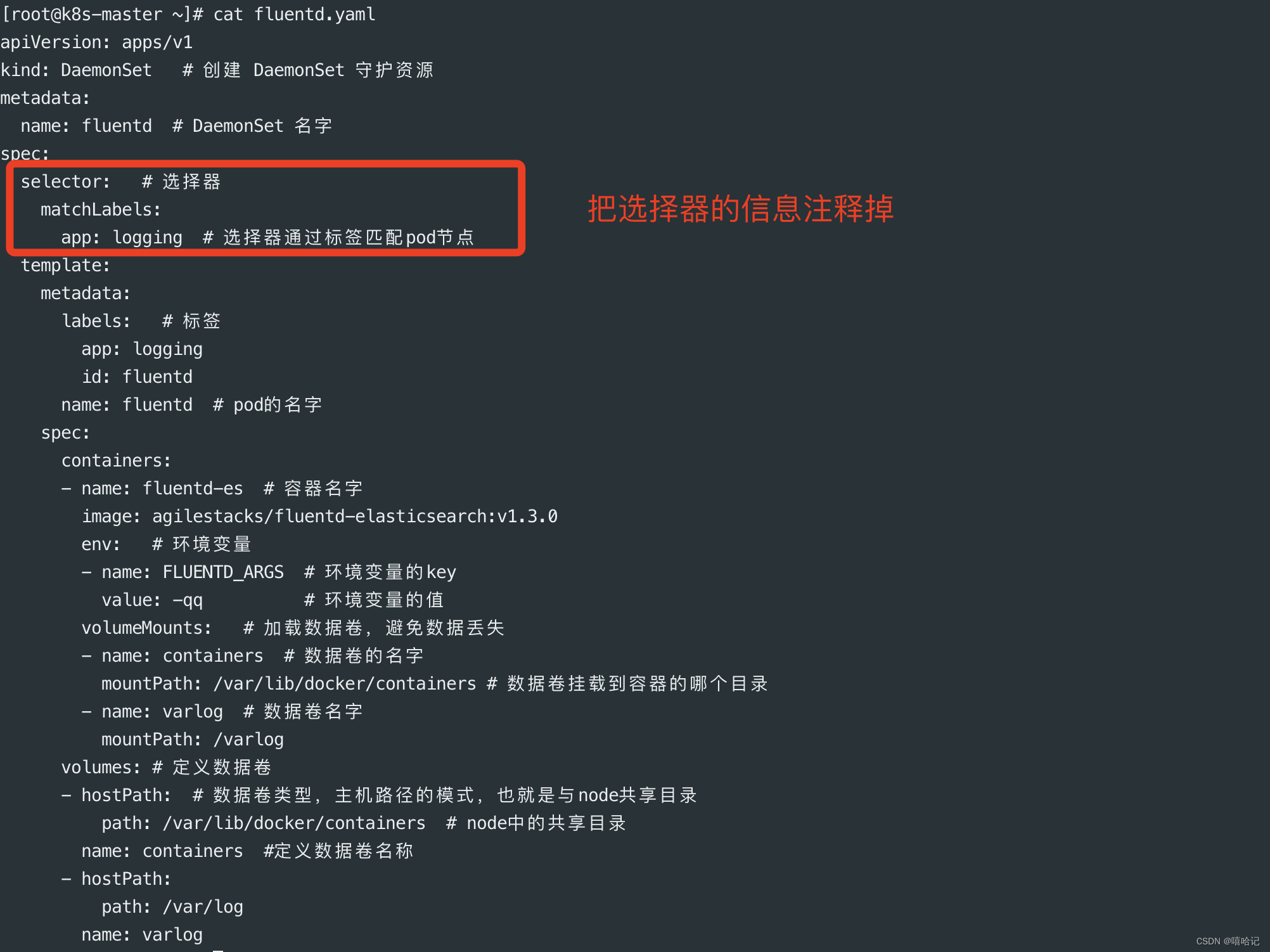

2. Configuration file

apiVersion: apps/v1
kind: DaemonSet # create a DaemonSet resource
metadata:
  name: fluentd # name of the DaemonSet
spec:
  selector: # selector
    matchLabels:
      app: logging # the selector matches Pods (not nodes) by this label
  template:
    metadata:
      labels: # labels; must include the selector's labels
        app: logging
        id: fluentd
      name: fluentd # name in the Pod template
    spec:
      containers:
      - name: fluentd-es # container name
        image: agilestacks/fluentd-elasticsearch:v1.3.0
        env: # environment variables
        - name: FLUENTD_ARGS # environment variable key
          value: -qq # environment variable value
        volumeMounts: # mount the host log directories into the container
        - name: containers # volume name
          mountPath: /var/lib/docker/containers # path inside the container where the volume is mounted
        - name: varlog # volume name
          mountPath: /var/log
      volumes: # volume definitions
      - hostPath: # volume type: hostPath, i.e. a directory shared with the node
          path: /var/lib/docker/containers # shared directory on the node
        name: containers # volume name
      - hostPath:
          path: /var/log
        name: varlog

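3. Using a DaemonSet

- A DaemonSet ignores a Node's unschedulable state. The following options restrict its Pods to specific Nodes:

- nodeSelector: schedule only onto Nodes that match the given labels

- nodeAffinity: a more expressive Node selector that supports, for example, set operations

- podAffinity: schedule onto Nodes that already run Pods matching the given conditions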
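
As a rough illustration of the first two options, the fragment below shows where each one sits in the Pod template of a DaemonSet. It is a sketch, not the manifest used in this article: the label `type: microsvc` is borrowed from the node label applied later in the walkthrough, and in practice you would normally pick one of the two mechanisms rather than both.

```yaml
# Fragment of a DaemonSet manifest; only the scheduling-related fields are shown.
spec:
  template:
    spec:
      # Option 1: nodeSelector, a plain exact-match on node labels.
      nodeSelector:
        type: microsvc
      # Option 2: nodeAffinity, which supports set operators such as In, NotIn and Exists.
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: type
                    operator: In
                    values:
                      - microsvc
```

If both are specified, a node has to satisfy both of them before a Pod is placed on it.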

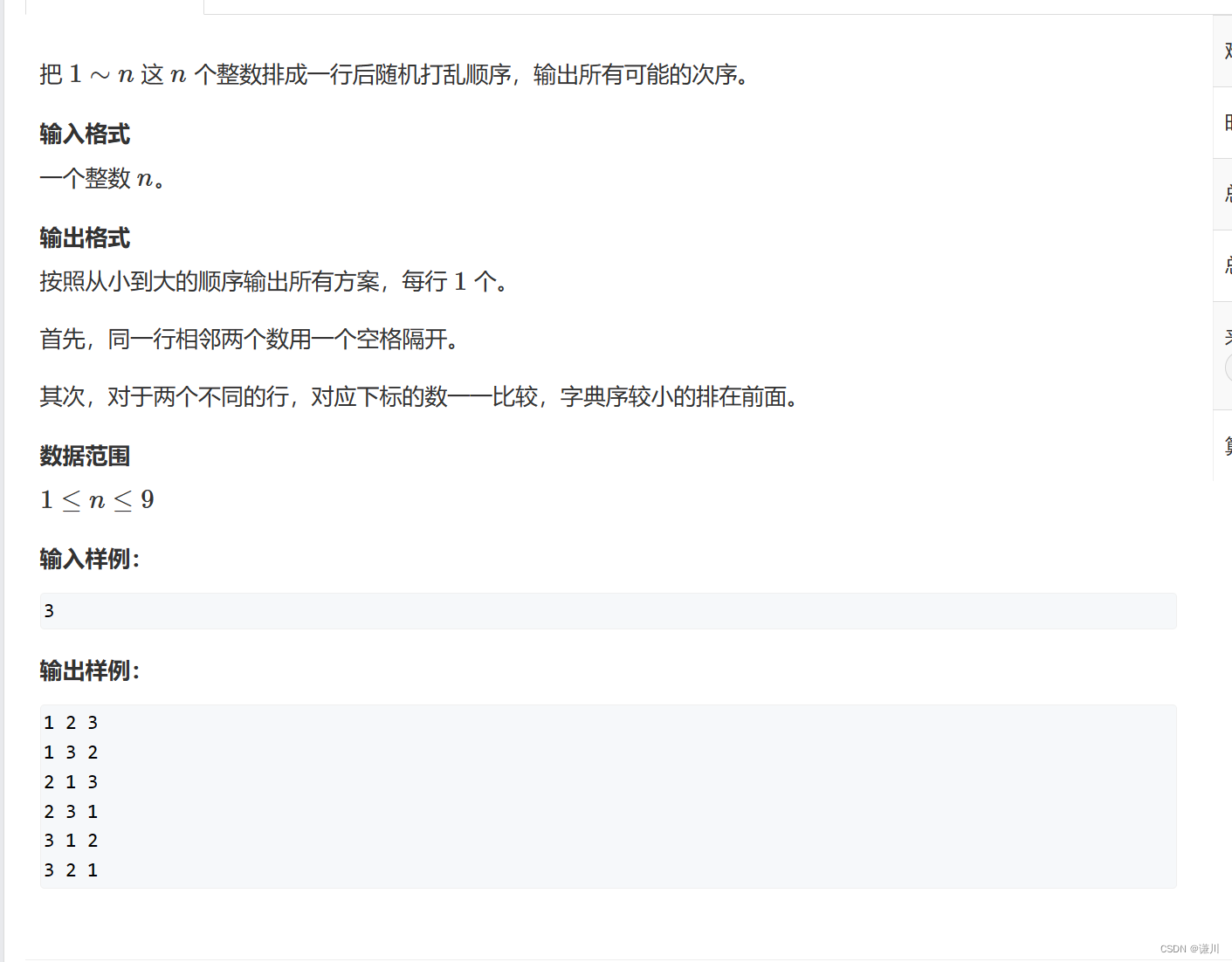

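3.1 What happens when a DaemonSet is created without a selector?

If the selector is omitted when creating the DaemonSet, the API server rejects the resource: a selector is mandatory and must match the labels in the Pod template.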

[root@k8s-master ~]# kubectl create -f fluentd.yaml

The DaemonSet "fluentd" is invalid: spec.template.metadata.labels: Invalid value: map[string]string{"app":"logging", "id":"fluentd"}: `selector` does not match template `labels`

3.2 Creating the DaemonSet (with the selector)

[root@k8s-master ~]# kubectl create -f fluentd.yaml

daemonset.apps/fluentd created

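3.3 Checking the DaemonSet status

Two fluentd Pods are desired and have been created (DESIRED 2, CURRENT 2). READY is 0 and UP-TO-DATE is 2, because the containers are still starting.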

[root@k8s-master ~]# kubectl get daemonsets.apps fluentd

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

fluentd 2 2 0 2 0 <none> 18s

3.4 Describing the DaemonSet

The events show that two Pods were created.

[root@k8s-master ~]# kubectl describe daemonsets.apps fluentd

Name: fluentd

Selector: app=logging

Node-Selector: <none>

Labels: <none>

Annotations: deprecated.daemonset.template.generation: 1

Desired Number of Nodes Scheduled: 2

Current Number of Nodes Scheduled: 2

Number of Nodes Scheduled with Up-to-date Pods: 2

Number of Nodes Scheduled with Available Pods: 0

Number of Nodes Misscheduled: 0

Pods Status: 0 Running / 2 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=logging

id=fluentd

Containers:

fluentd-es:

Image: agilestacks/fluentd-elasticsearch:v1.3.0

Port: <none>

Host Port: <none>

Environment:

FLUENTD_ARGS: -qq

Mounts:

/var/lib/docker/containers from containers (rw)

/varlog from varlog (rw)

Volumes:

containers:

Type: HostPath (bare host directory volume)

Path: /var/lib/docker/containers

HostPathType:

varlog:

Type: HostPath (bare host directory volume)

Path: /var/log

HostPathType:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 35s daemonset-controller Created pod: fluentd-5rz5d

Normal SuccessfulCreate 35s daemonset-controller Created pod: fluentd-6kpbr

3.5 Checking the Pod status

The fluentd Pods are still being created (ContainerCreating).

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

dns-test 1/1 Running 1 (16h ago) 16h

fluentd-5rz5d 0/1 ContainerCreating 0 54s

fluentd-6kpbr 0/1 ContainerCreating 0 54s

3.6 Describing a Pod

The events show the image being pulled and the container being created and started successfully.

[root@k8s-master ~]# kubectl describe po fluentd-5rz5d

Name: fluentd-5rz5d

Namespace: default

Priority: 0

Service Account: default

Node: k8s-node-01/10.10.10.177

Start Time: Sat, 24 Feb 2024 19:43:29 +0800

Labels: app=logging

controller-revision-hash=5c58fcb7d4

id=fluentd

pod-template-generation=1

Annotations: <none>

Status: Running

IP: 10.2.2.28

IPs:

IP: 10.2.2.28

Controlled By: DaemonSet/fluentd

Containers:

fluentd-es:

Container ID: docker://d5bf8b2018b02edb79e410041a0f0764efb472801ab446ff672631ef1904ff7e

Image: agilestacks/fluentd-elasticsearch:v1.3.0

Image ID: docker-pullable://agilestacks/fluentd-elasticsearch@sha256:9a5bd621f61191ac3bc88b8489dee4b711b22bf675cb5d99c7f0a99603e18b82

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 24 Feb 2024 19:45:30 +0800

Ready: True

Restart Count: 0

Environment:

FLUENTD_ARGS: -qq

Mounts:

/var/lib/docker/containers from containers (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-rtc4b (ro)

/varlog from varlog (rw)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

containers:

Type: HostPath (bare host directory volume)

Path: /var/lib/docker/containers

HostPathType:

varlog:

Type: HostPath (bare host directory volume)

Path: /var/log

HostPathType:

kube-api-access-rtc4b:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/disk-pressure:NoSchedule op=Exists

node.kubernetes.io/memory-pressure:NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists

node.kubernetes.io/pid-pressure:NoSchedule op=Exists

node.kubernetes.io/unreachable:NoExecute op=Exists

node.kubernetes.io/unschedulable:NoSchedule op=Exists

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m6s default-scheduler Successfully assigned default/fluentd-5rz5d to k8s-node-01

Normal Pulling 2m6s kubelet Pulling image "agilestacks/fluentd-elasticsearch:v1.3.0"

Normal Pulled 7s kubelet Successfully pulled image "agilestacks/fluentd-elasticsearch:v1.3.0" in 1m58.498496853s

Normal Created 6s kubelet Created container fluentd-es

Normal Started 6s kubelet Started container fluentd-es

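3.7 The Pods are running, and only on the non-master nodes

The selector label "app=logging" is matched against Pod labels, not node labels, so no node needs to carry it. The DaemonSet controller creates one Pod per eligible node on its own. The master gets no Pod, most likely because of its control-plane taint, which this Pod template does not tolerate (the taint itself is not shown in the output below).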

[root@k8s-master ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

dns-test 1/1 Running 1 (16h ago) 16h

fluentd-5rz5d 1/1 Running 0 2m14s

fluentd-6kpbr 1/1 Running 0 2m14s

[root@k8s-master ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dns-test 1/1 Running 1 (16h ago) 16h 10.2.1.38 k8s-node-02 <none> <none>

fluentd-5rz5d 1/1 Running 0 2m28s 10.2.2.28 k8s-node-01 <none> <none>

fluentd-6kpbr 1/1 Running 0 2m28s 10.2.1.50 k8s-node-02 <none> <none>

# None of the nodes carries the selector label "app=logging"

[root@k8s-master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready control-plane 4d21h v1.25.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-node-01 Ready <none> 4d20h v1.25.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-01,kubernetes.io/os=linux

k8s-node-02 Ready <none> 2d21h v1.25.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-02,kubernetes.io/os=linu
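
If the log collector should also run on the control-plane node, a toleration can be added to the Pod template. The fragment below is a sketch under an assumption that this article does not verify: that `k8s-master` carries the kubeadm default taint `node-role.kubernetes.io/control-plane:NoSchedule`. Running `kubectl describe node k8s-master` would show the actual taints.

```yaml
# Fragment of a DaemonSet manifest; only the toleration is shown.
spec:
  template:
    spec:
      tolerations:
        # Assumed taint key and effect (kubeadm default); adjust to what the node really carries.
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
```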

3.8 Adding a label to node-01

[root@k8s-master ~]# kubectl label nodes k8s-node-01 type=microsvc --overwrite

node/k8s-node-01 labeled

[root@k8s-master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master Ready control-plane 4d22h v1.25.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

k8s-node-01 Ready <none> 4d21h v1.25.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-01,kubernetes.io/os=linux,type=microsvc

k8s-node-02 Ready <none> 2d21h v1.25.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node-02,kubernetes.io/os=linux

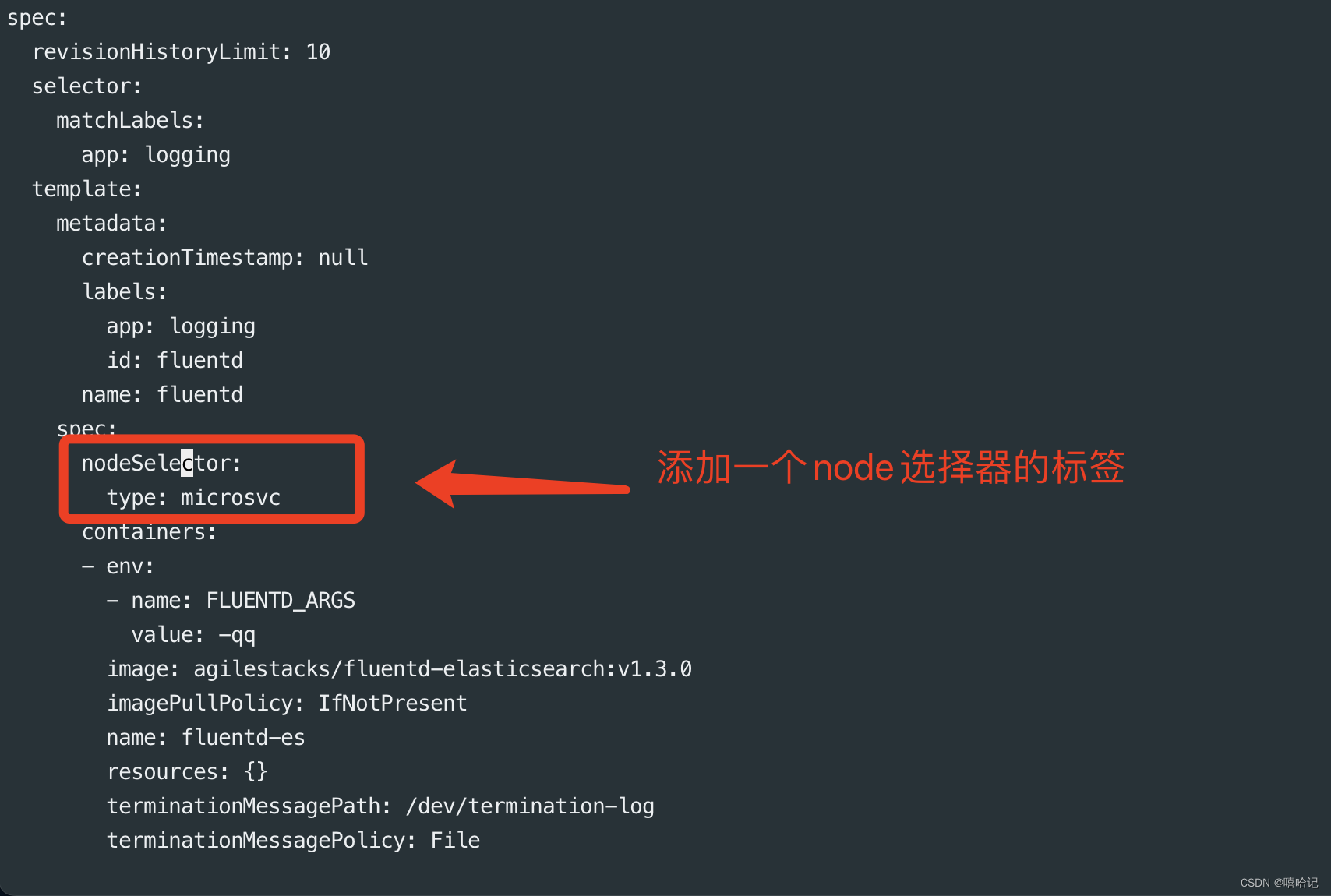

3.9 Setting a node selector with kubectl edit
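
The exact edit is not reproduced in the text, so the following is only a sketch of what would be added after opening the resource with `kubectl edit daemonsets.apps fluentd`. The label `type: microsvc` is taken from the node label applied in 3.8 and from the NODE SELECTOR column in the output that follows; the placement under `spec.template.spec` is where the Pod-level `nodeSelector` field lives.

```yaml
# Fragment of the DaemonSet as it might look after the edit.
spec:
  template:
    spec:
      nodeSelector:
        type: microsvc # only nodes carrying this label get a fluentd Pod
```

After saving, the controller removes Pods from nodes that no longer match and creates Pods on nodes that do.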

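3.10 Checking the DaemonSet status

The output below shows that the DaemonSet now wants only one Pod (DESIRED 1), and READY is also 1. With the node selector type=microsvc in place, only node-01 carries the matching label, so only node-01 runs a Pod and node-02 has none. Once node-02 is given the same label, it gets a Pod as well.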

[root@k8s-master ~]# kubectl get daemonsets.apps fluentd

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

fluentd 1 1 1 1 1 type=microsvc 39m

[root@k8s-master ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dns-test 1/1 Running 1 (17h ago) 17h 10.2.1.38 k8s-node-02 <none> <none>

fluentd-59k8k 1/1 Running 0 22s 10.2.2.29 k8s-node-01 <none> <none>

3.11 Adding the label to node-02 as well

[root@k8s-master ~]# kubectl label nodes k8s-node-02 type=microsvc

node/k8s-node-02 labeled

[root@k8s-master ~]# kubectl get daemonsets.apps fluentd

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

fluentd 2 2 2 2 2 type=microsvc 42m

[root@k8s-master ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dns-test 1/1 Running 1 (17h ago) 17h 10.2.1.38 k8s-node-02 <none> <none>

fluentd-59k8k 1/1 Running 0 3m35s 10.2.2.29 k8s-node-01 <none> <none>

fluentd-hhtls 1/1 Running 0 5s 10.2.1.51 k8s-node-02 <none> <none>

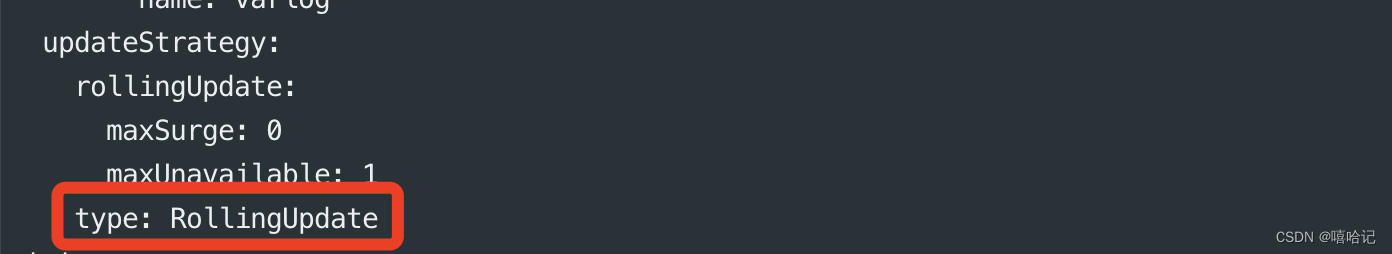

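3.12 Rolling updates of a DaemonSet

Rather than the default RollingUpdate strategy, OnDelete is recommended here: Pods are replaced only when you delete them yourself, which avoids the resource waste caused by frequent template changes.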

3.12.1 Viewing the DaemonSet's default update strategy
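
The original output for this step is not included. As a hedged reference, this is the `updateStrategy` section one would expect to find in the output of `kubectl get daemonsets.apps fluentd -o yaml` when no strategy was set in the manifest; the values are the API defaults for `apps/v1` DaemonSets, not output captured from this cluster.

```yaml
# Expected default update strategy of an apps/v1 DaemonSet.
spec:
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 0       # no extra Pod is started on a node during the update
      maxUnavailable: 1 # at most one node's Pod is down at any time
```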

3.12.2 Recommended: set it to OnDelete
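
A minimal sketch of the change, which can be made in the manifest or through `kubectl edit daemonsets.apps fluentd`. Only the strategy field is shown; the rest of the spec stays as it is.

```yaml
# Fragment of the DaemonSet with the update strategy switched to OnDelete.
spec:
  updateStrategy:
    type: OnDelete # a Pod picks up template changes only after it is deleted manually
```

With this setting, changing the Pod template does not restart anything by itself. Deleting an existing fluentd Pod makes the controller recreate it from the updated template.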