Lecture 4: Value Iteration and Policy Iteration

Value Iteration Algorithm

For the Bellman optimality equation:

$$\mathbf{v} = f(\mathbf{v}) = \max_{\pi}(\mathbf{r}_{\pi} + \gamma \mathbf{P}_{\pi} \mathbf{v})$$

Lecture 3 showed that the contraction mapping theorem yields an iterative algorithm:

$$\mathbf{v}_{k+1} = f(\mathbf{v}_k) = \max_{\pi}(\mathbf{r}_{\pi} + \gamma \mathbf{P}_{\pi} \mathbf{v}_k), \quad k = 0, 1, 2, \dots$$

where $v_0$ can be arbitrary.

This algorithm is called value iteration.

Each iteration can be split into two steps:

- Step 1: policy update.

  $$\pi_{k+1} = \arg\max_{\pi}(r_{\pi} + \gamma P_{\pi} v_k)$$

  where $v_k$ is given.

- Step 2: value update.

  $$v_{k+1} = r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_k$$

Note: $v_k$ is not a state value, because it does not satisfy a Bellman equation.

Analysis of the value iteration algorithm:

- Step 1: policy update.

  $$\pi_{k+1} = \arg\max_{\pi}(r_{\pi} + \gamma P_{\pi} v_k)$$

  Its elementwise form is:

  $$\begin{align*} \pi_{k+1}(s) &= \arg\max_{\pi} \sum_a \pi(a|s) \left( \sum_r p(r|s,a) r + \gamma \sum_{s'} p(s'|s,a) v_k(s') \right), \quad s \in \mathcal{S} \\ &= \arg\max_{\pi} \sum_a \pi(a|s) q_k(s,a) \end{align*}$$

  The policy that solves this optimization problem is:

  $$\pi_{k+1}(a|s) = \begin{cases} 1 & a = a^*_k(s) \\ 0 & a \ne a^*_k(s) \end{cases}$$

  where $a^*_k(s) = \arg\max_a q_k(s,a)$. $\pi_{k+1}$ is called a greedy policy because it simply selects the action with the largest q-value.

- Step 2: value update.

  $$v_{k+1} = r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_k$$

  Its elementwise form is:

  $$\begin{align*} v_{k+1}(s) &= \sum_a \pi_{k+1}(a|s) \left( \sum_r p(r|s,a) r + \gamma \sum_{s'} p(s'|s,a) v_k(s') \right), \quad s \in \mathcal{S} \\ &= \sum_a \pi_{k+1}(a|s) q_k(s,a) \end{align*}$$

  Because $\pi_{k+1}$ is greedy, this simplifies to:

  $$v_{k+1}(s) = \max_a q_k(s,a)$$
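The simplification rests on a standard fact, sketched here for completeness (it is not spelled out in the notes): a probability-weighted average of q-values can never exceed the largest q-value.

$$\sum_a \pi(a|s)\, q_k(s,a) \;\le\; \sum_a \pi(a|s) \max_{a'} q_k(s,a') \;=\; \max_{a'} q_k(s,a')$$

Equality holds when $\pi$ puts all of its probability on a maximizing action $a^*_k(s)$, which is why the greedy policy solves the policy update and why the value update reduces to a maximum over actions.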

Procedure summary:

$$v_k(s) \rightarrow q_k(s,a) \rightarrow \text{greedy policy } \pi_{k+1}(a|s) \rightarrow \text{new value } v_{k+1}(s) = \max_a q_k(s,a)$$
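The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's code: the two-state MDP, the action names `stay` and `go`, and the helper names `q_values` and `value_iteration_sweep` are all hypothetical, chosen only to make the elementwise formulas concrete.

```python
# Hypothetical 2-state, 2-action MDP (illustrative only, not from the lecture).
# model[s][a] is a list of (probability, reward, next_state) outcomes, which
# jointly encodes p(r|s,a) and p(s'|s,a).
GAMMA = 0.9
model = {
    0: {"stay": [(1.0, 0.0, 0)], "go": [(0.8, 1.0, 1), (0.2, 0.0, 0)]},
    1: {"stay": [(1.0, 2.0, 1)], "go": [(1.0, 0.0, 0)]},
}


def q_values(v, s):
    """q_k(s, a) = sum_r p(r|s,a) r + gamma * sum_s' p(s'|s,a) v_k(s')."""
    return {
        a: sum(p * (r + GAMMA * v[s_next]) for p, r, s_next in outcomes)
        for a, outcomes in model[s].items()
    }


def value_iteration_sweep(v):
    """One iteration: v_k -> q_k -> greedy policy pi_{k+1} -> v_{k+1}."""
    policy, v_next = {}, {}
    for s in model:
        q = q_values(v, s)
        policy[s] = max(q, key=q.get)  # policy update: pick the greedy action
        v_next[s] = q[policy[s]]       # value update: v_{k+1}(s) = max_a q_k(s, a)
    return policy, v_next


v0 = {0: 0.0, 1: 0.0}
pi1, v1 = value_iteration_sweep(v0)
print(pi1, v1)
```

Note that the policy is only a by-product here: the value update never needs $\pi_{k+1}$ explicitly, since it equals the maximum q-value.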

Example:

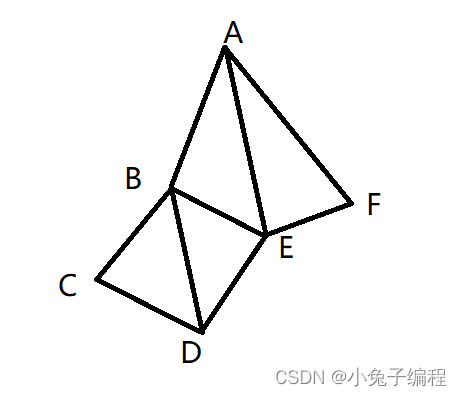

Setup: rewards $r_{\text{boundary}} = r_{\text{forbidden}} = -1$ and $r_{\text{target}} = 1$; discount rate $\gamma = 0.9$.

The q-table for this example (shown as an image in the original notes) lists $q_k(s,a)$ for every state-action pair.

- At $k=0$, let $v_0(s_1) = v_0(s_2) = v_0(s_3) = v_0(s_4) = 0$.

  Step 1: policy update

  $$\pi_1(a_5|s_1) = 1, \quad \pi_1(a_3|s_2) = 1, \quad \pi_1(a_2|s_3) = 1, \quad \pi_1(a_5|s_4) = 1$$

  as shown in panel (b) of the figure above.

  Step 2: value update

  $$v_1(s_1) = 0, \quad v_1(s_2) = 1, \quad v_1(s_3) = 1, \quad v_1(s_4) = 1$$

- At $k=1$, since $v_1(s_1) = 0$, $v_1(s_2) = 1$, $v_1(s_3) = 1$, $v_1(s_4) = 1$, we obtain:

  Step 1: policy update

  $$\pi_2(a_3|s_1) = 1, \quad \pi_2(a_3|s_2) = 1, \quad \pi_2(a_2|s_3) = 1, \quad \pi_2(a_5|s_4) = 1$$

  Step 2: value update

  $$v_2(s_1) = \gamma \cdot 1, \quad v_2(s_2) = 1 + \gamma \cdot 1, \quad v_2(s_3) = 1 + \gamma \cdot 1, \quad v_2(s_4) = 1 + \gamma \cdot 1$$

  as shown in panel (c) of the figure above.

- Continue iterating until $\| v_k - v_{k+1} \|$ falls below a preset threshold.
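The two iterations above can be checked numerically. The sketch below rests on assumptions that the text does not state, because the figure is not reproduced: a 2x2 grid with $s_1$ top-left, $s_2$ top-right (forbidden), $s_3$ bottom-left and $s_4$ bottom-right (target), and actions $a_1$ to $a_5$ meaning up, right, down, left and stay. This layout is consistent with the policies and values listed above, but it remains a guess. The names `step` and `sweep`, the max-norm stopping test, and the threshold `1e-6` are illustrative choices.

```python
GAMMA = 0.9
# Assumed layout (the figure is not reproduced in the text):
#   s1 s2      s2 is the forbidden cell, s4 is the target cell.
#   s3 s4
POS = {"s1": (0, 0), "s2": (0, 1), "s3": (1, 0), "s4": (1, 1)}
CELL = {rc: s for s, rc in POS.items()}
# Assumed action meanings: a1 up, a2 right, a3 down, a4 left, a5 stay.
MOVES = {"a1": (-1, 0), "a2": (0, 1), "a3": (1, 0), "a4": (0, -1), "a5": (0, 0)}
REWARD_ON_ENTRY = {"s1": 0.0, "s2": -1.0, "s3": 0.0, "s4": 1.0}


def step(s, a):
    """Deterministic model: return (reward, next_state)."""
    r, c = POS[s]
    dr, dc = MOVES[a]
    nxt = CELL.get((r + dr, c + dc))
    if nxt is None:  # hit the boundary: stay in place with reward -1
        return -1.0, s
    return REWARD_ON_ENTRY[nxt], nxt


def sweep(v):
    """One value iteration step: policy update, then value update."""
    policy, v_next = {}, {}
    for s in POS:
        q = {}
        for a in MOVES:
            reward, nxt = step(s, a)
            q[a] = reward + GAMMA * v[nxt]
        # Ties are broken toward the later action (a5 before a3) only to match
        # the policy listed in the text; any maximizer is equally valid.
        policy[s] = max(reversed(list(q)), key=q.get)
        v_next[s] = q[policy[s]]
    return policy, v_next


v = {s: 0.0 for s in POS}
history = []  # history[k] holds (pi_{k+1}, v_{k+1})
for k in range(1000):
    policy, v_new = sweep(v)
    history.append((policy, v_new))
    gap = max(abs(v_new[s] - v[s]) for s in POS)  # max-norm of v_k - v_{k+1}
    v = v_new
    if gap < 1e-6:
        break

print(history[0])
print(history[1])
```

Under these assumptions, `history[0]` and `history[1]` hold the policies and values of the $k=0$ and $k=1$ iterations, and the loop keeps going until successive values differ by less than the threshold.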

Policy Iteration Algorithm

Algorithm description:

Given a random initial policy $\pi_0$:

- Step 1: policy evaluation (PE).

  This step computes the state value of $\pi_k$:

  $$\mathbf{v}_{\pi_k} = \mathbf{r}_{\pi_k} + \gamma \mathbf{P}_{\pi_k} \mathbf{v}_{\pi_k}$$

  Note that $v_{\pi_k}$ is a state value function.

- Step 2: policy improvement (PI).

  $$\pi_{k+1} = \arg\max_{\pi}(r_{\pi} + \gamma P_{\pi} v_{\pi_k})$$

  The maximization is performed elementwise.

The algorithm generates the following sequence:

$$\pi_0 \xrightarrow{PE} v_{\pi_0} \xrightarrow{PI} \pi_1 \xrightarrow{PE} v_{\pi_1} \xrightarrow{PI} \pi_2 \xrightarrow{PE} v_{\pi_2} \xrightarrow{PI} \cdots$$

where PE = policy evaluation and PI = policy improvement.

Three questions:

In the policy evaluation step, how to get the state value $v_{\pi_k}$ by solving the Bellman equation?

$$\mathbf{v}_{\pi_k} = \mathbf{r}_{\pi_k} + \gamma \mathbf{P}_{\pi_k} \mathbf{v}_{\pi_k}$$

- Closed-form solution:

  $$\mathbf{v}_{\pi_k} = (I - \gamma \mathbf{P}_{\pi_k})^{-1} \mathbf{r}_{\pi_k}$$

- Iterative solution:

  $$\mathbf{v}_{\pi_k}^{(j+1)} = \mathbf{r}_{\pi_k} + \gamma \mathbf{P}_{\pi_k} \mathbf{v}_{\pi_k}^{(j)}, \quad j = 0, 1, 2, \dots$$

Policy iteration is an iterative algorithm with another iterative algorithm embedded in its policy evaluation step!
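The two solutions are closely linked. As a sketch (a standard linear-algebra argument, assuming $0 \le \gamma < 1$; it is not part of the original notes), unrolling the iteration from any initial guess $\mathbf{v}_{\pi_k}^{(0)}$ gives

$$\mathbf{v}_{\pi_k}^{(j)} = \sum_{i=0}^{j-1} (\gamma \mathbf{P}_{\pi_k})^i \, \mathbf{r}_{\pi_k} + (\gamma \mathbf{P}_{\pi_k})^j \, \mathbf{v}_{\pi_k}^{(0)}$$

Because $\mathbf{P}_{\pi_k}$ is a stochastic matrix, $(\gamma \mathbf{P}_{\pi_k})^j \to 0$ as $j \to \infty$, and the series sums to $(I - \gamma \mathbf{P}_{\pi_k})^{-1}$. The iterates therefore converge to the closed-form solution regardless of the initial guess, without ever forming a matrix inverse.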

In the policy improvement step, why is the new policy $\pi_{k+1}$ better than $\pi_k$?

Lemma (Policy Improvement)

If

$$\pi_{k+1} = \arg\max_{\pi}(r_{\pi} + \gamma P_{\pi} v_{\pi_k})$$

then $v_{\pi_{k+1}} \ge v_{\pi_k}$ holds for any $k$.
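The notes state the lemma without proof. A brief sketch of the usual argument, offered as an outline rather than the lecture's exact derivation: both policies satisfy their own Bellman equations, and $\pi_{k+1}$ maximizes $r_{\pi} + \gamma P_{\pi} v_{\pi_k}$, so

$$\begin{align*}
v_{\pi_k} - v_{\pi_{k+1}} &= (r_{\pi_k} + \gamma P_{\pi_k} v_{\pi_k}) - (r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k+1}}) \\
&\le (r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_k}) - (r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_{\pi_{k+1}}) \\
&= \gamma P_{\pi_{k+1}} (v_{\pi_k} - v_{\pi_{k+1}})
\end{align*}$$

Since $P_{\pi_{k+1}}$ has nonnegative entries, the inequality can be applied repeatedly, giving $v_{\pi_k} - v_{\pi_{k+1}} \le (\gamma P_{\pi_{k+1}})^n (v_{\pi_k} - v_{\pi_{k+1}})$ for every $n$. The right-hand side tends to $0$ as $n \to \infty$ when $\gamma < 1$, hence $v_{\pi_k} \le v_{\pi_{k+1}}$.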

Why can such an iterative algorithm finally reach an optimal policy?

We know that

$$v_{\pi_0} \le v_{\pi_1} \le v_{\pi_2} \le \cdots \le v_{\pi_k} \le \cdots \le v^*$$

Hence $v_{\pi_k}$ never decreases from one iteration to the next, and the sequence converges because it is nondecreasing and bounded above by $v^*$. It remains to show that it converges to $v^*$.

Theorem (Convergence of Policy Iteration)

The state value sequence $\{ v_{\pi_k} \}_{k=0}^{\infty}$ generated by the policy iteration algorithm converges to the optimal state value $v^*$. Consequently, the policy sequence $\{ \pi_k \}_{k=0}^{\infty}$ converges to an optimal policy.

Analysis of the policy iteration algorithm:

- Step 1: policy evaluation.

  Matrix-vector form:

  $$\mathbf{v}_{\pi_k}^{(j+1)} = \mathbf{r}_{\pi_k} + \gamma \mathbf{P}_{\pi_k} \mathbf{v}_{\pi_k}^{(j)}, \quad j = 0, 1, 2, \dots$$

  Elementwise form:

  $$v_{\pi_k}^{(j+1)}(s) = \sum_a \pi_k(a|s) \left( \sum_r p(r|s,a) r + \gamma \sum_{s'} p(s'|s,a) v_{\pi_k}^{(j)}(s') \right), \quad s \in \mathcal{S}$$

  The iteration stops when $j \rightarrow \infty$, when $j$ is sufficiently large, or when $\| v^{(j+1)}_{\pi_k} - v^{(j)}_{\pi_k} \|$ is sufficiently small.

- Step 2: policy improvement.

  Matrix-vector form:

  $$\pi_{k+1} = \arg\max_{\pi}(\mathbf{r}_{\pi} + \gamma \mathbf{P}_{\pi} \mathbf{v}_{\pi_k})$$

  Elementwise form:

  $$\begin{align*} \pi_{k+1}(s) &= \arg\max_{\pi} \sum_a \pi(a|s) \left( \sum_r p(r|s,a) r + \gamma \sum_{s'} p(s'|s,a) v_{\pi_k}(s') \right), \quad s \in \mathcal{S} \\ &= \arg\max_{\pi} \sum_a \pi(a|s) q_{\pi_k}(s,a) \end{align*}$$

  where $q_{\pi_k}(s,a)$ is the action value under policy $\pi_k$. Let

  $$a^*_k(s) = \arg\max_a q_{\pi_k}(s,a)$$

  Then the greedy policy is:

  $$\pi_{k+1}(a|s) = \begin{cases} 1 & a = a^*_k(s), \\ 0 & a \ne a^*_k(s). \end{cases}$$

Simple example:

Reward setup: $r_{\text{boundary}} = -1$ and $r_{\text{target}} = 1$; discount rate $\gamma = 0.9$.

Actions: $a_\ell$, $a_0$ and $a_r$ denote moving left, staying in place, and moving right.

Iteration $k=0$:

- Step 1: policy evaluation.

  $\pi_0$ is the policy in panel (a) of the figure. The Bellman equations are:

  $$\begin{align*} v_{\pi_0}(s_1) &= -1 + \gamma v_{\pi_0}(s_1) \\ v_{\pi_0}(s_2) &= 0 + \gamma v_{\pi_0}(s_1) \end{align*}$$

  Solving the equations directly:

  $$\begin{align*} v_{\pi_0}(s_1) &= -10 \\ v_{\pi_0}(s_2) &= -9 \end{align*}$$

  Solving the equations iteratively: assume the initial guess $v^{(0)}_{\pi_0}(s_1) = v^{(0)}_{\pi_0}(s_2) = 0$. Then

  $$\begin{align*}
  &\begin{cases} v^{(1)}_{\pi_0}(s_1) = -1 + \gamma v^{(0)}_{\pi_0}(s_1) = -1 \\ v^{(1)}_{\pi_0}(s_2) = 0 + \gamma v^{(0)}_{\pi_0}(s_1) = 0 \end{cases} \\
  &\begin{cases} v^{(2)}_{\pi_0}(s_1) = -1 + \gamma v^{(1)}_{\pi_0}(s_1) = -1.9 \\ v^{(2)}_{\pi_0}(s_2) = 0 + \gamma v^{(1)}_{\pi_0}(s_1) = -0.9 \end{cases} \\
  &\begin{cases} v^{(3)}_{\pi_0}(s_1) = -1 + \gamma v^{(2)}_{\pi_0}(s_1) = -2.71 \\ v^{(3)}_{\pi_0}(s_2) = 0 + \gamma v^{(2)}_{\pi_0}(s_1) = -1.71 \end{cases} \\
  &\cdots
  \end{align*}$$

- Step 2: policy improvement.

  The expressions for $q_{\pi_k}(s,a)$ are given as a table (shown as an image in the original notes). Substituting $v_{\pi_0}(s_1) = -10$, $v_{\pi_0}(s_2) = -9$ and $\gamma = 0.9$ into that table and taking the maximum of $q_{\pi_0}$ in each state gives the improved policy:

  $$\pi_1(a_r|s_1) = 1, \quad \pi_1(a_0|s_2) = 1$$

After a single iteration, the policy is already optimal.
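The example can be replayed in code. This sketch assumes a model inferred from the Bellman equations and the reward setup rather than given explicitly: $s_1$ lies to the left of $s_2$, $s_2$ is the target, and moving off the grid leaves the agent in place with reward $-1$. The dictionary `MODEL` and the helpers `evaluate` and `improve` are hypothetical names, and the q-values it produces are derived from that assumed model, not copied from the missing table.

```python
GAMMA = 0.9
# Assumed model: MODEL[s][a] = (reward, next_state), with s1 left of s2 and
# s2 the target. Moving off the grid keeps the agent in place with reward -1.
MODEL = {
    "s1": {"left": (-1.0, "s1"), "stay": (0.0, "s1"), "right": (1.0, "s2")},
    "s2": {"left": (0.0, "s1"), "stay": (1.0, "s2"), "right": (-1.0, "s2")},
}


def evaluate(policy, sweeps):
    """Iterative policy evaluation from v^(0) = 0; returns all iterates."""
    v = {s: 0.0 for s in MODEL}
    iterates = [v]
    for _ in range(sweeps):
        new_v = {}
        for s in MODEL:
            reward, nxt = MODEL[s][policy[s]]
            new_v[s] = reward + GAMMA * v[nxt]
        v = new_v
        iterates.append(v)
    return iterates


def improve(v):
    """Policy improvement: build q_pi(s, a) from v and act greedily."""
    q = {
        s: {a: reward + GAMMA * v[nxt] for a, (reward, nxt) in MODEL[s].items()}
        for s in MODEL
    }
    policy = {s: max(q[s], key=q[s].get) for s in MODEL}
    return policy, q


pi0 = {"s1": "left", "s2": "left"}  # initial policy implied by the Bellman equations
iterates = evaluate(pi0, sweeps=500)
v_pi0 = iterates[-1]                # approaches the direct solution (-10, -9)
pi1, q_pi0 = improve(v_pi0)
pi2, _ = improve(evaluate(pi1, sweeps=500)[-1])

for j in range(4):
    print(j, iterates[j])
print("pi1 =", pi1, "| unchanged after another iteration:", pi2 == pi1)
```

The first few entries of `iterates` correspond to $v^{(0)}_{\pi_0}$ through $v^{(3)}_{\pi_0}$ above. Running a second improvement step on $v_{\pi_1}$ is one way to confirm that the policy no longer changes.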

Truncated Policy Iteration Algorithm

Compare value iteration and policy iteration

Policy iteration: start from $\pi_0$.

- Policy evaluation (PE):

  $$\mathbf{v}_{\pi_k} = \mathbf{r}_{\pi_k} + \gamma \mathbf{P}_{\pi_k} \mathbf{v}_{\pi_k}$$

- Policy improvement (PI):

  $$\pi_{k+1} = \arg\max_{\pi}(\mathbf{r}_{\pi} + \gamma \mathbf{P}_{\pi} \mathbf{v}_{\pi_k})$$

Value iteration: start from $v_0$.

- Policy update (PU):

  $$\pi_{k+1} = \arg\max_{\pi}(\mathbf{r}_{\pi} + \gamma \mathbf{P}_{\pi} \mathbf{v}_k)$$

- Value update (VU):

  $$\mathbf{v}_{k+1} = \mathbf{r}_{\pi_{k+1}} + \gamma \mathbf{P}_{\pi_{k+1}} \mathbf{v}_k$$

The two algorithms are very similar:

Policy iteration:

$$\pi_0 \xrightarrow{PE} v_{\pi_0} \xrightarrow{PI} \pi_1 \xrightarrow{PE} v_{\pi_1} \xrightarrow{PI} \pi_2 \xrightarrow{PE} v_{\pi_2} \xrightarrow{PI} \cdots$$

Value iteration:

$$u_0 \xrightarrow{PU} \pi'_1 \xrightarrow{VU} u_1 \xrightarrow{PU} \pi'_2 \xrightarrow{VU} u_2 \xrightarrow{PU} \cdots$$

A detailed comparison of the two algorithms:

- They start from the same initial condition (taking $v_0 = v_{\pi_0}$).

- Their first three steps are the same.

- Their fourth step differs:

  In policy iteration, solving $\mathbf{v}_{\pi_1} = \mathbf{r}_{\pi_1} + \gamma \mathbf{P}_{\pi_1} \mathbf{v}_{\pi_1}$ requires an iterative algorithm.

  In value iteration, computing $\mathbf{v}_1 = \mathbf{r}_{\pi_1} + \gamma \mathbf{P}_{\pi_1} \mathbf{v}_0$ is a one-step computation.

Consider the step of solving $\mathbf{v}_{\pi_1} = \mathbf{r}_{\pi_1} + \gamma \mathbf{P}_{\pi_1} \mathbf{v}_{\pi_1}$ iteratively:

- Value iteration performs a single iteration.

- Policy iteration performs "infinitely" many iterations.

- Truncated policy iteration performs a finite number $j$ of iterations; the remaining iterations from $j$ to $\infty$ are omitted.

Does truncated policy iteration converge?

Consider the iterative algorithm for solving policy evaluation:

$$\mathbf{v}_{\pi_k}^{(j+1)} = \mathbf{r}_{\pi_k} + \gamma \mathbf{P}_{\pi_k} \mathbf{v}_{\pi_k}^{(j)}, \quad j = 0, 1, 2, \cdots$$

If the initial guess is $v_{\pi_k}^{(0)} = v_{\pi_{k-1}}$, then

$$v_{\pi_k}^{(j+1)} \ge v_{\pi_k}^{(j)}$$

holds for all $j$.
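A short induction, sketched here as one way to see the claim (the notes state it without proof). For the base case, $\pi_k$ is greedy with respect to $v_{\pi_{k-1}}$, so

$$v_{\pi_k}^{(1)} = r_{\pi_k} + \gamma P_{\pi_k} v_{\pi_{k-1}} \;\ge\; r_{\pi_{k-1}} + \gamma P_{\pi_{k-1}} v_{\pi_{k-1}} = v_{\pi_{k-1}} = v_{\pi_k}^{(0)}$$

For the inductive step, subtracting two consecutive updates gives

$$v_{\pi_k}^{(j+1)} - v_{\pi_k}^{(j)} = \gamma P_{\pi_k} \left( v_{\pi_k}^{(j)} - v_{\pi_k}^{(j-1)} \right) = \cdots = (\gamma P_{\pi_k})^j \left( v_{\pi_k}^{(1)} - v_{\pi_k}^{(0)} \right) \ge 0$$

because $P_{\pi_k}$ has nonnegative entries. Each additional evaluation sweep therefore moves the estimate upward, toward $v_{\pi_k}$.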

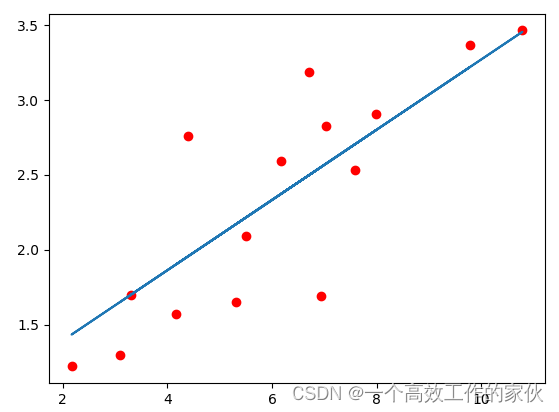

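As the figure above shows, both policy iteration and value iteration converge to the optimal state value, and truncated policy iteration is sandwiched between the two. By the squeeze theorem, it must therefore converge to the optimal value as well.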

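Example:

With the initial condition shown in the figure above, define $\| v_k - v^* \|$ as the state value error at step $k$. The stopping criterion is $\| v_k - v^* \| < 0.01$.

- "Truncated policy iteration-$x$" denotes truncated policy iteration with $x$ iterations in each policy evaluation step.

- The larger $x$ is, the faster the value estimate converges.

- As $x$ keeps increasing, its contribution to the convergence speed becomes smaller and smaller.

- In practice, therefore, a few policy evaluation iterations are sufficient.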
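The effect of $x$ can be explored with a small sketch. It does not reproduce the experiment in the notes (that figure and grid are not available); instead it uses an assumed two-state model with $s_2$ as the target, whose optimal values $v^*(s_1) = v^*(s_2) = 10$ were worked out by hand for this toy model. The names `MODEL`, `V_STAR`, `greedy` and `truncated_policy_iteration` are hypothetical.

```python
GAMMA = 0.9
# Assumed toy model: MODEL[s][a] = (reward, next_state); s2 is the target and
# moving off the grid keeps the agent in place with reward -1.
MODEL = {
    "s1": {"left": (-1.0, "s1"), "stay": (0.0, "s1"), "right": (1.0, "s2")},
    "s2": {"left": (0.0, "s1"), "stay": (1.0, "s2"), "right": (-1.0, "s2")},
}
# Optimal state values for this toy model, computed by hand:
# v*(s2) = 1 / (1 - 0.9) = 10 and v*(s1) = 1 + 0.9 * 10 = 10.
V_STAR = {"s1": 10.0, "s2": 10.0}


def greedy(v):
    """Policy improvement: greedy action per state with respect to v."""
    policy = {}
    for s, actions in MODEL.items():
        q = {a: reward + GAMMA * v[nxt] for a, (reward, nxt) in actions.items()}
        policy[s] = max(q, key=q.get)
    return policy


def truncated_policy_iteration(x, tol=0.01, max_outer=10_000):
    """Run x evaluation sweeps per outer iteration until ||v - v*|| < tol."""
    v = {s: 0.0 for s in MODEL}
    for k in range(1, max_outer + 1):
        policy = greedy(v)
        for _ in range(x):  # truncated policy evaluation, warm-started from v
            v = {
                s: MODEL[s][policy[s]][0] + GAMMA * v[MODEL[s][policy[s]][1]]
                for s in MODEL
            }
        if max(abs(v[s] - V_STAR[s]) for s in MODEL) < tol:
            return k, policy, v
    raise RuntimeError("did not reach the tolerance")


counts = {x: truncated_policy_iteration(x)[0] for x in (1, 5, 50)}
print(counts)  # outer iterations needed for each x
```

With `x=1` the loop is exactly value iteration, and a very large `x` approximates policy iteration. Comparing the entries of `counts` shows how the number of outer iterations changes with `x` on this toy model.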

Summary

Value iteration:

An iterative algorithm for solving the Bellman optimality equation, given an initial value $v_0$:

$$\begin{gathered} v_{k+1} = \max_{\pi}(r_{\pi} + \gamma P_{\pi} v_k) \\ \Updownarrow \\ \begin{cases} \text{Policy update}: \pi_{k+1} = \arg\max_{\pi}(r_{\pi} + \gamma P_{\pi} v_k) \\ \text{Value update}: v_{k+1} = r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}} v_k \end{cases} \end{gathered}$$

Policy iteration: given an initial policy $\pi_0$:

$$\begin{cases} \text{Policy evaluation}: v_{\pi_k} = r_{\pi_k} + \gamma P_{\pi_k} v_{\pi_k} \\ \text{Policy improvement}: \pi_{k+1} = \arg\max_{\pi}(r_{\pi} + \gamma P_{\pi} v_{\pi_k}) \end{cases}$$

Truncated policy iteration

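These are notes on the open course Mathematical Foundations of Reinforcement Learning (强化学习的数学原理) from the Westlake University Intelligent Unmanned Systems channel (西湖大学智能无人系统) on Bilibili.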